Unlocking Precision Medicine with AI-Powered Biomarker Discovery

Why AI-Powered Biomarker Discovery Is Changing Cancer Care

AI-powered biomarker discovery is revolutionizing how we identify molecular signatures that predict cancer diagnosis, prognosis, and treatment response. This cutting-edge approach combines machine learning algorithms with multi-omics data to uncover biomarker patterns that traditional methods often miss.

Key components of AI-powered biomarker discovery:

- Machine Learning Models: Deep learning and standard ML algorithms analyze high-dimensional genomic, proteomic, and imaging data

- Multi-Modal Integration: Combines genomics, radiomics, pathomics, and real-world clinical data for comprehensive insights

- Predictive Analytics: Identifies both prognostic markers (disease outcome) and predictive markers (treatment response)

- Federated Learning: Enables secure analysis across distributed datasets without moving sensitive patient data

- Explainable AI: Provides transparent, interpretable results that clinicians can trust and act upon

The impact is already measurable. In a recent systematic review of AI-based biomarker studies, 80% of the included studies were published in 2021-2022, and in retrospective analyses of phase 3 clinical trials, the Predictive Biomarker Modeling Framework identified biomarkers associated with a 15% improvement in survival risk. Non-small-cell lung cancer leads the research focus at 36% of studies, followed by melanoma at 16%.

Traditional biomarker discovery often takes years and relies on hypothesis-driven approaches that may miss complex molecular interactions. AI changes this by systematically exploring massive datasets to find patterns humans would struggle to detect, which can shorten discovery timelines from years to months or even days.

As Prof. Nicolas Girard noted in recent research: “We need to move to a more comprehensive and multimodal characterization in the setting of innovative therapies such as immunotherapies.”

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve built a pioneering genomics platform that transforms global healthcare through federated AI-powered biomarker discovery. With over 15 years in computational biology and AI, I’ve seen how secure, collaborative analytics can accelerate precision medicine while protecting patient privacy.

Biomarkers: The Building Blocks of Precision Oncology

Biomarkers are measurable biological indicators that help us understand disease states, predict outcomes, and guide treatment decisions. In oncology, they’ve become the foundation of precision medicine, moving us away from the traditional “one-size-fits-all” approach to cancer treatment.

Cancer causes approximately 10 million deaths per year globally, with about 70% of cancer-related mortality occurring in low- and middle-income countries. The challenge is that tumors of the same cell type, origin, and stage can have unique genetic features that strongly influence disease progression and treatment response. This heterogeneity explains why identical treatments can produce dramatically different outcomes in seemingly similar patients.

The evolution of biomarker science has been remarkable. In the 1970s, we relied on basic tumor markers like carcinoembryonic antigen (CEA) and alpha-fetoprotein (AFP). Today, we can analyze thousands of molecular features simultaneously, creating comprehensive portraits of individual tumors that guide personalized treatment strategies.

There are three main categories of biomarkers that serve different clinical purposes:

Diagnostic markers help identify the presence of cancer and classify tumor types. These include traditional markers like prostate-specific antigen (PSA) for prostate cancer, as well as newer liquid biopsy markers that detect circulating tumor DNA (ctDNA) in blood samples. Modern diagnostic approaches combine multiple biomarkers into panels that provide higher accuracy than single markers. For example, the OVA1 test combines five protein biomarkers to assess ovarian cancer risk, while the 4Kscore test integrates four kallikrein markers with clinical information for prostate cancer detection.

Prognostic markers predict disease outcomes regardless of treatment. For example, Ki67 is a cellular proliferation marker that indicates how quickly cancer cells are growing, helping clinicians understand the aggressiveness of breast cancer tumors. The Oncotype DX Recurrence Score combines 21 genes to predict breast cancer recurrence risk, while the Decipher test analyzes 22 genes to assess prostate cancer aggressiveness. These tools help patients and doctors make informed decisions about treatment intensity.

Predictive markers determine which patients are most likely to benefit from specific treatments. These are crucial for selecting targeted therapies and immunotherapies, where response rates can vary dramatically between patients. HER2 overexpression predicts response to trastuzumab in breast cancer, while EGFR mutations predict response to tyrosine kinase inhibitors in lung cancer. PD-L1 expression, though imperfect, helps guide immunotherapy decisions across multiple cancer types.

Why Biomarkers Matter Across the Cancer Journey

Biomarkers play critical roles at every stage of cancer care, fundamentally changing how we approach disease management:

Early detection has been revolutionized by AI-driven analysis of imaging and molecular data. AI-identified lung cancer biomarkers have demonstrated improved early detection rates compared with traditional imaging methods, enabling earlier intervention and better survival outcomes. The NELSON trial showed that CT screening reduced lung cancer mortality by 24% in men and 33% in women, and AI-assisted image analysis may improve detection accuracy further. Multi-cancer early detection (MCED) tests like Galleri can identify over 50 cancer types from a single blood draw, potentially changing population screening approaches.

Risk stratification helps clinicians identify high-risk patients who need more aggressive monitoring or treatment. Modern AI platforms can analyze thousands of clinicogenomic measurements per individual to create comprehensive risk profiles. The BRCA1/2 genetic testing revolution exemplifies this approach – women with BRCA mutations have up to 87% lifetime breast cancer risk, leading to improved screening protocols and prophylactic interventions. Polygenic risk scores now combine hundreds of genetic variants to assess cancer susceptibility across populations.
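A polygenic risk score is typically a weighted sum: for each variant, the number of copies of the effect allele a person carries (0, 1 or 2) is multiplied by that variant's effect size from a genome-wide association study. The Python sketch below assumes additive allele effects; the variant IDs and weights are hypothetical placeholders, not a validated score.

```python
from typing import Dict

# Hypothetical per-variant effect sizes (log odds ratios, as a GWAS would report).
# These variant IDs and weights are illustrative placeholders, not real risk alleles.
EFFECT_SIZES: Dict[str, float] = {
    "variant_1": 0.12,
    "variant_2": -0.05,
    "variant_3": 0.30,
}


def polygenic_risk_score(dosages: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted sum of effect-allele dosages (0, 1 or 2 copies per variant).

    Variants absent from the genotype are skipped here; production pipelines
    usually impute them or substitute the population mean dosage instead.
    """
    score = 0.0
    for variant, beta in weights.items():
        dosage = dosages.get(variant)
        if dosage is None:
            continue
        if dosage not in (0, 1, 2):
            raise ValueError(f"dosage for {variant} must be 0, 1 or 2")
        score += beta * dosage
    return score


patient = {"variant_1": 2, "variant_2": 1, "variant_3": 0}
prs = polygenic_risk_score(patient, EFFECT_SIZES)
print(f"PRS = {prs:.2f}")
```

In practice the raw score is usually standardized against a reference population and reported as a percentile or relative risk before it informs screening decisions.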

Treatment selection is where predictive biomarkers shine. Rather than relying on broad population statistics, we can now identify specific patient subgroups most likely to respond to particular therapies. The development of companion diagnostics has been crucial: these are tests specifically designed to identify patients who will benefit from particular drugs. Examples include the cobas EGFR Mutation Test for erlotinib selection and the PD-L1 IHC 22C3 pharmDx assay for pembrolizumab decisions.

Response monitoring allows ongoing assessment of treatment effectiveness. Liquid biopsy AI tools can analyze circulating tumor DNA to detect treatment resistance before it becomes clinically apparent. Serial ctDNA monitoring can detect molecular relapse months before imaging shows disease progression, enabling earlier treatment modifications. In colorectal cancer, the CIRCULATE-Japan study showed that postoperative ctDNA status strongly predicts recurrence risk and can help guide decisions about adjuvant therapy.

Predictive vs Prognostic – Getting the Terminology Right

Understanding the distinction between predictive and prognostic biomarkers is crucial for clinical application. This difference isn’t just academic – it fundamentally changes how we use biomarkers in patient care and clinical trial design.

Prognostic biomarkers tell us about disease outcome independent of treatment. They answer the question: “How aggressive is this cancer?” For instance, certain genetic mutations in breast cancer indicate faster tumor growth regardless of which treatment is chosen. The Nottingham Prognostic Index combines tumor size, lymph node status, and histological grade to predict breast cancer outcomes across different treatment approaches.

Predictive biomarkers tell us about treatment benefit. They answer: “Will this specific therapy work for this patient?” HER2 overexpression in breast cancer is a classic example – it predicts exceptional response to HER2-targeted therapies like trastuzumab, but may actually indicate worse prognosis without targeted treatment.

The statistical validation requirements differ significantly. Prognostic markers need to correlate with outcomes across treatment groups, while predictive markers must show differential treatment effects between biomarker-positive and biomarker-negative patients. This requires specific clinical trial designs with biomarker stratification and interaction testing.
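To make the interaction idea concrete, here is a minimal sketch for a binary response endpoint: it compares the treatment odds ratio in biomarker-positive patients with that in biomarker-negative patients, using a Wald test on the difference of log odds ratios. The counts are invented for illustration, and the 0.5 continuity correction is one common choice, not a requirement.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Arm:
    responders: int
    non_responders: int


def log_odds_ratio(treated: Arm, control: Arm) -> Tuple[float, float]:
    """Log odds ratio of response (treated vs control) and its Wald variance.

    Adds 0.5 to every cell (Haldane-Anscombe correction) so empty cells do not
    cause division by zero.
    """
    a, b = treated.responders + 0.5, treated.non_responders + 0.5
    c, d = control.responders + 0.5, control.non_responders + 0.5
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def interaction_test(pos_treated: Arm, pos_control: Arm,
                     neg_treated: Arm, neg_control: Arm) -> Tuple[float, float, float]:
    """Wald test that the treatment effect differs between biomarker strata."""
    lor_pos, var_pos = log_odds_ratio(pos_treated, pos_control)
    lor_neg, var_neg = log_odds_ratio(neg_treated, neg_control)
    estimate = lor_pos - lor_neg
    z = estimate / math.sqrt(var_pos + var_neg)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return estimate, z, p_value


# Hypothetical trial counts: clear benefit in biomarker-positive patients, little in negatives.
interaction, z_stat, p_value = interaction_test(
    pos_treated=Arm(40, 20), pos_control=Arm(15, 45),
    neg_treated=Arm(18, 42), neg_control=Arm(16, 44),
)
print(f"interaction log-OR = {interaction:.2f}, z = {z_stat:.2f}, p = {p_value:.4f}")
```

Without the continuity correction, this difference equals the treatment-by-biomarker interaction coefficient of a saturated logistic regression, with the same Wald variance; a time-to-event endpoint would instead use an interaction term in a Cox model. A purely prognostic marker would show similar odds ratios in both strata and an interaction estimate near zero.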

Some biomarkers can be both prognostic and predictive. Estrogen receptor (ER) status in breast cancer predicts response to hormonal therapies (predictive) while also indicating generally better prognosis (prognostic). Understanding these dual roles is essential for proper clinical interpretation and patient counseling.

AI-Powered Biomarker Discovery: How Machine & Deep Learning Change the Game

Traditional biomarker discovery relies on hypothesis-driven approaches where researchers test specific biological theories. While this has yielded important discoveries, it is inherently limited by existing knowledge and can miss complex, non-intuitive patterns in high-dimensional data.

AI-powered biomarker discovery transforms this process by systematically exploring massive datasets to uncover patterns that traditional methods miss. A recent systematic review of 90 studies found that 72% used standard machine learning methods, 22% used deep learning, and 6% used both. This represents a fundamental shift from hypothesis-driven to data-driven biomarker identification.

The computational demands of modern biomarker discovery are substantial. A single whole genome sequence generates approximately 200 gigabytes of raw data, while a comprehensive multi-omics analysis of a single patient can involve millions of data points. Traditional statistical methods struggle to handle this scale and complexity effectively.

The power of AI lies in its ability to integrate and analyze multiple data types simultaneously. Where traditional approaches might examine one biomarker at a time, AI can consider thousands of features across genomics, imaging, and clinical data to identify meta-biomarkers – composite signatures that capture disease complexity more completely.

Machine learning algorithms excel at different aspects of biomarker discovery:

- Random forests and support vector machines provide robust performance with interpretable feature importance rankings, making them ideal for identifying key biomarker components

- Deep neural networks can capture complex non-linear relationships in high-dimensional data, particularly useful for multi-omics integration

- Convolutional neural networks excel at analyzing medical images and pathology slides, extracting quantitative features that correlate with molecular characteristics

- Autoencoders can identify hidden patterns in multi-omics data and reduce dimensionality while preserving biological signal

- Graph neural networks model biological pathways and protein interactions, incorporating prior biological knowledge into biomarker discovery

Advanced approaches in multivariable model building have demonstrated how systematic feature selection and validation can improve biomarker performance across diverse patient populations.

Typical AI Pipeline for Biomarker Discovery

The AI-powered biomarker discovery pipeline follows a systematic approach designed to produce robust, clinically relevant results:

Data ingestion begins with collecting multi-modal datasets from diverse sources. This includes genomic sequencing data, medical imaging, electronic health records, and laboratory results. The challenge is harmonizing data from different institutions and formats. Data lakes and cloud-based platforms have become essential infrastructure for managing these massive, heterogeneous datasets. Quality control at this stage is critical – poor quality input data will inevitably lead to unreliable biomarkers.

Preprocessing involves quality control, normalization, and feature engineering. Missing data imputation and outlier detection are critical steps that can dramatically impact model performance. Batch effects from different sequencing platforms or imaging equipment must be corrected. Feature engineering may involve creating derived variables, such as gene expression ratios or radiomic texture features, that capture biologically relevant patterns.
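A minimal sketch of these preprocessing steps for a single feature, assuming two measurement batches (for example, two sequencing platforms): missing values are filled with the within-batch median, each batch is mean-centered as a crude batch-effect correction, and the result is z-scored. Established tools such as ComBat model batch effects far more carefully; the data here are hypothetical.

```python
import statistics
from typing import List, Optional


def impute_median_by_batch(values: List[Optional[float]], batches: List[str]) -> List[float]:
    """Fill missing values (None) with the median of observed values in the same batch."""
    medians = {}
    for b in set(batches):
        observed = [v for v, bb in zip(values, batches) if bb == b and v is not None]
        medians[b] = statistics.median(observed)
    return [medians[b] if v is None else v for v, b in zip(values, batches)]


def center_by_batch(values: List[float], batches: List[str]) -> List[float]:
    """Subtract each batch's mean, removing a constant per-batch offset.

    This is a crude correction: methods such as ComBat also adjust per-batch
    variance and share information across many features.
    """
    means = {
        b: statistics.fmean(v for v, bb in zip(values, batches) if bb == b)
        for b in set(batches)
    }
    return [v - means[b] for v, b in zip(values, batches)]


def zscore(values: List[float]) -> List[float]:
    """Scale to zero mean and unit (sample) standard deviation."""
    mu = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mu) / sd for v in values]


# Hypothetical expression values for one gene measured on two platforms (A, B).
raw = [5.1, None, 5.3, 7.0, 7.4, None]
batch = ["A", "A", "A", "B", "B", "B"]
clean = zscore(center_by_batch(impute_median_by_batch(raw, batch), batch))
print([round(v, 2) for v in clean])
```

Mean-centering per batch assumes the batches contain biologically similar samples; if one batch were enriched for responders, centering would also remove real signal, which is why batch design and correction methods need care.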

Model training uses various machine learning approaches depending on the data type and clinical question. Cross-validation and holdout test sets ensure models generalize beyond the training data. Hyperparameter optimization through techniques like grid search or Bayesian optimization fine-tunes model performance. Ensemble methods that combine multiple algorithms often provide the most robust results.
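The key discipline in cross-validation is that anything learned from data, even a single cutoff, is fitted on the training folds only. The sketch below runs stratified 5-fold cross-validation around a deliberately simple one-marker threshold model on a synthetic cohort; the model and data are assumptions chosen to keep the example short, and a real pipeline would place hyperparameter search inside an inner loop (nested cross-validation).

```python
import random
import statistics
from typing import List, Tuple


def stratified_folds(labels: List[int], k: int, seed: int = 0) -> List[List[int]]:
    """Split sample indices into k folds with similar class proportions."""
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def fit_threshold(x: List[float], y: List[int]) -> float:
    """A deliberately simple one-marker model: midpoint between class means.

    Assumes higher marker values indicate the positive class.
    """
    pos = statistics.fmean(v for v, t in zip(x, y) if t == 1)
    neg = statistics.fmean(v for v, t in zip(x, y) if t == 0)
    return (pos + neg) / 2


def cross_validated_accuracy(x: List[float], y: List[int], k: int = 5,
                             seed: int = 0) -> Tuple[float, List[float]]:
    fold_acc = []
    for test_idx in stratified_folds(y, k, seed):
        held_out = set(test_idx)
        train = [i for i in range(len(y)) if i not in held_out]
        # The threshold is fitted on the training folds only; the held-out fold
        # never influences it, so its accuracy is an honest estimate.
        thr = fit_threshold([x[i] for i in train], [y[i] for i in train])
        correct = sum((x[i] > thr) == (y[i] == 1) for i in test_idx)
        fold_acc.append(correct / len(test_idx))
    return statistics.fmean(fold_acc), fold_acc


# Synthetic cohort: 50 responders (marker ~ N(2, 1)) and 50 non-responders (~ N(0, 1)).
rng = random.Random(42)
marker = [rng.gauss(2.0, 1.0) for _ in range(50)] + [rng.gauss(0.0, 1.0) for _ in range(50)]
label = [1] * 50 + [0] * 50
mean_acc, per_fold = cross_validated_accuracy(marker, label, k=5)
print(f"mean CV accuracy {mean_acc:.2f}; per fold {[round(a, 2) for a in per_fold]}")
```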

Validation requires independent cohorts and biological experiments. Computational predictions alone aren’t sufficient – biomarkers must demonstrate clinical utility in real-world settings. This includes analytical validation (does the test work reliably?), clinical validation (does it predict the intended outcome?), and clinical utility assessment (does it improve patient care?).
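For the clinical validation step, discrimination on an independent cohort is often summarized with the ROC AUC, plus sensitivity and specificity at a cutoff fixed before the cohort is analyzed. A minimal standard-library sketch, using hypothetical scores and outcomes:

```python
from typing import List, Tuple


def auc(scores: List[float], labels: List[int]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as half, matching the Mann-Whitney U formulation.
    O(n_pos * n_neg), which is fine for small validation cohorts.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sens_spec(scores: List[float], labels: List[int], cutoff: float) -> Tuple[float, float]:
    """Sensitivity and specificity at a prespecified cutoff."""
    tp = sum(s >= cutoff and y == 1 for s, y in zip(scores, labels))
    fn = sum(s < cutoff and y == 1 for s, y in zip(scores, labels))
    tn = sum(s < cutoff and y == 0 for s, y in zip(scores, labels))
    fp = sum(s >= cutoff and y == 0 for s, y in zip(scores, labels))
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical model scores and observed outcomes in an independent validation cohort.
scores = [0.91, 0.80, 0.75, 0.62, 0.55, 0.40, 0.35, 0.20, 0.15, 0.05]
labels = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
print(auc(scores, labels), sens_spec(scores, labels, cutoff=0.5))
```

These numbers speak only to clinical validity; analytical validation and clinical utility need separate evidence, such as assay reproducibility studies and outcome trials.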

Deployment involves integrating validated biomarkers into clinical workflows through decision support systems and diagnostic platforms. This requires careful attention to user interface design, workflow integration, and ongoing performance monitoring.

AI-Powered Biomarker Discovery in Immuno-Oncology

Immunotherapy has revolutionized cancer treatment, but selecting the right patients remains challenging. AI-powered biomarker discovery is particularly valuable here because immune checkpoint inhibitors work through complex mechanisms involving the tumor microenvironment, immune system activation, and host factors.

Traditional biomarkers like PD-L1 expression provide limited predictive value, with response rates varying widely even among PD-L1 positive patients. The complexity arises from the dynamic interplay between tumor cells, immune cells, and the surrounding microenvironment. AI approaches can integrate multiple data modalities to create more comprehensive predictive signatures:

- Genomic features including tumor mutational burden (TMB), microsatellite instability (MSI), immune gene signatures, and neoantigen load

- Radiomic features extracted from CT and MRI scans that reflect tumor heterogeneity, vascular patterns, and immune infiltration

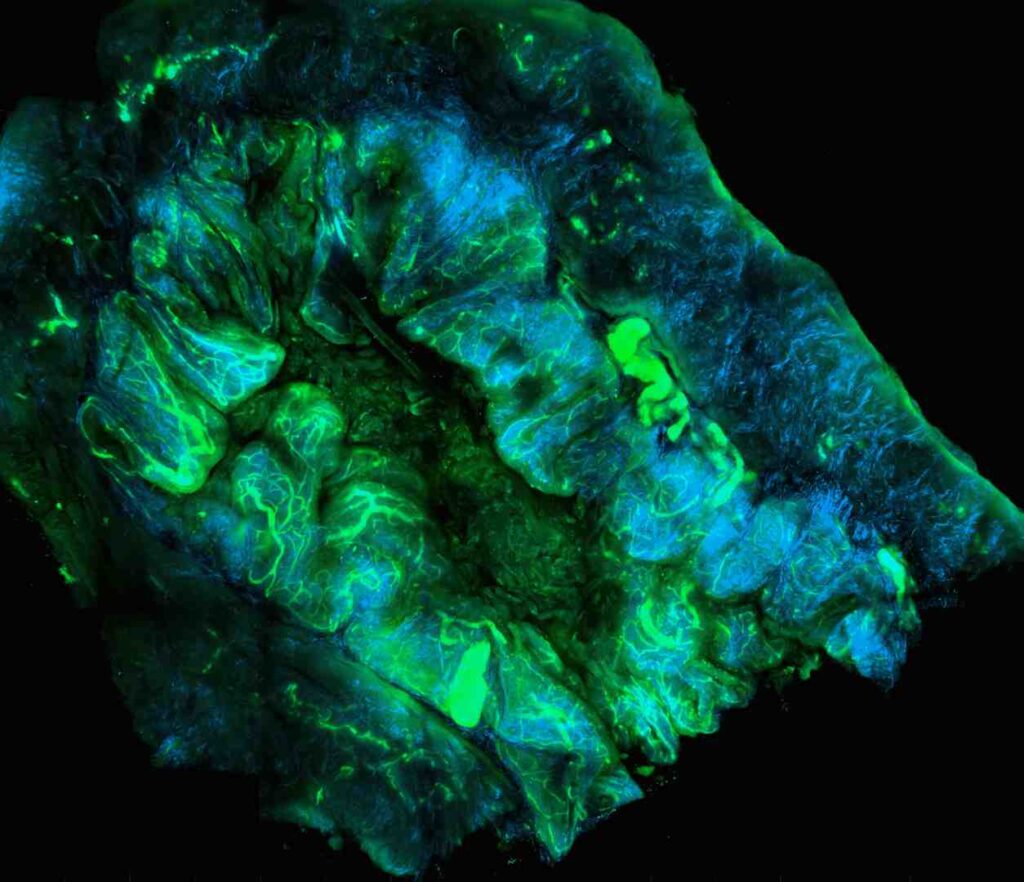

- Pathomic features from digitized pathology slides showing immune cell infiltration patterns, spatial relationships, and tissue architecture

- Clinical features including prior treatments, performance status, laboratory values, and patient demographics

- Microbiome data showing gut bacterial composition that influences immune system function

Non-small-cell lung cancer has been the most studied indication, representing 36% of AI immunotherapy biomarker studies. This focus reflects both the clinical success of immunotherapy in lung cancer and the availability of large, well-characterized datasets. Melanoma follows at 16%, while 25% of studies take pan-cancer approaches looking for universal predictive signatures that work across tumor types.

The integration of these diverse data types through AI has revealed that effective immunotherapy prediction requires understanding the complex interplay between tumor genetics, immune system activation, and tumor microenvironment characteristics. For example, high TMB alone is insufficient – the quality and presentation of neoantigens, the presence of immune-suppressive factors, and the spatial organization of immune cells all contribute to treatment response.
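TMB itself is a simple ratio: the number of somatic coding mutations divided by the size of the sequenced territory in megabases. A cutoff of 10 mutations/Mb was used in the FDA's 2020 tumor-agnostic approval of pembrolizumab for TMB-high solid tumors. The sketch below assumes a 1.2 Mb panel and a particular set of counted variant classes; both vary between assays, and the variant calls are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Variant:
    gene: str
    consequence: str  # e.g. "missense", "synonymous", "frameshift"
    somatic: bool


# Which variant classes count toward TMB differs between assays; this set is an assumption.
COUNTED_CONSEQUENCES = {"missense", "nonsense", "frameshift", "inframe_indel", "splice_site"}


def tumor_mutational_burden(variants: List[Variant], territory_bp: int) -> float:
    """Somatic coding mutations per megabase of sequenced territory."""
    n = sum(1 for v in variants if v.somatic and v.consequence in COUNTED_CONSEQUENCES)
    return n * 1_000_000 / territory_bp


# Hypothetical calls from a 1.2 Mb targeted panel.
calls = (
    [Variant(f"GENE{i}", "missense", True) for i in range(10)]
    + [Variant("GENE_A", "frameshift", True), Variant("GENE_B", "nonsense", True)]
    + [Variant("GENE_C", "synonymous", True),  # not counted: synonymous
       Variant("GENE_D", "missense", False)]   # not counted: germline
)
tmb = tumor_mutational_burden(calls, territory_bp=1_200_000)
print(f"TMB = {tmb:.1f} mut/Mb; TMB-high at a 10 mut/Mb cutoff: {tmb >= 10}")
```

Because TMB alone is an imperfect predictor, an AI model would typically treat this value as one feature alongside neoantigen quality, immune infiltration, and spatial features rather than as a standalone rule.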

Emerging approaches include spatial transcriptomics, which maps gene expression patterns within tissue architecture, and single-cell sequencing, which reveals the heterogeneity of immune cell populations within tumors. These technologies generate even more complex datasets that require sophisticated AI approaches for biomarker identification.

From Genomics to Radiomics: Integrating Multi-Omics Data with AI

The explosion of multi-omics data over the past two decades has created unprecedented opportunities for biomarker discovery. We can now simultaneously analyze genomics, transcriptomics, proteomics, metabolomics, and imaging data from the same patients, providing a comprehensive molecular portrait of disease.

Genomics provides the blueprint – DNA sequences, mutations, and structural variants that drive cancer development. Whole genome sequencing can identify rare variants and structural changes missed by targeted panels.

Transcriptomics reveals gene expression patterns that show which genes are active in tumors versus normal tissue. Single-cell RNA sequencing adds another dimension by showing cell-type-specific expression patterns within tumors.

Proteomics measures the actual functional molecules – proteins – that carry out cellular processes. Mass spectrometry can quantify thousands of proteins simultaneously, revealing post-translational modifications that genomics can’t detect.

Metabolomics captures the end products of cellular processes, providing insights into tumor metabolism and drug resistance mechanisms.

Radiomics extracts quantitative features from medical images that reflect tumor biology. AI can identify subtle imaging patterns that correlate with molecular characteristics, creating “virtual biopsies” that are non-invasive and repeatable.

Pathomics applies AI to digitized pathology slides, quantifying features like cell density, nuclear morphology, and tissue architecture that pathologists use for diagnosis.

Multimodal Integration Techniques

Combining these diverse data types requires sophisticated AI approaches that can handle different scales, distributions, and missing data patterns:

Autoencoders can learn compressed representations of each data type, then integrate these representations for downstream analysis. This approach has shown success in cancer subtype discovery and drug response prediction.

Graph neural networks model relationships between different molecular entities – genes, proteins, metabolites – as networks. This captures biological pathway information that traditional methods miss.

Bayesian models explicitly handle uncertainty and can incorporate prior biological knowledge. They’re particularly useful when sample sizes are limited or when integrating data from different sources.
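The simplest case of combining prior knowledge with limited data is a conjugate Beta-Binomial model for a response rate. The sketch assumes a prior equivalent to about 10 earlier patients centred on a 30% response rate, updates it with a small hypothetical biomarker-positive cohort, and computes the posterior probability that the true response rate exceeds 30%. It uses the binomial-sum identity for the Beta CDF, which holds when both parameters are integers.

```python
import math
from typing import Tuple


def beta_cdf_int(x: float, a: int, b: int) -> float:
    """CDF of Beta(a, b) for positive integers a, b.

    Uses the identity P(Beta(a, b) <= x) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(math.comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))


def update(prior_a: int, prior_b: int, responders: int, non_responders: int) -> Tuple[int, int]:
    """Conjugate update: Beta prior + binomial data -> Beta posterior."""
    return prior_a + responders, prior_b + non_responders


# Hypothetical prior: Beta(3, 7), roughly 10 earlier patients at a 30% response rate.
# Hypothetical new data: 9 responders and 6 non-responders among biomarker-positive patients.
a, b = update(3, 7, responders=9, non_responders=6)
posterior_mean = a / (a + b)
prob_above_30 = 1 - beta_cdf_int(0.30, a, b)
print(f"Beta({a}, {b}) posterior, mean {posterior_mean:.2f}, P(rate > 0.30) = {prob_above_30:.3f}")
```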

Ensemble learning combines predictions from multiple models trained on different data types. This approach often outperforms single-modality models and provides more robust predictions.
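One common form of this ensembling is late fusion: each modality gets its own model, and their predicted probabilities are combined. The sketch below uses a weighted average that renormalizes over whichever modalities a patient actually has; the modality names and weights are hypothetical, and stacking (training a meta-model on the per-modality outputs) is a frequently used alternative.

```python
from typing import Dict, Optional


def late_fusion(probs: Dict[str, Optional[float]], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality probabilities, ignoring missing modalities.

    Weights are renormalized over the modalities that are present, so a patient
    without imaging still receives a score from the remaining models.
    """
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("no modality predictions available")
    total = sum(weights[m] for m in available)
    return sum(weights[m] * p for m, p in available.items()) / total


# Hypothetical weights, e.g. chosen from each model's validation performance.
MODALITY_WEIGHTS = {"genomics": 0.5, "radiomics": 0.3, "pathomics": 0.2}

full = late_fusion({"genomics": 0.8, "radiomics": 0.6, "pathomics": 0.7}, MODALITY_WEIGHTS)
no_imaging = late_fusion({"genomics": 0.8, "radiomics": None, "pathomics": 0.7}, MODALITY_WEIGHTS)
print(f"all modalities: {full:.3f}; without radiomics: {no_imaging:.3f}")
```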

Federated & Privacy-Preserving Analytics

A key challenge in AI-powered biomarker discovery is accessing diverse, large-scale datasets while protecting patient privacy. Traditional approaches require centralizing data, which creates privacy risks and regulatory barriers.

Federated learning enables AI model training across distributed datasets without moving sensitive patient data. Models are trained locally at each institution, with only model parameters shared for aggregation. This approach has several advantages:

- Data sovereignty – institutions maintain control over their data

- Privacy protection – raw patient data never leaves the originating institution

- Regulatory compliance – easier to meet GDPR, HIPAA, and other privacy regulations

- Bias reduction – models trained on diverse populations perform better across different patient groups
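A minimal sketch of federated averaging (FedAvg), the most widely described federated learning algorithm: each site runs a few steps of logistic-regression gradient descent on its own data, and a coordinator averages the returned weight vectors, weighted by site size. The three synthetic "hospitals" are assumptions for illustration; this is not Lifebit's implementation, and real deployments add secure aggregation, authentication, and handling of non-identically distributed data.

```python
import math
import random
from typing import List, Tuple

Dataset = List[Tuple[List[float], int]]  # (features, label) pairs held by one site


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def local_update(weights: List[float], data: Dataset, lr: float = 0.1,
                 epochs: int = 5) -> List[float]:
    """Full-batch gradient descent on the logistic loss, run inside one institution."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w


def federated_averaging(sites: List[Dataset], dim: int, rounds: int = 20) -> List[float]:
    """FedAvg loop: sites share only weight vectors; the server averages them by site size."""
    global_w = [0.0] * dim
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        local_ws = [local_update(global_w, d) for d in sites]
        global_w = [sum(len(d) * w[j] for d, w in zip(sites, local_ws)) / total
                    for j in range(dim)]
    return global_w


def make_site(n: int, shift: float, rng: random.Random) -> Dataset:
    """Synthetic site: features [intercept, marker]; the label depends on a noisy marker."""
    data = []
    for _ in range(n):
        marker = rng.gauss(shift, 1.0)
        label = 1 if marker + rng.gauss(0.0, 0.5) > 0 else 0
        data.append(([1.0, marker], label))
    return data


rng = random.Random(7)
# Three hypothetical hospitals with different sizes and marker distributions.
sites = [make_site(80, -0.5, rng), make_site(120, 0.0, rng), make_site(60, 0.7, rng)]
w = federated_averaging(sites, dim=2)
print(f"intercept={w[0]:.2f}, marker weight={w[1]:.2f}")
```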

Differential privacy adds mathematical guarantees that individual patient information cannot be extracted from model outputs. This provides formal privacy protection while enabling collaborative research.
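The classic building block is the Laplace mechanism: for a count query, where adding or removing one patient changes the answer by at most 1, releasing the count plus Laplace noise of scale 1/epsilon satisfies epsilon-differential privacy. The sketch below applies it to a hypothetical site-level count. In federated model training, noise is more often added to clipped gradients or model updates (as in DP-SGD), and naive floating-point samplers like this one have known weaknesses, so production systems should rely on vetted differential privacy libraries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two Exponential(mean=scale) draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Laplace mechanism for a count query.

    Adding or removing one patient changes a count by at most `sensitivity` (1),
    so noise with scale sensitivity / epsilon gives epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(2024)
# Hypothetical query: biomarker-positive responders at one site (true value 42).
for eps in (0.1, 1.0, 10.0):
    print(f"epsilon={eps}: released count {private_count(42, eps, rng):.1f}")
```

Smaller epsilon means stronger privacy and noisier counts, so choosing epsilon is a policy decision as much as a technical one.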

At Lifebit, our federated AI platform enables secure, real-time access to global biomedical data through our Trusted Research Environment (TRE), supporting compliant AI-powered biomarker discovery across institutions worldwide.

Success Stories & Platforms Accelerating Discovery

The transition from research to clinical impact is accelerating as AI-powered biomarker discovery platforms demonstrate real-world value. Several breakthrough examples illustrate the transformative potential of this approach, moving beyond proof-of-concept studies to implementations that directly improve patient outcomes.

The Predictive Biomarker Modeling Framework (PBMF) represents a major advance in systematic biomarker discovery. In a retrospective analysis of the phase 3 clinical trial NCT02008227, the AI framework identified a predictive biomarker that could have improved survival risk by 15% compared with the original trial results. The framework achieved similar success in two additional phase 3 trials (NCT01668784, NCT02302807), identifying predictive biomarkers with at least 10% improvement in survival risk.

What makes PBMF particularly innovative is its use of contrastive learning to distinguish similar versus dissimilar patient profiles. The system analyzes thousands of molecular features to identify subtle patterns that differentiate responders from non-responders. The framework then uses knowledge distillation to convert complex AI models into simple decision trees that clinicians can easily interpret and apply in practice.
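The distillation step can be illustrated in miniature: a student model is fitted to the teacher's predictions rather than to observed outcomes. The sketch below fits a one-split decision tree (a stump) to mimic a hypothetical nonlinear "teacher" score and reports fidelity, the fraction of patients on which student and teacher agree. It illustrates the general idea only; it is not PBMF's actual distillation procedure.

```python
import random
from typing import Callable, List, Tuple


def teacher(x: List[float]) -> int:
    """Stand-in for a complex model: a hypothetical nonlinear responder rule."""
    return int(1.0 * x[0] + 0.3 * x[1] ** 2 - 0.2 * x[2] > 0.8)


def distill_stump(X: List[List[float]],
                  teacher_fn: Callable[[List[float]], int]) -> Tuple[int, float, float]:
    """Fit a one-split tree to the teacher's labels (not to observed outcomes).

    Returns (feature index, threshold, fidelity) for the rule
    "predict responder if x[feature] > threshold", where fidelity is the
    fraction of patients on which the stump agrees with the teacher.
    """
    y = [teacher_fn(x) for x in X]
    best = (0, 0.0, -1.0)
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            agreement = sum((x[j] > thr) == bool(t) for x, t in zip(X, y)) / len(X)
            if agreement > best[2]:
                best = (j, thr, agreement)
    return best


rng = random.Random(3)
cohort = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(300)]
feature, threshold, fidelity = distill_stump(cohort, teacher)
print(f"responder if feature {feature} > {threshold:.2f}; agrees with teacher on {fidelity:.0%}")
```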

The clinical impact extends beyond individual biomarkers to systematic improvements in trial design and patient selection. By identifying predictive biomarkers retrospectively, PBMF demonstrates how AI can rescue seemingly negative trials by revealing patient subgroups that actually benefited from treatment.

Recent advances in AI-powered biomarker platforms have demonstrated how integrated computational approaches can accelerate the translation from biomarker discovery to clinical implementation.

Real-World Clinical Implementation Success Stories

PathAI’s breast cancer screening represents one of the most successful clinical deployments of AI-powered biomarker discovery. Their deep learning models analyze digitized pathology slides to identify malignancies with higher accuracy than traditional pathologist review, particularly excelling at detecting smaller or more subtle cancers that might be missed in routine screening. The system has been deployed across multiple health systems, processing thousands of cases monthly and demonstrating consistent performance improvements.

The key innovation lies in the model’s ability to quantify subtle morphological features that correlate with molecular characteristics. Rather than simply detecting cancer presence, the AI identifies specific cellular patterns associated with hormone receptor status, HER2 expression, and proliferation markers, effectively creating a “virtual immunohistochemistry” analysis from standard H&E stained slides.

Tempus ONE platform exemplifies comprehensive multi-omics biomarker discovery at scale. The platform integrates genomic sequencing, clinical data, and imaging analysis to identify treatment-predictive biomarkers across cancer types. With over 4 million patients in their database, Tempus can identify rare biomarker patterns and treatment responses that would be impossible to detect in smaller datasets.

Their approach to real-world evidence generation is particularly noteworthy. By continuously analyzing treatment outcomes and molecular profiles, the platform identifies emerging biomarker-treatment associations before they’re validated in formal clinical trials. This has led to compassionate use approvals and expanded access programs for patients with rare molecular subtypes.

Foundation Medicine’s comprehensive genomic profiling has become the gold standard for tumor molecular characterization. Their FoundationOne CDx test analyzes over 300 genes to identify actionable mutations, with AI algorithms continuously updated as new biomarker-drug associations are discovered. The platform has enabled precision medicine for thousands of patients who might otherwise have exhausted standard treatment options.

AI-Powered Biomarker Discovery Driving Clinical Trials

The impact extends beyond retrospective analysis to prospective clinical trial design. AI platforms can now analyze early-stage trial data to identify and enrich patient subgroups before full enrollment, potentially rescuing trials that would otherwise fail due to inadequate patient selection.

Adaptive enrichment strategies use AI to continuously refine patient selection criteria as trials progress. This approach has shown particular promise in oncology, where response rates to targeted therapies can vary dramatically between patient subgroups. The BATTLE-1 trial in lung cancer pioneered this approach, using real-time biomarker analysis to assign patients to treatment arms based on their molecular profiles.

Modern adaptive trials go further, using AI to identify previously unknown biomarker-treatment interactions as data accumulates. Machine learning algorithms continuously analyze accumulating trial data to identify patient subgroups with differential treatment responses, enabling protocol modifications that improve trial success rates.

Synthetic control arms enable biomarker discovery in single-arm trials by using AI to match treated patients with similar historical controls. This approach extends biomarker discovery to settings where traditional randomized controlled trials aren’t feasible, such as rare diseases, or where effective standard treatments make placebo arms unethical.

The FDA has increasingly accepted synthetic control approaches for regulatory submissions, particularly in oncology where historical data is abundant and well-characterized. AI algorithms can match patients across multiple dimensions (molecular profiles, prior treatments, demographics, and disease characteristics) to create control groups that closely resemble the treated patients on measured characteristics, although, unlike randomization, matching cannot balance confounders that were never recorded.
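Matching can be sketched with 1:1 greedy nearest-neighbour matching on standardized covariates, without replacement. Propensity-score matching and more sophisticated AI-based approaches are common in practice; the covariates (age, prior lines of therapy, ECOG performance status) and values below are hypothetical.

```python
import statistics
from typing import Dict, List


def standardize(rows: List[List[float]]) -> List[List[float]]:
    """Scale each covariate column to mean 0 and (population) SD 1."""
    cols = list(zip(*rows))
    mus = [statistics.fmean(c) for c in cols]
    sds = [statistics.pstdev(c) or 1.0 for c in cols]  # avoid dividing by 0 for constant columns
    return [[(v - m) / s for v, m, s in zip(r, mus, sds)] for r in rows]


def greedy_match(treated: List[List[float]], controls: List[List[float]]) -> Dict[int, int]:
    """1:1 nearest-neighbour matching without replacement.

    Covariates are standardized on the pooled sample so each contributes on a
    comparable scale to the squared Euclidean distance. Greedy matching depends
    on the order in which treated patients are processed.
    """
    z = standardize(treated + controls)
    zt, zc = z[: len(treated)], z[len(treated):]
    available = set(range(len(controls)))
    pairs: Dict[int, int] = {}
    for i, t in enumerate(zt):
        if not available:
            break  # more treated patients than controls
        j = min(available, key=lambda k: sum((a - b) ** 2 for a, b in zip(t, zc[k])))
        pairs[i] = j
        available.remove(j)
    return pairs


# Hypothetical covariates: [age, prior lines of therapy, ECOG performance status].
treated = [[62, 1, 0], [70, 2, 1]]
historical_controls = [[45, 0, 0], [61, 1, 0], [71, 2, 1], [69, 3, 2]]
pairs = greedy_match(treated, historical_controls)
print(pairs)
```

Whatever the matching method, it only balances covariates that were recorded, so external-control analyses typically report covariate balance and sensitivity analyses for unmeasured confounding.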

The POETIC-AI platform exemplifies how AI can accelerate clinical research timelines. By integrating diverse data types – genetic profiling, imaging, digital pathology, and clinical records – the platform reduces analysis timelines from years to months or even days. In the POETIC trial, researchers used AI to measure changes in Ki67 levels after pre-surgical hormone therapy, enabling personalized post-surgical treatment decisions based on individual response patterns.

AI-Powered Biomarker Discovery in Real-World Practice

Clinical implementation is advancing rapidly across multiple cancer types, with several platforms now processing thousands of cases monthly:

Breast cancer pathology has seen remarkable progress with AI models achieving higher accuracy than pathologists in identifying malignancies, especially in detecting smaller or less obvious cancers. PathAI’s models demonstrate particular strength in catching subtle features that might be missed in routine screening, while also providing quantitative assessments of tumor characteristics that correlate with treatment response.

Liquid biopsy applications use AI to analyze circulating tumor DNA in blood samples, providing non-invasive alternatives to tissue biopsies. These approaches are particularly valuable for monitoring treatment response and detecting minimal residual disease. Guardant Health’s GuardantOMNI test can detect mutations in over 500 genes from a simple blood draw, while Natera’s Signatera creates personalized ctDNA assays based on each patient’s tumor profile.

Prostate cancer management benefits from AI recommendations derived from large-scale electronic health record and clinical trial data integration. These systems can identify optimal treatment sequences based on patient characteristics and prior therapy responses. The Decipher test combines AI analysis of 22 genes with clinical variables to guide treatment intensity decisions, helping patients avoid unnecessary treatments while ensuring adequate therapy for high-risk disease.

Lung cancer screening and diagnosis have been transformed by AI analysis of CT scans and molecular profiling. In research studies, Google’s deep learning system has matched or exceeded radiologists in detecting lung cancer on CT scans, while also identifying patients who would benefit from earlier screening. Integration with molecular biomarker analysis enables simultaneous detection and treatment selection.

The key to successful implementation is ensuring that AI-derived biomarkers integrate seamlessly into existing clinical workflows. This requires close collaboration between data scientists, clinicians, and regulatory bodies to ensure that new biomarkers meet clinical needs and regulatory standards. User interface design, result interpretation, and clinical decision support are as important as the underlying AI algorithms.

Challenges, Trustworthy AI & Regulatory Pathways

Despite tremendous progress, AI-powered biomarker discovery faces significant challenges that must be addressed for widespread clinical adoption. Understanding these limitations is crucial for developing robust, trustworthy systems.

Data quality remains a fundamental challenge. AI models are only as good as the data they’re trained on, and healthcare data often contains missing values, measurement errors, and systematic biases. The old adage “garbage in, garbage out” applies particularly strongly to biomarker discovery.

Class imbalance is endemic in medical datasets where disease outcomes are relatively rare. Standard machine learning metrics can be misleading when 95% of patients don’t have the outcome of interest. Specialized techniques like SMOTE (Synthetic Minority Oversampling Technique) and cost-sensitive learning help address this challenge.
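Two points from this paragraph can be shown directly. First, with 95 negatives and 5 positives, a model that always predicts "no outcome" scores 95% accuracy while detecting no positive cases. Second, SMOTE creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority neighbours. The sketch below is a minimal standard-library version of that core idea with hypothetical two-feature data; the imbalanced-learn package offers a maintained implementation.

```python
import random
from typing import List


def smote(minority: List[List[float]], n_new: int, k: int = 3, seed: int = 0) -> List[List[float]]:
    """Create synthetic minority samples by interpolating toward near neighbours.

    For each new point: pick a minority sample, pick one of its k nearest
    minority neighbours, and place the point at a random position on the
    line segment between them.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted((m for m in minority if m is not base),
                            key=lambda m: sq_dist(base, m))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic


# Why accuracy misleads: 95 negatives, 5 positives, and a model that never predicts the outcome.
labels = [0] * 95 + [1] * 5
always_negative = [0] * 100
accuracy = sum(p == y for p, y in zip(always_negative, labels)) / len(labels)
sensitivity = sum(p == 1 and y == 1 for p, y in zip(always_negative, labels)) / labels.count(1)
print(f"accuracy={accuracy:.2f}, sensitivity={sensitivity:.2f}")

# Hypothetical two-feature minority class (e.g. responders), oversampled with SMOTE.
minority = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.5], [1.2, 2.2], [1.8, 2.1]]
new_points = smote(minority, n_new=10)
print(len(new_points), "synthetic samples")
```

Oversampling should be applied only to training folds; generating synthetic samples before splitting leaks information into the test data.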

Algorithmic bias can perpetuate or amplify existing healthcare disparities. If training datasets underrepresent certain populations, AI models may perform poorly for those groups. This is particularly concerning given that 70% of cancer deaths occur in low- and middle-income countries, yet most AI research focuses on high-income populations.

Explainable & Trustworthy AI Approaches

The “black box” nature of many AI models creates barriers to clinical adoption. Clinicians need to understand why a model makes specific predictions before they’ll trust it with patient care decisions.

Explainable AI (XAI) approaches provide transparency into model decision-making:

Saliency maps highlight which input features most strongly influence predictions. In medical imaging, these maps can show which regions of a scan drive the AI’s assessment.

SHAP (SHapley Additive exPlanations) values provide mathematically rigorous explanations of how each feature contributes to individual predictions. This is particularly valuable for understanding complex multi-omics biomarkers.
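Shapley values can be computed exactly for small models by averaging each feature's marginal contribution over all coalitions of the other features. The brute-force sketch below replaces "absent" features with a baseline value (an assumption; the SHAP literature discusses several alternatives) and uses a hypothetical three-feature risk score with one interaction term. Libraries such as shap rely on approximations or model-specific algorithms because exact enumeration needs on the order of 2^n model evaluations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List


def exact_shapley(model: Callable[[List[float]], float], x: List[float],
                  baseline: List[float]) -> List[float]:
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(x)

    def value(coalition: set) -> float:
        # Features in the coalition take the patient's values; the rest take the baseline.
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def risk_model(z: List[float]) -> float:
    """Hypothetical score with an interaction between features 0 and 2."""
    return 0.5 * z[0] + 0.2 * z[1] + 0.3 * z[0] * z[2]


phi = exact_shapley(risk_model, x=[2.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 3) for v in phi])
```

The interaction term's contribution is split evenly between features 0 and 2, and the values sum to the difference between the patient's score and the baseline score; this additivity is what makes SHAP explanations easy to read.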

Counterfactual explanations show what would need to change for the model to make a different prediction. This helps clinicians understand the boundaries of model confidence.
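For a linear score, the simplest counterfactual has a closed form: changing feature j alone by (threshold - score) / w_j puts the patient exactly on the decision boundary. The sketch below computes this for every feature not marked immutable. The feature names, weights, and bias are hypothetical; nonlinear models need search- or optimization-based counterfactual methods instead.

```python
from typing import Dict, List


def single_feature_counterfactuals(weights: Dict[str, float], bias: float,
                                   patient: Dict[str, float], threshold: float,
                                   immutable: List[str]) -> Dict[str, float]:
    """For a linear score, the change in each mutable feature that, on its own,
    would move the patient's score exactly onto the decision threshold."""
    score = bias + sum(w * patient[f] for f, w in weights.items())
    return {
        f: (threshold - score) / w
        for f, w in weights.items()
        if f not in immutable and w != 0
    }


# Hypothetical linear responder score; names and weights are illustrative only.
SCORE_WEIGHTS = {"tmb": 0.15, "pdl1_tps": 0.02, "age": 0.01}
patient_features = {"tmb": 6.0, "pdl1_tps": 20.0, "age": 65.0}
changes = single_feature_counterfactuals(SCORE_WEIGHTS, bias=-2.0, patient=patient_features,
                                         threshold=0.0, immutable=["age"])
for feature, delta in changes.items():
    print(f"{feature}: change by {delta:+.2f} to reach the threshold")
```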

Rule-based models like decision trees provide inherently interpretable alternatives to complex neural networks. While they may sacrifice some accuracy, they offer transparency that’s often more valuable in clinical settings.

The challenge is balancing model performance with interpretability. The most accurate models are often the least interpretable, creating tension between predictive power and clinical usability.

Navigating Ethics, Privacy & Equity

Ethical considerations in AI-powered biomarker discovery extend beyond technical performance to fundamental questions of fairness, privacy, and access.

Patient consent becomes complex when data may be used for future research applications that weren’t anticipated at the time of collection. Dynamic consent frameworks allow patients to specify how their data can be used and update preferences over time.

Inclusive datasets are essential for ensuring that AI-derived biomarkers work across diverse populations. This requires proactive efforts to recruit underrepresented groups and address historical biases in medical research.

Bias mitigation strategies include algorithmic fairness techniques, diverse training datasets, and continuous monitoring of model performance across different patient subgroups.
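Monitoring performance across subgroups starts with computing the same metric separately for each group and flagging any group that lags. The sketch below does this for sensitivity. The labels, predictions, group names, and the 0.1 gap threshold are all hypothetical. The same pattern applies to specificity or calibration.

```python
from collections import defaultdict


def subgroup_sensitivity(y_true, y_pred, groups):
    """Sensitivity (true-positive rate) computed separately for each subgroup."""
    tp, fn = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}


def flag_gaps(metric_by_group, max_gap=0.1):
    """Subgroups whose metric trails the best-performing subgroup by more than max_gap."""
    best = max(metric_by_group.values())
    return sorted(g for g, v in metric_by_group.items() if best - v > max_gap)


# Hypothetical labels, predictions and demographic groups for ten patients
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
sens = subgroup_sensitivity(y_true, y_pred, groups)
print(sens, flag_gaps(sens))
```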

Secure sharing technologies like federated learning and differential privacy enable collaborative research while protecting patient privacy. These approaches are becoming essential for large-scale biomarker discovery projects.
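Differential privacy works by adding calibrated noise to released statistics. The sketch below applies the Laplace mechanism to a patient count, assuming a hypothetical cohort with a boolean marker field. A count changes by at most 1 when one patient is added or removed, so the noise scale is 1/ε. Smaller ε means more noise and stronger privacy. Production systems should use a vetted DP library, because naive floating-point sampling like this has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale)
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon, rng=None):
    """Release a patient count with epsilon-differential privacy (Laplace mechanism)."""
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # adding or removing one patient changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical cohort: 40 of 100 patients carry the marker of interest
cohort = [{"marker_positive": i < 40} for i in range(100)]
rng = random.Random(7)
print(dp_count(cohort, lambda r: r["marker_positive"], epsilon=1.0, rng=rng))
```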

Regulatory frameworks are evolving to address these challenges. The FDA has issued guidance on AI/ML-based medical devices, emphasizing the need for continuous monitoring and updates as models learn from new data.

Future Directions & Collaborative Roadmap

The future of AI-powered biomarker discovery lies in scaling current approaches while addressing existing limitations. Several emerging trends will shape the next decade of precision medicine.

Multi-omics integration at scale will become routine as sequencing costs continue to decline and data harmonization improves. We’re moving toward comprehensive molecular profiling of every cancer patient, creating opportunities for personalized treatment selection.

Real-time evidence generation through continuous learning systems will let AI models keep improving as they encounter new patients. This represents a fundamental shift from static biomarkers to adaptive, learning systems.
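At its simplest, continuous learning means updating model parameters each time a new labeled patient arrives, instead of retraining from scratch. The sketch below shows one stochastic-gradient step per patient for a logistic model. The feature layout, learning rate, and patient stream are hypothetical. In a clinical deployment, each update would also need the kind of monitoring regulators expect before the revised model is used.

```python
import math


class OnlineLogisticModel:
    """Logistic model updated one patient at a time (hypothetical setup)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, y):
        # One SGD step on the log-loss for a single newly observed patient
        error = self.predict_proba(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Hypothetical stream: a high biomarker value tends to go with response (y=1)
model = OnlineLogisticModel(n_features=2)
for _ in range(200):
    model.update([1.0, 0.0], 1)
    model.update([-1.0, 0.0], 0)
print(round(model.predict_proba([1.0, 0.0]), 3))
```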

Federated consortia will enable global collaboration while respecting data sovereignty. Large-scale initiatives like the Global Alliance for Genomics and Health (GA4GH) are developing standards for secure, federated sharing and analysis of genomic and health data, laying the groundwork for federated biomarker discovery.

CRISPR-AI synergy combines gene editing with AI-driven target identification. AI can identify promising therapeutic targets, while CRISPR enables rapid validation through precise genetic modifications.

Digital twins of patients will integrate all available data – genomics, imaging, clinical history, wearable device data – to create comprehensive models for treatment optimization.

Personalized trial design will use AI to match patients with optimal clinical trials based on their molecular profiles and treatment history.
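The final step of trial matching often reduces to checking a patient's molecular profile and treatment history against each trial's eligibility criteria. The sketch below shows only that rule-based filter. The AI components, such as extracting criteria from trial documents or ranking candidate trials, are not shown. Trial IDs, criteria, and the patient profile are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Trial:
    """Simplified eligibility criteria for a hypothetical trial."""
    trial_id: str
    required_markers: Set[str]
    excluded_prior_therapies: Set[str] = field(default_factory=set)
    max_prior_lines: int = 99


@dataclass
class Patient:
    markers: Set[str]
    prior_therapies: Set[str]
    prior_lines: int


def eligible_trials(patient: Patient, trials: List[Trial]) -> List[str]:
    matches = []
    for t in trials:
        if not t.required_markers <= patient.markers:
            continue  # patient lacks a required biomarker
        if t.excluded_prior_therapies & patient.prior_therapies:
            continue  # patient already received an excluded therapy
        if patient.prior_lines > t.max_prior_lines:
            continue  # too many previous lines of treatment
        matches.append(t.trial_id)
    return matches


# Hypothetical patient profile and trial catalogue
patient = Patient({"KRAS_G12C", "STK11_loss"}, {"platinum_chemo"}, prior_lines=1)
trials = [
    Trial("TRIAL-A", {"KRAS_G12C"}, {"sotorasib"}, max_prior_lines=2),
    Trial("TRIAL-B", {"EGFR_L858R"}),
    Trial("TRIAL-C", {"KRAS_G12C"}, {"platinum_chemo"}),
    Trial("TRIAL-D", set(), max_prior_lines=0),
]
print(eligible_trials(patient, trials))
```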

How Stakeholders Can Accelerate AI-Powered Biomarker Discovery

Success requires coordinated efforts across multiple stakeholders:

Clinician-data scientist teams must work closely together to ensure that AI-derived biomarkers address real clinical needs. This requires shared understanding of both clinical workflows and technical capabilities.

Regulatory sandboxes provide safe environments for testing innovative AI approaches while maintaining patient safety. These initiatives help regulators understand new technologies while giving developers clearer pathways to approval.

Patient advocacy ensures that biomarker discovery priorities align with patient needs and values. Patients bring unique perspectives on treatment trade-offs and quality of life considerations.

International collaboration is essential for addressing global health disparities and ensuring that AI-derived biomarkers work across diverse populations.

The Role of Lifebit’s Federated AI Platform

At Lifebit, we’ve built our platform specifically to address the challenges of large-scale, collaborative AI-powered biomarker discovery. Our integrated approach combines several key components:

Trusted Research Environment (TRE) provides secure, compliant workspaces for sensitive biomedical data analysis. Researchers can collaborate across institutions while maintaining data privacy and regulatory compliance.

Trusted Data Lakehouse (TDL) harmonizes diverse data types – genomics, imaging, clinical records – into analysis-ready formats. This eliminates the traditional bottleneck of data preparation that can take months in conventional approaches.

R.E.A.L. (Real-time Evidence & Analytics Layer) delivers real-time insights and AI-driven analytics across hybrid data ecosystems. This enables continuous learning and adaptive biomarker discovery.

Our federated approach enables secure analysis across distributed datasets without moving sensitive patient data. This is crucial for AI-powered biomarker discovery projects that require large, diverse datasets while respecting privacy regulations and institutional policies.
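Federated averaging (FedAvg) is one widely used technique for training a model without moving data. Each site trains on its own records and shares only model parameters, which a coordinator averages in proportion to site size. The sketch below is a generic illustration, not Lifebit’s implementation. The linear model, learning rate, round counts, and the two hypothetical sites are assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Least-squares SGD on one site's data; only the updated weights leave the site."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def federated_average(global_w, site_datasets, rounds=20):
    """FedAvg: average site models, weighted by each site's number of records."""
    w = list(global_w)
    for _ in range(rounds):
        updates = [local_update(w, data) for data in site_datasets]
        sizes = [len(data) for data in site_datasets]
        total = sum(sizes)
        w = [sum(n * u[j] for n, u in zip(sizes, updates)) / total for j in range(len(w))]
    return w


# Hypothetical sites whose (noise-free) data follow y = 2*x0 - 1*x1
sites = [
    [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0)],
    [([1.0, 1.0], 1.0), ([2.0, 0.0], 4.0), ([0.0, 2.0], -2.0)],
]
w = federated_average([0.0, 0.0], sites)
print([round(v, 3) for v in w])
```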

We’ve deployed our platform across five continents, supporting biopharma companies, government agencies, and public health organizations in their precision medicine initiatives. The platform’s built-in capabilities for harmonization, advanced AI/ML analytics, and federated governance make it uniquely suited for large-scale biomarker discovery projects.

Frequently Asked Questions about AI-Powered Biomarker Discovery

How accurate are AI-discovered biomarkers compared to traditional ones?

AI-discovered biomarkers often outperform traditional single-marker approaches, particularly in complex diseases like cancer. Recent studies show AI models achieving pooled sensitivity and specificity of 94.3% and 98.9% in certain applications. However, accuracy depends heavily on data quality, sample size, and validation methodology.

The key advantage isn’t just higher accuracy, but the ability to identify complex, multi-dimensional biomarker signatures that traditional hypothesis-driven approaches miss. AI can integrate thousands of features across genomics, imaging, and clinical data to create meta-biomarkers that capture disease complexity more completely.

Can AI help identify biomarkers for rare cancers with limited data?

Yes, but with important caveats. AI approaches like transfer learning can leverage knowledge from common cancers to help with rare diseases. Federated learning enables pooling of rare disease data across institutions without compromising privacy.

However, rare cancers remain challenging because AI models generally require substantial training data. Techniques like data augmentation, synthetic data generation, and few-shot learning are being developed specifically for rare disease applications. The key is combining AI with strong biological knowledge to guide model development.
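One common synthetic-data technique for small classes is SMOTE-style oversampling (Synthetic Minority Over-sampling Technique). It creates new samples by interpolating between a real sample and one of its nearest neighbours. The simplified sketch below assumes hypothetical two-feature profiles from four rare-subtype patients. Interpolated profiles can still be biologically implausible, which is why biological knowledge is needed to check them.

```python
import random


def smote_like(samples, n_new, k=2, rng=None):
    """Simplified SMOTE: interpolate between a sample and one of its k nearest neighbours."""
    rng = rng or random.Random()

    def dist2(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(samples)
        # k nearest neighbours of the chosen sample (excluding itself)
        neighbours = sorted((s for s in samples if s is not base), key=lambda s: dist2(base, s))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the line segment, in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nb)])
    return synthetic


# Hypothetical two-feature profiles from four patients with a rare subtype
rare_samples = [[1.0, 2.0], [1.5, 1.8], [0.8, 2.4], [1.2, 2.1]]
synthetic = smote_like(rare_samples, n_new=5, k=2, rng=random.Random(42))
print(synthetic)
```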

What does ‘explainable’ really mean in clinical AI models?

Explainable AI (XAI) in clinical contexts means that healthcare providers can understand why a model makes specific predictions and can trace the decision-making process. This goes beyond simply knowing which features are important to understanding how they interact to influence outcomes.

True explainability requires multiple levels: global explanations showing overall model behavior, local explanations for individual predictions, and counterfactual explanations showing what would change the prediction. The goal is giving clinicians enough understanding to make informed decisions about when to trust and act on AI recommendations.

Conclusion

AI-powered biomarker discovery represents a fundamental shift in how we approach precision medicine. By combining machine learning with multi-omics data, we can identify molecular signatures that predict treatment response with unprecedented accuracy and speed.

The evidence is compelling. AI approaches are reducing biomarker discovery timelines from years to months. One framework applied to phase 3 clinical trials achieved a 15% improvement in survival risk. AI is also yielding new insights across cancer types, from lung cancer to rare cancers. The integration of genomics, radiomics, pathomics, and real-world data creates opportunities for comprehensive patient characterization that was impossible just a few years ago.

However, realizing this potential requires addressing significant challenges around data quality, algorithmic bias, model interpretability, and regulatory frameworks. Success depends on collaborative efforts between clinicians, data scientists, regulators, and patients to ensure that AI-derived biomarkers truly improve patient outcomes.

The future lies in federated, privacy-preserving approaches that enable global collaboration while protecting patient data. At Lifebit, we’re committed to making this vision a reality through our federated AI platform that enables secure, real-time analysis across distributed biomedical datasets.

The goal isn’t just better biomarkers, but more equitable access to precision medicine. By democratizing access to AI-powered analytics and ensuring that biomarker discovery includes diverse populations, we can work toward a future where personalized treatment is available to all patients, regardless of geography or economic status.

The change is already underway. The question isn’t whether AI will revolutionize biomarker discovery, but how quickly we can implement these advances safely and equitably across global healthcare systems.