What is a Data Warehouse and Why Does Your Business Need One?

Why Data Warehouses Are Essential for Modern Business Intelligence

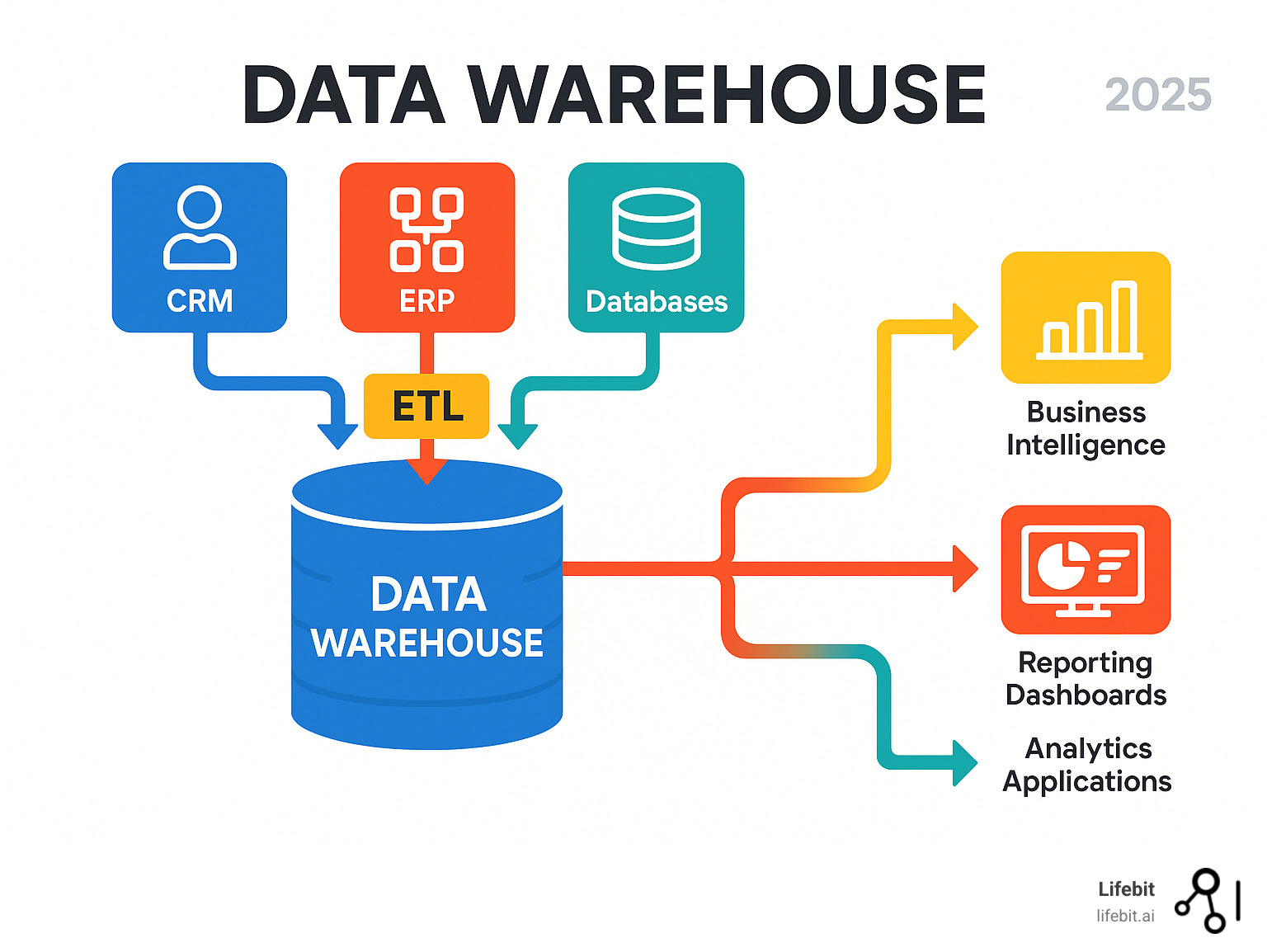

Data warehouses are centralized repositories that integrate data from multiple sources to support business intelligence (BI), reporting, and analytics. They aggregate current and historical data, are optimized for analytical processing (OLAP), and serve as a single source of truth for decision-making.

Key benefits include improved data quality, faster query performance, historical insights, and better business decisions. Common use cases range from BI and reporting to customer analytics and financial analysis.

Organizations generate massive amounts of information, but without a system to consolidate it, they struggle to extract meaningful insights. This is especially true in regulated industries like healthcare, where data often exists in isolated silos. Data warehouses break down these silos, creating a unified view of organizational data.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. With over 15 years of experience in biomedical data integration, I’ve seen how data warehousing can transform siloed datasets into actionable insights for better healthcare outcomes.

What is a Data Warehouse? A Foundational Guide

A data warehouse (DW or DWH) is a central repository where business information is organized for analysis. Unlike operational databases designed for transactions, a data warehouse is built for business intelligence and reporting. It transforms raw data into actionable insights by handling enormous volumes of information and delivering fast query performance for complex analytical questions.

These systems support online analytical processing (OLAP), allowing users to examine data from multiple angles. By providing historical context and an integrated view, a data warehouse helps uncover trends that would otherwise remain hidden, creating a single source of truth for data-driven decisions.

Key Characteristics of Data in Modern Data Warehouses

Data in a warehouse has six essential characteristics:

- Subject-oriented: Data is organized around key business subjects like customers, products, or sales, not the applications that created it. For example, instead of storing data in separate application-specific tables for order processing, customer service calls, and marketing campaigns, a subject-oriented warehouse would organize all this information around the ‘Customer’ subject. This allows an analyst to easily see a customer’s entire history—all purchases, support interactions, and campaign responses—in one place.

- Integrated: Information from disparate sources (CRM, ERP, etc.) is consolidated into a consistent format. This involves resolving inconsistencies like different naming conventions (e.g., ‘USA’ vs. ‘United States’), conflicting data types, and varying units of measure. The goal is to create a single, coherent dataset where ‘revenue’ means the same thing everywhere, establishing a single source of truth.

- Time-variant: Historical data, often spanning years, is preserved. This means data is not overwritten but is instead captured as a series of snapshots over time. For instance, a warehouse might store a customer’s address every time it changes, each with a timestamp. This historical record is crucial for analyzing trends, such as how sales in a region have evolved over the past five years, or comparing Q1 performance year-over-year.

- Non-volatile: Once data enters the warehouse, it is not changed or deleted. Data is loaded into the warehouse periodically (e.g., daily or weekly) and is then read-only. This stability is fundamental for business intelligence, as it ensures that reports run today will yield the same results as reports run yesterday on the same data. It creates a reliable and auditable record, unlike operational systems where data is constantly being updated, inserted, and deleted.

- Data granularity: Information is stored at various levels of detail, from individual transactions to summarized aggregates. For example, a retail warehouse might store individual sales transactions (high granularity) but also provide pre-calculated summaries of daily sales by store and product category (low granularity). This flexibility allows executives to get a quick overview while enabling analysts to drill down into the fine-grained details to investigate anomalies or specific trends.

- Standardized: Data definitions and formats are made consistent across the organization. This is achieved through a shared metadata repository and strong data governance practices. Standardizing data definitions, business rules, and formats eliminates ambiguity and ensures that when different departments discuss ‘active customers,’ they are all referring to the same metric, calculated in the same way.

Why a Data Warehouse is Separate from Operational Databases

Operational databases and data warehouses serve different purposes. Operational systems are designed for Online Transaction Processing (OLTP), handling fast, individual transactions like a sale or an inventory update. They are optimized for speed and accuracy in capturing current data.

Data warehouses, however, are built for Online Analytical Processing (OLAP). They are designed to read and analyze large volumes of historical data to answer complex, strategic questions. Running these intensive analytical queries on an OLTP system would severely degrade its performance, slowing down daily business operations.

In short, operational databases run the business, while data warehouses help you understand it. The separation is crucial for performance, data structure (current vs. historical), and query complexity (simple transactions vs. complex analysis). For a deeper dive, this comparative review of databases and data warehouses offers more technical detail.

How Data Warehouses Work: Architecture and Design Principles

The power of a data warehouse lies in its architecture, which transforms raw data into analysis-ready information. It’s a sophisticated data factory with a clear, structured process.

Most designs use a three-tier architecture:

- Bottom Tier (Data Layer): This tier is the data foundation. It includes the various source systems—transactional databases (OLTP), CRM and ERP applications, logs, flat files, and even external data feeds. The core process here is ETL (or ELT), where data is extracted from these sources, cleansed of errors and inconsistencies in a staging area, and transformed into a structured format suitable for analysis before being loaded into the central warehouse repository.

- Middle Tier (Analytical Layer): This is the engine of the data warehouse. It typically contains an Online Analytical Processing (OLAP) server that enables fast and complex querying. The OLAP server restructures the data for multidimensional analysis. There are three main types: ROLAP (Relational OLAP), which uses the underlying relational database directly; MOLAP (Multidimensional OLAP), which pre-aggregates data into a multidimensional cube for extremely fast slicing and dicing; and HOLAP (Hybrid OLAP), which combines the scalability of ROLAP with the speed of MOLAP.

- Top Tier (Presentation Layer): This is the user-facing tier where the value of the data warehouse is realized. It consists of front-end tools that allow business users to interact with the data. These tools range from simple SQL clients and ad-hoc query tools to sophisticated business intelligence (BI) platforms like Tableau, Power BI, or Looker, which provide interactive dashboards, enterprise reporting, and data visualization capabilities. Data mining and advanced analytics applications also connect at this tier.

Key processes include data cleansing to correct inconsistent records and ETL/ELT to move and format the data. Metadata, which is data about your data, provides context and makes the warehouse navigable.

Core Architectural Components

- Data sources: The origin points, such as CRM and ERP systems, databases, and flat files.

- Staging area: A temporary holding space where data is cleansed and transformed before entering the warehouse.

- Data integration layer: Orchestrates the ETL/ELT tools and processes that move data into the central database.

- Central database: The core of the warehouse, typically a relational database optimized for analytical queries.

- Access tools: Tools like SQL clients, BI platforms (Tableau, Looker), and data mining applications that allow users to retrieve and visualize data.

- Metadata repository: A central catalog of information about data sources, changes, and business rules.

Common Data Modeling Approaches

Data modeling organizes information for analytical impact. Dimensional modeling, pioneered by Ralph Kimball, is the standard. It organizes data into facts (numerical measurements) and dimensions (descriptive context).

- The star schema is the simplest implementation. For example, in a retail sales warehouse, a star schema would have a central

Sales_Facttable containing numerical measures likeRevenue,Quantity_Sold, andDiscount_Amount. This fact table would be directly linked to dimension tables likeDim_Time(with attributes like day, month, year),Dim_Product(product name, category, brand), andDim_Store(store name, city, state). Queries are simple because they only require joining the fact table to the relevant dimension tables. - The snowflake schema is a more normalized version. In our retail example, the

Dim_Producttable might be normalized further. Instead of storing the brand and category directly inDim_Product, there would be a separateDim_Categorytable and aDim_Brandtable, whichDim_Productlinks to. This reduces storage space by avoiding redundancy but requires more complex queries with additional joins. - Data cubes are a conceptual model for viewing data across multiple dimensions, enabling operations like roll-up, drill-down, and slicing.

Historically, a debate existed between Inmon’s top-down and Kimball’s bottom-up approaches. The Inmon approach advocates for building a centralized, normalized Enterprise Data Warehouse (EDW) first, from which smaller data marts are created. This emphasizes enterprise-wide consistency. The Kimball approach focuses on building individual data marts for specific business processes first, which are then integrated. This method is more agile. Many modern implementations use a hybrid of both.

Key Benefits of a Data Warehouse for Your Business

Investing in a data warehouse transforms how a business uses information, leading to significant strategic advantages.

- Improved Data Quality and Consistency: By centralizing data from disparate systems, a data warehouse forces an organization to confront and resolve data inconsistencies. The ETL/ELT process includes rigorous data cleansing, validation, and change rules that standardize formats and definitions. This creates a single source of truth (SSOT), a reliable, consolidated view of business information. When the finance and sales departments both pull a report on quarterly revenue, they get the same number, eliminating confusion, building trust in the data, and enabling decisions based on a shared, accurate understanding of performance.

- Faster Query Performance: Operational databases are designed for fast, small transactions, not complex analytical queries. Asking a strategic question like, ‘What is the average customer lifetime value for customers acquired through our holiday marketing campaign over the last five years, segmented by product category?’ could grind an operational system to a halt. A data warehouse, however, is specifically architected for this type of Online Analytical Processing (OLAP). Its structure, indexing, and use of aggregations allow it to answer such complex queries in minutes or even seconds, not hours or days, empowering decision-makers with timely insights.

- Improved Business Intelligence: A data warehouse is the engine that powers modern business intelligence. With a clean, integrated, and historically rich dataset, creating comprehensive reports and interactive dashboards becomes dramatically simpler and more powerful. Business analysts can move beyond basic reporting to perform deep analytical dives, uncovering trends, patterns, and correlations that were previously hidden in siloed data. This enables sophisticated analysis like customer segmentation, market basket analysis, and churn prediction, changing BI from a reactive reporting function into a proactive strategic tool.

- Historical Insights: Operational systems typically only store current data. A data warehouse, by its nature, is time-variant, preserving a long-term historical record. By storing years or even decades of data, it allows businesses to perform longitudinal analysis. They can analyze long-term sales trends, track how customer behavior has evolved, measure the impact of strategic initiatives over time, and build more accurate forecast models. This historical context is invaluable for learning from past successes and failures and making informed predictions about the future.

- Competitive Advantage: A clear, comprehensive view of operations, customers, and market trends allows organizations to spot opportunities, mitigate risks, and respond to market changes with agility. By understanding which products are most profitable and which customer segments are growing fastest, a company can allocate resources more effectively than its competitors.

- High Return on Investment (ROI): While implementing a data warehouse requires an initial investment, the ROI is often significant and multifaceted. It stems from improved operational efficiency (e.g., automating reporting), reduced costs (e.g., identifying supply chain inefficiencies), and increased revenue (e.g., spotting cross-sell opportunities or optimizing pricing). Better, faster, data-driven decisions at every level of the organization lead directly to tangible business outcomes, making the data warehouse a strategic asset that pays for itself over time.

- Foundation for AI and Machine Learning: Advanced AI/ML models require clean, integrated, historical data to function effectively. A data warehouse provides the ideal, high-quality training data needed for predictive analytics, fraud detection models, and customer churn prediction, serving as the launchpad for transformative AI initiatives.

Data Warehouse vs. Data Lake vs. Database: Choosing the Right System

Understanding the differences between a data warehouse, a data lake, and a database is key to choosing the right system for your needs. Each is a tool designed for a specific job.

- Databases (OLTP systems) are built for fast, real-time transactions, like processing an order. They handle current, structured data and prioritize speed and integrity for daily operations.

- Data warehouses (OLAP systems) are designed for business intelligence and analysis. They store structured, integrated historical data and are optimized for complex queries that support strategic decisions.

- Data lakes store vast amounts of raw data in its native format—structured, semi-structured, or unstructured. They offer flexibility for data scientists to explore data before its purpose is fully defined, using a schema-on-read approach.

| Feature | Databases (OLTP) | Data Warehouses (OLAP) | Data Lakes |

|---|---|---|---|

| Purpose | Operational processing, real-time transactions | Business intelligence, reporting, analytical processing | Store all raw data for future, undefined uses |

| Data Type | Structured, current data | Structured, integrated, historical data | Structured, semi-structured, unstructured (raw) data |

| Schema | Schema-on-write (schema defined before data is stored) | Schema-on-write (schema defined before data is stored) | Schema-on-read (schema applied when data is read) |

| Data Quality | High, enforced by design | High, cleaned and transformed | Variable, raw data (can be messy) |

| Users | Application users, transaction processing | Business analysts, data analysts, BI professionals | Data scientists, data engineers |

| Cost | Moderate to high (per transaction) | High (storage, processing, change) | Low (cheap storage for raw data) |

| Performance | Fast for specific, small transactions | Fast for complex analytical queries | Variable, depends on processing tools |

| Use Cases | Online banking, e-commerce, inventory management | Sales reporting, financial analysis, customer segmentation | Machine learning, predictive analytics, real-time analytics |

| Data Volume | Typically gigabytes to terabytes | Terabytes to petabytes | Petabytes to exabytes |

Data Marts and the Modern Data Ecosystem

Data marts are smaller, focused versions of a data warehouse that serve a specific department, like marketing or finance. They can be dependent (created from a central EDW) or independent (built directly from operational systems). The modern data ecosystem also includes the data lakehouse, a hybrid architecture combining the flexibility of a data lake with the management features of a data warehouse. You can learn more about What is a Data Lakehouse and its impact on data architecture.

The Special Case of Biomedical and Multi-Omic Data

Life sciences research presents unique challenges that push beyond traditional data warehouses. Genomic data, for example, is massive and often semi-structured, requiring specialized tools as detailed in our article on Big Data Challenges in Genomics.

Furthermore, pharmacovigilance and clinical data integration require combining diverse, highly sensitive data from sources like clinical trials and electronic health records. This data is governed by strict regulations like HIPAA and GDPR. These unique requirements necessitate specialized platforms that can harmonize diverse data types, support advanced AI, and maintain federated governance while ensuring patient data remains secure and compliant.

The Evolution of Data Warehousing: From On-Premise to the Cloud and Beyond

The concept of the data warehouse emerged in the late 1980s, pioneered by figures like Bill Inmon and Ralph Kimball. Early systems were on-premise, monolithic architectures running on powerful but expensive hardware from vendors like Teradata, IBM, and Oracle. These systems required massive upfront capital investments in mainframe computers or specialized appliances, dedicated data centers with physical space, power, and cooling, and large, specialized IT teams for maintenance and management. While powerful for their time, they were inflexible, difficult to scale, and the long implementation cycles meant that business value could take years to realize.

The digital revolution, with the rise of the web, social media, and IoT, created an explosion in data volume that strained these traditional systems. This pressure drove the evolution toward more scalable and agile solutions. For more background, see A Short History of Data Warehousing.

Today, the momentum has shifted decisively to cloud-based and hybrid data warehouses, driven by the need for scalability, cost-efficiency, and real-time analytics.

How Cloud Technology Transformed Data Warehousing

Cloud technology revolutionized data warehousing. This shift was led by cloud-native platforms like Amazon Redshift, Google BigQuery, Snowflake, and Azure Synapse Analytics. These services democratized data analytics by offering:

- Scalability and Elasticity: Virtually unlimited storage and compute resources that can be scaled up or down on demand. This separation of storage and compute eliminates the need for expensive, over-provisioned hardware.

- Cost-Effectiveness: A pay-as-you-go model replaces large upfront capital expenditures, significantly reducing the total cost of ownership and making advanced analytics accessible to more companies.

- Faster Deployment: New environments can be set up in hours instead of months, accelerating time-to-insight.

- Improved Security: Cloud providers offer advanced encryption, access controls, and compliance certifications that often surpass what individual companies can achieve on-premise.

- Global Accessibility: Teams can access data from anywhere, enabling global collaboration.

- Seamless AI/ML Integration: Cloud platforms make it easy to connect advanced analytics services directly to warehouse data.

Real-World Applications and Examples

Data warehouses are the engines behind data-driven decisions in many industries:

- Healthcare: They integrate clinical data from EHRs, genomic data, lab results, and insurance claims data. This unified view supports population health management by identifying at-risk patient groups, accelerates clinical trial recruitment, and enables researchers to find new treatment pathways while maintaining strict patient data privacy.

- Banking: Financial institutions use them for comprehensive risk management, calculating credit risk scores, complying with regulatory reporting requirements (like Basel III), detecting fraudulent transactions in near real-time, and performing customer lifetime value analysis to tailor products and services.

- Retail: Beyond inventory management, retailers use warehouse data to power recommendation engines, personalize marketing campaigns based on purchase history, optimize supply chains by analyzing sales forecasts against stock levels, and optimize store layouts by analyzing in-store foot traffic patterns.

- Government: Agencies use them for policy analysis, public health surveillance, resource allocation, and urban planning. For example, a city might analyze traffic, public transit, and population data to optimize bus routes and plan new infrastructure.

In life sciences, traditional architectures are evolving into sophisticated platforms like data lakehouses to handle the unique challenges of multi-omic and clinical data. The Application of Data Lakehouses in Life Sciences shows how these next-gen systems meet modern research needs.

Frequently Asked Questions about Data Warehouses

Here are answers to some of the most common questions about data warehouses.

What is the difference between ETL and ELT?

ETL and ELT are two approaches to data integration. The difference lies in when the “transform” step occurs.

- ETL (Extract, Transform, Load): This is the traditional method. Data is extracted from source systems, transformed in a separate staging area (cleaned, standardized), and then loaded into the data warehouse. This is useful when change logic is complex or warehouse storage is a constraint.

- ELT (Extract, Load, Transform): This is a modern approach, popular with cloud data warehouses. Raw data is extracted and loaded directly into the data warehouse. The change then happens inside the warehouse, leveraging its powerful processing capabilities. This preserves the raw data for future flexibility and is efficient for large data volumes.

What is an Enterprise Data Warehouse (EDW)?

An Enterprise Data Warehouse (EDW) is a centralized warehouse that serves an entire organization, not just a single department. It integrates data from all major business functions—finance, sales, HR, supply chain—to create a single, comprehensive source of truth for the whole company.

An EDW is crucial for master data management, ensuring that core business entities (like customer or product IDs) are consistent across all systems. This enables accurate company-wide reporting and strategic decision-making. Building an EDW is a major strategic commitment to treating data as a corporate asset.

How is data in a data warehouse kept secure?

Securing a data warehouse involves a multi-layered approach to protect sensitive information and ensure compliance.

- Access Control: Role-based access control (RBAC) ensures users can only see the data necessary for their jobs.

- Data Encryption: Data is encrypted both at rest (in storage) and in transit (moving between systems), making it unreadable to unauthorized parties.

- Governance and Auditing: Strong governance policies define data handling rules. Detailed audit logs track who accessed what data and when, creating a clear chain of accountability.

- Compliance: Warehouses handling sensitive information must be designed to meet regulations like HIPAA and GDPR, often including features for data anonymization, pseudonymization, and consent management to protect individual privacy while enabling analysis.

Conclusion: Opening up Future Insights with Advanced Data Platforms

Data warehouses are the foundational bridge between raw data and the meaningful insights that drive business success. They transform chaotic data landscapes into organized sources of knowledge, enabling high-quality, consistent data, fast analytical performance, and valuable historical perspective.

However, the data world is rapidly evolving. The rise of massive unstructured datasets, real-time analytics, and AI/ML is pushing traditional data warehouses to become more flexible and intelligent. This evolution is especially critical in sensitive sectors like life sciences, where the challenge is to securely access and analyze massive, distributed datasets while respecting strict privacy and governance rules.

Federated approaches, which bring analysis to the data, are becoming essential. The future of analytics lies in platforms that combine the reliability of data warehouses with the flexibility needed for tomorrow’s challenges, including AI-driven insights and real-time processing.

At Lifebit, our federated Data Intelligence Platform is built for these next-generation challenges. It enables secure, real-time analysis of global biomedical data through components like our Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), powering compliant research and pharmacovigilance for leading organizations.

The future belongs to platforms that integrate diverse data, apply advanced intelligence, and maintain robust security. This evolution is key to open uping truly transformative insights.

Ready to see what the next generation of data platforms can do? We invite you to explore a next-generation Trusted Data Lakehouse and find how it can revolutionize your approach to data analytics and decision-making.