Data Intelligence Platform Explained: AI Meets Analytics

Data Intelligence: Your 2025 Blueprint for Success

Why Data Intelligence Is Transforming Modern Organizations

Data intelligence is the use of AI systems to learn, understand, and reason with an organization’s data. It combines data management with advanced AI to transform raw information into actionable insights, enabling custom AI applications and democratizing data access.

This approach goes beyond traditional analytics by using AI to understand data context, leveraging metadata for deeper insights, and shifting from reactive reporting to proactive, predictive recommendations. It enables users to query data with natural language and automates critical governance tasks like quality monitoring and compliance.

The need is urgent: 68% of enterprise data is never analyzed, and 82% of enterprises suffer from data silos. Poor data quality costs organizations an average of $12.9 million annually, highlighting a massive untapped potential.

For healthcare and life sciences, data intelligence unlocks insights from complex, distributed datasets while maintaining strict compliance. It accelerates drug discovery and evidence generation in federated environments without compromising data privacy.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. With over 15 years developing data intelligence solutions for biomedical research, I’ve seen how this approach transforms organizations that handle complex, regulated data.

What is Data Intelligence? Beyond the Buzzword

Data intelligence is more than a buzzword; it’s a strategic evolution in how organizations interact with their most valuable asset. It’s about making sense of the vast, complex, and often chaotic information stored across your systems. At its core, data intelligence uses artificial intelligence and machine learning to build a deep, contextual understanding of your organization’s data. It learns your specific business language, deciphers complex data relationships, and uncovers the semantic meaning behind the data in ways generic tools and human analysis alone cannot.

The key that unlocks this deeper understanding is metadata, the “data about your data.” While traditional data management saw metadata as simple technical documentation, data intelligence treats it as a rich, dynamic layer of context. It answers fundamental questions with unprecedented clarity: Who created or owns the data? What does it represent in business terms? Where did it originate and how has it changed? When was it last updated and verified? Why was it created? And how should it be used ethically and effectively? This rich context is what shifts organizations from reactive reporting on past events to generating proactive, AI-driven recommendations for future actions.

For industries like healthcare and life sciences, this capability is transformative. It enables researchers and clinicians to derive critical insights from highly sensitive, distributed datasets while maintaining the strictest security and compliance protocols. For a deeper dive, explore our insights on Creating Research-Ready Health Data.

How AI and Machine Learning Power Data Intelligence

AI and machine learning are the engines that drive modern data intelligence. They go beyond simple automation to enable systems that can learn, reason, predict, and recommend, fundamentally changing how you work with data.

- Pattern Recognition: AI algorithms excel at identifying subtle correlations and complex patterns across massive, disparate datasets that are impossible for humans to detect. For example, a pharmaceutical company could analyze genomic data, clinical trial results, and real-world evidence simultaneously to find a previously unknown correlation between a genetic marker and a drug’s efficacy, accelerating the path to personalized medicine.

- Predictive Capabilities: By learning from historical data, AI builds sophisticated models that forecast future trends, risks, and opportunities. This shifts an organization from reactive decision-making to proactive strategic planning. A hospital, for instance, could use a predictive model to forecast patient admission surges based on seasonal flu trends, community health data, and historical patterns, allowing it to optimize staffing and resource allocation in advance.

- Natural Language Access: This democratizing feature allows any user, regardless of technical skill, to ask complex questions of the data in plain English. A marketing executive could simply ask, “What was the customer lifetime value for users acquired through our Q3 social media campaign, and how does it compare to email?” The system understands the query, retrieves the relevant data, performs the calculation, and returns a clear answer, eliminating the need for a data analyst to act as an intermediary.

- Anomaly Detection: AI continuously monitors data streams and pipelines for unusual activity, catching potential fraud, system failures, or data quality issues before they escalate into costly problems. A financial institution’s data intelligence platform might flag a series of transactions as anomalous because they deviate from a customer’s established spending behavior, triggering an immediate fraud alert.

- Semantic Understanding: Unlike generic AI models, data intelligence systems learn your organization’s unique language, acronyms, and business context. The system learns that in your company, “subscriber attrition,” “customer churn,” and “client drop-off” all refer to the same key performance indicator. This semantic knowledge leads to far more accurate, relevant, and trustworthy insights, which is transformative for processing vast and complex biomedical datasets. Learn more in our article on Best AI for Genomics.
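To make the anomaly detection idea above more concrete, here is a minimal sketch that flags transactions deviating sharply from a customer’s established spending. It assumes a simple z-score rule with a threshold of 3; the function name, threshold, and sample amounts are illustrative assumptions, and production fraud systems typically rely on far richer models.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, z_threshold=3.0):
    """Return the new amounts that deviate strongly from a customer's history."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 values
    if sigma == 0:
        # All historical values are identical: any different amount is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > z_threshold]


# Hypothetical spending history for one customer (illustrative values only).
history = [42.0, 38.5, 51.0, 45.2, 40.1, 47.8, 39.9, 44.3]
incoming = [43.0, 49.5, 980.0]

flagged = flag_anomalies(history, incoming)
print(flagged)  # only the 980.0 transaction is flagged
```

A z-score is easy to explain to business users, which is one reason it makes a reasonable first baseline before moving to learned models.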

The Critical Role of Metadata

Metadata is the foundation upon which true data intelligence is built. It’s the rich contextual layer that provides clarity, trust, and meaning. Modern platforms actively manage three types of metadata:

- Technical Metadata: Describes the structure of the data, including schemas, tables, columns, data types, and indexes. It’s the blueprint of your data assets.

- Business Metadata: Translates technical assets into plain business language. It includes business definitions, calculations, and governance rules, making data understandable to non-technical users.

- Operational Metadata: Provides information about the data’s processing and usage, including data lineage, refresh rates, access patterns, and quality scores.

Together, these layers of metadata provide several kinds of context:

- Data Lineage: It maps the complete, end-to-end journey of your data, showing every change from its source to its current state. This is crucial for building trust, debugging errors, and meeting stringent regulatory requirements.

- Data Quality Scores: Metadata includes automated indicators of reliability, completeness, and accuracy. A user can instantly see if a dataset is considered “certified,” “provisional,” or “unreliable,” giving them confidence in their information.

- Business Context: It connects technical data fields (e.g., CUST_ID_TXN) to real-world business terms (“Customer Transaction ID”), making information relevant and actionable for all users.

- Data Ownership: Clear ownership and stewardship are documented in the metadata, eliminating confusion and enabling better governance and accountability.

Modern data intelligence platforms use AI to automate the discovery, harvesting, and enrichment of metadata, which streamlines data discovery and is especially valuable when harmonizing diverse datasets. We explore this challenge in our discussion on Data Harmonization: Overcoming Challenges.
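As a rough illustration of how technical, business, and operational metadata can sit side by side, the sketch below models a single catalog entry in Python. The class name, field names, table name, and owner are hypothetical assumptions for illustration; only CUST_ID_TXN and its business term come from the example above.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class ColumnMetadata:
    # Technical metadata: the structure of the asset.
    table: str
    column: str
    data_type: str
    # Business metadata: plain-language meaning and ownership.
    business_term: str
    owner: str
    # Operational metadata: lineage, freshness, and quality.
    upstream_sources: list = field(default_factory=list)
    last_refreshed: Optional[date] = None
    quality_status: str = "provisional"  # "certified", "provisional", or "unreliable"

    def describe(self) -> str:
        """One-line summary a catalog search result might show."""
        return (
            f"{self.table}.{self.column} ({self.business_term}), "
            f"owner: {self.owner}, status: {self.quality_status}"
        )


# Hypothetical entry; the table, owner, and source names are made up.
entry = ColumnMetadata(
    table="transactions",
    column="CUST_ID_TXN",
    data_type="VARCHAR(32)",
    business_term="Customer Transaction ID",
    owner="finance-data-team",
    upstream_sources=["payments_gateway.raw_events"],
    last_refreshed=date(2025, 1, 10),
    quality_status="certified",
)
print(entry.describe())
```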

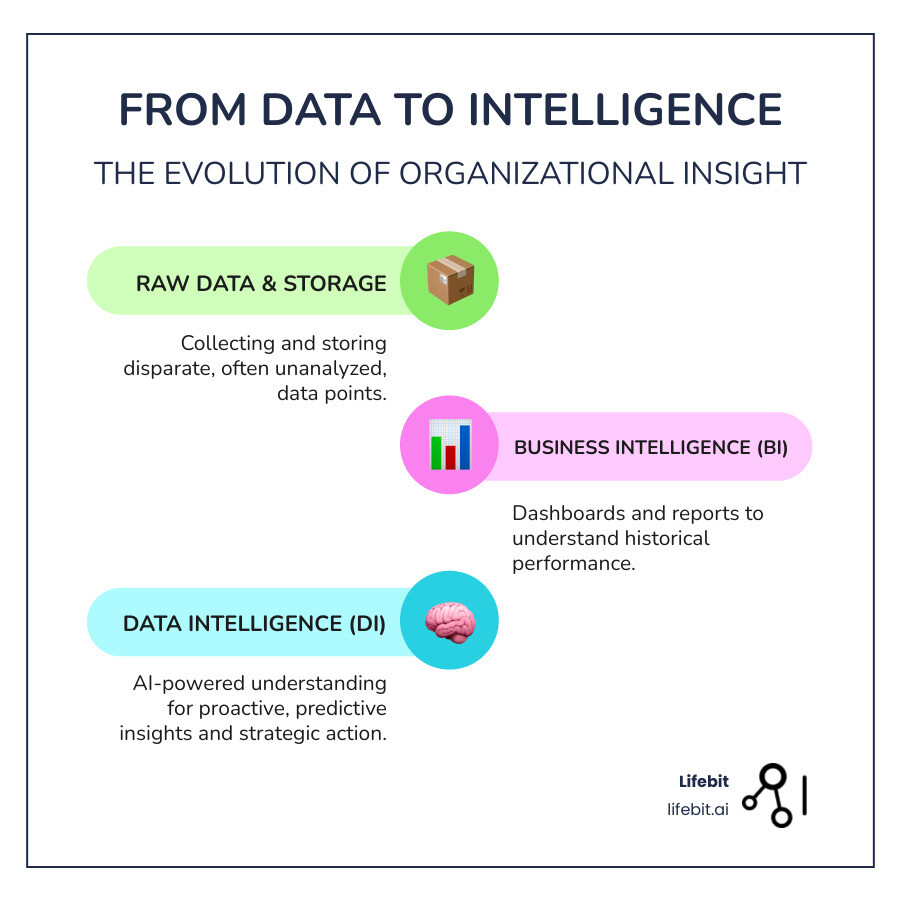

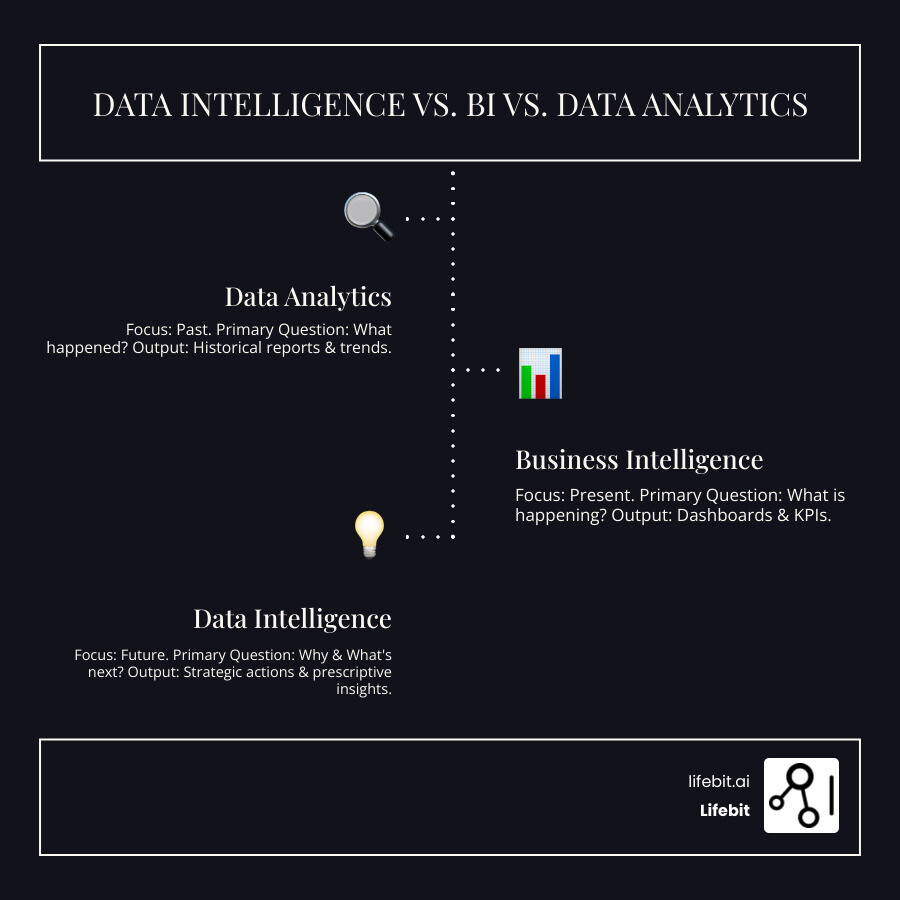

Data Intelligence vs. Business Intelligence vs. Data Analytics

While often used interchangeably, data intelligence, business intelligence (BI), and data analytics represent distinct and evolving stages in the journey to derive value from data. Understanding their differences is key to building a mature data strategy. If your data journey were a road trip, data analytics is the rearview mirror (telling you what happened), BI is the dashboard (showing you what’s happening now), and data intelligence is the AI-powered GPS with real-time traffic data, suggesting the best route forward and why.

Data Analytics: Looking at the Past

Data analytics is the foundational discipline focused on inspecting, cleaning, transforming, and modeling historical data to find useful information and support conclusions. It is fundamentally retrospective.

- Primary Focus: Understanding what happened and why it happened.

- Types of Analysis: It primarily involves descriptive analysis, which summarizes past data into digestible reports (e.g., “Total sales increased 10% last quarter”), and diagnostic analysis, which drills down to understand the root causes (e.g., “The increase was driven by a 30% sales lift from our new marketing campaign”).

- Common Tools: Analysts typically use SQL to query databases, scripting languages like Python or R for complex analysis, and spreadsheets like Excel for smaller-scale tasks.

- Limitations: Data analytics is often labor-intensive, requires specialized skills, and provides a view of the past. While essential, it doesn’t inherently tell you what to do next.

Business Intelligence: Understanding the Present

Business intelligence (BI) builds on data analytics to provide ongoing, operational awareness of current business performance. It’s about monitoring the here and now.

- Primary Focus: Answering what is happening now and monitoring key performance indicators (KPIs).

- Output: BI is characterized by dashboards, scorecards, and automated reports that provide a real-time or near-real-time snapshot of business health. Examples include a hospital dashboard showing current bed occupancy rates or a sales dashboard tracking daily progress toward a quarterly target.

- Common Tools: Leading BI platforms include Tableau, Microsoft Power BI, and Qlik, which excel at data visualization.

- Limitations: While powerful for operational management, traditional BI systems are often rigid. They rely on pre-built dashboards and pre-defined queries. They can show you that a KPI is red, but often lack the deep contextual data lineage to explain why or the predictive power to suggest a course correction. Democratizing access to these insights is crucial, a topic we cover in our guide to Health Data Analysis Platforms for All.

Data Intelligence: Shaping the Future

Data intelligence represents a paradigm shift from reactive monitoring to proactive, forward-looking decision-making. It integrates the historical context of analytics and the real-time awareness of BI but adds a powerful layer of AI to guide future actions.

- Primary Focus: Answering what will happen next (predictive) and what is the best course of action (prescriptive).

- Key Capabilities: It leverages AI to provide predictive and prescriptive recommendations. It doesn’t just forecast what might happen; it suggests what you should do about it. For example, a data intelligence system in life sciences might analyze genomic patterns and clinical data to not only predict which patient cohorts will respond best to a new therapy but also recommend prioritizing a specific drug compound for a rare disease based on its high probability of success.

- How it Works: By deeply understanding the context, quality, and lineage of data, a data intelligence platform can run simulations, forecast outcomes, and surface opportunities that would otherwise be missed. It uncovers the “why” and “how” behind complex events, transforming organizations from problem-responders into opportunity-seekers.

Instead of just reacting to market changes, you can anticipate them. Instead of just fixing data errors, you can prevent them. Data intelligence is the crucial bridge between raw data and informed, strategic action that drives competitive advantage.

The Core Components of a Modern Data Intelligence Framework

Effective data intelligence isn’t the result of a single tool but rather an ecosystem of interconnected components working in concert. A successful framework is built on four pillars that work together to transform chaotic, siloed data into a trustworthy, actionable, and governed enterprise asset.

Pillar 1: Data Catalog and Discovery

A data catalog acts as your organization’s intelligent master librarian, solving the pervasive problem of scattered, poorly documented, and hard-to-find information. It doesn’t move the data; instead, it creates a centralized, searchable inventory of all your data assets by using AI to automatically connect to your systems and harvest their metadata. This process enriches the metadata with business context, usage patterns, and quality scores, enabling data democratization. Any user, from a data scientist to a business analyst, can quickly find relevant and trustworthy datasets through a simple, natural language search interface. A key component is the Business Glossary, which links cryptic technical table names (e.g., FSCL_Q3_RPT) to clear business terms (“Q3 Financial Summary Report”), bridging the communication gap between IT and the business. Modern data architectures are key to this, as explored in our guide on What is a Data Lakehouse?.

Pillar 2: Data Lineage and Impact Analysis

Data lineage provides a transparent, end-to-end map of a data element’s journey through your systems. It shows where the data came from, every change it underwent, and all the places it’s used downstream. This source-to-target mapping, often visualized at the column level, is the foundation of data trust. If a business user questions a number on a report, lineage allows an analyst to trace it back to its source in seconds, not days, to validate its calculation. Furthermore, it enables powerful impact analysis. For example, if a data engineering team plans to deprecate a column in a source database, lineage can instantly identify every report, dashboard, and ML model that depends on that column. This prevents unexpected breakages, builds trust in data, and dramatically reduces risk. One insurance company used this capability to accelerate its impact analysis from hours of manual work to under a minute of automated discovery.
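Impact analysis of this kind is essentially a graph traversal. The sketch below assumes lineage is stored as a simple mapping from each asset to the assets it feeds, and uses a breadth-first search to list everything downstream of a column that is about to be deprecated. The asset names and graph structure are hypothetical.

```python
from collections import deque

# Hypothetical lineage graph: each key feeds the assets listed in its value.
LINEAGE = {
    "crm.customers.region": ["staging.customers.region"],
    "staging.customers.region": ["report.sales_by_region", "ml.churn_model"],
    "report.sales_by_region": ["dashboard.exec_summary"],
    "ml.churn_model": [],
    "dashboard.exec_summary": [],
}


def downstream_assets(graph, start):
    """Breadth-first search for every asset that depends on `start`."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


impacted = downstream_assets(LINEAGE, "crm.customers.region")
for asset in impacted:
    print("would be affected:", asset)
```

Tracking visited nodes in a set keeps the traversal safe even if the lineage graph contains cycles.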

Pillar 3: Data Quality and Observability

Poor data quality is a silent killer of digital transformation, costing organizations an average of $12.9 million annually. This pillar addresses the issue head-on by moving from reactive data cleaning to proactive data health management. It starts with data profiling, which automatically scans datasets to identify their statistical characteristics and potential issues. This feeds into data quality scores based on several key dimensions:

- Accuracy: Does the data reflect the real-world entity it describes?

- Completeness: Are there missing values where there shouldn’t be?

- Consistency: Is the data free of contradictions across different systems?

- Timeliness: Is the data up-to-date enough for its intended use?

- Validity: Does the data conform to the required format and business rules?

- Uniqueness: Are there duplicate records that could skew analysis?
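Several of these dimensions reduce to simple ratios. The sketch below scores completeness, validity, and uniqueness for a small set of records; the sample data, the email pattern, and the function names are illustrative assumptions. Accuracy, consistency, and timeliness are left out because they need external reference data.

```python
import re

# Hypothetical contact records (illustrative values only).
records = [
    {"id": 1, "email": "ana@example.org"},
    {"id": 2, "email": None},
    {"id": 3, "email": "not-an-email"},
    {"id": 3, "email": "li@example.org"},
]

# Deliberately loose format check, used here as a stand-in business rule.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def validity(rows, field, pattern):
    """Share of non-missing values that match the expected format."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    if not values:
        return 0.0
    return sum(1 for v in values if pattern.match(v)) / len(values)


def uniqueness(rows, key):
    """Ratio of distinct key values to total rows (1.0 means no duplicates)."""
    keys = [r[key] for r in rows]
    return len(set(keys)) / len(keys)


scores = {
    "completeness": completeness(records, "email"),
    "validity": validity(records, "email", EMAIL_PATTERN),
    "uniqueness": uniqueness(records, "id"),
}
for dimension, score in scores.items():
    print(f"{dimension}: {score:.2f}")
```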

Building on this is Data Observability, which applies principles from DevOps to data pipelines. It provides proactive monitoring for “data downtime” by watching for anomalies in data freshness, volume, schema, and distribution, alerting you to issues before they impact downstream business decisions.
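As a simplified illustration of two of these signals, the following sketch checks freshness and volume for a single table load. The 24-hour age limit, the 50% volume tolerance, and all names are assumptions chosen for the example; real observability tools usually learn such thresholds from history.

```python
from datetime import datetime, timedelta


def check_pipeline(last_loaded, row_count, recent_row_counts, now,
                   max_age=timedelta(hours=24), volume_tolerance=0.5):
    """Return a list of alert strings for stale or unusually sized loads."""
    alerts = []
    # Freshness: has the table been updated recently enough?
    if now - last_loaded > max_age:
        alerts.append("freshness: data is older than the allowed maximum age")
    # Volume: is the latest row count far from the recent average?
    baseline = sum(recent_row_counts) / len(recent_row_counts)
    if abs(row_count - baseline) > volume_tolerance * baseline:
        alerts.append("volume: row count deviates from the recent average")
    return alerts


now = datetime(2025, 1, 10, 9, 0)
alerts = check_pipeline(
    last_loaded=datetime(2025, 1, 8, 23, 0),  # 34 hours before `now`
    row_count=1_200,
    recent_row_counts=[10_100, 9_800, 10_300],
    now=now,
)
for alert in alerts:
    print(alert)
```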

Pillar 4: AI-Enabled Governance and Security

Traditional data governance is often a slow, manual, and bureaucratic process that hinders agility. AI transforms governance into an intelligent, automated, and scalable function that enables access rather than blocking it. Automated policy enforcement applies rules consistently across the enterprise without human intervention. For example, a policy can be set to automatically identify and mask any column tagged as Personally Identifiable Information (PII) for all users except those with a specific ‘HR-Auditor’ role. Intelligent access controls can manage permissions dynamically based on user roles, data sensitivity classifications, and project needs, ensuring compliance with complex regulations like GDPR and HIPAA. In biomedical research, where data security and patient privacy are paramount, automated governance is not just a nice-to-have; it’s a requirement for ensuring data is handled safely, ethically, and in full compliance with the law. Learn more about our approach in AI-Enabled Data Governance and What is a Secure Data Environment (SDE)?.
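The PII masking policy described above can be sketched in a few lines. This example assumes columns carry tags in a catalog-style dictionary and that a user’s roles arrive as a list; the column names and the `apply_masking` function are hypothetical, while the ‘HR-Auditor’ role comes from the example in the text.

```python
# Hypothetical column tags, as a data catalog might store them.
COLUMN_TAGS = {
    "employee_id": set(),
    "full_name": {"PII"},
    "national_id": {"PII"},
    "department": set(),
}

UNMASKED_ROLES = {"HR-Auditor"}  # role name taken from the example above


def apply_masking(row, user_roles, tags=COLUMN_TAGS, mask="***"):
    """Return a copy of `row` with PII-tagged columns masked for unauthorized users."""
    if UNMASKED_ROLES & set(user_roles):
        return dict(row)
    return {
        col: (mask if "PII" in tags.get(col, set()) else value)
        for col, value in row.items()
    }


row = {"employee_id": 17, "full_name": "Jane Doe", "national_id": "X123", "department": "R&D"}
analyst_view = apply_masking(row, ["Analyst"])
auditor_view = apply_masking(row, ["HR-Auditor"])
print(analyst_view)
print(auditor_view)
```

Note that this sketch passes untagged columns through unmasked. A stricter policy might mask any column the catalog has not yet classified.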

Unlocking Business Value: Key Benefits and Use Cases

Implementing data intelligence fundamentally changes how an organization operates, driving tangible business value. Key benefits include:

- Increased productivity as teams access data via natural language.

- Accelerated innovation from spotting trends in real time.

- Significant cost savings by eliminating errors from poor data quality.

- Improved risk management by identifying potential issues early.

- Sustainable revenue growth from a deeper understanding of customers and markets.

These benefits become even more transformative when applied to specific industries.

Use Case: Revolutionizing Healthcare and Life Sciences

In healthcare, where data complexity presents enormous challenges and opportunities, data intelligence has a dramatic impact. One pharmaceutical giant reduced data mapping costs by 60% by applying these principles.

- AI-Driven Drug Discovery: AI identifies potential drug candidates in days instead of months, optimizing the R&D pipeline.

- Precision Medicine: Integrating multi-omic data (genomics, clinical records) enables treatment plans tailored to a patient’s unique biology.

- Real-World Data Analysis: Tracking how treatments perform across diverse populations helps optimize protocols based on actual outcomes. For more, see our work on Target Identification with Real-World Evidence.

Use Case: Transforming Financial Services

For financial services, data intelligence provides a critical competitive advantage. Applications include:

- Algorithmic Trading: AI analyzes market data and executes trades with superhuman speed and precision.

- Fraud Detection: Advanced systems identify suspicious patterns invisible to traditional rule-based methods.

- Credit Risk Assessment: AI provides more accurate and fair risk models by analyzing hundreds of data points.

- Personalized Customer Experiences: Sophisticated segmentation allows for highly personalized services that build loyalty.

Use Case: Optimizing Manufacturing and Retail

In manufacturing and retail, data intelligence transforms physical operations and customer relationships. A retail company cut analysis time by 30% while improving engagement through AI.

- Supply Chain Optimization: AI predicts bottlenecks and optimizes delivery routes to prevent disruptions.

- Predictive Maintenance: Sensor data is analyzed to schedule maintenance before equipment fails, reducing unplanned downtime.

- Demand Forecasting: AI accurately predicts demand by analyzing historical sales, weather patterns, and social media trends.

- Personalized Marketing: AI-powered recommendations and targeted campaigns drive customer engagement.

Implementation: Overcoming Challenges and Ensuring Success

A data intelligence journey is transformative, but it’s not without its challenges. The good news is that a modern data intelligence platform is designed to solve the very problems that have historically hindered data initiatives, turning roadblocks into building blocks for success.

Common Challenges Data Intelligence Solves

Organizations often wrestle with a set of persistent data problems that data intelligence is uniquely equipped to resolve:

- Data Silos: A staggering 82% of enterprises experience data silos, which prevent a holistic view of the business. Data intelligence breaks down these barriers not by physically moving all the data, but by creating a unified metadata layer—a virtual data fabric—that provides a single, contextual view across all sources.

- Inconsistent Data Quality: Poor data quality erodes trust and leads to flawed decisions. Data intelligence tackles this with automated profiling, quality scoring, and continuous observability. This creates a feedback loop that helps data producers see the impact of their data quality and improve it at the source.

- Lack of Data Trust: When the origin and changes of data are unclear, users lose confidence in it. Data intelligence builds trust through transparent, end-to-end data lineage and robust, automated governance, making the data’s journey auditable and understandable.

- Regulatory and Compliance Risks: Navigating a complex web of regulations like GDPR and HIPAA is a major challenge. Data intelligence automates data classification (e.g., identifying PII), access controls, and policy enforcement, embedding compliance into the data workflow and reducing risk.

Key Considerations for a Successful Implementation

Success requires a holistic approach that extends beyond just implementing new technology. It’s about fostering a new relationship with data across the organization.

- Foster a Data-Driven Culture: This is the most critical element. Leadership must champion the value of data as a strategic asset. This involves encouraging curiosity, promoting evidence-based decision-making over gut feelings, and celebrating data-driven wins to build momentum. It means creating a culture where asking “What does the data say?” becomes a natural reflex for everyone.

- Define Clear Business Objectives: Don’t boil the ocean. Start with a well-defined, high-impact business problem. By focusing on a specific use case—like reducing customer churn or optimizing a supply chain process—you can deliver tangible ROI quickly. This initial success will serve as a powerful proof point and build the business case for broader adoption.

- Ensure Scalability and Flexibility: Your data landscape will only grow in volume and complexity. Choose a cloud-native solution built on a flexible, scalable architecture. The platform must be able to handle diverse data types (structured, semi-structured, unstructured) and integrate seamlessly with your existing and future technology stack.

- Invest in Data Literacy: A powerful tool is useless if no one knows how to use it. Invest in continuous training and enablement programs tailored to different user personas. Business users need to learn how to ask the right questions, analysts need to master the advanced tools, and executives need to understand how to interpret insights for strategic planning. Empowering your workforce is key to unlocking the full value of your investment.

The Rise of Federated Approaches for Sensitive Data

For organizations dealing with highly sensitive or sovereign data, such as in biomedical research or global finance, moving data to a central location is often not feasible or permissible. A federated approach is the indispensable solution. Federated Data Analysis enables secure collaboration by bringing the analysis to the data, rather than the other way around. This “computation-to-data” model allows algorithms to be executed securely across multiple distributed datasets without the raw data ever leaving its protected environment. For example, a researcher could train a machine learning model to predict disease risk across patient data from ten different hospitals globally, without any of the hospitals having to share their sensitive patient records. This approach minimizes security risks, protects patient privacy, and simplifies compliance with data protection regulations like GDPR. It unlocks unprecedented insights from previously isolated datasets, enabling large-scale studies that were once impossible.

Our platform exemplifies this, providing a federated AI platform for secure access to global biomedical data. It combines a Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. analytics layer to power compliant research and real-time pharmacovigilance. Explore the Benefits of Federated Data Lakehouse in Life Sciences to learn more.
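The “computation-to-data” idea can be illustrated with a deliberately simple case: computing a global mean across sites when only aggregate statistics leave each site. This is a toy sketch under stated assumptions, not a description of how any particular platform works; the site names, values, and function names are hypothetical.

```python
# Hypothetical per-hospital measurements. In practice each list would stay
# inside its own protected environment and never reach the coordinator.
SITE_DATA = {
    "hospital_a": [5.1, 6.3, 5.8],
    "hospital_b": [7.0, 6.6],
    "hospital_c": [5.5, 5.9, 6.1, 6.4],
}


def local_summary(values):
    """Runs at the data site: returns only aggregate statistics."""
    return {"n": len(values), "total": sum(values)}


def federated_mean(summaries):
    """Runs at the coordinator: combines aggregates into a global mean."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["total"] for s in summaries)
    return total / n


# Only the summaries cross site boundaries, never the raw records.
summaries = [local_summary(values) for values in SITE_DATA.values()]
global_mean = federated_mean(summaries)
print(f"global mean across {len(summaries)} sites: {global_mean:.3f}")
```

The result matches what pooling all records would give, yet no individual measurement is shared. Real deployments typically add further safeguards, such as minimum cohort sizes, because very small aggregates can still reveal information about individuals.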

Frequently Asked Questions about Data Intelligence

As organizations adopt data intelligence, several common questions arise. Here are insights based on my experience.

How long does it take to see ROI from data intelligence?

The timeline varies, but meaningful results can appear faster than expected, often within the first year. A non-profit we worked with saw tangible benefits in twelve months. ROI typically manifests in a few key ways:

- Cost Savings: Automating data quality and streamlining discovery immediately reduces operational costs. An energy company identified over €2 million in business impact in 24 months.

- Improved Decision-Making: Faster access to trusted insights leads to better, more confident decisions. An insurance company cut its impact analysis time from hours to under a minute.

- Increased Productivity: When non-technical users can access data through natural language, efficiency soars across the organization.

Is data intelligence only for large enterprises?

No. While large enterprises have been early adopters, the technology is now accessible to organizations of all sizes. Scalable, cloud-native platforms offer a pay-as-you-go model, making sophisticated tools cost-effective for smaller teams. The benefits—better data quality, improved decisions, and competitive advantage—are universal. Data intelligence democratizes data access, allowing a startup to leverage the same powerful insights as a Fortune 500 company. The focus should always be on value creation, not company size.

What is a data fabric and how does it relate to data intelligence?

A data fabric is an architectural approach that acts as the foundation for data intelligence in complex, distributed environments. It weaves together disparate data sources—from on-premise databases to cloud applications—into a single, unified, and accessible layer. It automates the integration, preparation, and governance of data assets, hiding complexity from the end-user.

Data intelligence platforms leverage this fabric to access and understand data across the entire enterprise. The fabric provides the robust, governed foundation, while the intelligence layer adds the AI and machine learning to derive deep, semantic insights. In our federated approach at Lifebit, this concept enables secure collaboration on distributed biomedical data without physically moving it, unlocking powerful collective intelligence.

Conclusion: The Future is Intelligent

We’ve reached a point where data intelligence is no longer a luxury but a strategic necessity. While traditional analytics and BI look at the past and present, data intelligence helps us shape the future. By unifying AI with robust governance, we create systems that understand data’s context and potential, enabling the proactive decision-making that defines industry leaders.

Across industries, this shift is driving tangible results, from accelerating drug discovery in healthcare to optimizing supply chains in manufacturing. The democratization of these tools means that sophisticated, AI-driven insights are now available to everyone in an organization, not just data scientists.

At Lifebit, we see this transformation firsthand, especially in complex fields like biomedical research. Our federated AI platform enables organizations to unlock insights from sensitive, distributed data while upholding the highest security and compliance standards.

The future belongs to organizations that turn data into a strategic advantage. Those who embrace data intelligence will be best positioned to innovate, compete, and thrive.

Ready to see what’s possible when your data becomes truly intelligent? Explore a next-generation Data Intelligence Platform and transform how your organization uses its most valuable resource.