Lakehouse Living: Blending Data Lakes and Warehouses

Data Lakehouse: Ultimate 2025 Guide

Why Data Lakehouses Are Changing Modern Analytics

A data lakehouse is a unified data architecture combining the flexibility of data lakes with the reliability of data warehouses. It’s a single platform for all data types that provides transaction support, schema enforcement, and governance. This eliminates the need for separate systems, reducing complexity and enabling both business intelligence (BI) and artificial intelligence (AI) workloads from one data source.

The modern data landscape is challenging. With global data creation projected to reach 463 exabytes per day by 2025, organizations struggle to gain timely insights. Traditional data warehouses are expensive and inflexible, while data lakes often suffer from poor governance, becoming “data swamps.”

This leads to a “two-tier data architecture,” where data is duplicated between lakes and warehouses, causing data staleness, security risks, and high operational costs. Research shows that “86% of analysts use out-of-date data,” highlighting the failure of current systems to meet business needs.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We use data lakehouse architectures to enable secure, federated analysis of complex biomedical data for pharmaceutical and public sector clients. My experience has shown me how the right architecture is transformative for generating insights from diverse data.

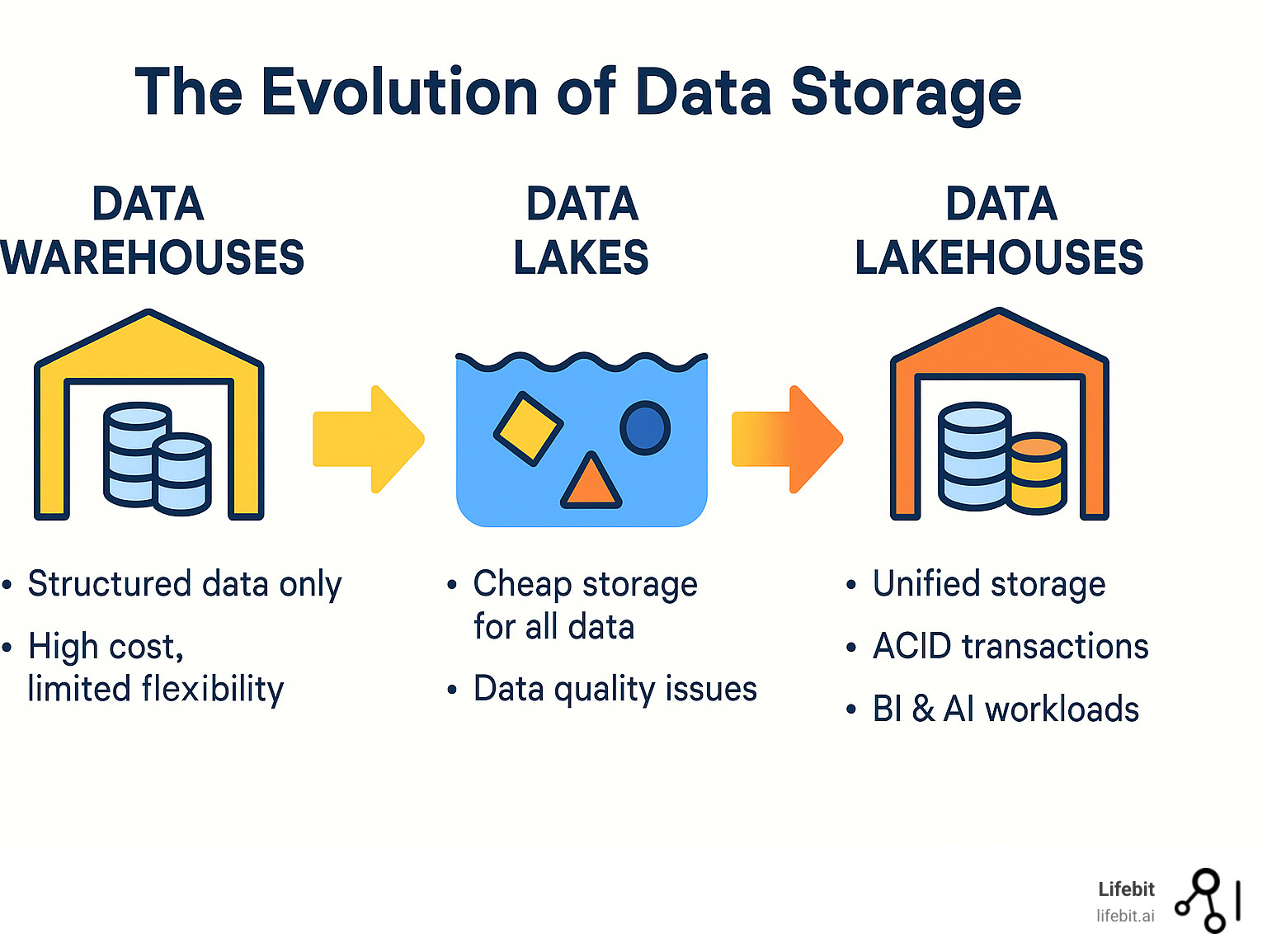

The Architectural Evolution: Warehouses, Lakes, and Their Limits

For decades, data architecture has been dominated by two approaches: the data warehouse and the data lake. Each offered distinct advantages but also came with significant trade-offs, leading to the hybrid model we see today.

| Feature | Data Warehouse | Data Lake | Data Lakehouse |
|---|---|---|---|
| Data Type | Structured | All (Structured, Semi-structured, Unstructured) | All (Structured, Semi-structured, Unstructured) |
| Schema | Schema-on-write (predefined) | Schema-on-read (flexible) | Schema-on-read with enforced schema-on-write capabilities |
| Primary Users | Business Analysts, BI Professionals | Data Scientists, ML Engineers, Data Engineers | All data professionals (BI, ML, Data Science, Data Engineering) |
| Use Cases | BI, Reporting, Historical Analysis | ML, AI, Exploratory Analytics, Raw Data Storage | BI, ML, AI, Real-time Analytics, Data Engineering, Reporting |
| Cost | High (proprietary software, structured storage) | Low (cheap object storage) | Moderate (cheap object storage with added management layer) |

The Traditional Data Warehouse

Data warehouses have long been the standard for business intelligence. They are highly organized repositories using a “schema-on-write” approach, where data is cleaned, transformed, and structured into predefined tables before being stored. This strict organization ensures high reliability and a single source of truth for SQL analytics and reporting, delivering fast query performance for known questions.

However, this rigidity is also their primary weakness in the modern era. Warehouses are expensive, often relying on proprietary hardware and software with high licensing costs. Their schema-on-write model makes it difficult and time-consuming to incorporate new data sources or change existing structures. Most critically, they are ill-equipped to handle the explosion of unstructured data (like video, audio, free-form text, and social media feeds) and semi-structured data (like JSON logs). This makes them a poor fit for many advanced Machine Learning applications, which thrive on raw, diverse datasets.

The Rise of the Data Lake

Data lakes emerged to address the limitations of warehouses, particularly regarding data variety and volume. Using a “schema-on-read” approach, they store vast quantities of raw data in its native format on low-cost object storage like Amazon S3 or Azure Blob Storage. This flexibility is ideal for data science and exploratory analytics, allowing teams to access diverse datasets for AI model development without being constrained by a predefined schema.
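
To make the contrast between the two approaches concrete, here is a minimal Python sketch using only the standard library. The orders schema, the function names, and the sample records are hypothetical; a real warehouse enforces its schema inside the database engine, and a real lake stores files on object storage rather than strings in a list.

```python
import json

# Hypothetical warehouse schema for an "orders" table: column -> type.
ORDERS_SCHEMA = {"order_id": int, "amount": float}


def write_to_warehouse(table: list, record: dict) -> None:
    """Schema-on-write: conform each record to the schema before storing it."""
    if set(record) != set(ORDERS_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match {sorted(ORDERS_SCHEMA)}")
    # Coerce values to the declared types; a bad value raises here, at write time.
    table.append({col: typ(record[col]) for col, typ in ORDERS_SCHEMA.items()})


def write_to_lake(store: list, raw_line: str) -> None:
    """Schema-on-read: keep the raw payload exactly as it arrived."""
    store.append(raw_line)


def read_from_lake(store: list, columns: list) -> list:
    """Impose a schema only at query time; absent fields come back as None."""
    rows = []
    for line in store:
        doc = json.loads(line)
        rows.append({col: doc.get(col) for col in columns})
    return rows


warehouse, lake = [], []
write_to_warehouse(warehouse, {"order_id": "7", "amount": "19.5"})  # coerced to int/float

try:
    # A new "coupon" field breaks the predefined schema, so the warehouse rejects it...
    write_to_warehouse(warehouse, {"order_id": 8, "amount": 5.0, "coupon": "SPRING"})
except ValueError as err:
    print("rejected:", err)

# ...while the lake stores both shapes and lets each query decide what to read.
write_to_lake(lake, '{"order_id": 8, "amount": 5.0, "coupon": "SPRING"}')
write_to_lake(lake, '{"order_id": 9}')
print(read_from_lake(lake, ["order_id", "coupon"]))
# [{'order_id': 8, 'coupon': 'SPRING'}, {'order_id': 9, 'coupon': None}]
```

The warehouse pays the cost of structure up front and rejects anything new; the lake accepts everything and defers interpretation, which is exactly where quality problems can creep in.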

The main drawback is a lack of transactions and governance, which often leads to poor data quality and reliability issues. Without ACID transactions, concurrent data writes and reads can lead to inconsistent or corrupted results. This lack of control frequently turns promising data lakes into unusable “data swamps”—disorganized repositories filled with untraceable, untrustworthy, and redundant data. Consequently, ensuring data consistency and security becomes a major challenge, and query performance for BI tools can be slow because engines must scan large amounts of unindexed data.
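
The consistency problem is easy to reproduce in miniature. The sketch below is a toy model with hypothetical names: a bucket is a Python dict of part files, and a reader lists it while a writer is still adding files. With no transaction log, the reader sees half a batch.

```python
from typing import Iterator

# Toy object-store "directory": file name -> rows. There is no transaction log,
# so a reader's view of the table is simply whatever files are listed right now.
bucket: dict = {}


def naive_write(batch_id: int, parts: list) -> Iterator[None]:
    """Write one logical batch as several part files, one at a time.

    The generator pauses after each file so a reader can be interleaved deterministically.
    """
    for i, rows in enumerate(parts):
        bucket[f"batch{batch_id}-part{i}.parquet"] = rows
        yield


def read_table() -> list:
    """List-and-scan reader: returns rows from every file currently present."""
    return [row for name in sorted(bucket) for row in bucket[name]]


writer = naive_write(1, [[1, 2], [3, 4], [5, 6]])
next(writer)                   # the first part file lands...
mid_write_view = read_table()  # ...and a concurrent reader sees only part of batch 1
for _ in writer:               # the writer finishes the remaining files
    pass
final_view = read_table()

print(mid_write_view)  # [1, 2]
print(final_view)      # [1, 2, 3, 4, 5, 6]
```

If the writer had crashed after its first file, that partial batch would stay visible indefinitely. This is the failure mode a transactional layer is designed to prevent.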

The Problem with the Two-Tier System

To get the best of both worlds, many organizations adopted a two-tier system: a data lake for ingesting and storing raw data, and a separate data warehouse for structured analytics and BI. While logical in theory, this approach created a new set of significant problems:

- Data duplication and increased complexity: The same data exists in at least two places, increasing storage costs and the overhead of managing two distinct, complex systems.

- Fragile ETL pipelines: Constant, resource-intensive ETL (Extract, Transform, Load) jobs are required to move and transform data from the lake to the warehouse. These pipelines are often brittle, prone to failure, and require significant engineering effort to maintain.

- Data staleness: By the time data is moved to the warehouse, it is no longer real-time. Analysts and decision-makers often work with information that is hours or even days old, hindering timely insights.

- Higher operational costs and security challenges: Managing two systems doubles the operational burden and creates a larger surface area for security vulnerabilities. Governance policies must be implemented and synchronized across both platforms.

This inefficient, costly, and slow model created a clear need for a unified architecture that combines the strengths of both systems without the drawbacks: the data lakehouse.

What is a Data Lakehouse? A Unified Foundation for Data

A data lakehouse is a modern data architecture that eliminates the need to choose between a data lake and a data warehouse. It creates a unified platform by implementing robust data management and transaction features directly on top of the low-cost object storage used by data lakes.

This approach creates a single source of truth for all data—structured, semi-structured, and unstructured—managed with the reliability and governance of a traditional warehouse. It combines the cost-effectiveness of cloud storage with sophisticated features like transactional capabilities and schema enforcement.

Key Benefits of a Data Lakehouse

Adopting a data lakehouse architecture provides several immediate advantages:

- Reduced complexity: A single, unified platform replaces multiple, siloed systems, simplifying data management and eliminating complex data movement pipelines.

- Cost efficiency: Leveraging inexpensive cloud object storage and eliminating data duplication across systems significantly lowers storage and operational costs.

- Support for diverse workloads: Business analysts and data scientists can run BI, AI, and machine learning workloads on the same up-to-date data, accelerating insights. This is especially powerful in fields like life sciences, as explored in our article on the benefits of a federated data lakehouse.

- Improved data freshness: Direct access to data eliminates the delays caused by ETL processes, making real-time analytics possible and ensuring decisions are based on current information.

- Simplified data governance: A single architecture allows for consistent implementation of access controls, data quality rules, and compliance policies across all data assets.

Core Principles of the Architecture

The data lakehouse is built on several key principles:

- Decoupling storage and compute: Data is stored in low-cost object storage, while compute resources can be scaled independently, optimizing costs by paying only for what you use.

- Openness and standardization: It relies on open data and table formats (e.g., Parquet, Delta Lake, Apache Iceberg) to prevent vendor lock-in and ensure data portability.

- Transaction support: ACID transactions bring database-level reliability to the data lake, ensuring data consistency for concurrent operations.

- Schema enforcement and governance: It balances flexibility with quality by allowing schema enforcement and evolution, providing structure when needed without sacrificing adaptability.

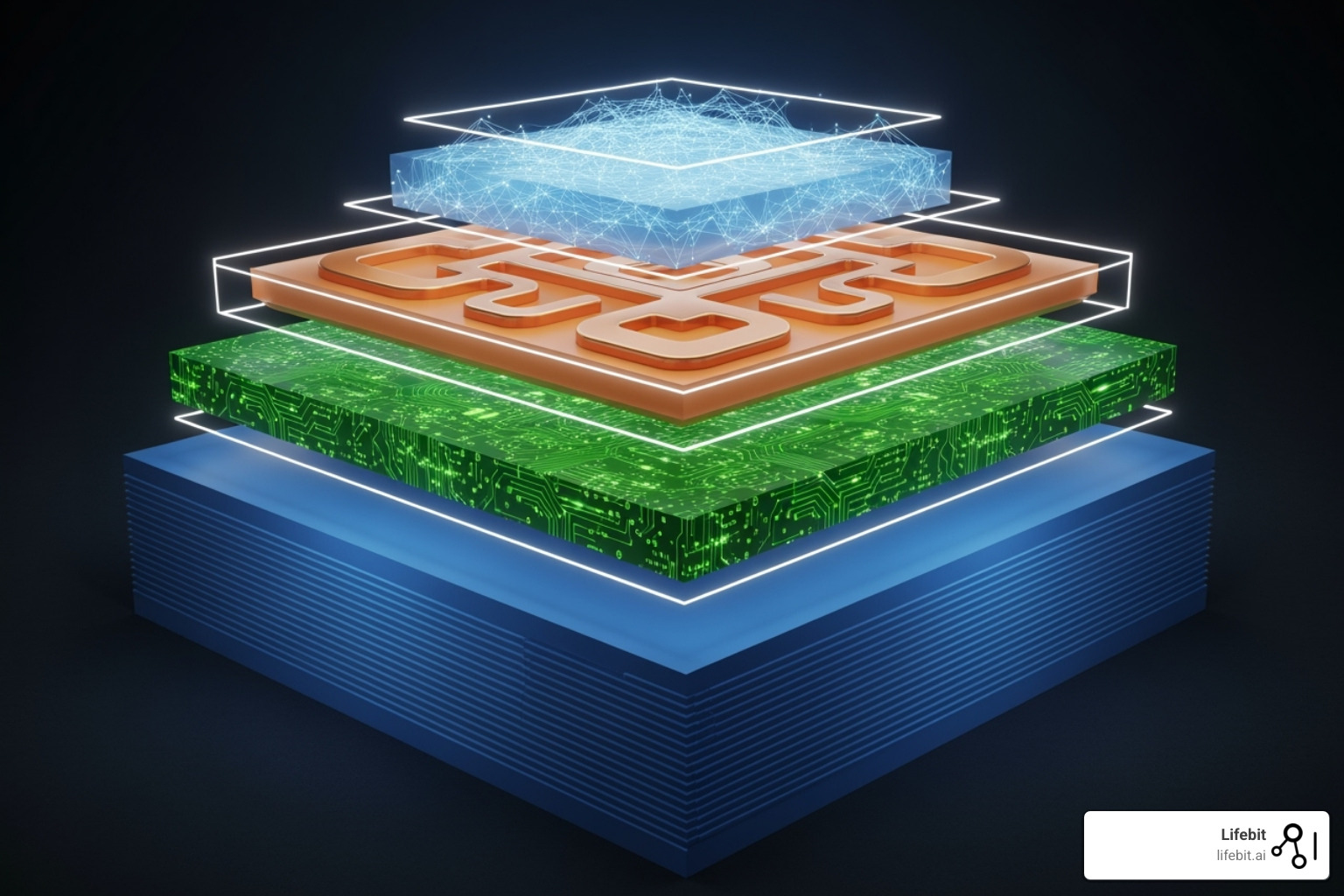

Under the Hood: Key Technologies and Architectural Layers

The data lakehouse is enabled by specific technologies organized into three interconnected layers. These layers work together to combine the scale of a data lake with the reliability of a data warehouse.

A data lakehouse architecture typically consists of the following layers:

The Foundational Storage Layer

The foundation is cloud object storage (e.g., Amazon S3, Azure Data Lake Storage, Google Cloud Storage), which offers virtually unlimited, durable, and highly cost-effective storage for all data types. A core principle of the lakehouse is to avoid proprietary data formats. Instead, data is stored in open data formats like Parquet, Avro, and ORC. Columnar formats like Parquet are particularly crucial, as they store data by column rather than by row. This structure is highly optimized for analytics, as queries only need to read the specific columns required, dramatically reducing I/O and improving performance. It also enables better compression, further reducing storage costs.
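
The benefit of a columnar layout can be shown with a toy model that counts how many values a query touches. This is a sketch under simplifying assumptions: it counts values rather than bytes, keeps everything in memory, and uses a simple run-length encoder to hint at why columns compress well. Parquet's actual encodings (such as dictionary and run-length encoding) and file layout are considerably more sophisticated.

```python
from itertools import groupby

# Three records with one wide column ("notes"), standing in for a real table.
rows = [
    {"patient_id": 1, "country": "UK", "age": 54, "notes": "x" * 100},
    {"patient_id": 2, "country": "UK", "age": 61, "notes": "y" * 100},
    {"patient_id": 3, "country": "US", "age": 47, "notes": "z" * 100},
]
COLUMNS = list(rows[0])

# Row-oriented layout: all values of one record are stored together.
row_store = [[r[c] for c in COLUMNS] for r in rows]

# Column-oriented layout: all values of one column are stored together.
column_store = {c: [r[c] for r in rows] for c in COLUMNS}


def avg_age_from_rows():
    """Scanning a row layout touches every value of every record."""
    age_idx = COLUMNS.index("age")
    values_read = sum(len(record) for record in row_store)
    return sum(record[age_idx] for record in row_store) / len(row_store), values_read


def avg_age_from_columns():
    """A columnar scan reads only the column the query needs."""
    ages = column_store["age"]
    return sum(ages) / len(ages), len(ages)


def run_length_encode(values):
    """Adjacent repeats (common within a column) collapse into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


print(avg_age_from_rows())     # (54.0, 12)
print(avg_age_from_columns())  # (54.0, 3)
print(run_length_encode(column_store["country"]))  # [('UK', 2), ('US', 1)]
```

Both layouts return the same answer, but the columnar scan reads a quarter of the values here, and the gap grows with the number and width of columns a query ignores.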

The Transactional Metadata Layer

This is the layer that transforms a data lake into a reliable, transaction-safe repository. It is a metadata layer that sits on top of the raw data files in object storage and provides critical data management features previously exclusive to warehouses.

- ACID transactions: Guarantees that operations (like inserts, updates, and deletes) are atomic, consistent, isolated, and durable, ensuring data integrity even with many concurrent users.

- Time travel (data versioning): Captures every change to the data, allowing you to query previous versions of a table. This is essential for auditing, debugging failed data pipelines, and reproducing machine learning experiments.

- Schema enforcement and evolution: Enforces data quality rules by ensuring new data conforms to a table’s schema. It also allows the schema to evolve over time (e.g., adding new columns) without breaking existing pipelines.

This functionality is powered by open table formats. The three most prominent are Delta Lake, Apache Iceberg, and Apache Hudi.
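
The sketch below is a simplified, hypothetical model of how a metadata layer can provide the three features listed above. Data files are written first but stay invisible until a single append to a commit log publishes them; older versions are read by replaying a prefix of the log; and each batch is validated against the schema before it is committed. It is loosely inspired by open table formats but is not the Delta Lake, Iceberg, or Hudi protocol, and it models appends only.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyTable:
    """Toy transactional table: immutable data files plus an append-only commit log.

    Loosely inspired by open table formats; NOT the Delta, Iceberg, or Hudi protocol.
    Only appends are modeled (no updates, deletes, or concurrent-writer conflicts).
    """
    schema: dict                               # column name -> Python type
    log: list = field(default_factory=list)    # one entry per committed version
    files: dict = field(default_factory=dict)  # data file name -> rows

    def commit(self, rows: list, allow_new_columns: bool = False) -> int:
        # Schema enforcement: validate the whole batch before anything is written.
        new_columns = {}
        for row in rows:
            for col, value in row.items():
                expected = self.schema.get(col, new_columns.get(col))
                if expected is not None:
                    if not isinstance(value, expected):
                        raise TypeError(f"{col}={value!r} is not {expected.__name__}")
                elif allow_new_columns:
                    new_columns[col] = type(value)  # schema evolution: additive only
                else:
                    raise ValueError(f"unexpected column {col!r}")

        version = len(self.log)
        name = f"part-{version:05d}.parquet"
        self.files[name] = [dict(r) for r in rows]  # unreferenced file: invisible to readers
        new_schema = {**self.schema, **new_columns}
        # The commit point: one log append publishes the file and schema together.
        self.log.append({"version": version, "add": [name], "schema": new_schema})
        self.schema = new_schema
        return version

    def read(self, version: Optional[int] = None) -> list:
        """Time travel: replay the log up to `version` (default: latest)."""
        upto = len(self.log) if version is None else version + 1
        names = [n for entry in self.log[:upto] for n in entry["add"]]
        return [row for n in names for row in self.files[n]]


table = ToyTable(schema={"id": int, "value": float})
v0 = table.commit([{"id": 1, "value": 1.0}])
v1 = table.commit([{"id": 2, "value": 2.5, "site": "lab-a"}], allow_new_columns=True)

try:
    table.commit([{"id": "3", "value": 3.0}])  # wrong type for "id": rejected
except TypeError as err:
    print("rejected:", err)

print(len(table.read()))       # 2: the rejected batch never became visible
print(table.read(version=v0))  # [{'id': 1, 'value': 1.0}]
print(table.schema)            # now includes "site", added by schema evolution
```

Real table formats add much more (optimistic concurrency between writers, deletes and updates, file statistics, log checkpoints), but the core idea is the same: readers trust the log, not the directory listing.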

Comparing Open Table Formats

- Delta Lake: Originally developed by Databricks, Delta Lake is built on top of Parquet files and uses an ordered transaction log (the _delta_log directory) to provide ACID transactions and time travel. It is deeply integrated with Apache Spark and is known for its simplicity and robust performance for both streaming and batch workloads.

- Apache Iceberg: Created at Netflix and now an Apache project, Iceberg uses a tree-like metadata structure of manifest files to track the individual data files that form a table. This approach avoids performance bottlenecks associated with listing files in large directories, making it highly scalable for petabyte-sized tables. It offers broad engine compatibility and robust schema evolution capabilities.

- Apache Hudi: Originating at Uber, Hudi (Hadoop Upserts Deletes and Incrementals) excels at managing record-level changes, particularly for streaming use cases that require fast upserts and deletes. It offers two table types, Copy-on-Write for read-optimized workloads and Merge-on-Read for write-optimized workloads, giving teams flexibility to match different performance needs.
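
The Copy-on-Write versus Merge-on-Read trade-off can be illustrated with a toy upsert keyed on an id column. This is a sketch under stated assumptions: base files and delta logs are plain Python lists, deletes are ignored, and none of the names correspond to Hudi's actual APIs or file layout.

```python
def upsert_copy_on_write(base: list, updates: list) -> list:
    """Copy-on-Write: merge updates into a fresh copy of the base at write time."""
    merged = {row["id"]: row for row in base}
    for row in updates:
        merged[row["id"]] = row
    return list(merged.values())  # the new base file; readers simply scan it


class MergeOnReadTable:
    """Merge-on-Read: append changes cheaply now, merge them when the table is read."""

    def __init__(self, base: list) -> None:
        self.base = list(base)
        self.delta_log: list = []

    def upsert(self, updates: list) -> None:
        self.delta_log.extend(updates)  # cheap write: the base file is untouched

    def read(self) -> list:
        merged = {row["id"]: row for row in self.base}
        for row in self.delta_log:  # each read pays the merge cost
            merged[row["id"]] = row
        return list(merged.values())

    def compact(self) -> None:
        """Fold accumulated changes into a new base so later reads are cheap again."""
        self.base = self.read()
        self.delta_log.clear()


base = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}]
updates = [{"id": 2, "v": "B"}, {"id": 3, "v": "c"}]

cow_base = upsert_copy_on_write(base, updates)  # 3 rows rewritten at write time
mor = MergeOnReadTable(base)
mor.upsert(updates)                             # 2 rows appended to the delta log

print(cow_base == mor.read())  # True: both table types expose the same data
```

Copy-on-Write pays at write time by rewriting the whole base, so reads stay simple; Merge-on-Read only appends the changed rows but pushes the merge onto readers until compaction runs.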

The Processing and Analytics Layer

The top layer consists of the engines and tools used to process and analyze data. A key benefit of the lakehouse is that it provides direct, high-performance access to the underlying data for a wide variety of workloads.

- High-performance query engines: Engines like Databricks SQL, Presto/Trino, Dremio, and Apache Spark execute SQL queries directly against lakehouse tables, and for many workloads their performance approaches that of traditional data warehouses.

- Universal SQL support: Offers a familiar SQL interface, allowing business analysts to connect their preferred BI tools (like Tableau, Power BI, or Looker) for reporting and visualization.

- APIs for ML and Data Science: Because data is stored in open formats, data scientists can load it into frameworks like TensorFlow, PyTorch, and scikit-learn for model training without creating separate data copies. Libraries like Petastorm can further optimize this integration.

- Support for streaming data: Natively supports real-time data ingestion and analytics from sources like Apache Kafka, enabling live dashboards and immediate operational insights.
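
A common pattern behind reliable streaming ingestion is to record the source offset alongside each micro-batch, so that a batch replayed after a failure is not written twice. The sketch below is a toy, hypothetical illustration of that idea, with a list standing in for a Kafka partition; it is not the Kafka client API or any engine's checkpoint format.

```python
from dataclasses import dataclass, field


@dataclass
class MicroBatchSink:
    """Toy streaming sink that tracks the last source offset it has committed.

    In a real lakehouse the new rows and the new offset would be recorded in a
    single atomic table commit; here both are updated in one method call.
    """
    rows: list = field(default_factory=list)
    committed_offset: int = -1  # highest source offset already in the table

    def process_batch(self, events: list, start_offset: int) -> int:
        """Append events[i] (source offset start_offset + i) unless already committed."""
        if not events:
            return 0
        if start_offset > self.committed_offset + 1:
            raise ValueError("gap in offsets: events would be lost")
        end_offset = start_offset + len(events) - 1
        if end_offset <= self.committed_offset:
            return 0  # the whole batch is a replay: nothing to do
        skip = max(0, self.committed_offset - start_offset + 1)
        new_rows = events[skip:]
        self.rows.extend(new_rows)
        self.committed_offset = end_offset
        return len(new_rows)


# Stand-in for a Kafka topic partition: event i sits at offset i.
source = [{"txn": i} for i in range(6)]
sink = MicroBatchSink()
print(sink.process_batch(source[0:3], start_offset=0))  # 3: first micro-batch
print(sink.process_batch(source[0:3], start_offset=0))  # 0: replay after a crash
print(sink.process_batch(source[2:6], start_offset=2))  # 3: overlap at offset 2 skipped
print(len(sink.rows))                                   # 6: no duplicates
```

Because the offset only advances together with the data, a restarted job can safely re-read from its last checkpoint, which is what keeps live dashboards from double-counting events.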

Powering Modern Analytics: From Business Intelligence to AI

A key strength of the data lakehouse is its ability to break down silos between different analytical workloads. It serves as a single platform for everything from standard reporting to advanced AI model training.

How a Data Lakehouse Supports Diverse Workloads

A data lakehouse provides a unified foundation for all data teams:

- Business Intelligence: Analysts can use familiar SQL queries and BI tools on reliable, up-to-date data, leading to faster and more accurate reports.

- Data Science and Advanced Analytics: Data scientists gain direct access to all data types (structured, semi-structured, unstructured) in one place, enabling rapid exploration and iteration with tools like Python and R.

- Machine Learning Model Training: ML engineers can efficiently access and version datasets directly from the lakehouse, improving model reproducibility.

- Real-time Analytics: The architecture supports streaming data, enabling live dashboards, fraud detection, and other immediate operational insights.

This unified approach is especially impactful in complex fields like life sciences, where researchers can analyze clinical, genomic, and imaging data together. Learn more in our article on the Application of Data Lakehouses in Life Sciences.
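
As a small illustration of one copy of data serving two of the workloads above, the sketch below uses an in-memory SQLite database from Python's standard library as a stand-in for a lakehouse table. The table, columns, and values are hypothetical; the point is only that a BI-style aggregate and an ML-style feature extraction read the same rows, with no export step in between.

```python
import sqlite3

# One table serves both workloads (in-memory SQLite standing in for a lakehouse table).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (patient_id INTEGER, site TEXT, age INTEGER, readmitted INTEGER)")
conn.executemany(
    "INSERT INTO visits VALUES (?, ?, ?, ?)",
    [(1, "north", 54, 0), (2, "north", 61, 1), (3, "south", 47, 0), (4, "south", 70, 1)],
)

# BI workload: the kind of aggregate a dashboard might run.
report = conn.execute(
    "SELECT site, COUNT(*), AVG(readmitted) FROM visits GROUP BY site ORDER BY site"
).fetchall()

# ML workload: the same rows pulled as features and labels, with no second copy.
features, labels = [], []
for age, readmitted in conn.execute("SELECT age, readmitted FROM visits ORDER BY patient_id"):
    features.append([age])
    labels.append(readmitted)

print(report)    # [('north', 2, 0.5), ('south', 2, 0.5)]
print(features)  # [[54], [61], [47], [70]]
```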

Ensuring Data Integrity and Security

The data lakehouse architecture includes built-in features to prevent “data swamps” and ensure robust governance and security across all data assets:

- Unified governance: A single platform allows for a single, consistent set of policies for access control, data quality, and compliance. Centralized catalogs, like Databricks Unity Catalog, can manage permissions and metadata across all assets, simplifying administration.

- Data quality checks: Schema enforcement prevents bad data from corrupting tables. Furthermore, some table formats, such as Delta Lake, support declarative data quality constraints (e.g., CHECK constraints) that automatically validate data as it is written, ensuring it meets business rules.

- Auditing capabilities: The transactional layer tracks every change made to the data. This provides a detailed audit trail, accessible via the “time travel” feature within the table's configured retention period, which is invaluable for compliance reporting (like GDPR and CCPA) and for troubleshooting data issues.

- Fine-grained access controls: Administrators can define specific user permissions at the row, column, table, or file level. This ensures that users can only see the data they are authorized to access, protecting sensitive information and preventing data leakage.

- Data lineage: Lineage tools can automatically track data from its source through every transformation to its final use in a report or model. This provides critical transparency, helping teams understand data dependencies, perform impact analysis, and conduct root cause analysis when an issue is found.
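
Row- and column-level controls can be pictured as a filter plus a mask applied at read time. The sketch below is a minimal, hypothetical policy model; in practice these rules live in a catalog or query engine (such as Unity Catalog, mentioned above), not in application code, and the role names, columns, and masking token are assumptions.

```python
# Hypothetical role policies: a row filter plus a set of masked columns per role.
POLICIES = {
    "eu_analyst": {"row_filter": lambda r: r["region"] == "EU", "masked": {"national_id"}},
    "researcher": {"row_filter": lambda r: True, "masked": {"national_id", "postcode"}},
    "admin": {"row_filter": lambda r: True, "masked": set()},
}


def secure_read(rows: list, role: str) -> list:
    """Apply row-level filtering, then column-level masking, for the caller's role."""
    policy = POLICIES.get(role)
    if policy is None:
        raise PermissionError(f"role {role!r} has no access to this table")
    return [
        {col: ("***" if col in policy["masked"] else val) for col, val in row.items()}
        for row in rows
        if policy["row_filter"](row)
    ]


patients = [
    {"id": 1, "region": "EU", "national_id": "AB123", "postcode": "CB10"},
    {"id": 2, "region": "US", "national_id": "CD456", "postcode": "02139"},
]
print(secure_read(patients, "eu_analyst"))
# [{'id': 1, 'region': 'EU', 'national_id': '***', 'postcode': 'CB10'}]
```

Applying policy at read time means one physical copy of the table can serve every role, rather than maintaining redacted duplicates per audience.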

Challenges and Considerations

While powerful, implementing a data lakehouse requires careful planning and expertise:

- Implementation complexity: Architecting a comprehensive platform involves making key decisions about cloud providers, storage formats, table formats (Delta vs. Iceberg vs. Hudi), and query engines. Integrating these components into a seamless, performant system requires significant technical expertise.

- Platform maturity: The lakehouse ecosystem is dynamic and still evolving. While the core technologies are robust, features, performance, and stability can vary between different tools and vendor solutions. Organizations must stay informed about the latest developments and potential compatibility issues.

- Skilled data engineers are essential: Success depends on a team with deep knowledge of cloud infrastructure, distributed processing frameworks (especially Apache Spark), data modeling for the lakehouse, and performance tuning for both storage and compute.

- Cost management for compute resources: While storage is cheap, compute costs can escalate if not managed carefully. Effective cost control requires implementing strategies like auto-scaling compute clusters, using spot instances for non-critical workloads, optimizing queries, and setting budgets and alerts.

- Cultural and organizational shift: A lakehouse is not just a technical change; it’s a cultural one. It requires breaking down the traditional silos between BI, data science, and engineering teams. Organizations must invest in training and foster a collaborative environment to fully leverage the unified platform.
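
The effect of auto-scaling and spot capacity on compute spend is simple arithmetic. The sketch below uses hypothetical prices and a made-up daily demand profile; real cloud pricing, spot availability, and scaling behavior vary by provider and workload.

```python
# All figures are hypothetical, chosen only to show the arithmetic.
NODE_HOURLY_RATE = 2.00  # assumed on-demand price per node-hour
SPOT_DISCOUNT = 0.60     # assumed: spot capacity costs 60% less

# Nodes actually needed in each hour of one day: 8 quiet, 10 busy, 6 moderate.
demand = [2] * 8 + [10] * 10 + [4] * 6


def fixed_cluster_cost(demand: list, size: int) -> float:
    """An always-on cluster sized for peak load is billed for every hour."""
    return size * len(demand) * NODE_HOURLY_RATE


def autoscaled_cost(demand: list, min_nodes: int = 1, spot_fraction: float = 0.0) -> float:
    """An autoscaling cluster tracks demand; part of it may run on spot capacity."""
    total = 0.0
    for needed in demand:
        nodes = max(min_nodes, needed)
        spot_nodes = nodes * spot_fraction
        on_demand_nodes = nodes - spot_nodes
        total += (on_demand_nodes + spot_nodes * (1 - SPOT_DISCOUNT)) * NODE_HOURLY_RATE
    return total


print(fixed_cluster_cost(demand, size=max(demand)))          # 480.0
print(round(autoscaled_cost(demand), 2))                     # 280.0
print(round(autoscaled_cost(demand, spot_fraction=0.5), 2))  # 196.0
```

Under these assumed numbers, following demand instead of sizing for peak cuts daily compute cost by more than 40%, and shifting half the capacity to spot lowers it further. Spot capacity can be reclaimed by the provider, which is why it suits non-critical workloads.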

Frequently Asked Questions about Data Lakehouses

Here are answers to some of the most common questions about the data lakehouse architecture.

Is a data lakehouse just a marketing term?

No. The data lakehouse is a genuine architectural shift enabled by new technologies. It’s not just a rebranded data lake. The key difference is the addition of a transactional metadata layer (using open table formats like Delta Lake or Apache Iceberg) on top of low-cost object storage. This brings warehouse-like features such as ACID transactions, schema enforcement, and data versioning directly to the data lake. This solves concrete, long-standing problems like data duplication, staleness, and complexity that plague traditional two-tier systems, making it a distinct and valuable architectural pattern.

Do I need to replace my existing data warehouse or data lake?

Not necessarily. A “rip and replace” approach is rarely the best first step. Many organizations begin by implementing a metadata layer over an existing data lake to gain immediate benefits—like transactional reliability and better performance—without a disruptive migration. The decision to replace systems depends on your specific pain points. If you struggle with high ETL costs, data duplication, or siloed analytics, a gradual migration to a data lakehouse is a logical strategy. You can move workloads incrementally, starting with those that will benefit most, and prove value at each stage.
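
Adding a metadata layer over an existing lake can be cheap because the data files stay where they are and only metadata is written. The sketch below is a hypothetical, simplified illustration (the _toy_log directory and entry format are invented for this example); Delta Lake's CONVERT TO DELTA command is a real-world example of this kind of in-place conversion for Parquet directories.

```python
import json
import os
import tempfile


def register_existing_files(table_dir: str) -> dict:
    """Toy in-place conversion: write an initial log entry that points at the
    data files already present. No data file is copied or rewritten."""
    data_files = sorted(f for f in os.listdir(table_dir) if f.endswith(".parquet"))
    entry = {"version": 0, "operation": "CONVERT", "add": data_files}
    log_dir = os.path.join(table_dir, "_toy_log")  # hypothetical log location
    os.makedirs(log_dir, exist_ok=True)
    with open(os.path.join(log_dir, "00000.json"), "w", encoding="utf-8") as fh:
        json.dump(entry, fh)
    return entry


# Usage: a directory that already holds Parquet files from an existing data lake.
with tempfile.TemporaryDirectory() as lake_dir:
    for name in ("part-0.parquet", "part-1.parquet"):
        with open(os.path.join(lake_dir, name), "wb") as fh:
            fh.write(b"existing bytes")  # placeholder content, not real Parquet
    entry = register_existing_files(lake_dir)
    print(entry["add"])  # ['part-0.parquet', 'part-1.parquet']
```

Because the conversion touches only metadata, it can be trialled on one dataset at a time, which fits the incremental, prove-value-at-each-stage approach described above.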

How does a data lakehouse compare to modern cloud data warehouses like Snowflake or Google BigQuery?

This is a crucial question, as the lines between these architectures are blurring. Modern cloud data warehouses (CDWs) like Snowflake and BigQuery have evolved beyond their traditional roots and are adding features to interact with data in open formats stored in external data lakes (e.g., Snowflake’s support for Iceberg Tables, Google’s BigLake).

However, key differences remain:

- Core Architecture: A data lakehouse is built from the ground up on open-source standards, with open data formats (Parquet), open table formats (Delta Lake, Iceberg), and often open-source engines (Spark, Trino). A CDW typically uses a proprietary, highly optimized internal format for its primary storage, which can deliver excellent SQL performance but may lead to vendor lock-in.

- Data Access: In a lakehouse, data science and machine learning workloads can access the data directly on object storage using a multitude of tools and libraries without needing to move or copy it. In a CDW, non-SQL workloads may require exporting data or using specific, sometimes less performant, connectors.

- Cost and Flexibility: The lakehouse’s decoupled storage and compute model, based on commodity object storage, can offer greater cost control and flexibility. CDWs also separate storage from compute internally, but they package both into a vendor-managed service, which can be simpler to operate but potentially more expensive and less flexible.

The market is converging. CDWs are becoming more like lakehouses, and lakehouses are improving their SQL performance to match CDWs. The choice often comes down to prioritizing openness and direct ML/AI support (lakehouse) versus a highly managed, SQL-centric experience (CDW).

What skills are needed for a data lakehouse?

A successful data lakehouse team requires a blend of skills, most of which are likely already present in your organization:

- Data Engineering: Expertise in data pipelines, streaming ingestion, distributed processing (e.g., Apache Spark), and a strong understanding of open data/table formats.

- Data Analytics: Strong SQL skills for BI and reporting. Analysts can leverage their existing knowledge on the new platform with minimal retraining.

- Data Science and Machine Learning: Proficiency in Python, R, and ML frameworks to leverage direct, efficient access to diverse datasets.

- Cloud Computing: Deep knowledge of your cloud provider’s storage, compute, networking, and security services is essential for building and managing the platform.

- Data Governance and Security: Skills to implement access controls, ensure data quality, manage compliance, and use modern data cataloging tools.

Conclusion: Building Your Future on a Unified Data Platform

The data lakehouse marks a significant evolution in data management, offering a practical solution to the long-standing problems of data silos and complexity. It eliminates the need to choose between the flexibility of a data lake and the reliability of a data warehouse.

By combining low-cost storage with ACID transactions and strong governance, the lakehouse allows business analysts and data scientists to work from a single source of truth. This unified approach delivers tangible business value through improved data freshness, reduced complexity, and greater cost efficiency.

The biggest advantage is future-proofing your data strategy. A data lakehouse is a scalable and flexible architecture that adapts to new data types, user demands, and changing regulations. This is especially critical in specialized fields like life sciences, where a federated data lakehouse can securely manage sensitive genomic and clinical data while upholding strict compliance.

At Lifebit, we have seen how this approach transforms research. Our Trusted Data Lakehouse helps pharmaceutical companies and research organizations unlock insights from their most valuable data while ensuring complete security. To see how a unified data strategy could benefit your organization, learn more about Lifebit’s Trusted Data Lakehouse solution.

The future of data is unified, flexible, and secure—and the data lakehouse makes that future available today.