The Art of Disguise: Anonymizing Patient Data Explained

Anonymized Patient Data: Master the 5 Safes

The Power of Anonymized Patient Data

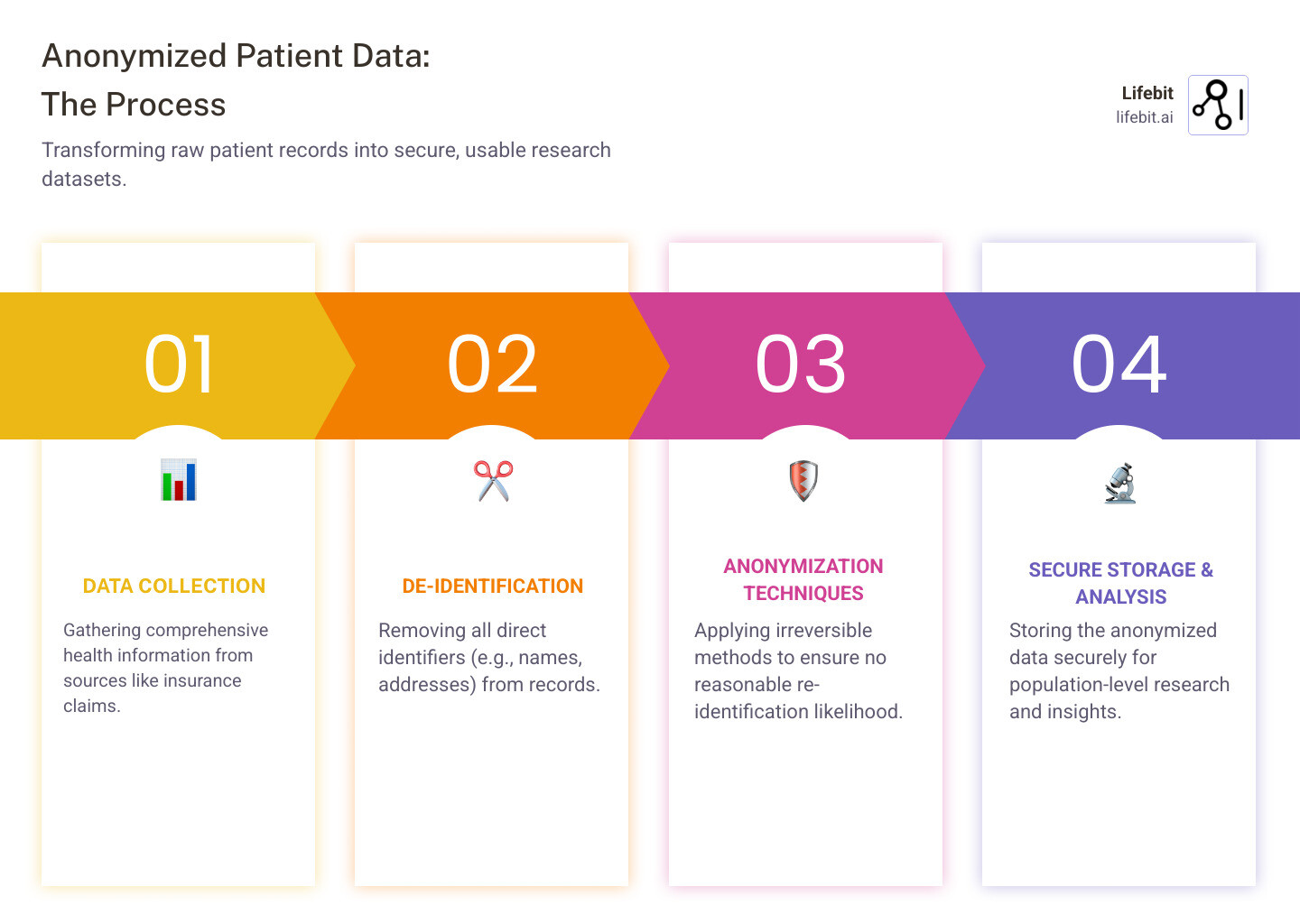

In healthcare and research, anonymized patient data is a game-changer, unlocking critical insights while keeping sensitive information private. It is healthcare information stripped of all personal identifiers, such as names or addresses, through a process designed to be irreversible. This makes it practically impossible to re-identify an individual, distinguishing it from de-identified data that can sometimes be linked back.

This type of data is essential for medical research, drug discovery, and public health studies, allowing experts to understand diseases and treatments without compromising patient privacy.

The healthcare world holds a goldmine of information in its patient records, but using it requires absolute trust. Anonymization is the key to using this data for progress while ensuring no one can trace it back to an individual.

As CEO and Co-founder of Lifebit, I’ve seen how anonymized patient data transforms global healthcare, powering data-driven drug discovery and enabling precision medicine. My background in computational biology and AI, combined with over 15 years in health-tech, focuses on empowering secure, compliant data environments.

What is Anonymized Patient-Level Data (APLD) and Why is it Crucial?

Anonymized patient data, or Anonymized Patient-Level Data (APLD), is health information where all personal identifiers have been permanently removed. This includes details from a patient’s medical history, therapies, and doctor visits. The goal is not to study individuals, but to analyze patterns across large patient populations, painting a clearer picture of the patient journey at scale.

The anonymization process is designed to be irreversible, making APLD crucial for accelerating drug discovery, improving clinical trials, and enhancing public health surveillance without compromising privacy. The secondary use of health data is central to modern biomedical research, and APLD facilitates this critical work.

The Value for Healthcare and Research

The value of anonymized patient data is immense. It allows us to:

- Advance Scientific Research: APLD helps researchers understand disease treatment aspects not always clear from clinical trials, such as long-term outcomes and rare adverse effects.

- Improve Drug Development: Pharmaceutical companies can identify unmet patient needs, compare treatment effectiveness, and monitor drug safety, reducing the time and cost of bringing new therapies to market.

- Improve Personalized Medicine: APLD helps identify care pathway blockages, tailor interventions, and manage public health at scale through disease registers.

- Power AI Model Training: Vast amounts of anonymized patient data are essential for training AI and ML models that can predict disease progression and optimize treatment plans.

- Drive Data-Driven Insights: The global healthcare analytics market is growing rapidly. Our platform’s components, including the Trusted Research Environment (TRE) and R.E.A.L. (Real-time Evidence & Analytics Layer), deliver real-time insights and secure collaboration.

- Facilitate Real-World Evidence (RWE): APLD is the cornerstone of RWE, which provides insights into how treatments perform in routine clinical practice. You can learn more about the scientific research on data sharing benefits here.

Who Uses Anonymized Data?

Anonymized patient data is a shared resource that benefits a wide range of stakeholders.

- Academic Researchers: Universities use APLD for epidemiological studies and to evaluate novel treatments.

- Pharmaceutical and Biotech Companies: These organizations use APLD to understand unmet patient needs, compare treatment effectiveness, and accelerate drug development.

- Government Health Agencies: Public health bodies use APLD for surveillance, to measure program impact, and to inform policy decisions.

- AI Developers and Med-tech Innovators: Companies rely on large, diverse datasets to train and validate their healthcare algorithms.

- Healthcare Providers: Hospitals and clinics use aggregated APLD to understand patient populations and improve the overall patient experience.

The Spectrum of De-Identification: Anonymization vs. Pseudonymization

Protecting patient privacy involves a spectrum of options that balance data utility against privacy. The goal is to find the sweet spot where data remains useful for research while keeping patients protected. This has led to two main approaches, anonymization and pseudonymization, though inconsistent definitions of both can cause confusion.

Under regulations like HIPAA and GDPR, these techniques are treated differently. HIPAA focuses on de-identification methods, while GDPR makes clear distinctions based on whether data is considered personal. Understanding this data protection spectrum is crucial for anyone working with health data.

True Anonymization: The Gold Standard

True anonymization is an irreversible transformation that permanently severs any link between the data and an individual, leaving no reasonable likelihood of re-identification. Because of this, truly anonymized data falls outside the scope of GDPR, meaning it is no longer considered personal data under European law. This allows for greater freedom to share data for research. However, achieving this level of privacy protection can come at the cost of data utility, as removing or altering information makes the dataset less detailed.

Pseudonymization and Tokenization: A Reversible Approach

Pseudonymization replaces identifiers with pseudonyms or secret codes, while the original information is kept separately with a key. Because this is a reversible process, pseudonymized data is still considered personal data under GDPR. It provides improved security for data processing while allowing researchers to link records over time, which is invaluable for longitudinal studies that track patient outcomes.

Tokenization works similarly, replacing sensitive data with unique, non-sensitive tokens. Here’s how these approaches compare:

| Feature | Anonymization | Pseudonymization | Tokenization |
|---|---|---|---|
| Reversibility | Irreversible | Reversible with a key | Reversible with a key/mapping table |
| Risk Level | Very low (if properly done) | Reduced, but still personal data risk | Reduced, but still personal data risk |
| Data Utility | Can be reduced due to information loss | High, as original data can be restored | High, as original data can be restored |
| GDPR Status | Falls outside GDPR scope (if truly irreversible) | Personal data, subject to GDPR rules | Personal data, subject to GDPR rules |
| HIPAA Status | Considered “de-identified” (Expert or Safe Harbor) | Not explicitly defined as “de-identified” | Not explicitly defined as “de-identified” |
| Purpose | Maximize privacy, enable broad sharing | Enable processing with reduced risk, link data | Protect sensitive data in transactions |

The choice depends on your needs. For maximum privacy and broad sharing, true anonymization is ideal. For longitudinal studies or combining datasets, pseudonymization is often the better choice.
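To make the difference between the two reversible approaches concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any particular product: the `pseudonymize` function and the `TokenVault` class are hypothetical names, the keyed hash (HMAC-SHA256) is one common way to derive stable pseudonyms, and a real deployment would keep the key and the mapping table in separately secured storage.

```python
import hashlib
import hmac
import secrets


def pseudonymize(patient_id, key):
    """Derive a stable pseudonym from an identifier with a keyed hash.

    The same (identifier, key) pair always yields the same pseudonym, so
    records can be linked over time. Whoever holds the key can recompute
    pseudonyms for known identifiers, which is why the result is still
    treated as personal data.
    """
    digest = hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


class TokenVault:
    """Hypothetical in-memory mapping table for tokenization."""

    def __init__(self):
        self._value_to_token = {}
        self._token_to_value = {}

    def tokenize(self, value):
        # Tokens are random, so they reveal nothing without the table.
        if value not in self._value_to_token:
            token = secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def detokenize(self, token):
        # Reversal is only possible for whoever controls the mapping table.
        return self._token_to_value[token]


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    print(pseudonymize("MRN-001", key))
    vault = TokenVault()
    print(vault.detokenize(vault.tokenize("MRN-001")))
```

In both cases a secret (the key or the mapping table) stands between the dataset and the original identifiers, which is exactly what separates these approaches from true anonymization.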

Core Techniques for Creating Anonymized Patient Data

Creating anonymized patient data requires balancing privacy protection with data utility. The process begins by identifying direct identifiers (like names) and quasi-identifiers (QIs), such as date of birth or ZIP code, which can be combined to re-identify someone. The challenge is significant, as aggressive anonymization can strip away valuable details. Studies show this can result in information loss ranging from 13% to 87%, highlighting the constant tension between privacy and research value.

Foundational Methods: Suppression and Generalization

Two core techniques form the foundation of data anonymization:

Suppression is the most straightforward method, involving the complete removal of data. Cell suppression removes individual data points, while record suppression removes an entire patient record if it is too unique. While effective, excessive suppression can create gaps and introduce bias.

Generalization reduces the precision of data. For example, an exact birthdate like “October 23, 1985” is replaced with an age range (e.g., “30-39 years”), and a specific ZIP code is broadened to a larger region. The goal is to reduce granularity just enough to protect privacy while keeping the data meaningful. Data masking, a related technique, replaces sensitive data with realistic but fake values to maintain data format.
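The two foundational methods can be sketched in a few lines of Python. This is a simplified illustration: the field names (`name`, `birth_date`, `zip`, `diagnosis`), the ten-year band width, and the three-digit ZIP prefix are assumptions chosen for the example, not recommended settings.

```python
from datetime import date


def age_band(birth_date, on, width=10):
    """Generalize an exact birth date to an age band such as '30-39'."""
    had_birthday = (on.month, on.day) >= (birth_date.month, birth_date.day)
    age = on.year - birth_date.year - (0 if had_birthday else 1)
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_zip(zip_code, keep=3):
    """Keep only the leading digits of a ZIP code and mask the rest."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)


def anonymize_record(record, on):
    """Suppress the direct identifier and generalize the quasi-identifiers."""
    return {
        # "name" is suppressed simply by not copying it into the output.
        "age_band": age_band(record["birth_date"], on),
        "region": generalize_zip(record["zip"]),
        "diagnosis": record["diagnosis"],
    }


if __name__ == "__main__":
    record = {
        "name": "Jane Doe",  # made-up example patient
        "birth_date": date(1985, 10, 23),
        "zip": "02139",
        "diagnosis": "asthma",
    }
    print(anonymize_record(record, on=date(2020, 1, 1)))
    # {'age_band': '30-39', 'region': '021**', 'diagnosis': 'asthma'}
```

Widening the band or shortening the ZIP prefix increases privacy and decreases utility, which is the trade-off described above.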

Advanced Privacy-Preserving Models

To counter sophisticated threats, modern anonymization employs advanced mathematical models:

- K-anonymity: This model ensures each individual in a dataset is indistinguishable from at least k-1 others based on their QIs. If a dataset is 5-anonymous, any person shares their combination of QIs with at least four others, creating a “crowd” to hide in. The EMA and Health Canada recommend a maximum re-identification risk threshold of 0.09, which corresponds to a minimum group size of 11 (a k-anonymity of 11).

- L-diversity: This model addresses a weakness in k-anonymity. It requires that each group of records with identical QIs also contains at least l diverse values for each sensitive attribute (e.g., diagnosis), preventing inference if all individuals in a group share the same sensitive information.

- T-closeness: A refinement of l-diversity, this model requires that the distribution of a sensitive attribute within each group is close to its distribution in the overall dataset. This limits what an attacker can infer from a group whose sensitive values are skewed relative to the wider population.

- Differential privacy: Considered a gold standard, this approach provides a formal privacy guarantee by adding calibrated statistical noise to query results. The amount of noise is controlled by a privacy budget (epsilon, ε)—a smaller epsilon means more noise and stronger privacy. The key guarantee is that the outcome of any analysis is nearly identical whether or not any single individual’s data is included, making it almost impossible to learn anything specific about an individual.
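As a rough illustration of the first two models in the list above, the following Python sketch measures the k-anonymity and l-diversity of a small table. The function names and the column names (`age_band`, `region`, `diagnosis`) are assumptions for the example, and l-diversity is measured here in its simplest "distinct values" form.

```python
from collections import defaultdict


def equivalence_classes(records, quasi_identifiers):
    """Group records that share the same combination of quasi-identifiers."""
    groups = defaultdict(list)
    for record in records:
        key = tuple(record[q] for q in quasi_identifiers)
        groups[key].append(record)
    return groups


def k_anonymity(records, quasi_identifiers):
    """Return k: the size of the smallest equivalence class."""
    groups = equivalence_classes(records, quasi_identifiers)
    return min(len(group) for group in groups.values())


def l_diversity(records, quasi_identifiers, sensitive):
    """Return l: the fewest distinct sensitive values found in any class."""
    groups = equivalence_classes(records, quasi_identifiers)
    return min(len({r[sensitive] for r in group}) for group in groups.values())


if __name__ == "__main__":
    rows = [
        {"age_band": "30-39", "region": "021**", "diagnosis": "asthma"},
        {"age_band": "30-39", "region": "021**", "diagnosis": "diabetes"},
        {"age_band": "40-49", "region": "021**", "diagnosis": "asthma"},
        {"age_band": "40-49", "region": "021**", "diagnosis": "asthma"},
    ]
    qis = ["age_band", "region"]
    print(k_anonymity(rows, qis))               # 2
    print(l_diversity(rows, qis, "diagnosis"))  # 1
```

The toy table is 2-anonymous, yet everyone in the 40-49 group has the same diagnosis, so l is only 1. That is the weakness in k-anonymity that l-diversity is meant to catch.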

Recent advances have made these techniques highly efficient for large-scale research. At Lifebit, we combine these methods to create anonymized patient data that is both highly secure and maximally valuable for medical research.
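Differential privacy, described in the list above, is easiest to grasp with a counting query. The sketch below uses the Laplace mechanism, a standard textbook way to achieve it; the function names are hypothetical, and the example ignores practical concerns such as tracking the total privacy budget across many queries.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon, rng):
    """Answer 'how many records match?' with epsilon-differential privacy."""
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    patients = [{"dx": "asthma"}, {"dx": "asthma"}, {"dx": "diabetes"}]
    has_asthma = lambda r: r["dx"] == "asthma"
    # Smaller epsilon means a larger noise scale and stronger privacy.
    print(dp_count(patients, has_asthma, epsilon=1.0, rng=rng))
    print(dp_count(patients, has_asthma, epsilon=0.1, rng=rng))
```

Because the noise scale is `sensitivity / epsilon`, halving epsilon doubles the expected noise, which is the privacy-versus-accuracy dial the privacy budget controls.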

The Re-Identification Challenge and How to Manage It

Even with strong anonymization, a residual risk of re-identification always remains. Understanding these threats is the first step to mitigating them.

- Linkage attacks match anonymized health records with other public data (e.g., voter registries) using shared quasi-identifiers like gender, birth year, and ZIP code. The classic example is Latanya Sweeney’s re-identification of the Massachusetts governor in the 1990s by linking an “anonymized” health dataset with public voter lists using birth date, gender, and ZIP code.

- The mosaic effect involves piecing together small bits of information from different sources to build a revealing profile.

- Inference attacks use statistical analysis to deduce sensitive information. For example, knowing a person fits a profile where 99% of individuals have a certain disease allows an attacker to infer their health status.

- Data breaches can expose anonymized datasets or the keys to pseudonymized data.
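A linkage attack of the kind described above needs surprisingly little code. The sketch below joins a health dataset to a public list on shared quasi-identifiers and reports only unambiguous one-to-one matches. All names, rows, and values are made up for illustration, and `link_records` is a hypothetical helper.

```python
def link_records(health_rows, public_rows, quasi_identifiers):
    """Return (name, health_row) pairs whose quasi-identifier combination
    is unique in both datasets, i.e. an unambiguous re-identification."""

    def index(rows):
        idx = {}
        for row in rows:
            key = tuple(row[q] for q in quasi_identifiers)
            idx.setdefault(key, []).append(row)
        return idx

    health_idx = index(health_rows)
    public_idx = index(public_rows)
    matches = []
    for key, h_rows in health_idx.items():
        p_rows = public_idx.get(key, [])
        if len(h_rows) == 1 and len(p_rows) == 1:
            matches.append((p_rows[0]["name"], h_rows[0]))
    return matches


if __name__ == "__main__":
    health = [
        {"birth_date": "1950-01-01", "sex": "M", "zip": "02138", "diagnosis": "hypertension"},
        {"birth_date": "1982-06-15", "sex": "F", "zip": "02139", "diagnosis": "asthma"},
        {"birth_date": "1982-06-15", "sex": "F", "zip": "02139", "diagnosis": "diabetes"},
    ]
    voters = [
        {"name": "Person A", "birth_date": "1950-01-01", "sex": "M", "zip": "02138"},
        {"name": "Person B", "birth_date": "1982-06-15", "sex": "F", "zip": "02139"},
    ]
    for name, row in link_records(health, voters, ["birth_date", "sex", "zip"]):
        print(name, "->", row["diagnosis"])
```

Person A is re-identified because their combination of birth date, sex, and ZIP code is unique. Person B is not, because two health records share the same combination, which is the kind of "crowd" that generalization and k-anonymity aim to create.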

The challenge is amplified with genomic data, which is inherently unique. While a name can be removed, the genetic sequence itself is a powerful identifier that can be traced through public genealogy databases.

Assessing and Managing Risks with Anonymized Patient Data

Since risk cannot be eliminated, it must be managed through a multi-layered approach that keeps the risk of re-identification acceptably low.

- Robust risk assessment models are used to statistically analyze how unique each record is before data is shared.

- Motivated intruder tests involve hiring experts to actively try to break the anonymization. In one such test commissioned for a major pharmaceutical company, attackers failed to achieve any high-confidence matches, which supports the effectiveness of robust anonymization.

- Data Use Agreements (DUAs) are legal contracts that forbid re-identification attempts and mandate specific security controls.

- Access controls like strong authentication and role-based permissions ensure only authorized individuals can access the data.

- Periodic review of anonymization methods is essential to stay ahead of evolving threats.
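A very simple form of the risk assessment mentioned in the list above treats each record's risk as one divided by the number of records sharing its quasi-identifiers. The sketch below is an assumption-laden illustration: `reidentification_risk` is a hypothetical function, and the default threshold of 1/11 (about 0.09) simply mirrors the group size of 11 mentioned earlier. Real assessments also account for the attacker's background knowledge and the sampling fraction.

```python
from collections import Counter


def reidentification_risk(records, quasi_identifiers, threshold=1 / 11):
    """Summarize per-record risk as 1 / (size of the record's QI group)."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    sizes = Counter(keys)
    per_record = [1.0 / sizes[key] for key in keys]
    return {
        "max_risk": max(per_record),
        "avg_risk": sum(per_record) / len(per_record),
        "unique_records": sum(1 for size in sizes.values() if size == 1),
        # True only if every record is at or below the chosen threshold.
        "meets_threshold": max(per_record) <= threshold,
    }


if __name__ == "__main__":
    rows = (
        [{"age_band": "30-39", "region": "021**"}] * 3
        + [{"age_band": "80-89", "region": "021**"}]
    )
    print(reidentification_risk(rows, ["age_band", "region"]))
```

Here the single 80-89 record is unique, so the maximum risk is 1.0 and the dataset would need further generalization or suppression before release.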

The Five Safes Framework

The gold standard for managing data access is the Five Safes framework, a holistic security model for creating a secure environment for data use:

- Safe People: Vetting researchers to ensure they are trustworthy, trained, and have a legitimate reason for access.

- Safe Projects: Ensuring the research is ethical, scientifically sound, and in the public interest, often overseen by an Institutional Review Board (IRB).

- Safe Settings: Using a secure technical environment, like a Trusted Research Environment (TRE), where data can be analyzed but not downloaded, preventing unauthorized linkage.

- Safe Data: Applying the appropriate level of anonymization for the specific data and project, recognizing it’s not a one-size-fits-all approach.

- Safe Outputs: Reviewing all research results before they leave the secure environment to prevent the accidental disclosure of identifying information (e.g., by suppressing small cell counts in tables).

This framework, which you can learn about in this guide to the Five Safes framework, creates multiple layers of protection, making data sharing safe and responsible.
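The Safe Outputs check mentioned above, suppressing small cell counts, can be sketched as follows. The threshold of 5 and the `suppress_small_cells` name are assumptions for the example; each data custodian sets its own disclosure rules.

```python
def suppress_small_cells(counts, threshold=5):
    """Replace any count below `threshold` with a marker such as '<5'."""
    marker = f"<{threshold}"
    return {
        cell: (n if n >= threshold else marker)
        for cell, n in counts.items()
    }


if __name__ == "__main__":
    table = {"asthma": 120, "diabetes": 5, "rare condition": 2}
    print(suppress_small_cells(table))
    # {'asthma': 120, 'diabetes': 5, 'rare condition': '<5'}
```

In practice, output checkers also look for cases where a suppressed value could be recovered from row or column totals, which this sketch does not handle.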

Legal and Ethical Standards for Anonymized Patient Data

Using anonymized patient data is built on a foundation of public trust, maintained through robust legal and ethical frameworks. These rules ensure responsible handling of sensitive health information and provide essential oversight.

Global Regulatory Frameworks: HIPAA and GDPR

Global regulations share the goal of protecting patient privacy, with two influential examples being HIPAA and GDPR.

- HIPAA (Health Insurance Portability and Accountability Act) (USA): HIPAA’s Privacy Rule allows for the use of “de-identified” health information, which is no longer considered sensitive Protected Health Information (PHI). It provides two pathways to de-identification:

  - The Safe Harbor Method is a prescriptive checklist requiring the removal of 18 specific identifiers, including names, specific dates, geographic subdivisions smaller than a state, and biometric identifiers.

  - The Expert Determination Method is a risk-based approach where a qualified statistician certifies that the risk of re-identification is “very small” based on scientific principles. This is often used for complex datasets where Safe Harbor would remove too much valuable information.

- GDPR (General Data Protection Regulation) (Europe): GDPR sets a higher bar, distinguishing between “pseudonymized” data (reversible, still personal data) and truly “anonymous” information. Data is only anonymous under GDPR if the process is irreversible and there is no “reasonable likelihood” of re-identification. This high standard means much of what is called “anonymized” data elsewhere would be considered pseudonymized under GDPR and remain subject to its rules.
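To give a feel for how prescriptive the Safe Harbor method described above is, here is a partial sketch covering three of its rules: dates are reduced to the year, ages over 89 are grouped into a single "90 or older" category, and ZIP codes are cut to their first three digits, or to `000` where that three-digit area has 20,000 or fewer residents. The field names and the `low_population_zip3` set are placeholders; the real list of low-population prefixes comes from census data and is not reproduced here, and the other identifier categories are out of scope for this example.

```python
def safe_harbor_generalize(record, low_population_zip3):
    """Apply three Safe Harbor style generalizations to one record.

    Assumes `admission_date` is an ISO 'YYYY-MM-DD' string, `age` is an
    integer, and `zip` is a five-digit string.
    """
    zip3 = record["zip"][:3]
    return {
        # Keep only the year of any date tied to the individual.
        "admission_year": record["admission_date"][:4],
        # Ages over 89 are aggregated into one category.
        "age": "90+" if record["age"] >= 90 else record["age"],
        # Sparsely populated three-digit ZIP areas are replaced with 000.
        "zip3": "000" if zip3 in low_population_zip3 else zip3,
        "diagnosis": record["diagnosis"],
    }


if __name__ == "__main__":
    placeholder_low_population = {"999"}  # hypothetical, not real census data
    patient = {
        "admission_date": "2021-03-15",
        "age": 92,
        "zip": "99901",
        "diagnosis": "hip fracture",
    }
    print(safe_harbor_generalize(patient, placeholder_low_population))
```

Expert Determination, by contrast, cannot be reduced to a fixed set of rules like this, because it depends on a statistical judgment about the specific dataset and its release context.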

At Lifebit, we adhere to these rigorous global standards to ensure the highest levels of data protection. For more details, you can find guidance on clinical data publication from the EMA here.

Core Ethical Principles

Beyond the law, core ethical principles must guide all work with anonymized patient data:

- Respect for Persons: Valuing individual autonomy through transparency and honoring the original intent of the data donor, even when formal consent is not legally required for anonymized data.

- Beneficence: Maximizing the societal benefits of research (e.g., developing new treatments) while actively minimizing potential harms to individuals, such as privacy breaches or group-based stigma.

- Justice: Demanding fairness in the burdens and benefits of research. This means ensuring datasets are representative of diverse populations to avoid creating or exacerbating health disparities.

- Transparency: Being open about how data is collected, protected, and used to build and maintain public trust.

- Accountability: Maintaining clear governance systems and taking responsibility for data handling practices.

- Public Benefit: Prioritizing research based on its ability to genuinely improve healthcare outcomes and advance scientific knowledge for the benefit of society.

Building patient trust through unwavering adherence to these standards is essential for the life sciences to continue leveraging data for a healthier future.

The Future of Secure Data Collaboration

To accelerate medical breakthroughs, we must overcome challenges like data silos, interoperability, and the computational cost of processing massive datasets. This requires smart, secure data sharing built on clear rules and standards.

Current Challenges in Data Anonymization

Creating effective anonymized patient data still presents challenges. The primary one is balancing data utility with privacy—over-anonymizing can render data useless, while under-anonymizing creates risk. The complexity of modern data, including unique genomic data, medical images, and unstructured clinical notes, makes removing all Protected Health Information (PHI) a significant task. Furthermore, anonymizing petabytes of data at scale requires immense computing power and expertise.

Future Trends: Federated Learning and Synthetic Data

Fortunately, innovation is tackling these challenges head-on.

One of the most powerful trends is Federated Learning. Instead of moving sensitive anonymized patient data, this approach brings algorithms directly to the data’s secure location. Models are trained locally, and only the aggregated insights—not the raw data—are shared. This improves privacy, breaks down data silos, and enables global collaboration. Lifebit’s next-generation federated AI platform provides secure, real-time access to global biomedical data using this method.
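The idea of training locally and sharing only aggregates can be illustrated with a toy version of federated averaging. This is a minimal sketch, not a description of any production platform: the two "sites" are plain Python lists, the model is a one-variable linear regression, and all function names are hypothetical.

```python
def local_update(weights, data, lr):
    """One gradient-descent step on a single site's own data.

    Only the updated weights and the record count leave the site.
    """
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b), n


def federated_average(updates):
    """Combine site models, weighting each by its number of records."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(sites, rounds=300, lr=0.1):
    """Run several rounds of local training followed by aggregation."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data, lr) for data in sites]
        weights = federated_average(updates)
    return weights


if __name__ == "__main__":
    # Two sites whose (x, y) points all lie on the line y = 2x + 1.
    site_a = [(0, 1), (1, 3)]
    site_b = [(2, 5), (3, 7), (4, 9)]
    w, b = train([site_a, site_b])
    print(round(w, 3), round(b, 3))
```

The coordinator never sees an individual `(x, y)` pair, only model weights and counts. Real systems typically add further safeguards, since model updates themselves can leak information.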

Another key development is Synthetic Data Generation. AI models learn the statistical patterns of real patient data and then create entirely new, artificial datasets. These datasets mirror the properties of the original but contain no real patient information, allowing for safe research and development.
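As a deliberately naive illustration of the learn-then-generate idea, the sketch below fits simple per-column statistics and samples new rows from them. It is a toy under strong assumptions: columns are sampled independently, so relationships between them are lost, and the approach carries no formal privacy guarantee. Production generators model the joint distribution and are evaluated for both utility and disclosure risk. The function and column names are hypothetical.

```python
import random
import statistics


def fit_marginals(records, numeric, categorical):
    """Learn a mean and standard deviation per numeric column and the
    observed value frequencies per categorical column."""
    model = {"numeric": {}, "categorical": {}}
    for col in numeric:
        values = [r[col] for r in records]
        model["numeric"][col] = (statistics.mean(values), statistics.pstdev(values))
    for col in categorical:
        # Keeping the raw list preserves each category's relative frequency.
        model["categorical"][col] = [r[col] for r in records]
    return model


def sample_synthetic(model, n, rng):
    """Generate n artificial rows from the fitted per-column statistics."""
    rows = []
    for _ in range(n):
        row = {
            col: round(rng.gauss(mu, sigma))
            for col, (mu, sigma) in model["numeric"].items()
        }
        for col, values in model["categorical"].items():
            row[col] = rng.choice(values)
        rows.append(row)
    return rows


if __name__ == "__main__":
    real = [
        {"age": 34, "sex": "F"},
        {"age": 51, "sex": "M"},
        {"age": 47, "sex": "F"},
        {"age": 62, "sex": "M"},
    ]
    model = fit_marginals(real, numeric=["age"], categorical=["sex"])
    for row in sample_synthetic(model, 3, random.Random(0)):
        print(row)
```

Even this toy shows the core trade-off: the closer the generator tracks the real data, the more useful the output becomes and the more carefully its disclosure risk has to be checked.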

Other advancements include AI-driven Privacy-Enhancing Technologies that more effectively identify and transform sensitive information, and advanced cryptography like homomorphic encryption. The ability to analyze anonymized patient data in real-time is also becoming critical for applications like spotting new drug side effects or public health trends.

At Lifebit, we are at the forefront of these advancements. Our platform creates a secure and compliant environment for research and pharmacovigilance across biopharma and public health. You can learn more about how our Federated Trusted Research Environment (TRE) is making this future a reality right here.

Frequently Asked Questions about Anonymizing Patient Data

Is “anonymized data” the same as “de-identified data”?

While often used interchangeably, there’s a key distinction. De-identification is the process of removing personal identifiers. Anonymization is the outcome—a state where data has been transformed so thoroughly that re-identifying an individual is not reasonably likely. The process is considered irreversible.

In the US, HIPAA uses the term “de-identified data” and provides two methods (Safe Harbor and Expert Determination) to achieve it. Europe’s GDPR is stricter, distinguishing between pseudonymized data (which is reversible and still considered personal data) and truly anonymized patient data, which is irreversible and falls outside GDPR’s scope.

Is anonymized data completely risk-free?

No process is 100% risk-free. While robust anonymization reduces the risk of re-identification to a very low level, it’s impossible to guarantee zero risk. New technologies and the availability of more public data create an evolving threat landscape. The goal is not to eliminate all risk but to manage it effectively, reducing it to an acceptably small level. We use tools like the “motivated intruder” test, where experts actively try to re-identify individuals, to validate the strength of our anonymization methods and ensure data can be used confidently for research.

How does GDPR define truly anonymized data?

Under GDPR, data is considered truly anonymized if it does not relate to an identified or identifiable natural person. The core requirement is that the anonymization process must be irreversible by any reasonable means. This means that even when combined with other information, there should be no reasonable way to trace the data back to an individual. If there is any reasonable likelihood of re-identification, the data is still personal data under GDPR, just as pseudonymized data is, and it remains subject to GDPR’s strict privacy rules.

Conclusion

We’ve explored anonymized patient data, a field that balances unlocking life-saving insights with the critical need to safeguard individual privacy. We’ve seen how Anonymized Patient-Level Data (APLD) accelerates drug discovery and powers vital research, and we’ve examined the techniques, from suppression to differential privacy, that make it possible.

Managing the risk of re-identification through robust governance, like the Five Safes framework, and adhering to legal standards like HIPAA and GDPR is non-negotiable. This commitment to security and ethics is the foundation of patient trust.

Looking ahead, the future of secure data collaboration is bright. Innovations like federated learning and synthetic data are breaking down old barriers, allowing researchers to ask bolder questions than ever before. At Lifebit, we are building this future, creating a world where data can be used to its full potential to improve global health outcomes, all while protecting the privacy of every individual whose data makes this progress possible.