Data Chaos? AI Harmonization to the Rescue!

AI for Data Harmonization: 2025’s Ultimate Rescue

Why Organizations Are Drowning in Data Chaos

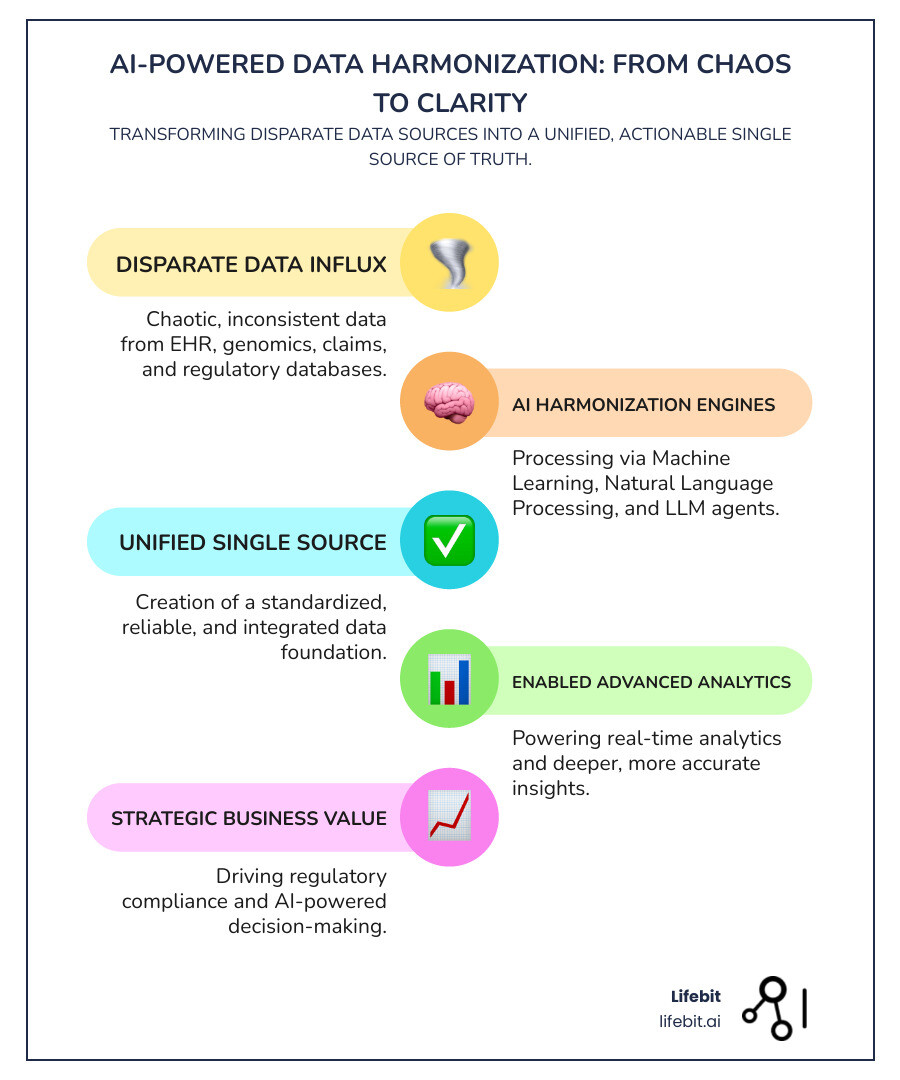

AI for data harmonization is changing how organizations manage their scattered, inconsistent datasets by using machine learning algorithms to automatically standardize, clean, and integrate data from multiple sources into a unified format.

Key AI approaches for data harmonization:

- Unsupervised (Zero-Shot) – Uses semantic similarity without training data (~43% accuracy)

- Few-Shot Learning – Achieves high accuracy with minimal examples (92.7% accuracy with SetFit)

- Supervised Learning – Leverages full training datasets for maximum precision (95%+ accuracy)

- LLM Agents – Interactive harmonization with automated pipeline synthesis

The numbers tell a stark story. By 2025, we’ll generate over 181 zettabytes of data globally. Yet 69% of CFOs consider having a single source of truth for enterprise data critical for running their business. The problem? Only 20% of organizational data is structured, leaving 80% of valuable insights trapped in unstructured silos.

Traditional rule-based harmonization methods are breaking down under this data explosion. Manual processes are too slow, brittle systems can’t adapt, and the expertise required creates bottlenecks that prevent organizations from unlocking their data’s full potential.

AI changes everything. Organizations using AI-powered harmonization report a 50% reduction in manual efforts, 60% faster processing times, and 95%+ accuracy in data standardization. Machine learning models adapt to diverse labeling conventions, while natural language processing breaks through language barriers that have historically fragmented global datasets.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where I’ve spent over 15 years developing computational biology tools and AI for data harmonization solutions that power precision medicine across federated environments. My experience building cutting-edge genomic analysis frameworks has shown me how the right harmonization approach can transform chaotic biomedical datasets into actionable insights for drug discovery and patient care.

What is Data Harmonization and Why Is It Critical?

Picture this: you’re at a global conference where everyone speaks their own dialect, uses completely different slang, and keeps changing what their words mean. That’s basically what happens with data in most organizations today.

Data harmonization is like teaching all your scattered data sources to finally speak the same language. It’s the process of taking messy, inconsistent information from different systems and transforming it into a unified format that everyone can understand. Think of it as creating a universal translator for your enterprise data. For example, a retail company might need to harmonize product data from dozens of suppliers, where one calls a shirt a “T-Shirt, L, Blue” and another calls it “Tee – Large – Navy.”

When we talk about harmonization, we’re really talking about building a single source of truth. Instead of having customer information stored differently across your sales platform (e.g., “J. Smith”), marketing database (e.g., “John Smith”), and support system (e.g., Customer ID 90210), everything gets standardized into one consistent, master record. This process, known as entity resolution, is a core outcome of successful harmonization.

The business impact is huge. The statistic about 69% of CFOs considering unified data critical highlights this urgency. When your data speaks the same language, decision-making becomes dramatically more reliable. You’re no longer building strategies on potentially misleading insights from fragmented information. A financial institution, for instance, can’t get a true 360-degree view of customer risk if their investment, credit, and retail banking data don’t align.

But data harmonization goes way beyond just making better decisions. It’s absolutely essential for regulatory compliance, especially in heavily regulated industries like healthcare and finance. When auditors come knocking for regulations like GDPR or BCBS 239, you need to prove your data is consistent, traceable, and trustworthy across the entire enterprise.

AI for data harmonization also forms the backbone of Master Data Management (MDM). While MDM focuses on governing your core business entities (customers, products, suppliers), harmonization is the technical engine that cleans and standardizes the underlying data, making effective MDM possible. It’s the difference between having a beautiful house built on sand versus one built on a solid concrete foundation.

Common Types of Data Inconsistency

Data chaos isn’t random; it falls into predictable patterns of inconsistency that harmonization is designed to fix:

- Schematic Inconsistency: This occurs when different data sources use different structures or names for the same concept. For example, your CRM might store a customer identifier as Cust_ID, while your billing system uses CustomerID, and a third-party data source uses Entity_Identifier. Harmonization involves mapping these different field names to a single, standardized name like GlobalCustomerID.

- Semantic Inconsistency: This is a more subtle problem where different sources use the same name for different concepts, or different names for the same concept. A classic example is the field “Region.” In the sales database, it might refer to a sales territory, while in the logistics database, it refers to a shipping zone. Harmonization requires understanding the context to correctly map these fields.

- Format and Value Inconsistency: This is the most common issue, where the same piece of data is represented in multiple ways. This includes different date formats (12/01/2024 vs. 2024-12-01), units of measurement (kg vs. lbs), country codes (USA vs. US vs. United States), and inconsistent categorical values (California vs. CA).
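To make the schematic and format cases concrete, here is a minimal Python sketch of what a hand-written normalizer for them might look like. The lookup tables, the Country and SignupDate field names, and the assumption that 12/01/2024 is month-first (US style) are all illustrative choices of mine, not part of any particular product.

```python
from datetime import datetime

# Hypothetical mapping from source field names to one standard name.
FIELD_MAP = {
    "Cust_ID": "GlobalCustomerID",
    "CustomerID": "GlobalCustomerID",
    "Entity_Identifier": "GlobalCustomerID",
}

# Hypothetical lookup from country spellings to one canonical code.
COUNTRY_MAP = {"usa": "US", "us": "US", "united states": "US"}

# Date formats assumed to appear in the sources, tried in order.
# Assumption: slash-separated dates are month-first.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def normalize_date(value: str) -> str:
    """Return the date as YYYY-MM-DD, or raise ValueError if unrecognized."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {value!r}")


def harmonize_record(record: dict) -> dict:
    """Rename fields to the standard schema and normalize known values."""
    out = {}
    for key, value in record.items():
        key = FIELD_MAP.get(key, key)  # schematic fix: one name per concept
        if key == "Country":
            value = COUNTRY_MAP.get(value.strip().lower(), value)
        elif key == "SignupDate":
            value = normalize_date(value)
        out[key] = value
    return out


print(harmonize_record(
    {"Cust_ID": "42", "Country": "USA", "SignupDate": "12/01/2024"}
))
```

Notice that semantic inconsistency (the “Region” problem) has no entry here: a lookup table cannot tell a sales territory from a shipping zone, which is exactly where context-aware models come in.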

The Problem with Traditional Data Harmonization

For decades, organizations have been wrestling with data harmonization using traditional Extract, Transform, Load (ETL) processes. These methods worked fine when data was simpler and smaller, but they’re breaking under today’s pressure.

Rule-based systems are the biggest culprit. These approaches require teams to manually write complex rules for every possible data scenario. It’s like trying to write a dictionary for every possible conversation that might happen in your organization. The rules are brittle, break constantly when data sources change, and require constant maintenance from expensive, specialized teams.

The manual effort involved is staggering. Data stewards spend weeks or months defining transformation rules, mapping fields, and handling exceptions. When new data sources get added (which happens constantly), the whole process starts over again. It’s simply not sustainable when you’re dealing with the 80% of unstructured data (clinical notes, emails, reports) that most organizations struggle with.

Scalability becomes impossible. Traditional methods were designed for predictable, structured data. They can’t handle the explosive growth in data volume or the incredible variety of modern data sources, from IoT sensor streams to social media feeds. What worked for a few databases doesn’t work for hundreds of systems generating data in real-time.

Perhaps most frustrating is the lack of adaptability. Real-world data is messy and constantly evolving. Traditional systems can’t handle different naming conventions, multiple languages, or the unpredictable nature of unstructured information. When the person who built these complex rule systems leaves the company, you’re often left with a black box that nobody understands, making future changes nearly impossible.

How Harmonization Powers Your Data Strategy

Here’s where things get exciting. Data harmonization isn’t just about cleaning up existing messes—it’s about building a foundation that transforms your entire data strategy.

Think of harmonization as the foundation layer of your data pyramid. Everything else—business intelligence, advanced analytics, AI initiatives—depends on having clean, consistent data underneath.

Unlocking hidden potential is where you’ll see immediate value. That 80% of unstructured data sitting in your organization contains incredible insights, but only if you can make sense of it. Harmonization transforms scattered information into actionable intelligence.

Advanced analytics becomes dramatically more powerful when built on harmonized data. Your business intelligence dashboards finally show accurate comparisons, your trend analyses become trustworthy, and your strategic insights reflect reality instead of data inconsistencies.

For AI and machine learning initiatives, harmonized data is absolutely critical. These algorithms need consistent, high-quality input to learn meaningful patterns. AI for data harmonization creates a virtuous cycle—better data enables smarter AI, which in turn improves your harmonization processes.

Perhaps most importantly, harmonization breaks down data silos between departments. When marketing, sales, and customer service all work from the same standardized customer data, collaboration becomes seamless. Everyone speaks the same data language, leading to better coordination and more effective cross-functional initiatives.

The result? Organizations that get harmonization right see faster decision-making, improved regulatory compliance, and the ability to leverage advanced technologies that were previously impossible with fragmented data.

The AI Revolution: How AI for Data Harmonization Transforms Your Workflow

Think of traditional data harmonization like trying to translate a document using only a dictionary from 1990. You’ll get somewhere, but it’s going to be slow, painful, and probably wrong in quite a few places. AI for data harmonization is like having a brilliant, multilingual assistant who never gets tired and learns something new from every document they see.

This isn’t just about making the old way faster. Machine learning, Natural Language Processing (NLP), and Large Language Models (LLMs) are completely changing how we think about bringing messy data together. Instead of writing endless rules that break the moment something unexpected shows up, AI models actually understand the semantic meaning of your data.

The results speak for themselves. Organizations using AI for data harmonization are seeing a 50% reduction in manual efforts and 60% faster processing times. But here’s the really impressive part: they’re achieving 95%+ accuracy while handling data volumes that would have been impossible to manage manually.

What makes AI so much better? Unlike those brittle rule-based systems that need constant babysitting, AI models get smarter over time. They learn to spot patterns, adapt to new data formats, and handle the kind of messy, real-world information that used to require an army of data specialists to clean up. This continuous learning, often through human-in-the-loop feedback, means the system’s accuracy improves with use.

The cost savings alone are remarkable. When you’re not paying teams of people to manually map data fields and fix errors, and when your insights are ready in days instead of months, the return on investment becomes pretty obvious.

Unsupervised (Zero-Shot) Harmonization: Getting Started Without Labels

Here’s one of the coolest things about modern AI for data harmonization: you don’t always need perfectly labeled training data to get started. Zero-shot learning is like having a really smart intern who can figure out what you want them to do, even when you haven’t had time to write detailed instructions.

The magic happens through semantic similarity. AI models, particularly transformer-based language models, convert your data labels (like “Patient Date of Birth” or “DOB”) into high-dimensional numerical representations called vectors or embeddings. In this mathematical “semantic space,” labels with similar meanings are positioned close together. It’s like the AI creates a map where “customer,” “client,” and “buyer” all cluster in the same neighborhood because they represent the same core concept.

Sentence Transformers is a popular library that makes this possible. It can take labels from different systems and figure out which ones are talking about the same thing by calculating their cosine similarity—a measure of the angle between their vectors. The higher the similarity score (closer to 1.0), the more likely two labels mean the same thing.
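Here is a small, self-contained Python sketch of the matching step. To keep it runnable without downloading a model, it swaps in a toy embedding built from character trigram counts; that stand-in (toy_embed) and the best_match helper are my own illustrative names, and a real setup would replace the toy vectors with embeddings from a language model. The cosine similarity calculation itself is the standard one.

```python
import math
from collections import Counter


def toy_embed(label: str) -> Counter:
    """Stand-in embedding: character trigram counts (NOT a language model)."""
    text = f"  {label.lower()}  "
    return Counter(text[i:i + 3] for i in range(len(text) - 2))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors; 1.0 means same direction."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(source_label: str, target_labels: list) -> tuple:
    """Return the target label with the highest similarity, plus its score."""
    src = toy_embed(source_label)
    scored = [(cosine_similarity(src, toy_embed(t)), t) for t in target_labels]
    score, label = max(scored)
    return label, score


targets = ["Date of Birth", "Customer Name", "Postal Code"]
print(best_match("birth_date", targets))
```

The toy embedding only captures spelling overlap, so it would not link “DOB” to “Date of Birth”. Closing that gap between spelling and meaning is the whole point of using a semantic model for the embedding step.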

Now, let’s be honest about the limitations. An unsupervised model using Sentence Transformers achieved about 43% accuracy on a broad dataset, which improved to around 53% when working with a smaller, more focused set of labels. That might not sound amazing, but think about it this way: you just automated nearly half of your data harmonization work without any training data at all. This is a massive head start.

This approach is perfect for exploratory data analysis and getting that crucial initial mapping done. It’s like having a rough draft that gets you roughly 40 to 50% of the way there, so your team can focus on the tricky edge cases instead of starting from a blank slate.

Few-Shot Learning: High Accuracy with Minimal Effort

What happens when you have just a little bit of labeled data? Maybe a few dozen examples, but nowhere near the thousands typically needed for traditional machine learning? Few-shot learning steps in like a quick study who can learn a new language from just a few conversation examples.

The beauty of few-shot learning is in its efficiency. Instead of needing massive training datasets, these models can generalize from just a handful of examples per category. We’re talking about providing just one or two examples of a mapping (e.g., showing it that user_id maps to UserID), not hundreds.

The SetFit model is a great example of this approach in action. It works by taking a pre-trained language model and fine-tuning it on your small set of labeled pairs. This two-stage process first generates rich embeddings for your specific data and then trains a simple classification head on top. In testing, this method achieved an impressive 92.7% accuracy on a dataset with 162 labels. What’s really remarkable is that this performance was competitive with much larger models that had been trained on thousands of examples.
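To give a feel for the “embeddings first, simple classifier second” idea, here is a deliberately simplified Python sketch. It is not SetFit: it skips the fine-tuning stage entirely, uses a toy character trigram embedding as a stand-in for a language model, and uses a nearest-centroid rule as the classification head. All function names and the example labels are hypothetical.

```python
import math
from collections import Counter, defaultdict


def toy_embed(label: str) -> Counter:
    """Stand-in embedding: character trigram counts (NOT a language model)."""
    text = f"  {label.lower()}  "
    return Counter(text[i:i + 3] for i in range(len(text) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * \
        math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fit_centroids(examples: list) -> dict:
    """Sum the embeddings of the few labeled examples for each target field.

    Cosine similarity ignores vector length, so a sum points the same
    way as a mean and works as the class centroid.
    """
    centroids = defaultdict(Counter)
    for source_label, target in examples:
        centroids[target].update(toy_embed(source_label))
    return dict(centroids)


def predict(label: str, centroids: dict) -> str:
    """Assign the label to the target field with the closest centroid."""
    vec = toy_embed(label)
    return max(centroids, key=lambda target: cosine(vec, centroids[target]))


# Two labeled examples per target field: the "few shots".
few_shot_examples = [
    ("user_id", "UserID"),
    ("uid", "UserID"),
    ("dob", "BirthDate"),
    ("birth_dt", "BirthDate"),
]
centroids = fit_centroids(few_shot_examples)
print(predict("userid", centroids), predict("date_of_birth", centroids))
```

The design choice worth noticing is how little the second stage does. Once the embeddings are good, a very simple classifier is often enough, which is why a handful of examples can go a long way.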

This is a game-changer for organizations dealing with specialized or constantly evolving data. Maybe you’re working with medical terminology that changes as new treatments emerge, or you’re handling data from different countries with unique naming conventions. Few-shot learning lets you quickly adapt to these situations without the massive data collection effort that traditional approaches require.

The practical impact is huge. You can go from having a new data source to having a working harmonization solution in days instead of months, with accuracy levels that would have required extensive manual work to achieve.

Supervised Learning & LLMs: The Power of Full-Scale AI for Data Harmonization

When you do have plenty of labeled data, supervised learning becomes your powerhouse approach. Think of it as training a specialist who’s seen thousands of examples and can handle even the trickiest cases with confidence.

Supervised learning treats harmonization like a classification problem. You show the AI model lots of examples of messy data paired with their clean, harmonized versions. The model learns the patterns, relationships, and subtle rules that govern how your data should be transformed. It can work with both the actual data values and the context around them, learning from synonyms and understanding label characteristics.

But here’s where things get really exciting: Large Language Models are changing everything. LLM agents aren’t just classification tools – they’re like having a data expert who can think, reason, and even ask questions when something doesn’t make sense.

These LLM agents can engage in interactive harmonization conversations. Imagine this dialogue:

- You: “Harmonize the ‘gender’ field from our new patient dataset with our standard schema.”

- LLM Agent: “I’ve analyzed the source data and found the values ‘M’, ‘F’, ‘Male’, ‘Female’, ‘1’, and ‘2’. Our standard schema uses ‘Male’ and ‘Female’. I propose mapping ‘M’, ‘Male’, and ‘1’ to ‘Male’, and ‘F’, ‘Female’, and ‘2’ to ‘Female’. Is this correct?”

- You: “Yes, that’s correct. Please apply this rule.”

This is like pair programming, but for data harmonization. The automation potential is remarkable. LLM agents can synthesize entire data harmonization pipelines, generating the complete sequence of steps needed to clean and standardize your datasets. This means they’re not just processing your data – they’re designing reusable workflows that can handle similar data in the future.
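Once a mapping like the one in that dialogue is approved, it can be captured as a small reusable step. The Python sketch below shows one way that might look; the make_value_mapper helper, the "REVIEW" placeholder for unexpected values, and the record layout are assumptions for illustration, not the output of any specific agent.

```python
# The mapping approved in the dialogue above.
GENDER_MAP = {
    "M": "Male", "Male": "Male", "1": "Male",
    "F": "Female", "Female": "Female", "2": "Female",
}


def make_value_mapper(field: str, mapping: dict, unknown: str = "REVIEW"):
    """Build a reusable step that standardizes one field of a record.

    Values missing from the mapping are replaced with `unknown` so a
    person can look at them instead of having them pass silently.
    """
    def step(record: dict) -> dict:
        if field not in record:
            return record
        raw = str(record[field]).strip()
        return {**record, field: mapping.get(raw, unknown)}
    return step


harmonize_gender = make_value_mapper("gender", GENDER_MAP)

patients = [
    {"id": 1, "gender": "M"},
    {"id": 2, "gender": 2},
    {"id": 3, "gender": "unknown"},
]
print([harmonize_gender(p) for p in patients])
```

Because the step is just a function built from a field name and a mapping, the same rule can be applied to the next dataset that arrives with the same quirks.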

Recent scientific research, including work on Interactive Data Harmonization with LLM Agents, explores how these agents can empower data experts while automating the creation of reusable pipelines for mapping datasets to standard formats. It’s the kind of advancement that transforms harmonization from a technical bottleneck into a strategic advantage.

The combination of reasoning capabilities with harmonization expertise means these systems can handle the complexity and nuance that real-world data throws at them, while still maintaining the speed and scalability that modern organizations demand.

Implementing AI for Data Harmonization: A Practical Roadmap

Getting started with AI for data harmonization doesn’t have to feel overwhelming. Think of it like learning to cook – you wouldn’t start by preparing a seven-course meal for twenty guests. Instead, you’d master one dish at a time, building confidence and skills along the way.

The journey involves four key phases: defining clear goals, choosing the right approach, implementing with validation, and creating sustainable processes. Success depends on strong data governance and federated governance where data stays secure in its original location while insights flow freely across your organization.

Step 1: Define Clear Goals and Scope

Before diving into algorithms and models, take a step back and ask the fundamental question: what problem are you actually trying to solve?

Start with one focused project rather than attempting to harmonize your entire data universe. This isn’t about thinking small – it’s about thinking smart. Pick a specific pain point that keeps your team up at night. Maybe it’s customer records that don’t match between your sales and marketing systems, or clinical trial data that takes weeks to standardize before analysis can begin.

Identify the key business questions that your harmonized data will answer. What insights are currently buried under inconsistent formats and conflicting field names? What decisions are your teams making based on incomplete pictures because data can’t talk to each other? These questions will guide every technical choice you make.

Define what success looks like with concrete metrics. Will you measure success by cutting manual data cleaning time in half? Improving data quality scores by 30%? Reducing the time from raw data to actionable insights from weeks to days? Having clear targets helps you stay focused when technical challenges arise.

Understanding your data sources is equally critical. What types of data are you working with – structured databases, unstructured documents, or a mix of both? How often does it update? What formats and standards already exist? This groundwork prevents surprises later in the process.

Step 2: Choose the Right AI Approach

Now comes the strategic decision: which AI for data harmonization approach fits your situation? It’s like choosing the right tool for a job – a hammer works great for nails, but you wouldn’t use it to tighten screws.

| Approach | Data Availability | Required Accuracy | Effort (Labeling) | Best Use Cases |
|---|---|---|---|---|
| Unsupervised | No/Minimal Labeled | Moderate | Low | Exploratory analysis, initial mapping, large datasets with no prior labeling |
| Few-Shot Learning | Limited Labeled | High | Medium | Niche datasets, rapidly evolving data, quick prototyping, cost-effective accuracy |
| Supervised Learning | Abundant Labeled | Very High | High | Established data types, maximum precision required, critical business processes |

Assess your data quality and availability honestly. Do you have clean, labeled examples that show how data should be harmonized? If your answer is “not really,” don’t worry – unsupervised methods can get you started with surprisingly good results. If you have some examples but not thousands, few-shot learning might be your sweet spot.

Match the model to your use case based on your tolerance for imperfection and available resources. For broad data exploration where 43% accuracy gives you valuable insights, unsupervised approaches work well. When you need 92% accuracy with minimal labeling effort, few-shot learning delivers impressive results. For mission-critical processes where 95%+ accuracy is non-negotiable, supervised learning is worth the investment.

Step 3: Implement, Validate, and Iterate

Implementation is where theory meets reality, and reality often has a sense of humor about our best-laid plans.

Combine automation with human expertise from the start. Your AI for data harmonization system should work with your data stewards, not replace them. This is often called a “human-in-the-loop” system. The AI performs the initial mapping, flagging suggestions with a confidence score. High-confidence mappings (>95%) can be automated, while low-confidence ones are routed to a data steward via a simple user interface. The steward can then accept, reject, or correct the AI’s suggestion. This feedback is crucial, as it’s used to continuously retrain and improve the model, reducing the need for manual review over time. Think of it as a partnership where AI handles the heavy lifting while humans provide the wisdom and context.
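The routing logic behind that partnership can be very small. Here is a minimal Python sketch, assuming each AI suggestion carries a confidence score between 0 and 1; the Suggestion class and route function are hypothetical names, and the 0.95 threshold simply mirrors the figure above.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    source_field: str
    target_field: str
    confidence: float  # assumed to be in the range 0.0 to 1.0


def route(suggestions: list, threshold: float = 0.95) -> tuple:
    """Split suggestions into auto-applied mappings and a steward review queue."""
    auto_apply, needs_review = [], []
    for s in suggestions:
        if s.confidence > threshold:
            auto_apply.append(s)
        else:
            needs_review.append(s)
    return auto_apply, needs_review


suggestions = [
    Suggestion("Cust_ID", "GlobalCustomerID", 0.99),
    Suggestion("Region", "SalesTerritory", 0.61),
]
auto_apply, needs_review = route(suggestions)
print(len(auto_apply), "applied automatically;", len(needs_review), "sent to review")
```

The steward’s decisions on the review queue are what you would feed back as new labeled examples when retraining the model.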

Create reusable pipelines that can adapt and grow with your needs. Build your harmonization processes like LEGO blocks – modular pieces that connect in different ways for different projects. This approach, supported by a general-purpose data harmonization framework, uses parameterizable operations and automated bookkeeping to ensure your work scales beyond the first project.
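As a rough illustration of the LEGO-block idea, the Python sketch below builds a pipeline out of small parameterized operations. The operation names (rename_field, convert_units, build_pipeline) and the weight fields are invented for this example and are not taken from any specific framework.

```python
from functools import reduce


def rename_field(old: str, new: str):
    """Operation: move a value from one field name to another."""
    def op(record: dict) -> dict:
        if old not in record:
            return record
        out = {k: v for k, v in record.items() if k != old}
        out[new] = record[old]
        return out
    return op


def convert_units(field: str, factor: float, ndigits: int = 2):
    """Operation: multiply a numeric field by a conversion factor."""
    def op(record: dict) -> dict:
        if field not in record:
            return record
        return {**record, field: round(record[field] * factor, ndigits)}
    return op


def build_pipeline(*ops):
    """Chain operations so each one receives the previous one's output."""
    def run(record: dict) -> dict:
        return reduce(lambda rec, op: op(rec), ops, record)
    return run


LBS_TO_KG = 0.45359237  # exact definition of the pound in kilograms

to_metric = build_pipeline(
    rename_field("weight_lbs", "weight_kg"),
    convert_units("weight_kg", LBS_TO_KG),
)
print(to_metric({"sku": "A1", "weight_lbs": 10}))
```

Each block knows nothing about the others, so the same rename_field or convert_units can be reused with different parameters in the next project.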

Monitor performance and iterate continuously because data never stays still. Set up feedback loops to track accuracy, efficiency, and user satisfaction. When new data types appear or business requirements shift, your system should adapt gracefully. This iterative approach ensures your harmonization solution remains effective as your organization evolves.

Step 4: Scale, Govern, and Maintain

The key to long-term success is moving beyond a single project to an enterprise-wide capability. This requires a focus on sustainable processes.

Establish Strong Data Governance: Create a data governance council with representatives from business and IT. This group is responsible for defining and approving the standard data models (e.g., the official definition of a “customer”), setting data quality rules, and resolving disputes. Without clear ownership, even the best AI will struggle with ambiguity.

Centralize and Version Your Schemas: Use a data catalog or a central schema repository to store your standardized data models. This becomes the single source of truth for how data should look. Implement version control for these schemas and the harmonization rules, just as you would with software code. This allows you to track changes, roll back if necessary, and understand the evolution of your data landscape.

Automate Monitoring for Data Drift: Data sources change unexpectedly. A supplier might add a new column, or an internal application might change its date format. Implement automated monitoring to detect this “data drift.” When a change is detected, the system can alert the data stewards to review and update the harmonization pipeline before it causes downstream failures in your analytics.
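A drift check does not need to be sophisticated to be useful. The Python sketch below compares incoming rows against an expected set of columns and an expected date format; the column names, the detect_drift function, and the alert wording are assumptions made for the example.

```python
import re

# Hypothetical schema the harmonization pipeline was built against.
EXPECTED_COLUMNS = frozenset({"customer_id", "signup_date", "country"})
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def detect_drift(rows: list,
                 expected_columns: frozenset = EXPECTED_COLUMNS,
                 date_field: str = "signup_date") -> list:
    """Return human-readable alerts for schema or format changes in a batch."""
    alerts = []
    seen = set()
    for row in rows:
        seen.update(row)  # collect every column name that appears

    for col in sorted(seen - expected_columns):
        alerts.append(f"new column: {col}")
    for col in sorted(expected_columns - seen):
        alerts.append(f"missing column: {col}")

    bad_dates = sum(
        1 for row in rows
        if date_field in row and not ISO_DATE.fullmatch(str(row[date_field]))
    )
    if bad_dates:
        alerts.append(f"{bad_dates} value(s) in {date_field} not in YYYY-MM-DD format")
    return alerts


batch = [
    {"customer_id": "1", "signup_date": "2024-12-01", "country": "US"},
    {"customer_id": "2", "signup_date": "12/01/2024", "country": "US",
     "loyalty_tier": "gold"},
]
for alert in detect_drift(batch):
    print(alert)
```

In practice you would run a check like this on every incoming batch and send the alerts to your data stewards before the data reaches downstream analytics.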

The key to success is remembering that AI for data harmonization is a journey, not a destination. Each iteration teaches you something new about your data, your processes, and your organization’s needs. Embrace the learning process, and you’ll build something truly valuable and sustainable.

The Future of Harmonized Data: Trends and Innovations

The world of AI for data harmonization is evolving at breakneck speed, and frankly, it’s exciting to witness. We’re not just talking about incremental improvements – we’re looking at fundamental shifts that will make today’s data challenges seem quaint by comparison.

Agentic Data Harmonization: LLM agents are already showing us what’s possible with interactive harmonization, but they’re just getting started. Picture this: instead of wrestling with complex mapping rules or spending hours training models, you’ll simply have a conversation with an AI. “Hey, can you harmonize these patient records with our clinical trial data?” And it just… does it. These agents will learn your preferences, remember your business rules, and get smarter with every interaction. This agentic data harmonization approach means your marketing team won’t need a PhD in data science to merge customer datasets.

Real-Time Harmonization at the Edge: The demand for instant insights is pushing us toward real-time harmonization – and not a moment too soon. Batch processing feels increasingly outdated when decisions need to happen in minutes, not hours. This involves integrating harmonization logic directly into data streaming platforms like Apache Kafka and processing events as they occur. This shift is particularly crucial in pharmacovigilance, where identifying adverse drug reactions in real-time can prevent serious harm. When someone reports a side effect in Tokyo, that information needs to be harmonized and analyzed alongside similar reports from London and New York instantly.

Privacy-Preserving Federated Learning: This is solving one of the biggest headaches in data harmonization: getting everyone to share their data. Spoiler alert – they won’t, and they shouldn’t have to. Instead, harmonization models will train across distributed datasets without the data ever leaving its secure home. The model is sent to the data, trained locally, and only the aggregated, anonymized model updates are shared back to a central server. This is a game-changer for biomedical research, where hospitals and research institutions can collaborate on massive studies while keeping their sensitive patient data exactly where it belongs.
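The aggregation step at the central server is often a weighted average of the locally trained parameters, commonly known as federated averaging. Here is a minimal Python sketch of that one step, assuming each site reports a flat list of model weights and the number of records it trained on; the federated_average function and the site values are illustrative only.

```python
def federated_average(updates: list) -> list:
    """Combine local model weights into one global model.

    `updates` is a list of (weights, n_samples) pairs, one per site.
    Each site's weights count in proportion to its number of samples.
    Only these weights travel to the server; the raw records never do.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no training samples reported")

    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all sites must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical hospitals with different amounts of local data.
hospital_a = ([1.0, 2.0], 100)
hospital_b = ([3.0, 4.0], 300)
print(federated_average([hospital_a, hospital_b]))
```

Plain averaging keeps the records at home, but it does not by itself anonymize the updates. Production systems typically add protections such as secure aggregation or differential privacy on top of this step.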

Explainable AI (XAI) for Harmonization: As AI takes on more responsibility, the need for transparency becomes paramount. Explainable AI (XAI) provides a window into the AI’s decision-making process. For harmonization, this means understanding why the model mapped “Acme Corp.” to “Acme Corporation, Inc.” Was it based on address similarity, a known alias, or another factor? This auditability is non-negotiable in regulated industries like finance for anti-money laundering (AML) checks or in healthcare for clinical data provenance. XAI builds trust with users and simplifies compliance.

AI-Driven Data Quality Frameworks: The future also holds frameworks that go far beyond simple harmonization. Think of them as your data’s personal health monitor – constantly checking for issues, automatically fixing problems, and alerting you when something needs attention. These systems won’t just harmonize your data; they’ll ensure it stays clean, consistent, and ready for whatever analysis you throw at it.

At Lifebit, we’re already seeing this future unfold through our federated platform. We’re enabling secure, real-time access to global biomedical data with built-in harmonization capabilities that work across hybrid data ecosystems. It’s not science fiction anymore – it’s happening now.

The transformation ahead will turn data harmonization from a technical nightmare into something that just works in the background. And honestly? It’s about time.

Conclusion

The data explosion we’re experiencing isn’t just a technical challenge – it’s fundamentally changing how successful organizations operate. We’ve explored how the traditional world of manual, rule-based harmonization simply can’t keep pace with the 181 zettabytes of data we’ll generate by 2025. Those old approaches leave too many organizations struggling with fragmented insights and missed opportunities.

AI for data harmonization represents more than just a technological upgrade – it’s a complete paradigm shift. The numbers speak for themselves: 50% reduction in manual efforts, 60% faster processing times, and 95%+ accuracy in data standardization. Whether you’re starting with zero-shot learning to explore uncharted datasets, leveraging few-shot approaches for quick wins with minimal training data, or deploying full supervised learning with LLM agents for maximum precision, AI gives you the flexibility to match your approach to your specific needs.

What excites me most is how these advances are democratizing data harmonization. You no longer need armies of data engineers spending months writing brittle ETL rules. Instead, AI learns patterns, adapts to new formats, and scales effortlessly as your data grows.

At Lifebit, we’ve seen how transformative harmonized data can be in the biomedical field. Our federated AI platform enables researchers and organizations to securely access and harmonize global biomedical and multi-omic data in real-time. Through our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer), we’re powering breakthrough research while maintaining the strict compliance and governance standards that biopharma, governments, and public health agencies require.

The future belongs to organizations that can turn their data chaos into clarity. When you harmonize your datasets using AI, you’re not just cleaning up a mess – you’re building the foundation for every advanced analytics initiative, every AI model, and every data-driven decision that will drive your organization forward.

Ready to see what harmonized data can do for your organization? Learn how AI enables real-time adverse drug reaction surveillance and discover how the right approach to data harmonization can unlock insights you never knew existed.