The Power of Collaboration: How Federated Learning Transforms Healthcare Data


Why Healthcare Needs Federated Learning to Break Down Data Silos

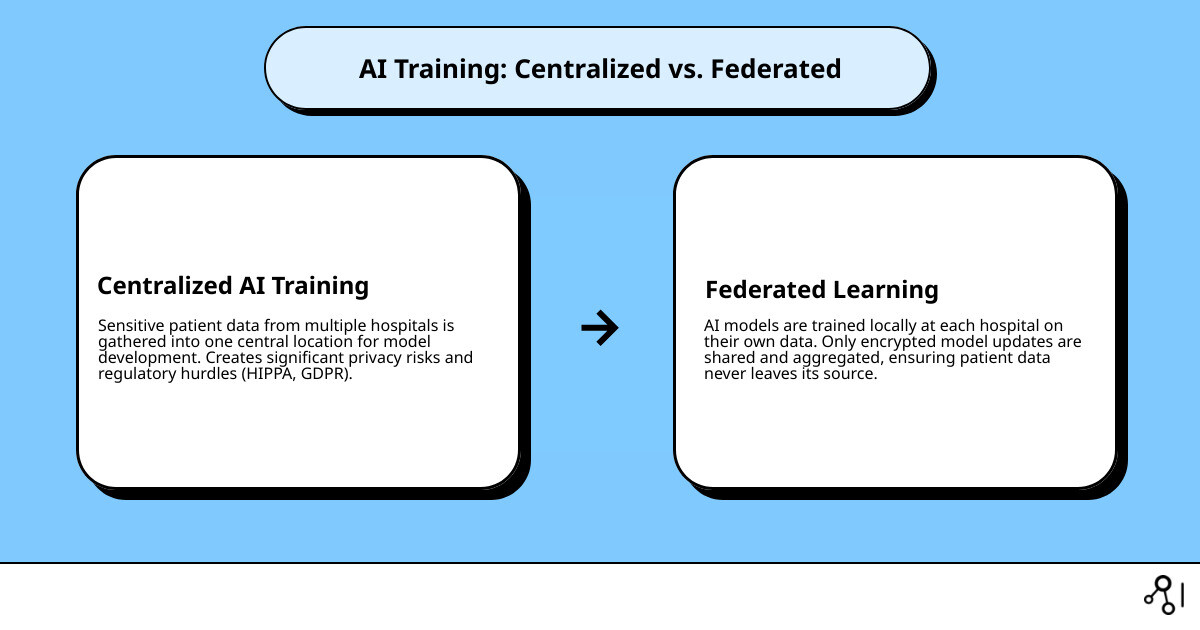

Federated learning in healthcare enables collaborative AI model training across hospitals and research institutions without sharing sensitive patient data. Instead of moving data to a central server, the model is brought to the data. This allows each site to train locally while contributing to a shared, more powerful AI system. Key benefits include improved privacy protection, simplified regulatory compliance (HIPAA, GDPR), improved model performance from diverse datasets, reduced bias, and cost efficiency by avoiding data centralization.

The healthcare industry’s vast data resources—from EHRs and medical images to genomics—are often locked in institutional silos. Traditional AI requires centralizing this data, creating significant privacy and legal barriers.

Recent research indicates a gap between the explosion in federated learning publications and real-world clinical deployment: only an estimated 5.2% of FL studies have reached clinical implementation. This highlights both the potential and the implementation challenges. The COVID-19 pandemic underscored the need for rapid, collaborative research, where federated learning proved critical for developing predictive models without compromising patient privacy.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. With over 15 years of experience in genomics and federated learning in healthcare, my work focuses on breaking down data silos while upholding the highest standards of privacy and compliance.

Core Concepts and Applications of Federated Learning in Healthcare

Think of federated learning in healthcare as a collaborative research project where participants analyze data locally and share only learned patterns—not the raw data itself. This architecture elegantly solves the data silo problem without compromising patient privacy or institutional data ownership. FL is the ideal approach when data is inherently distributed across multiple locations, is highly sensitive and subject to strict legal protections (like PHI), and when combining insights from these distributed datasets can create more robust, accurate, and less biased predictive models.

Key applications include:

- Rare disease research: For diseases affecting few individuals, no single institution has enough data for meaningful analysis. FL allows international consortia to pool statistical power from their patient cohorts to identify genetic markers, understand disease progression, and develop diagnostic models without moving sensitive patient data across borders.

- Medical imaging analysis: This is the most mature application of FL. Hospitals can collaboratively train deep learning models for tasks like tumor segmentation in brain MRIs, cancer detection in mammograms, or classifying lung diseases from chest X-rays. By learning from diverse scanner types and patient populations, the resulting model is more generalizable and accurate than any single-site model.

- Predictive modeling with EHR data: By training on EHR data from different healthcare systems, FL models can learn from diverse patient journeys and care pathways. This improves predictions for critical outcomes like sepsis onset, 30-day hospital readmission rates, or individual patient response to a specific medication, accounting for variations in clinical practice and demographics.

- Genomics and drug discovery: FL enables the analysis of highly sensitive genomic information at scale. Pharmaceutical companies can collaborate to train models that predict a compound’s efficacy or toxicity, and academic researchers can identify novel drug targets by analyzing genetic data from thousands of individuals—all while the underlying genomic sequences remain securely within each institution’s firewall.

- Wearable and IoMT data: Data from consumer health monitors (e.g., smartwatch ECGs, continuous glucose monitors) can be used to build models for early disease detection or chronic condition management. With FL, this analysis can happen directly on the user’s device (cross-device FL), ensuring that personal health data never leaves their control.

Traditional centralized approaches may still be preferable when data can be safely and legally pooled (e.g., using publicly available, de-identified datasets like MIMIC-IV) or for applications requiring real-time, sub-second interactions that FL’s iterative communication process cannot support.

Data Modalities and FL Maturity

The maturity and adoption of FL vary significantly by data type. Medical imaging is the most mature area, accounting for 41.7% of healthcare FL studies, driven by standardized formats like DICOM and clear clinical use cases. Electronic Health Records (EHR/EMR) follow at 23.7%, primarily used for predictive analytics on structured data. Wearable and IoMT sensor data (13.6%) is a rapidly growing field for real-time health monitoring. Genomics and multi-omics data (2.3%) holds enormous potential for precision medicine but sees cautious adoption due to extreme data sensitivity and complexity.

Across all these modalities, data harmonization—ensuring that data from different sources is comparable and semantically consistent—is the single biggest technical challenge. This involves standardizing variable definitions (e.g., ensuring “blood pressure” is measured and recorded uniformly), aligning measurement units, and handling missing data consistently. The clinical specialties leading FL adoption are currently Radiology, Internal Medicine, Ophthalmology, and Oncology, where large, standardized datasets are most readily available.
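To make the harmonization step concrete, here is a minimal sketch of mapping site-specific fields onto a shared schema. The site names, column names, and the `harmonize` helper are hypothetical and chosen only for illustration; a real project would map to a Common Data Model rather than a hand-written dictionary.

```python
# Hypothetical per-site mappings: local column -> (common column, unit conversion factor).
KPA_TO_MMHG = 7.50062  # 1 kPa is approximately 7.50062 mmHg

SITE_MAPPINGS = {
    "hospital_a": {"sbp_mmhg": ("systolic_bp_mmhg", 1.0)},
    "hospital_b": {"sys_pressure_kpa": ("systolic_bp_mmhg", KPA_TO_MMHG)},
}

def harmonize(site, record):
    """Rename fields and convert units to the shared schema; missing values become None."""
    out = {}
    for local_name, (common_name, factor) in SITE_MAPPINGS[site].items():
        value = record.get(local_name)
        out[common_name] = None if value is None else round(value * factor, 1)
    return out

a = harmonize("hospital_a", {"sbp_mmhg": 120.0})
b = harmonize("hospital_b", {"sys_pressure_kpa": 16.0})
print(a, b)  # both sites now report systolic_bp_mmhg in the same unit
```

Each site would run this kind of mapping locally before training, so the model sees the same variable definitions and units everywhere.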

Flavors of Federated Learning in Practice

The specific FL approach depends on how data is distributed across participants:

- Horizontal FL (HFL): This is the most common type. Participants have the same type of data (same features) but for different patients. For example, several hospitals collaborate to train a pneumonia detection model. Each hospital has chest X-rays and corresponding labels (pneumonia/no pneumonia) for its own patient population.

- Vertical FL (VFL): This is used when participants have different types of data (different features) for the same group of patients. For instance, a hospital has clinical records for a cancer patient cohort, while a research lab has their genomic data. VFL allows them to build a unified predictive model incorporating both clinical and genomic features without either party having to share its raw data.

- Federated Transfer Learning (FTL): This is a more advanced approach applied when participants have different data types and different patient populations. For example, a model trained on a large, comprehensive dataset for disease X can be adapted and fine-tuned for predicting disease Y at a different hospital with a smaller, distinct dataset, transferring the “knowledge” from the first model.
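The difference between horizontal and vertical partitioning can be sketched with a toy table. The array below is synthetic and the variable names are illustrative assumptions, not part of any FL framework.

```python
import numpy as np

# Toy table: 6 patients (rows) x 4 features (columns). Values are synthetic.
data = np.arange(24).reshape(6, 4)

# Horizontal FL: same features, different patients -> split by rows.
hospital_1, hospital_2 = data[:3, :], data[3:, :]

# Vertical FL: same patients, different features -> split by columns.
clinical_site, genomics_lab = data[:, :2], data[:, 2:]

print(hospital_1.shape, hospital_2.shape)       # (3, 4) (3, 4)
print(clinical_site.shape, genomics_lab.shape)  # (6, 2) (6, 2)
```

In the vertical case the parties also need a privacy-preserving way to align records for the same patients, which this sketch does not show.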

Real-world validation during the COVID-19 pandemic, detailed in scientific research on real-world FL for COVID-19, proved that federated approaches can deliver critical insights in a global health emergency. Today, cross-silo FL (involving a few large organizations like hospitals) dominates the landscape, but cross-device approaches (involving millions of personal devices like smartphones and wearables) are emerging as a powerful paradigm for personalized health.

Technical Deep Dive: How Federated Learning Works

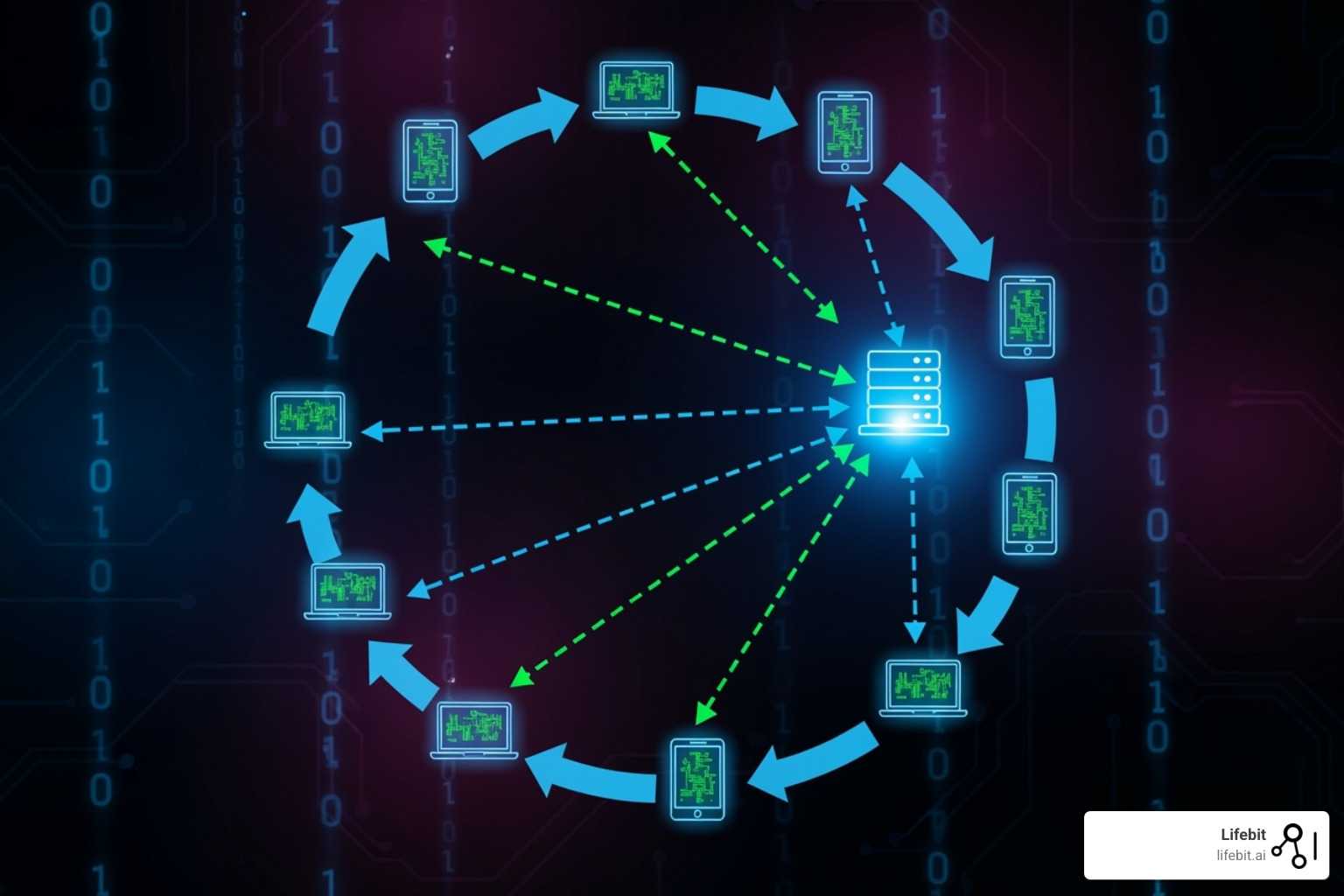

At its core, federated learning in healthcare is a multi-step, cyclical process orchestrated to train a shared model without sharing data. The process can be broken down as follows:

- Initialization: A central coordinator or server defines the model architecture (e.g., a neural network for image analysis) and initializes its parameters (weights).

- Broadcast: The coordinator sends this initial global model to a selection of participating clients (e.g., hospitals).

- Local Training: Each hospital trains this model on its local patient data for a few epochs. This computes an update (typically gradients or model weights) that represents the “lessons learned” from its unique dataset. The sensitive patient data never leaves the hospital’s secure environment.

- Secure Aggregation: Each hospital sends its encrypted or protected model update back to the central coordinator. The coordinator aggregates these updates—for example, by calculating a weighted average—to create a new, improved global model. Privacy-enhancing technologies are used here to ensure the coordinator cannot reverse-engineer any individual hospital’s update.

- Model Update & Iteration: The coordinator broadcasts the newly improved global model back to the participants, and the cycle (steps 2-5) repeats. This iterative process continues until the model’s performance on a validation set converges and meets a predefined accuracy threshold.
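The cycle above can be sketched in a few lines of NumPy. This is a simplified illustration, assuming a linear least-squares model, synthetic data, and three simulated "hospitals" in one process; the function names `local_train` and `fedavg_round` are hypothetical, and no encryption or networking is shown.

```python
import numpy as np

def local_train(weights, X, y, lr=0.1, epochs=5):
    """A few epochs of gradient descent on a linear model, using local data only."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

def fedavg_round(global_w, clients):
    """One broadcast / local-train / aggregate cycle with a sample-size-weighted average."""
    updates = [local_train(global_w, X, y) for X, y in clients]
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    return np.average(updates, axis=0, weights=sizes)

rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (50, 200, 80):  # three synthetic sites with different sample counts
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w + 0.01 * rng.normal(size=n)))

w = np.zeros(2)          # initialization
for _ in range(30):      # repeated rounds
    w = fedavg_round(w, clients)
print(w)                 # close to true_w
```

Only the weight vectors cross the site boundary in this sketch; the arrays `X` and `y` stay inside `local_train`.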

Communication Topologies

The network structure for sharing these updates is the communication topology.

- Centralized (Client-Server) Topology: This is the dominant architecture, used in 83.7% of studies. A single, trusted coordinator (the server) manages the entire process, from model distribution to aggregation. This is straightforward to implement, manage, and audit, but it creates a single point of failure and requires all participants to trust the central entity.

- Decentralized (Peer-to-Peer) Approaches: Used in 10.6% of studies, this topology eliminates the central server. Participants communicate and aggregate model updates directly with each other. This increases robustness and removes the single point of failure, but coordination, security, and auditing become significantly more complex.

- Hierarchical (or Hybrid) Models: These models offer a middle ground, often involving regional aggregators that coordinate with a smaller group of local hospitals before sending a consolidated update to a global coordinator. This balances the simplicity of the centralized model with the robustness of the decentralized one.
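One reason hierarchical aggregation is attractive is that, for a sample-weighted average, aggregating in two levels gives the same result as aggregating everything at once. The sketch below checks this on synthetic updates; the regional grouping and the `weighted_mean` helper are assumptions made for illustration.

```python
import numpy as np

def weighted_mean(updates, counts):
    """Sample-count-weighted average; returns the mean and the total count."""
    counts = np.asarray(counts, dtype=float)
    return np.average(np.stack(updates), axis=0, weights=counts), counts.sum()

# Synthetic updates from four hospitals, grouped into two hypothetical regions.
updates = [np.array([1.0, 0.0]), np.array([3.0, 2.0]),
           np.array([0.0, 4.0]), np.array([2.0, 2.0])]
counts = [100, 300, 50, 150]

# Flat (client-server) aggregation over all four sites.
flat, _ = weighted_mean(updates, counts)

# Hierarchical aggregation: regional aggregators first, then the global coordinator.
region_a = weighted_mean(updates[:2], counts[:2])
region_b = weighted_mean(updates[2:], counts[2:])
hierarchical, _ = weighted_mean([region_a[0], region_b[0]],
                                [region_a[1], region_b[1]])

print(flat, hierarchical)  # the two results match
```

The equivalence holds because each regional aggregator forwards its total sample count along with its averaged update.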

Comparing Performance: Federated Learning in Healthcare vs. Centralized Training

Does FL perform as well as a model trained on all the data in one place? Under ideal conditions, yes. However, real-world healthcare data is notoriously “non-IID” (non-independent and identically distributed). This statistical heterogeneity is a core challenge. For example:

- Feature Skew: One hospital might use GE MRI scanners while another uses Siemens, leading to systematic differences in image properties.

- Label Skew: A specialist cancer center will have a much higher prevalence of malignant tumors in its data than a general hospital.

- Quantity Skew: A large university hospital may contribute millions of data points, while a small rural clinic contributes only thousands.

This non-IID data can cause local models to diverge during training, degrading the performance of the aggregated global model. The standard aggregation algorithm, Federated Averaging (FedAvg), can struggle under these conditions. To combat this, researchers have developed advanced solutions like FedProx, which adds a term to the local training objective to keep the local model updates from drifting too far from the global model. Other techniques like domain adaptation and smart client selection (prioritizing clients with more informative data) also help. With these advances, well-designed federated models can now routinely achieve 95-98% of the performance of a centralized model, offering a powerful trade-off for vastly superior privacy protection.
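The FedProx idea mentioned above can be sketched as one extra gradient term. FedProx adds a proximal penalty of the form (mu / 2) * ||w - w_global||^2 to the local loss; the code below is a minimal illustration on a synthetic linear model, and the function name, learning rate, and value of `mu` are illustrative assumptions rather than recommended settings.

```python
import numpy as np

def fedprox_local_train(global_w, X, y, mu=0.0, lr=0.05, epochs=50):
    """Local gradient descent with a proximal term; mu=0 reduces to plain local training."""
    w = global_w.copy()
    for _ in range(epochs):
        grad_loss = 2.0 * X.T @ (X @ w - y) / len(y)
        grad_prox = mu * (w - global_w)   # gradient of (mu / 2) * ||w - global_w||^2
        w -= lr * (grad_loss + grad_prox)
    return w

rng = np.random.default_rng(1)
X = rng.normal(size=(100, 2))
y = X @ np.array([5.0, -3.0])   # a site whose local optimum is far from the global model
global_w = np.zeros(2)

drift_plain = np.linalg.norm(fedprox_local_train(global_w, X, y, mu=0.0) - global_w)
drift_prox = np.linalg.norm(fedprox_local_train(global_w, X, y, mu=1.0) - global_w)
print(drift_plain, drift_prox)  # the proximal term keeps the local model closer
```

A larger `mu` limits drift more strongly but also slows how much each site can learn from its own data, so it is typically tuned per deployment.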

Ensuring Reliability and Efficiency

Real-world FL deployments must be robust to practical systems issues, such as clients having different IT resources, network speeds, or dropping out of a training round. Asynchronous aggregation protocols allow the central server to update the global model as soon as it receives a certain number of updates, rather than waiting for every single client, which prevents stragglers from slowing down the entire process. Communication efficiency is also paramount, as model updates can be large. Techniques like gradient compression and quantization (reducing the precision of the numbers in the update) can reduce network load by over 90% with minimal impact on model accuracy.
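As a rough illustration of update quantization, the sketch below converts a 32-bit float update to 8-bit integers plus two scalars. This is a simple uniform scheme with hypothetical helper names, not a specific production codec. Note that 8-bit quantization on its own cuts payload size by 75%; larger reductions usually come from combining it with sparsification or lower bit widths.

```python
import numpy as np

def quantize_uint8(update):
    """Uniformly map float values onto 256 levels between the min and max."""
    lo, hi = float(update.min()), float(update.max())
    scale = (hi - lo) / 255.0 or 1.0   # fall back to 1.0 for a constant update
    q = np.round((update - lo) / scale).astype(np.uint8)
    return q, lo, scale

def dequantize(q, lo, scale):
    """Approximate reconstruction on the coordinator side."""
    return q.astype(np.float32) * scale + lo

rng = np.random.default_rng(0)
update = rng.normal(size=10_000).astype(np.float32)
q, lo, scale = quantize_uint8(update)
restored = dequantize(q, lo, scale)

saving = 1 - q.nbytes / update.nbytes         # 0.75 for float32 -> uint8
max_error = np.abs(restored - update).max()   # roughly bounded by scale / 2
print(saving, max_error)
```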

MLOps (Machine Learning Operations) for federated learning introduces unique complexities. It requires tools for secure cross-organizational orchestration, federated observability (monitoring model performance on data you cannot directly inspect), federated model debugging, and maintaining model lineage and version control across dozens of independent institutions. Platforms like Lifebit’s federated AI infrastructure are specifically designed to manage this complexity, providing a robust framework that allows healthcare and research teams to focus on clinical outcomes rather than distributed systems engineering.

The Trust Framework: Privacy, Security, and Governance

Trust is the absolute cornerstone of any successful federated learning in healthcare initiative. It’s not enough for the technology to work; institutions must trust the technology itself, the other participants in the network, and the governing processes that oversee the collaboration. This trust is built on a foundation of robust governance, stringent multi-layered security, and clear, pre-agreed frameworks for intellectual property (IP) and patient consent.

Governance Models

Effective governance provides the operational and ethical guardrails that transform a group of independent institutions into a trustworthy collaborative network. A central component is often a trusted aggregator or orchestrator, which may be a neutral third party responsible for managing the technical process and ensuring compliance. Key governance elements include:

- Auditability and Logging: Maintaining immutable, transparent logs of all operations (e.g., which models were trained, by whom, on what basis) is critical for accountability and regulatory review.

- Access Control: A rigorous process for vetting and credentialing participating institutions and their users, often managed by a Data Access Committee (DAC).

- Regulatory Compliance: FL’s design, which keeps data localized, can simplify compliance with regulations like HIPAA and GDPR. For example, because Protected Health Information (PHI) does not leave the institution, there is less need to de-identify data for external sharing (for instance under HIPAA’s Safe Harbor method). Similarly, keeping EU patient data within the EU reduces exposure to the cross-border data transfer restrictions reinforced by the Schrems II ruling.
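One common way to make an audit log tamper-evident is to chain entries by hash, so that changing any past entry invalidates everything after it. The sketch below uses only the Python standard library; the event fields and function names are hypothetical, and a production system would add signatures, timestamps, and durable storage.

```python
import hashlib
import json

GENESIS = "0" * 64

def entry_hash(event, prev_hash):
    """Hash of an event together with the hash of the previous entry."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()

def append_entry(log, event):
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": entry_hash(event, prev_hash)})

def verify(log):
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"round": 1, "site": "hospital_a", "action": "update_submitted"})
append_entry(log, {"round": 1, "site": "hospital_b", "action": "update_submitted"})
ok_before = verify(log)
log[0]["event"]["site"] = "hospital_x"   # simulated tampering
ok_after = verify(log)
print(ok_before, ok_after)  # True False
```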

Privacy and Security in Federated Learning in Healthcare

While FL’s data minimization approach is a massive leap forward for privacy, it is not a silver bullet. The model updates, while not raw data, can still leak information under certain conditions. Attacks such as membership inference (determining if a specific patient was in the training set), property inference (deducing aggregate properties of the data), and model inversion (reconstructing representative samples of the training data) have been demonstrated in research settings. A separate integrity threat is data poisoning, in which a malicious participant alters training data or updates to corrupt the global model.

To counter these threats, production-grade FL systems must implement a layered defense using Privacy-Enhancing Technologies (PETs):

- Differential Privacy (DP): Adds carefully calibrated mathematical noise to the model updates before they are shared. This bounds how much any single individual’s data can influence what is shared, making it statistically hard to determine whether that individual was included in the training process. The strength of the guarantee is controlled by a parameter called epsilon (the privacy budget): smaller values mean stronger privacy but more noise.

- Homomorphic Encryption (HE): Allows the central aggregator to perform calculations (like averaging the updates) directly on encrypted data without ever decrypting it. This ensures the aggregator learns nothing about the individual updates, only the final combined result.

- Secure Multi-Party Computation (SMPC): A cryptographic protocol that enables multiple parties to jointly compute a function (e.g., the average of their model weights) over their private inputs without revealing those inputs to each other.

- Blockchain: While not a direct data privacy tool, blockchain can be used to create a decentralized, tamper-proof ledger for auditing model training rounds and managing access control, enhancing transparency and trust in the network.
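To illustrate the differential privacy item above, here is a minimal sketch of the clip-then-add-noise step that is commonly applied to a model update before it is shared. The function name and parameter values are illustrative assumptions. Translating `noise_multiplier` into a concrete epsilon requires a privacy accountant, which is not shown here.

```python
import numpy as np

def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip the update to a maximum L2 norm, then add Gaussian noise scaled to that norm."""
    norm = np.linalg.norm(update)
    clipped = update * min(1.0, clip_norm / max(norm, 1e-12))
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise

rng = np.random.default_rng(0)
update = np.array([3.0, 4.0])   # L2 norm is 5.0

# With no noise, only the clipping is visible: the norm is scaled down to 1.0.
clipped_only = privatize_update(update, clip_norm=1.0, noise_multiplier=0.0, rng=rng)

# With noise, the shared update is a randomized version of the clipped one.
noisy = privatize_update(update, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(clipped_only, noisy)
```

Clipping limits how much any one contribution can move the model, and the noise then masks what remains; both steps cost some accuracy, which is the utility trade-off discussed in this section.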

| Technology | Privacy Guarantee | Utility Cost (Performance Impact) | Computational Overhead |
|---|---|---|---|
| Differential Privacy (DP) | Strong, quantifiable (epsilon/delta) | Moderate to High (accuracy drop) | Low to Moderate |
| Homomorphic Encryption (HE) | Cryptographically strong | Very High (significant slowdown) | Very High |
| Secure Multi-Party Computation (SMPC) | Cryptographically strong | High | High |
| Blockchain | Auditability, integrity (not direct data privacy) | Low to Moderate | Moderate |

Choosing the right combination of PETs requires a careful trade-off analysis, balancing the desired level of privacy against the acceptable impact on model accuracy (utility) and computational resources.
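The intuition behind secure aggregation can be shown with pairwise masking: each pair of sites agrees on a random mask that one adds and the other subtracts, so individual updates look random while the masks cancel in the sum. This is a toy sketch under strong simplifying assumptions. A single seeded generator stands in for pairwise key agreement, and real protocols work over finite fields and handle dropouts, none of which is shown.

```python
import numpy as np

def masked_updates(updates, seed=0):
    """For each pair (i, j), site i adds a shared random mask and site j subtracts it."""
    rng = np.random.default_rng(seed)
    masked = [u.astype(float).copy() for u in updates]
    for i in range(len(updates)):
        for j in range(i + 1, len(updates)):
            mask = rng.normal(size=updates[i].shape)
            masked[i] += mask
            masked[j] -= mask
    return masked

updates = [np.array([1.0, 2.0]), np.array([3.0, 4.0]), np.array([5.0, 6.0])]
masked = masked_updates(updates)

# The coordinator only ever sees the masked vectors, yet their sum is exact.
aggregate = np.sum(masked, axis=0)
print(aggregate)  # [ 9. 12.] up to floating-point rounding
```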

Managing IP, Consent, and Collaboration

The human and legal elements of collaboration are just as critical as the technical ones. A comprehensive consortium agreement is essential to define:

- Intellectual Property (IP): Who owns the final, trained global model? Is it the consortium as a whole, the orchestrating body, or is it released as an open-source artifact? What about novel discoveries made using the model? These terms must be defined upfront.

- Benefit Sharing Models: How are the benefits of the collaboration distributed? This can include co-authorship on publications, tiered access rights to the final model, or even financial models based on the quantity or quality of data contributed.

- Patient Consent: For large-scale FL, traditional per-project consent is often impractical. Models like broad consent (allowing data to be used for future, unspecified research under ethical oversight) or opt-out systems are often employed, always managed under the strict supervision of an Institutional Review Board (IRB) or ethics committee.

- Legal Agreements: Formal contracts like Data Use Agreements (DUAs) and a master consortium agreement form the legal foundation, defining the roles, responsibilities, liabilities, and rules of engagement for all participants.

Lifebit’s platform is designed to help navigate these complex governance and legal frameworks, providing the tools and expertise to establish secure, compliant, and trustworthy research collaborations.

From Research to Reality: Implementing and Validating FL

Bringing federated learning in healthcare from a research concept to a validated tool integrated into clinical practice is a complex journey of engineering, validation, and stakeholder buy-in. While academic research is booming, only an estimated 5.2% of FL studies have reached the stage of real-world clinical implementation. Closing this gap is the critical next step for FL to deliver on its transformative potential.

Infrastructure Requirements and Standards

A solid technical foundation at each participating site is non-negotiable. This includes adequate compute power (e.g., multi-core CPUs for EHR data, high-end GPUs like NVIDIA A100s for deep learning on medical images), secure local storage, and reliable, high-bandwidth network connectivity. Deployments can follow several models, each with trade-offs:

- On-Premise: All hardware and data reside within the hospital’s own data center. This offers maximum control and security but requires significant capital investment and in-house IT expertise.

- Cloud: Computation is performed on virtual machines in a public cloud. This is scalable and flexible but can raise concerns about data residency and security, requiring careful configuration of Virtual Private Clouds (VPCs).

- Hybrid: A popular model where a secure “edge” appliance is placed on-premise to perform the local training, while the orchestration and aggregation happen in the cloud. This balances security, control, and scalability.

Data standardization is a critical prerequisite for success. Data must be mapped to a Common Data Model (CDM) to ensure consistency. Key standards include DICOM for medical images, HL7 FHIR for exchanging clinical data, and the OMOP Common Data Model for observational health data. Implementing robust data curation and ETL (Extract, Transform, Load) pipelines to convert raw source data into this standardized format is often the most time-consuming part of an FL project. Our Lifebit CloudOS Genomic Data Federation and Lifebit Federated Biomedical Data Platform are built to handle these complex standards and streamline the data harmonization process.

Validation, Fairness, and Explainability

For a federated model to be trusted in a clinical setting, it must undergo validation that is even more rigorous than for traditional AI. This includes:

- Multi-site External Validation: Testing the final model’s performance on data from hospitals that did not participate in the training federation. This is the gold standard for assessing generalizability.

- Prospective Clinical Studies: The ultimate test is to run a study where the FL model’s predictions are used to inform clinical decisions, measuring its real-world impact on patient outcomes and workflows.

- Post-Deployment Monitoring: Continuously monitoring the model’s performance after deployment to detect “model drift,” where accuracy degrades over time as patient populations or clinical practices change.

Measuring and enforcing fairness is a profound ethical responsibility. A model can have high overall accuracy but perform poorly for an underrepresented demographic group present at only one or two sites. Federated fairness algorithms are an active area of research, aiming to measure and mitigate bias across different sites and demographic subgroups without centralizing the data. Explainable AI (XAI) is also crucial for clinical adoption. Techniques like SHAP and LIME can be adapted for the federated setting, allowing clinicians to generate local explanations for why a model made a specific prediction for their patient. This builds trust without exposing any data from other institutions, a topic explored in “A perspective on the future of digital health with FL.”
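One simple way to measure subgroup performance without centralizing data is for each site to share only aggregate counts per subgroup. The sketch below pools true-positive and false-negative counts to compute sensitivity per group. The labels, groups, and function names are synthetic and hypothetical, and very small counts could themselves be sensitive, so a real deployment might suppress or protect them.

```python
from collections import Counter

def local_counts(y_true, y_pred, groups):
    """Computed inside each site: per-subgroup true positives and false negatives."""
    counts = Counter()
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            counts[(g, "tp" if p == 1 else "fn")] += 1
    return counts

def pooled_sensitivity(site_counts):
    """Computed by the coordinator from counts only, never from patient rows."""
    total = Counter()
    for c in site_counts:
        total.update(c)
    groups = {g for g, _ in total}
    return {g: total[(g, "tp")] / (total[(g, "tp")] + total[(g, "fn")])
            for g in groups}

site_1 = local_counts([1, 1, 1, 0], [1, 1, 0, 0], ["A", "A", "B", "B"])
site_2 = local_counts([1, 1, 1, 1], [1, 0, 1, 1], ["A", "B", "B", "A"])
sensitivity = pooled_sensitivity([site_1, site_2])
print(sensitivity)  # group A: 1.0, group B: about 0.33
```

A gap like the one between groups A and B here is the kind of signal that overall accuracy would hide.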

Lessons from Real-World Deployments

Successful large-scale deployments offer a blueprint for the future. Radiology has been a pioneer, with projects like the NVIDIA-led EXAM (EMR CXR AI Model) study for COVID-19. This collaboration involved 20 institutions across North America, Europe, and Asia to develop a model that could predict the oxygen requirements of symptomatic COVID-19 patients from their chest X-rays combined with EMR data such as vital signs and laboratory values, achieving high accuracy and generalizability. In drug discovery, the MELLODDY project brought together 10 rival pharmaceutical companies to train a shared model on their proprietary chemical libraries, improving the ability to predict a compound’s properties without any company having to reveal its valuable chemical structures.

Despite these successes, key challenges remain. Incentive mechanisms are needed to encourage institutions to invest the time and resources to participate. These can be financial, reputational (e.g., co-branding a major study), or scientific (gaining access to the powerful final model). Integration into existing clinical workflows (e.g., pulling data from EHR/PACS systems and pushing results back in an actionable format) is a major technical and logistical hurdle. Finally, more research is needed on the health economic impacts and Return on Investment (ROI) to build a compelling business case for hospital executives to invest in federated learning infrastructure.

Frequently Asked Questions about Federated Learning in Healthcare

Healthcare organizations considering federated learning often have questions about its benefits, security, and practical applications. Here are answers to the most common concerns.

What is the main advantage of federated learning over traditional data sharing?

The main advantage is that institutions can collaborate on AI model development without moving or sharing sensitive patient data. Federated learning brings the model to the data, breaking down institutional silos. This enables access to larger, more diverse datasets for building powerful AI models while maintaining patient privacy and simplifying regulatory compliance with laws like HIPAA and GDPR.

Is federated learning completely secure?

Federated learning significantly reduces exposure compared with centralizing data, but it is not inherently immune to all risks. The model updates that are shared can potentially leak information about the training data. For this reason, robust FL implementations layer on Privacy-Enhancing Technologies (PETs). Differential privacy provides a mathematically quantifiable bound on what can be learned about any individual, and secure aggregation prevents the coordinator from seeing individual updates. Together they make it far harder to reverse-engineer patient information from the model updates.

Can federated learning work with different types of medical data?

Yes, FL is highly flexible and can be applied to nearly any type of medical data. It is most mature for medical imaging (42% of studies) and electronic health records (24% of studies). However, it is also showing tremendous promise for highly sensitive data types like genomics and data from wearable devices (IoMT). The technology’s adaptability makes it a versatile solution for a wide range of healthcare AI collaborations.

Conclusion

The healthcare landscape is on the brink of a major change. Federated learning in healthcare is the key to unlocking the vast potential of medical data that has long been trapped in institutional silos. By enabling collaboration without compromising privacy, federated learning allows institutions to build more powerful, accurate, and less biased AI models.

This approach accelerates medical research, improves diagnostic accuracy, and drives the development of personalized medicine. The COVID-19 pandemic demonstrated the power of federated networks to deliver rapid insights that would have been impossible with traditional data-sharing methods. While research has grown exponentially, the gap between studies and real-world deployment presents a significant opportunity for forward-thinking organizations.

The future of healthcare AI is undeniably federated, fair, and secure. It’s a future where insights from global data can benefit any patient, anywhere.

At Lifebit, we are driving this change. Our federated AI platform provides the infrastructure, security, and governance to make this vision a reality today. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) enable secure, real-time collaboration across global biomedical data. Whether you are in biopharma, government, or a healthcare system, federated learning offers a proven path to innovation. The question is not if this technology will transform healthcare, but whether your organization will lead the way.