The Evolution of Data: Understanding Next-Gen Platforms for Tomorrow’s Insights

Next Generation Data Platform: Unleash Power in 2025

Stop Drowning in Data: Why Your Platform Is Failing (And What to Do About It)

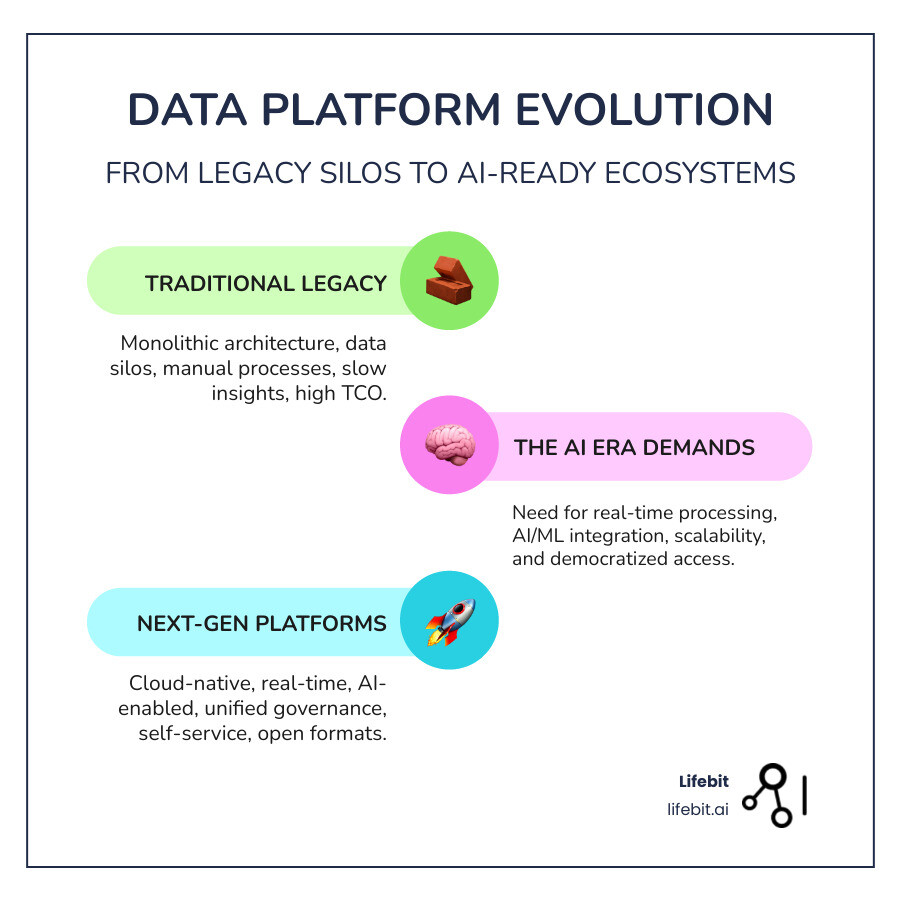

A next generation data platform is a cloud-native, AI-enabled ecosystem that unifies data engineering, analytics, and machine learning. Unlike traditional data warehouses or lakes that create data swamps and silos, these modern platforms enable real-time insights and autonomous data products across distributed environments.

The data landscape is drowning in complexity. Traditional platforms create more problems than they solve—data sits trapped, insights take weeks, and AI initiatives stall because teams spend 80% of their time on plumbing instead of value creation.

The numbers tell a stark story. Companies using next-generation platforms see 30-84% cost reductions, process data 4x faster, and are three times more likely to contribute at least 20% to EBIT through their data initiatives. As 83% of companies plan to adopt modern data mesh architectures and AI becomes table stakes, the question isn’t whether to modernize, but whether you’ll lead or be left behind.

As Maria Chatzou Dunford, CEO and Co-founder of Lifebit, I’ve spent over 15 years building next generation data platforms for secure, federated analysis across biomedical datasets. I’ve seen how the right architecture can transform global healthcare outcomes.

Your Data Platform is Obsolete: Here’s What’s Next

Running a modern business on a traditional data platform is like using a 1980s filing cabinet in the age of cloud computing. While the world has moved on, 84% of companies still rely on data warehouses designed for a different era, creating bottlenecks that slow down innovation.

A next generation data platform is a living ecosystem that treats data as an active asset. At Lifebit, we’ve seen how legacy platforms hinder life-saving research. That’s why we built our platform on the principle that data should work for you, not against you.

Traditional warehouses and lakes are monolithic architectures that create centralized bottlenecks. A next-gen platform breaks free with a cloud-native architecture that scales automatically, so you only pay for what you use. Real-time processing delivers insights in minutes, not days, while distributed computing with tools like Apache Spark tackles massive datasets with ease. The platform doesn’t just store data—it learns from it, finds patterns, and even fixes quality issues automatically through integrated AI and machine learning. Open formats like Apache Iceberg and Delta Lake prevent vendor lock-in, ensuring you can always use the best tools for the job.

From Traditional Pain to Modern Gain

Legacy systems hurt your bottom line. Data silos obscure the big picture, latency delays critical decisions, and high total cost of ownership drains budgets with expensive hardware and licensing. Their inflexibility kills innovation, while a growing skills gap for outdated systems creates risk. Next-gen platforms solve these issues with unified access, real-time insights, cost-efficient cloud models, and unparalleled agility.

Core Principles of a Modern Data Architecture

Successful modern platforms are built on several key principles:

- Decentralization: Domain-specific teams own their data products, eliminating central bottlenecks.

- Self-Service Analytics: Intuitive tools democratize data access for non-technical users.

- Automation: Tedious manual work like data ingestion and quality checks is automated, reducing costs and errors.

- Interoperability: Components communicate seamlessly, enabling powerful federated data analysis without moving sensitive data.

- Security by Design: Protection and compliance are integrated from the ground up, as exemplified by our Trusted Research Environment for sensitive biomedical research.

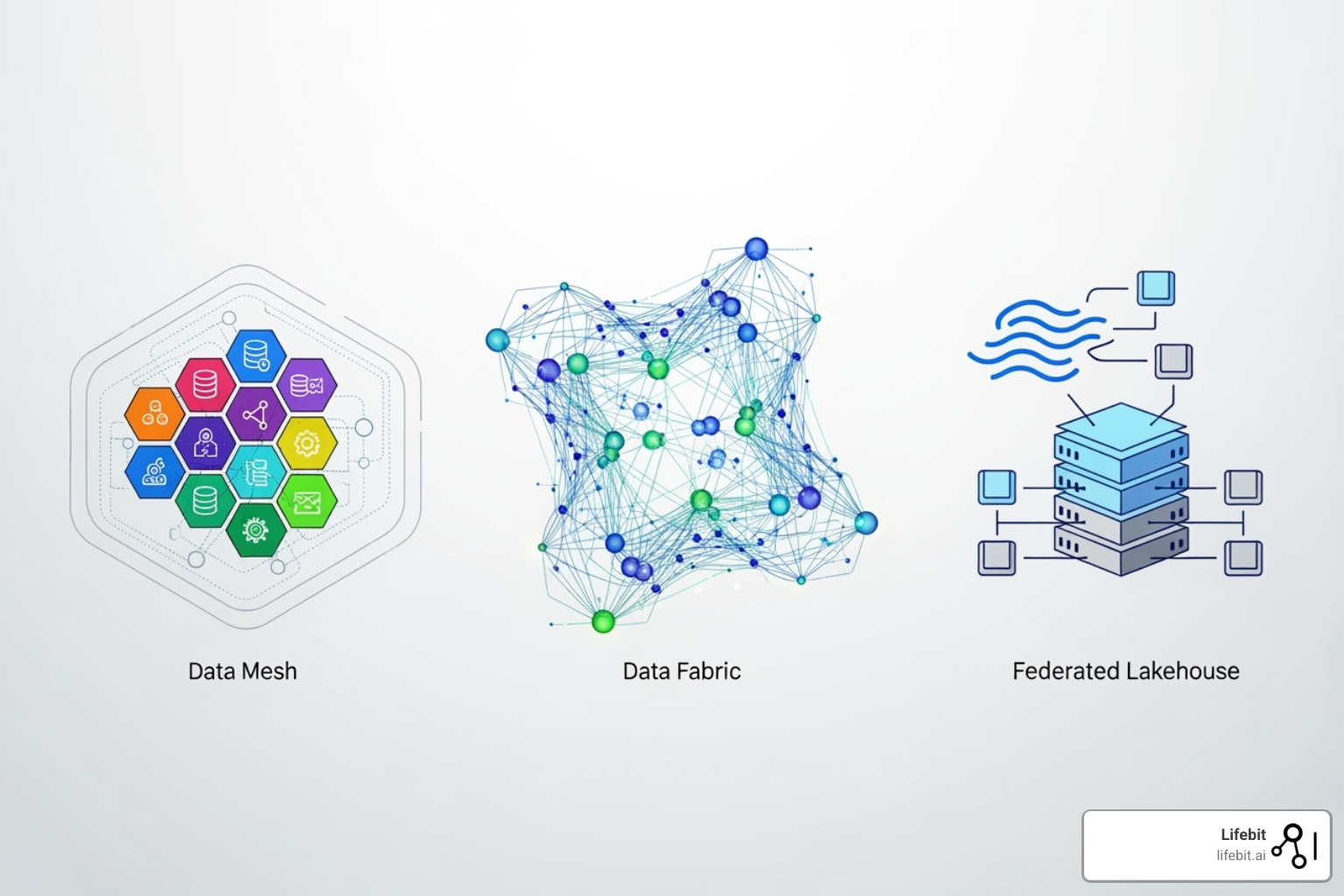

Data Mesh, Fabric, or Lakehouse: Which Architecture Unlocks Your Data’s Value?

Modern data architecture is dominated by three models: Data Mesh, Data Fabric, and Lakehouse. They aren’t just buzzwords; they are proven approaches for solving the problems of traditional, monolithic systems. Some champion a decentralized, sociotechnical architecture (Mesh), others prefer an intelligent, technology-driven abstraction layer (Fabric), and many are adopting a unified, hybrid storage model (Lakehouse). Understanding which fits your organization—or how to combine them—is key to unlocking your data’s full potential.

The Data Mesh: Decentralized Ownership and Domain-Driven Design

Data Mesh is a paradigm shift that decentralizes data ownership, moving it from a central data team to the business domains that are the true experts. This approach is built on four core principles:

- Domain-Oriented Ownership: Instead of a central team becoming a bottleneck, individual business units (e.g., marketing, finance, supply chain) own the data they produce. They are responsible for its quality, accessibility, and lifecycle.

- Data as a Product: Each domain treats its key datasets as products. This means the data is not just a table in a database; it is discoverable, addressable, trustworthy, and secure, complete with service-level objectives (SLOs), clear documentation, and a dedicated owner. For example, the marketing team might offer a “Customer Engagement Score” data product that other teams can consume via a clean API.

- Self-Serve Data Platform: A central platform team enables domain teams by providing the underlying infrastructure and tools as a service. This platform allows domains to easily build, deploy, and manage their own data products without needing to be infrastructure experts.

- Federated Computational Governance: To avoid chaos, a federated governance model establishes global rules for security, interoperability, and quality. However, the implementation and enforcement of these rules are automated within the self-serve platform, balancing central control with domain autonomy. Learn more about data mesh concepts.
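To make the "data as a product" idea more concrete, here is a minimal sketch of a machine-readable product descriptor with a freshness SLO check. The `DataProduct` class, its fields, the endpoint path, and the 24-hour SLO are all hypothetical and chosen only for illustration; a real mesh would define its own descriptor standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor for a domain-owned data product."""
    name: str
    domain: str                # owning business domain
    owner: str                 # accountable contact
    endpoint: str              # illustrative address consumers read from
    freshness_slo: timedelta   # maximum acceptable age of the data
    description: str = ""

    def meets_freshness_slo(self, last_updated: datetime, now: datetime) -> bool:
        """Return True if the latest refresh is within the freshness SLO."""
        return now - last_updated <= self.freshness_slo


engagement = DataProduct(
    name="customer_engagement_score",
    domain="marketing",
    owner="marketing-data@example.com",
    endpoint="/data-products/marketing/customer-engagement-score",
    freshness_slo=timedelta(hours=24),
    description="Engagement score per customer.",
)

now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
fresh = engagement.meets_freshness_slo(datetime(2025, 1, 2, 6, 0, tzinfo=timezone.utc), now)
stale = engagement.meets_freshness_slo(datetime(2024, 12, 31, 6, 0, tzinfo=timezone.utc), now)
print(fresh, stale)
```

A descriptor like this is what lets a self-serve platform check SLOs automatically instead of relying on each domain team to police itself.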

The Data Fabric: Weaving a Unified Data Layer

Data Fabric takes a technology-centric approach to create an intelligent, virtual layer that connects all of an organization’s data, regardless of where it is stored. It doesn’t require moving data; instead, it weaves it together through:

- Active Metadata and Knowledge Graphs: A data fabric goes beyond a static data catalog. It continuously scans data sources to build a dynamic knowledge graph of all data assets, understanding their lineage, relationships, and business context. This active metadata is the fabric’s brain.

- AI-Driven Automation: The real power of a fabric comes from its use of AI to automate complex data management tasks. It can recommend datasets to users, suggest optimal data integration pathways, and even auto-generate data pipelines, dramatically reducing manual effort and accelerating time-to-insight.

This makes disparate data sources—from legacy mainframes to modern cloud applications—feel like a single, logical, and queryable system.

The Lakehouse: Unifying Lakes and Warehouses

The Lakehouse architecture directly addresses the costly and inefficient two-platform problem where companies use data lakes for data science and ML, and separate data warehouses for BI and reporting. It brings the reliability, performance, and ACID transactions of a warehouse directly to low-cost data lake storage (like Amazon S3 or Azure Data Lake Storage). This is achieved using open table formats like Apache Hudi, Apache Iceberg, and Delta Lake. The result is a single, unified platform where you can store all your structured and unstructured data and run everything from SQL analytics to ML model training on the same copy of the data. At Lifebit, our federated lakehouse allows life sciences organizations to analyze genomic, clinical, and real-world data in a single, secure environment. Explore the Benefits of a Federated Data Lakehouse in Life Sciences.

Which Model Is Right for You?

- Choose Data Mesh if you are a large, complex organization with distinct business domains and you want to foster innovation and agility at the edges. It requires a significant cultural and organizational shift.

- Choose Data Fabric if your primary challenge is a complex, heterogeneous data landscape with many legacy systems. It provides a unified view without requiring a massive data migration or organizational restructure.

- Start with a Lakehouse as the foundational architectural pattern. It is the modern replacement for the data lake and warehouse and can serve as the technological core for either a Data Mesh or Data Fabric implementation.

The Tech Stack That Powers Real-Time AI and Slashes Costs

A next generation data platform integrates a curated set of best-in-class components to deliver speed, scalability, and reliability. The foundation is built on cloud services from providers like AWS, Azure, and Google Cloud, which offer the elastic infrastructure needed to provision complex data environments in days, not months.

To handle today’s massive data volumes, distributed computing frameworks like Apache Spark are essential. For real-time analysis, streaming data technologies such as Apache Kafka and Apache Flink process information as it arrives. The game-changer has been the rise of open table formats—Apache Hudi, Apache Iceberg, and Delta Lake—which bring database-like reliability and performance to data lakes, preventing them from becoming unusable data swamps.

These platforms are built with AI/ML frameworks like TensorFlow and PyTorch as first-class citizens. Containerization with Kubernetes provides a consistent and scalable environment for running data workloads from development to production, while orchestration tools like Apache Airflow manage complex data pipelines, ensuring every step runs reliably and in the correct order.
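The "correct order" guarantee that orchestrators provide comes down to dependency ordering over a directed acyclic graph. The sketch below illustrates that idea with Python's standard `graphlib` module; it is not Airflow's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "publish_dashboard": {"join_orders_customers"},
}


def execution_order(dag):
    """Return one valid run order; graphlib raises CycleError on circular dependencies."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

Several orders can be valid for the same graph (the two ingest tasks are independent), which is also what allows an orchestrator to run them in parallel.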

Core Components of a Next Generation Data Platform

Every effective platform includes six core components that work in concert:

- Data Ingestion: This layer reliably consumes data from any source. It handles everything from batch ingestion of files and database dumps using tools like Fivetran and Airbyte, to real-time Change Data Capture (CDC) from transactional databases with Debezium, and high-throughput event streams from IoT devices and applications via Apache Kafka.

- Data Processing: Raw data is rarely useful. This component cleans, enriches, and transforms it into valuable information using powerful compute engines. Apache Spark excels at large-scale batch and micro-batch processing, while Apache Flink is designed for true, low-latency stream processing with sophisticated event-time semantics.

- Data Storage: Modern platforms use a multi-tiered storage strategy. Raw and transformed data is stored cost-effectively in cloud object storage (like AWS S3 or Google Cloud Storage), forming the core of the data lakehouse. For specific high-performance or low-latency use cases, specialized analytical databases or key-value stores may also be used.

- Query Engines: These engines translate business questions into data answers. This includes federated query engines like Presto and Trino, which can query data in place across multiple sources, and integrated data cloud platforms like Snowflake or Databricks SQL, which provide a tightly integrated storage and compute experience optimized for performance.

- Analytics and BI: This is the user-facing layer that delivers insights. It serves a wide spectrum of users, from executives viewing high-level dashboards in tools like Tableau or Power BI, to data scientists exploring data and building models in collaborative notebook environments like Jupyter or Hex.

- Data Governance Tools: Acting as the platform’s central nervous system, these tools manage metadata, data lineage, access controls, and quality. Modern governance platforms like Collibra, Alation, or open-source options like OpenMetadata provide an active, intelligent data catalog that makes data discoverable, understandable, and trustworthy. Explore an example streaming data platform on GitHub.
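The event-time semantics mentioned under Data Processing can be illustrated with a tumbling-window count that groups events by the timestamp they carry rather than by arrival order. This is a plain-Python sketch, not Flink's API; production stream processors add watermarks, managed state, and late-data handling that are omitted here, and the sensor names are made up.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) using each event's own timestamp."""
    counts = defaultdict(int)
    for event_time, key in events:
        # Align the event to the start of its fixed-size window.
        window_start = event_time - (event_time % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


# (event_time_in_seconds, key) pairs, deliberately listed out of order.
events = [(5, "sensor-a"), (62, "sensor-a"), (58, "sensor-b"), (12, "sensor-a"), (61, "sensor-b")]
counts = tumbling_window_counts(events, window_seconds=60)
print(counts)
```

Because the window is derived from the event's timestamp, the out-of-order arrival of the `12`-second event does not change which window it lands in.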

The Role of Automation and AI

Next-gen platforms are self-improving systems where automation and AI transform data management from a manual chore into an intelligent, proactive process.

DataOps and CI/CD pipelines apply the discipline of software engineering to the data lifecycle. This means versioning data transformation code in Git, automatically testing data quality and pipeline logic before deployment, and continuously monitoring performance in production. This dramatically reduces errors and increases the reliability of data products. Automated quality checks and anomaly detection use AI to continuously monitor data streams. For example, an AI model can monitor e-commerce transactions and automatically flag a sudden drop from a specific region, alerting engineers to a potential outage before it significantly impacts revenue. In our AI for Genomics work, these automated processes identify critical patterns in vast biological datasets that are impossible for humans to spot. The result is a self-optimizing platform that frees your team to focus on strategic initiatives.
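As a rough illustration of the regional-drop example, the sketch below flags a value that falls far below its recent history. It uses a simple z-score threshold as a stand-in for the AI model described above; the function name, the threshold of 3.0, and the sample order counts are assumptions for illustration only.

```python
from statistics import mean, stdev


def is_sudden_drop(history, latest, z_threshold=3.0):
    """Flag `latest` if it is more than z_threshold sample standard deviations below the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest < mu
    return (mu - latest) / sigma > z_threshold


# Hypothetical hourly order counts for one region.
hourly_orders = [980, 1010, 995, 1005, 990, 1020, 1000]
outage_flag = is_sudden_drop(hourly_orders, 400)
normal_flag = is_sudden_drop(hourly_orders, 985)
print(outage_flag, normal_flag)
```

A production detector would typically account for seasonality and trend, but the alerting logic follows the same shape: compare the newest observation against a learned baseline.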

Your Roadmap to a Next-Gen Platform: Benefits, Pitfalls, and Strategy

Migrating to a next generation data platform is a strategic undertaking that promises transformative benefits but also presents significant challenges. Organizations that navigate this journey successfully achieve remarkable results, creating a durable competitive advantage.

Key Benefits of a Next Generation Data Platform

The impact of a modern data platform is clear and measurable:

- Massive Cost Savings: Organizations report 30-84% cost reductions by moving from expensive, fixed-capacity legacy systems to elastic cloud models and consolidating redundant data pipelines.

- Radical Speed Improvements: With 4x faster data processing, teams can answer critical business questions in hours or minutes, not weeks, accelerating the pace of decision-making across the enterprise.

- Smarter Decision-Making: High-performing data organizations are three times more likely to contribute at least 20% to EBIT, turning the data function from a cost center into a primary driver of value.

- Improved Customer Experience: Real-time insights enable deep personalization, proactive customer service, and the creation of data-driven products that delight users.

- Durable Competitive Advantage: The agility to rapidly experiment, innovate, and deploy AI-powered products and services creates a significant and lasting edge over slower-moving competitors.

Overcoming Common Implementation Challenges

Implementation requires careful planning and a strategic mindset to avoid common pitfalls:

- Data Migration: Instead of a high-risk “big bang” migration, adopt an incremental strategy. Start by identifying a single, high-value business domain, such as marketing analytics. Migrate its data and workloads first. This creates a “lighthouse” project that demonstrates value, allows the team to develop reusable migration patterns, and builds organizational momentum for subsequent phases.

- Managing Complexity: A successful pilot project must have a clearly defined scope and measurable success criteria tied to a business outcome. For example, aim to reduce the time-to-insight for a critical fraud detection report from 24 hours to under 5 minutes. This tangible goal focuses the technical team and provides a clear win to showcase to executive sponsors, justifying further investment.

- Bridging Skill Gaps: Proactively address skill gaps by investing in a multi-pronged talent strategy. This includes upskilling your current team with certified training for cloud platforms (AWS, Azure, GCP) and distributed computing (Spark). Foster a culture of continuous learning by establishing internal “guilds” or communities of practice. Simultaneously, hire key external talent with deep expertise to act as mentors and accelerate the team’s learning curve.

- Ensuring Data Quality: Embed data quality as a non-negotiable part of your platform from day one. Implement automated testing directly within your data pipelines using frameworks like Great Expectations or dbt tests. Formalize “data contracts” between data producers and consumers. These contracts programmatically define the expected schema, freshness, and validation rules for a dataset, ensuring that breaking changes are caught before they pollute downstream analytics.

- Cost Management: Cloud costs can spiral without discipline. Implement FinOps practices by meticulously tagging all resources by project, team, and environment. Configure automated budget alerts to prevent surprises. Conduct regular cost optimization reviews to identify idle resources, right-size compute instances, and leverage cost-saving options like AWS Savings Plans or Spot Instances. This transforms cost management from a reactive chore into a proactive, continuous process.

- Change Management: Technology is only half the battle; user adoption is critical. Develop a comprehensive change management plan that communicates the “why” behind the migration and highlights the specific benefits for each user group. Identify and empower “data champions” within business units to advocate for the new platform, provide peer support, and channel feedback to the platform team. Regular demos and hands-on workshops are essential to drive widespread adoption.
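The data contracts described under Ensuring Data Quality can be sketched as a small validator that checks schema, freshness, and value rules before a batch is published. This is a hand-rolled illustration, not the Great Expectations or dbt API; the `CONTRACT` structure, column names, and one-hour freshness limit are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract: expected columns and types, maximum data age, per-column rules.
CONTRACT = {
    "schema": {"order_id": int, "region": str, "amount": float},
    "max_age": timedelta(hours=1),
    "rules": {"amount": lambda v: v >= 0},
}


def validate_batch(rows, produced_at, now, contract=CONTRACT):
    """Return a list of contract violations; an empty list means the batch passes."""
    violations = []
    if now - produced_at > contract["max_age"]:
        violations.append("freshness: batch is older than max_age")
    for i, row in enumerate(rows):
        for column, expected_type in contract["schema"].items():
            if column not in row:
                violations.append(f"row {i}: missing column '{column}'")
            elif not isinstance(row[column], expected_type):
                violations.append(f"row {i}: '{column}' is not {expected_type.__name__}")
            elif column in contract["rules"] and not contract["rules"][column](row[column]):
                violations.append(f"row {i}: '{column}' failed validation rule")
    return violations


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
good = [{"order_id": 1, "region": "EU", "amount": 19.99}]
bad = [{"order_id": "2", "region": "US", "amount": -5.0}, {"order_id": 3, "amount": 4.0}]

good_violations = validate_batch(good, now - timedelta(minutes=10), now)
bad_violations = validate_batch(bad, now - timedelta(hours=3), now)
print(good_violations)
print(bad_violations)
```

Running a check like this in CI or at the head of a pipeline is what lets a breaking change fail fast at the producer instead of surfacing later in a dashboard.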

Building Your Next Generation Data Platform Strategy

A successful strategy rests on three pillars: governance, security, and self-service.

Data governance must be woven into the platform’s DNA, not bolted on as an afterthought. This means embedding automated metadata capture and lineage tracking in orchestration tools, and implementing access policies as code for consistent and auditable enforcement. This ensures data is not only high-quality but also discoverable, understandable, and compliant.

Data security is paramount, requiring a defense-in-depth approach. This includes robust role-based access controls (RBAC) with row- and column-level security, end-to-end encryption (in transit and at rest), and continuous threat monitoring to comply with regulations like GDPR and HIPAA. For highly sensitive data, a Trusted Research Environment (TRE) provides a secure, isolated space for analysis.

Finally, self-service analytics is the ultimate goal, empowering users to find answers independently through intuitive interfaces, data catalogs, and query tools, which accelerates innovation across the organization.
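The "access policies as code" idea, combined with row- and column-level security, can be sketched as a policy table that is applied to every read. The roles, column names, and `apply_policy` helper below are hypothetical; real platforms usually enforce this inside the query engine rather than in application code.

```python
# Hypothetical policies: which columns each role may see and which rows it may read.
POLICIES = {
    "analyst": {
        "columns": {"patient_id", "region", "age_band"},
        "row_filter": lambda row: row["region"] == "EU",
    },
    "auditor": {
        "columns": {"patient_id", "region"},
        "row_filter": lambda row: True,
    },
}


def apply_policy(role, rows, policies=POLICIES):
    """Return only the rows and columns the role may see; unknown roles get nothing."""
    policy = policies.get(role)
    if policy is None:
        return []  # deny by default
    return [
        {col: val for col, val in row.items() if col in policy["columns"]}
        for row in rows
        if policy["row_filter"](row)
    ]


records = [
    {"patient_id": "p1", "region": "EU", "age_band": "40-49", "diagnosis": "I10"},
    {"patient_id": "p2", "region": "US", "age_band": "30-39", "diagnosis": "E11"},
]

analyst_view = apply_policy("analyst", records)
auditor_view = apply_policy("auditor", records)
unknown_view = apply_policy("intern", records)
print(analyst_view)
```

Because the policy lives in version-controlled code, every change to who can see what is reviewable and auditable like any other commit.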

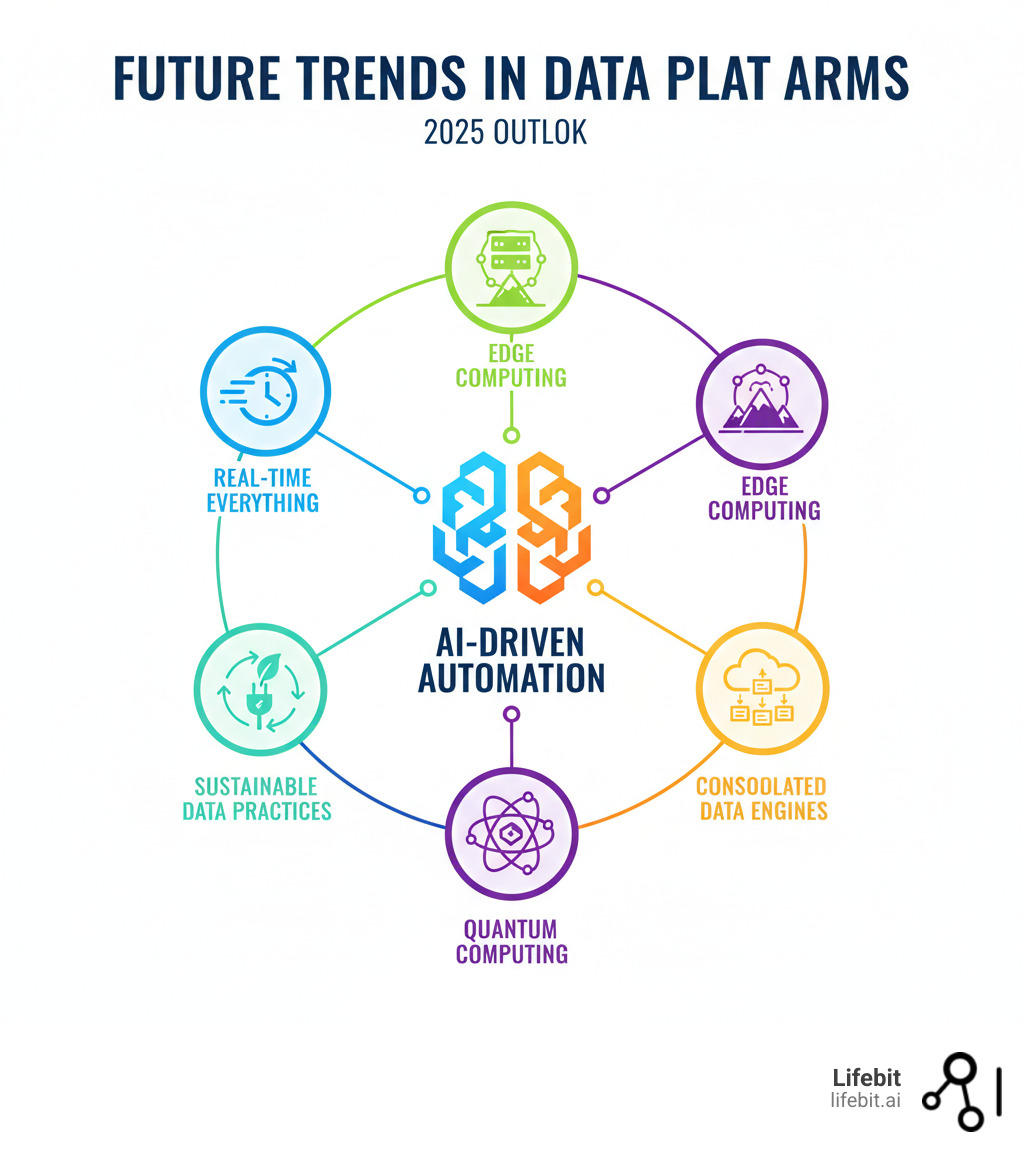

The Future is Autonomous: AI Agents, GenAI, and What’s Next for Data

The next era of data platforms is unfolding now, defined by intelligent, autonomous systems that think and act on their own.

The most exciting development is the rise of AI agents and autonomous data products that monitor themselves, fix quality issues, and evolve their own governance. This is enabled by consolidated engines that handle streaming, batch, and analytics in a single, unified system, eliminating complexity. The underlying tech stack is also getting faster and more efficient, with languages like Rust and C++ gaining traction.

As outlined in Data Platforms in 2030, we’re moving toward platforms that are less about managing data and more about activating it intelligently.

Measuring Success and ROI

To justify the investment, you must measure impact. Key metrics include:

- Time-to-Insight: How quickly can your teams answer critical business questions?

- Cost per Query: Are you optimizing cloud usage and improving query efficiency?

- Data Product Adoption: Are teams actually using the data products you build?

- Contribution to EBIT: Is your data platform a cost center or a revenue driver? High performers are three times more likely to contribute at least 20% to EBIT.
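Two of these metrics are simple to compute once the raw inputs are logged. The sketch below assumes you can export a total compute bill, a query count, and timestamps for when a question was asked and answered; the function names and sample figures are illustrative, not benchmarks.

```python
from datetime import datetime
from statistics import median


def cost_per_query(total_compute_cost, query_count):
    """Average compute spend per query; None when no queries ran."""
    if query_count == 0:
        return None
    return total_compute_cost / query_count


def median_time_to_insight_hours(requests):
    """Median hours between a question being asked and answered."""
    return median((answered - asked).total_seconds() / 3600 for asked, answered in requests)


# Hypothetical (asked_at, answered_at) pairs.
requests = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 11, 0)),
    (datetime(2025, 1, 6, 10, 0), datetime(2025, 1, 7, 10, 0)),
    (datetime(2025, 1, 7, 8, 0), datetime(2025, 1, 7, 12, 0)),
]

unit_cost = cost_per_query(1200.0, 48000)
tti_hours = median_time_to_insight_hours(requests)
print(unit_cost, tti_hours)
```

The median is used rather than the mean so that one unusually slow request does not dominate the headline number.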

Emerging Trends to Watch

Several key trends are shaping the future:

- Generative AI Integration: Organizations are building custom AI models on proprietary data using vector databases and Retrieval-Augmented Generation.

- Serverless Compute: Further abstraction of infrastructure will allow teams to focus purely on business logic.

- Edge Computing: Processing data at the source is becoming crucial for low-latency applications like IoT and real-time patient monitoring.

- Sustainable Computing: Energy efficiency and environmental responsibility are becoming essential design considerations for data platforms.
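The retrieval step behind Retrieval-Augmented Generation can be sketched in a few lines: rank stored vectors by similarity to the query vector, then pass the best matches to the model as context. The three-dimensional "embeddings" and document names below are toy values; a real system would obtain vectors from an embedding model and store them in a vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vector, index, top_k=2):
    """Return the top_k document ids ranked by cosine similarity to the query."""
    ranked = sorted(
        index,
        key=lambda doc_id: cosine_similarity(query_vector, index[doc_id]),
        reverse=True,
    )
    return ranked[:top_k]


# Toy vectors standing in for embeddings of proprietary documents.
index = {
    "pricing_policy": [0.9, 0.1, 0.0],
    "refund_policy": [0.8, 0.3, 0.1],
    "onboarding_guide": [0.0, 0.2, 0.9],
}

query = [1.0, 0.2, 0.0]
context_ids = retrieve(query, index)
prompt = "Answer using only these documents: " + ", ".join(context_ids)
print(prompt)
```

Grounding the prompt in retrieved documents is what lets a general-purpose model answer from proprietary data without being retrained on it.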

Frequently Asked Questions about Next-Gen Data Platforms

Navigating the shift to a modern data platform brings up common questions. Here are clear, concise answers to the most frequent ones.

What is the main difference between a data lakehouse and a data warehouse?

A traditional data warehouse stores structured, processed data for BI and reporting. It’s reliable but rigid and expensive. A data lakehouse combines the low-cost, flexible storage of a data lake with the reliability of a warehouse. It handles all data types (structured and unstructured) and supports both BI and AI/ML workloads on a single, unified copy of data, eliminating silos and duplication.

How does a data mesh differ from a traditional centralized data platform?

A traditional platform centralizes data ownership, creating a bottleneck where one team serves the entire organization. A data mesh is a decentralized approach where domain-specific teams (e.g., sales, marketing) own and manage their data as a product. This sociotechnical shift eliminates bottlenecks, improves data quality, and accelerates innovation by empowering the experts who know the data best.

Can I build a next-generation data platform on-premise?

While technically possible, you would miss the core benefits. Next generation data platforms are inherently cloud-native to leverage the elasticity, scalability, and managed services that cloud providers offer. An on-premise build would struggle with high costs, slow deployment, and the operational burden of replicating what the cloud provides effortlessly. The ability to dynamically scale compute and storage is fundamental to a modern platform’s value, making the cloud the only practical choice.

Conclusion: Activating Your Data for the AI Era

The shift to a next generation data platform is a fundamental change from passive data management to data activation. The days of data locked in silos are over; the era of real-time, AI-driven intelligence is here.

Organizations making this leap see cost reductions of 30-84%, process data 4x faster, and are three times more likely to contribute at least 20% to EBIT through their data initiatives. Architectural models like Data Mesh, Data Fabric, and the Lakehouse are strategic choices to break down walls and turn data into a living asset.

Automation and AI are the engine, changing data teams from janitors into innovators. A successful strategy weaves together governance, security, and self-service analytics to build a platform that is not only powerful but also trusted and usable.

At Lifebit, we’ve proven this model in the most complex healthcare environments. Our next generation data platform, including our Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), enables secure, real-time access to global biomedical data. We deliver the AI-driven insights that are accelerating drug discovery and improving patient care.

The future is federated and intelligent. Your data is waiting to be activated. The technology is ready, the benefits are proven, and the time to lead is now.

Discover Lifebit’s federated AI platform and see how we can help you unlock tomorrow’s insights, today.