The ABCs of Cloud Data Management: A Comprehensive Overview

Why Cloud Data Management is Changing Healthcare and Life Sciences

Cloud data management leverages cloud-native tools to store, process, and analyze data across distributed environments, replacing traditional on-premises infrastructure. This approach creates scalable, secure, and cost-effective data ecosystems defined by:

- Centralized storage across hybrid and multi-cloud environments

- Elastic scalability that grows with data needs

- Pay-as-you-go pricing that aligns costs with usage

- Built-in security and compliance features

- Real-time global accessibility

- Automated maintenance by cloud providers

With 85% of organizations expected to be ‘cloud-first’ by 2025, this shift unlocks the strategic value of data. For healthcare and life sciences, this is critical. You’re handling complex datasets, from genomics to real-world evidence, where traditional data silos fail to keep pace with modern research and population health initiatives.

The challenge is amplified by strict regulatory, sovereignty, and security requirements. You need solutions that enable federated analytics, keeping sensitive data in place while powering AI-driven insights and collaborative research.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. I’ve spent over 15 years building cloud data management solutions for genomics and biomedical research. My experience shows that the right approach can accelerate discovery while upholding the highest standards of data privacy and compliance.

What is Cloud Data Management and How Does It Differ from On-Premises?

Cloud data management is the practice of storing, organizing, and analyzing data using internet-based services instead of your own physical servers. It provides secure, accessible, and analysis-ready information on demand.

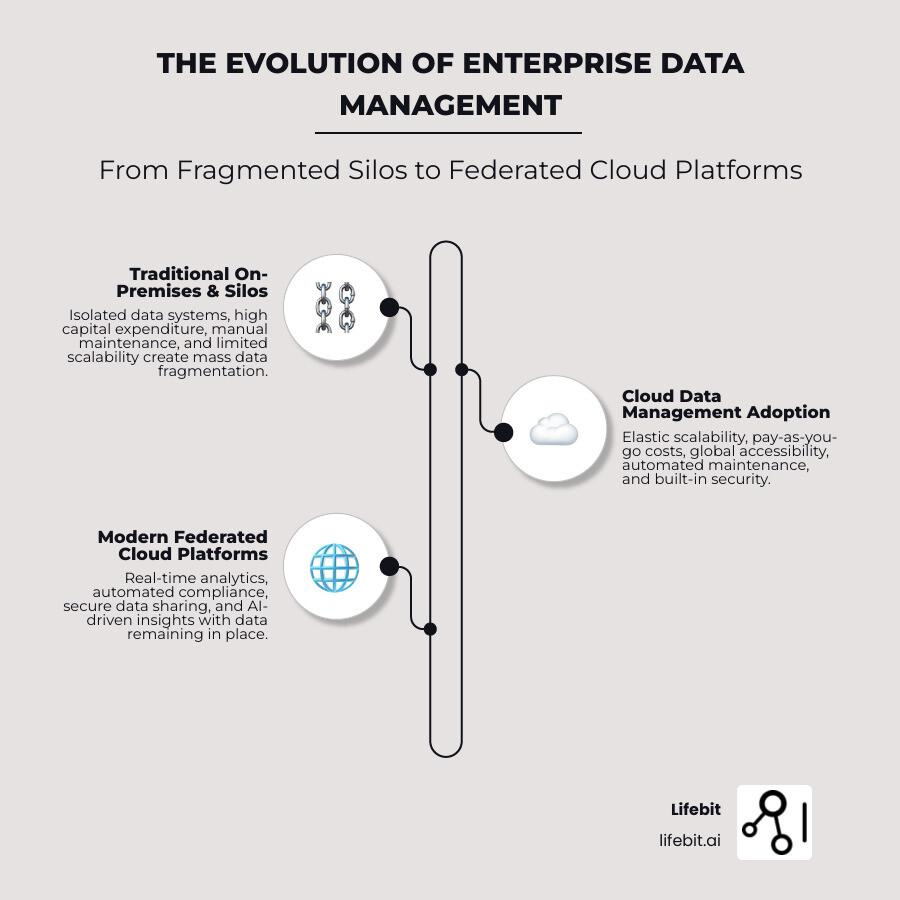

For decades, organizations relied on on-premises data management, which involved buying and maintaining expensive servers in data centers. This approach created data silos, where information was trapped in fragmented systems. For example, a patient’s medical records, genetic data, and clinical trial results might live on separate servers, hiding valuable insights.

Here’s how the two approaches stack up:

| Feature | Traditional On-Premises Data Management | Cloud Data Management |
|---|---|---|
| Scalability | Limited by physical hardware; upgrades are slow and costly | Elastic and virtually unlimited; scales up or down instantly |
| Cost Model | High upfront capital expenditure (CapEx) for hardware and software | Pay-as-you-go operational expenditure (OpEx) based on usage |
| Maintenance | Manual patching, upgrades, and hardware upkeep by internal IT teams | Automated by cloud providers; reduces IT overhead |
| Accessibility | Restricted to local network or VPN; limited remote access | Accessible from anywhere with an internet connection |
| Security | Dependent on in-house expertise and budget; often struggles to keep pace with threats | Shared responsibility model with advanced security features; 94% of adopters report security improvements |

The shift from a CapEx model to an OpEx model is a game-changer. Instead of large upfront investments in hardware, you pay only for the resources you use.
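To make the CapEx versus OpEx trade-off concrete, here is a minimal sketch of a break-even comparison. Every figure in it is a hypothetical placeholder chosen for easy arithmetic, not a benchmark or a quote from any provider, and the function names are illustrative only.

```python
def cumulative_on_prem_cost(months, upfront, monthly_upkeep):
    """Total spend after `months` when hardware is bought upfront (CapEx)."""
    return upfront + monthly_upkeep * months


def cumulative_cloud_cost(months, monthly_usage_fee):
    """Total spend after `months` when paying only for usage (OpEx)."""
    return monthly_usage_fee * months


def break_even_month(upfront, monthly_upkeep, monthly_usage_fee, horizon=120):
    """First month in which cumulative cloud spend catches up with on-prem spend.

    Returns None if that never happens within `horizon` months.
    """
    for month in range(1, horizon + 1):
        cloud = cumulative_cloud_cost(month, monthly_usage_fee)
        on_prem = cumulative_on_prem_cost(month, upfront, monthly_upkeep)
        if cloud >= on_prem:
            return month
    return None


# Hypothetical inputs: 120,000 upfront plus 2,000/month upkeep on-premises,
# versus a steady 7,000/month cloud bill.
print(break_even_month(120_000.0, 2_000.0, 7_000.0))
```

With these made-up numbers the cloud bill only catches up after two years, and a workload that scales down in quiet periods would push that point out further. Real comparisons also need to account for staffing, hardware refresh cycles, and data egress.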

The Core Principles of Effective Cloud Data Management

Effective cloud data management follows six key principles:

- Centralization: Breaks down data silos by creating a single source of truth.

- Governance: Establishes clear policies for data access, use, and retention.

- Security: Leverages cloud provider investments to deliver enterprise-grade security.

- Accessibility: Enables secure collaboration for teams, regardless of location.

- Scalability: Allows infrastructure to grow instantly with your data needs.

- Cost optimization: Aligns spending with actual resource consumption.

Key Differences in Infrastructure and Operations

Cloud data management fundamentally changes operations. Managed services handle infrastructure maintenance, freeing your team to focus on innovation. The shared responsibility model creates a security partnership between you and your cloud provider. Automation transforms routine tasks like data ingestion and quality checks into seamless background processes. Global reach allows you to comply with data sovereignty rules, while elasticity lets you scale computing power up or down in minutes. This strategic shift frees organizations to focus on their core mission, leaving complex infrastructure management to the experts.

Core Components of a Modern Cloud Data Platform

A modern cloud data management platform is an integrated ecosystem for the entire data lifecycle, from ingestion to advanced analytics. It brings together data integration, secure storage, analytics, data science, and unified access into a single, cohesive system.

Data Integration: The Foundation of Connectivity

Data integration connects your disparate data sources to create a unified view. Data pipelines automate the movement and transformation of this data. Key patterns include batch processing for large, periodic updates (like nightly clinical trial data loads), streaming data processing for real-time insights from sources like patient monitoring systems, and Change Data Capture (CDC), which efficiently processes only new or modified data, enabling near real-time synchronization from systems like EHRs.
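As a rough illustration of the CDC idea, the sketch below uses a timestamp watermark to pick up only rows changed since the last sync. This is the simpler query-based variant; production CDC tools often read the database transaction log instead. The row layout, field names, and `capture_changes` function are assumptions made up for this example.

```python
from datetime import datetime


def capture_changes(rows, last_sync):
    """Return rows modified after `last_sync`, plus the new watermark.

    If nothing changed, the watermark stays where it was.
    """
    changed = [row for row in rows if row["updated_at"] > last_sync]
    new_watermark = max((row["updated_at"] for row in changed), default=last_sync)
    return changed, new_watermark


# Hypothetical source table, e.g. a simplified extract of EHR records.
source_rows = [
    {"patient_id": "p1", "updated_at": datetime(2024, 1, 1, 8, 0)},
    {"patient_id": "p2", "updated_at": datetime(2024, 1, 2, 9, 30)},
    {"patient_id": "p3", "updated_at": datetime(2024, 1, 3, 14, 15)},
]

last_sync = datetime(2024, 1, 2, 0, 0)
changed, watermark = capture_changes(source_rows, last_sync)
print([row["patient_id"] for row in changed])  # only rows touched since last_sync

# Running again with the new watermark finds nothing left to process.
changed_again, watermark_again = capture_changes(source_rows, watermark)
```

Storing the watermark between runs is what turns a full reload into an incremental one: each run touches only the rows that changed.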

Platforms support both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) workflows. ELT is often preferred for healthcare data, as it loads raw data into a scalable data lake and uses the cloud’s computing power for transformations. This preserves the original raw data, which is crucial for reproducibility and re-analysis in research. For massive datasets, distributed computing frameworks like Apache Spark break down large tasks to make large-scale genomic analysis possible. You can find more info about workflows and distributed computing on our blog.
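The ELT pattern can be sketched with nothing more than Python's built-in `sqlite3` module standing in for a cloud warehouse. The table names, sample lab values, and the approximate glucose conversion factor (about 18 mg/dL per mmol/L) are assumptions for illustration. The point is the order of operations: raw data lands untouched first, and the transformation runs afterwards inside the database engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: raw values are stored exactly as received, including bad ones.
conn.execute(
    "CREATE TABLE raw_labs (patient_id TEXT, test_name TEXT, value TEXT, unit TEXT)"
)
raw_rows = [
    ("p1", "glucose", "5.4", "mmol/L"),
    ("p2", "glucose", "99", "mg/dL"),
    ("p3", "glucose", "n/a", "mg/dL"),  # unparseable, kept in raw for later review
]
conn.executemany("INSERT INTO raw_labs VALUES (?, ?, ?, ?)", raw_rows)

# Transform: done in SQL after loading, writing to a separate curated table.
conn.execute(
    """
    CREATE TABLE curated_labs AS
    SELECT patient_id,
           test_name,
           ROUND(CASE unit
                     WHEN 'mg/dL' THEN CAST(value AS REAL) / 18.0
                     ELSE CAST(value AS REAL)
                 END, 2) AS value_mmol_l
    FROM raw_labs
    WHERE value GLOB '[0-9]*'
    """
)

curated = conn.execute(
    "SELECT patient_id, value_mmol_l FROM curated_labs ORDER BY patient_id"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_labs").fetchone()[0]
print(curated, raw_count)
```

Because `raw_labs` is never modified, the transformation can be rewritten and re-run later without going back to the source system, which is the reproducibility benefit described above.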

Storage Architectures: Modern Approaches

Choosing the right storage architecture is crucial for handling both structured data (e.g., patient demographics) and unstructured data (e.g., medical images, genomic sequences). Cloud-native object storage (e.g., Amazon S3) is the foundation, offering immense scalability.

- Data warehouses (e.g., Snowflake, BigQuery) are optimized for complex SQL queries on structured data for business intelligence.

- Data lakes store vast amounts of raw data in its original format, offering maximum flexibility for data science.

- Lakehouse architecture combines the best of both, implementing warehouse-like management features directly on low-cost data lake storage, providing reliability and performance at scale.

Some platforms also offer converged databases that support multiple data types in a single system. The goal is unified data management, ensuring all data is accessible, governed, and analysis-ready.

Analytics, BI, and Machine Learning Capabilities

This is where a data platform generates insights. Self-service analytics empowers researchers and clinicians to explore data and create dashboards without IT intervention. While traditional Business Intelligence (BI) helps understand past trends, modern platforms focus on predictive power. In-platform machine learning allows you to run AI algorithms directly on your data without moving it—a critical feature for sensitive healthcare information.

This is operationalized via MLOps, which automates the machine learning lifecycle, including model versioning, automated deployment, and performance monitoring. Feature stores further accelerate this by centralizing curated data features (e.g., patient cohorts) for reuse across projects, ensuring consistency and reducing redundant work. This integrated approach enables a seamless path from raw data to actionable insights. Learn more in our guide to building a data intelligence platform.

The Business Imperative: Benefits and Top Use Cases

The move to cloud data management is a strategic imperative that delivers significant business value, competitive advantage, and innovation.

Tangible Benefits for Your Organization

Adopting cloud data management unlocks transformational benefits:

- Agility and speed: Spin up analytical environments in minutes, not months, to accelerate research and prototyping.

- Scalability on demand: Instantly scale compute resources for large datasets, paying only for what you use.

- Cost optimization through FinOps: Shift from high capital expenses to a pay-as-you-go model. Smart FinOps practices are key to managing cloud spend, as one-third of businesses exceed their budgets.

- Improved security: 94% of companies report better security after moving to the cloud, benefiting from the massive security investments of cloud providers.

- Improved collaboration: Break down data silos and enable secure, real-time collaboration between global research teams.

- Sustainability through Green IT: Leverage efficient, shared cloud infrastructure to reduce your environmental footprint.

Top Business Use Cases for Cloud Data

The versatility of cloud data management enables powerful applications:

- Modernizing data infrastructure: Replace rigid legacy systems with flexible, cloud-native services that evolve with your needs.

- Advanced analytics and AI/ML: Build predictive models and run complex genomics analyses directly within your data platform, avoiding the risks of data movement.

- Real-time streaming analytics: Process continuous data streams from clinical trials or IoT devices for immediate insights and proactive decision-making.

- Application integration: Create a single source of truth by unifying data from EHRs, LIMS, and other systems.

- Departmental data solutions: Empower research teams to create their own analytical environments, accelerating discovery while maintaining governance.

A Practical Guide to Implementation and Best Practices

Transitioning to cloud data management can be straightforward with a solid plan. A phased approach is often best to minimize disruption and maximize value.

Planning Your Migration to the Cloud

A successful migration starts with careful planning:

- Readiness assessment: Take a comprehensive inventory of your current data landscape, including sources, volumes, interdependencies, and existing governance.

- Choosing a migration strategy: Select the right approach for each workload. Common strategies include Rehosting (lift-and-shift), Replatforming (making minor cloud optimizations), Rearchitecting (redesigning for cloud-native), Repurchasing (moving to a SaaS solution), Retiring (decommissioning), and Retaining (keeping on-premises).

- Prioritizing workloads: Start with less critical workloads or new projects to build experience and confidence before tackling mission-critical systems.

- Data modeling: Adapt data models to take full advantage of cloud-native capabilities for better performance and lower costs.

- Cutover strategy: Plan the final transition of data and applications with clear downtime windows and robust rollback plans.

- Hybrid and multi-cloud architectures: Consider keeping some data on-premises while leveraging the cloud for specific workloads. A federated architecture in genomics is a powerful model for analyzing sensitive data in place across these distributed environments.

Best Practices for Performance, Scalability, and Cost Control

Optimizing your cloud platform is an ongoing process:

- Performance tuning: Use techniques like data partitioning, clustering, and caching to speed up queries. Autoscaling automatically adjusts resources to meet demand, ensuring performance during peaks and cost savings during lulls.

- Data tiering and lifecycle management: Automatically move less frequently accessed data to cheaper archival storage to reduce costs. Implement lifecycle policies to automate the data journey from active use to archival and deletion, ensuring compliance.

- FinOps and cost control: Adopt a FinOps culture to manage cloud spend through continuous monitoring, budgeting, and forecasting. Pay close attention to data egress fees (charges for moving data out of the cloud), which can be substantial. Architectures that minimize data movement, like federated analytics, are key to controlling these costs.
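The data tiering practice above boils down to a rule that maps how recently data was accessed to a storage class. Here is a minimal sketch of such a rule. The tier names and the 30-day and 180-day thresholds are hypothetical; cloud providers expose their own lifecycle policy settings, and real thresholds depend on access patterns and retention requirements.

```python
from datetime import date

# Hypothetical thresholds: (maximum days since last access, tier name),
# checked in order from hottest to coldest.
TIER_RULES = [(30, "hot"), (180, "cool")]


def choose_tier(last_accessed, today):
    """Pick a storage tier based on how long ago the object was last accessed."""
    age_days = (today - last_accessed).days
    for max_age_days, tier in TIER_RULES:
        if age_days <= max_age_days:
            return tier
    return "archive"


today = date(2024, 6, 15)
for last_accessed in (date(2024, 6, 1), date(2024, 3, 1), date(2023, 1, 1)):
    print(last_accessed, choose_tier(last_accessed, today))
```

Keeping the rules in a small table like `TIER_RULES` makes the policy easy to review alongside your retention and compliance requirements.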

Building the Right Team and Operating Model

Technology is only half the equation. The right people and processes are crucial:

- DataOps: Adopt principles that automate data pipelines and improve collaboration between data engineers, scientists, and operations teams to accelerate the delivery of insights.

- Platform engineering team: A dedicated team can build and maintain the core data infrastructure, freeing others to focus on analysis. This team typically includes Cloud Architects, Data Engineers, and DevOps specialists.

- Governance committees and a Center of Excellence (CoE): Establish cross-functional groups to set and enforce data policies. A CoE acts as a central hub of expertise to guide the organization’s cloud strategy and promote best practices.

- Skills gap: Address the cloud skills gap through continuous training and certification to keep your team current with evolving technologies.

Mastering Governance, Security, and Compliance in the Cloud

For sensitive healthcare and biomedical data, cloud data management requires a robust framework for governance, security, and compliance to build trust and mitigate risk.

Establishing Robust Data Governance and Quality

Data governance provides the policies and controls to manage data as a strategic asset. Key components include:

- Data catalog: A comprehensive, searchable directory of all data assets, making information findable and understandable.

- Metadata management: Actively managing data about your data—its origin, format, and quality—is essential for context and automated governance.

- Data lineage: This tracks the complete journey of your data, providing an auditable trail for regulatory needs and troubleshooting. For example, it can trace an analytical result back to its source instrument data.

- Master Data Management (MDM): Creates a single, authoritative “golden record” for critical entities like patients or products by reconciling data from different systems, ensuring a consistent, reliable view.

- Data quality monitoring: Involves embedding automated checks into data pipelines to validate data as it flows, catching errors early and preventing the “garbage in, garbage out” problem in AI models. Our guide on health data standardisation: end-to-end analysis explores these challenges.
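Data lineage is easier to picture as a graph. The sketch below records which datasets each dataset was derived from and walks backwards to find the original sources. The dataset names are invented for illustration, and the code assumes the lineage graph has no cycles; dedicated catalog and lineage tools capture this automatically and in far more detail.

```python
# Hypothetical lineage records: each dataset maps to the datasets it was built from.
LINEAGE = {
    "variant_report": ["annotated_variants"],
    "annotated_variants": ["called_variants", "reference_annotations"],
    "called_variants": ["sequencer_run_001"],
}


def trace_sources(dataset, lineage):
    """Return the root datasets (those with no recorded parents) behind `dataset`."""
    parents = lineage.get(dataset, [])
    if not parents:
        return {dataset}
    sources = set()
    for parent in parents:
        sources |= trace_sources(parent, lineage)
    return sources


print(sorted(trace_sources("variant_report", LINEAGE)))
```

This is the kind of trace that lets you answer an auditor's question about which instrument run and which reference data fed a given analytical result.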

Essential Security Controls and Ransomware Protection

Cloud security operates on a Shared Responsibility Model. The cloud provider is responsible for the security of the cloud (physical infrastructure), while you, the customer, are responsible for security in the cloud (data, access, configuration). A multi-layered defense is essential.

- Encryption: Use encryption in transit and encryption at rest to protect data everywhere. Cloud providers make this a standard, easily implemented control.

- Identity and Access Management (IAM): Enforce the principle of least privilege, giving users only the access they need, and strengthen controls with multi-factor authentication (MFA).

- Zero Trust architecture: This modern security model assumes no implicit trust and verifies every request. It moves defenses from static, network-based perimeters to a focus on users, assets, and resources.

- Immutable backups and Disaster Recovery (DR): Create unalterable backups as a powerful defense against ransomware. A well-orchestrated DR plan with clear Recovery Point Objectives (RPO) and Recovery Time Objectives (RTO) ensures business continuity. Our guide on preserving patient data privacy and security offers more insights.
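To illustrate least privilege and the "verify every request" idea from the list above, here is a small deny-by-default access check. The roles, resources, and `is_allowed` function are hypothetical. In practice these rules live in your cloud provider's IAM policies rather than in application code.

```python
# Hypothetical role definitions: each role lists only the (resource, action)
# pairs it genuinely needs. Anything not listed is denied.
ROLE_PERMISSIONS = {
    "analyst": {("cohort_summary", "read")},
    "data_engineer": {
        ("raw_ehr", "read"),
        ("raw_ehr", "write"),
        ("cohort_summary", "write"),
    },
}


def is_allowed(role, resource, action, mfa_verified):
    """Deny by default: require MFA and an explicit grant for every request."""
    if not mfa_verified:
        return False
    return (resource, action) in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", "cohort_summary", "read", mfa_verified=True))
print(is_allowed("analyst", "raw_ehr", "read", mfa_verified=True))
```

The important design choice is the default: an unknown role, an unlisted resource, or a missing MFA check all result in a denial rather than an accidental grant.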

Navigating Compliance, Sovereignty, and Industry Frameworks

Cloud platforms are designed with compliance in mind, offering tools and attestations to meet complex regulatory demands.

- Data privacy and sovereignty: Use region-specific deployments to comply with local laws requiring health data to remain within national borders.

- GDPR and HIPAA: Cloud services offer built-in features to help meet the stringent requirements of regulations like Europe’s GDPR (e.g., tools for data erasure) and the U.S.’s HIPAA. Providers will sign a Business Associate Agreement (BAA) and offer the necessary controls for access logging and auditing. Learn more about HIPAA-compliant data analytics.

- Data management frameworks: For life sciences, validating environments for GxP compliance is critical. Additionally, frameworks like the EDM Council’s CDMC (Cloud Data Management Capabilities) offer an auditable assessment for cloud environments, and the CDMC framework’s 14 Key Controls are becoming a gold standard for cloud data governance.

Advanced Analytics, AI, and the Future of Data in the Cloud

The true power of cloud data management is its ability to unlock advanced analytics and AI, enabling real-time insights and secure collaboration that were previously impossible.

Enabling Secure Data Sharing and Collaboration

In life sciences, combining diverse datasets drives discovery. Governed data sharing makes this possible while maintaining privacy and security. Instead of copying data, secure data exchange technologies like data clean rooms allow multiple parties to run joint analyses without exposing raw data. This collaborative analytics approach breaks down barriers, enabling global studies and coordinated public health responses. Our approach to federated data analysis allows analysis across distributed datasets without centralizing sensitive information.

Operationalizing AI and Real-Time Streaming Analytics

Moving AI from experiment to production requires robust cloud infrastructure. Event processing handles massive volumes of real-time streaming data, from patient vitals to genomic sequencers. A key shift is in-platform machine learning, which brings models to the data, not the other way around. This concept of MLOps without data movement minimizes latency and security risks, which is foundational to a Trusted Research Environment, where cutting-edge research can occur securely.
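As a toy example of event processing on a stream, the sketch below keeps a rolling average over the most recent readings and flags when it crosses a threshold. The heart-rate values, window size, and threshold are invented for illustration and carry no clinical meaning. A production system would use a streaming engine rather than a Python loop.

```python
from collections import deque


class RollingMean:
    """Keeps the mean of the most recent `window_size` readings."""

    def __init__(self, window_size):
        self.values = deque(maxlen=window_size)  # old readings drop off automatically

    def update(self, value):
        self.values.append(value)
        return sum(self.values) / len(self.values)


def detect_alerts(readings, window_size=3, threshold=100.0):
    """Return the positions in the stream where the rolling mean exceeds the threshold."""
    monitor = RollingMean(window_size)
    alerts = []
    for index, reading in enumerate(readings):
        if monitor.update(reading) > threshold:
            alerts.append(index)
    return alerts


# Hypothetical stream of heart-rate readings arriving one at a time.
print(detect_alerts([80, 85, 90, 120, 125, 130]))
```

Averaging over a window rather than reacting to a single reading is a simple way to avoid alerting on one noisy measurement.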

Future Trends: What to Prepare For

The cloud data landscape is evolving rapidly:

- Data fabric: An intelligent layer that connects disparate data sources through metadata, creating a unified view without mass data movement.

- Knowledge graphs: Organize complex, interconnected biomedical data to enable more sophisticated AI and discovery workflows.

- AI-driven autonomous operations: Self-tuning and self-repairing data platforms that optimize for performance, cost, and security with minimal human intervention.

- Edge computing integration: Process data closer to its source (e.g., IoT devices) and move only key insights to the cloud. Our Federation approach is designed for these distributed environments.

Frequently Asked Questions about Cloud Data Management

What is the biggest challenge in cloud data management?

The biggest challenge is managing complexity. The flexibility of hybrid and multi-cloud environments requires expertise to orchestrate different platforms and services. Other key challenges include:

- Cost control (FinOps): The pay-as-you-go model can lead to unexpected costs, especially from data egress fees, if not managed carefully.

- Skills gap: The rapid evolution of cloud technology makes it difficult to keep teams’ skills current.

- Vendor lock-in: Relying on a single provider’s proprietary services can make future migrations difficult and expensive.

- Security and governance: Ensuring consistent policies across different cloud environments is a constant priority, especially with sensitive data.

How do you ensure data quality in the cloud?

Ensuring data quality requires a multi-faceted approach:

- Data profiling and validation rules: Understand your data’s characteristics and set up automated rules to catch errors at the source.

- Monitoring pipelines: Continuously watch data flows in real-time to detect and address issues immediately.

- Data stewardship: Assign ownership of data quality to domain experts who define and maintain standards.

- Automated quality checks: Use cloud-native tools to continuously verify data completeness, accuracy, and consistency throughout its lifecycle.
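A validation rule in a pipeline can be as simple as a function that returns the list of problems it finds in each record. The sketch below assumes a made-up record layout (`patient_id`, `age`, `sex`) and made-up rules. Valid records continue downstream and rejected ones are set aside together with the reasons.

```python
ALLOWED_SEX_CODES = {"F", "M", "U"}  # hypothetical code list for this example


def validate_record(record):
    """Return a list of human-readable problems; an empty list means the record passes."""
    errors = []
    if not record.get("patient_id"):
        errors.append("missing patient_id")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age missing or out of range")
    if record.get("sex") not in ALLOWED_SEX_CODES:
        errors.append("unknown sex code")
    return errors


def split_valid_invalid(records):
    """Route clean records onward and quarantine the rest along with their errors."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"patient_id": "p1", "age": 54, "sex": "F"},
    {"patient_id": "", "age": 200, "sex": "X"},
]
valid, rejected = split_valid_invalid(records)
print(len(valid), rejected[0][1])
```

Quarantining rejected records with their error messages, rather than silently dropping them, gives data stewards something concrete to review and fix at the source.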

What is a federated approach to cloud data management?

A federated approach brings the analysis to the data, rather than moving all data to a central location. This is like holding a virtual meeting instead of flying everyone to one office.

Here’s how it works: data stays in place in its original secure environment. Compute-to-data processing sends the analysis to run where the data resides. This enables cross-organizational analysis without requiring any party to share its raw data, which is critical for research involving sensitive information.
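The compute-to-data idea can be sketched in a few lines. Each site runs the same small function on its own data and returns only aggregate counts and totals, and a coordinator combines those aggregates. This is a simplified illustration with invented site names and values, not a description of how any particular platform implements federation. Real deployments typically add safeguards such as query approval and suppression of results from very small groups.

```python
def local_summary(values):
    """Runs inside a site's own environment; only these aggregates leave the site."""
    return {"count": len(values), "total": sum(values)}


def combine_summaries(summaries):
    """Runs at the coordinator, which never sees individual-level records."""
    count = sum(summary["count"] for summary in summaries)
    total = sum(summary["total"] for summary in summaries)
    return total / count if count else None


# Hypothetical per-site measurements that stay where they are.
site_data = {
    "hospital_a": [5.0, 6.0, 7.0],
    "hospital_b": [4.0, 8.0],
}

summaries = [local_summary(values) for values in site_data.values()]
print(combine_summaries(summaries))  # overall mean across both sites
```

The combined mean is identical to what you would get by pooling all records in one place, yet no raw record ever crosses an organizational boundary.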

The primary benefit is improved security, as it dramatically reduces the risks associated with data movement. Lifebit’s Federated Trusted Research Environment exemplifies this approach, enabling secure analysis of sensitive biomedical data across distributed sources.

Conclusion

We’ve explored how cloud data management is evolving from a technical upgrade to a fundamental business strategy. The journey from on-premises systems to modern cloud platforms unlocks new possibilities by enabling instant scalability, real-time global collaboration, and secure in-place AI analysis.

The key benefits—agility, scalability, cost optimization, and improved security—are now essential for staying competitive. Organizations that master these capabilities are leading breakthroughs in drug development and patient care. While challenges like complexity and cost management are real, the rewards are significant, with 94% of companies reporting better security after migrating.

The future of data management is federated, intelligent, and autonomous. Trends like data fabric architectures and AI-driven operations are already emerging. At Lifebit, we see this change firsthand. Our federated AI platform enables secure access and analysis of sensitive biomedical data, helping partners turn their data challenges into discoveries that improve human health.

A federated approach balances collaboration with security, allowing researchers to analyze global datasets without moving sensitive information. This opens the door to unprecedented research while upholding the highest standards of privacy.

Your data strategy is about future-proofing your organization’s ability to innovate. Effective cloud data management is the foundation that turns data from a burden into a strategic asset.

If you’re ready to unlock the full potential of your data with federated analytics, discover a next-generation federated data platform that can help you achieve your goals.