The AI Lakehouse Explained: From Data to Discovery

Why AI Data Lakehouse Architecture Matters for Modern Organizations

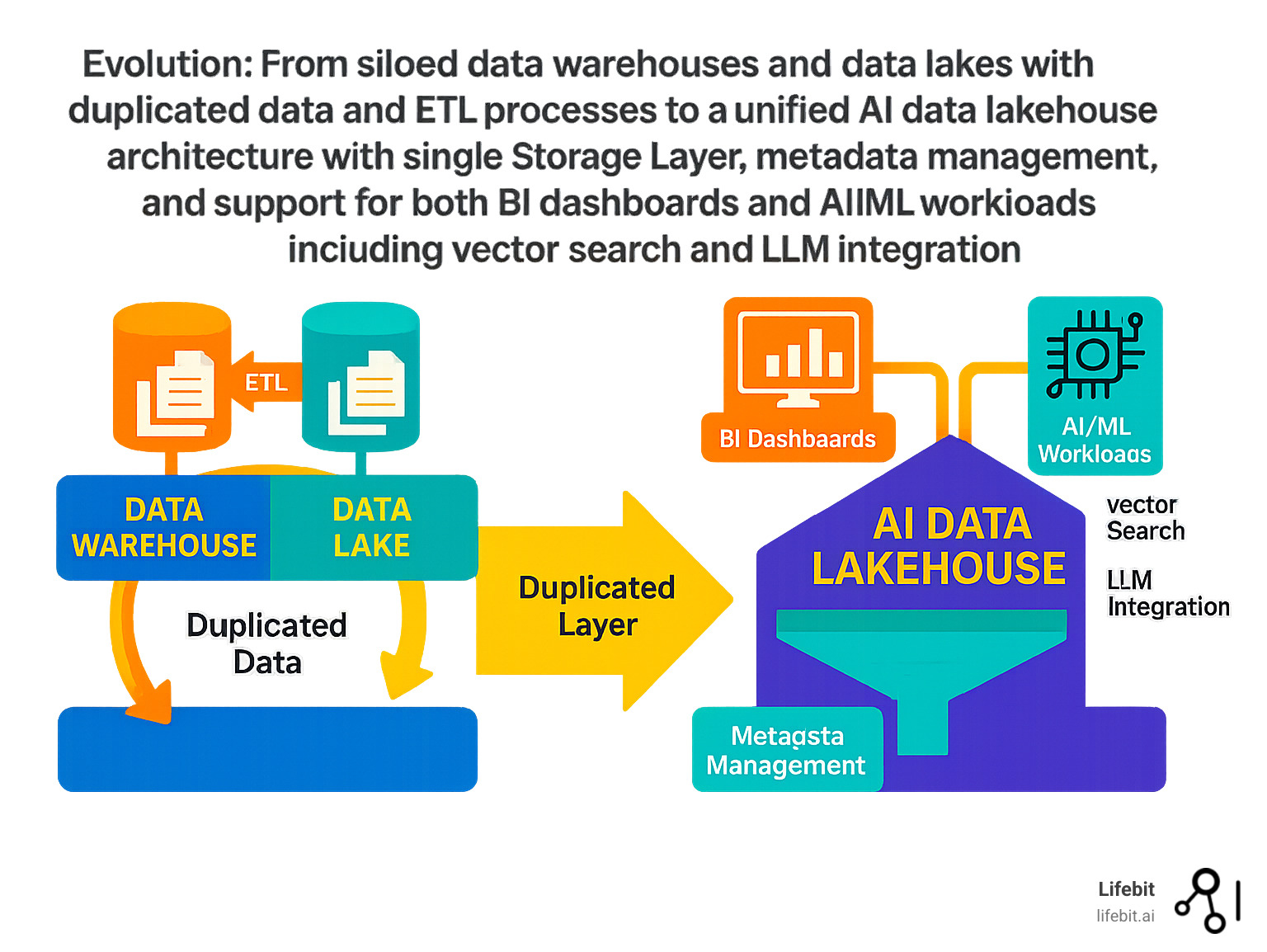

An AI data lakehouse merges the best of data warehouses and data lakes into a single, unified platform. It’s a cost-effective, AI-ready architecture that stores all data types—structured, semi-structured, and unstructured—in one place. This eliminates data duplication, reduces infrastructure costs by 30-50%, and provides the centralized governance and high performance needed for both business intelligence (BI) and advanced AI workloads like vector search and generative AI.

The AI revolution has enterprises racing to modernize their data infrastructure. However, most face a critical challenge: fragmented data trapped in silos. Traditional two-tier architectures, which shuttle data between lakes and warehouses, create data duplication, increased costs, and data staleness. This complexity is a major reason why nearly 48% of AI models still failed to reach production in 2024. Data scientists simply can’t access the fresh, diverse datasets they need.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. With over 15 years in computational biology and AI, I’ve seen how the right data architecture can accelerate discovery. At Lifebit, we build cutting-edge AI data lakehouse solutions that enable federated analysis of sensitive biomedical data, turning months of work into minutes.

The Foundation: From Data Warehouses and Lakes to the Lakehouse

For decades, data warehouses were the cornerstone of business intelligence. Built on a “schema-on-write” principle, they required data to be cleaned and structured before being loaded. This ensured high-quality data and fast SQL query performance for reporting. However, this rigidity became a major bottleneck. They struggled with the explosion of unstructured data—text, images, audio, and sensor readings—which couldn’t fit into predefined tables. Furthermore, scaling traditional warehouses, often tied to proprietary hardware, became prohibitively expensive.

Enter the data lake, designed for the big data era. It offered a “schema-on-read” approach, allowing organizations to dump massive volumes of raw, diverse data into cheap object storage without prior transformation. While data scientists loved this flexibility for exploratory analysis and ML model training, the lack of governance, transactional integrity, and quality enforcement meant many data lakes devolved into unusable “data swamps.” Business analysts found them unreliable and slow for standard reporting.

This led most organizations to a costly and complex two-tier architecture: a data lake for raw data staging and ML, and a separate, downstream data warehouse for curated BI data. This approach created significant operational friction:

- Data duplication and high ETL costs: The same information was copied and moved via complex Extract, Transform, Load (ETL) pipelines. Maintaining these brittle pipelines required significant engineering effort and compute resources.

- Data staleness and inconsistency: By the time data was processed and moved from the lake to the warehouse, it was often hours or even days old, making real-time analytics impossible. Different teams often worked with different versions of the data, leading to conflicting reports.

- Cost inefficiency: Running and managing two separate, large-scale storage and compute systems was expensive and created infrastructure silos.

This is why the AI data lakehouse emerged as a game-changer, delivering the flexibility of a lake and the reliability of a warehouse in a single, unified platform.

| Feature | Data Warehouse | Data Lake | AI Data Lakehouse |
|---|---|---|---|
| Data Types | Structured only | All types, but messy | All types, organized |
| Primary Use | Business reports | ML experiments | Everything: BI, ML, AI |
| Performance | Fast for structured queries | Variable, often slow | Fast for all workloads |
| Cost | High for large volumes | Low storage costs | Low storage, flexible compute |
| Data Quality | High, validated | Low, raw | High, with ACID transactions |

What is a Data Lakehouse?

An AI data lakehouse is a hybrid architecture that combines the low-cost, flexible storage of a data lake with the performance and reliability of a data warehouse. It achieves this by applying a transactional metadata layer over open file formats (like Parquet) in cloud storage. This brings enterprise-grade features like ACID transactions (atomicity, consistency, isolation, durability), schema enforcement, and time travel (data versioning) directly to the data lake. This makes all data—structured and unstructured—reliable and ready for any analysis, from SQL to Python.

Key Benefits of a Unified Architecture

Moving to an AI data lakehouse simplifies the entire data estate. Organizations see a reduced Total Cost of Ownership (TCO), often by 30-50%, by eliminating redundant storage systems and complex ETL pipelines. With no need to move data between systems, data freshness improves dramatically, enabling real-time use cases and ensuring all teams work from a single source of truth. This unified platform reliably supports diverse workloads, from BI dashboards to AI model training, on the exact same data. The trend is clear: a recent survey found that 73% of organizations are already combining their data warehouse and data lakes, making the lakehouse the new de facto standard for modern data architecture.

Core Components: The Technology Powering the Lakehouse

An AI data lakehouse is built on open standards, with a key architectural principle being the decoupling of compute and storage. This allows you to store petabytes of data affordably in cloud object storage and scale processing power up or down independently, paying only for what you use. This delivers massive scalability and cost efficiency compared to traditional, tightly-coupled systems.

Open Storage and Table Formats

At its foundation, a lakehouse uses cloud object storage (like Amazon S3, Azure Blob Storage, or Google Cloud Storage) to hold all data types in highly efficient, open file formats like Apache Parquet or ORC. The real innovation is the open table format, a metadata layer that sits on top of these files and defines a “table.”

This layer, powered by standards like Apache Iceberg, Delta Lake, and Apache Hudi, is what brings data warehouse capabilities directly to the data lake. While all three provide similar core functionality, they have different origins and strengths:

- Apache Iceberg: Designed by Netflix for petabyte-scale tables, it is engine-agnostic and known for its robust schema evolution and hidden partitioning, which prevents performance degradation over time.

- Delta Lake: Created by Databricks, it is deeply integrated with the Apache Spark ecosystem and offers strong performance optimizations and a simple user experience within that environment.

- Apache Hudi: Originating at Uber, it excels at record-level insert, update, and delete operations, making it well-suited for streaming and change-data-capture (CDC) use cases.

This table format layer enables critical features:

- ACID transactions for data reliability, ensuring that concurrent reads and writes don’t corrupt the data.

- Schema enforcement and evolution to maintain data quality while allowing tables to change gracefully over time.

- Time travel to query previous data versions for auditing, debugging, or reproducing ML experiments.

- Unified batch and streaming to handle both real-time and historical data in the same table.
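To make the idea of a metadata layer over immutable files concrete, here is a minimal sketch in plain Python. It is a toy model, not how Iceberg, Delta Lake, or Hudi are actually implemented: `ToyTable`, `commit`, and `snapshot` are hypothetical names, and the "files" are just in-memory lists. It assumes only the general idea described above: each commit publishes a new list of data files after a schema check, a stale writer is rejected, and older versions stay readable for time travel.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTable:
    """Toy model of a table format: an append-only log of file-list snapshots."""
    schema: dict                                      # column name -> Python type
    log: list = field(default_factory=lambda: [()])   # version 0 is the empty table
    files: dict = field(default_factory=dict)         # file name -> list of row dicts

    def commit(self, file_name, rows, expected_version):
        """Add a data file atomically; reject schema violations and stale writers."""
        for row in rows:
            if set(row) != set(self.schema) or not all(
                isinstance(row[col], typ) for col, typ in self.schema.items()
            ):
                raise ValueError(f"schema violation in {row!r}")
        if expected_version != len(self.log) - 1:
            raise RuntimeError("concurrent commit detected; retry on latest version")
        self.files[file_name] = list(rows)
        self.log.append(self.log[-1] + (file_name,))
        return len(self.log) - 1

    def snapshot(self, version=None):
        """Read all rows as of a version (time travel); default is the latest."""
        names = self.log[-1 if version is None else version]
        return [row for name in names for row in self.files[name]]


table = ToyTable(schema={"id": int, "amount": float})
v1 = table.commit("part-0.parquet", [{"id": 1, "amount": 9.5}], expected_version=0)
v2 = table.commit("part-1.parquet", [{"id": 2, "amount": 3.0}], expected_version=v1)

print(table.snapshot())            # latest version: both rows
print(table.snapshot(version=v1))  # time travel: only the first row
```

Because data files are never modified in place, reading an old version is just a matter of following an older entry in the log, which is what makes auditing and ML reproducibility cheap.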

High-Performance Query and Processing Engines

To analyze this data, a lakehouse uses high-performance, distributed query engines that can read open table formats directly. Apache Spark is the most common engine, but the open nature of the architecture allows for a choice of tools, including Trino (formerly PrestoSQL) and Dremio. These engines achieve speeds that rival traditional data warehouses through advanced techniques like:

- Vectorized execution: Processing data in batches (vectors) rather than row-by-row to maximize CPU efficiency.

- Cost-based optimization: Analyzing table statistics to create the most efficient query execution plan.

- Data layout optimization: Using techniques like Z-ordering and data clustering to minimize the amount of data that needs to be scanned from object storage.
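Data layout optimization pays off because engines can skip whole files using per-file statistics. The sketch below illustrates that idea under simplifying assumptions: `FileStats` and `files_to_scan` are hypothetical names, the statistics cover a single integer column, and the file names and ranges are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file column statistics, as a table format's metadata might record them."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(stats, lower, upper):
    """Keep only files whose [min, max] range can overlap the filter [lower, upper]."""
    return [s.path for s in stats if s.max_value >= lower and s.min_value <= upper]


# Clustered layout: each file covers a narrow, disjoint range of order_id.
clustered = [
    FileStats("part-0.parquet", 1, 1000),
    FileStats("part-1.parquet", 1001, 2000),
    FileStats("part-2.parquet", 2001, 3000),
]
# Unclustered layout: every file spans nearly the whole range, so nothing is skipped.
unclustered = [
    FileStats("part-0.parquet", 3, 2990),
    FileStats("part-1.parquet", 1, 2998),
    FileStats("part-2.parquet", 7, 3000),
]

print(files_to_scan(clustered, 1200, 1300))    # one file needs scanning
print(files_to_scan(unclustered, 1200, 1300))  # all three files need scanning
```

The query and the data are identical in both cases; only the physical layout changes how much has to be read from object storage.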

This unified engine approach supports all workloads—SQL analytics, batch processing, machine learning, and real-time streaming—on a single platform. This versatility eliminates the need for specialized, siloed systems, making your AI data lakehouse both powerful and efficient.

Unifying Workloads: How a Lakehouse Powers BI and Machine Learning

An AI data lakehouse breaks down the silos between business intelligence (BI) and machine learning (ML) teams. By providing a single source of truth, it allows data scientists to access the same fresh, governed data that powers executive dashboards. This direct access eliminates data drift between analytics and production systems, dramatically boosting productivity and streamlining MLOps.

From Business Intelligence (BI) to Advanced Analytics

BI teams can connect their favorite tools (like Tableau, Power BI, or Looker) directly to the lakehouse for fast SQL queries, interactive dashboards, and ad-hoc reports. Data is often organized using a multi-hop or medallion architecture to progressively refine it:

- Bronze tables: This is the raw ingestion layer. Data lands here in its original format from various sources (databases, logs, streaming events) with minimal transformation, providing a complete and auditable historical archive.

- Silver tables: Data from the Bronze layer is cleaned, validated, de-duplicated, and enriched. Here, data is joined and conformed into a more structured, queryable format. This is often where data scientists and ML engineers begin their work.

- Gold tables: These tables contain business-level aggregates, features, and key performance indicators (KPIs). They are optimized for analytics and reporting, powering executive dashboards and high-level analysis.

This layered approach provides clean, aggregated data for BI users while giving data scientists auditable access to the raw and intermediate data for feature engineering and model exploration.
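As a rough illustration of the Bronze, Silver, and Gold flow, the following sketch uses plain Python lists in place of real tables. The record fields, the `to_silver` and `to_gold` helpers, and the sample values are all assumptions made for the example; a production pipeline would run equivalent logic in an engine such as Spark against table-format tables.

```python
from collections import defaultdict

# Bronze: raw events exactly as ingested, including a duplicate and a bad record.
bronze = [
    {"event_id": "e1", "region": "EU", "amount": "120.50"},
    {"event_id": "e1", "region": "EU", "amount": "120.50"},        # duplicate delivery
    {"event_id": "e2", "region": "US", "amount": "80.00"},
    {"event_id": "e3", "region": "US", "amount": "not-a-number"},  # invalid value
    {"event_id": "e4", "region": "EU", "amount": "30.00"},
]


def to_silver(rows):
    """Clean, validate, and de-duplicate bronze rows; cast amount to float."""
    seen, silver = set(), []
    for row in rows:
        if row["event_id"] in seen:
            continue
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would typically quarantine rejected rows
        seen.add(row["event_id"])
        silver.append(
            {"event_id": row["event_id"], "region": row["region"], "amount": amount}
        )
    return silver


def to_gold(rows):
    """Aggregate silver rows into a business-level KPI: revenue per region."""
    revenue = defaultdict(float)
    for row in rows:
        revenue[row["region"]] += row["amount"]
    return dict(revenue)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Bronze is left untouched throughout, so the raw history stays available for audits or for rebuilding Silver and Gold with different rules.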

Accelerating Machine Learning (ML) and AI

The lakehouse transforms ML workflows. Teams can perform data preparation at scale on diverse data types and easily share features for reuse. A key advantage is model training on fresh data. For example, ByteDance uses its lakehouse to power TikTok’s real-time recommendation engine, constantly updating models with the latest user interactions. The reproducibility from features like time travel is also crucial for auditing and debugging, helping to overcome the challenge that nearly 48% of models fail to reach production.

To solve data access bottlenecks for Python-based ML, companies like Netflix have developed high-speed clients that use Apache Arrow to connect tools like Pandas directly to lakehouse tables, boosting data science productivity by orders of magnitude.

Practical Use Cases

The versatility of an AI data lakehouse enables powerful applications across industries:

- Real-time recommendation engines: An e-commerce company can stream clickstream data into its lakehouse, update user profiles in real-time, and serve personalized product recommendations on its website within seconds.

- Predictive maintenance: A manufacturer analyzes high-frequency sensor data from factory equipment. Models trained on this data in the lakehouse predict potential failures, allowing for proactive maintenance that minimizes downtime and saves millions.

- Financial fraud detection: A bank processes millions of transaction records, enriching them with customer data to train models that can flag fraudulent activity in real-time, preventing financial losses.

- Pharmacovigilance and real-time safety surveillance: In life sciences, Lifebit’s platform uses a federated lakehouse to enable secure analysis of sensitive patient data for monitoring adverse drug events across different hospitals without moving the data.

- Multi-omic data analysis: Researchers can accelerate drug discovery by integrating massive genomic, proteomic, and clinical datasets within a unified lakehouse, uncovering novel biomarkers and therapeutic targets.

The Rise of the AI Data Lakehouse: Fueling Next-Generation Intelligence

The lakehouse is evolving into an AI data lakehouse, a platform natively designed for generative AI and Large Language Models (LLMs). This shift supports the rise of agentic AI, where intelligent agents can perform complex tasks and interact with data using natural language. Instead of writing complex SQL or Python, a business user could simply ask, “What were our top-performing drug candidates last quarter, and how do their safety profiles compare?” and get an instant, comprehensive answer synthesized from multiple data sources.

Key Capabilities of an AI Data Lakehouse

An AI data lakehouse adds essential capabilities for modern AI:

- Vector Search: It integrates vector databases or native capabilities to convert unstructured data (text, images) and structured data into vector embeddings—numerical representations of semantic meaning. This powers similarity and semantic search, allowing AI to understand context and intent, not just keywords.

- Retrieval-Augmented Generation (RAG): This is the primary technique for grounding LLMs in an organization’s proprietary data. When a user asks a question, the system first performs a vector search on the lakehouse to find the most relevant data snippets. These snippets are then injected into the LLM’s prompt as context, enabling the model to generate an accurate, up-to-date, and context-aware response while reducing hallucinations.

- Fine-tuning Datasets: The lakehouse provides a governed environment to create high-quality, curated datasets for adapting Large Language Models to specific domains. Unlike RAG, which provides knowledge at query time, fine-tuning modifies the model’s internal weights to teach it a specific style, format, or specialized vocabulary (e.g., biomedical terminology).

- Real-time Feature Serving: It delivers fresh data points (features) with low latency for in-production AI applications like fraud detection or real-time bidding.

- Unified Monitoring: It provides a single pane of glass to track data quality, model performance drift, and prompt effectiveness to ensure AI applications are reliable and trustworthy.
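The vector search and RAG steps above can be sketched in a few lines. This is a deliberately simplified illustration: `embed` uses word counts as a stand-in for a learned embedding model, no LLM is actually called, and the function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts. Real systems use a learned model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question, documents, k=1):
    """Inject retrieved snippets into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "adverse event reports for drug A increased in the third quarter",
    "warehouse inventory levels for the northern region",
    "clickstream sessions by browser type",
]
prompt = build_prompt("adverse event reports for drug A", docs)
print(prompt)
```

The resulting prompt would then be sent to whichever model the organization uses; only the retrieval and context-injection steps are shown here.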

Integrating Generative AI and Large Language Models (LLMs)

The AI data lakehouse acts as the enterprise backend for generative AI, serving as the “long-term memory” for LLMs. It allows for flexible integration with leading models like GPT-4o, Anthropic Claude, or open-source, self-hosted models like Llama 3.

Key integration patterns include using function calling, which allows an LLM to query lakehouse data programmatically in response to a user’s request. For example, an LLM could translate “show me last month’s sales” into a SQL query, execute it against the lakehouse, and then summarize the results in natural language. Another pattern is automating data classification, where LLMs are used to scan and tag sensitive data (like PII) to enhance governance. The ultimate goal is to enable natural language queries, turning complex data analysis into a simple conversation. At Lifebit, this technology allows researchers to ask complex questions about multi-omic datasets in plain English, dramatically accelerating discovery.
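A minimal sketch of the function-calling pattern might look like the following. Several things here are assumptions for illustration only: an in-memory SQLite database stands in for the lakehouse query engine, `fake_llm_tool_call` is a hard-coded stand-in for a real model response, and the table, tool name, and month value are invented. Note also that the sketch has the model pick a predefined, parameterized tool rather than generate free-form SQL, which is a more conservative variant of the pattern described above.

```python
import sqlite3


def run_sales_query(conn, month):
    """Tool the model is allowed to call: total sales for one month (YYYY-MM)."""
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM sales "
        "WHERE strftime('%Y-%m', sold_on) = ?",
        (month,),
    ).fetchone()
    return row[0]


TOOLS = {"run_sales_query": run_sales_query}


def fake_llm_tool_call(user_request):
    """Stand-in for a model response; a real LLM would choose the tool and arguments."""
    return {"name": "run_sales_query", "arguments": {"month": "2025-01"}}


def handle(conn, user_request):
    """Dispatch the model's tool call, then phrase the result for the user."""
    call = fake_llm_tool_call(user_request)
    result = TOOLS[call["name"]](conn, **call["arguments"])
    return f"Total sales for {call['arguments']['month']}: {result:.2f}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sold_on TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("2025-01-05", 100.0), ("2025-01-20", 50.5), ("2025-02-01", 75.0)],
)
answer = handle(conn, "show me last month's sales")
print(answer)
```

Restricting the model to a registry of vetted tools keeps query logic and access checks in reviewed code, at the cost of less flexibility than open-ended SQL generation.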

Implementation and Governance: Building a Secure and Effective Lakehouse

Building an AI data lakehouse is a strategic initiative, not just a technology swap. It requires a clear plan that addresses implementation challenges and establishes robust governance from day one to avoid creating a more advanced data swamp.

Challenges in Building an AI Data Lakehouse

Organizations should anticipate and plan for potential problems:

- Performance bottlenecks for Python/ML: While SQL performance is often excellent, data scientists using Python can face sluggish data access, which becomes a critical bottleneck for ML work. Arrow-native transfer protocols and clients that read table formats directly into memory are the main remedies.

- Offline-online skew: Discrepancies between the data used for model training (offline) and the data used for inference in production (online) can degrade model performance. Integrated feature stores that serve the same features to both environments are key to ensuring consistency.

- Real-time data latency: Achieving sub-second response times for applications like recommendation engines may require specialized serving layers or in-memory caches, as querying object storage directly can introduce latency.

- Vendor lock-in: Be wary of platforms that use proprietary data formats, table formats, or catalogs. This can make it difficult and expensive to switch vendors or adopt new tools in the future. An open, disaggregated architecture built on standards like Parquet and Iceberg provides long-term flexibility and avoids lock-in.

- MLOps integration complexity: Integrating a disparate set of MLOps tools for experimentation, training, and deployment with a lakehouse can be challenging. It’s crucial to choose tools that integrate cleanly with the lakehouse architecture to avoid creating new data and workflow silos.
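Of the challenges above, offline-online skew is the easiest to show in code. One common way to avoid it is to keep a single feature definition and call it from both the training and serving paths, which is the core idea behind a feature store. The sketch below assumes a hypothetical `spend_features` function and made-up transaction records.

```python
from statistics import mean


def spend_features(transactions):
    """Single feature definition shared by training and serving."""
    amounts = [t["amount"] for t in transactions]
    return {
        "txn_count": len(amounts),
        "avg_amount": mean(amounts) if amounts else 0.0,
        "max_amount": max(amounts, default=0.0),
    }


history = [{"amount": 20.0}, {"amount": 40.0}, {"amount": 90.0}]

# Offline path: build a training row from historical data.
training_row = spend_features(history)

# Online path: compute features for a live request from the same records.
serving_row = spend_features(history)

assert training_row == serving_row  # no skew: one definition, identical inputs
print(training_row)
```

Skew typically creeps in when the two paths reimplement the same feature separately, for example in SQL for training and in application code for serving.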

Data Governance and Security in the Lakehouse

For sensitive data, especially in regulated industries like healthcare and finance, governance and security are non-negotiable. A successful AI data lakehouse must be built on a foundation of trust and include:

- A unified governance model: A central control plane (or data catalog) is needed to manage permissions, audit access, and enforce consistent policies across all data and AI assets. This single source of truth for governance prevents policy fragmentation.

- End-to-end data lineage tracking: For compliance, debugging, and impact analysis, it’s crucial to have end-to-end visibility into where data comes from, how it’s transformed, and who uses it. If a dashboard shows an incorrect metric, lineage allows analysts to trace the data’s entire journey back to its source, identifying the exact transformation step where an error was introduced.

- Attribute-Based Access Control (ABAC): As opposed to static, role-based control, ABAC is a dynamic and scalable approach to security. It grants access based on attributes of the user (e.g., role, department), the data (e.g., sensitivity level, project tag), and the environment (e.g., time of day). This allows for fine-grained policy enforcement at scale.

- Federated governance: This is an essential approach for meeting privacy regulations like GDPR and HIPAA and for collaborating across organizational boundaries. It allows data to remain in its secure, original location (its “sovereign environment”) while being accessible for authorized, remote analysis. This federated model is core to Lifebit’s platform, enabling compliant biomedical research without ever moving or copying sensitive patient data.
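To show how ABAC differs from a static role check, here is a small sketch of a policy that combines user, data, and environment attributes. The `Request` fields, the `is_allowed` function, and the specific rule (restricted data only for clinical researchers during working hours) are hypothetical and chosen purely for illustration; real deployments express such policies in their catalog or governance layer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user_role: str
    user_department: str
    data_sensitivity: str   # "public", "internal", or "restricted"
    data_project: str
    hour: int               # 0-23, local hour of the request


def is_allowed(req, user_projects):
    """Hypothetical policy combining user, data, and environment attributes."""
    if req.data_sensitivity == "public":
        return True
    if req.data_project not in user_projects:
        return False
    if req.data_sensitivity == "restricted":
        # Restricted data: clinical researchers only, during working hours.
        return (
            req.user_role == "researcher"
            and req.user_department == "clinical"
            and 8 <= req.hour < 18
        )
    return True  # "internal" data within one of the user's projects


daytime = Request("researcher", "clinical", "restricted", "oncology", hour=10)
night = Request("researcher", "clinical", "restricted", "oncology", hour=23)
print(is_allowed(daytime, {"oncology"}))  # allowed
print(is_allowed(night, {"oncology"}))    # denied: outside working hours
```

The same user gets different answers depending on the data's tags and the time of the request, which a purely role-based check cannot express without multiplying roles.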

Frequently Asked Questions about the AI Data Lakehouse

Here are answers to common questions about implementing an AI data lakehouse.

How does an AI data lakehouse handle both structured and unstructured data?

The architecture stores all raw data—structured records, JSON files, images, documents—in its native format on low-cost cloud object storage. On top of this, it applies open table formats (like Apache Iceberg or Delta Lake). This metadata layer adds structure, schema validation, and ACID transactions as needed. This allows BI teams to query structured data with SQL, while AI teams can simultaneously access the raw, unstructured data for model training, all from a single source.

What is the main difference between a data lakehouse and a feature store?

Think of the AI data lakehouse as the entire data foundation for your organization, storing all data and supporting all workloads. A feature store is a specialized component within that ecosystem. Its specific job is to create, store, and serve curated, production-ready data inputs (features) for machine learning models. The feature store ensures consistency between training and production, preventing the common problem of offline-online skew.

Is it necessary to move all my data to a lakehouse to use it for AI?

No, and for sensitive data, you shouldn’t. The modern approach is to “bring AI to your data.” Modern AI data lakehouse platforms support data federation, allowing analytics and AI models to query data where it resides—in existing databases, other clouds, or on-premises systems. This is critical for meeting privacy regulations like GDPR and HIPAA. At Lifebit, our federated platform enables analysis of sensitive health data without ever moving it from its secure environment, ensuring compliance and security.

Conclusion: The Future of Data is Intelligent and Unified

The shift to the AI data lakehouse is a turning point for data strategy. It solves the fundamental problems of traditional two-tier architectures by creating a single source of truth for both business intelligence and advanced AI. The benefits are clear: 30-50% cost reductions, dramatically improved data freshness, and accelerated AI development.

As leading database researchers noted, “Cloud-based object storage using open-source formats will be the OLAP DBMS archetype for the next ten years.” The industry is embracing open, disaggregated architectures that maximize flexibility and avoid vendor lock-in.

The AI-readiness of the modern lakehouse—with vector search, LLM integration, and real-time feature serving—is its most powerful attribute. It doesn’t just store data; it makes it intelligent.

At Lifebit, we’ve seen how this architecture transforms sensitive domains like biomedicine. Our federated AI platform, built on AI data lakehouse principles, enables secure, real-time analysis of global biomedical data. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) components deliver insights across hybrid ecosystems without compromising security or compliance, helping organizations move from data to discovery faster than ever.

The future of data is unified and intelligent. Organizations that adopt the AI data lakehouse will gain a significant competitive advantage, spending less time managing infrastructure and more time generating value.

Ready to transform your data infrastructure and unlock the full potential of your organization’s data? Learn how to build a trusted data lakehouse for sensitive research and discover how Lifebit can help you make this vision a reality.