Side Effects May Include AI: Pharmacovigilance Gets Smart

AI for Pharmacovigilance: Revolutionizing Safety 2025

The Urgent Need for Smarter Drug Safety

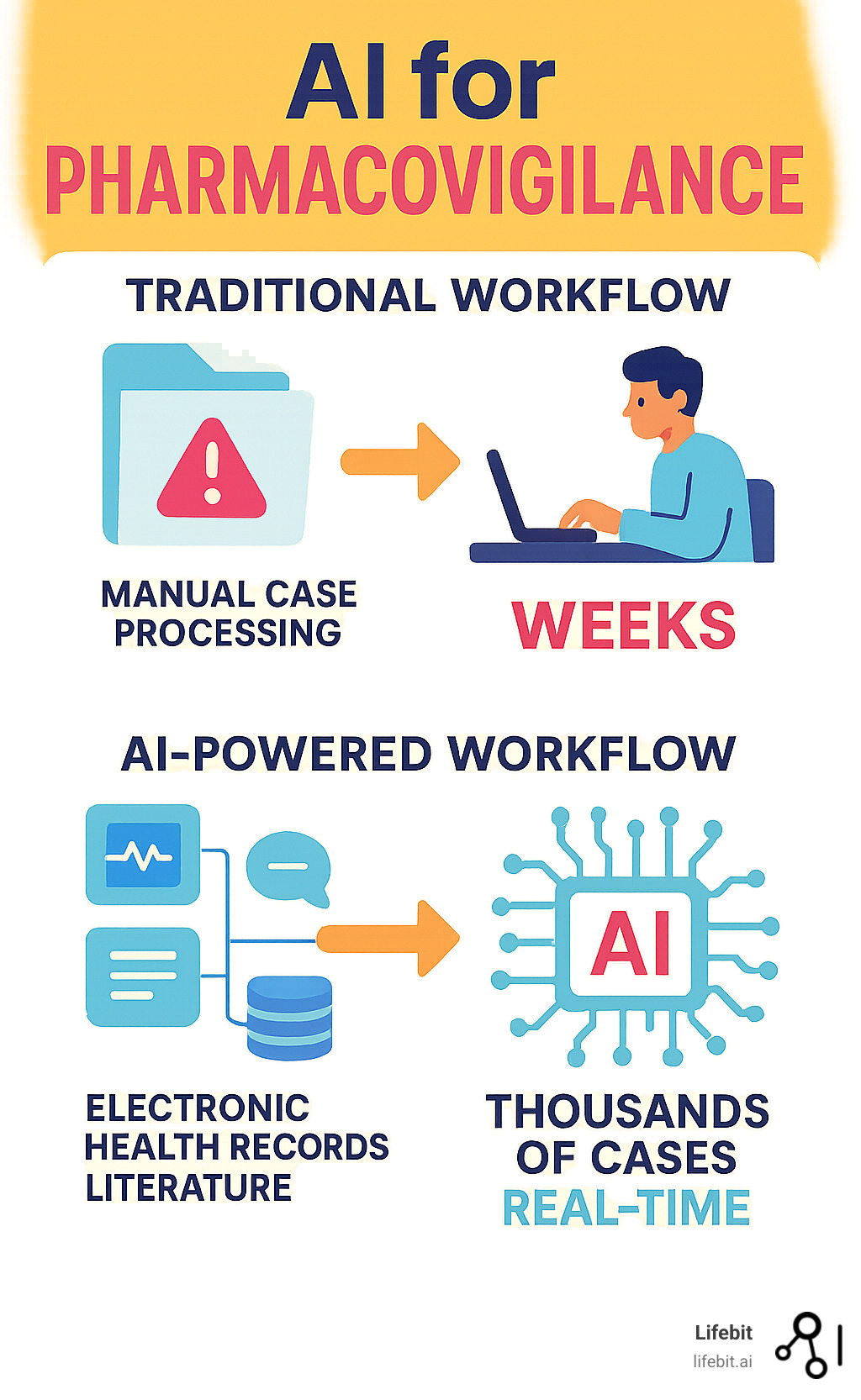

AI for Pharmacovigilance is changing how we monitor drug safety by automating adverse event detection, analyzing vast datasets from electronic health records and social media, and predicting safety signals before they become widespread health threats.

Key AI applications in pharmacovigilance include:

- Automated case processing – AI extracts and analyzes adverse event reports 24/7

- Improved signal detection – Machine learning identifies safety patterns from multiple data sources

- Predictive analytics – AI predicts potential adverse drug reactions before they occur

- Real-time monitoring – Continuous surveillance across social media, literature, and clinical databases

- Natural language processing – AI interprets unstructured text from patient reports and medical records

Traditional pharmacovigilance is challenged by severe underreporting (a median underreporting rate of 94%) and by exponential growth in safety data from online patient experiences and electronic health records. The current system’s reliance on manual review of voluntary reports is slow, expensive, and often misses critical safety signals.

AI changes this. Instead of waiting for voluntary reports, AI can continuously monitor electronic health records, social media posts, medical literature, and patient forums. It processes thousands of cases in minutes and can detect subtle patterns that humans might miss.

As Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit, I’ve seen how AI for Pharmacovigilance transforms patient safety. My experience in AI and health-tech confirms that the future of drug safety lies in intelligent, automated systems that process vast health data while ensuring privacy and compliance.


How AI is Revolutionizing Drug Safety Monitoring

AI for Pharmacovigilance is making it possible to spot dangerous drug reactions before they harm patients. The explosion of data from electronic health records, social media, and scientific papers overwhelms traditional pharmacovigilance teams. This is where AI transforms patient protection. Instead of waiting months for safety patterns to emerge, AI can detect problems in real-time.

AI is well suited to complex problems such as polypharmacy, where patients take several medications at once. It can analyze very large volumes of prescribing and outcome data to spot dangerous interactions that human reviewers might miss. Research shows that 57.6% of AI applications in pharmacovigilance focus on identifying adverse drug events, which is where AI delivers the biggest impact.

The real game-changer is real-time analysis, shifting from reactive to proactive monitoring that could save countless lives. Natural Language Processing (NLP) makes this possible by reading unstructured text—patient reports, doctor notes, social media posts—and extracting meaningful safety information.

Automating Adverse Event Reporting and Analysis

Individual Case Safety Reports (ICSRs) are the backbone of drug safety, but manual processing is time-consuming, expensive, and error-prone. The traditional workflow is a multi-step, human-driven process: case intake from various sources (email, fax, call centers), manual data entry into a safety database, duplicate checks, medical coding using standardized terminologies like MedDRA, causality assessment, and finally, regulatory submission. Each step is a potential bottleneck and a source of human error.

AI for Pharmacovigilance transforms this entire workflow. AI-powered systems can automatically ingest reports from diverse, unstructured formats. For example, an AI model can read a PDF of a hospital discharge summary, a free-text email from a physician, or even a transcribed voicemail. Using advanced NLP, it performs named entity recognition (NER) to identify and extract key information: patient demographics, suspect and concomitant drugs, dosage information, adverse event terms, dates, and outcomes. The system can then automatically check for duplicates, code the adverse events to the correct MedDRA terms, and populate a structured ICSR form. This automated case processing handles the heavy lifting with superhuman speed and consistency, freeing human experts to focus on high-value tasks like complex medical review and causality assessment.
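To make the extraction step concrete, here is a minimal sketch that pulls drugs, adverse events, doses, and dates out of a free-text narrative using small keyword lists and regular expressions. The lexicons, the `CaseDraft` structure, and `extract_case` are hypothetical illustrations, not the ICH E2B fields a real ICSR uses, and the keyword matching stands in for the trained NER models that production systems rely on.

```python
import re
from dataclasses import dataclass, field

# Hypothetical mini-lexicons for illustration only. Real systems use trained
# NER models plus full dictionaries (e.g. MedDRA for events, RxNorm for drugs).
DRUG_LEXICON = {"atorvastatin", "warfarin", "metformin"}
EVENT_LEXICON = {"rash", "myalgia", "nausea", "bleeding"}

# A number followed by a dose unit, e.g. "40 mg" or "2.5mg".
DOSE_PATTERN = re.compile(r"\b(\d+(?:\.\d+)?)\s*(mg|mcg|g)\b", re.IGNORECASE)
# ISO-style dates only (YYYY-MM-DD); real narratives need many more formats.
DATE_PATTERN = re.compile(r"\b(\d{4}-\d{2}-\d{2})\b")


@dataclass
class CaseDraft:
    """Hypothetical structured draft of a case, pending human review."""
    drugs: list = field(default_factory=list)
    events: list = field(default_factory=list)
    doses: list = field(default_factory=list)
    dates: list = field(default_factory=list)


def extract_case(narrative: str) -> CaseDraft:
    draft = CaseDraft()
    # Lower-case word tokens; each known term is recorded once, in order seen.
    for token in re.findall(r"[a-z]+", narrative.lower()):
        if token in DRUG_LEXICON and token not in draft.drugs:
            draft.drugs.append(token)
        elif token in EVENT_LEXICON and token not in draft.events:
            draft.events.append(token)
    draft.doses = [f"{value} {unit.lower()}" for value, unit in DOSE_PATTERN.findall(narrative)]
    draft.dates = DATE_PATTERN.findall(narrative)
    return draft


case = extract_case(
    "Patient started atorvastatin 40 mg on 2024-03-01 and reported myalgia and a rash on 2024-03-15."
)
print(case)
```

A keyword matcher like this cannot handle negation (“no rash” would still yield “rash”), misspellings, or abbreviations, which is precisely why NLP models are used for this step in practice.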

Most safety reports come as free-text narratives, which are rich in detail but difficult to analyze at scale. AI excels at reading these narratives and extracting the crucial facts. This automation dramatically reduces administrative burden by up to 80% in some cases, while simultaneously increasing accuracy and consistency. AI applies the same coding and extraction rules every time, eliminating the variability inherent in manual processing. For instance, one human might code a report as “heart attack” while another codes it as “myocardial infarction”; an AI system ensures standardized terminology is used consistently, improving the quality of the downstream data used for signal detection.
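The consistency argument can be illustrated with a small normalization function that maps verbatim reporter terms to a single preferred term. The `PREFERRED_TERMS` dictionary and `normalize_term` are hypothetical stand-ins for coding against the licensed MedDRA dictionary; treating “MI” as myocardial infarction is itself an assumption, since abbreviations are ambiguous in real narratives.

```python
from typing import Optional

# Hypothetical verbatim-to-preferred-term map. A real system codes against the
# licensed MedDRA hierarchy; this dictionary only illustrates the idea.
PREFERRED_TERMS = {
    "heart attack": "myocardial infarction",
    "mi": "myocardial infarction",
    "myocardial infarction": "myocardial infarction",
    "muscle pain": "myalgia",
    "myalgia": "myalgia",
}


def normalize_term(verbatim: str) -> Optional[str]:
    """Return the preferred term, or None so the case goes to a human coder."""
    key = " ".join(verbatim.lower().split())  # case- and whitespace-insensitive
    return PREFERRED_TERMS.get(key)


reports = ["Heart attack", "myocardial  infarction", "MI", "Headache"]
print({r: normalize_term(r) for r in reports})
```

Returning `None` rather than guessing keeps unmapped terms in a human coder’s queue.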

Research shows that 21.2% of AI applications in pharmacovigilance focus specifically on processing safety reports, reflecting how transformative automation is for these foundational tasks. AI handles the high-volume, repetitive work, while skilled professionals are elevated to roles that require deep medical judgment and critical thinking. The goal is not to replace expertise but to amplify it, allowing PV teams to manage rising case volumes without a proportional increase in headcount.

More info about AI Challenges in Research and Drug Discovery

Enhancing Signal Detection and Prediction with AI for Pharmacovigilance

Beyond individual reports, AI for Pharmacovigilance excels at signal detection—finding new or changing safety concerns hidden in vast amounts of data. A safety signal is essentially a hypothesis of a potential drug-event relationship that warrants further investigation. In the past, signals were detected through manual review or traditional statistical methods like disproportionality analysis (e.g., calculating Reporting Odds Ratios). These methods are valuable but struggle with the sheer volume, velocity, and variety of modern health data, and they are often susceptible to confounding factors and reporting biases.
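As a baseline for comparison, a classic disproportionality statistic is easy to compute. The sketch below derives the Reporting Odds Ratio and an approximate 95% confidence interval from the standard 2×2 table of report counts. The counts are hypothetical, and the screening rule in `is_signal` (at least three reports and a lower confidence bound above 1) is one commonly used convention rather than a universal threshold.

```python
import math


def reporting_odds_ratio(a: int, b: int, c: int, d: int, z: float = 1.96):
    """ROR and its approximate 95% CI from a 2x2 table of spontaneous reports.

    a: reports with the drug of interest AND the event of interest
    b: reports with the drug of interest, other events
    c: reports with other drugs AND the event of interest
    d: reports with other drugs, other events
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive; consider a continuity correction")
    ror = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(ROR)
    lower = math.exp(math.log(ror) - z * se_log)
    upper = math.exp(math.log(ror) + z * se_log)
    return ror, lower, upper


def is_signal(a, b, c, d, min_cases=3):
    """One commonly used screening rule: at least `min_cases` reports and lower CI > 1."""
    _, lower, _ = reporting_odds_ratio(a, b, c, d)
    return a >= min_cases and lower > 1.0


# Hypothetical counts from a spontaneous-report database.
ror, lo, hi = reporting_odds_ratio(20, 980, 200, 98_800)
print(f"ROR={ror:.2f} (95% CI {lo:.2f}-{hi:.2f}); signal={is_signal(20, 980, 200, 98_800)}")
```

A flagged result like this is a hypothesis for medical review, not evidence of causation, which is why confounding and reporting bias remain the key limitations.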

AI transforms signal detection from an art into a science. Machine learning algorithms, including both supervised and unsupervised models, can sift through heterogeneous data to identify complex drug-drug interactions, detect patterns in specific patient subpopulations, and predict potential adverse drug reactions (ADRs). These models analyze intricate relationships across massive datasets that are impossible for humans to process. For example, a deep learning model could identify a previously unknown interaction between a common antidepressant and a new cardiovascular drug that only manifests in patients over 65 with a specific genetic marker—a signal that would likely be lost in the noise of traditional analysis.

Personalized Risk Prediction and Precision Pharmacovigilance

Predicting ADRs is a powerful capability, moving us from reactive monitoring to proactive protection. The ultimate goal is to move beyond population-level safety to precision pharmacovigilance. AI can analyze individual patient characteristics—including genomics, comorbidities, lifestyle factors, and concurrent medications—to generate a personalized risk score for a potential ADR before a prescription is even written. This risk stratification helps clinicians make more informed prescribing decisions, such as choosing an alternative medication for a high-risk patient or implementing closer monitoring.
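A personalized risk score of this kind is often a probabilistic model over patient features. The sketch below uses a logistic model with hand-picked, hypothetical coefficients and cut-offs purely to show the mechanics of turning features into a probability and a risk band; it is not a validated clinical model.

```python
import math

# Hypothetical coefficients chosen by hand for illustration. A real model would
# be fitted on outcome data and validated before any clinical use.
INTERCEPT = -4.0
COEFFICIENTS = {
    "age_over_65": 0.9,              # 1 if the patient is over 65, else 0
    "renal_impairment": 1.2,         # 1 if present, else 0
    "concomitant_drug_count": 0.15,  # number of other current medications
    "risk_allele_carrier": 1.5,      # 1 if a relevant pharmacogenomic variant is present
}


def adr_risk(patient: dict) -> float:
    """Logistic model: probability of the ADR given the patient's features."""
    logit = INTERCEPT + sum(coef * patient.get(name, 0.0) for name, coef in COEFFICIENTS.items())
    return 1.0 / (1.0 + math.exp(-logit))


def risk_band(probability: float, high: float = 0.20, moderate: float = 0.05) -> str:
    # Thresholds are hypothetical; in practice they would be set with clinicians.
    if probability >= high:
        return "high"
    return "moderate" if probability >= moderate else "low"


low_risk = {"age_over_65": 0, "concomitant_drug_count": 1}
high_risk = {"age_over_65": 1, "renal_impairment": 1, "concomitant_drug_count": 6, "risk_allele_carrier": 1}
for label, patient in [("low", low_risk), ("high", high_risk)]:
    p = adr_risk(patient)
    print(label, round(p, 3), risk_band(p))
```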

The challenge of polypharmacy becomes manageable as AI continuously monitors complex medication combinations in real-world patient populations. By integrating diverse data sources, AI can uncover hidden patterns that inform personalized safety strategies:

- Electronic Health Records (EHRs): Provide a longitudinal view of a patient’s health, including diagnoses (ICD codes), lab results (e.g., elevated liver enzymes), procedures, and unstructured clinical notes.

- Claims Data: Reveals medication dispensing patterns, adherence rates, and healthcare utilization, offering insights into real-world drug exposure and outcomes.

- Social Media and Patient Forums: Mining platforms like X (formerly Twitter), Facebook, and patient forums like PatientsLikeMe captures the patient’s voice directly, often revealing early signs of adverse events or quality-of-life impacts not typically captured in clinical settings.

- Medical Literature: AI tools continuously scan PubMed, clinical trial registries, and conference abstracts for emerging safety findings.

- Wearables and IoT Devices: Data from smartwatches and other sensors can provide continuous physiological monitoring (e.g., heart rate, activity levels), offering novel digital biomarkers for drug safety.

The integration of social media is particularly valuable, as studies suggest up to 17% of adverse events are missed when these online conversations are not monitored. Our approach goes beyond simple correlation to causal inference—employing advanced statistical and AI techniques to determine if a drug is the likely cause of an event, not just associated with it. This crucial step helps separate true safety signals from statistical noise, ensuring that pharmacovigilance efforts are focused on real patient risks.
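One standard causal inference technique for observational data is inverse probability of treatment weighting (IPW). The sketch below is an illustration, not a description of any particular platform’s method: it uses a hypothetical toy cohort with a single measured confounder (age over 65) and assumes no unmeasured confounding and that every stratum contains both exposed and unexposed patients (positivity).

```python
from collections import defaultdict


def crude_risk_difference(records):
    """Unadjusted risk in exposed minus risk in unexposed."""
    events = {True: 0, False: 0}
    totals = {True: 0, False: 0}
    for exposed, _, event in records:
        totals[exposed] += 1
        events[exposed] += event
    return events[True] / totals[True] - events[False] / totals[False]


def ipw_risk_difference(records):
    """Risk difference after inverse probability of treatment weighting.

    records: (exposed: bool, stratum, event: bool). The propensity score is the
    exposure rate within each stratum of the measured confounder.
    """
    n = defaultdict(int)
    n_exposed = defaultdict(int)
    for exposed, stratum, _ in records:
        n[stratum] += 1
        n_exposed[stratum] += exposed
    weighted_events = {True: 0.0, False: 0.0}
    weights = {True: 0.0, False: 0.0}
    for exposed, stratum, event in records:
        p = n_exposed[stratum] / n[stratum]
        if p in (0.0, 1.0):
            raise ValueError(f"positivity violated in stratum {stratum!r}")
        w = 1 / p if exposed else 1 / (1 - p)
        weighted_events[exposed] += w * event
        weights[exposed] += w
    return weighted_events[True] / weights[True] - weighted_events[False] / weights[False]


# Hypothetical toy cohort: older patients are more often exposed AND have a higher
# baseline event risk, but the drug itself has no effect within either age group.
cohort = []
for exposed, over_65, size, n_events in [
    (True, False, 20, 1), (False, False, 80, 4),   # under 65: 5% risk either way
    (True, True, 80, 16), (False, True, 20, 4),    # over 65: 20% risk either way
]:
    cohort += [(exposed, over_65, i < n_events) for i in range(size)]

print(f"crude RD = {crude_risk_difference(cohort):.3f}")
print(f"IPW RD   = {ipw_risk_difference(cohort):.3f}")
```

Here the crude comparison suggests excess risk (a risk difference of 0.09) only because older patients are both more often exposed and more often affected, while the weighted estimate falls to zero, matching how the toy data were constructed.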

More info about AI-Powered Biomarker Discovery

Navigating the New Frontier: Implementation and Regulation of AI for Pharmacovigilance

Implementing AI for Pharmacovigilance is a complex organizational change, not a simple tech upgrade. It represents a fundamental paradigm shift from retrospective, manual report sifting to continuous, real-time, data-driven safety intelligence. Success hinges on a holistic strategy that addresses technology, process, and people.

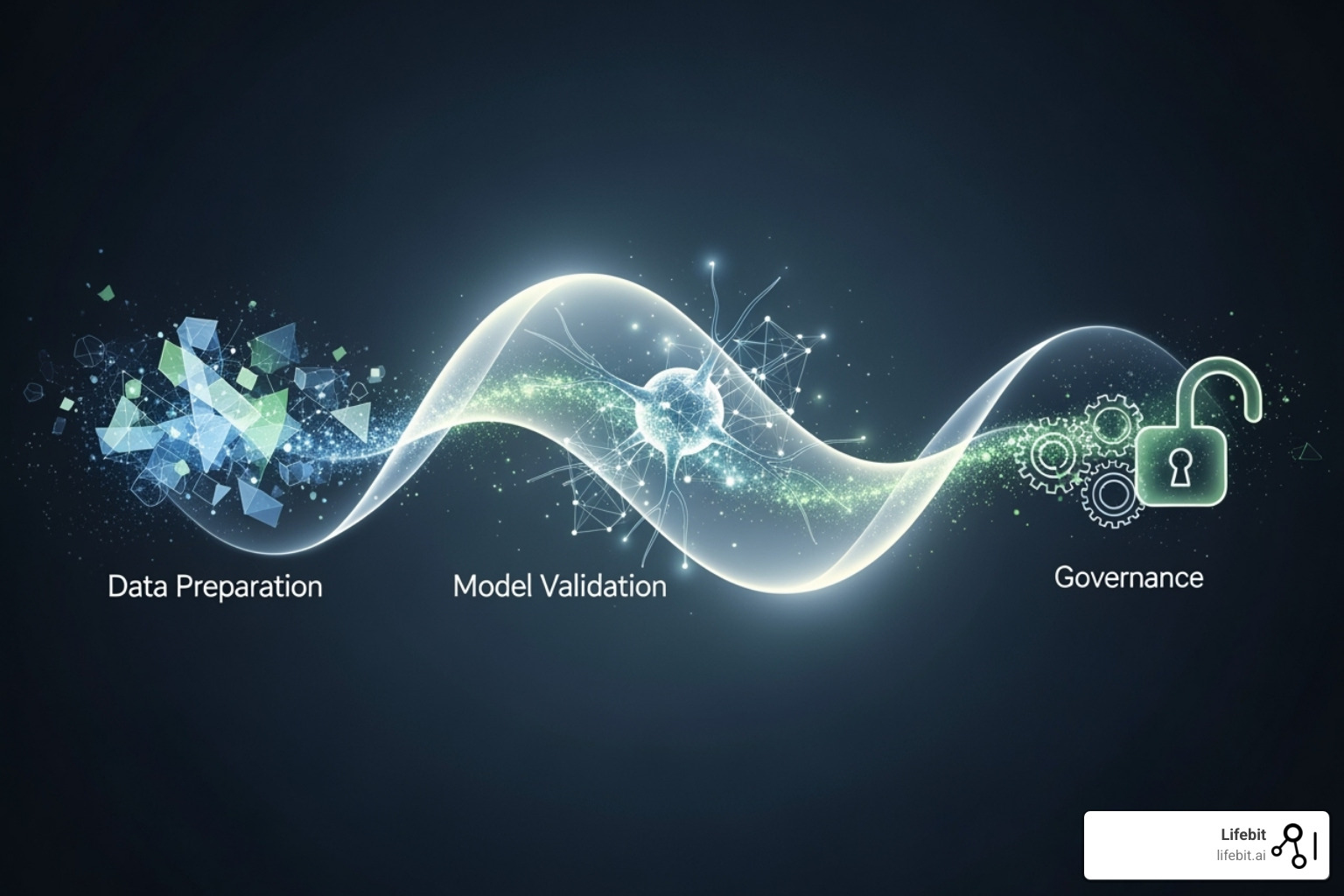

Data quality is your foundation. An AI model is only as good as the data it learns from. Messy, incomplete, or biased data will inevitably lead to flawed, unreliable, and potentially dangerous conclusions. Data standardization is a critical first step, requiring the harmonization of disparate data sources into a common data model (CDM) like the OMOP CDM. This ensures that data from an EHR in Germany can be analyzed alongside claims data from the US. Medical terminologies must also be standardized using frameworks like MedDRA for adverse events and RxNorm for drugs. Beyond standardization, model validation requires rigorous, ongoing testing against benchmark datasets, and human oversight remains essential for interpreting context, managing ambiguity, and making nuanced medical decisions. True success requires a seamless alignment between people, processes, and technology.
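The harmonization step can be sketched as a pair of source-specific mappers that emit one shared record type. The field names loosely borrow from the OMOP CDM `drug_exposure` table, but the record is simplified (OMOP uses integer `person_id` values, for example), and the source column names and concept IDs are hypothetical; real mappings come from the OHDSI standardized vocabularies.

```python
from dataclasses import dataclass
from datetime import date, datetime

# Hypothetical mapping from (source system, local drug code) to a standard concept ID.
# In a real OMOP deployment these come from the OHDSI vocabularies; these IDs are made up.
DRUG_CONCEPTS = {
    ("de_ehr", "Atorvastatin 40 mg Filmtablette"): 900001,
    ("us_claims", "ATORVA40"): 900001,
}


@dataclass(frozen=True)
class DrugExposure:
    """Simplified target record; field names borrow from OMOP's drug_exposure table."""
    person_id: str
    drug_concept_id: int
    drug_exposure_start_date: date


def lookup_concept(source: str, code: str) -> int:
    try:
        return DRUG_CONCEPTS[(source, code)]
    except KeyError:
        raise LookupError(f"unmapped code {code!r} from {source}; route to manual mapping") from None


def from_de_ehr(row: dict) -> DrugExposure:
    # German EHR extract: hypothetical column names, dates as DD.MM.YYYY.
    return DrugExposure(
        person_id=f"de:{row['patient_nr']}",
        drug_concept_id=lookup_concept("de_ehr", row["medikament"]),
        drug_exposure_start_date=datetime.strptime(row["datum"], "%d.%m.%Y").date(),
    )


def from_us_claims(row: dict) -> DrugExposure:
    # US pharmacy claim: hypothetical column names, dates as YYYYMMDD.
    return DrugExposure(
        person_id=f"us:{row['member_id']}",
        drug_concept_id=lookup_concept("us_claims", row["product_code"]),
        drug_exposure_start_date=datetime.strptime(row["fill_date"], "%Y%m%d").date(),
    )


records = [
    from_de_ehr({"patient_nr": "17", "medikament": "Atorvastatin 40 mg Filmtablette", "datum": "01.03.2024"}),
    from_us_claims({"member_id": "A9", "product_code": "ATORVA40", "fill_date": "20240305"}),
]
for r in records:
    print(r)
```

Failing loudly on unmapped codes, rather than silently dropping them, is a deliberate choice: missing exposures would bias any downstream safety analysis.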

The Regulatory Landscape: FDA and Global Guidelines

Regulators are actively working to keep pace with the rapid innovation in AI. The FDA’s Emerging Drug Safety Technology Program (EDSTP) is a key initiative designed to foster dialogue with industry experts and technology developers. The program aims to create a clear pathway for the adoption of new technologies in pharmacovigilance, with a strong emphasis on human-led governance, accountability, and transparency.

The European Medicines Agency (EMA) and other global regulators are developing similar frameworks, often in collaboration through bodies like the International Council for Harmonisation (ICH). A common thread across all regulatory thinking is the critical need for explainability. The “black box” problem, where complex AI models like deep neural networks arrive at a decision without a clear, interpretable path, is a major regulatory and clinical concern. If an AI flags a new safety signal, regulators and clinicians need to understand why.

To address this, Good Machine Learning Practice (GMLP) principles are emerging as the gold standard, guiding the entire lifecycle of an AI model from data acquisition to post-deployment monitoring. These principles emphasize that AI systems must be transparent, robust, and continuously monitored for performance degradation. The regulatory landscape is evolving to accommodate adaptive AI systems that learn over time, as outlined in the FDA’s proposed regulatory framework for AI/ML-based Software as a Medical Device (SaMD). Explainable AI (XAI) techniques are becoming essential for regulatory submissions. Methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can illuminate which features (e.g., a specific lab value, a phrase in a clinical note) most influenced a model’s output, building the trust required for adoption by healthcare professionals and patients.
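To show what a feature attribution looks like, the sketch below computes Shapley values for a linear risk score. It does not use the `shap` library; it relies on the closed form that holds for linear models when features are assumed independent, where each feature contributes its weight times its deviation from a background mean. The model, weights, and patient values are hypothetical.

```python
from statistics import fmean

# Hypothetical linear risk score (e.g. the logit of a hepatotoxicity model).
BIAS = -5.0
WEIGHTS = {"alt_u_per_l": 0.02, "age_years": 0.03, "n_concomitant_drugs": 0.1}


def score(x: dict) -> float:
    return BIAS + sum(w * x[name] for name, w in WEIGHTS.items())


def linear_shap(x: dict, background: list) -> dict:
    """Exact Shapley values for a linear model, assuming independent features.

    Each feature's contribution is weight * (value - background mean), so the
    contributions sum to score(x) minus the average score over the background data.
    """
    means = {name: fmean(row[name] for row in background) for name in WEIGHTS}
    return {name: w * (x[name] - means[name]) for name, w in WEIGHTS.items()}


background = [
    {"alt_u_per_l": 30, "age_years": 50, "n_concomitant_drugs": 2},
    {"alt_u_per_l": 40, "age_years": 60, "n_concomitant_drugs": 4},
    {"alt_u_per_l": 35, "age_years": 70, "n_concomitant_drugs": 3},
]
patient = {"alt_u_per_l": 180, "age_years": 62, "n_concomitant_drugs": 3}

contributions = linear_shap(patient, background)
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>22}: {value:+.2f}")
```

The contributions add up exactly to the difference between this patient’s score and the average background score, the additivity property that makes Shapley-based explanations easy to audit. Nonlinear models need the approximate methods provided by tools such as SHAP or LIME.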

Key Steps for Successful AI Integration

Successfully implementing AI for Pharmacovigilance requires a strategic, phased approach, not a big-bang rollout.

- Establish Robust Data Governance: This is your base camp. Before any AI model is built, you need clear, enforceable policies for data collection, storage, access, security, and usage. This involves breaking down internal data silos, establishing data stewardship roles, and creating a comprehensive data dictionary. Our federated AI platform excels here, enabling secure analysis of global biomedical data while ensuring privacy and maintaining data provenance.

- Build a Compelling Business Case: AI implementation requires significant investment. The business case must clearly articulate the return on investment (ROI) to leadership. This goes beyond simple cost reduction in case processing. It should quantify benefits like accelerated signal detection (reducing the risk of costly late-stage drug withdrawals), stronger inspection readiness, improved patient safety, and the strategic advantage of having a learning health system.

- Choose the Right Technology Stack: Your technology choices must support the entire AI lifecycle. This includes powerful natural language processing for unstructured data, a suite of machine learning libraries, and a robust data management platform. Our Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL) provide the scalable, secure, and collaborative infrastructure necessary to handle the massive datasets involved in modern pharmacovigilance.

- Start with Pilot Studies: Don’t try to boil the ocean. Begin with a well-defined pilot project with clear success metrics, such as automating a specific part of the ICSR process or monitoring social media for a single product. Pilots allow you to test the technology, refine your processes, build confidence within the organization, and generate valuable insights for larger-scale implementations.

- Invest in Change Management and Upskilling: Technology is only half the battle. The human element is critical. Implementation requires a thoughtful change management strategy to address concerns and build buy-in. This involves upskilling the existing PV workforce to work effectively with AI tools, creating new hybrid roles like “pharmacovigilance data scientist,” and fostering a culture of collaboration. The goal is to create “centaur” teams, in which human experts are augmented, not replaced, by AI, combining machine-scale processing with human critical thinking and medical judgment.

- Implement Continuous Monitoring and Validation: AI models are not static. Their performance can degrade over time as the underlying data changes (a phenomenon known as model drift). Post-deployment monitoring is crucial to ensure models continue to perform as expected. This involves continuous retraining, re-validation against new data, and a clear governance process for updating models in production.
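Drift monitoring often starts with simple distribution checks on model inputs. The sketch below computes the Population Stability Index (PSI) between a reference sample and a recent sample of one feature. The bin edges and data are illustrative, and the 0.1/0.25 cut-offs are a widely quoted rule of thumb rather than a regulatory requirement.

```python
import bisect
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a reference sample and a recent sample of one feature.

    `edges` are sorted bin boundaries; values are bucketed with bisect.
    Empty bins are floored at `eps` so the logarithm stays finite.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi, moderate=0.1, major=0.25):
    # Rule-of-thumb cut-offs, not a regulatory standard.
    if psi >= major:
        return "major shift: review and consider retraining"
    return "moderate shift: investigate" if psi >= moderate else "stable"


edges = [2.5, 4.5, 6.5]
reference = [1, 2, 3, 4, 5, 6, 7, 8] * 10   # e.g. a feature at validation time
same = [1, 2, 3, 4, 5, 6, 7, 8] * 5
shifted = [7, 8] * 40                        # the same feature after a data change
print(drift_status(population_stability_index(reference, same, edges)))
print(drift_status(population_stability_index(reference, shifted, edges)))
```

PSI only says that inputs have moved; whether accuracy has actually dropped still has to be checked against fresh, labeled cases before retraining.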

The journey toward AI for Pharmacovigilance requires patience and planning, but the destination—a world where we can predict and prevent adverse drug reactions—makes every challenge worthwhile.

More info about our Data Intelligence Platform

The Future of Vigilance: From Pharmacovigilance to Algorithmovigilance

The evolution of AI for Pharmacovigilance is a remarkable change, pushing the boundaries of what’s possible in patient safety. The future includes sophisticated causal inference models that go beyond spotting statistical correlations to rigorously assessing causation, helping to distinguish true drug effects from confounding factors. Federated learning is another game-changer, enabling AI models to learn from decentralized, global datasets without sensitive patient data ever leaving its secure, local environment. This approach is critical for building more robust and diverse models while respecting patient privacy and data sovereignty regulations.
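Federated averaging (FedAvg) is one common federated learning algorithm, and the sketch below shows its core loop on a toy problem. Each hypothetical site fits a one-variable linear model to its own data for a few gradient steps, and a coordinating server averages only the resulting parameters, weighted by site size. Production systems add secure aggregation, privacy protections, and far larger models; none of that is shown here.

```python
def local_update(w, b, xs, ys, lr=0.5, steps=5):
    """A few steps of gradient descent on mean squared error, run inside one site."""
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2 / n * sum(residuals)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(sites, rounds=100):
    """FedAvg: only model parameters leave each site; raw records never do."""
    w, b = 0.0, 0.0
    total = sum(len(xs) for xs, _ in sites)
    for _ in range(rounds):
        updates = [(local_update(w, b, xs, ys), len(xs)) for xs, ys in sites]
        # Server step: average the site models, weighted by local sample size.
        w = sum(site_w * n for (site_w, _), n in updates) / total
        b = sum(site_b * n for (_, site_b), n in updates) / total
    return w, b


# Two hypothetical hospitals whose data follow y = 2x + 1 (noise-free for clarity).
site_a = ([0.0, 0.25, 0.5], [1.0, 1.5, 2.0])
site_b = ([0.5, 0.75, 1.0, 0.1], [2.0, 2.5, 3.0, 1.2])
w, b = federated_averaging([site_a, site_b])
print(f"w={w:.4f}, b={b:.4f}")
```

With this noise-free toy data the averaged model recovers the underlying slope and intercept (2 and 1) even though neither site ever shares a patient row.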

Explainable AI (XAI) is the holy grail, and its advancement is non-negotiable for widespread clinical adoption. We envision a future where AI models not only predict an adverse event with high precision but also provide a clear, understandable rationale for their prediction. These systems will continually learn and adapt in near real-time, integrating new global health data streams from clinical trials, EHRs, and real-world evidence to become smarter and more accurate with every interaction.

Algorithmovigilance: The Next Evolution in Safety Monitoring

As AI becomes integral to healthcare, a new discipline is emerging: “algorithmovigilance.” Just as pharmacovigilance monitors drugs, algorithmovigilance monitors the safety and efficacy of AI systems. If a drug caused unexpected side effects in a specific population, we would investigate and potentially issue a warning. The same level of vigilance is required if an AI diagnostic tool or risk prediction model makes systematically incorrect predictions for a specific patient group.

Algorithmovigilance involves several key practices. Continuous post-deployment monitoring is essential, as an AI model’s performance can change when it encounters real-world data that differs from its training set. We also need robust case reporting systems for “adverse AI-system reactions,” allowing clinicians and patients to flag instances where an algorithm may have caused harm. A major challenge is dataset shift, which occurs when the statistical properties of real-world data change over time. For example, an AI model trained on pre-pandemic data might perform poorly on data from the COVID-19 era, when patient behaviors, reporting patterns, and concomitant medications changed drastically. Monitoring for such shifts and triggering model retraining when they occur is central to maintaining accuracy. The urgency is clear: the AI, Algorithmic, and Automation Incidents and Controversies (AIAAIC) repository has identified over 10,196 AI-related incidents and hazards since 2014.
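A basic check of this kind compares a model’s performance across patient groups in production. The sketch below computes sensitivity (the share of true adverse events the model caught) per group from a hypothetical monitoring log and flags groups that trail the overall figure by more than a chosen margin. The 0.10 margin, the 20-case minimum, and the group names are illustrative assumptions.

```python
from collections import defaultdict


def flag_underperforming_groups(records, max_gap=0.10, min_positives=20):
    """records: (group, predicted_positive: bool, actually_positive: bool).

    Returns overall sensitivity and the groups whose sensitivity trails it by more
    than `max_gap`. Groups with fewer than `min_positives` true cases are skipped.
    """
    detected, positives = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        if actual:  # sensitivity only looks at truly positive cases
            positives[group] += 1
            detected[group] += predicted
    overall = sum(detected.values()) / sum(positives.values())
    flagged = []
    for group in sorted(positives):
        sensitivity = detected[group] / positives[group]
        if positives[group] >= min_positives and sensitivity < overall - max_gap:
            flagged.append((group, round(sensitivity, 3)))
    return overall, flagged


# Hypothetical monitoring log: the model misses far more true events in group_b.
monitoring_log = (
    [("group_a", i < 45, True) for i in range(50)]
    + [("group_b", i < 18, True) for i in range(30)]
    + [("group_c", False, True) for _ in range(5)]
    + [("group_a", False, False) for _ in range(10)]
)
overall, flagged = flag_underperforming_groups(monitoring_log)
print(f"overall sensitivity {overall:.3f}; flagged: {flagged}")
```

`group_c` is skipped rather than flagged because five cases are too few to judge reliably; in practice such small groups still deserve targeted review.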

Insights on Algorithmovigilance

Ethical Considerations and Ensuring Fairness in AI for Pharmacovigilance

AI for Pharmacovigilance wields tremendous power, handling vast amounts of sensitive patient data and influencing the safety of medications used by millions. This power comes with an immense responsibility to uphold the highest ethical standards.

Data privacy is a foundational pillar. We pioneer privacy-enhancing technologies like federated learning, which allows AI models to learn from distributed data without centralizing it, minimizing privacy risks. Bias mitigation requires constant, proactive vigilance. AI models learn from data, and if that data reflects existing societal or historical biases, the AI will learn and potentially amplify them. For example, if training data on skin conditions is predominantly from light-skinned individuals, an AI model may be less accurate at identifying adverse dermatological reactions in patients with darker skin tones, leading to dangerous health disparities. Actively curating diverse, representative training datasets and using fairness-aware algorithms are essential to prevent these algorithmic blind spots.
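One well-known fairness-aware preprocessing technique is reweighing (Kamiran and Calders), which gives each combination of group and label the weight P(group) × P(label) / P(group, label), so that in the weighted training data the label no longer depends on group membership. The sketch uses a hypothetical, deliberately skewed dataset. Reweighing cannot invent information about an under-represented group, so collecting more representative data remains the primary fix.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Kamiran-Calders reweighing: weight(g, y) = P(g) * P(y) / P(g, y)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in joint_counts.items()
    }


def positive_rate(groups, labels, group, weights=None):
    """Share of positive labels within one group, optionally weighted."""
    pairs = [(g, y) for g, y in zip(groups, labels) if g == group]
    w = [weights[p] if weights else 1.0 for p in pairs]
    return sum(wi for wi, (_, y) in zip(w, pairs) if y == 1) / sum(w)


# Hypothetical training set: skin-tone group and whether a dermatological ADR was labelled.
groups = ["lighter"] * 80 + ["darker"] * 20
labels = [1] * 40 + [0] * 40 + [1] * 2 + [0] * 18
weights = reweighing_weights(groups, labels)

for g in ("lighter", "darker"):
    print(g, positive_rate(groups, labels, g), round(positive_rate(groups, labels, g, weights), 3))
```

After weighting, both groups show the same positive rate as the dataset overall (0.42), so a model trained on the weighted data no longer learns group membership as a proxy for the label.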

Fairness and equity are the ultimate goals, ensuring that the benefits of AI in medicine are accessible to all populations, not just a privileged few. Transparency is the mechanism to build trust, allowing patients, clinicians, and regulators to understand, question, and verify how an AI system arrives at its conclusions. A critical ethical and legal question is accountability and liability: who is responsible when an AI system fails? Is it the software developer, the hospital that implemented it, or the clinician who acted on its recommendation? Establishing clear frameworks for accountability is essential for responsible deployment and will likely involve a shared responsibility model that is clearly defined before a system is used on patients.

Our commitment is to improving patient safety and promoting rational drug use for everyone, equitably and responsibly. This is not just a technical challenge; it is a moral imperative.

Dissecting racial bias in healthcare algorithms

More info about AI for Genomics

Frequently Asked Questions about AI for Pharmacovigilance

What are the primary applications of AI in pharmacovigilance?

AI for Pharmacovigilance excels at automating the intake and processing of adverse event reports, turning weeks of manual work into hours. A powerful application is enhancing signal detection from massive datasets like EHRs, social media, and medical literature. AI spots subtle patterns across these diverse sources that traditional methods miss.

Predicting potential adverse drug reactions is where AI really shines, shifting monitoring from reactive to proactive. AI also helps identify drug-drug interactions more efficiently, categorize safety reports, and identify high-risk patient populations.

What are the main challenges in implementing AI for drug safety?

Implementing AI for Pharmacovigilance has significant challenges. The biggest is ensuring high-quality, standardized data from diverse sources. Making this data interoperable is complex.

The “black box” problem is another issue. Some AI models lack transparency, which is a concern for regulators who require explainability. Navigating the evolving regulatory landscape is also complex, as rules are still being written. Finally, there are substantial investment requirements for technology and skilled personnel, plus ongoing ethical considerations around data privacy and bias.

Will AI replace human experts in pharmacovigilance?

The answer is a resounding no. AI for Pharmacovigilance is designed to be an incredibly smart assistant, not a replacement. AI handles repetitive, data-intensive tasks like initial case processing and preliminary signal detection.

This frees up pharmacovigilance professionals to focus on what humans do best: complex case analysis, causality assessment, and strategic decision-making. The human oversight component remains critical for validation, governance, and nuanced decisions that require medical and regulatory experience. The goal is a collaborative approach where AI amplifies human intelligence.

Conclusion: A New Era of Proactive Patient Safety

AI for Pharmacovigilance is revolutionizing patient protection. Instead of waiting months to spot side effects, we can detect them in near real time and, in some cases, predict them before they occur. This profound shift from reactive to proactive safety means we can anticipate and prevent problems. AI processes millions of data points from diverse sources, uncovering patterns that would take humans years to find.

The benefits are widespread. Patients receive safer medications, providers make more informed decisions, and pharmaceutical companies identify safety signals earlier. Regulatory agencies get robust data for faster, more confident decisions.

This revolution requires the right data infrastructure. You need platforms designed for the scale, sensitivity, and complexity of global health data, ensuring top security and compliance. Our federated AI platform tackles these challenges, enabling secure, real-time access to biomedical data without compromising privacy. Its harmonization capabilities and advanced AI/ML analytics turn data into actionable insights.

The technical components work together seamlessly. Our Trusted Research Environment (TRE) provides a secure foundation, the Trusted Data Lakehouse (TDL) manages enormous datasets, and R.E.A.L. (Real-time Evidence & Analytics Layer) delivers the AI-driven surveillance that makes proactive pharmacovigilance possible.

This technology democratizes advanced drug safety monitoring, making it accessible to pharmaceutical companies, government agencies, and public health organizations alike. We are building a world where preventable medication harm becomes increasingly rare. Every signal caught early and every reaction predicted translates to people living healthier, safer lives. The future of pharmacovigilance is collaborative, intelligent, and already here.

Learn more about Real-time Adverse Drug Reaction Surveillance