Big Data Meets Biology—A Match Made in Medical Heaven

Big Data Biomedicine: 2025’s Ultimate Breakthrough

Unlocking Health Insights with Big Data Biomedicine

Big data biomedicine is about using massive amounts of health and biological information to understand diseases better and find new treatments. This field combines advanced computer power with complex medical data. It’s truly changing how we approach healthcare.

It’s defined by several key characteristics:

- Volume: We’re talking about incredibly large datasets, from thousands of patient records to billions of genetic sequences.

- Velocity: This data is generated and collected at a very fast pace, almost in real time.

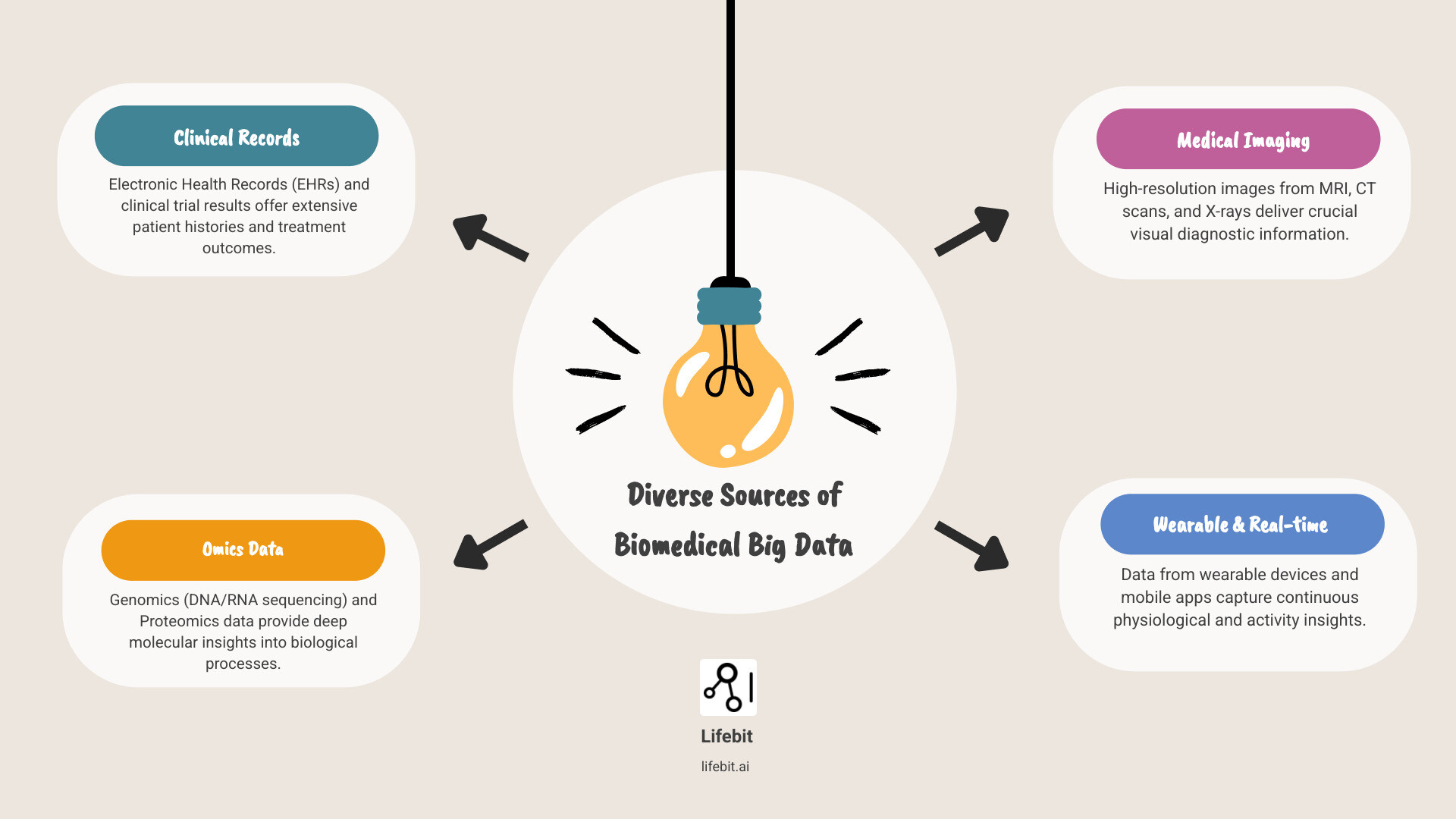

- Variety: It comes in many different forms. Think genetic codes, patient health records, medical images, and data from wearable devices.

- Veracity: Making sure this data is accurate and reliable is crucial for making good medical decisions.

The goal is to move from general treatments to highly personalized ones. This means understanding exactly what makes each person unique. It helps us predict, prevent, and cure diseases more precisely.

I’m Maria Chatzou Dunford, and my work at Lifebit focuses on leveraging big data biomedicine to transform global healthcare. With my background in computational biology, AI, and health-tech entrepreneurship, I’m passionate about making these vast datasets actionable for drug discovery and patient care.

The Problems: Navigating the Challenges of Big Data Biomedicine

For all its incredible promise, the journey into big data biomedicine isn’t without its bumps. We’re talking about colossal amounts of information, and managing it is a bit like trying to keep a herd of wild horses in a teacup. The primary challenges we face include gaining access to data, tackling tricky integration issues, navigating complex ethical considerations, and addressing a significant shortage of skilled professionals.

The Data Deluge: Integration, Cost, and Maintenance

Imagine a world where life-saving medical insights are locked away in countless digital vaults, each with its own unique key and written in a different language. This is the reality of biomedical data. One of the most formidable problems in big data biomedicine is not just accessing, but also integrating and maintaining large-scale biomedical data for research. The total costs are far too high and climbing rapidly. These costs are multifaceted, encompassing not only the raw price of cloud or on-premises storage but also the substantial expenses of data transfer, the personnel required for data curation and quality control, and the constant need for infrastructure updates to handle ever-growing datasets.

Furthermore, the challenge goes far beyond simple access. Clinical study data are rarely shared due to commercial interests and patient privacy concerns, while electronic health records (EHRs) are walled off by a labyrinth of regulations like HIPAA and GDPR. This creates “data silos”—isolated pockets of information that are incredibly difficult to combine. The integration problem is also a deep technical one. Data exists in a bewildering array of formats: genomic data in VCF or BAM files, medical images in DICOM format, and clinical data increasingly moving toward standards like FHIR. Without standardized ontologies and semantic interoperability, combining these disparate sources is like trying to assemble a puzzle with pieces from different boxes. For research to truly flourish, we need robust frameworks that facilitate the seamless integration of clinical trial results, patient health records, and multi-omic data.

In response, patient advocates are increasingly lobbying for greater access to their own health data, including genomic information, which could be a powerful catalyst for change. Concurrently, researchers, funders, and other stakeholders are actively building alternative models, such as “data commons” and biobanks. Initiatives like the UK Biobank and the NIH’s All of Us Research Program are pioneering examples, providing curated, large-scale datasets to qualified researchers. Funders are also being encouraged to incorporate resources for long-term data access and stewardship into their grants, acknowledging that data sustainability is as crucial as data generation. The culture of data sharing is evolving, but slowly. As highlighted in scientific research, fostering a more open, standardized, and collaborative approach to data is critical for advancing our collective understanding of health and disease.

Scientific research on data sharing culture

The Privacy Paradox: Ethics, Security, and Trust

When we talk about patient health records and genomic information, we’re treading on incredibly sensitive ground. The ethical considerations and challenges associated with using big data biomedicine, particularly regarding patient privacy and data security, are paramount. Medical records are not just data points; they are deeply personal narratives, walled off by stringent privacy and security regulations, and by legitimate legal concerns. The central paradox is that while sharing data is essential for medical progress, it also increases privacy risks.

The increasing availability and growth rate of biomedical information brings significant privacy challenges. Advances in IT applied to biomedicine are continuously changing the landscape of privacy. To address this, a multi-layered approach to security is essential. This starts with foundational techniques like encryption for data at rest and in transit, and robust de-identification and pseudonymization algorithms that strip away or replace personal identifiers. However, these methods alone can be vulnerable to re-identification attacks. Therefore, more advanced Privacy-Enhancing Technologies (PETs) are being explored. These include differential privacy, which adds calibrated statistical noise to query results to protect individual identities, and homomorphic encryption, a method that allows computation on encrypted data without ever decrypting it.
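To make the idea of "adding statistical noise to query results" a little more concrete, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. The cohort, the function names, and the choice of epsilon are all illustrative assumptions, not a description of any particular production system.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the scaled difference of two Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records matching `predicate`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical toy cohort of (age, has_condition) pairs.
cohort = [(34, True), (51, False), (47, True), (62, True), (29, False)]
rng = random.Random(0)
noisy = dp_count(cohort, lambda r: r[1], epsilon=0.5, rng=rng)
print(f"noisy count: {noisy:.2f}")  # the true count is 3; the noise varies per draw
```

A smaller epsilon means more noise and stronger privacy, while a larger epsilon gives more accurate answers. Real deployments also track how much of the privacy budget has been spent across repeated queries, which this sketch leaves out.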

Beyond technology, building trust requires strong governance frameworks. This means establishing clear rules for data access, usage, and oversight, often managed by independent data access committees. Security solutions for biomedical data can draw inspiration from other sectors, such as the financial industry, which has long applied rigorous standards to secure sensitive transactions. Applying similar standards is crucial to building public trust and enabling the secure flow of data for research. Good governance standards and practices are not just technical requirements; they are ethical imperatives for the responsible use of big data biomedicine.

The Talent Gap: Cultivating a New Generation of Bioinformaticians

Even with all the data in the world and the most cutting-edge technology, we can’t make progress without the right people. One of the most significant challenges facing big data biomedicine is the shortage of skilled professionals, particularly bioinformaticians. Our research reveals that the lack of attractive career paths in bioinformatics has led to a scarcity of scientists who possess a rare combination of skills: strong statistical skills, a deep biological understanding, and sophisticated computational and software engineering expertise. It’s a unique blend, like being fluent in three very different languages—the language of data, the language of life, and the language of machines.

This talent shortage can stymie the most promising products of big data. The necessary skillset now extends beyond traditional biology and statistics to include cloud computing (on platforms like AWS, GCP, and Azure), machine learning engineering (MLOps) for deploying and maintaining predictive models, and data management at scale. How can academia and industry foster better career development to address this critical gap? Research institutions are beginning to take steps, including setting up formal career tracks that reward bioinformaticians who engage in multidisciplinary collaborations and develop robust software tools. Funders are also finding ways to better evaluate contributions from bioinformaticians, acknowledging their pivotal role. Solutions must be multifaceted, involving updated university curricula, industry-academia partnerships that provide real-world experience, and the creation of more defined, rewarding career ladders within both academic and commercial research institutions. By investing in these robust training programs, we can cultivate the next generation of experts who will bridge the gap between massive datasets and medical breakthroughs.

The Engine Room: How AI is Powering Biomedical Discoveries

Now, let’s talk about the true powerhouse behind the revolution in big data biomedicine: Artificial Intelligence (AI). Think of AI, especially its clever machine learning models, not just as a fancy tool, but as the mighty engine that takes those vast, often messy, and incredibly complex datasets and turns them into something truly useful. It’s how we go beyond simply collecting information to actually understanding it, using predictive analytics to peer into the future, and generating smart, data-driven insights that can genuinely improve health outcomes.

The Role of AI in Analyzing Genetic Big Data in Biomedicine

AI truly shines when it comes to analyzing and applying all that biomedical big data. Genetic big data, in particular, is like a massive treasure chest for AI. With its ability to recognize intricate patterns within huge datasets, AI can do things that would be impossible for humans alone. Its applications are changing key areas of biomedical research:

- Drug Discovery and Development: AI is revolutionizing how we find new medicines. Machine learning models can sift through millions of molecular compounds to predict their potential effectiveness against a specific biological target, drastically shortening the initial discovery phase. AI can also predict drug-target interactions, design novel molecules from scratch, and even optimize clinical trial design by identifying the most suitable patient populations or predicting recruitment rates.

- Advanced Medical Imaging Analysis: Deep learning, a specialized part of AI, is achieving human-level or even superhuman performance in medical image analysis. In radiology, AI algorithms can detect subtle signs of tumors in CT scans or MRIs that might be missed by the human eye. In pathology, they can analyze digital slides of tissue biopsies to identify cancerous cells with incredible precision. In ophthalmology, AI is used to screen for conditions like diabetic retinopathy from retinal scans, enabling earlier detection and treatment.

- Genomics and Multi-Omics Integration: AI is indispensable for making sense of the human genome. It powers Genome-Wide Association Studies (GWAS) to identify genetic variants associated with diseases. Beyond genomics, AI excels at integrating different types of ‘omics’ data—such as proteomics (proteins), metabolomics (metabolites), and transcriptomics (RNA)—to build a holistic picture of disease biology. This multi-modal approach is key to uncovering the complex mechanisms behind conditions like cancer or neurodegenerative disorders.

At Lifebit, we deeply understand the power of combining AI with biomedical data. Our federated AI platform is specifically designed to securely tap into and analyze global biomedical and multi-omic data. With built-in capabilities for harmonization, advanced AI/ML analytics, and robust federated governance, we empower large-scale, compliant research and pharmacovigilance for biopharma, governments, and public health agencies. This means secure collaboration across diverse, hybrid data ecosystems, ensuring that valuable insights can be extracted without ever compromising data privacy. You can find out more about our federated AI platform.

From Bench to Bedside: Translating Insights to Point-of-Care

The ultimate goal of all this data collection and analysis isn’t just to find cool patterns in a lab. It’s to take those discoveries and turn them into actionable insights that directly help patients. We need to bridge the gap between research and the patient’s bedside. This means creating smart clinical decision support systems and giving medical professionals real-time evidence to guide their choices. The goal is clear: improving healthcare delivery and enabling truly personalized treatment plans.

Health systems are working hard to bring the latest treatments into clinics. They’re also building “health-care learning systems” that seamlessly connect with electronic health records (EHRs). These smart systems can sift through massive amounts of patient data to offer recommendations, pinpoint the best treatment paths, and even predict potential complications. For instance, AI models can predict the onset of sepsis in ICU patients hours before clinical symptoms appear, allowing for life-saving early intervention. Projects like CancerLinQ gather de-identified patient data to help guide treatment for cancer patients, especially when their cases are tricky. This allows doctors to use the combined experience of millions of patients to make the very best decisions for each individual.

However, bringing big data biomedicine into everyday medical practice comes with its own set of challenges. There are significant problems in regulatory approval (from bodies like the FDA and EMA), the technical difficulty of integrating new tools with legacy EHR systems, and the crucial need to build clinician trust through transparent, explainable AI (XAI). Despite these challenges, the chance to vastly improve patient care through personalized medicine programs is simply huge. By connecting cutting-edge research with what happens in the clinic, we can ensure that the amazing promise of big data turns into real, tangible benefits for patients, every single day.

Unlocking the Code: Applications of Big Data in Precision Medicine

The analysis of big data biomedicine is fundamentally changing the field of precision medicine. This isn’t just a minor adjustment; it’s a paradigm shift! Instead of a one-size-fits-all approach to treatment, precision medicine uses an individual’s unique genetic makeup, lifestyle, and environment to tailor prevention and treatment strategies. This is where big data biomedicine truly shines, enabling us to dig deeper into disease research and apply genetic insights in ways previously unimaginable.

Changing Treatment for Cancer, Neurological, and Cardiovascular Disorders

Genetic big data biomedicine is proving incredibly effective in the research and treatment of many diseases. In oncology, our understanding of cancer has been profoundly reshaped. By analyzing massive datasets, scientists have identified hundreds of oncogenes and somatic mutations. This has led to the development of targeted therapies, such as BRAF inhibitors for melanoma with specific BRAF mutations. Furthermore, the analysis of circulating tumor DNA (ctDNA) from blood samples—a technique known as liquid biopsy—allows for non-invasive cancer monitoring and early detection of recurrence. The insight that a significant portion of cancers (66.1%) may be attributed to random DNA replication errors also helps focus research efforts on early detection and intervention.

In neurology and psychiatry, big data analysis is revealing the complex genetic underpinnings of disorders like autism and Alzheimer’s disease. These conditions often have a polygenic basis, and by analyzing massive datasets, we can identify numerous risk genes and loci, such as the well-known APOE4 variant in Alzheimer’s. This sheds light on the intricate biological pathways involved, opening new avenues for therapeutic targets. Similarly, studies on insomnia have identified hundreds of risk genes, linking it to aging, metabolism, and reproduction through shared genetic factors.

In cardiovascular disease, big data is used to identify genetic risk factors for conditions like coronary artery disease and to develop polygenic risk scores that can predict an individual’s likelihood of a heart attack. AI models analyzing EHR data and real-time ECG signals can also provide early warnings for acute cardiac events.
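For readers curious about what a polygenic risk score actually computes, here is a minimal sketch. In its simplest form, the score is a weighted sum: each variant's effect size multiplied by the number of risk alleles a person carries (0, 1, or 2). The variant names and weights below are invented for illustration and do not come from any real study.

```python
def polygenic_risk_score(dosages: dict[str, int], weights: dict[str, float]) -> float:
    """Sum effect size x allele dosage over the variants in the score."""
    score = 0.0
    for variant, weight in weights.items():
        # Assumption for this sketch: an ungenotyped variant counts as 0 risk alleles.
        dosage = dosages.get(variant, 0)
        if dosage not in (0, 1, 2):
            raise ValueError(f"dosage for {variant} must be 0, 1, or 2")
        score += weight * dosage
    return score


# Hypothetical effect sizes, as might be estimated in an association study.
weights = {"variant_A": 0.12, "variant_B": -0.05, "variant_C": 0.30}
# Hypothetical individual: two risk alleles at A, one at B, none at C.
person = {"variant_A": 2, "variant_B": 1, "variant_C": 0}

print(round(polygenic_risk_score(person, weights), 2))  # 0.19
```

On its own the raw number means little. In practice it is compared against the distribution of scores in a reference population to place an individual in a risk percentile.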

Research on genetic big data applications

The Rise of Pharmacogenomics

A cornerstone application of big data biomedicine is the field of pharmacogenomics. This discipline studies how a person’s genes affect their response to drugs. The goal is to move away from trial-and-error prescribing and toward a future where medication and dosage are optimized for each individual’s genetic profile. By analyzing genomic data, clinicians can predict who will benefit from a medication, who will not respond, and who is likely to experience adverse side effects. For example, genetic testing for variants in the CYP2C9 and VKORC1 genes is now commonly used to determine the optimal starting dose for the blood thinner warfarin, reducing the risk of dangerous bleeding or clotting. Similarly, pharmacogenomic insights can guide the selection of antidepressants or statins, making treatments safer and more effective from the outset.

Understanding the Links Between Genetics, Immunity, and Physiology

Big data biomedicine also contributes to a deeper understanding of the intricate relationship between genetics, immunity, and physiology. Our bodies are complex systems, and big data allows us to unravel how our genes influence our immune responses and overall physiological functions. For instance, large-scale studies analyzing genomic data can reveal how genetic variations impact gene expression and the function of immune cells. This is the focus of immunoinformatics, a field that uses computational approaches to understand and design vaccines, personalize cancer immunotherapy, and unravel the mechanisms of autoimmune diseases like rheumatoid arthritis and lupus.

This capability is particularly exciting in areas like pan-cancer analysis, where researchers can look across many different cancer types to find shared genetic or immune system patterns. In neurological conditions, big data biomedicine has helped us understand the immune system’s role. For example, research has shed light on the role of T-cells in Alzheimer’s disease, offering new avenues for understanding and potentially treating this complex condition.

Research on T-cells in Alzheimer’s disease

Furthermore, the analysis of vast datasets can uncover subtle connections between seemingly disparate biological processes. For example, research has explored the relationship between fasting lipid levels and rare mutations, identifying links between specific gene variants and lipid levels. This holistic view, made possible by big data biomedicine, allows us to move beyond studying individual components in isolation. Instead, we can understand the interconnectedness of our biological systems. This deeper understanding is crucial for developing comprehensive strategies for disease prevention and treatment.

The Horizon: Future Frontiers and Breakthroughs

The journey of big data biomedicine is far from over. We’re standing at the edge of something extraordinary, where emerging trends and next-generation technologies are about to reshape healthcare in ways we’re only beginning to imagine. Think of it as the difference between looking at the stars with the naked eye versus peering through the Hubble telescope—we’re about to see things we never knew existed.

The future of healthcare isn’t just about treating diseases after they appear. It’s about understanding them before they even start, preventing them entirely, or fixing them at their very source. That’s the promise that big data biomedicine holds for tomorrow.

The Future of Genetic Big Data: From Gene Editing to Spatial Omics

Gene therapy and gene editing are moving from science fiction to medical reality. Technologies like CRISPR-Cas9 have demonstrated the potential to treat monogenic disorders like Gaucher disease and sickle cell anemia by directly correcting the faulty gene. This breakthrough opens doors for treating countless other genetic disorders that were once considered untreatable, though significant ethical and technical problems remain.

What makes this even more exciting are advances in sequencing. We’ve moved beyond bulk analysis to single-cell sequencing, which allows us to examine the genetic makeup of individual cells. This is like having a microscope powerful enough to read the instruction manual of each cell in a tumor. The next frontier is spatial transcriptomics, a technique that not only identifies which genes are active in a cell but also maps the cell’s precise location within a tissue. This provides an unprecedented view of the complex cellular ecosystems of tumors or the intricate architecture of the brain, helping us understand how cells interact to drive health and disease.

Of course, all this detailed genetic information needs to be stored and analyzed securely. That’s where Trusted Research Environments become crucial. These secure platforms allow researchers to work with sensitive genetic data while maintaining the highest privacy standards. It’s like having a high-security laboratory in the digital world, where groundbreaking research can happen without compromising patient privacy.

More info about Trusted Research Environments

The Promise of Federated Learning and DNA Data Storage

One of the biggest problems in big data biomedicine has been getting different datasets to “talk” to each other. Federated data analysis is changing this by bringing the analysis to the data, rather than moving sensitive patient information around. This approach enables secure collaboration between institutions worldwide while keeping sensitive information exactly where it belongs. The beauty of federated learning is that it breaks down those frustrating data silos that have held back medical research for years, which is especially critical for studying rare diseases where patient data is scarce and globally distributed. Hospitals in New York can collaborate with research centers in London, sharing insights without sharing actual patient data.
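The core idea of "bringing the analysis to the data" can be sketched in a few lines. In this simplified example, each site computes only aggregate statistics on its own records, and a coordinator combines those aggregates into a global result without ever seeing a patient-level value. The site names, values, and function names are hypothetical, and this illustrates the general pattern rather than any specific platform's implementation.

```python
from dataclasses import dataclass


@dataclass
class LocalSummary:
    """Aggregate statistics that leave a site; no row-level data is included."""
    n: int
    total: float


def summarize_locally(values: list[float]) -> LocalSummary:
    """Runs inside each institution, next to the data."""
    return LocalSummary(n=len(values), total=sum(values))


def combine(summaries: list[LocalSummary]) -> float:
    """Runs at the coordinator; sees only the per-site aggregates."""
    n = sum(s.n for s in summaries)
    if n == 0:
        raise ValueError("no records across sites")
    return sum(s.total for s in summaries) / n


# Hypothetical biomarker measurements held at two separate sites.
new_york = [1.0, 2.0, 3.0]
london = [4.0, 5.0]

summaries = [summarize_locally(new_york), summarize_locally(london)]
print(combine(summaries))  # 3.0, identical to the mean over the pooled data
```

Federated learning extends the same pattern from simple statistics to model training: sites share model updates instead of sums and counts. Small sites can still leak information through aggregates, which is why such systems are usually paired with governance controls and techniques like differential privacy.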

But as we generate more data, we face a storage crisis. Silicon-based storage is not sustainable for the exabytes of genomic data being produced. This is where DNA data storage comes in. Scientists are exploring techniques like “DNA fountain,” which could theoretically store a mind-boggling 215 petabytes of data per gram of DNA. This high-density data storage solution isn’t just about saving space—it’s about using the planet’s most ancient and durable information-storage medium to preserve our growing understanding of human health for future generations.
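To give a feel for how digital data can map onto DNA at all, here is a deliberately naive sketch that stores two bits per nucleotide. This is not the DNA Fountain method, which layers fountain codes on top for redundancy and error tolerance; it only shows the basic bits-to-bases mapping, and the mapping chosen here is an arbitrary assumption.

```python
BASES = "ACGT"  # assumed mapping: 00 -> A, 01 -> C, 10 -> G, 11 -> T


def encode(data: bytes) -> str:
    """Turn each byte into four nucleotides, most significant bits first."""
    strand = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            strand.append(BASES[(byte >> shift) & 0b11])
    return "".join(strand)


def decode(strand: str) -> bytes:
    """Invert encode(); raises ValueError on a malformed strand."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


print(encode(b"A"))  # CAAC (0x41 is 01 00 00 01 in binary)
print(decode(encode(b"genome")))  # b'genome'
```

A real scheme also has to avoid sequences that are hard to synthesize or read, such as long runs of the same base, and must recover from lost or corrupted strands.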

The Quantum Leap: A New Computing Paradigm

Looking even further ahead, the emergence of quantum computing promises to solve biological problems that are currently intractable for even the most powerful supercomputers. While classical computers process information in bits (0s or 1s), quantum computers use qubits, which can exist in a superposition of states. This allows certain quantum algorithms to work across a vast space of possibilities far more efficiently than classical methods. In biomedicine, this could revolutionize areas like drug discovery by accurately simulating the complex interactions between a drug molecule and its protein target. It could also boost the development of AI, like the protein-folding predictions of AlphaFold, leading to a much deeper understanding of biological machinery. While still in its early stages, quantum computing represents a potential leap that could redefine the boundaries of big data biomedicine.

Frequently Asked Questions about Big Data in Biomedicine

You might have some burning questions about how big data biomedicine is truly shaping healthcare. Let’s explore some of the most common ones and shed a little light on this exciting field!

What is the main goal of using big data in biomedicine?

At its heart, the main goal of big data biomedicine is incredibly exciting: it’s all about using huge, complex datasets to unlock the secrets of health and disease. Think of it like being a detective, but with an enormous magnifying glass! We analyze these vast amounts of information to uncover hidden patterns, predict what might happen next, and ultimately, develop treatments that are tailor-made for each person. This leads us to a future where healthcare is not just more effective, but also wonderfully precise. It’s about moving from a “one-size-fits-all” approach to one that truly understands you.

How is patient privacy protected when using big data for research?

This is a really important question, and it’s one we take very seriously. Protecting patient privacy is absolutely paramount when working with big data biomedicine. We achieve this through several key layers of protection. First, there are strict regulations in place, like HIPAA and GDPR, that dictate how health data can be handled. Second, techniques like data anonymization and de-identification are used. This means removing or scrambling any information that could directly identify an individual, so researchers can still learn from the data without knowing who it belongs to. Finally, and crucially, much of this research happens within highly secure platforms known as Trusted Research Environments (TREs). These are like digital fortresses that control who can access the data and what they can do with it, ensuring that sensitive information remains safe and sound while still enabling groundbreaking discoveries.

What skills are needed for a career in bioinformatics?

If you’re thinking about a career in bioinformatics, you’re looking at a truly fascinating field! It’s a role that requires a very special mix of talents. To succeed, you’ll need strong statistical skills to make sense of all those numbers and patterns, and excellent computational skills to work with powerful tools and algorithms. But it’s not just about the tech; you also need a deep understanding of biology and genetics. Why? Because you’re not just crunching numbers; you’re interpreting the language of life itself. This unique combination allows bioinformaticians to bridge the gap between massive datasets and meaningful biological insights, making them indispensable in big data biomedicine.

Conclusion: A New Era of Data-Driven Healthcare

Phew! We’ve covered a lot of ground, haven’t we? Our journey through big data biomedicine has shown us a landscape full of both incredible promise and real problems. We’ve seen how it’s ready to totally change how we fight diseases like cancer and understand tricky neurological conditions. We’ve also glimpsed how it’s uncovering the intricate relationships between our genes, our immune systems, and our bodies.

But let’s be honest, it hasn’t been all smooth sailing. We’ve confronted the tough stuff: the high costs and headaches of getting and keeping all that data, the huge need for super strong privacy and security, and yes, that ongoing shortage of brilliant bioinformaticians. These are big problems!

Yet, here’s the exciting part: for every challenge, smart solutions are popping up. Think of Artificial Intelligence and machine learning as the powerful engines, tirelessly sifting through mountains of data to find those precious insights. This is how “precision medicine” is becoming a real thing, giving us tailored treatments for each unique person. And looking ahead, technologies like gene therapy, single-cell sequencing, federated learning, and even storing data in DNA itself are ready to blow our minds and push boundaries we never thought possible.

So, this new era of big data biomedicine isn’t just about collecting more data. It’s about getting smarter with the data we have. It’s about using this flood of information to predict, prevent, and cure illnesses with incredible accuracy. This massive shift is reshaping healthcare, guiding us toward a future where medical decisions are truly driven by solid data and tailored to every single individual.

At Lifebit, we’re incredibly proud to be right at the heart of this revolution. We provide the secure, cutting-edge platforms that help researchers and healthcare organizations worldwide unlock the full potential of big data biomedicine. We truly believe that by connecting data securely and encouraging collaboration, we can speed up drug discovery, make patient care better than ever, and ultimately, power the future of medicine for everyone.