The Heart of Research: Understanding Clinical Data Management Systems

Stop Wasting Millions on Failed Trials: The Data System You’re Ignoring

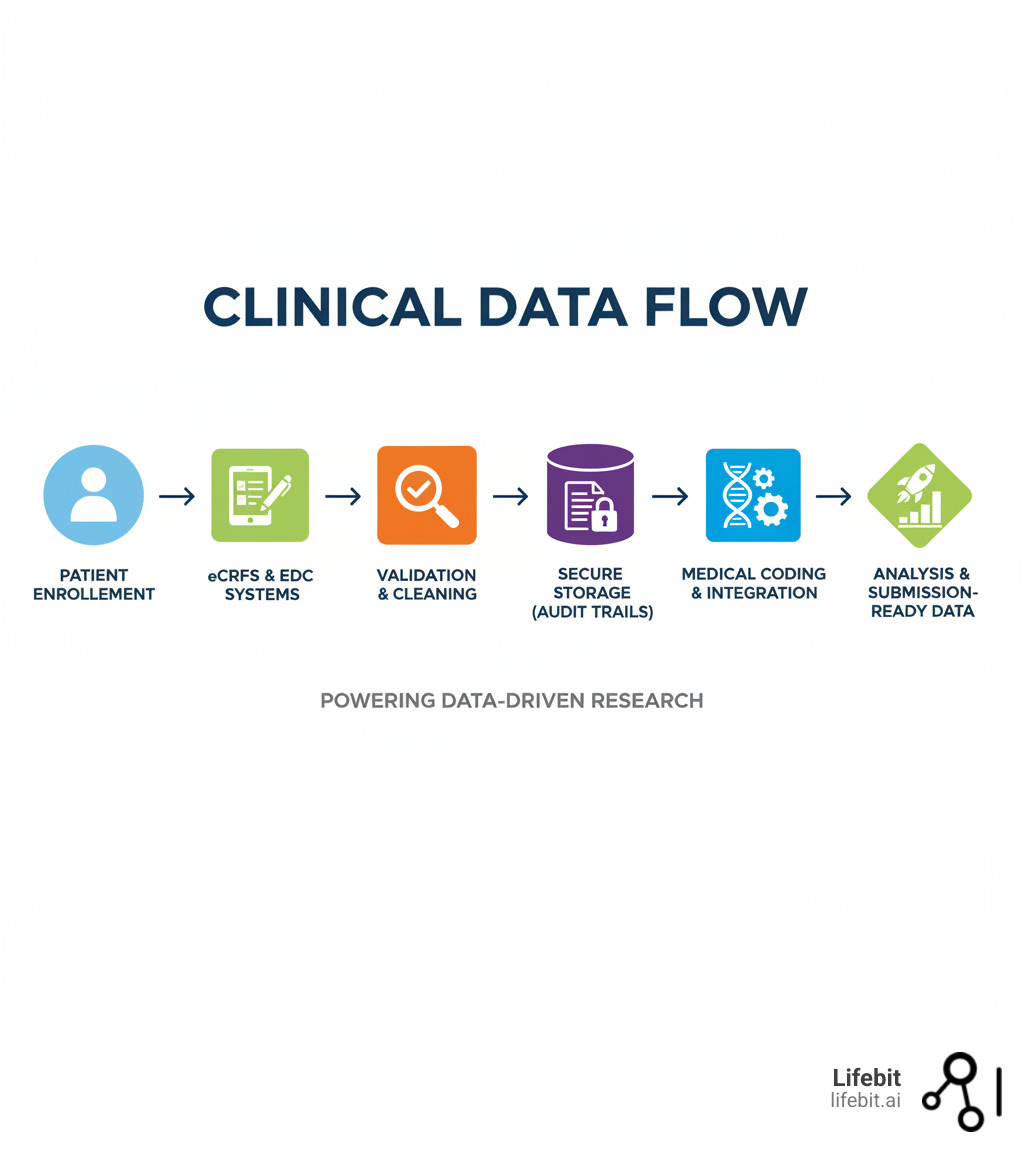

A Clinical data management system (CDMS) is specialized software that acts as the single source of truth for a clinical trial. It’s designed to capture, validate, store, and manage all study data, ensuring it’s accurate, complete, and ready for regulatory submission.

A CDMS is essential for:

- Collecting data from sites, patients, and labs.

- Validating and cleaning data with automated checks and query management.

- Storing data securely with full audit trails.

- Generating reports for analysis and regulatory bodies.

- Ensuring compliance with FDA, GCP, HIPAA, and GDPR standards.

This system is the daily workspace for clinical data managers, database programmers, research associates, biostatisticians, and regulatory affairs teams.

Without a robust CDMS, trials face massive risks: data errors, delayed submissions, regulatory violations, and wasted resources. Many organizations still struggle with siloed data and poor integration, causing trials to take longer and cost more. Modern trials generate complex data from EHRs, wearables, and genomics labs. Managing this without a purpose-built system is like running a hospital without medical records—it simply doesn’t work.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. For 15 years, I’ve helped public sector and pharma organizations build secure, scalable Clinical data management systems that power data-driven drug discovery. Let’s break down why these systems are essential for the future of research.

Your Trial’s Mission Control: What a CDMS Actually Does

Imagine managing thousands of patient records, lab results, and adverse event reports across dozens of sites, ensuring every data point is accurate and traceable for FDA review. This is why a Clinical data management system exists.

A CDMS is the mission control for your study. Every piece of information—from screening to final follow-up—flows through this system to be captured, validated, and prepared for analysis. Its primary job is to protect data integrity, ensuring every data point is accurate, complete, and reliable. When patient safety and drug approvals are on the line, there is no room for error.

A well-designed CDMS streamlines trial operations while ensuring compliance with FDA 21 CFR Part 11, GCP, HIPAA, and GDPR. It protects sensitive patient information with robust security and full audit trails. The right system can make or break a trial, leading to faster timelines, better decisions, and more reliable evidence. Learn more about the fundamentals in our Clinical Research Ultimate Guide.

The Teams Who Live and Breathe in Your CDMS

A CDMS is a collaboration hub for multiple teams:

- Clinical Data Managers design the database, build validation rules, and oversee data cleaning, acting as guardians of data quality.

- Database Programmers build the trial database and program the edit checks that catch data issues.

- Clinical Research Associates (CRAs) work with sites to review data and resolve discrepancies.

- Biostatisticians extract clean, locked data to perform the statistical analysis that determines a treatment’s efficacy.

- Regulatory Affairs Professionals use the CDMS to generate compliant reports and documentation for submissions.

- Study Investigators enter patient data and respond to queries through the system’s data capture interface.

Stop Wasting Time and Money: Why a Centralized CDMS is Your Only Option

Centralizing your clinical data management makes trials faster, cleaner, and cheaper. A centralized Clinical data management system reduces errors by standardizing data capture across all sites. Automated validation catches mistakes in real time, with some modern systems achieving 50% faster data cleaning.

This automation leads to faster trial timelines. With streamlined data capture and cleaning, you shorten the path to database lock. Studies can be built 50% faster, and mid-study changes that once took weeks now take days. For companies racing to bring treatments to market, these time savings are invaluable.

The cost savings are significant. Less manual work means fewer staff hours and less time spent fixing mistakes. Organizations report major decreases in programming cycle times and minimal budget impact for daily data transfers when using modern data science tools.

Most importantly, centralization enables better decision-making. With real-time access to high-quality data from a single source, teams can spot safety signals early and make strategic decisions backed by solid evidence. Everyone works from the same version of the truth, with clean, reliable data available when they need it. Find out how modern platforms approach this in our Clinical Data Management Platform guide.

The Engine Room: Core CDMS Functions That Prevent Trial Failure

Think of a Clinical data management system as the engine of your clinical trial. It’s not just a storage locker; it’s a sophisticated system of interconnected parts working to ensure every piece of information is captured correctly, validated thoroughly, and ready for analysis.

These systems handle everything from data capture and validation to integration, reporting, medical coding, and audit trails. When all components work together, you get what every trial needs: clean, reliable, and auditable data that regulators trust.

Drowning in Paper? How EDC Slashes Errors and Accelerates Trials

The old way of managing trial data was slow and error-prone. Investigators used paper Case Report Forms (CRFs), which were mailed and manually entered twice—a process called “double data entry.” It was expensive and inefficient.

Electronic Data Capture (EDC) systems changed everything. Investigators now enter data directly into electronic Case Report Forms (eCRFs) via a web interface. Data flows into the Clinical data management system in real time, with built-in validation rules that catch errors at the point of entry. For example, the system can flag an impossible blood pressure reading or a missing required field instantly.
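To make point-of-entry validation concrete, here is a minimal Python sketch of an eCRF field definition with a required flag and a plausible range. The `FieldSpec` class, the `check_entry` function, and the 60 to 250 mmHg systolic limits are illustrative assumptions, not the configuration language of any particular EDC product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FieldSpec:
    """Hypothetical eCRF field definition with point-of-entry rules."""
    name: str
    required: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


def check_entry(spec: FieldSpec, value: Optional[float]) -> List[str]:
    """Return validation messages for one entered value; empty means it passed."""
    if value is None:
        # Missing-field check: only an error if the field is required.
        return [f"{spec.name}: required field is missing"] if spec.required else []
    problems = []
    # Range check against plausible limits set in the study build.
    if spec.min_value is not None and value < spec.min_value:
        problems.append(f"{spec.name}: {value} is below {spec.min_value}")
    if spec.max_value is not None and value > spec.max_value:
        problems.append(f"{spec.name}: {value} is above {spec.max_value}")
    return problems


# Illustrative limits only; real limits come from the protocol and validation plan.
systolic_bp = FieldSpec("SYSBP", required=True, min_value=60, max_value=250)

print(check_entry(systolic_bp, 120))   # []
print(check_entry(systolic_bp, 1200))  # ['SYSBP: 1200 is above 250']
print(check_entry(systolic_bp, None))  # ['SYSBP: required field is missing']
```

In a real EDC system these rules are configured during the study build and the messages appear on the form as the user types; the sketch only shows the underlying logic.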

The difference is dramatic:

| Feature | Paper-Based Data Capture | Electronic Data Capture (EDC) |
|---|---|---|
| Speed | Slow (manual entry, transport) | Fast (real-time entry) |
| Cost | High (printing, shipping, staff) | Lower (reduced manual labor) |
| Error Rate | Higher (transcription errors) | Lower (built-in validation) |
| Compliance | Requires extensive paper trails | Digital audit trails, electronic signatures |
| Accessibility | Limited (physical forms) | High (web-based, remote access) |

Switching from paper to EDC can cut data cleaning time in half, accelerating market access for life-saving therapies.

Flawed Data, Failed Trials: The Unbreakable Rules of CDMS Accuracy

Data validation, cleaning, and quality assurance are the bedrock of any Clinical data management system. This multi-layered process ensures the data submitted to regulatory authorities is bulletproof. Flawed data doesn’t just delay trials—it can sink them.

- Automated Edit Checks: These are the system’s first line of defense, programmed to run in real time as data is entered. They instantly flag errors, preventing bad data from polluting the database. Common types include:

    - Range Checks: Ensuring a value falls within a plausible range (e.g., an adult’s body temperature is between 95°F and 105°F).

    - Consistency Checks: Verifying that data points are logical in relation to each other (e.g., a patient’s date of death cannot be before their date of diagnosis, or a procedure date cannot be before the consent date).

    - Format Checks: Confirming data is in the correct format (e.g., dates are DD-MMM-YYYY).

    - Uniqueness Checks: Ensuring a value is unique where required (e.g., patient ID).

- Query Management (Discrepancy Management): When an edit check flags an issue, the CDMS initiates a formal query management workflow. This isn’t just about sending an email; it’s a fully auditable process. The system generates a query tied to the specific data point, notifies the responsible user at the clinical site (e.g., the investigator or study coordinator, often with support from the CRA), and tracks the query’s status. The site user must then investigate the discrepancy, provide a correction or clarification directly in the system, and formally respond. A data manager then reviews the response and either accepts the resolution, closing the query, or re-queries for more information. This closed-loop process ensures every single data anomaly is addressed and documented.

- Manual Data Reviews: While automation is powerful, experienced data managers are irreplaceable. They perform manual reviews to identify subtle patterns and inconsistencies that automated checks might miss, such as a site consistently reporting vital signs at the exact same time for every patient, which could indicate a process issue.

- Source Data Verification (SDV): This process involves comparing a percentage of the data entered into the CDMS against the original source documents at the clinical site (e.g., patient charts, lab reports). While 100% SDV was once common, modern risk-based approaches focus verification efforts on the most critical data points, improving efficiency without compromising quality or data integrity.
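The edit check types listed above can be sketched as plain functions over entered records. This Python example assumes each record is a dictionary with hypothetical `patient_id`, `consent_date`, and `procedure_date` keys. It runs a format check (DD-MMM-YYYY), a consistency check (a procedure cannot precede consent), and a uniqueness check on patient ID, returning findings that would each open a query.

```python
from collections import Counter
from datetime import datetime

# DD-MMM-YYYY, e.g. 05-MAR-2024 (month abbreviations from the default C locale).
DATE_FORMAT = "%d-%b-%Y"


def parse_date(text):
    """Format check helper: a datetime if text parses as DD-MMM-YYYY, else None."""
    try:
        return datetime.strptime(text, DATE_FORMAT)
    except ValueError:
        return None


def run_edit_checks(records):
    """Return (patient_id, message) findings; each would open a query."""
    findings = []
    id_counts = Counter(r["patient_id"] for r in records)
    for r in records:
        pid = r["patient_id"]
        # Uniqueness check: a patient ID may appear only once.
        if id_counts[pid] > 1:
            findings.append((pid, "duplicate patient ID"))
        consent = parse_date(r["consent_date"])
        procedure = parse_date(r["procedure_date"])
        # Format checks on both dates.
        if consent is None:
            findings.append((pid, f"consent_date {r['consent_date']!r} is not DD-MMM-YYYY"))
        if procedure is None:
            findings.append((pid, f"procedure_date {r['procedure_date']!r} is not DD-MMM-YYYY"))
        # Consistency check: a procedure cannot precede consent.
        if consent is not None and procedure is not None and procedure < consent:
            findings.append((pid, "procedure date is before consent date"))
    return findings


# Hypothetical records for illustration.
records = [
    {"patient_id": "001", "consent_date": "05-MAR-2024", "procedure_date": "12-MAR-2024"},
    {"patient_id": "002", "consent_date": "10-MAR-2024", "procedure_date": "01-MAR-2024"},
    {"patient_id": "002", "consent_date": "2024-03-11", "procedure_date": "15-MAR-2024"},
]
for pid, message in run_edit_checks(records):
    print(pid, message)
```

Real CDMS platforms express these rules in their own edit-check configuration. The sketch only illustrates the shape of each check type.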

Beyond the Basics: The Must-Have Tools Your CDMS Needs to Win

A modern Clinical data management system is an ecosystem of specialized tools built around a central database management core. Key components include:

- Data Cleaning and Validation Tools: The engine for monitoring errors and managing the query lifecycle as described above.

- Data Integration Capabilities: The ability to pull in data from diverse external sources via APIs or file transfers. This includes central lab results, imaging data from radiology centers, genomic data files, and real-time streams from patient wearables. The CDMS must be able to map and reconcile this external data with the data collected in the eCRF.

- Reporting and Analytics Tools: Beyond simple data listings, modern CDMS reporting tools offer customizable dashboards that visualize key performance indicators (KPIs) in real time. These can include patient enrollment rates by site, query aging reports (how long queries remain open), and data entry timeliness. This allows for proactive trial management rather than reactive problem-solving.

- Audit Trails: A non-negotiable regulatory requirement. The CDMS must create an unchangeable, computer-generated, time-stamped record of every data creation, modification, or deletion.

- Medical Coding: This process is critical for safety analysis and regulatory submission. For example, if one site reports ‘headache’ and another reports ‘cephalalgia’, medical coding using a standardized dictionary like MedDRA (for adverse events) or WHODrug (for medications) classifies both under the same preferred term. This allows biostatisticians to accurately calculate the incidence of specific events across the entire study population.

- Integration with CTMS and Lab Systems: Seamless connection to a Clinical Trial Management System (CTMS) provides a holistic view of trial operations, linking data quality metrics with site performance and timelines. Integration with Laboratory Information Management Systems (LIMS) automates the import of lab results, reducing manual entry and transcription errors.

- User-Friendly Dashboards: Visual, intuitive dashboards provide all stakeholders—from CRAs to project managers—with real-time visibility into data quality, enrollment progress, and other key trial metrics, enabling data-driven decision-making.
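Medical coding can be pictured as a dictionary lookup followed by counting. The sketch below uses a tiny, hypothetical stand-in dictionary. Real MedDRA and WHODrug content is licensed and far larger, with a multi-level hierarchy and numeric codes. It shows how ‘headache’ and ‘cephalalgia’ collapse to one preferred term, so that incidence can be counted per subject. Terms that do not match are set aside for manual coding.

```python
# Hypothetical stand-in for a licensed dictionary such as MedDRA. Real
# dictionaries map verbatim terms through a multi-level hierarchy with numeric
# codes; this toy version maps a normalized verbatim term to a preferred term.
TOY_DICTIONARY = {
    "headache": "Headache",
    "cephalalgia": "Headache",
    "head pain": "Headache",
    "nausea": "Nausea",
    "feeling sick": "Nausea",
}


def code_term(verbatim):
    """Return the preferred term, or None if the term needs manual coding."""
    return TOY_DICTIONARY.get(verbatim.strip().lower())


def incidence_by_term(events):
    """Count distinct subjects per preferred term from (subject_id, verbatim) pairs."""
    subjects_by_term = {}
    uncoded = []
    for subject_id, verbatim in events:
        term = code_term(verbatim)
        if term is None:
            uncoded.append(verbatim)  # route to a human coder
            continue
        subjects_by_term.setdefault(term, set()).add(subject_id)
    counts = {term: len(ids) for term, ids in subjects_by_term.items()}
    return counts, uncoded


events = [
    ("S01", "Headache"),      # site 1 wording
    ("S02", "Cephalalgia"),   # site 2 wording, same concept
    ("S02", "head pain"),     # same subject again, counted once
    ("S03", "Dizzy spells"),  # not in the toy dictionary
]
counts, uncoded = incidence_by_term(events)
print(counts)   # {'Headache': 2}
print(uncoded)  # ['Dizzy spells']
```

Counting distinct subjects rather than raw reports mirrors how incidence is usually expressed: the proportion of participants who experienced an event.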

When these components work together seamlessly, a CDMS transforms data into actionable insights.
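The query management workflow and the query aging reports described earlier can be modeled as a small state machine. This Python sketch assumes a simplified lifecycle: a query is open, answered, or closed, and a re-query sends an answered query back to open. It also assumes an illustrative 14-day aging threshold. `Query`, `aging_report`, and the field reference format are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Simplified, hypothetical workflow: the site answers an open query; the data
# manager then closes it or re-queries (sending it back to open).
TRANSITIONS = {
    "open": {"answered"},
    "answered": {"closed", "open"},
    "closed": set(),
}


@dataclass
class Query:
    query_id: str
    field_ref: str  # the specific data point this query is tied to
    opened_at: datetime
    status: str = "open"
    history: list = field(default_factory=list)

    def move_to(self, new_status, user, when, note):
        """Apply an allowed transition and record who did it, when, and why."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.query_id}: cannot go from {self.status} to {new_status}")
        self.history.append((when, user, self.status, new_status, note))
        self.status = new_status

    def age_days(self, now):
        """Whole days since the query was opened."""
        return (now - self.opened_at).days


def aging_report(queries, now, threshold_days=14):
    """IDs of unresolved queries open longer than threshold_days (illustrative default)."""
    return [q.query_id for q in queries
            if q.status != "closed" and q.age_days(now) > threshold_days]


q1 = Query("Q-001", "SUBJ-002/VS/SYSBP", datetime(2024, 3, 1))
q1.move_to("answered", "site_coordinator", datetime(2024, 3, 4), "Transcription error, corrected to 120")
q1.move_to("closed", "data_manager", datetime(2024, 3, 5), "Correction accepted")
q2 = Query("Q-002", "SUBJ-007/AE/AESTDTC", datetime(2024, 3, 2))

print(aging_report([q1, q2], now=datetime(2024, 3, 20)))  # ['Q-002']
```

Keeping every transition in `history` is what makes the loop auditable. In this simplified model, a closed query cannot be reopened.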

From Launch to Lock: How a CDMS Manages Your Trial Lifecycle

A clinical trial is a long, carefully orchestrated journey. Throughout this lifecycle, a Clinical data management system acts as the central hub, turning potential chaos into controlled, high-quality data collection. Let’s walk through the three critical stages where a CDMS proves its worth.

Stage 1: Launch Faster and Smarter with a Solid Start-Up

Before patient enrollment, a well-configured CDMS can shave weeks or months off your start-up timeline. Key activities include:

- Protocol Review: The data management team analyzes the study protocol to inform all subsequent design and build decisions.

- eCRF Design: The protocol is transformed into intuitive electronic forms for data capture, balancing comprehensiveness with user-friendliness.

- Database Build: The technical architecture is built within the CDMS, defining variables, data types, and relationships.

- User Acceptance Testing (UAT): All stakeholders test the system to find and fix any confusing workflows or errors before the trial goes live.

- Edit Check Programming: Automated validation rules are programmed to catch bad data at the point of entry.

- System Validation: The entire CDMS configuration is rigorously validated to ensure it meets regulatory requirements.

- Data Management Plan (DMP): This is the master blueprint for data handling. This living document, often over 50 pages long, details everything from the roles and responsibilities of the data management team to the specific procedures for data entry, validation, quality control, and database lock. Key sections include the data flow diagram, the data validation plan (listing all programmed edit checks), procedures for handling external data, and the final archiving strategy. A well-written DMP is a critical reference for the entire study team and auditors, ensuring consistency and compliance. A poorly written one leads to ambiguity, process deviations, and significant regulatory risk.

Stage 2: Gain Real-Time Control During Study Conduct

Once patients enroll, the CDMS manages the constant flow of information while maintaining quality and compliance. This is the most data-intensive stage.

- Live Data Entry: Site staff enter patient data into eCRFs, receiving immediate feedback from built-in validation checks.

- Ongoing Data Cleaning: Data managers proactively monitor incoming data, running checks to identify errors or site-specific training issues early.

- Discrepancy Management: The CDMS tracks all queries from generation to resolution, ensuring a complete audit trail for every data issue.

- Medical Coding: Adverse events and medications are coded continuously using standard dictionaries (MedDRA, WHODrug) to ensure consistency.

- External Data Reconciliation: The CDMS imports and reconciles data from central labs, imaging centers, and wearables with eCRF data.

- SAE Reconciliation: This is a critical, high-stakes process. A trial’s safety database (which handles expedited reporting of serious events to regulators) and its clinical database are typically separate systems. Regulators require that all Serious Adverse Events recorded in the clinical database (from eCRFs) perfectly match the events in the safety database. Discrepancies can trigger major regulatory findings. The CDMS facilitates this by generating reconciliation reports that highlight differences in key fields like event terms, dates, severity, and causality assessments, allowing data and safety teams to investigate and resolve them systematically before database lock.
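SAE reconciliation boils down to a keyed comparison between two extracts. The sketch assumes that the clinical and safety databases can both export SAEs as dictionaries keyed by a hypothetical (subject ID, event ID) pair. It compares the fields named above: event term, onset date, severity, and causality.

```python
# Fields compared during reconciliation, as named in the text.
KEY_FIELDS = ("event_term", "onset_date", "severity", "causality")


def reconcile_saes(clinical, safety):
    """Compare SAE extracts keyed by (subject_id, event_id); return discrepancy lines."""
    report = []
    for key in sorted(clinical.keys() | safety.keys()):
        label = "/".join(key)
        if key not in safety:
            report.append(f"{label}: in clinical database only")
        elif key not in clinical:
            report.append(f"{label}: in safety database only")
        else:
            for name in KEY_FIELDS:
                c_val, s_val = clinical[key].get(name), safety[key].get(name)
                if c_val != s_val:
                    report.append(f"{label}: {name} differs (clinical={c_val!r}, safety={s_val!r})")
    return report


# Hypothetical extracts from the two systems.
clinical = {
    ("S01", "SAE1"): {"event_term": "Myocardial infarction", "onset_date": "2024-03-02",
                      "severity": "Severe", "causality": "Not related"},
    ("S02", "SAE1"): {"event_term": "Pneumonia", "onset_date": "2024-04-10",
                      "severity": "Moderate", "causality": "Possibly related"},
}
safety = {
    ("S01", "SAE1"): {"event_term": "Myocardial infarction", "onset_date": "2024-03-03",
                      "severity": "Severe", "causality": "Not related"},
    ("S03", "SAE1"): {"event_term": "Syncope", "onset_date": "2024-05-01",
                      "severity": "Mild", "causality": "Not related"},
}
for line in reconcile_saes(clinical, safety):
    print(line)
```

In practice the two systems rarely share a clean event key, so matching events often needs human review. The sketch assumes such a key already exists.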


Stage 3: Secure Your Success with a Flawless Close-Out

The final phase ensures your data is perfect for submission. Rushing this phase can undermine years of work.

- Final Data Validation: An exhaustive review is conducted to ensure all queries are closed and all data is complete and accurate.

- Quality Control Checks: Independent reviewers verify that all cleaning activities and documentation are complete and correct.

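- Database Lock: This formal, tightly controlled step freezes the database, creating a fixed snapshot for analysis. The CDMS technically enforces the lock by blocking further edits; reopening a locked database requires a documented, authorized unlock procedure.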

- Data Extraction for Analysis: The clean, locked data is exported for biostatisticians to perform efficacy and safety analyses.

- Secure Data Archiving: All trial data, audit trails, and documentation are securely archived for long-term retention (often 15+ years) as required by regulators.
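The lock rule can be sketched as a store that refuses writes once locked and that only locks when no queries remain open, matching the final validation step above. `StudyDatabase` and its methods are hypothetical. A real CDMS enforces the lock through permissions and audited procedures rather than an in-memory flag.

```python
from types import MappingProxyType


class StudyDatabase:
    """Hypothetical in-memory store that refuses writes after database lock."""

    def __init__(self):
        self._data = {}
        self._locked = False

    def write(self, key, value):
        if self._locked:
            raise PermissionError("database is locked; changes need a formal unlock procedure")
        self._data[key] = value

    def lock(self, open_queries):
        """Lock only when no queries remain open; return a read-only snapshot."""
        if open_queries:
            raise RuntimeError(f"{open_queries} queries still open; resolve them before lock")
        self._locked = True
        # Copy, then wrap: the snapshot cannot be modified through this view.
        return MappingProxyType(dict(self._data))


db = StudyDatabase()
db.write(("S01", "SYSBP"), 120)
snapshot = db.lock(open_queries=0)
print(snapshot[("S01", "SYSBP")])  # 120

try:
    db.write(("S01", "SYSBP"), 130)
except PermissionError as err:
    print("refused:", err)
```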

Avoid Million-Dollar Fines: Your Guide to CDMS Compliance

In the highly regulated world of clinical research, compliance and data standardization are non-negotiable. A Clinical data management system is your first line of defense, protecting patient privacy, ensuring data traceability, and aligning your trial with global standards. A single misstep can derail years of work and waste millions.

Your Roadmap to Ironclad Regulatory Compliance

A properly designed CDMS is a compliance partner, built to meet exacting requirements from global regulatory bodies.

- FDA 21 CFR Part 11: This US regulation sets the requirements for trustworthy electronic records and electronic signatures. A compliant CDMS provides secure, computer-generated, time-stamped audit trails that record the ‘who, what, when, and why’ of every data change. This means if a value is corrected, the system logs the original value, the new value, the user who made the change, and the date and time, and, in line with GCP expectations, captures a reason for the change. For electronic signatures (e.g., an investigator signing off on an eCRF page), the system must link the signature to its specific record so it cannot be copied or transferred. Users re-authenticate with their unique credentials (for example, username and password) at the time of signing, which is what allows the electronic signature to serve as the legally binding equivalent of a handwritten one.

- Good Clinical Practice (GCP): A CDMS supports GCP by maintaining data accuracy and consistency. Every validation check and query resolution is documented, creating an unbreakable chain of evidence that demonstrates adherence to the protocol and data quality standards.

- HIPAA: For U.S. trials, the Health Insurance Portability and Accountability Act governs how covered entities, such as hospitals and clinics, use and disclose protected health information (PHI). A CDMS handling PHI must have stringent controls like encryption (both in transit and at rest) and role-based access, ensuring only authorized personnel can view sensitive data.

- GDPR: For trials in the EU, the General Data Protection Regulation demands principles like data minimization and proper consent management. A compliant CDMS builds these privacy protections into its core architecture, enabling features like data pseudonymization and supporting a patient’s right to be forgotten where applicable.
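The audit trail requirements above can be sketched as an append-only log. In this Python example, each entry captures the user, the timestamp, the old and new values, and a mandatory reason for the change. Chaining entries with SHA-256 hashes so that later tampering becomes detectable is an added design choice for illustration; Part 11 does not prescribe it. `AuditTrail` and its methods are hypothetical names.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """SHA-256 over a canonical JSON encoding of an entry (excluding its hash)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Hypothetical append-only change log with hash-chained entries."""

    def __init__(self):
        self._entries = []

    def record_change(self, user, record_id, field_name, old_value, new_value, reason):
        # The "why" of the change is mandatory.
        if not reason or not reason.strip():
            raise ValueError("a reason for change is required")
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "record_id": record_id,
            "field": field_name,
            "old_value": old_value,
            "new_value": new_value,
            "reason": reason,
            "prev_hash": self._entries[-1]["hash"] if self._entries else "",
        }
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return dict(entry)  # a copy, so callers cannot edit the stored entry

    def verify(self):
        """Recompute the chain; False means a stored entry was altered after writing."""
        prev_hash = ""
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record_change("investigator_01", "SUBJ-002/VS", "SYSBP", 1200, 120,
                    "Transcription error: extra zero")
print(trail.verify())  # True
trail._entries[0]["new_value"] = 130  # simulate tampering with a stored entry
print(trail.verify())  # False
```

Electronic signatures and re-authentication are deliberately left out; the sketch covers only the change log.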

A well-designed CDMS makes compliance nearly automatic, giving you confidence that your trial data will withstand the most rigorous regulatory review.

Speak the Global Language: Why CDISC is Non-Negotiable

Imagine trying to analyze data where everyone uses a different format. The Clinical Data Interchange Standards Consortium (CDISC) solved this by creating a universal language for clinical trial data, which is now mandated for submissions by agencies like the FDA and PMDA (Japan).

- CDASH (Clinical Data Acquisition Standards Harmonization): This standardizes how data is collected in eCRFs. To make this concrete, consider a simple data point: a patient’s sex. Without CDASH, one site might enter ‘Male’, another ‘M’, and a third ‘1’. This creates a data cleaning nightmare. CDASH specifies a standard collection format and controlled terms, ensuring everyone enters the data consistently from the start.

- SDTM (Study Data Tabulation Model): This provides a standard structure for organizing and formatting trial data for submission. Following the example above, SDTM provides a standard domain (‘DM’ for Demographics), a standard variable name (‘SEX’), and controlled terminology (‘M’, ‘F’, ‘U’). This seemingly small standardization, when applied across thousands of variables, dramatically accelerates a regulator’s ability to review a new drug application. It allows them to pool data from multiple studies for meta-analysis and apply standardized analysis tools.

- ADaM (Analysis Data Model): This standardizes the creation of analysis datasets, providing a clear line of traceability from the tabulated submission data (SDTM) to the statistical results presented in a clinical study report. This makes the statistical work more transparent and reproducible.
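The sex example can be sketched as a mapping from inconsistent site entries to an SDTM-style DM record. The raw variants, the assumption that ‘1’ and ‘2’ mean male and female at one site, and the `USUBJID` format (study ID and subject ID joined by a hyphen) are all illustrative. Unrecognized values raise an error so that they become a data query instead of being silently coded.

```python
# Hypothetical raw entries seen when sites collect sex without a standard.
# Treating "1"/"2" as male/female is a site-specific coding assumed for illustration.
SEX_TO_SDTM = {
    "male": "M", "m": "M", "1": "M",
    "female": "F", "f": "F", "2": "F",
    "unknown": "U", "u": "U",
}


def to_sdtm_dm(study_id, subject_id, raw_sex):
    """Build a minimal SDTM-style DM (Demographics) record with a controlled SEX value."""
    key = str(raw_sex).strip().lower()
    if key not in SEX_TO_SDTM:
        # Do not guess: an unrecognized value becomes a data query.
        raise ValueError(f"unmapped sex value {raw_sex!r} for subject {subject_id}")
    return {
        "STUDYID": study_id,
        "DOMAIN": "DM",
        "USUBJID": f"{study_id}-{subject_id}",  # assumed format for this sketch
        "SEX": SEX_TO_SDTM[key],
    }


for raw in ["Male", "M", "1", "female"]:
    print(raw, "->", to_sdtm_dm("ABC-101", "0001", raw)["SEX"])
```

With CDASH-aligned collection, most of this mapping disappears, because sites enter a controlled value from the start.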

Adopting CDISC standards within a Clinical data management system is no longer optional; it is essential for ensuring data consistency and interoperability. This streamlines regulatory submissions and allows researchers to spend less time wrangling data formats and more time on scientific discovery.

The Future is Now: How AI and Federation Are Reinventing the CDMS

Clinical research is evolving. Trials now integrate data from wearables, genomic sequencers, EHRs, and patient smartphones. This explosion of diverse, distributed data demands a new approach. The Clinical data management system of tomorrow is already here, powered by AI, Machine Learning, and federated data analysis.

Decentralized Clinical Trials (DCTs) and the use of Real-World Data (RWD) are becoming standard. This shift requires a fundamental change in how we manage clinical data, moving beyond traditional, centralized systems.

Outpace Competitors with AI-Powered Data Management

Artificial Intelligence and Machine Learning are actively changing how we conduct trials, shifting from reactive data cleaning to proactive, intelligent systems.

- AI-powered data cleaning uses algorithms that learn from millions of data points to spot subtle inconsistencies that traditional edit checks miss. This leads to cleaner data and faster database lock.

- Predictive analytics analyze historical data to forecast where data quality issues are most likely to occur, allowing data managers to intervene early.

- Automated medical coding uses AI to code adverse events and medications, dramatically accelerating a traditionally time-consuming process.

- Natural Language Processing (NLP) extracts valuable insights from unstructured text like physician notes and patient narratives, providing a richer understanding of trial events.
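Before any machine learning is involved, the kind of pattern a data manager might spot by hand, such as a site recording vital signs at the same time for every patient, can be caught with a simple statistical screen. This sketch is a rule-based baseline, not an AI model. It flags sites where one recorded time accounts for at least 80% of entries. The threshold, the minimum record count, and the function name are illustrative assumptions.

```python
from collections import Counter, defaultdict


def flag_identical_times(vitals, threshold=0.8, min_records=5):
    """Flag sites where a single recorded time dominates their vital-sign entries.

    vitals: iterable of (site_id, subject_id, time_string) tuples.
    threshold and min_records are illustrative tuning assumptions.
    """
    times_by_site = defaultdict(list)
    for site_id, _subject_id, time_str in vitals:
        times_by_site[site_id].append(time_str)

    flagged = {}
    for site_id, times in times_by_site.items():
        if len(times) < min_records:
            continue  # too little data to judge this site
        most_common_time, count = Counter(times).most_common(1)[0]
        share = count / len(times)
        if share >= threshold:
            flagged[site_id] = (most_common_time, round(share, 2))
    return flagged


# Hypothetical data: Site A records every patient at 09:00; Site B varies.
vitals = [("Site A", f"S{i}", "09:00") for i in range(6)]
vitals += [("Site B", "S10", "08:15"), ("Site B", "S11", "10:40"),
           ("Site B", "S12", "09:05"), ("Site B", "S13", "14:20"),
           ("Site B", "S14", "11:30")]
print(flag_identical_times(vitals))  # {'Site A': ('09:00', 1.0)}
```

ML-based approaches generalize this idea by learning which patterns are unusual instead of relying on a hand-set rule; a flagged site still needs a human follow-up.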

As we integrate big data from genomics, proteomics, and imaging, modern CDMS platforms must apply advanced analytics to uncover patterns essential for precision medicine.

Unlock Global Insights: Why Federated Data is the Future

The most valuable data for research is often scattered across different institutions, locked in silos by privacy concerns and logistical problems. Traditionally, analyzing it required centralizing all the data—a slow, risky, and often impossible task.

Federated platforms flip this model on its head. Instead of moving data to the analysis, they bring the analysis to the data.

Data stays in its original, secure location. Researchers send analysis queries to each source, and only the aggregated, non-identifiable results are returned. This approach of analyzing distributed data breaks down silos while ensuring data privacy by design. Sensitive patient information never leaves its secure environment.
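Bringing the analysis to the data can be sketched with a pooled mean: each site computes only a count and a sum locally, and the coordinator combines those aggregates. The small-count suppression threshold of 5 is an assumed disclosure-control rule, and the function names are hypothetical. Real federated platforms add authentication, auditing, and richer privacy controls.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

MIN_CELL_SIZE = 5  # assumed small-count suppression threshold


@dataclass
class LocalSummary:
    """The only thing a site releases: aggregates, never row-level values."""
    n: int
    total: float


def local_summary(values: Sequence[float]) -> Optional[LocalSummary]:
    """Runs inside each data custodian's environment."""
    if len(values) < MIN_CELL_SIZE:
        return None  # suppress small groups that could identify individuals
    return LocalSummary(n=len(values), total=float(sum(values)))


def federated_mean(summaries: List[Optional[LocalSummary]]) -> Tuple[float, int]:
    """Runs at the coordinator: pool released aggregates into an overall mean."""
    released = [s for s in summaries if s is not None]
    n = sum(s.n for s in released)
    if n == 0:
        raise ValueError("no site released enough data to compute a mean")
    return sum(s.total for s in released) / n, len(released)


# Each list stays at its own site; only local_summary() output is shared.
site_a = [120, 130, 125, 118, 122]
site_b = [135, 140, 128, 132, 138, 130]
site_c = [110, 150]  # below the threshold, so suppressed
summaries = [local_summary(v) for v in (site_a, site_b, site_c)]
mean, sites_used = federated_mean(summaries)
print(round(mean, 2), sites_used)  # 128.91 2
```

Counts and sums combine exactly, so the pooled mean equals the one that would be computed on centralized data from the released sites, without moving any patient-level values.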

Trusted Research Environments (TREs) provide highly controlled, auditable digital spaces where authorized researchers can work with sensitive data. TREs give researchers the access they need while institutions maintain complete control and oversight.

For organizations dealing with sensitive biomedical and multi-omic data, this federated approach is transformative. It enables secure collaboration and multi-modal data integration (clinical, genomic, imaging) without physically moving raw files.

Lifebit’s platform embodies this federated vision. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) deliver real-time insights across hybrid data ecosystems. This allows biopharma, governments, and public health organizations to conduct large-scale, compliant research without compromising privacy or security.


Your Next Breakthrough Depends on This

The Clinical data management system is the beating heart of every clinical trial. Its purpose is simple: to ensure that every decision, from go/no-go calls to regulatory approvals, rests on a foundation of high-quality, trustworthy data.

Modern trials are incredibly complex, integrating genomic sequences, wearable sensor streams, and real-world evidence from EHRs. Managing this data at scale with traditional, centralized systems is no longer efficient, secure, or feasible.

The future of clinical data management is already here. The shift is toward federated platforms and Trusted Research Environments that enable secure analysis across distributed datasets. This approach is essential for modern research, where data is spread across institutions and geographies.

Lifebit’s federated technology and Trusted Research Environments provide the path forward. By bringing the analysis to the data, we unlock insights from previously siloed datasets while upholding the strictest privacy and security standards.

High-quality data remains the unshakeable foundation of every scientific breakthrough. As we push the boundaries of medicine, the CDMS will only become more intelligent, integrated, and essential.