Clinical Data Models Unpacked (Without Losing Your Mind)


Why Data Models are the Unsung Heroes of Healthcare Research

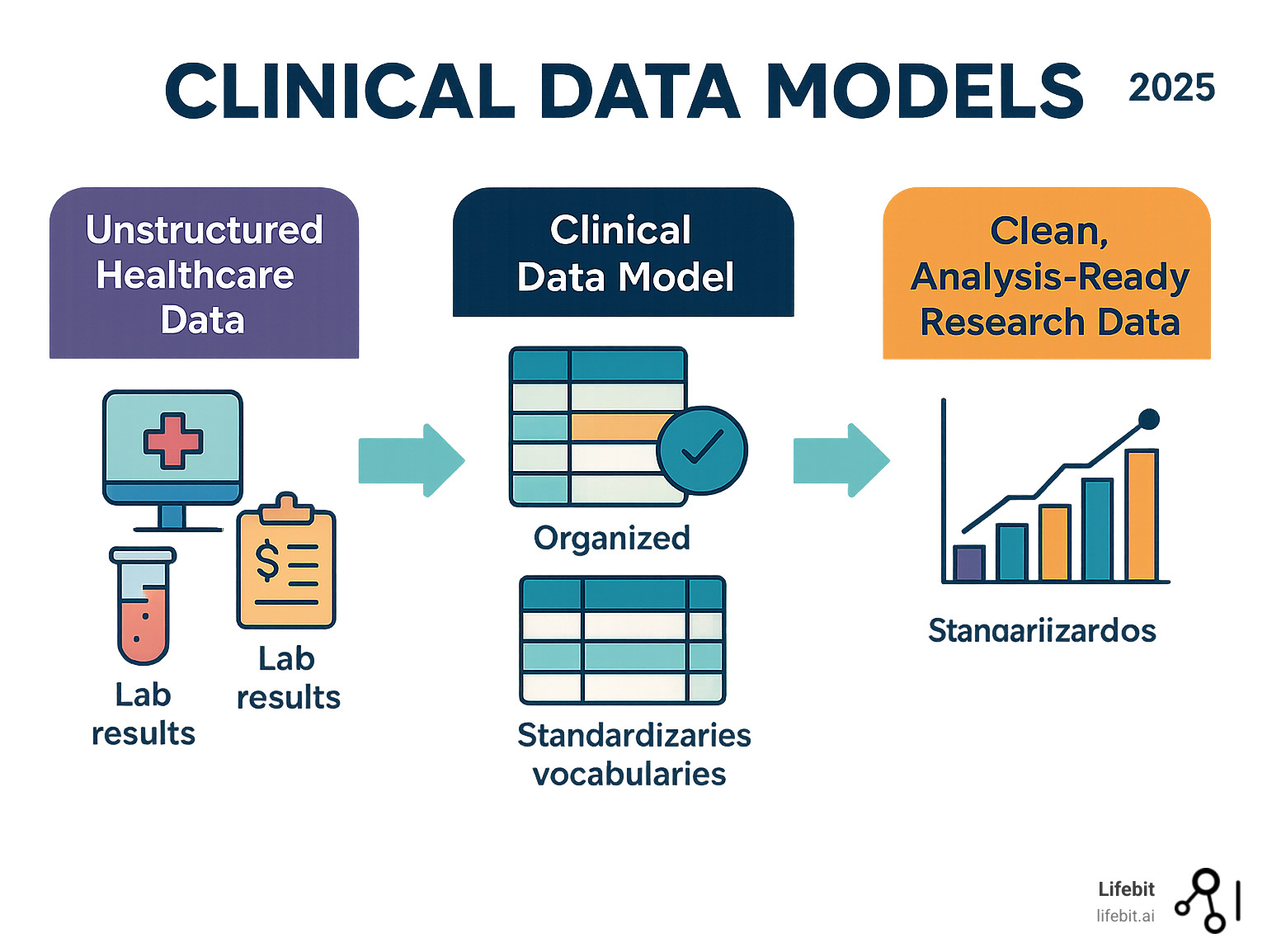

Clinical data models are the invisible backbone that transforms chaotic healthcare information into research-ready insights. They are standardized blueprints that organize data from different sources, enabling researchers to query information consistently across hospitals, health systems, and even countries.

This standardization is critical because the modern healthcare data landscape is a complex and fragmented ecosystem. Data flows from countless sources in myriad formats: structured data from Electronic Health Records (EHRs) and insurance claims, semi-structured lab results, unstructured clinical notes, high-dimensional genomic sequences, medical images, and real-time streams from patient wearables. A typical Phase III clinical trial generates 3.6 million data points, and a single patient’s journey can produce terabytes of data over their lifetime. Without a common structure, this wealth of information remains trapped in disconnected silos, unusable for large-scale analysis.

Think of clinical data models as universal translators. They take the chaos of real-world data—where one hospital codes diabetes as the ICD-10 code “E11.9,” another uses the legacy ICD-9 code “250.00,” and a third describes it in free-text notes—and map it to a single, unified concept within a common vocabulary. This harmonization turns siloed, messy data into a powerful, analyzable asset, enabling large-scale observational studies, powering AI applications, and accelerating the path to discovery. Without them, every research project becomes a custom software nightmare. With them, researchers can focus on finding cures instead of wrestling with data formats.

Key models include:

- OMOP CDM: The most widely used for observational research.

- i2b2: Popular in academic medical centers for cohort discovery.

- Sentinel: The FDA’s choice for active drug safety surveillance.

- PCORnet: A patient-centered outcomes research network.

I’m Maria Chatzou Dunford, CEO of Lifebit. For over 15 years, I’ve helped organizations unlock the power of biomedical data through standardized clinical data models and federated analytics. My experience has shown me how the right data model can accelerate discovery from years to months, turning data chaos into life-saving clarity.

A Landscape of Common Clinical Data Models

Healthcare data is notoriously inconsistent. One hospital might record a heart attack as the free-text term “STEMI,” another with the ICD-9 code “410.11,” and a third with the ICD-10 code “I21.9.” Clinical data models solve this by acting as universal translators, organizing messy data from EHRs, claims, and labs into a common structure that enables large-scale research.

Comparing the Titans: OMOP, i2b2, Sentinel, and PCORnet

Four major clinical data models dominate the landscape, each with distinct strengths tailored to specific research needs. Understanding their philosophies and structures is key to choosing the right tool for the job.

| Feature | OMOP CDM | i2b2 | Sentinel CDM | PCORnet CDM |
|---|---|---|---|---|
| Primary Use | Observational research, RWE generation | Cohort discovery, hypothesis generation | Active medical product safety surveillance | Patient-centered outcomes research |
| Data Structure | Standardized tables (person-centric) | Star/Snowflake schema (fact-based) | Tables optimized for exposures/outcomes | Comprehensive, includes patient-reported data |
| Vocabulary | OHDSI Standardized Vocabularies | Local concept ontology (flexibility) | Standardized codes (e.g., ICD, NDC) | Standardized codes (e.g., ICD, LOINC) |
| Community | Large, open-source (OHDSI) | Academic medical centers | FDA-led network | Patient-focused research networks |
| Interoperability | High, via common semantics & tools | High, within i2b2 network | Moderate, within Sentinel network | Moderate, within PCORnet network |

The OMOP Deep Dive: A Standard for Observational Data

The Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM), stewarded by the global OHDSI community, is the de facto standard for observational research. Its brilliance lies in a dual approach: standardizing both the data structure and the semantic content.

Structure: OMOP organizes a patient’s entire clinical journey into a set of standardized clinical data tables, each built around a clinical ‘domain’ such as conditions, drugs, or measurements. Key tables include:

- PERSON: Contains demographic information for each patient.

- VISIT_OCCURRENCE: Records every healthcare encounter, from hospital stays to outpatient visits.

- CONDITION_OCCURRENCE: Stores all diagnoses and clinical findings.

- DRUG_EXPOSURE: Captures all medication records, from prescriptions to administrations.

- PROCEDURE_OCCURRENCE: Lists all surgical and diagnostic procedures.

- MEASUREMENT: Holds all lab results and clinical measurements.

Content: All clinical events are mapped to a mandatory, standard vocabulary. This ensures that a query for ‘Type 2 diabetes mellitus’ (Concept ID: 201826) will retrieve all relevant patients, regardless of whether their source data used ICD-9, ICD-10, or SNOMED codes. This deep semantic standardization enables powerful patient-level prediction and population-level estimation using a suite of open-source tools like ATLAS for cohort design and ARACHNE for executing network studies. The Book of OHDSI is an excellent resource for a comprehensive overview.
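To make the “query by concept” idea concrete, here is a minimal sketch using Python’s built-in sqlite3 module. It assumes a heavily simplified subset of the PERSON and CONDITION_OCCURRENCE tables (the real tables carry many more columns), made-up patients, and an ETL that has already mapped each site’s source code to the standard concept 201826 (type 2 diabetes mellitus). The local code `LOCAL-123` is hypothetical.

```python
import sqlite3

# Simplified subset of two OMOP CDM tables; the real tables have more columns.
SCHEMA = """
CREATE TABLE person (
    person_id         INTEGER PRIMARY KEY,
    gender_concept_id INTEGER,
    year_of_birth     INTEGER
);
CREATE TABLE condition_occurrence (
    condition_occurrence_id INTEGER PRIMARY KEY,
    person_id               INTEGER REFERENCES person (person_id),
    condition_concept_id    INTEGER,  -- standard concept used for analysis
    condition_start_date    TEXT,
    condition_source_value  TEXT      -- original source code, kept for provenance
);
"""

T2DM = 201826  # OMOP standard concept: type 2 diabetes mellitus


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with three made-up patients."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO person VALUES (?, ?, ?)",
        [(1, 8532, 1960), (2, 8507, 1955), (3, 8532, 1980)],  # 8532 = female, 8507 = male
    )
    conn.executemany(
        "INSERT INTO condition_occurrence VALUES (?, ?, ?, ?, ?)",
        [
            (10, 1, T2DM, "2020-01-05", "E11.9"),   # site that coded in ICD-10-CM
            (11, 2, T2DM, "2012-03-17", "250.00"),  # site that coded in ICD-9-CM
            (12, 3, 0, "2021-07-01", "LOCAL-123"),  # hypothetical unmapped local code (concept 0)
        ],
    )
    return conn


def count_patients_with(conn: sqlite3.Connection, concept_id: int) -> int:
    """Count distinct patients with a condition recorded as the given standard concept."""
    (count,) = conn.execute(
        "SELECT COUNT(DISTINCT person_id) FROM condition_occurrence "
        "WHERE condition_concept_id = ?",
        (concept_id,),
    ).fetchone()
    return count


conn = build_demo_db()
print(count_patients_with(conn, T2DM))  # 2
```

The query never mentions ICD-9 or ICD-10; both patients are found because the mapping happened once, during ETL. A production query would usually also expand the concept through the CONCEPT_ANCESTOR table so that more specific descendant concepts are included.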

i2b2: The Cohort Discovery Engine

i2b2 (Informatics for Integrating Biology and the Bedside) is an academic favorite optimized for one primary task: rapidly identifying patient cohorts for clinical trial recruitment and hypothesis generation. Its structure is a classic ‘star schema,’ consisting of a central observation fact table surrounded by ‘dimension’ tables that provide context (e.g., Patient, Concept, Visit). This design allows for highly efficient ‘slice-and-dice’ queries. For example, a researcher can use a simple drag-and-drop interface to find “all female patients over 65 who were diagnosed with osteoporosis and treated with bisphosphonates but have no history of kidney disease.” i2b2’s strength is its flexibility: it allows institutions to use their local terminologies while still enabling federated queries across the network.
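The star-schema query behind that example can be sketched with sqlite3. The tables below are a simplified subset of i2b2’s PATIENT_DIMENSION and OBSERVATION_FACT tables, and the concept codes (`DX:OSTEOPOROSIS` and so on) are hypothetical placeholders for a site’s local ontology. The SQL is hand-written for illustration; i2b2 itself generates its queries from the drag-and-drop interface and resolves concepts through its ontology hierarchy.

```python
import sqlite3

# Simplified subset of the i2b2 star schema: one dimension table plus the fact table.
SCHEMA = """
CREATE TABLE patient_dimension (
    patient_num      INTEGER PRIMARY KEY,
    sex_cd           TEXT,
    age_in_years_num INTEGER
);
CREATE TABLE observation_fact (
    encounter_num INTEGER,
    patient_num   INTEGER REFERENCES patient_dimension (patient_num),
    concept_cd    TEXT,
    start_date    TEXT
);
"""

# Hypothetical local concept codes; a real site defines these in its own ontology.
OSTEOPOROSIS = "DX:OSTEOPOROSIS"
BISPHOSPHONATE = "RX:BISPHOSPHONATE"
KIDNEY_DISEASE = "DX:CKD"

# Females over 65 WITH osteoporosis AND a bisphosphonate, WITHOUT kidney disease.
COHORT_SQL = """
SELECT p.patient_num
FROM patient_dimension AS p
WHERE p.sex_cd = 'F'
  AND p.age_in_years_num > 65
  AND EXISTS (SELECT 1 FROM observation_fact f
              WHERE f.patient_num = p.patient_num AND f.concept_cd = ?)
  AND EXISTS (SELECT 1 FROM observation_fact f
              WHERE f.patient_num = p.patient_num AND f.concept_cd = ?)
  AND NOT EXISTS (SELECT 1 FROM observation_fact f
                  WHERE f.patient_num = p.patient_num AND f.concept_cd = ?)
ORDER BY p.patient_num
"""


def build_i2b2_demo() -> sqlite3.Connection:
    """In-memory database with four made-up patients."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO patient_dimension VALUES (?, ?, ?)",
                     [(1, "F", 72), (2, "F", 70), (3, "M", 80), (4, "F", 60)])
    conn.executemany("INSERT INTO observation_fact VALUES (?, ?, ?, ?)", [
        (100, 1, OSTEOPOROSIS, "2021-01-10"), (101, 1, BISPHOSPHONATE, "2021-01-10"),
        (200, 2, OSTEOPOROSIS, "2020-05-02"), (201, 2, BISPHOSPHONATE, "2020-06-01"),
        (202, 2, KIDNEY_DISEASE, "2019-11-20"),  # excluded: kidney disease
        (300, 3, OSTEOPOROSIS, "2021-02-02"), (301, 3, BISPHOSPHONATE, "2021-02-02"),  # excluded: male
        (400, 4, OSTEOPOROSIS, "2021-03-03"), (401, 4, BISPHOSPHONATE, "2021-03-03"),  # excluded: age
    ])
    return conn


def find_cohort(conn: sqlite3.Connection) -> list:
    rows = conn.execute(COHORT_SQL, (OSTEOPOROSIS, BISPHOSPHONATE, KIDNEY_DISEASE))
    return [r[0] for r in rows]


print(find_cohort(build_i2b2_demo()))  # [1]
```

Because every clinical fact lives in one narrow table, each inclusion or exclusion criterion becomes one more EXISTS or NOT EXISTS check against the same table, which is what makes this layout well suited to ad hoc cohort counts.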

Sentinel: The FDA’s Watchdog

The Sentinel CDM was designed by the U.S. Food and Drug Administration (FDA) for a specific mission: active post-market surveillance of medical products. Its structure is highly optimized for its purpose, focusing on efficiently linking drug and vaccine exposures to health outcomes of interest. The model is more streamlined than OMOP, with fewer tables, which allows for extremely fast queries across its distributed network of healthcare data partners. When a potential safety signal emerges for a new drug, the FDA can use Sentinel to rapidly execute a query across millions of patient records to investigate the association, providing critical public health information in days rather than months.
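The core exposure-outcome linkage can be illustrated with a plain Python sketch that flags exposed patients who have an outcome diagnosis within a fixed risk window after a dispensing. The record types, the `LIVER_INJURY` outcome code, and the 30-day window are all hypothetical, and this is not Sentinel’s own query tooling, which also accounts for enrollment, comparator groups, and confounding.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Dispensing:  # hypothetical, simplified exposure record
    patient_id: int
    dispense_date: date


@dataclass(frozen=True)
class Diagnosis:  # hypothetical, simplified outcome record
    patient_id: int
    dx_date: date
    code: str


def exposed_with_outcome(dispensings, diagnoses, outcome_codes, window_days):
    """Return sorted patient IDs with an outcome diagnosis in the
    window (dispense_date, dispense_date + window_days]."""
    # Index outcome dates by patient so each exposure is checked cheaply.
    outcome_dates = defaultdict(list)
    for dx in diagnoses:
        if dx.code in outcome_codes:
            outcome_dates[dx.patient_id].append(dx.dx_date)

    hits = set()
    for disp in dispensings:
        window_end = disp.dispense_date + timedelta(days=window_days)
        if any(disp.dispense_date < d <= window_end for d in outcome_dates[disp.patient_id]):
            hits.add(disp.patient_id)
    return sorted(hits)


dispensings = [
    Dispensing(1, date(2023, 1, 1)),
    Dispensing(2, date(2023, 1, 1)),
    Dispensing(3, date(2023, 5, 1)),
]
diagnoses = [
    Diagnosis(1, date(2023, 1, 10), "LIVER_INJURY"),  # 9 days after exposure: inside window
    Diagnosis(2, date(2023, 3, 15), "LIVER_INJURY"),  # 73 days after exposure: outside window
    Diagnosis(3, date(2023, 4, 1), "LIVER_INJURY"),   # before exposure: not counted
]
print(exposed_with_outcome(dispensings, diagnoses, {"LIVER_INJURY"}, window_days=30))  # [1]
```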

PCORnet: The Patient’s Voice

The Patient-Centered Outcomes Research Network (PCORnet) CDM is unique in its emphasis on data that matters most to patients. While it includes standard clinical data similar to other models, its key differentiator is the robust integration of patient-reported outcomes (PROs). The model has specific tables designed to capture data on quality of life, symptoms, and functional status directly from patients. This makes PCORnet ideal for comparative effectiveness research, where the goal is not just to see if a treatment works, but how it impacts a patient’s daily life compared to other options.

Emerging Approaches: The Generalized Data Model (GDM)

While powerful, transforming raw data into models like OMOP via ETL (Extract, Transform, Load) is complex. The Generalized Data Model (GDM) offers a different approach: keep data as close to the original as possible. Using just 19 tables, the GDM preserves nuances often lost in transformation. It cleverly avoids the ambiguous concept of “visits,” which different systems define inconsistently. Instead, the GDM uses a hierarchical structure to group related clinical events naturally, preserving original medical codes and source context. This makes the GDM a flexible and versatile tool for handling diverse healthcare data while minimizing information loss.
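The idea of grouping events through links rather than a mandatory visit can be shown with a generic sketch. The class and field names below are hypothetical and do not reproduce the GDM’s actual table definitions; the point is only that each event keeps its original vocabulary and code, and that grouping is optional and can nest.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ClinicalEvent:
    event_id: int
    source_vocabulary: str           # kept verbatim from the source system
    source_code: str                 # kept verbatim from the source system
    parent_id: Optional[int] = None  # link to a grouping event, instead of a visit_id


def group_by_root(events: List[ClinicalEvent]) -> Dict[int, List[int]]:
    """Group events under their top-level ancestor (assumes the links contain no cycles)."""
    by_id = {e.event_id: e for e in events}

    def root_of(event: ClinicalEvent) -> int:
        while event.parent_id is not None:
            event = by_id[event.parent_id]
        return event.event_id

    groups: Dict[int, List[int]] = {}
    for e in events:
        groups.setdefault(root_of(e), []).append(e.event_id)
    return groups


events = [
    ClinicalEvent(1, "claim", "INPATIENT_STAY"),         # hypothetical grouping record
    ClinicalEvent(2, "ICD10CM", "J18.9", parent_id=1),   # diagnosis during the stay
    ClinicalEvent(3, "CPT4", "71046", parent_id=1),      # imaging during the stay
    ClinicalEvent(4, "local_lab", "WBC", parent_id=2),   # hypothetical lab tied to the diagnosis
    ClinicalEvent(5, "LOINC", "2093-3"),                 # standalone outpatient lab, no group
]
print(group_by_root(events))  # {1: [1, 2, 3, 4], 5: [5]}
```

The standalone lab simply forms its own group instead of being forced into an artificial “visit.”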

From Raw Data to Research-Ready: The Implementation Journey

The journey from raw, messy healthcare data to a structured, research-ready dataset is a complex process known as Extraction, Transformation, and Load (ETL). This crucial step standardizes data into a clinical data model, but it requires careful planning, deep domain expertise, and robust technical execution.

The ETL Challenge: A Step-by-Step Breakdown

The ETL process is famously labor-intensive and is often estimated to consume as much as 80% of the resources in an analytics project. It involves three main phases:

- Extraction: The first hurdle is getting the data out of its source system. This can involve querying proprietary EHR databases (like Epic or Cerner), parsing flat files (CSVs, text files), accessing data via APIs, or processing messages in standard formats like HL7. Each source has its own structure, quirks, and access protocols, requiring custom code for each connection.

- Transformation: This is the most complex phase, where the raw data is cleaned, reshaped, and mapped to the target clinical data model. Key tasks include:

  - Vocabulary Mapping: Translating source-specific codes (e.g., a local lab code for cholesterol) to a standard concept ID from a vocabulary like LOINC. This requires creating and maintaining extensive mapping tables.

  - Structural Mapping: Restructuring the source data to fit the target schema. For example, data from a single source table might need to be split into the CONDITION_OCCURRENCE and PROCEDURE_OCCURRENCE tables in OMOP.

  - Data Cleaning & Validation: Identifying and correcting errors, handling missing values, standardizing units (e.g., converting all weight measurements to kilograms), and running data quality checks to ensure the data is plausible (e.g., a patient’s birth year is not in the future).

  - Deriving New Information: Some information is not explicit in the source data. For example, a ‘drug era’ (a continuous period of exposure to a drug) must be inferred by combining multiple prescription records.

- Load: Once transformed, the clean data is loaded into the target database that houses the clinical data model. This phase includes creating indexes to ensure fast query performance and running post-load validation checks to confirm the data was loaded correctly.
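Deriving a drug era is essentially interval merging. The sketch below is a simplified version that assumes exposures are already rolled up to a single ingredient for a single person, that each fill covers `start_date` through `start_date + days_supply - 1`, and that fills separated by at most 30 uncovered days belong to the same era. The 30-day persistence window is a commonly used default, treated here as a tunable assumption.

```python
from datetime import date, timedelta
from typing import List, Tuple

Era = Tuple[date, date]


def build_drug_eras(exposures: List[Tuple[date, int]], max_gap_days: int = 30) -> List[Era]:
    """Merge one person's exposures to one ingredient into continuous eras.

    exposures: (start_date, days_supply) pairs. Assumes each fill covers
    start_date through start_date + days_supply - 1.
    """
    intervals = sorted(
        (start, start + timedelta(days=supply - 1)) for start, supply in exposures
    )
    eras: List[Era] = []
    for start, end in intervals:
        if eras:
            era_start, era_end = eras[-1]
            gap_days = (start - era_end).days - 1  # uncovered days between fills
            if gap_days <= max_gap_days:
                eras[-1] = (era_start, max(era_end, end))  # extend the current era
                continue
        eras.append((start, end))  # gap too long: start a new era
    return eras


fills = [(date(2023, 1, 1), 30), (date(2023, 2, 10), 30), (date(2023, 6, 1), 30)]
print(build_drug_eras(fills))
# [(datetime.date(2023, 1, 1), datetime.date(2023, 3, 11)),
#  (datetime.date(2023, 6, 1), datetime.date(2023, 6, 30))]
```

The first two fills are 10 uncovered days apart and merge into one era; the third starts a new era after a gap of several months. Shrinking `max_gap_days` below 10 would split the first era in two, which shows how much the derived exposure periods depend on this one parameter.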

The Power of Standardization: Vocabularies and Concept IDs

Effective clinical data models rely on standardized vocabularies to achieve true semantic interoperability. This ensures “diabetes” means the same thing everywhere, regardless of the source system’s coding.

Key standard vocabularies include:

- SNOMED CT: A comprehensive, hierarchical vocabulary for clinical findings, symptoms, and diagnoses.

- LOINC: Used for standardizing laboratory tests and other clinical measurements.

- RxNorm: Provides normalized names for clinical drugs (primarily those available in the United States) and links them to their active ingredients, strengths, and dose forms.

The OHDSI community maintains these and many other vocabularies in a single set of tables, assigning every code a concept_id and mapping non-standard source codes (such as ICD-10-CM) to designated standard concepts. The vocabularies can be explored on the Athena website. This systematic mapping is the engine that powers large-scale, federated research, allowing a single query to run across dozens of datasets worldwide.
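The mapping can be pictured with tiny stand-ins for the OMOP CONCEPT and CONCEPT_RELATIONSHIP tables. This sketch assumes made-up source concept IDs (the `9000xx` values); the “Maps to” relationship, the `standard_concept` flag `"S"`, and the use of concept_id 0 for unmapped codes follow OMOP conventions, but a real ETL would query the full vocabulary tables downloaded from Athena rather than Python dictionaries.

```python
from typing import Dict, List, Optional, Tuple

# Tiny stand-ins for the OMOP CONCEPT and CONCEPT_RELATIONSHIP tables.
# The 9000xx source concept IDs are made up; 201826 (SNOMED 44054006,
# type 2 diabetes mellitus) is the standard concept they map to.
CONCEPT: Dict[int, Tuple[str, str, Optional[str]]] = {
    # concept_id: (vocabulary_id, concept_code, standard_concept)
    900001: ("ICD10CM", "E11.9", None),
    900002: ("ICD9CM", "250.00", None),
    201826: ("SNOMED", "44054006", "S"),
}
CONCEPT_RELATIONSHIP: List[Tuple[int, int, str]] = [
    # (concept_id_1, concept_id_2, relationship_id)
    (900001, 201826, "Maps to"),
    (900002, 201826, "Maps to"),
]


def to_standard(vocabulary_id: str, code: str) -> List[int]:
    """Return the standard concept ID(s) for a source code; [0] if unmapped."""
    source_id = next(
        (cid for cid, (vocab, cc, _) in CONCEPT.items()
         if vocab == vocabulary_id and cc == code),
        None,
    )
    if source_id is None:
        return [0]  # 0 is OMOP's "no matching concept"
    if CONCEPT[source_id][2] == "S":
        return [source_id]  # already a standard concept
    # A source code may map to more than one standard concept, hence a list.
    targets = [c2 for c1, c2, rel in CONCEPT_RELATIONSHIP
               if c1 == source_id and rel == "Maps to"]
    return targets or [0]


print(to_standard("ICD10CM", "E11.9"))       # [201826]
print(to_standard("ICD9CM", "250.00"))       # [201826]
print(to_standard("ICD10CM", "NOT-A-CODE"))  # [0]
```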

Structuring the Story: Provenance, Hierarchies, and ‘Visits’

How a model organizes information is critical for its utility and trustworthiness.

- Data Provenance: Tracking the origin and transformation history of each piece of data is vital for quality control, debugging, and research reproducibility. A good model includes fields to store source information. For instance, the OMOP CDM includes fields like condition_source_value and condition_source_concept_id in the CONDITION_OCCURRENCE table to retain the original code from the source system, providing a clear audit trail.

- Hierarchies: Clinical data is naturally hierarchical (e.g., ‘Pneumonia’ is a type of ‘Lung Disease’). Models represent these relationships either through their table structure or, more powerfully, within the standardized vocabularies themselves. This allows researchers to query at different levels of granularity.

- ‘Visits’: Many models use ‘visits’ or ‘encounters’ as an organizing principle. While intuitive, this can be problematic because a ‘visit’ is defined inconsistently across data sources (e.g., a multi-day hospital stay vs. a single lab test claim). Forcing all data into a rigid visit structure can distort the clinical context. Alternative models, like the GDM, avoid this by grouping events more flexibly, preserving more of the original data’s integrity.
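Querying at different levels of granularity amounts to expanding a concept into all of its descendants before filtering records. OMOP precomputes this expansion in its CONCEPT_ANCESTOR table; the sketch below instead computes it on the fly with a breadth-first search over a hypothetical “is a” hierarchy whose concept IDs and patient records are made up.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical "is a" edges (child -> parents); IDs are made up.
IS_A: Dict[int, List[int]] = {
    2: [1],  # Pneumonia is a Lung disease
    3: [2],  # Viral pneumonia is a Pneumonia
    4: [1],  # Asthma is a Lung disease
    5: [],   # Fracture (unrelated)
}


def descendants(ancestor_id: int, is_a: Dict[int, List[int]] = IS_A) -> Set[int]:
    """Return the concept and everything below it (breadth-first search)."""
    # Invert child -> parents into parent -> children.
    children: Dict[int, List[int]] = {}
    for child, parents in is_a.items():
        for parent in parents:
            children.setdefault(parent, []).append(child)

    found = {ancestor_id}
    queue = deque([ancestor_id])
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in found:
                found.add(child)
                queue.append(child)
    return found


# (person_id, condition_concept_id) records, also made up
records = [(101, 3), (102, 4), (103, 5), (104, 2)]
print(sorted({p for p, c in records if c in descendants(1)}))  # [101, 102, 104]
print(sorted({p for p, c in records if c in descendants(2)}))  # [101, 104]
```

Asking for “Lung disease” finds the viral pneumonia, asthma, and pneumonia patients even though none of their records carries that exact concept.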

The Payoff: How Data Models Accelerate Discovery and Innovation

The rigorous work of standardizing data into robust clinical data models fundamentally transforms healthcare research, making it more efficient, collaborative, and impactful because the data becomes Findable, Accessible, Interoperable, and Reusable (FAIR).

Instead of wrestling with incompatible formats, researchers can focus on discovery.

Enabling Reproducible Research and Federated Network Studies

Adopting a common data model like OMOP enables standardized analytics. The OHDSI community develops and shares open-source tools that can be applied consistently across any OMOP-converted dataset. This ecosystem takes reproducibility a step further by sharing analysis packages that bundle human-readable protocols with machine-executable code. An analysis developed at one institution can be transparently validated and run at any other, dramatically improving the reliability of research findings.

This standardization is the foundation for federated network studies. Instead of centralizing sensitive patient data—a process fraught with privacy risks and governance hurdles—the analytical code is sent to each participating institution. Each site runs the analysis locally behind its own firewall and shares only anonymous, aggregate results back to a central coordinating center. This federated approach enables massive studies that would otherwise be impossible.
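The federated pattern can be sketched in a few lines: each site runs the same function locally and returns only counts, and the coordinator combines what it receives. The suppression threshold of 5, the field names, and the simulated patient rows are assumptions for illustration; real network studies typically share richer aggregates, such as model estimates, and handle suppressed cells with more care than simply dropping them.

```python
from typing import List

MIN_CELL_COUNT = 5  # assumed small-cell suppression threshold; networks set their own


def local_analysis(site: str, patients: List[dict], min_cell: int = MIN_CELL_COUNT) -> dict:
    """Runs inside the site's firewall. Only aggregate counts are returned."""
    n = len(patients)
    events = sum(1 for p in patients if p["had_outcome"])
    if 0 < events < min_cell:
        events = None  # too small to share safely
    return {"site": site, "n": n, "events": events}


def combine(results: List[dict]) -> dict:
    """Runs at the coordinating center on the shared aggregates only."""
    shared = [r for r in results if r["events"] is not None]
    n = sum(r["n"] for r in shared)
    events = sum(r["events"] for r in shared)
    return {
        "sites_used": len(shared),
        "sites_suppressed": len(results) - len(shared),
        "n": n,
        "events": events,
        "rate": events / n if n else None,
    }


def fake_site(n: int, events: int) -> List[dict]:
    # Made-up patient-level rows; in a real study these never leave the site.
    return [{"had_outcome": i < events} for i in range(n)]


results = [
    local_analysis("site_a", fake_site(100, 12)),
    local_analysis("site_b", fake_site(50, 3)),  # suppressed: 3 < 5
    local_analysis("site_c", fake_site(80, 0)),
]
print(combine(results))  # sites_used=2, sites_suppressed=1, n=180, events=12
```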

A prime example is the OHDSI LEGEND-HTN study, which compared the effectiveness and safety of first-line hypertension medications. By distributing a single analysis package to multiple international databases mapped to the OMOP CDM, researchers analyzed data from over 4.9 million patients, generating evidence that now informs clinical guidelines—a feat unimaginable without a common data model.

Limitations and the Road Ahead

While transformative, clinical data models are not a panacea and continue to evolve. Key challenges and future directions include:

- Genomic and Multi-omic Data: Standard CDMs were designed for clinical data and struggle with the volume, velocity, and complexity of high-dimensional ‘omics’ data. The OMOP-Genomics Working Group is actively extending the model with new tables and conventions to link genomic variants and molecular data to clinical phenotypes, paving the way for true precision medicine at scale.

- Unstructured Data: An estimated 80% of valuable clinical information is locked in free-text formats like physicians’ notes and radiology reports. Extracting these insights requires advanced Natural Language Processing (NLP) pipelines to identify entities (like diseases and drugs) and their relationships. Integrating these NLP-derived data points into structured models like OMOP is a major focus of the research community.

- Real-time Integration and the Role of FHIR: Traditional CDMs are populated through batch ETL processes, making them ideal for retrospective research but less suited for real-time applications like clinical decision support. This is where standards like FHIR (Fast Healthcare Interoperability Resources) come in. FHIR is an API standard designed for the real-time exchange of individual patient data. FHIR and CDMs are complementary: FHIR is the pipe that moves data between operational systems, while a CDM is the reservoir that stores and structures data for population-level analysis. The future lies in building seamless pipelines where FHIR resources are used to feed and update analytical data models in near real-time.
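A FHIR-to-CDM feed boils down to transforming individual resources into analytical rows. This minimal sketch maps one FHIR Condition resource (using its `code.coding`, `subject.reference`, and `onsetDateTime` elements) to a simplified condition_occurrence row. The patient-ID lookup and the code-to-concept table are hypothetical, and a real pipeline would also handle multiple codings, missing onset dates, and vocabulary lookups against the OMOP tables.

```python
import json
from datetime import date
from typing import Dict, Tuple

# Hypothetical lookup from (FHIR code system URI, code) to an OMOP standard concept ID.
SOURCE_TO_STANDARD: Dict[Tuple[str, str], int] = {
    ("http://hl7.org/fhir/sid/icd-10-cm", "E11.9"): 201826,
}


def condition_to_omop(resource: dict, person_ids: Dict[str, int]) -> dict:
    """Turn one FHIR Condition resource into a simplified condition_occurrence row."""
    if resource.get("resourceType") != "Condition":
        raise ValueError("expected a FHIR Condition resource")
    coding = resource["code"]["coding"][0]  # sketch: use the first coding only
    return {
        "person_id": person_ids[resource["subject"]["reference"]],
        # 0 = no matching concept, following the OMOP convention
        "condition_concept_id": SOURCE_TO_STANDARD.get((coding["system"], coding["code"]), 0),
        "condition_start_date": date.fromisoformat(resource["onsetDateTime"][:10]),
        "condition_source_value": coding["code"],  # keep the original code for provenance
    }


fhir_json = """
{
  "resourceType": "Condition",
  "subject": {"reference": "Patient/123"},
  "code": {"coding": [{"system": "http://hl7.org/fhir/sid/icd-10-cm",
                       "code": "E11.9"}]},
  "onsetDateTime": "2021-04-02T09:30:00Z"
}
"""
row = condition_to_omop(json.loads(fhir_json), person_ids={"Patient/123": 1})
print(row)
```

The same function could sit behind a subscription or batch export, so that new Condition resources flow into the analytical tables incrementally rather than waiting for a full ETL rerun.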

The future will see models with improved semantics, deeper integration with AI and machine learning, and built-in privacy-preserving technologies like federated learning to meet the demands of an increasingly complex data landscape.

Frequently Asked Questions about Clinical Data Models

What is the difference between a data model and a database?

A clinical data model is the architect’s blueprint. It defines the rules for how data is organized, structured, and related (the schema and semantics). A database is the actual house—the physical software (e.g., PostgreSQL, SQL Server) and storage system that holds the data according to that blueprint. Models like OMOP are standardized blueprints that ensure everyone’s “data house” is built the same way, enabling collaboration and shared tools.

How do I choose the right data model for my research?

There is no single “best” choice; the right model depends on your needs. Consider these factors:

- Research Questions: Are you doing large-scale observational research (OMOP), rapid cohort discovery (i2b2), active safety surveillance (Sentinel), or patient-centered comparative effectiveness (PCORnet)?

- Data Sources: Are you using EHRs, claims, registry data, or patient-reported outcomes?

- Technical Resources: Do you have the expertise and time for a complex ETL process into a highly structured model like OMOP?

- Collaboration Needs: Will you participate in network studies? Adopting a widely-used model like OMOP simplifies collaboration and gives you access to a vast community and toolset.

The key is to match the model to your specific research context.

Can one institution use multiple data models?

Yes, and it’s often a smart strategy. Major research institutions like Stanford’s STARR project maintain several models simultaneously. For example, they use their raw EHR data model for granular chart reviews, i2b2 for local cohort discovery and trial recruitment, and the OMOP Common Data Model to participate in large-scale OHDSI network studies. This multi-model approach allows them to use the best tool for each research question, from deep dives into local data to standardized, collaborative research.

What is the relationship between FHIR and Common Data Models like OMOP?

This is a common point of confusion. FHIR (Fast Healthcare Interoperability Resources) and CDMs like OMOP are both crucial for data interoperability, but they serve different purposes.

- FHIR is for data exchange. It is an API standard designed for real-time, transactional requests for a single patient’s data. Think of it as the set of pipes that allows a hospital’s EHR to talk to a pharmacy’s system or a patient’s mobile app.

- CDMs are for data analysis. They are database schemas designed to store and organize data from entire populations for retrospective, analytical queries. Think of them as the research data warehouse or reservoir.

They are complementary, not competing. A modern data architecture might use FHIR APIs to extract data from operational systems, which is then transformed and loaded into an OMOP CDM for large-scale research and analytics.

Conclusion: Building a Future of Interoperable Health Data

Clinical data models have evolved from a technical necessity into the foundation of a connected healthcare ecosystem. By enabling disparate institutions to speak the same data language, they empower researchers to answer questions at a scale no single organization could tackle alone.

The community-driven, open-source movement, exemplified by OHDSI, has proven that collaboration accelerates progress. Robust models are already powering next-generation research, turning years of work into months and enabling federated analyses that improve patient safety and open up insights from rare disease data. As these models evolve to handle genomics, AI, and real-time analytics, their impact will only grow.

At Lifebit, this vision drives our work. Our federated AI platform builds on the principles of clinical data models, enabling secure, real-time access to global biomedical data while it remains safe within institutional walls. With built-in harmonization, advanced AI/ML, and federated governance, we help diverse datasets work together seamlessly. We empower researchers to focus on what matters most: finding answers that improve human health.

The future of healthcare data is interconnected and collaborative. By embracing standardized models and federated approaches, we can build a world where discovery is limited only by curiosity, not by data silos.