Data Deep Dive: Mastering Clinical Trial Analysis for Better Outcomes

Why Clinical Trial Data Analysis is the Engine of Evidence-Based Medicine

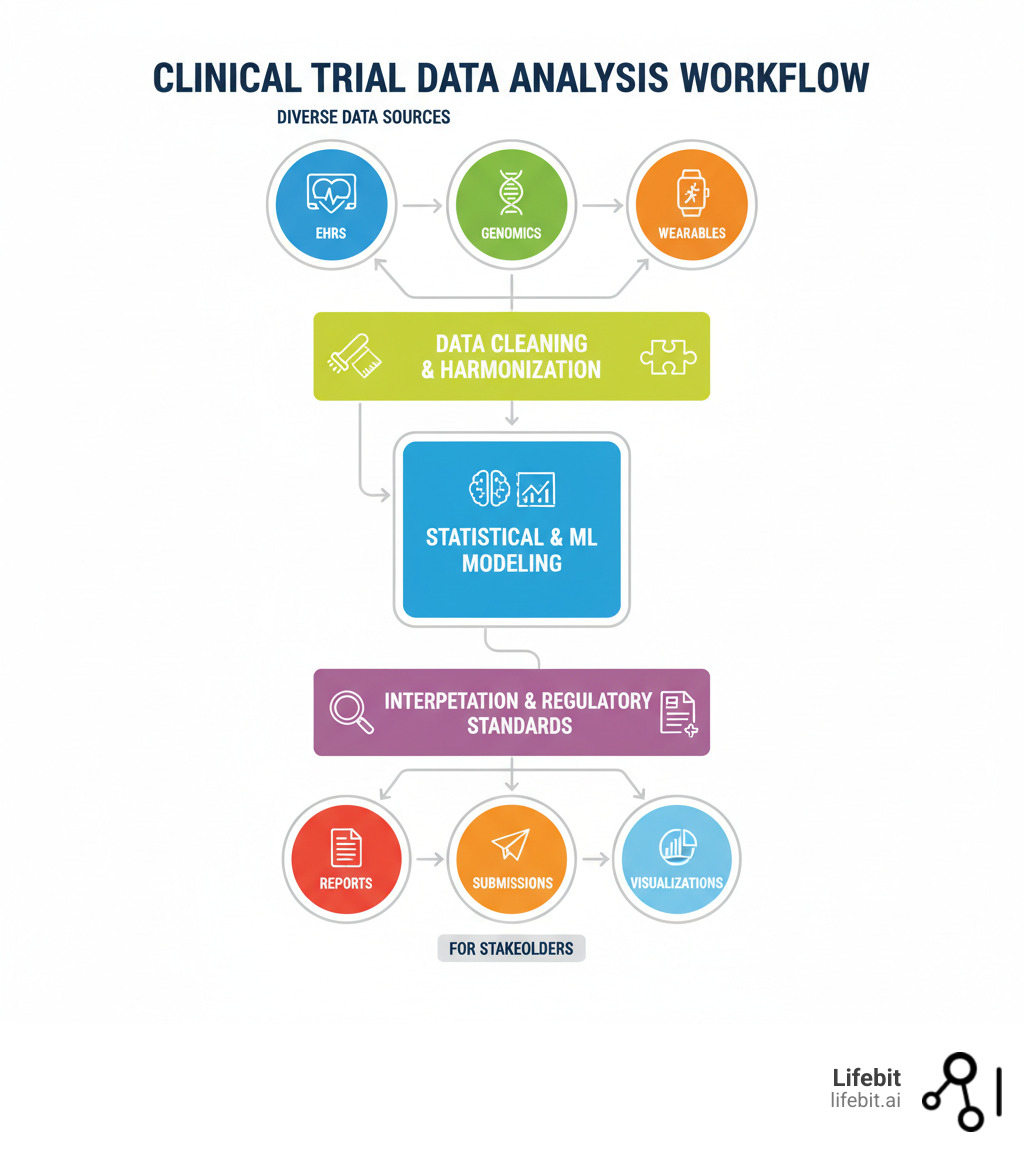

Clinical trial data analysis is the systematic process of examining study data to determine if a new treatment is safe and effective. It involves a five-step workflow: data collection, cleaning, modeling, interpretation, and reporting. Without this rigorous analysis, a trial cannot answer its core questions: Does the treatment work? Is it safe? For whom?

These answers drive every regulatory approval, prescribing decision, and patient outcome. The goal is to turn raw data into decisions that advance life-changing therapies and protect patients from ineffective or harmful ones.

However, the process is complex. Data from sources like EHRs, wearables, and genomics platforms is often messy, incomplete, and siloed. Traditional methods struggle with the volume and variety of modern biomedical data, while regulators like the FDA and EMA demand strict compliance and audit trails. Organizations need real-time insights from distributed datasets without compromising patient privacy.

This is where federated analytics is changing the field. By enabling in situ analysis—analyzing data securely in place without moving it—organizations can accelerate evidence generation and maintain compliance.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. Our federated platform powers secure clinical trial data analysis at scale. With over 15 years in computational biology and health-tech, I’ve seen how the right analytical infrastructure can collapse timelines from years to months, while the wrong one can derail a billion-dollar program.


The Anatomy of Analysis: A 5-Step Framework from Raw Data to Actionable Insights

Every clinical trial generates vast amounts of data, but raw numbers don’t save lives. The real work is transforming that data into trusted evidence. This section breaks down the five fundamental steps for turning messy data into actionable insights that power regulatory submissions and improve patient outcomes.

Data Collection & Management

Clinical trial data analysis begins with systematic data collection. Modern trials draw from a rich tapestry of sources, including traditional Electronic Data Capture (EDC) systems for clinical observations, Patient-Reported Outcomes (PROs) for direct patient feedback on symptoms and quality of life, and wearables/mHealth apps for continuous, real-time physiological data. This passive monitoring can reveal subtle patterns in activity levels, sleep, or heart rate that are missed in periodic clinic visits. Beyond these, trials increasingly incorporate high-dimensional data like genomics (to identify genetic markers for treatment response), proteomics, and medical imaging (MRI, CT scans).

Clinical Data Management Systems (CDMS) are the backbone of this process, providing a centralized, validated repository for organizing and storing information to ensure data integrity, traceability, and security. However, a key challenge is integrating these disparate sources. Genomic data, EHR extracts, and wearable data often arrive in different formats, use different terminologies, and are governed by different standards, creating data silos that are difficult to analyze holistically. Adhering to FAIR data principles—making data Findable, Accessible, Interoperable, and Reusable—is becoming a critical goal to overcome these silos. Proper planning, as outlined in resources like Designing Clinical Research (4th Edition), is essential to create a robust data management plan that anticipates these integration challenges from the outset.

Data Cleaning and Transformation

Raw clinical trial data is rarely analysis-ready. It often arrives with errors (e.g., out-of-range values like a body temperature of 986°F, most likely a keying error for 98.6°F), logical inconsistencies (e.g., a patient recorded as deceased on a date prior to their last clinic visit), missing values, and formatting discrepancies. Data cleaning is the meticulous, non-negotiable process of refining this data, because the quality of your analysis depends entirely on the quality of your input.

The process involves several key steps:

- Error Detection and Correction: Identifying and rectifying impossible or illogical data points, often through automated validation checks and manual review.

- Handling Missing Data: This is a critical decision point. Simple methods like Last Observation Carried Forward (LOCF) were once common but are now known to introduce bias. Modern, more robust approaches include multiple imputation, which creates several plausible replacements for each missing value, accounting for the uncertainty of the missing data and leading to more reliable results. The choice of method must be pre-specified in the statistical analysis plan.

- Standardization: This involves harmonizing formats, units, and terminology. For example, mapping “Male,” “male,” and “M” to a single standardized code, or converting all weight measurements to kilograms.
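Here is a minimal Python sketch of what automated validation checks and standardization can look like. The plausibility range, the `M`/`F`/`U` codes and the field names (`temp_f`, `death_date`, `last_visit`) are illustrative assumptions and do not come from any standard. A real study would take its rules from its data validation plan.

```python
from datetime import date

# Illustrative plausibility limits (assumption); a real study defines these in its validation plan.
TEMP_F_MIN, TEMP_F_MAX = 90.0, 110.0
LB_PER_KG = 2.20462

# Collapse raw spellings ("Male", "male", "M") into one code; anything else becomes "U" (unknown).
SEX_CODES = {"male": "M", "m": "M", "female": "F", "f": "F"}


def standardize_sex(raw: str) -> str:
    return SEX_CODES.get(raw.strip().lower(), "U")


def weight_to_kg(value: float, unit: str) -> float:
    unit = unit.strip().lower()
    if unit == "kg":
        return value
    if unit in ("lb", "lbs"):
        return round(value / LB_PER_KG, 1)
    raise ValueError(f"unknown weight unit: {unit!r}")


def validate(record: dict) -> list:
    """Return data queries (issues) for one record instead of silently 'fixing' values."""
    issues = []
    temp = record.get("temp_f")
    if temp is None:
        issues.append("temp_f missing")
    elif not TEMP_F_MIN <= temp <= TEMP_F_MAX:
        issues.append(f"temp_f out of range: {temp}")
    death, last_visit = record.get("death_date"), record.get("last_visit")
    if death and last_visit and death < last_visit:
        issues.append("death_date precedes last_visit")
    return issues


raw = {"sex": " Male", "weight": 176.0, "weight_unit": "lb", "temp_f": 986.0,
       "death_date": date(2023, 3, 1), "last_visit": date(2023, 4, 15)}
print(standardize_sex(raw["sex"]), weight_to_kg(raw["weight"], raw["weight_unit"]))  # M 79.8
print(validate(raw))  # ['temp_f out of range: 986.0', 'death_date precedes last_visit']
```

`validate` returns a list of issues and leaves the values untouched. This follows the usual query workflow, in which questionable values go back to the site for resolution and are not overwritten during analysis.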

The outcome is an analysis-ready dataset: a structured, validated, and fully documented table ready for modeling. This foundational work is essential for producing analytical results that are trustworthy and can withstand rigorous regulatory scrutiny.
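With multiple imputation, the analysis is run separately on each imputed dataset and the results are then combined. A common way to combine them is Rubin's rules. The Python sketch below assumes that each imputed analysis returns a point estimate and its variance (squared standard error). The pooled standard error includes a between-imputation term, so uncertainty about the missing values carries through to the final result.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Combine per-imputation results with Rubin's rules.

    estimates: point estimate from each imputed dataset
    variances: squared standard error from each imputed dataset
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least two imputations with matching variances")
    q_bar = mean(estimates)        # pooled point estimate
    within = mean(variances)       # average within-imputation variance
    between = variance(estimates)  # spread of the estimates across imputations
    total = within + (1 + 1 / m) * between
    return q_bar, math.sqrt(total)


# Hypothetical treatment-effect estimates from three imputed datasets,
# each with its own standard error of 0.5 (variance 0.25).
est, se = pool_rubin([2.0, 2.4, 2.2], [0.25, 0.25, 0.25])
print(f"pooled estimate={est:.2f}, pooled SE={se:.3f}")  # pooled SE exceeds 0.5
```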

Data Modeling and Analysis

With clean data, data modeling and analysis can begin. This step uses statistical and mathematical techniques to test hypotheses and identify patterns.

Statistical analysis forms the foundation. Hypothesis testing (e.g., t-tests, ANOVA) assesses whether observed differences between treatment groups are larger than would be expected from random chance alone. Regression analysis models relationships between variables, such as how a drug’s effectiveness varies with patient age, while survival analysis is used for time-to-event outcomes like disease progression.
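The sketch below illustrates the logic of hypothesis testing with a permutation test in place of a t-test. It asks how often a random reshuffle of the group labels produces a difference in means at least as large as the one observed. The scores are invented for illustration. A confirmatory analysis would use the method pre-specified in the statistical analysis plan.

```python
import math
import random


def group_mean(xs):
    return math.fsum(xs) / len(xs)  # fsum is exactly rounded, so order does not matter


def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(group_mean(a) - group_mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign group labels
        if abs(group_mean(pooled[:n_a]) - group_mean(pooled[n_a:])) >= observed:
            extreme += 1
    # The +1 counts the observed labelling itself, so p is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)


treatment = [5.1, 6.3, 7.0, 6.8, 5.9, 7.4]  # hypothetical outcome scores
control = [4.2, 4.8, 5.0, 4.4, 5.3, 4.9]
p = permutation_test(treatment, control)
print(f"p = {p:.4f}")
```

A permutation test relies only on the random assignment and makes no normality assumption, which makes it a convenient way to show the idea. The trade-off is that it usually demands more computation than a closed-form test.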

Increasingly, machine learning models are used alongside statistics. ML excels at finding complex, non-linear patterns in large datasets, helping to predict patient responses, identify biomarkers, or flag safety signals. The most powerful approach often combines both methods: using statistics to test specific hypotheses and ML to explore the data for unexpected patterns.

Interpretation of Results

Analysis produces numbers, but interpretation turns them into actionable evidence. This step involves summarizing findings and navigating statistical nuances.

A key challenge is distinguishing statistical significance from clinical significance. A p-value below a pre-specified threshold (typically 0.05) marks a result as statistically significant, meaning that a difference at least this large would be unlikely if the treatment had no true effect. However, this doesn’t guarantee the effect is meaningful for patients. A drug might cause a statistically significant but tiny improvement that has no real-world clinical benefit.

Clinical significance asks if the effect is large enough to improve patient outcomes and influence treatment decisions. Contextualizing results against existing research is also vital. Throughout this process, analysts must avoid common misinterpretations, such as overemphasizing p-values or confusing correlation with causation. The goal is to let the evidence speak for itself, considering all study limitations and potential biases.

Reporting and Visualization

Effective reporting and visualization are essential to ensure analytical findings are understood, trusted, and acted upon. This final step in clinical trial data analysis involves tailoring communication to different audiences, from regulators and clinicians to patients and the public.

Data visualization is a powerful tool for conveying complex patterns intuitively. Specific visualizations are standard in clinical research:

- Kaplan-Meier curves are used to illustrate time-to-event data, such as patient survival or disease progression over time between different treatment groups.

- Forest plots are used to summarize results from multiple subgroup analyses or from a meta-analysis, showing the effect estimate and confidence interval for each group.

- Box plots and scatter plots are used to show the distribution of continuous variables and the relationships between them.
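A Kaplan-Meier curve rests on a simple product-limit calculation. At each time an event occurs, survival is multiplied by the fraction of at-risk patients who did not have the event, and censored patients simply leave the risk set. This minimal Python sketch assumes the common convention that patients censored at the same time as an event still count as at risk at that time. The follow-up data are hypothetical.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Product-limit estimate of the survival function.

    times:  follow-up time for each patient
    events: 1 if the event (e.g., progression) was observed, 0 if censored
    Returns [(t, S(t))] at each distinct event time.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    events_at = Counter(t for t, e in zip(times, events) if e)
    leaving_at = Counter(times)  # every patient leaves the risk set at their own time
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(leaving_at):
        d = events_at.get(t, 0)
        if d:
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= leaving_at[t]  # remove events and censored patients after time t
    return curve


times = [2, 3, 3, 5, 6, 8]   # months, hypothetical
events = [1, 1, 0, 1, 0, 1]
print([(t, round(s, 3)) for t, s in kaplan_meier(times, events)])
# [(2, 0.833), (3, 0.667), (5, 0.444), (8, 0.0)]
```

Drawing these points as a step function, one per treatment arm, gives the familiar Kaplan-Meier plot.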

Writing study reports for regulatory submission is a specialized skill requiring meticulous documentation of every analytical decision for full reproducibility and auditing. This includes the clinical study report (CSR), which follows the ICH E3 structure. For academic publication, reporting must adhere to guidelines like the CONSORT (Consolidated Standards of Reporting Trials) statement, which promotes transparency and completeness. Finally, registering results on public platforms like ClinicalTrials.gov, the official U.S. government registry of clinical trials, is an ethical and often legal requirement that ensures transparency and accessibility. The ultimate goal is clarity, accuracy, and reproducibility, transforming data into evidence that improves patient lives.

Statistical Foundations and Advanced Modeling in Clinical Research

This section dives into the statistical and computational methods that power modern analysis, explaining how researchers make sense of complex datasets.

The Role of Statistical Methods in Clinical Trial Data Analysis

Statistical methods are the foundation of clinical trial data analysis, allowing researchers to distinguish true treatment effects from random noise.

Statistics enable us to make inferences about an entire patient population based on a smaller trial sample. The process begins with descriptive statistics (mean, median, standard deviation) to summarize the data and understand its basic features.

Next, inferential statistics test whether the findings from the sample apply to the broader population. This includes hypothesis testing (e.g., t-tests, ANOVA) to assess if differences between groups are statistically significant, and regression analysis to model relationships between variables like dose and response.
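In the dose-response case, the simplest regression model is a straight line fitted by ordinary least squares. The sketch below computes the slope and intercept directly. The doses and responses are hypothetical. A real analysis would also report standard errors and confidence intervals and check the model's assumptions.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must take at least two distinct values")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


dose_mg = [0, 10, 20, 40]         # hypothetical dose levels
response = [1.0, 2.1, 2.9, 5.0]   # hypothetical mean response per dose
intercept, slope = fit_line(dose_mg, response)
print(f"response = {intercept:.2f} + {slope:.4f} * dose")
```

Python 3.10 and later also provide `statistics.linear_regression(x, y)`, which returns the same slope and intercept.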

A central goal is managing variability. Since no two patients are identical, statistical methods are crucial for separating natural variation from genuine treatment effects. This ensures that when we conclude a drug works, the finding is reliable and generalizable.

Lifebit’s federated platform integrates these rigorous methods, enabling analysis across multiple data sources to increase sample sizes and improve the reliability of conclusions while keeping data secure.

Applying Data Models: Statistical vs. Machine Learning

Modern clinical trial data analysis uses both traditional statistical models and advanced machine learning (ML).

Statistical modeling is ideal for testing pre-defined hypotheses. Models like regression and ANOVA answer specific questions about efficacy and safety, providing clear, interpretable results (e.g., p-values, confidence intervals) that are easily explained to regulators. They are powerful and transparent, though they often require data to meet certain assumptions.

Machine learning, in contrast, excels at exploration and prediction, finding complex patterns in high-dimensional data like genomics or real-world evidence. ML is used for tasks like patient stratification, biomarker discovery, and image analysis. While some ML models can be less interpretable (a “black box”), the field of explainable AI is addressing this challenge.

| Feature | Statistical Modeling (e.g., Regression, ANOVA) | Machine Learning (e.g., Random Forest, Neural Networks) |
|---|---|---|
| Goals | Test pre-defined hypotheses, quantify relationships, explain phenomena | Predict outcomes, identify complex patterns, automate decision-making |
| Interpretability | Generally high (clear coefficients, p-values) | Often lower (“black box” models), but explainable AI is evolving |
| Data Assumptions | Often requires specific assumptions (e.g., normality, linearity) | Fewer strict assumptions, can handle high-dimensional, non-linear data |
| Common Use Cases | Efficacy assessment, safety analysis, dose-response studies, risk factor identification | Patient stratification, biomarker discovery, adverse event prediction, image analysis |

The best approach often combines both. Lifebit’s platform integrates statistical and ML tools, allowing researchers to use statistics to validate hypotheses generated by ML, or use ML to uncover new patterns. This synergy powers modern AI-driven clinical trials, accelerating the discovery of new therapeutic insights.

Key Considerations for Interpreting Analytical Results

Interpreting analytical results correctly is as important as the analysis itself. A primary consideration is understanding statistical significance (often a p-value < 0.05), which indicates a result is unlikely due to chance. However, p-values have limitations; a statistically significant effect may be too small to be practically useful.

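This is why clinical relevance is paramount. Does the finding make a tangible difference in patients’ lives? Confidence intervals help assess this by showing the magnitude and precision of an effect. When the entire confidence interval lies beyond the smallest difference patients would consider meaningful (the minimal clinically important difference, or MCID), the effect is both statistically and clinically meaningful.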
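One way to answer both questions together is to compare the confidence interval with zero and with a minimal clinically important difference (MCID), the smallest benefit patients would consider worthwhile. The sketch below makes three assumptions: the estimate is approximately normal with a known standard error, benefit is measured as a positive number, and the investigators chose the MCID in advance. The verdict wording is illustrative and is not a regulatory standard.

```python
from statistics import NormalDist


def interpret_effect(diff, se, mcid, level=0.95):
    """Compare a confidence interval for a treatment benefit with 0 and the MCID."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    lo, hi = diff - z * se, diff + z * se
    if lo > mcid:
        verdict = "statistically significant and clinically important"
    elif lo > 0 and hi < mcid:
        verdict = "statistically significant but smaller than the MCID"
    elif lo > 0:
        verdict = "statistically significant; clinical importance uncertain"
    elif hi > mcid:
        verdict = "inconclusive: a clinically important benefit is not ruled out"
    else:
        verdict = "not significant; a clinically important benefit is ruled out"
    return (lo, hi), verdict


# Hypothetical blood-pressure reductions (mmHg) against an assumed MCID of 5 mmHg.
for diff, se in [(8.0, 1.0), (1.0, 0.3), (4.0, 3.0)]:
    (lo, hi), verdict = interpret_effect(diff, se, mcid=5.0)
    print(f"{diff} [{lo:.1f}, {hi:.1f}]: {verdict}")
```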

Before a trial begins, power analysis and sample size determination are essential. Power is the study’s ability to detect a real effect. Too few participants can lead to a Type II error (a false negative), missing a real effect and making the trial ethically problematic. Too many participants can be wasteful and delay access to a new therapy. The goal is to balance the risk of a Type I error (a false positive) with the risk of a Type II error.
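For a two-arm trial that compares means, a textbook normal-approximation formula gives the number of participants per group: n = 2 × (z(1 − α/2) + z(1 − β))² × σ² / δ². Here z(·) is the standard normal quantile, δ is the smallest effect worth detecting, and σ is the outcome's standard deviation. The sketch below implements this formula with the Python standard library. The 5 mmHg effect and 15 mmHg standard deviation are assumed for illustration. Real calculations often use t-based or software-specific methods and inflate n for expected dropout.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Participants per arm to detect a difference in means of `delta`.

    Normal approximation, two-sided test, equal arm sizes:
        n = 2 * (z(1 - alpha/2) + z(power))**2 * sigma**2 / delta**2
    """
    z = NormalDist().inv_cdf
    n = 2 * ((z(1 - alpha / 2) + z(power)) * sigma / delta) ** 2
    return math.ceil(n)  # round up: a fraction of a patient cannot be enrolled


print(n_per_group(delta=5, sigma=15))               # 142
print(n_per_group(delta=5, sigma=15, power=0.90))   # 190
print(n_per_group(delta=2.5, sigma=15))             # halving delta roughly quadruples n
```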

Lifebit’s platform provides tools that go beyond p-values, helping researchers assess both statistical and clinical significance to make informed decisions that ultimately benefit patients.

Overcoming Problems: Common Pitfalls, Regulatory Mandates, and Modern Solutions

Clinical trial data analysis is fraught with challenges, from subtle biases to complex regulatory demands. This section covers the most common pitfalls, the regulatory standards you must meet, and the modern solutions that help overcome these obstacles.

Common Pitfalls and How to Avoid Them

Several pitfalls can compromise the integrity of a clinical trial analysis. Bias is a primary concern, appearing in forms like selection bias (e.g., recruiting healthier patients into the treatment arm) or reporting bias (selectively publishing positive results). Other common issues include:

- The Multiple Comparisons Problem: When analysts conduct many statistical tests (e.g., testing a drug’s effect across dozens of subgroups), the probability of finding a “statistically significant” result purely by chance increases dramatically. This can lead to false positives. To mitigate this, statistical analysis plans should pre-specify primary and secondary endpoints and use statistical correction methods like the Bonferroni correction or controlling the False Discovery Rate (FDR) when multiple exploratory tests are necessary.

- Regression Toward the Mean: This statistical phenomenon describes how extreme measurements tend to move closer to the average when measured a second time. For example, if a trial only enrolls patients with extremely high blood pressure, their blood pressure is likely to decrease on a subsequent measurement even without any treatment. A control group is essential to distinguish this effect from a true treatment effect.

- Underpowered Studies: As discussed earlier, studies with too few participants may fail to detect a real treatment effect, leading to a false negative conclusion.
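A set of p-values shows what these corrections do in practice. The sketch below applies the Bonferroni correction and the Benjamini-Hochberg procedure, one standard way to control the FDR, to eight hypothetical subgroup p-values. Without correction, five fall below 0.05. Bonferroni keeps one of them and Benjamini-Hochberg keeps two.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H_i if p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank  # largest rank whose p-value is under its threshold
    rejected = set(order[:k_max])
    return [i in rejected for i in range(m)]


p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]  # hypothetical subgroups
print(sum(x < 0.05 for x in p))    # 5 "significant" before correction
print(sum(bonferroni(p)))          # 1
print(sum(benjamini_hochberg(p)))  # 2
```

Bonferroni is the stricter of the two and suits a small number of key confirmatory tests. FDR control tolerates a small proportion of false discoveries, which is often acceptable in exploratory subgroup or biomarker screens.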

Avoiding these traps requires rigorous study design and disciplined analysis. Best practices include:

- Randomization to ensure unbiased group assignment.

- Blinding (single or double) to prevent patient or investigator expectations from influencing results.

- Pre-specified statistical analysis plans (SAPs) to prevent “p-hacking” or “data fishing” for favorable results.

- Carefully planned interim analyses with pre-defined stopping rules to assess efficacy, harm, or futility.

- Clear definitions of endpoints and patient populations before the trial begins.
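Randomization is often implemented with permuted blocks, which keep the arms balanced as enrollment proceeds. This Python sketch is illustrative only, and the arm labels, block size and seed are assumptions. A real trial would generate its allocation list in a validated system with allocation concealment. It would also typically vary the block size so that investigators cannot predict the next assignment.

```python
import random


def block_randomize(n_participants, block_size=4, arms=("A", "B"), seed=None):
    """Permuted-block allocation list: each block contains every arm equally often."""
    if block_size % len(arms):
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    schedule = []
    while len(schedule) < n_participants:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)  # random order within each block
        schedule.extend(block)
    return schedule[:n_participants]


allocation = block_randomize(12, seed=2024)
print(allocation)
```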

Key Regulatory Considerations for Clinical Trial Data Analysis

Clinical trials operate under strict regulatory oversight to ensure patient safety and data integrity. Compliance is non-negotiable. Key standards and regulations include:

- FDA and EMA Guidelines: These agencies provide comprehensive guidance on trial design, conduct, analysis, and reporting required for market authorization.

- Good Clinical Practice (GCP): An international ethical and scientific quality standard (ICH E6) that protects patient rights and ensures the credibility and accuracy of trial data.

- CDISC Standards: The Clinical Data Interchange Standards Consortium provides a global framework for standardizing clinical trial data. Key models include:

  - SDTM (Study Data Tabulation Model): Standardizes the organization and format of raw clinical trial data collected from case report forms, making it easier for regulators to review.

  - ADaM (Analysis Data Model): Standardizes the structure of analysis-ready datasets, creating a clear link between the raw data (SDTM), the analysis, and the final results. Adherence to CDISC is now a requirement for submissions to the FDA and Japan’s PMDA.

- Data Security and Privacy (GDPR, HIPAA): Strict regulations governing the protection of sensitive patient information. This requires robust security measures like encryption, access controls, and data anonymization or pseudonymization.

- Audit Trails and Documentation: A complete, time-stamped record of all data collection, changes, and analytical decisions must be maintained. This ensures full traceability and allows regulators to reconstruct the analysis to validate the findings.
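Pseudonymization is commonly done by replacing direct identifiers with a keyed hash. The same patient always maps to the same token, but without the key the token cannot be traced back to the patient. The Python sketch below uses HMAC-SHA256 from the standard library. Its key handling is simplified for illustration, and the record number is hypothetical. Under GDPR, pseudonymized data still counts as personal data, so the key must be kept separately and under access control.

```python
import hashlib
import hmac
import secrets


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    The same ID and key always give the same token, so records remain linkable;
    without the key, the token cannot feasibly be linked back to the ID.
    """
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustration only: in practice the key lives in a separate, access-controlled
# key store, never alongside the pseudonymized data.
key = secrets.token_bytes(32)
token = pseudonymize("MRN-0012345", key)  # hypothetical medical record number
print(token == pseudonymize("MRN-0012345", key))  # True: deterministic linkage
```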

Platforms like Lifebit are built to enforce these standards, providing a compliant environment that simplifies the path to regulatory submission.

Technological Advancements and Modern Tools

Modern tools are revolutionizing clinical trial data analysis. AI and machine learning algorithms are now essential for identifying complex patterns in high-dimensional data and predicting patient responses.

Integrating Real-World Data (RWD) from sources like electronic health records (EHRs), insurance claims, and patient registries is another key trend. The evidence derived from RWD, known as Real-World Evidence (RWE), provides a broader view of a therapy’s long-term safety and effectiveness in diverse, everyday clinical settings. RWE is increasingly used to supplement traditional trial data, for example, by creating synthetic control arms for rare disease trials where recruiting a placebo group is difficult, or for post-market safety monitoring.

Perhaps the most transformative technology is federated learning. Rather than centralizing sensitive patient data, with all the privacy risks and logistical hurdles that entails, this approach brings the analysis to where the data lives. Analytical models are sent to each participating institution and trained locally on the secure data. Only the resulting model updates or summary statistics are sent back to a central server for aggregation; the patient-level data itself never leaves the site. This enables analysis across distributed datasets while supporting compliance with GDPR and HIPAA. This model is central to Lifebit’s platform.
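Federated averaging (FedAvg) is one widely used algorithm for this pattern. The Python sketch below simulates three sites in a single process. Each site runs a few steps of gradient descent on its own data. The server only ever sees the resulting model parameters and sample counts, which it averages with weights proportional to site size. The linear model, synthetic data and hyperparameters are assumptions for illustration, and this is not Lifebit's implementation. Production systems add protections such as secure aggregation, because model updates can still leak information.

```python
import random


def make_site(n, rng, w_true=2.0, b_true=1.0, noise=0.05):
    """Hypothetical site data: y = w_true * x + b_true + Gaussian noise."""
    xs = [rng.random() for _ in range(n)]
    ys = [w_true * x + b_true + rng.gauss(0, noise) for x in xs]
    return xs, ys


def local_update(w, b, xs, ys, lr=0.2, steps=10):
    """Gradient descent on mean squared error, run entirely inside one site."""
    n = len(xs)
    for _ in range(steps):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2 * sum(errs) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b, n  # only parameters and a count leave the site


def federated_averaging(sites, rounds=50):
    w, b = 0.0, 0.0  # global model held by the coordinating server
    for _ in range(rounds):
        updates = [local_update(w, b, xs, ys) for xs, ys in sites]
        total = sum(n for _, _, n in updates)
        w = sum(wi * n for wi, _, n in updates) / total  # size-weighted average
        b = sum(bi * n for _, bi, n in updates) / total
    return w, b


rng = random.Random(7)
sites = [make_site(n, rng) for n in (40, 75, 120)]
w, b = federated_averaging(sites)
print(f"slope={w:.2f} intercept={b:.2f}")  # close to the true 2.0 and 1.0
```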

Other key technologies include:

- Cloud Computing: Provides the on-demand, scalable power needed to process massive datasets and run complex models without large upfront infrastructure investments.

- Natural Language Processing (NLP): Unlocks insights from unstructured text data like clinical notes, pathology reports, and scientific literature, which constitute a vast, untapped source of information.

These advancements are fundamentally changing research, enabling faster, more secure, and more insightful analysis to bring new therapies to patients.

Frequently Asked Questions about Clinical Trial Data Analysis

What is the difference between statistical significance and clinical significance?

Statistical significance (usually a p-value < 0.05) indicates that an effect at least as large as the one observed would be unlikely if the treatment truly had no effect. It suggests the treatment likely had some effect, but it says nothing about how large that effect is.

Clinical significance (or clinical relevance) asks a different question: is the effect large enough to make a real difference to a patient’s health or quality of life?

For example, a drug might reduce blood pressure by 1 mmHg. In a large trial, this result could be statistically significant. However, such a small reduction is clinically insignificant because it doesn’t meaningfully improve patient outcomes. Conversely, a large, clinically important effect might not reach statistical significance in a small, underpowered study. Both types of significance must be considered to make sound decisions, often using confidence intervals to assess the magnitude of the effect.
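A quick calculation confirms the blood pressure example. The sketch below assumes a standard deviation of 15 mmHg in both arms and uses a normal approximation. The same 1 mmHg difference is far from significant with 50 patients per arm and highly significant with 5,000 per arm, although its clinical importance is identical in both cases.

```python
import math


def two_sample_z(mean_diff, sd, n_per_arm):
    """Two-sided p-value for a difference in means (normal approximation,
    equal standard deviations and equal arm sizes assumed)."""
    se = sd * math.sqrt(2 / n_per_arm)
    z = mean_diff / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


for n in (50, 5000):
    z, p = two_sample_z(mean_diff=1.0, sd=15.0, n_per_arm=n)
    print(f"n per arm = {n}: z = {z:.2f}, p = {p:.4f}")
```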

What is an intention-to-treat (ITT) analysis?

Intention-to-treat (ITT) analysis is a core principle in clinical trial data analysis. It states that all participants should be analyzed in the group to which they were originally and randomly assigned, regardless of whether they completed the treatment, dropped out, or switched groups.

This method is crucial because it preserves the integrity of randomization, which is what allows researchers to make unbiased comparisons between treatment and control groups. Removing participants who don’t adhere to the protocol can introduce bias, as these individuals may be systematically different from those who remain.

ITT provides a more realistic estimate of a treatment’s effectiveness in a real-world setting, where patient adherence is never perfect, and in superiority trials it tends to be conservative. For this reason, regulators such as the FDA and EMA, following the ICH E9 guideline, expect the ITT principle to underpin the primary efficacy analysis of superiority trials.
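A toy example shows how ITT differs from a per-protocol analysis. In the hypothetical treatment arm below, the two participants who stopped treatment did not respond. Excluding them, as a per-protocol analysis does, lifts the response rate from 60% to 75%, which is exactly the kind of inflation ITT is designed to prevent. The field names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    assigned_arm: str  # arm allocated at randomization
    adhered: bool      # completed treatment as per protocol
    responded: bool    # primary outcome


def response_rate(group):
    return sum(p.responded for p in group) / len(group)


def itt_vs_per_protocol(participants, arm):
    itt = [p for p in participants if p.assigned_arm == arm]  # everyone, as randomized
    per_protocol = [p for p in itt if p.adhered]              # drops non-adherent participants
    return response_rate(itt), response_rate(per_protocol)


# Hypothetical treatment arm: 8 adherent (6 responders) and 2 who stopped treatment.
participants = ([Participant("treatment", True, True) for _ in range(6)]
                + [Participant("treatment", True, False) for _ in range(2)]
                + [Participant("treatment", False, False) for _ in range(2)])
itt, pp = itt_vs_per_protocol(participants, "treatment")
print(f"ITT response rate: {itt:.0%}, per-protocol: {pp:.0%}")  # 60% vs 75%
```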

Why is sample size calculation so important before a trial begins?

Sample size calculation is critical for the scientific, ethical, and financial success of a clinical trial. It determines the number of participants needed to achieve sufficient statistical power—the probability of detecting a real treatment effect if one exists.

- An underpowered study (too few participants) risks missing a true effect (a false negative, or Type II error). This is unethical, as participants were exposed to risk without the study being able to yield a conclusive result.

- An overpowered study (too many participants) is also unethical and wasteful, as it exposes more people than necessary to potential risks and delays the trial’s completion.

Calculating the correct sample size involves estimating the expected effect size, data variability, and desired power (typically 80% or higher). Getting this right ensures the trial is efficient, ethical, and capable of producing a reliable answer, which accelerates the path to getting effective therapies to patients.

Conclusion: From Data to Discovery

Clinical trial data analysis is the vital process that turns raw data into life-saving therapies. From data collection and cleaning to advanced modeling and interpretation, every step is critical for determining if a new treatment is safe and effective. The quality of this analysis directly impacts how quickly new treatments reach patients.

The field is evolving to handle massive, distributed datasets from genomics, wearables, and EHRs. Traditional, centralized data analysis is becoming impractical due to privacy regulations and logistical complexity. The future requires faster, smarter, and more secure methods.

Federated analytics and AI are leading this change. By analyzing data securely where it resides—without moving it—this approach accelerates evidence generation while ensuring compliance with GDPR and HIPAA. At Lifebit, our federated AI platform is built on this vision, enabling clinical trial data analysis at scale across distributed biomedical data. Our Trusted Research Environment brings the analysis to the data, allowing researchers to gain real-time insights without compromising security.

This technology is already collapsing research timelines from years to months, unlocking insights from siloed data and getting therapies to patients faster. By combining rigorous science with cutting-edge technology, we are pushing the boundaries of medical research.

If you’re looking to accelerate your clinical research while maintaining the highest standards of security and compliance, learn more about our trusted research environment and see how federated analytics can transform your approach to clinical trial data analysis.