Seamless Data Flow: Understanding Integration Platforms

Data integration platform: Seamless 2025

Why Data Integration Platforms Matter for Modern Organizations

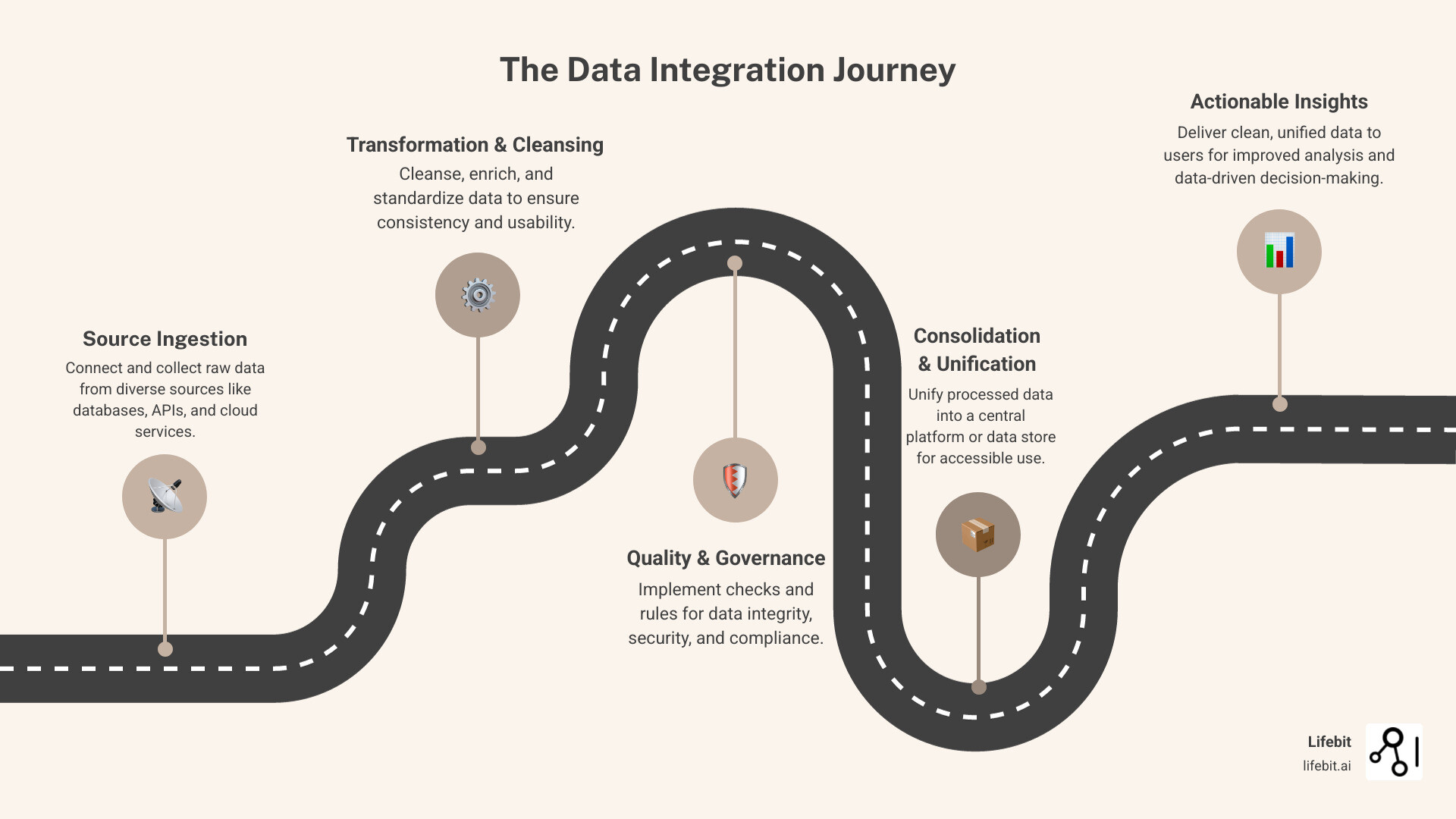

A data integration platform is software that combines data from multiple sources into a unified format for analysis. These platforms automate extracting, transforming, and loading data while ensuring quality and consistency.

Key components of data integration platforms:

- Data connectivity – Connect to databases, APIs, cloud services, and files

- Data transformation – Clean, standardize, and enrich data automatically

- Batch and real-time processing – Handle both scheduled batch loads and streaming data

- Governance controls – Ensure data quality, security, and compliance

- Unified access – Provide a single source of truth for all stakeholders

The challenge is real: many organizations struggle with data silos, yet the payoff from breaking them down can be substantial. Publicis Groupe, for example, increased campaign revenue by 50% by integrating disconnected data. Without these platforms, teams waste hours manually combining data, leading to delayed insights, missed opportunities, and frustrated stakeholders.

Modern data integration platforms solve this by creating seamless data flows that automatically keep information current, clean, and accessible across your organization.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. I’ve spent over 15 years building computational biology tools and data integration solutions for precision medicine and biomedical research, from genomic analysis frameworks to federated platforms that provide secure, real-time access to complex healthcare datasets.

What is a Data Integration Platform and Why is it Crucial?

Working with business data without integration is like solving a jigsaw puzzle with pieces scattered across different rooms. A data integration platform brings those pieces together to create a clear, complete view of your organization.

At its core, a data integration platform is software that consolidates information from all your different systems (CRM, ERP, marketing tools) and makes it useful. It acts as a bridge, pulling scattered information into a cohesive whole. It doesn’t just move data; it transforms it so everything speaks the same language, even as your data changes in real time.

Automation is where these platforms excel. Manually combining data is time-consuming and error-prone. One study found that organizations using leading integration platforms achieved a 347% ROI by automating data processes. By eliminating manual work, your teams can focus on making smart decisions and driving growth.

The ultimate goal is establishing a single source of truth. When everyone operates from the same, consistent data, collaboration improves and strategic decisions are built on solid ground. This unified approach leads to improved efficiency, as marketing can see real-time sales data, customer service can access complete customer histories, and executives get the full picture without manual report compilation.

To dive deeper into how connecting your data can transform your operations, explore our insights on Data Integration.

The Core of a Modern Data Integration Platform

Modern data integration platforms are built on powerful capabilities that make your data smarter and more useful.

- ETL (Extract, Transform, Load) is a traditional approach where data is extracted, cleaned and standardized in a staging area, and then loaded into a data warehouse. The “Transform” stage is critical, involving tasks like converting data types, joining data from different sources, aggregating values, and deriving new calculated fields. It ensures only clean, structured, and business-ready data enters your analytics environment, making it ideal for predictable, report-driven workflows.

- ELT (Extract, Load, Transform) is a newer method where raw data is extracted and loaded immediately into the destination system, typically a cloud data warehouse like Snowflake, BigQuery, or Redshift. The transformation happens within the target system, using its powerful processing capabilities. This approach is often faster, more flexible, and better for handling large, diverse data volumes, as it allows data scientists and analysts to work with the raw data directly before deciding on the final transformations.

- Data ingestion is the first step of collecting raw information from various sources. This can happen in batches (e.g., importing daily sales files), micro-batches (e.g., processing logs every five minutes), or via real-time streaming (e.g., capturing user clicks from a website). It’s simply about gathering the ingredients before you start cooking.

- Data cleansing and quality checks are crucial because raw data is rarely perfect. These tools automatically identify errors, remove duplicates using fuzzy matching, validate formats (e.g., for emails or phone numbers), and flag outliers. By embedding these checks into the integration pipeline, you prevent the “garbage in, garbage out” problem and build trust in your data.

- API management keeps your modern, app-driven world connected. A data integration platform provides capabilities to manage the security, quality, and real-time data exchange between all your business tools via their APIs. This involves creating, publishing, and monitoring APIs (like REST or GraphQL) that allow applications to communicate in a standardized and secure manner.

- Real-time data sync ensures your information is always current. Using techniques like Change Data Capture (CDC), which reads database transaction logs to capture only the changes, and event-driven architectures, the platform keeps everything up to date in near real time. This is a stark contrast to traditional batch processing, which might only update data once a day.
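To make the ETL and cleansing steps above more concrete, here is a minimal sketch using only the Python standard library. The records, field names, and the 0.9 similarity threshold are hypothetical, and `difflib.SequenceMatcher` is a simple stand-in for the fuzzy-matching engines commercial platforms use. The sketch extracts raw rows, standardizes and validates them in a separate transform step, drops near-duplicates, and only then loads the clean result into an in-memory SQLite table.

```python
import re
import sqlite3
from difflib import SequenceMatcher

# Hypothetical raw records, e.g. a CRM export merged with web-form sign-ups.
RAW_RECORDS = [
    {"name": "Ada Lovelace", "email": "ADA@example.com ", "spend": "120.50"},
    {"name": "Ada  Lovelace", "email": "ada@example.com", "spend": "80"},
    {"name": "Alan Turing", "email": "not-an-email", "spend": "42"},
    {"name": "Grace Hopper", "email": "grace@example.org", "spend": "300"},
]

# Deliberately simple format check; real validators are stricter.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def extract():
    """Extract: a real pipeline would read from APIs, files, or databases."""
    return list(RAW_RECORDS)


def transform(records, similarity=0.9):
    """Transform: standardize, validate, and drop duplicates before loading."""
    clean, rejected = [], []
    for rec in records:
        name = " ".join(rec["name"].split())  # collapse repeated whitespace
        email = rec["email"].strip().lower()  # standardize case and padding
        if not EMAIL_RE.match(email):
            rejected.append(rec)  # quarantine invalid rows for review
            continue
        row = {"name": name, "email": email, "spend": float(rec["spend"])}
        # Duplicate if the email matches exactly or the name is a near match.
        is_dup = any(
            row["email"] == kept["email"]
            or SequenceMatcher(None, row["name"], kept["name"]).ratio() >= similarity
            for kept in clean
        )
        if not is_dup:
            clean.append(row)  # keep the first occurrence
    return clean, rejected


def load(rows, conn):
    """Load: only clean, business-ready rows reach the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(name TEXT, email TEXT PRIMARY KEY, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (:name, :email, :spend)", rows)
    conn.commit()


def run_etl(conn):
    clean, rejected = transform(extract())
    load(clean, conn)
    return len(clean), len(rejected)


if __name__ == "__main__":
    print(run_etl(sqlite3.connect(":memory:")))  # (2, 1)
```

Invalid rows are quarantined rather than silently discarded, so someone can review them. Matching on name similarity alone can merge genuinely different people, which is why production matching usually weighs several fields together.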

Key Business Benefits

The strategic advantages of a data integration platform improve everything from daily tasks to long-term strategy.

- A unified view of operations emerges when you consolidate data, breaking down frustrating data silos and providing a 360-degree picture of your business. For example, a retail company can integrate online sales data, in-store purchase history, and customer support tickets to understand the complete customer journey and identify cross-sell opportunities.

- Improved decision-making becomes natural when leaders have high-quality, real-time information, leading to better strategic outcomes. A logistics company, for instance, can integrate weather data, traffic reports, and its own fleet GPS information to optimize delivery routes in real time, reducing fuel costs and improving delivery times.

- Increased operational efficiency is an immediate benefit, as automation frees teams from manual data work to focus on value-added tasks. A finance department can automate the consolidation of financial data from various subsidiaries into a single report, closing the books in days instead of weeks.

- Improved data quality and consistency means you can trust your numbers. The platform enforces governance and cleansing, turning messy data into a reliable asset. This is critical for regulatory reporting, where inaccuracies can lead to significant fines.

- Scalability for data growth future-proofs your investment, allowing the platform to grow with your data volumes without compromising performance. A startup that begins with a few gigabytes of data can scale to petabytes on the same platform as it grows, without needing to re-architect its entire data infrastructure.

- Better customer experiences result from integrating customer data across all touchpoints, enabling personalized and superior service. A bank can integrate a customer’s transaction history, mobile app usage, and call center interactions to offer proactive support and relevant financial products.

Types of Data Integration Tools and Approaches

The world of data integration isn’t one-size-fits-all. Selecting the right data integration platform approach depends on your specific needs, technical environment, and goals. Some organizations need a quick fix to connect two systems, while others require a comprehensive overhaul of their data architecture.

Common Data Integration Methods

Each method for connecting data sources has its own strengths and ideal use cases.

- Application-based integration uses connectors to link applications directly. It works for simple, point-to-point connections but can become complex and brittle as more systems are added, leading to a messy “spaghetti architecture” that is difficult to maintain and scale.

- Middleware data integration acts as a translator between different systems. It sits between applications, converting data formats and protocols so they can communicate. A classic example is an Enterprise Service Bus (ESB), a centralized hub for routing, transformation, and messaging that is useful for bridging legacy and cloud systems.

- Uniform access integration (data virtualization) creates a virtual layer to query data from multiple sources without physically moving it. This is valuable for real-time access to distributed data without the overhead of copying and storage. However, it can create performance bottlenecks if underlying source systems are slow and is not ideal for complex historical analysis that requires heavy processing.

- Common data storage is the traditional warehouse approach, gathering all data into a centralized repository such as a data warehouse, data lake, or Data Lakehouse. A data warehouse stores structured, filtered data for business intelligence, a data lake stores raw data of all types, and a lakehouse combines low-cost lake storage with warehouse-style management and querying. Either way, the result is a single source of truth for comprehensive analytics.
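The uniform access (virtualization) approach can be sketched in a few lines. In this illustration, all names are hypothetical: `VirtualView` answers a revenue question by querying an orders database and a stand-in CRM function at request time, then joining the results in memory. No copy of either source is stored, which is also why the view can only be as fast as its slowest source.

```python
import sqlite3


def make_orders_db():
    """Hypothetical operational database holding orders."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)", [(1, 50.0), (1, 25.0), (2, 10.0)]
    )
    return conn


def fetch_crm_customers():
    """Stand-in for a hypothetical CRM API call returning live customer records."""
    return [{"id": 1, "name": "Acme Ltd"}, {"id": 2, "name": "Globex"}]


class VirtualView:
    """Minimal virtualization layer: every query goes back to the live sources."""

    def __init__(self, orders_conn, crm_fetch):
        self.orders_conn = orders_conn
        self.crm_fetch = crm_fetch

    def revenue_by_customer(self):
        # Push the aggregation down to the source database...
        totals = dict(
            self.orders_conn.execute(
                "SELECT customer_id, SUM(amount) FROM orders GROUP BY customer_id"
            ).fetchall()
        )
        # ...then join with the CRM data in memory; nothing is persisted.
        return {c["name"]: totals.get(c["id"], 0.0) for c in self.crm_fetch()}


view = VirtualView(make_orders_db(), fetch_crm_customers)
print(view.revenue_by_customer())  # {'Acme Ltd': 75.0, 'Globex': 10.0}
```

Because nothing is cached, a new order inserted into the source shows up on the very next call, which is the main appeal of virtualization for real-time access.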

Categories of Integration Tools

Specific tools have evolved to handle different aspects of the integration challenge.

- ETL Tools are the workhorses of data integration, excelling at the Extract, Transform, Load process. Tools like Informatica PowerCenter and IBM DataStage offer robust capabilities for complex data transformations and workflow management, often used in large enterprise environments.

- Integration Platform as a Service (iPaaS) solutions are the modern, cloud-native approach. Offered “as a Service,” these platforms are managed by the vendor, priced via subscription, and accessed through a web browser. Tools like MuleSoft, Boomi, and Zapier provide pre-built connectors, low-code environments, and governance to manage integration flows between cloud and on-premise applications.

- Data Preparation Tools focus on getting data ready for analysis. They excel at cleaning, standardizing, and enriching information, often with user-friendly, spreadsheet-like interfaces. Tools like Trifacta and Alteryx empower business users and “citizen integrators” to perform their own data wrangling without writing code.

- Data Migration Tools serve a specialized role in moving large volumes of data during one-time events like system upgrades, cloud migrations, or mergers. They are designed for high-volume, point-in-time data transfers rather than continuous, operational integration.

- Open-source orchestration tools like Apache Airflow and Dagster offer flexible, code-first frameworks for building custom data workflows. They allow organizations to define, schedule, and monitor complex data processes as code, providing immense flexibility and control for data engineering teams.

- Reverse ETL Tools represent a newer, increasingly important category. While traditional ETL/ELT moves data into a warehouse for analysis, Reverse ETL does the opposite: it syncs insights and enriched data from the warehouse back into operational systems like CRMs (Salesforce), marketing platforms (HubSpot), and advertising networks (Google Ads). Tools like Census and Hightouch operationalize data, ensuring that business teams are acting on the latest insights.
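Reverse ETL boils down to selecting fresh rows from the warehouse, mapping them onto the destination tool’s schema, and pushing them out. The following is a minimal sketch under stated assumptions: the `customer_scores` table, the churn threshold of 0.6, and the CRM payload shape are all hypothetical, and a plain list stands in for a real CRM API client (it does not reflect how Census, Hightouch, or any specific CRM API works).

```python
import sqlite3


def build_warehouse():
    """Hypothetical warehouse table holding model-derived churn scores."""
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE customer_scores (email TEXT, churn_risk REAL, updated_at TEXT)")
    wh.executemany(
        "INSERT INTO customer_scores VALUES (?, ?, ?)",
        [
            ("ada@example.com", 0.82, "2025-01-02"),
            ("grace@example.org", 0.10, "2025-01-02"),
            ("alan@example.net", 0.67, "2025-01-01"),
        ],
    )
    return wh


def to_crm_payload(email, churn_risk):
    """Map warehouse columns onto a hypothetical CRM contact schema."""
    tier = "high" if churn_risk >= 0.6 else "low"
    return {"email": email, "properties": {"churn_risk_tier": tier}}


def reverse_etl(wh, send, since):
    """Sync rows updated since `since` (ISO date string) into an operational tool."""
    rows = wh.execute(
        "SELECT email, churn_risk FROM customer_scores "
        "WHERE updated_at >= ? ORDER BY email",
        (since,),
    ).fetchall()
    for email, risk in rows:
        send(to_crm_payload(email, risk))
    return len(rows)


sent = []  # stand-in for a CRM client; a real sync would call the vendor's API
count = reverse_etl(build_warehouse(), sent.append, since="2025-01-02")
print(count, sent[0]["properties"])  # 2 {'churn_risk_tier': 'high'}
```

Syncing only rows changed since the last run keeps API usage low; real tools also handle retries, rate limits, and field-level conflicts, which this sketch leaves out.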

Most organizations build a data integration platform strategy that combines multiple methods and tools to create a comprehensive solution that grows with their needs.

How to Choose the Right Data Integration Platform

Choosing the right data integration platform means finding a partner for your data journey that meets current needs and supports future ambitions. Start by assessing your business pain points and technical requirements. Consider your current systems, data volumes, and long-term vision. A platform that scales with your growth and adapts to new technologies is a worthwhile investment.

When evaluating vendors, look beyond marketing to user experiences and analyst reports. The best platforms consistently earn high ratings from users facing similar challenges.

| Key Selection Criteria | Description |
|---|---|
| Connectivity | Number and type of pre-built connectors to various data sources (databases, SaaS apps, APIs). |
| Scalability | Ability to handle growing data volumes and complexity without performance degradation. |
| Data Quality | Features for data cleansing, validation, transformation, and consistency. |
| Governance | Capabilities for metadata management, data lineage, access control, and compliance. |
| Performance | Speed and efficiency of data processing, especially for real-time or large batch operations. |
| Ease of Use | User interface, low-code/no-code options, and intuitive design for various user types. |
| Security | Data encryption, access controls, certifications (e.g., SOC 2), and regulatory compliance (e.g., HIPAA, GDPR). |
| Support | Quality of vendor support, community, documentation, and training resources. |
| Cost | Pricing model, total cost of ownership (TCO), and potential hidden costs. |
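A common way to apply criteria like these is a weighted scorecard. The sketch below is only an illustration: the weights and the 1-to-5 vendor ratings are hypothetical, and your own weights should reflect which criteria matter most to your organization.

```python
import math

# Hypothetical weights for the selection criteria; they must sum to 1.0.
WEIGHTS = {
    "connectivity": 0.20, "scalability": 0.15, "data_quality": 0.15,
    "governance": 0.10, "performance": 0.10, "ease_of_use": 0.10,
    "security": 0.10, "support": 0.05, "cost": 0.05,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine 1-5 ratings per criterion into a single weighted score."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1.0")
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(weights[k] * scores[k] for k in weights), 2)


# Hypothetical ratings, e.g. collected during a proof of concept.
candidates = {
    "Vendor A": {**{k: 4 for k in WEIGHTS}, "cost": 2},
    "Vendor B": {**{k: 3 for k in WEIGHTS}, "connectivity": 5},
}
ranking = sorted(
    ((name, weighted_score(s)) for name, s in candidates.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
print(ranking)  # [('Vendor A', 3.9), ('Vendor B', 3.4)]
```

A scorecard makes trade-offs explicit (here, Vendor A wins despite a poor cost rating), but it is only as good as the ratings behind it, so ground them in hands-on testing and reference calls.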

Key Factors for Evaluation

Let’s dive deeper into what matters when evaluating your options.

- Connectivity and connectors: A rich connector library is a primary accelerator. Beyond just the number, assess the quality and depth of the connectors. Do they support just basic data extraction, or can they handle incremental updates (via CDC), metadata, and complex API schemas? Check for robust, well-maintained connectors to your specific mission-critical systems (e.g., Salesforce, SAP, NetSuite) and modern data stack components (e.g., Snowflake, Databricks, dbt).

- Scalability and performance: Your data needs will grow, so the platform must grow with you. Evaluate the underlying architecture. Is it distributed and cloud-native (for example, running on container orchestration such as Kubernetes) so that it can scale horizontally to handle increased load? Ask for performance benchmarks and case studies with data volumes and velocities similar to your own. The platform should be able to handle both high-throughput batch processing of terabytes and low-latency real-time streaming of millions of events.

- Data quality and governance features: This is non-negotiable for building trust in your data. Look for built-in data profiling to automatically scan and understand your data’s health, customizable validation rules to enforce business logic, and automated cleansing workflows. For governance, data lineage is critical. Can you visually trace a data point from its source system, through all transformations, to its final destination in a report? This capability is essential for debugging, impact analysis, and proving compliance with regulations like GDPR.

- Security and compliance: Your data is a valuable asset and must be protected. Go beyond a simple checklist of certifications. Does the platform support granular, role-based access controls (RBAC) so that users see only the data they are authorized to access? Does it offer advanced features like data masking and tokenization to protect sensitive PII? In addition to standard certifications like SOC 2 and ISO 27001, verify compliance with industry-specific mandates like HIPAA in healthcare or PCI DSS for payment card data.

- Total cost of ownership (TCO): The subscription or license fee is just the tip of the iceberg. Factor in costs for implementation (professional services), training for your team, infrastructure (if self-hosted), and ongoing maintenance. A platform with a steep learning curve that requires specialized developers can significantly increase TCO. Conversely, a low-code platform with strong automation can reduce reliance on expensive engineering resources and deliver a faster return on investment.

- Implementation and support: A great platform with poor support is a recipe for failure. Evaluate the vendor’s support model, including their service-level agreements (SLAs) and access to expert help. Look for comprehensive, well-organized documentation, active user communities (forums, Slack channels), and a rich library of training resources. A user-friendly, low-code/no-code interface can accelerate adoption by business users, but ensure there’s also a robust SDK or API for developers to handle complex, custom integrations when needed.
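To show what role-based access combined with masking looks like in practice, here is a minimal sketch. The roles, columns, and key are hypothetical, and keyed hashing (HMAC-SHA256) is used as a simple stand-in for tokenization; many production systems instead use a token vault that can map tokens back to original values for authorized users.

```python
import hashlib
import hmac

# Hypothetical role policy: columns each role may see in clear text or as tokens.
ROLE_POLICY = {
    "analyst": {"visible": {"region", "spend"}, "tokenized": {"email"}},
    "support": {"visible": {"region", "spend", "email"}, "tokenized": set()},
}

# Assumption: in practice the key comes from a secrets manager and is rotated.
SECRET_KEY = b"demo-key-do-not-use"


def tokenize(value):
    """Keyed hash: the same input always yields the same token, so joins still work."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def apply_policy(row, role):
    """Return a copy of `row` filtered and masked for `role` (deny by default)."""
    policy = ROLE_POLICY.get(role)
    if policy is None:
        raise PermissionError(f"unknown role: {role}")
    out = {}
    for col, val in row.items():
        if col in policy["visible"]:
            out[col] = val
        elif col in policy["tokenized"]:
            out[col] = tokenize(str(val))
        # Any column not named in the policy (e.g. "ssn") is dropped.
    return out


record = {"email": "ada@example.com", "ssn": "123-45-6789", "region": "EU", "spend": 120.5}
print(apply_policy(record, "analyst"))  # email replaced by a 16-char token, ssn removed
print(apply_policy(record, "support"))  # email visible, ssn still removed
```

The deny-by-default rule matters: a new sensitive column added upstream stays hidden until someone explicitly grants access to it.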

The Role of a Modern Data Integration Platform in Complex Environments

Today’s data integration platform must handle data that is bigger, more complex, and more critical than ever.

- Handling big data requires specialized capabilities for processing massive datasets efficiently, using distributed processing and scalable storage.

- Organizations working with Real World Data in healthcare and life sciences must combine electronic health records, claims, and patient data into meaningful insights.

- Enabling Precision Medicine requires integrating clinical, genomic, lifestyle, and environmental data to create personalized treatment approaches.

- Managing complex data types like Genomics presents unique challenges, as these datasets are massive and require specialized processing.

At Lifebit, our federated AI platform is built for these complex biomedical data challenges. Our platform includes the Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) to deliver secure, real-time insights across global biomedical datasets, enabling large-scale, compliant research for biopharma, governments, and public health agencies.

Common Challenges and Advanced Capabilities

Implementing a data integration platform isn’t always smooth sailing. Even with the best tools, you may face challenges. Fortunately, modern platforms have evolved to tackle these obstacles head-on.

Overcoming Implementation Problems

The most common roadblocks are often fundamental, not technical.

- Data findability: You can’t integrate what you can’t find. Modern platforms solve this with integrated data catalogs, which act as a searchable inventory of all your data assets. They automatically capture metadata, making it easy for users to discover and understand available datasets.

- Poor data quality: The “garbage in, garbage out” principle holds true. Duplicate entries, missing values, or inconsistent formats can derail a project. Platforms tackle this with automated data profiling, cleansing, and validation rules that are applied in-flight, ensuring only high-quality data reaches its destination.

- Disparate formats: Robust platforms handle everything from structured tables in relational databases to unstructured text, semi-structured JSON/XML, and complex binary formats like genomic data. They provide powerful transformation engines to automatically parse and map these varied formats into a unified schema.

- System compatibility: Connecting modern cloud applications with decades-old legacy systems is a common headache. Modern platforms use a wide array of specialized connectors and middleware to bridge these gaps. For API-less legacy systems, they may use techniques like database log scraping or even robotic process automation (RPA) as a last resort.

- Managing data volume: As data grows exponentially, platforms must scale without failing. Platforms built for scale use cloud-native, distributed architectures that can dynamically allocate resources, ensuring performance remains consistent whether you’re processing gigabytes or petabytes.

- Securing stakeholder buy-in: Convincing executives to invest requires showing clear business value. The best approach is to start with a pilot project or proof-of-concept (PoC) that targets a high-impact, low-complexity use case. Demonstrating a quick win—such as automating a painful manual report—can build momentum and justify a larger investment.
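Handling disparate formats usually means writing one small adapter per source that maps its fields onto a shared schema, then applying common standardization rules once. A minimal sketch follows; the CSV and JSON sources, field names, and code mappings are hypothetical.

```python
import csv
import io
import json

# Two hypothetical sources describing the same entity with different
# field names and formats.
CSV_SOURCE = "patient_id,dob,sex\nP001,1980-04-12,F\nP002,1975-11-30,M\n"
JSON_SOURCE = '[{"id": "p003", "birthDate": "1990-01-05", "gender": "female"}]'

SEX_CODES = {"f": "F", "female": "F", "m": "M", "male": "M"}


def from_csv(text):
    """Adapter: map CSV columns onto the unified field names."""
    for rec in csv.DictReader(io.StringIO(text)):
        yield {"patient_id": rec["patient_id"], "birth_date": rec["dob"], "sex": rec["sex"]}


def from_json(text):
    """Adapter: map JSON keys onto the unified field names."""
    for rec in json.loads(text):
        yield {"patient_id": rec["id"], "birth_date": rec["birthDate"], "sex": rec["gender"]}


def normalize(rec):
    """Shared standardization rules, applied once all sources share field names."""
    return {
        "patient_id": rec["patient_id"].upper(),
        "birth_date": rec["birth_date"],  # both sources already use ISO 8601 here
        "sex": SEX_CODES.get(rec["sex"].strip().lower(), "U"),  # "U" = unknown
    }


unified = [normalize(r) for r in (*from_csv(CSV_SOURCE), *from_json(JSON_SOURCE))]
for row in unified:
    print(row)
```

Keeping source-specific logic in the adapters and shared rules in one `normalize` step means adding a new source only requires a new adapter.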

How Platforms Ensure Data Integrity and Security

Security and integrity are essential. Modern data integration platforms build multiple layers of protection into their operations.

- Data governance frameworks: Platforms provide tools to implement and automatically enforce rules that govern how data moves, who can access it, and what can be done with it. This includes managing data lineage, access policies, and data retention rules.

- Data quality rules and cleansing automation: Systems continuously monitor, validate, and correct information as it flows through integration pipelines. This proactive approach prevents bad data from corrupting downstream analytics and business processes.

- Security protocols: Data is encrypted both in transit (using TLS) and at rest (typically with AES-256). Role-based access controls, single sign-on (SSO) integration, and multi-factor authentication add extra layers of protection against unauthorized access.

- Compliance certifications: Certifications such as SOC 2 Type II and ISO 27001 require independent audits of security practices, while demonstrated compliance with regulations like HIPAA and GDPR shows that data is handled lawfully. Together, these are table stakes for any enterprise-grade platform.
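Data lineage, one of the governance capabilities mentioned above, can be modeled as a graph in which every pipeline step records which inputs produced which output. The sketch below is a hypothetical, in-memory illustration of that idea; real platforms capture lineage automatically and persist it in a metadata store.

```python
from dataclasses import dataclass, field


@dataclass
class LineageGraph:
    """Minimal lineage store: each dataset maps to its inputs and the step that built it."""

    parents: dict = field(default_factory=dict)

    def record(self, output, inputs, step):
        """Called by each pipeline step after it writes `output`."""
        self.parents[output] = (tuple(inputs), step)

    def trace(self, dataset):
        """Walk upstream from `dataset`, listing (dataset, step, inputs) for each hop."""
        path, stack, seen = [], [dataset], set()
        while stack:
            node = stack.pop()
            if node in seen or node not in self.parents:
                continue  # already visited, or a raw source with no recorded parents
            seen.add(node)
            inputs, step = self.parents[node]
            path.append((node, step, inputs))
            stack.extend(inputs)
        return path

    def sources(self, dataset):
        """Return the original source systems that `dataset` ultimately depends on."""
        inputs, _ = self.parents.get(dataset, ((), None))
        if not inputs:
            return {dataset}
        return set().union(*(self.sources(i) for i in inputs))


# Hypothetical dataset names for a small reporting pipeline.
lineage = LineageGraph()
lineage.record("staging.orders", ["erp.orders"], "extract + type casting")
lineage.record("staging.customers", ["crm.contacts"], "extract + dedupe")
lineage.record("mart.revenue", ["staging.orders", "staging.customers"], "join + aggregate")

print(sorted(lineage.sources("mart.revenue")))  # ['crm.contacts', 'erp.orders']
for node, step, inputs in lineage.trace("mart.revenue"):
    print(f"{node} <- {step} <- {', '.join(inputs)}")
```

Walking the same graph in the opposite direction (from a source to everything built on it) gives you impact analysis, for example before changing a column in the ERP system.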

The Rise of Data Fabric and Data Mesh

Beyond traditional integration, two advanced architectural concepts are shaping the future: data fabric and data mesh.

- Data Fabric is an architectural approach that seeks to automate and augment data integration. It uses AI and machine learning to analyze all of an organization’s metadata (e.g., logs, data catalogs, pipeline definitions) to generate insights and recommendations. A data fabric can suggest which datasets to join, automate pipeline creation, and proactively identify data quality issues. It creates an intelligent, connected tissue across all data sources, making data more discoverable and accessible without necessarily centralizing it.

- Data Mesh is a decentralized, sociotechnical paradigm for data architecture. Instead of a central data team managing a monolithic data platform, data mesh advocates for distributing data ownership to specific business domains (e.g., Marketing, Sales, Logistics). Each domain team is responsible for its data as a product, including the quality, security, and integration pipelines. The central IT or data platform team provides a self-serve platform with the tools and standards to enable these domain teams. This approach scales data management by empowering the people who know the data best, avoiding the bottlenecks of a centralized model.

For organizations handling highly sensitive information, like biomedical research data, platforms can establish a Federated Trusted Research Environment. This innovative approach allows analysis of sensitive data without ever moving it, enabling powerful, secure, collaborative research that aligns with the principles of both data fabric and data mesh.
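The core idea behind federated analysis is to send the computation to the data and return only aggregates. The sketch below illustrates that general principle and is not a description of how Lifebit’s Trusted Research Environment is implemented. The site data and the minimum cohort size of 5 are hypothetical: each site releases only a count, a sum, and a sum of squares, and a coordinator combines them into a global mean and standard deviation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteSummary:
    """Aggregates a site agrees to release; no row-level records leave the site."""

    n: int
    total: float
    total_sq: float


def local_summary(values, min_n=5):
    """Runs inside each site's secure environment."""
    if len(values) < min_n:
        # Simple disclosure control: refuse to release statistics on tiny cohorts.
        raise ValueError("cohort too small to release")
    return SiteSummary(len(values), sum(values), sum(v * v for v in values))


def pooled_mean_sd(summaries):
    """Coordinator: combine site aggregates into a global mean and sample SD."""
    n = sum(s.n for s in summaries)
    total = sum(s.total for s in summaries)
    total_sq = sum(s.total_sq for s in summaries)
    mean = total / n
    variance = (total_sq - n * mean * mean) / (n - 1)
    return mean, math.sqrt(variance)


# Hypothetical measurements held at two separate sites.
site_a = local_summary([1, 2, 3, 4, 5])
site_b = local_summary([6, 7, 8, 9, 10])
mean, sd = pooled_mean_sd([site_a, site_b])
print(mean, round(sd, 3))  # 5.5 3.028
```

The result matches what you would get by pooling the raw values, yet no individual record is shared. The sum-of-squares formula can lose precision on very large values, so production systems typically use numerically stabler combination formulas alongside stronger privacy controls.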

Frequently Asked Questions about Data Integration

What is the difference between data integration and data ingestion?

Data ingestion is the first step: collecting and importing raw information from various sources into a storage system. It’s about moving data from point A to point B.

Data integration is the comprehensive process that follows. It involves combining, cleaning, and transforming that raw data to create a unified, consistent, and analysis-ready view. A data integration platform handles this entire journey.

To dive deeper into this comprehensive process, check out our insights on Data Integration.

What is the difference between ETL and ELT?

The main difference is when data transformation occurs.

- ETL (Extract, Transform, Load): Data is extracted, transformed in a separate staging area, and then the polished data is loaded into the destination system.

- ELT (Extract, Load, Transform): Raw data is extracted and immediately loaded into the target system. The transformation happens later, using the destination system’s processing power. This is often better for large datasets in modern cloud platforms.
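For contrast with ETL, here is a minimal ELT sketch in which SQLite plays the role of the target warehouse. The raw JSON payloads are loaded untouched, and the transformation runs afterwards as SQL inside the target. The event data is hypothetical, and the sketch assumes the SQLite build bundled with Python includes the JSON functions (such as `json_extract`), which are built in by default from SQLite 3.38 onward.

```python
import sqlite3

# Hypothetical raw events, landed exactly as received.
RAW_EVENTS = [
    '{"user": "u1", "event": "purchase", "amount": "20.5"}',
    '{"user": "u2", "event": "view"}',
    '{"user": "u1", "event": "purchase", "amount": "4.5"}',
]

conn = sqlite3.connect(":memory:")

# Extract + Load: store the raw payloads without reshaping them.
conn.execute("CREATE TABLE raw_events (payload TEXT)")
conn.executemany("INSERT INTO raw_events VALUES (?)", [(e,) for e in RAW_EVENTS])

# Transform: runs later, inside the target, using its own SQL engine.
conn.execute(
    """
    CREATE TABLE purchases_by_user AS
    SELECT json_extract(payload, '$.user') AS user_id,
           SUM(CAST(json_extract(payload, '$.amount') AS REAL)) AS revenue
    FROM raw_events
    WHERE json_extract(payload, '$.event') = 'purchase'
    GROUP BY user_id
    """
)
print(conn.execute("SELECT user_id, revenue FROM purchases_by_user").fetchall())
# [('u1', 25.0)]
```

Because `raw_events` is kept, analysts can later write a different transformation (say, counting views) without re-extracting anything from the source.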

How do data integration platforms handle real-time data?

Modern data integration platforms use several techniques to keep information current in near real time:

- Change Data Capture (CDC): Instead of reprocessing entire datasets, CDC identifies and captures only the changes made to source data, keeping target systems current efficiently.

- Event-driven architecture: The platform subscribes to events in source systems (e.g., a new order). When an event occurs, the data is immediately captured and processed.

- Streaming data connectors: These provide a continuous flow of data from sources like IoT devices or social media feeds, processing information as it’s generated.

This real-time capability ensures your analytics are always based on the latest information, enabling immediate responses to business changes.
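The sketch below illustrates the CDC pattern with SQLite. It uses trigger-based CDC, a simpler variant than reading the database transaction log: triggers append every insert, update, and delete to a change table, and a consumer applies only the entries after its last recorded position. The table names and the dictionary used as the downstream target are hypothetical.

```python
import sqlite3

src = sqlite3.connect(":memory:")
src.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    -- Change table populated by triggers: one row per insert, update, or delete.
    CREATE TABLE changelog (
        seq INTEGER PRIMARY KEY AUTOINCREMENT, op TEXT, id INTEGER, email TEXT
    );
    CREATE TRIGGER cdc_ins AFTER INSERT ON customers BEGIN
        INSERT INTO changelog (op, id, email) VALUES ('I', NEW.id, NEW.email);
    END;
    CREATE TRIGGER cdc_upd AFTER UPDATE ON customers BEGIN
        INSERT INTO changelog (op, id, email) VALUES ('U', NEW.id, NEW.email);
    END;
    CREATE TRIGGER cdc_del AFTER DELETE ON customers BEGIN
        INSERT INTO changelog (op, id, email) VALUES ('D', OLD.id, NULL);
    END;
    """
)


def sync_changes(conn, target, last_seq):
    """Apply only the changes recorded after `last_seq`; return the new position."""
    rows = conn.execute(
        "SELECT seq, op, id, email FROM changelog WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, row_id, email in rows:
        if op == "D":
            target.pop(row_id, None)
        else:
            target[row_id] = email  # inserts and updates are both upserts downstream
        last_seq = seq
    return last_seq


target = {}  # stand-in for a downstream replica or warehouse table
src.execute("INSERT INTO customers VALUES (1, 'ada@example.com')")
src.execute("INSERT INTO customers VALUES (2, 'alan@example.com')")
pos = sync_changes(src, target, last_seq=0)

src.execute("UPDATE customers SET email = 'ada@lovelace.dev' WHERE id = 1")
src.execute("DELETE FROM customers WHERE id = 2")
pos = sync_changes(src, target, pos)  # reads only the two new change rows
print(target, pos)  # {1: 'ada@lovelace.dev'} 4
```

Storing the last processed position lets the consumer resume after a restart without reprocessing the whole table. Log-based CDC follows the same idea but avoids the extra write load that triggers add to the source database.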

Conclusion

Your data is only as powerful as your ability to connect it. Data integration platforms break down the walls between scattered information, turning chaos into clarity and confusion into actionable insights. If your teams are still wrestling with disconnected systems, the right platform can create the single source of truth needed for success.

When data flows freely and securely, it becomes a strategic asset. Integration automates tedious processes, ensures data quality, and provides the governance to keep information safe. Most importantly, it empowers your teams to make decisions based on complete, accurate information.

Future-proofing your data strategy means choosing solutions that scale and adapt to new challenges, from traditional metrics to complex Genomics and Real World Data.

For organizations working with sensitive biomedical data, the stakes are even higher. At Lifebit, our federated platform provides the security, governance, and advanced analytics to drive research and innovation. We understand that when lives depend on research, there is no room for compromise on data integrity.

Ready to transform how your organization handles complex biomedical data? Learn how to build a federated biomedical data platform and discover what’s possible when your data works as hard as your team does.