Federated Data Analysis for Beginners: Crunch Numbers, Not Privacy

Introduction

What is federated data analysis?

- Definition: A technique that allows analysis of data across multiple organizations without moving or sharing raw data

- Process: Analysis code travels to each data source, runs locally, and only aggregated results are shared

- Privacy protection: Raw sensitive data never leaves its original location

- Also known as: The “code-to-data” paradigm (versus traditional “data-to-code”)

- Key benefit: Enables collaboration while maintaining data sovereignty and security

The term “federation” comes from the Latin foedus (genitive foederis), meaning a treaty or agreement, which captures how federated systems link autonomous entities for greater collective impact without sacrificing independence. In an era where 97% of hospital data goes unused according to the World Economic Forum, federated data analysis offers a powerful way to unlock valuable insights while addressing privacy concerns and regulatory requirements.

For organizations struggling with data silos, privacy restrictions, and slow cross-institutional research, federation provides a pathway to collaboration without compromising security or control. Rather than spending months negotiating data transfers or building centralized warehouses, federated approaches let you analyze distributed data in place.

As Dr. Maria Chatzou Dunford, PhD in Biomedicine and founder of Lifebit, I’ve spent years developing federated data analysis solutions that enable secure biomedical research across siloed datasets, helping organizations extract insights from sensitive genomic and clinical data while maintaining the highest privacy standards.

What Is Federated Data Analysis?

Have you ever tried to complete a puzzle when pieces are scattered across different rooms? That’s the challenge researchers face with valuable data locked in separate locations. Federated data analysis solves this puzzle by flipping the traditional approach on its head.

Instead of gathering all data into one place (the old “data-to-code” method), federated data analysis sends the analysis code to where the data lives (the “code-to-data” approach). It’s like sending the puzzle solver to each room rather than moving all the pieces.

The name itself tells a story: “federated” comes from the Latin foedus (genitive foederis), meaning an alliance or treaty. That’s exactly what this approach creates: a respectful partnership between data sources that preserves independence while enabling collaboration.

This innovation couldn’t come at a better time. The World Economic Forum reports a staggering 97% of hospital data goes unused for research. Imagine the medical breakthroughs hiding in that untapped information!

Federated data analysis unlocks these insights by:

- Keeping sensitive data secure in its original home

- Sending analysis code to each data source

- Running computations locally at each site

- Returning only summary results—never raw data

- Combining these safe outputs to generate powerful insights
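To make those steps concrete, here is a minimal sketch of a federated mean. The site names, the values, and the helper functions `local_summary` and `combine` are all made up for illustration; a real deployment would run the local step inside each organization's own environment rather than in one script.

```python
from dataclasses import dataclass


@dataclass
class SiteSummary:
    """The only thing a site releases: a count and a sum, never rows."""
    n: int
    total: float


def local_summary(values):
    """Runs at a site. It sees raw values but returns only aggregates."""
    return SiteSummary(n=len(values), total=float(sum(values)))


def combine(summaries):
    """Runs at the coordinator. It sees per-site aggregates only."""
    n = sum(s.n for s in summaries)
    total = sum(s.total for s in summaries)
    return total / n


# Hypothetical raw measurements held at three sites (illustrative values).
site_data = {
    "site_a": [120.0, 130.0, 125.0],
    "site_b": [140.0, 135.0],
    "site_c": [110.0, 115.0, 120.0, 125.0],
}

summaries = [local_summary(values) for values in site_data.values()]
federated_mean = combine(summaries)
print(federated_mean)
```

Because the mean depends only on counts and sums, the combined result matches what a pooled analysis of all nine values would give, even though no individual value leaves its site.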

Privacy safeguards are woven into the fabric of this approach. Techniques like K-anonymity ensure that any shared information represents at least K individuals (typically 10+), making it far harder to identify specific people from the results.

Federated Data Analysis vs. Traditional Approaches

Think about the difference between bringing all your friends to one house for a meeting versus having a video call where everyone stays home. The second option saves travel time, keeps everyone comfortable in their space, and reduces complications.

Traditional data analysis faces similar challenges:

Traditional centralized approaches require building massive data warehouses that come with hefty price tags—not just in dollars, but in time and risk. Data must be extracted, transferred, and loaded into a central repository, creating security vulnerabilities at every step. Legal teams often spend 6+ months negotiating complex data sharing agreements, while IT teams wrestle with regulatory compliance across different jurisdictions.

Federated data analysis, by contrast, eliminates these headaches. Data stays safely within its original, secure environment. Only the analysis instructions travel, and only anonymous, aggregated results return. This dramatically reduces security risks, simplifies governance (since data remains under local control), and creates a more resilient system without a single point of failure.

The risk of raw data being intercepted during transfer, a significant concern with traditional methods, largely disappears with the federated approach. Your sensitive data never travels across networks where it might be vulnerable; only analysis code and aggregated results do.

Federated Data Analysis vs. Federated Learning

While they sound similar, these terms describe different concepts:

Federated learning is like a specialized tool in your analytics toolkit—specifically designed for training machine learning models across distributed datasets. It involves training local models at each site, sharing only the model parameters (not data), aggregating these parameters (often using algorithms like FedAvg), and updating the global model.
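The aggregation step can be sketched in a few lines. This is a simplified illustration of one FedAvg-style round, assuming each site reports a parameter vector and its local sample count; the function name `fed_avg` and the numbers are invented for the example, and the local training itself is left out.

```python
def fed_avg(site_params, site_sizes):
    """Average parameter vectors, weighting each site by its sample count."""
    total = sum(site_sizes)
    dim = len(site_params[0])
    return [
        sum(params[i] * n for params, n in zip(site_params, site_sizes)) / total
        for i in range(dim)
    ]


# Hypothetical locally trained parameters from two sites.
local_models = [[0.0, 1.0], [1.0, 3.0]]
sample_counts = [100, 300]

global_model = fed_avg(local_models, sample_counts)
print(global_model)
```

The larger site pulls the global parameters toward its own values, which is the point of weighting by sample count. In practice this round repeats many times, with the updated global model sent back to each site for further local training.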

Federated data analysis is the entire toolkit—a broader concept covering any analytical process performed across distributed datasets without centralizing raw data. This includes statistical analyses, data exploration, cohort identification, and yes, machine learning (including federated learning).

As noted in scientific research on federated learning, “Federated Learning is actually a subset of Federated Analytics, with the ML model as the statistic.” Think of federated learning as one specialized room in the larger house of federated data analysis.

By keeping data in place while enabling collaborative insights, federated data analysis represents a fundamental shift in how we approach the challenge of distributed data: unlocking value while preserving privacy and control.

How Federated Data Analysis Works: Levels, Models & Architecture

When we talk about federated data analysis, we’re not describing a one-size-fits-all approach. Instead, think of it as a spectrum of solutions that can be tailored to your organization’s specific needs, regulatory requirements, and technical capabilities. Let’s explore how these systems actually work in practice.

Full vs. Partial Federation – Choosing the Right Model

Federation isn’t binary—it exists along a continuum with varying degrees of integration. Imagine it like the difference between completely separate islands (no federation) versus islands connected by increasingly sophisticated bridge networks.

At the most basic level (No Federation), organizations operate in isolation. Researchers must manually download data from each source—time-consuming and often frustrating.

Moving up a level to Partial Federation I, the data stays put but there’s a shared catalog that helps researchers find what exists across organizations. It’s like having a map of all the islands, but you still need separate permission to visit each one.

With Partial Federation II, things get more interesting. Not only can researchers find datasets, but they can also run analyses across sites with the right permissions. The bridges between islands now allow for coordinated activities, though each crossing still requires specific approval.

At the highest level, Full Federation creates a seamless experience. Researchers interact with a unified system that handles all the complexity behind the scenes—automatically routing queries to the right locations, enforcing governance policies, and returning only appropriate results. The islands remain separate, but the transportation system between them becomes nearly invisible to users.

Your choice between these models depends on several practical factors. How strict are your regulatory requirements? How sensitive is your data? What technical capabilities do your partner organizations have? Projects like TRE-FX and TELEPORT are showing how even highly sensitive data can flow securely between Trusted Research Environments when the right safeguards are in place.

Core Building Blocks of a Federated Architecture

Building a robust federated data analysis system is a bit like constructing a secure international airport. You need carefully designed entry and exit points, standardized protocols, and multiple security layers.

The foundation starts with secure APIs and integration layers that act as controlled gateways to each data source. These interfaces handle the critical tasks of verifying who’s allowed to do what, translating queries into a format each system understands, and ensuring no unauthorized data escapes.

For these distributed systems to work effectively, data standardization is crucial. Common data models and exchange standards like OMOP and FHIR in healthcare create a shared language, ensuring that concepts like “blood pressure” or “diagnosis” mean the same thing across different organizations. Without this standardization, combining analyses would be like trying to average temperatures measured in Celsius with those in Fahrenheit: technically possible but prone to serious errors.

The heart of any federation system lies in its secure computation mechanisms. These technologies protect data during analysis through clever approaches like secure aggregation (combining results without revealing individual contributions), homomorphic encryption (performing calculations on encrypted data), and differential privacy (adding carefully calibrated noise to results to prevent identification of individuals).
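Secure aggregation is the easiest of these to picture in code. The toy sketch below uses pairwise additive masks: every pair of sites shares a random number that one adds and the other subtracts, so the masks cancel in the total. Everything here is an assumption made for illustration, including the function names and the counts; real protocols derive the masks through pairwise key agreement and handle sites that drop out, neither of which is shown.

```python
import secrets

MODULUS = 2**32  # all arithmetic is done modulo this value


def make_pairwise_masks(n_sites):
    """One shared secret per pair of sites (i, j) with i < j.

    Generated in one place here for brevity; in a real protocol each
    pair of sites would agree on its mask privately.
    """
    return {
        (i, j): secrets.randbelow(MODULUS)
        for i in range(n_sites)
        for j in range(i + 1, n_sites)
    }


def mask_value(site, value, n_sites, masks):
    """Add masks shared with higher-numbered sites, subtract the others."""
    masked = value
    for other in range(n_sites):
        if site < other:
            masked += masks[(site, other)]
        elif other < site:
            masked -= masks[(other, site)]
    return masked % MODULUS


# Hypothetical per-site counts that no site wants to reveal on its own.
site_counts = [12, 30, 7]
n = len(site_counts)
masks = make_pairwise_masks(n)

masked_counts = [mask_value(i, c, n, masks) for i, c in enumerate(site_counts)]
aggregate = sum(masked_counts) % MODULUS
print(aggregate)  # the masks cancel, leaving the true total
```

Each masked value on its own looks like a random number, yet the sum is exact because every mask is added once and subtracted once.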

Orchestrating all this complexity requires sophisticated workflow management systems that coordinate the execution of analyses across distributed sites. Think of these as air traffic controllers, ensuring that analytical code safely reaches its destination, runs correctly, and returns only appropriate results.

Underlying everything is a comprehensive governance framework that enforces policies, tracks permissions, and maintains detailed audit trails. This isn’t just bureaucracy—it’s essential infrastructure that makes federation possible while maintaining compliance with regulations like GDPR and HIPAA.

Trusted Research Environments & Secure “Airlock” Processes

A cornerstone of modern federated data analysis is the Trusted Research Environment (TRE). If you’re picturing a digital fortress where sensitive data can be safely analyzed, you’re not far off.

TREs are guided by the Five Safes framework—a practical approach ensuring that security covers all aspects of the research process. This means vetting the people who access data, confirming projects have proper ethical approval, providing secure technical infrastructure, applying appropriate anonymization, and checking that results don’t inadvertently disclose sensitive information.

Perhaps the most distinctive feature of TREs is their “airlock” system—a concept borrowed from physical containment facilities. Just as airlocks in biohazard labs prevent contamination by never having inner and outer doors open simultaneously, digital airlocks control what enters and leaves the secure environment.

The input airlock carefully examines code and queries before they’re executed. This prevents malicious attempts to extract raw data and ensures analyses comply with approved protocols. Meanwhile, the output airlock reviews results before release, confirming they meet privacy thresholds and don’t contain identifiable information.
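Here is a rough sketch of how the two airlocks might fit together. The allowlist, the threshold of 10, the request format, and the `execute` callback are all hypothetical; actual TREs typically combine automated checks like these with human review.

```python
ALLOWED_OPERATIONS = {"count", "mean", "histogram"}  # hypothetical allowlist
MIN_GROUP_SIZE = 10  # hypothetical disclosure threshold

audit_log = []  # (decision, operation) pairs, kept for accountability


def input_airlock(request):
    """Approve a request only if it asks for an allowlisted aggregate."""
    return request.get("operation") in ALLOWED_OPERATIONS


def output_airlock(result):
    """Release a result only if every reported group is large enough."""
    return all(size >= MIN_GROUP_SIZE for size in result["group_sizes"])


def run_through_airlock(request, execute):
    """Check the request, run it, check the result, and log each decision."""
    operation = request.get("operation")
    if not input_airlock(request):
        audit_log.append(("rejected_input", operation))
        return None
    result = execute(request)
    if not output_airlock(result):
        audit_log.append(("rejected_output", operation))
        return None
    audit_log.append(("released", operation))
    return result


def example_execute(request):
    """Stand-in for the real analysis; returns a fixed aggregate."""
    return {"value": 42.0, "group_sizes": [25, 14]}


released = run_through_airlock({"operation": "mean"}, example_execute)
blocked = run_through_airlock({"operation": "select_rows"}, example_execute)
print(released, blocked, audit_log)
```

Note that the rejected request never reaches the data at all: the input check runs before `execute` is called, mirroring the rule that both airlock doors are never open at once.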

Throughout this process, comprehensive audit trails record every action—who did what, when, and why. This creates accountability and provides the documentation often required by regulatory bodies.

This thoughtful architecture allows researchers to work with even the most sensitive data while maintaining the highest standards of privacy and security. By bringing the analysis to the data rather than vice versa, federated data analysis opens new possibilities for collaboration without compromising on protection.

Benefits & High-Impact Use Cases

When it comes to unlocking the true potential of sensitive data, federated data analysis is changing the game for everyone involved in the research ecosystem. Rather than just being a technical solution, it’s creating ripple effects of positive change across healthcare, genomics, and clinical research.

Why Researchers, Clinicians and Patients Win

The beauty of federated data analysis is how it creates a win-win-win situation for all stakeholders in the research community.

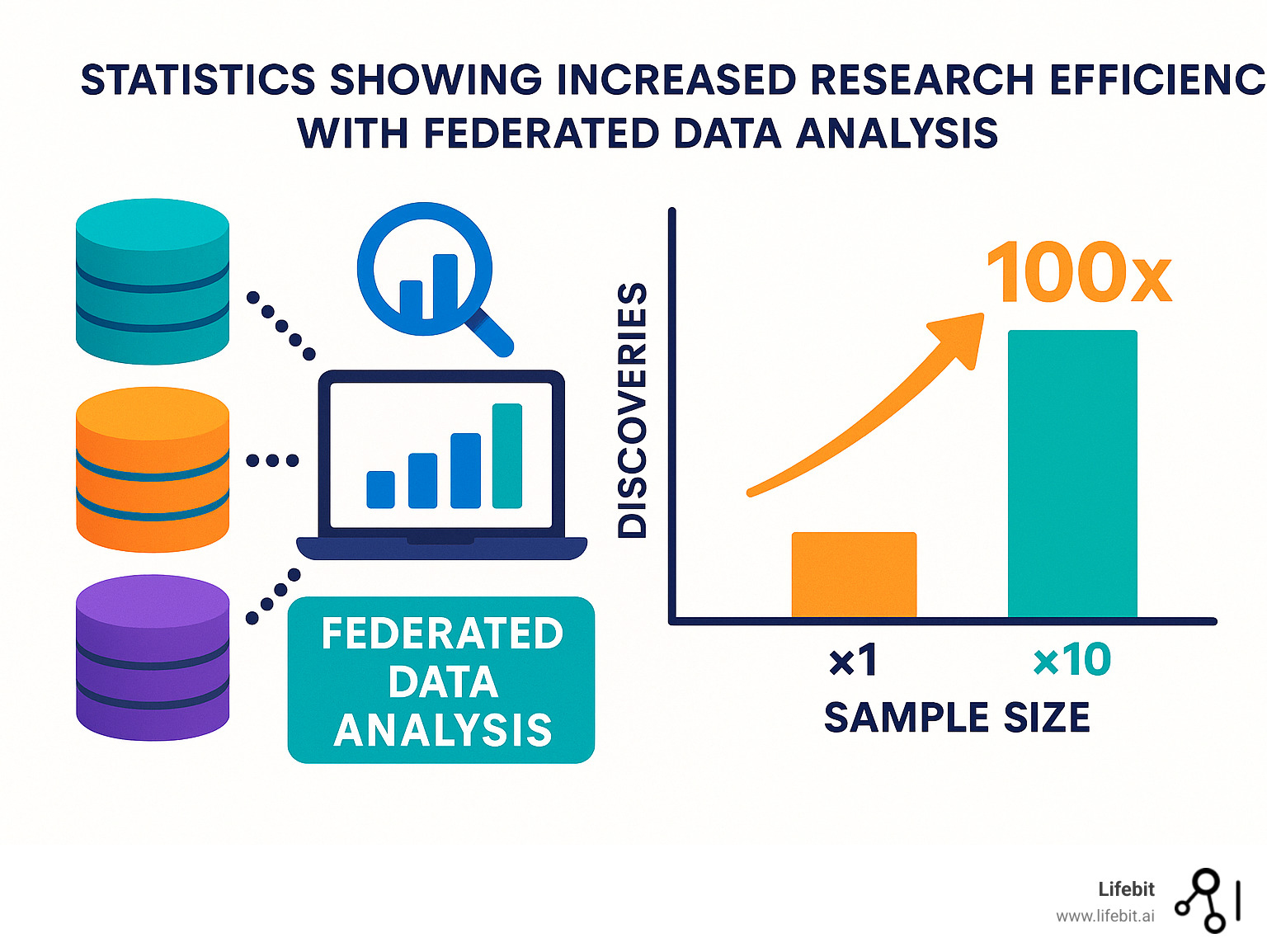

For researchers, the benefits are truly transformative. Imagine having access to datasets that are 10 times larger than what you could normally work with. In genomic studies, this can translate to a 100-fold increase in discoveries! Instead of waiting six months or more for data sharing agreements to be finalized, you can begin analyzing distributed data immediately. This speed doesn’t just save time; it saves lives.

“The most valuable data is often the most sensitive,” explains a leading genomics researcher. “With federation, we can finally include diverse populations in our studies without compromising privacy or running afoul of local regulations.”

Clinicians see equally compelling advantages. When treating patients with rare diseases, having access to insights from global datasets can make all the difference in identifying patterns that would remain invisible in smaller, localized data collections. Federation enables real-world evidence to flow more freely, helping doctors make better-informed decisions based on outcomes across diverse healthcare settings.

Patients, meanwhile, get the best of both worlds. Their personal data stays secure within trusted institutions, yet still contributes to medical advances that could benefit them and countless others. This approach helps overcome historical biases in medical research by including diverse populations without requiring their data to leave local jurisdictions.

Beyond individual benefits, society as a whole gains from this approach. The environmental impact alone is significant – by reducing unnecessary data duplication and transfer, federated data analysis dramatically lowers the carbon footprint of data-intensive research. It also democratizes access to cutting-edge research capabilities, enabling smaller institutions and developing regions to participate in global research without massive infrastructure investments.

Perhaps most importantly in our post-pandemic world, federation provides a powerful framework for emergency response. When the next health crisis emerges, having systems already in place for rapid, coordinated analysis across global datasets could save countless lives.

Real-World Success Stories Powered by Federated Data Analysis

The COVID-19 Host Genetics Initiative offers a compelling example of federation in action. During the height of the pandemic, researchers faced an urgent need to understand why some patients experienced severe outcomes while others had mild symptoms. Using federated data analysis, they were able to analyze genomic data from over 20,000 ICU COVID-19 patients and 15,000 patients with mild disease across multiple countries – all while respecting patient privacy and complying with different national regulations.

The Canadian Distributed Infrastructure for Genomics (CanDIG) tackles a different challenge. Canada’s provincial privacy laws create significant barriers to traditional data sharing. CanDIG’s federated approach enables researchers to perform cross-country analyses without moving sensitive patient data across provincial boundaries, unlocking insights that would otherwise remain trapped in siloed systems.

Australian Genomics tells a similar story of innovation within constraints. Their national network uses federated data analysis to enable research across multiple clinical sites while maintaining compliance with Australia’s strict privacy regulations. The result? Accelerated rare disease diagnosis and treatment optimization that benefits patients across the country.

The pharmaceutical industry has also embraced this approach. Major pharmaceutical companies now use federation to collaborate with academic and healthcare partners without requiring sensitive patient data to leave hospital systems. This has streamlined clinical trial design and patient recruitment while ensuring regulatory compliance.

As noted in scientific research on secure GWAS, federated approaches can achieve nearly the same statistical efficiency as pooled analysis while maintaining privacy and regulatory compliance. This represents the holy grail of sensitive data research – powerful insights without privacy compromises.

These real-world examples aren’t just technical success stories; they represent a fundamental shift in how we approach collaborative research with sensitive data. By bringing the analysis to the data, rather than the other way around, federated data analysis is helping unlock insights that can transform healthcare and accelerate scientific discovery in ways that protect both privacy and progress.

Challenges, Limitations & How to Overcome Them

Let’s be honest – federated data analysis isn’t all sunshine and rainbows. Like any powerful approach, it comes with its share of problems. The good news? These challenges are well understood, and smart people have developed practical solutions to address them.

Data Harmonisation & Heterogeneity

If you’ve ever tried to assemble furniture with instructions in a different language, you’ll understand the challenge of data harmonization. When different organizations collect and store data in their own unique ways, bringing it all together for analysis can feel like herding cats.

Think about healthcare data: one hospital might code a heart attack as “MI” while another uses “myocardial infarction,” and a third uses numeric code “410.0.” Without harmonization, your analysis would miss connections between these identical conditions.

How we’re solving this:

The research community has made impressive progress with common data models like OMOP, which gives everyone a shared language for medical concepts. Rather than forcing everyone to change their systems, OMOP provides a translation layer that maps local terms to standard concepts.
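A translation layer of this kind can be as simple as a lookup table applied at each site before any analysis runs. The sketch below is illustrative only: `STD:MYOCARDIAL_INFARCTION` is a placeholder label rather than a real OMOP concept ID, and the hospital names and records are invented.

```python
from collections import Counter

# Placeholder label for illustration, NOT a real OMOP concept identifier.
STANDARD_MI = "STD:MYOCARDIAL_INFARCTION"

# Hypothetical mapping from local terms to the shared concept.
LOCAL_TO_STANDARD = {
    "mi": STANDARD_MI,
    "myocardial infarction": STANDARD_MI,
    "410.0": STANDARD_MI,
}


def harmonize(local_code):
    """Map a local code to the standard concept, or None if unmapped."""
    return LOCAL_TO_STANDARD.get(local_code.strip().lower())


# Hypothetical diagnosis codes as recorded at three hospitals.
site_records = {
    "hospital_a": ["MI", "MI"],
    "hospital_b": ["myocardial infarction"],
    "hospital_c": ["410.0", "410.0", "unknown-code"],
}

# Each site harmonizes and counts locally; only the counts are combined.
per_site_counts = {
    site: Counter(harmonize(code) for code in records)
    for site, records in site_records.items()
}
combined = sum(per_site_counts.values(), Counter())
print(combined[STANDARD_MI], combined[None])
```

Unmapped codes end up under `None` rather than being silently dropped, which gives each site a quick measure of how much of its data the mapping still misses.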

Phenopackets from the Global Alliance for Genomics & Health offer another elegant solution, especially for genetic research. They provide a consistent format for describing observable characteristics, making it possible to compare apples to apples across different datasets.

The key is finding the right balance. Perfect harmonization is rarely possible (or even necessary), but metadata standards that clearly document what each data element means can go a long way toward ensuring your federated analysis delivers meaningful results.

Ensuring Statistical Validity in a Federated Setting

Statistics gets tricky when your data lives in different places. Imagine trying to calculate an average height when you can only see summary statistics from each classroom, not individual measurements. Now imagine some classrooms have 10 students while others have 100 – how do you weight things properly?

Federated statistics faces similar challenges, especially when different sites have different population characteristics or sample sizes.

How we’re solving this:

Researchers have developed clever approaches that deliver nearly the same statistical power as pooled analysis. Meta-analysis techniques properly weight site-specific results based on sample size and variance. Some statistical methods (called one-shot algorithms) can produce identical results to centralized analysis with just a single round of communication.
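One common way to do that weighting is fixed-effect, inverse-variance meta-analysis, where each site's estimate counts in proportion to its precision. The sketch below assumes every site reports an effect estimate and its standard error; the function name and the numbers are illustrative, and other weighting schemes (such as random-effects models) exist.

```python
import math


def fixed_effect_meta(estimates, std_errors):
    """Pool site estimates using inverse-variance weights (w = 1 / se^2)."""
    weights = [1.0 / (se * se) for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effect estimates and standard errors from two sites.
site_estimates = [0.5, 0.3]
site_std_errors = [0.1, 0.2]

pooled, pooled_se = fixed_effect_meta(site_estimates, site_std_errors)
print(pooled, pooled_se)
```

The site with the smaller standard error gets four times the weight here, so the pooled estimate lands closer to 0.5 than to 0.3, and the pooled standard error is smaller than either site's own.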

Even more sophisticated tests like the Mann-Whitney U have federated implementations that achieve near-full statistical efficiency while maintaining privacy (typically with K=10 anonymity protections).

Before deploying these methods on sensitive real-world data, researchers often validate them through simulation studies that confirm they’ll deliver reliable results in federated settings.

Security, Privacy & Attack Surfaces

When you’re working with sensitive data, security isn’t optional. Federated systems have to defend against some pretty creative attack vectors – from attempts to reverse-engineer raw data to membership inference attacks that try to determine if specific individuals are in a dataset.

How we’re solving this:

Differential privacy has emerged as a powerful defense, adding carefully calibrated “noise” to results that prevents identification of individuals while preserving overall statistical validity. It’s like adding static to a radio signal – the music comes through fine, but you can’t pick out individual instruments with perfect clarity.
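For a simple count query, that calibrated noise is often drawn from a Laplace distribution whose scale is the query's sensitivity divided by the privacy parameter epsilon. The sketch below is a minimal illustration of that idea, assuming a sensitivity of 1 (one person changes the count by at most one). The function names are made up, and Python's `random` module is used only for readability; it is not a cryptographically secure noise source.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def dp_count(true_count, epsilon, rng, sensitivity=1.0):
    """Return the count plus Laplace noise of scale sensitivity / epsilon."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)  # seeded only so the example is repeatable
noisy = dp_count(true_count=128, epsilon=0.5, rng=rng)
print(noisy)
```

A smaller epsilon means a larger noise scale and stronger privacy, at the cost of less precise results. Choosing epsilon, and tracking how much of it repeated queries use up, is a policy decision this sketch does not address.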

Secure aggregation protocols ensure that only combined results from multiple sites are visible, not individual site contributions. This prevents an attacker from isolating information from a specific source.

The K-anonymity requirement (typically set at K=10) means each released data point must represent at least 10 individuals, making it much harder to single out specific people.
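In practice this threshold often shows up as small-cell suppression: any group count below K is withheld before results are released. Here is a minimal sketch, with an invented table of counts and a made-up helper name.

```python
K = 10  # minimum number of individuals a released count must represent


def suppress_small_cells(counts, k=K):
    """Replace any count below k with None so it is not released."""
    return {group: (n if n >= k else None) for group, n in counts.items()}


# Hypothetical counts per age band at one site.
raw_counts = {"18-39": 42, "40-64": 57, "65+": 6}

released_counts = suppress_small_cells(raw_counts)
print(released_counts)
```

One caveat worth keeping in mind: if the overall total is released alongside the table, a single suppressed cell can be recovered by subtraction, so real systems usually suppress or round additional cells as well.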

Comprehensive threat modeling helps identify potential vulnerabilities before they can be exploited. By systematically thinking through how someone might try to attack the system, developers can build in appropriate safeguards.

While these challenges might seem daunting at first glance, they’re increasingly well-understood. The research community continues to develop neat solutions that maintain statistical validity while preserving privacy in federated settings. With proper implementation, federated data analysis can deliver powerful insights while keeping sensitive data secure.

Implementing Federated Data Analysis: Standards, Compliance & Best Practices

So you’re convinced that federated data analysis is the way forward—but how do you actually implement it in the real world? Let’s break down the practical aspects of making federation work while staying on the right side of regulations.

Regulatory Landscape & Privacy-Enhancing Tech

The regulatory landscape can feel like navigating a maze, especially when your federated system spans multiple countries or jurisdictions.

Under GDPR, clarity about roles is crucial. Organizations contributing identifiable data typically act as data controllers, while those providing the federation infrastructure usually serve as processors. A well-designed federation minimizes the number of controllers, which significantly reduces your compliance headache.

One of the beauties of federated data analysis is that you can minimize identifiable data transfer. When only non-identifiable results leave data controller nodes, you dramatically simplify your GDPR compliance requirements. It’s like having your cake and eating it too—gaining insights without moving sensitive data.

For US healthcare organizations, HIPAA brings its own requirements. Business Associate Agreements become necessary when sharing protected health information across organizational boundaries. Your federated queries should follow the “minimum necessary standard”, requesting only the data elements absolutely required for your specific analysis.

Privacy-enhancing technologies serve as your trusted allies in this journey:

Differential privacy adds just enough statistical noise to results to protect individual privacy while preserving the overall utility of your analysis. Think of it as adding a protective blur that shields individuals without obscuring the big picture.

Homomorphic encryption performs a neat magic trick—allowing computations on encrypted data without decryption. While powerful, it does come with computational overhead, so use it wisely.

K-anonymity ensures each released data point represents at least K individuals (typically 10 or more), making it virtually impossible to identify specific people from your results.

Technical & Organisational Prerequisites

Building a robust federated system requires several foundational elements—think of them as the building blocks for your federation success.

On the infrastructure front, you’ll need scalable computing resources at each participating site, whether cloud-based or on-premises. Secure, reliable network connections between your federated nodes are non-negotiable, as are robust authentication and authorization systems.

Your API and integration layer serves as the communication backbone of your federation. Well-defined query interfaces, consistent result formatting, and thoughtful error handling will save countless headaches down the road.

Workflow orchestration brings everything together. Standards like RO-Crate (Research Object Crate) help package analysis workflows, while repositories like WorkflowHub make sharing and finding federated analysis methods straightforward. Containerization technologies like Docker create portable, reproducible analysis environments that work consistently across sites.

Many organizations are finding creative ways to integrate federation with existing systems. For example, Amazon Security Lake & Splunk federated analytics demonstrates how federated approaches can complement existing security and data infrastructure.

Your governance framework provides the human element that technology alone can’t replace. Data Access Committees review and approve federated analysis requests, comprehensive audit trails track system activities, and output checking processes prevent privacy leaks before results are released.

Future Opportunities & Roadmap

The federated data analysis landscape continues to evolve at breakneck speed, with several exciting developments on the horizon.

AI integration is perhaps the most promising frontier. Federated foundation models are combining large-scale AI with federated learning, enabling privacy-preserving collaborative training across multiple organizations. Meanwhile, federated prompt tuning is making it possible to personalize large language models without sharing sensitive prompts or data.

Multi-modal data integration is breaking down yet another set of silos. Cross-domain federation is connecting clinical, genomic, imaging, and environmental data for truly comprehensive analysis. Federated approaches for longitudinal studies are making it possible to track outcomes over time without centralizing sensitive patient journeys.

On the policy front, efforts to harmonize international standards for federated research are gaining momentum. Certification frameworks are emerging to verify the compliance and security of federated implementations, building trust across organizational boundaries.

As these developments unfold, it’s wise to build your federated systems with flexibility in mind. The field is evolving rapidly, and today’s cutting-edge approach may be tomorrow’s standard practice.

The future of federated data analysis is bright: collaboration without compromise, insights without exposure, and innovation with far less risk. By thoughtfully implementing federation with attention to standards, compliance, and best practices, you’ll be well-positioned to unlock the full potential of distributed data while maintaining the highest standards of privacy and security.

Frequently Asked Questions about Federated Data Analysis

What data types can participate in federated data analysis?

When people first hear about federated data analysis, they often wonder if their specific type of data can be included. The good news is that federation works with almost any data type you can imagine!

Federated data analysis shines brightest when working with sensitive information that needs extra protection. Clinical data from electronic health records, patient lab results, and medication histories can all participate without leaving their secure environments. The same goes for valuable genomic data like DNA sequences and gene expression profiles that contain highly personal information.

Medical imaging isn’t left out either! X-rays, MRIs, pathology slides, and other visual medical data work beautifully in federated systems. Real-world data from insurance claims, patient registries, and even wearable devices can join the party too. And of course, research data from clinical trials, biospecimen analyses, and survey responses fit right in.

The only real requirement is that your data needs some structure that allows for consistent analysis across different sites. This usually happens through standardized formats or common data models that everyone in the federation agrees to use.

Does federated data analysis slow down research compared to centralised methods?

I hear this question all the time, and it’s a natural concern. The short answer? Not really – and in many cases, federated data analysis actually speeds things up!

Yes, there’s some technical overhead in a federated system. But consider what it replaces: months of legal negotiations, complex data sharing agreements, and massive data transfers. These traditional steps often take 6+ months before any actual analysis can begin.

With federation, you skip all that waiting. Your analysis runs simultaneously across all participating sites in parallel, which is inherently efficient. Many modern federated methods are also optimized to require just a single round of communication, keeping things snappy.

While setting up a federated system does take initial investment, it pays off quickly. Once established, each subsequent analysis runs faster than it would have in the traditional model. For large-scale genomic studies especially, the time saved by avoiding data transfers and legal red tape typically dwarfs any small computational overhead from the federated approach.

How is statistical power maintained when only summaries leave each site?

This is probably the most common scientific concern I hear about federated data analysis – and it’s a good question! Researchers worry that if they can’t directly access and manipulate the raw data, their statistical findings might suffer.

The reality is much more reassuring. Properly designed federated methods can achieve statistical results virtually identical to centralized analysis. For many statistical tests, we only need summary statistics anyway – things like means, counts, and correlations – which can be shared without compromising privacy.

Well-established meta-analysis techniques help maintain statistical validity when combining site-specific results. Some clever “one-shot algorithms” can even produce results identical to centralized analysis with just a single round of communication.
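A small example shows why one round can be enough. If each site sends its count, its sum, and its sum of squares, the coordinator can reconstruct the exact pooled mean and sample variance. The function names and data below are invented for illustration, and this particular formula can lose precision on very large or tightly clustered values, so treat it as a sketch rather than a production routine.

```python
import statistics


def local_stats(values):
    """Runs at a site: return (count, sum, sum of squares), no raw rows."""
    return len(values), sum(values), sum(v * v for v in values)


def pooled_mean_variance(site_stats):
    """Runs at the coordinator: exact pooled mean and sample variance."""
    n = sum(s[0] for s in site_stats)
    total = sum(s[1] for s in site_stats)
    total_sq = sum(s[2] for s in site_stats)
    mean = total / n
    variance = (total_sq - n * mean * mean) / (n - 1)
    return mean, variance


# Hypothetical measurements held at three sites.
sites = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0, 7.0, 8.0, 9.0]]

mean, variance = pooled_mean_variance([local_stats(v) for v in sites])

# For comparison only: what a centralized analysis would compute.
everything = [v for values in sites for v in values]
print(mean, statistics.mean(everything))
print(variance, statistics.variance(everything))
```

The federated and centralized numbers agree because the mean and variance depend on the data only through those three totals.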

The research community has made impressive progress developing federated statistical methods that maintain near-optimal efficiency while preserving privacy. Studies have shown that federated implementations of common tests like the Mann-Whitney U can operate at close to full statistical power even with K-anonymity constraints (where K=10).

So while it might seem counterintuitive at first, you don’t have to sacrifice statistical rigor to gain the privacy benefits of federation. The math works out beautifully to give you the best of both worlds!

Conclusion & Next Steps

Federated data analysis has truly transformed how we approach collaborative research in our data-rich, privacy-conscious world. Instead of struggling with the old model of gathering all data in one place, this approach flips the script by bringing analysis to where data lives, keeping sensitive information secure while still unlocking its value.

After exploring this fascinating approach, several key insights stand out:

Privacy becomes a feature, not an obstacle. By keeping sensitive data safely within its original location, federation builds privacy protection into the very foundation of collaborative research. This isn’t just good ethics – it’s good science, enabling access to datasets that would otherwise remain locked away.

Research timelines shrink dramatically. Remember those months-long negotiations for data sharing agreements? Federation bypasses much of that process. Researchers can focus on discovery rather than administrative hurdles, accelerating the path from question to insight.

Regulatory compliance becomes manageable, not overwhelming. Well-designed federated systems help organizations navigate the complex landscape of GDPR, HIPAA, and other regulations while still enabling valuable collaborative work. This is particularly valuable for international research spanning multiple jurisdictions.

Collaboration scales beyond traditional boundaries. Federation enables research networks that span institutions, sectors, and national borders without compromising on data sovereignty or security. This global approach is especially powerful for rare disease research and other fields requiring diverse, large-scale datasets.

The technology has matured significantly. What was once experimental is now increasingly practical. The tools, standards, and methodologies for federated analysis have evolved rapidly, making implementation feasible for organizations of all sizes.

At Lifebit, we’ve witnessed how these approaches unlock insights from previously siloed biomedical data. Our federated platform enables secure, in-situ analysis of sensitive genomic and clinical information across five continents, accelerating scientific discovery while maintaining the highest standards of privacy protection.

Whether you’re a researcher hoping to collaborate across institutional boundaries, a healthcare organization looking to participate in multi-center studies without moving patient data, or a biotech company seeking to accelerate discovery through broader data access, federated approaches offer a powerful solution to your challenges.

We invite you to explore how federated data analysis might transform your own research workflows, enabling your team to extract insights from distributed data without compromising privacy or security. The future of collaborative research doesn’t require choosing between data access and privacy protection – with federation, you can have both.
