Demystifying Privacy-Preserving AI: Your Guide to Secure Data Innovation


Why Privacy-Preserving AI is the Future of Secure Data Innovation

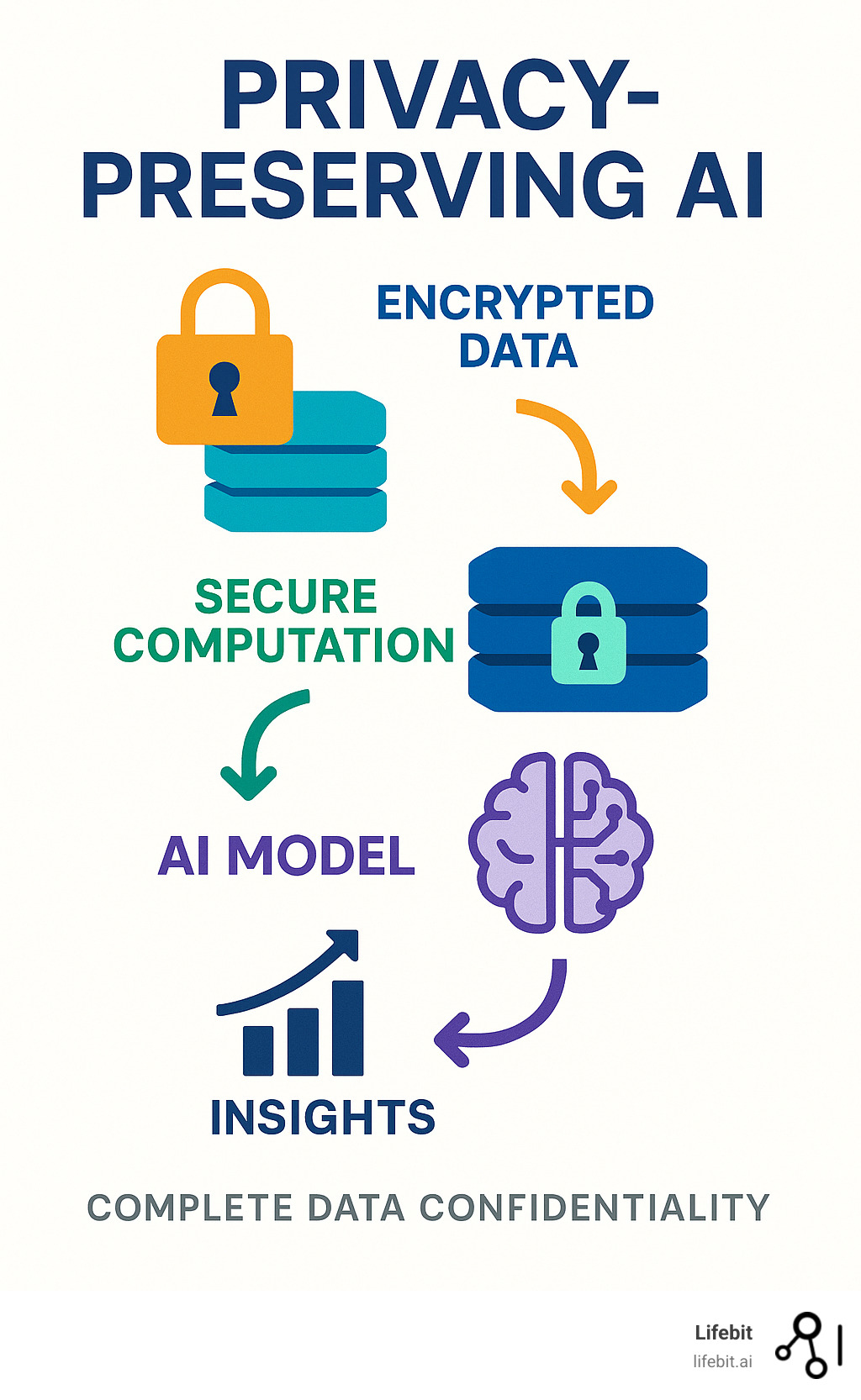

Privacy-preserving AI enables organizations to harness the power of artificial intelligence while keeping sensitive data protected throughout the entire process. Depending on the technique, AI models can analyze and learn from data without the party running the analysis ever seeing the raw records, which solves one of the biggest challenges in modern data science.

Key Privacy-Preserving AI Techniques:

- Fully Homomorphic Encryption (FHE) – Performs computations on encrypted data without decryption

- Federated Learning – Trains models across distributed data without centralization

- Differential Privacy – Adds controlled noise to protect individual privacy

- Secure Multi-Party Computation – Enables collaborative analysis without revealing inputs

The stakes couldn’t be higher. In healthcare, pharmaceutical companies and regulatory bodies like the FDA need to analyze vast datasets containing sensitive patient information, genomic data, and clinical trial results. In finance, institutions must detect fraud and assess risk while protecting customer privacy. Traditional AI approaches force organizations to choose between innovation and privacy – but that’s changing.

Recent breakthroughs are moving privacy-preserving AI from theoretical possibility to practical reality. The Orion framework, developed by researchers at NYU, delivers major speedups for deep learning on encrypted data and makes Fully Homomorphic Encryption far more accessible to ordinary machine-learning developers. This means AI models can now run on encrypted data at a scale that was previously out of reach, without the data ever being decrypted during computation.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent over 15 years building secure platforms for genomics and biomedical data analysis across federated environments. My experience developing privacy-preserving AI solutions for pharmaceutical organizations and public sector institutions has shown me how these technologies can unlock collaboration while maintaining the highest security standards.

The ‘Holy Grail’ of Cryptography: Understanding Fully Homomorphic Encryption

Picture this: you have a locked safe containing your most sensitive documents, and somehow a mathematician can perform complex calculations on those documents without ever opening the safe. That’s exactly what Fully Homomorphic Encryption (FHE) does with digital data.

FHE is a cryptographic technique that lets computers perform calculations on encrypted information while it stays encrypted. The magic happens when you decrypt the final result: it matches what you’d get if you had done the same calculation on the original, unencrypted data (exactly for integer schemes such as BFV, and up to a tiny approximation error for CKKS, the scheme most often used for deep learning). For privacy-preserving AI, this is nothing short of revolutionary.
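Full FHE libraries are too large to show here, but the core idea of computing on ciphertexts can be sketched with a much simpler scheme. The example below swaps in Paillier encryption, which is only additively homomorphic. It supports adding ciphertexts and multiplying them by plaintext constants, but not multiplying two ciphertexts together, so it is not FHE. The tiny hard-coded primes make it completely insecure. It is a toy that shows a server computing a weighted sum, the core of a neural-network layer, without decrypting anything.

```python
import math
import secrets

def keygen(p, q):
    """Paillier key generation from two primes (toy sizes: insecure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    g = n + 1  # standard simple choice of generator
    n_sq = n * n
    l_value = (pow(g, lam, n_sq) - 1) // n  # L(x) = (x - 1) / n
    mu = pow(l_value, -1, n)  # modular inverse (Python 3.8+)
    return (n, g), (lam, mu, n)

def encrypt(pub, m):
    """Encrypt an integer 0 <= m < n with fresh randomness r."""
    n, g = pub
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq

def decrypt(priv, c):
    lam, mu, n = priv
    l_value = (pow(c, lam, n * n) - 1) // n
    return (l_value * mu) % n

def add_encrypted(pub, c1, c2):
    """Enc(a) * Enc(b) mod n^2 decrypts to a + b."""
    n, _ = pub
    return (c1 * c2) % (n * n)

def mul_plain(pub, c, k):
    """Enc(a) ** k mod n^2 decrypts to k * a (k is a non-negative plaintext integer)."""
    n, _ = pub
    return pow(c, k, n * n)

def encrypted_dot(pub, enc_xs, weights):
    """Weighted sum of encrypted inputs with plaintext weights (like a dense layer)."""
    acc = encrypt(pub, 0)
    for c, w in zip(enc_xs, weights):
        acc = add_encrypted(pub, acc, mul_plain(pub, c, w))
    return acc

if __name__ == "__main__":
    public, private = keygen(1009, 1013)  # toy primes: NOT secure
    enc_inputs = [encrypt(public, x) for x in [3, 5, 7]]  # data owner encrypts
    enc_result = encrypted_dot(public, enc_inputs, [2, 4, 6])  # server never decrypts
    print(decrypt(private, enc_result))  # 2*3 + 4*5 + 6*7 = 68
```

Only the key holder can read the result. Every value, including the final sum, must stay below n, which is one reason real systems use far larger parameters.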

Why is FHE called the “holy grail” of cryptography? Because it offers something that seemed impossible for decades: the ability to process sensitive data on untrusted systems without ever exposing it. Cloud providers, AI models, even entire data centers can crunch your numbers without seeing a single piece of your actual information. It’s one of the strongest confidentiality guarantees cryptography can offer.

But here’s where the story gets interesting – and a bit frustrating. For years, FHE was like having a Ferrari with a broken engine. The concept was brilliant, but the execution was painfully slow. Early FHE systems needed enormous amounts of computing power and memory, making them about as practical as using a sledgehammer to crack a walnut.

The computational overhead was staggering. Imagine trying to run a modern AI model where every single calculation takes thousands of times longer and uses massive amounts of memory. Neural networks, which already require significant resources, were also hard to adapt to FHE’s programming model, which natively supports only additions and multiplications, so nonlinear steps such as ReLU activations have to be approximated. It was like trying to teach a race car to swim.
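To put the memory problem in numbers, consider CKKS, a widely used FHE scheme for machine learning. A fresh CKKS ciphertext is a pair of polynomials with N coefficients, each reduced modulo a large integer q. It therefore occupies 2·N·log2(q) bits and can pack up to N/2 values. The parameters below (N = 2^16, log2 q ≈ 1,500) are illustrative assumptions, not figures from any specific system. The comparison is with the same 32,768 values stored as 64-bit floats:

```latex
\begin{aligned}
\text{ciphertext size} &= 2 \cdot N \cdot \log_2 q = 2 \cdot 2^{16} \cdot 1500\ \text{bits} = 196{,}608{,}000\ \text{bits} \approx 24.6\ \text{MB} \\
\text{plaintext size} &= \tfrac{N}{2} \cdot 8\ \text{bytes} = 32{,}768 \cdot 8\ \text{bytes} = 262{,}144\ \text{bytes} \approx 0.26\ \text{MB} \\
\text{expansion} &= \frac{24{,}576{,}000\ \text{bytes}}{262{,}144\ \text{bytes}} \approx 94\times
\end{aligned}
```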

Despite these challenges, researchers worldwide refused to give up. The promise was too compelling – secure cloud computing, privacy-preserving AI, and truly confidential data analytics were worth the struggle. You can explore the fascinating journey of scientific research on homomorphic encryption to see how far we’ve come.

FHE isn’t the only player in the privacy game, though. It’s part of a powerful toolkit that includes Differential Privacy, which adds carefully controlled noise to protect individual privacy while preserving useful patterns in data. Federated Learning keeps data distributed across multiple locations, training AI models without centralizing sensitive information. Secure Multi-Party Computation allows multiple organizations to collaborate on analysis without revealing their individual datasets to each other.

Each technique has its own strengths and trade-offs. Sometimes the most robust privacy solutions combine multiple approaches, creating layers of protection that would make even the most security-conscious organization feel confident about their data.
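One common way to layer techniques is to combine federated learning with a trick borrowed from secure multi-party computation, so that the server learns only the sum of client updates and never any individual update. The sketch below shows the pairwise-masking idea behind secure aggregation in a heavily simplified form. It assumes scalar updates, masks pre-agreed between each pair of clients, and no handling of clients that drop out. Names such as `mask_update` are hypothetical. Differential-privacy noise could be added to each update before masking, giving a third layer.

```python
import secrets

MODULUS = 2**32  # all arithmetic is done modulo this (assumption)
SCALE = 10_000   # fixed-point scale for encoding real-valued updates (assumption)

def encode(value):
    return round(value * SCALE) % MODULUS

def decode(value):
    if value >= MODULUS // 2:  # upper half of the ring represents negatives
        value -= MODULUS
    return value / SCALE

def pairwise_masks(n_clients):
    """masks[i][j] (i < j) is a random value known only to clients i and j."""
    masks = [[0] * n_clients for _ in range(n_clients)]
    for i in range(n_clients):
        for j in range(i + 1, n_clients):
            masks[i][j] = secrets.randbelow(MODULUS)
    return masks

def mask_update(i, update, masks):
    """Client i hides its encoded update behind masks that cancel in the total."""
    masked = encode(update)
    for j in range(len(masks)):
        if i < j:
            masked += masks[i][j]  # the lower-indexed client adds the shared mask
        elif j < i:
            masked -= masks[j][i]  # the higher-indexed client subtracts it
    return masked % MODULUS

def server_aggregate(masked_updates):
    """The server sums masked values; every pairwise mask cancels out."""
    return decode(sum(masked_updates) % MODULUS)

if __name__ == "__main__":
    updates = [0.25, -0.5, 1.0]  # each client's private (hypothetical) model update
    masks = pairwise_masks(len(updates))
    masked = [mask_update(i, u, masks) for i, u in enumerate(updates)]
    print(server_aggregate(masked))  # 0.75, without seeing any single update
```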

The exciting news? Those seemingly impossible FHE challenges are finally being solved, opening up possibilities that seemed like science fiction just a few years ago.

A Breakthrough in Privacy-Preserving AI: Introducing the Orion Framework

After years of FHE being stuck in the “promising but impractical” category, something remarkable has happened. A team of researchers at NYU has cracked the code on making privacy-preserving AI with FHE actually work in the real world.

Meet the Orion framework – a breakthrough that’s turning heads across the AI and cryptography communities. Led by researchers including Austin Ebel, Karthik Garimella, and Assistant Professor Brandon Reagen, this innovative framework does something that seemed almost impossible: it makes FHE practical for deep learning.

The significance of their work hasn’t gone unnoticed. Their research earned the prestigious Best Paper Award at ASPLOS ’24 – one of the most respected conferences in computer systems. You can dive into the Orion study on arXiv to see exactly how they pulled off this technical feat.

How Orion Solves FHE’s Biggest Challenges

Think of traditional FHE like trying to perform surgery while wearing thick winter gloves. Technically possible, but painfully slow and incredibly difficult. That’s what researchers faced when trying to run neural networks on encrypted data.

The problems were daunting. Computational overhead made simple operations take forever. Memory constraints meant encrypted data bloated to enormous sizes. Most neural network operations simply weren’t designed for FHE’s mathematical requirements. And then there was noise management: every FHE ciphertext carries a little random noise that grows with each operation, and if it grows too large, the result can no longer be decrypted correctly.

Orion tackles these challenges by approaching FHE deep learning as a compilation problem rather than a hand-tuning exercise. Instead of forcing developers to adapt existing neural networks to FHE by hand, the framework reimagines how the whole process should work. It optimizes how encrypted data is structured, streamlines the computational flow, and handles the tricky noise management automatically.
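To make automatic noise management more concrete, here is a deliberately simplified model. It is our own illustration, not Orion’s algorithm. It assumes each layer consumes a fixed number of multiplicative “levels” of noise budget, a fresh ciphertext starts with `max_level` levels, and bootstrapping (the expensive operation that refreshes a ciphertext’s noise budget) restores it to `max_level`. The hypothetical `place_bootstraps` function greedily bootstraps only when the next layer would run out of levels.

```python
def place_bootstraps(layer_depths, max_level):
    """Return the indices of layers that must be preceded by a bootstrap.

    Toy model (assumption): layer i consumes layer_depths[i] levels, and a
    bootstrap restores the ciphertext to max_level levels.
    """
    level = max_level  # a freshly encrypted input starts with the full budget
    placements = []
    for i, depth in enumerate(layer_depths):
        if depth > max_level:
            raise ValueError(f"layer {i} needs {depth} levels; only {max_level} exist")
        if depth > level:
            # Not enough budget left for this layer: refresh before running it.
            placements.append(i)
            level = max_level
        level -= depth
    return placements

if __name__ == "__main__":
    # Hypothetical network: levels consumed by each of five layers.
    print(place_bootstraps([2, 1, 3, 2, 2], max_level=4))  # [2, 3]
```

In a straight chain like this, bootstrapping as late as possible uses the fewest bootstraps. Real networks have branches such as residual connections. Bootstrapping also consumes some levels itself, and its cost depends on where it happens. These factors are what turn bootstrap placement into a genuine compiler problem.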

The result? AI models that can genuinely operate on encrypted data without the crushing performance penalties that made FHE impractical before.

Unprecedented Speed and Scale for a New Kind of Privacy-Preserving AI

The performance improvements Orion delivers are genuinely exciting. The framework achieves a 2.38x speedup on ResNet-20 compared to previous methods. While that might sound incremental, in FHE, where computations traditionally crawl along, this represents a massive leap forward.

But here’s where things get really impressive. Orion enabled the first-ever FHE object detection using a YOLO-v1 model with 139 million parameters. To put that in perspective, this model is roughly 500 times larger than the ResNet-20 that researchers typically used for FHE experiments.
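The ResNet-20 in question, the CIFAR-10 variant from the original ResNet paper by He et al., has about 0.27 million parameters. That value confirms the “roughly 500 times” comparison:

```latex
\frac{139 \times 10^{6}\ \text{parameters (YOLO-v1)}}{0.27 \times 10^{6}\ \text{parameters (ResNet-20)}} \approx 515
```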

This achievement moves privacy-preserving AI from handling toy problems to tackling real-world applications. We’re talking about the difference between a proof-of-concept and something you could actually deploy in production. It’s the shift from theoretical possibility to practical reality.

Lowering the Barrier: Making FHE Accessible for Privacy-Preserving AI

Perhaps Orion’s most important contribution is making FHE accessible to regular developers. Previously, implementing FHE required you to be part cryptographer, part AI expert, and part systems engineer. It was like requiring every web developer to understand the intricacies of TCP/IP protocols.

Orion changes this equation completely. The framework acts as a compiler that takes standard PyTorch models and automatically converts them into efficient FHE programs. Developers can now leverage privacy-preserving AI without needing a PhD in cryptography.
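One reason converting an ordinary PyTorch model is hard is that FHE schemes such as CKKS natively support only addition and multiplication. Non-polynomial activations like ReLU therefore have to be replaced by polynomial approximations. The sketch below is our own illustration, not Orion’s code or API. It fits a degree-8 Chebyshev interpolant to ReLU on [-1, 1] and evaluates it using only additions, subtractions, and multiplications, the operations an FHE backend can perform on encrypted values. It assumes inputs have already been scaled into [-1, 1].

```python
import math

def relu(x):
    return max(0.0, x)

def chebyshev_coeffs(f, degree):
    """Coefficients c_k of the Chebyshev interpolant of f on [-1, 1].

    Uses the degree + 1 Chebyshev nodes x_j = cos(pi * (j + 0.5) / n).
    This fitting step runs in the clear, ahead of time.
    """
    n = degree + 1
    angles = [math.pi * (j + 0.5) / n for j in range(n)]
    values = [f(math.cos(a)) for a in angles]
    coeffs = [
        2.0 / n * sum(v * math.cos(k * a) for v, a in zip(values, angles))
        for k in range(n)
    ]
    coeffs[0] /= 2.0
    return coeffs

def eval_poly_fhe_style(coeffs, x):
    """Evaluate sum_k c_k T_k(x) with only +, - and * (requires degree >= 1).

    Uses the recurrence T_{k+1}(x) = 2x T_k(x) - T_{k-1}(x), so the same steps
    could run on an encrypted x given an FHE library's add and multiply.
    """
    t_prev, t_curr = 1.0, x  # T_0(x), T_1(x)
    total = coeffs[0] * t_prev + coeffs[1] * t_curr
    for c in coeffs[2:]:
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
        total += c * t_curr
    return total

if __name__ == "__main__":
    coeffs = chebyshev_coeffs(relu, degree=8)
    grid = [i / 100 for i in range(-100, 101)]
    max_err = max(abs(eval_poly_fhe_style(coeffs, x) - relu(x)) for x in grid)
    print(f"max |error| on [-1, 1]: {max_err:.3f}")
```

This naive recurrence has a multiplicative depth that grows linearly with the degree. A production FHE compiler would use a lower-depth evaluation order, and often higher-degree or composite polynomials, to trade accuracy against noise budget.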

The team’s commitment to open-source contribution makes this even more powerful. By making Orion’s open-source project freely available, they’re democratizing access to advanced privacy techniques. This transparency allows the global research community to build upon their work, identify improvements, and accelerate adoption.

This open approach is crucial for building trust in privacy technologies. When the code is publicly auditable, organizations can verify that the privacy guarantees are real – not just marketing promises.

Real-World Impact: How Orion Transforms Industries

The breakthrough capabilities of Orion aren’t just impressive in theory; they could reshape industries where sensitive data has long been a barrier to innovation. Organizations can increasingly collaborate on AI projects without the privacy concerns that have held back progress for decades.

Think about it: how many groundbreaking discoveries have been delayed simply because organizations couldn’t share their data? How many life-saving treatments might have been developed faster if hospitals could pool their patient information securely? Privacy-preserving AI is finally making these scenarios possible.

Healthcare and Life Sciences

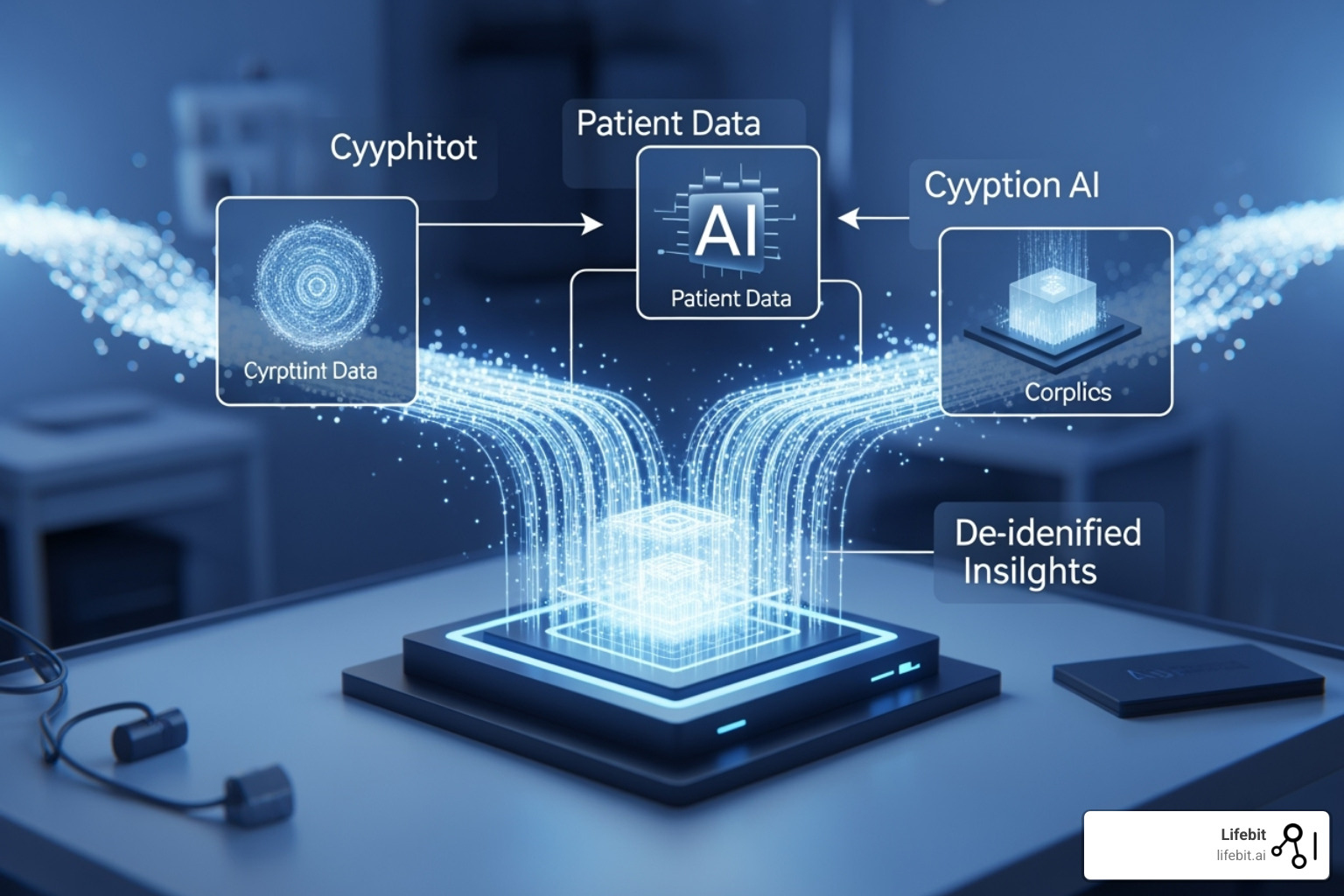

Healthcare sits at the heart of the privacy revolution, and for good reason. Every piece of patient data—from genetic information to treatment records—is deeply personal and strictly regulated. Yet medicine advances fastest when researchers can analyze large, diverse datasets from multiple sources.

The traditional approach has created an impossible choice: protect patient privacy or advance medical knowledge. Orion and related privacy-preserving techniques are starting to remove that trade-off.

Imagine genomic analysis where researchers across continents can study rare genetic diseases together, combining their encrypted datasets without ever exposing individual genetic profiles. Or consider how AI models can now process encrypted electronic health records to spot disease patterns and predict outbreaks while keeping every patient’s identity completely confidential.

Drug discovery becomes particularly exciting when pharmaceutical companies can collaborate on encrypted clinical trial data. Instead of each company working in isolation with limited datasets, they can pool their resources securely. This means faster development of life-saving treatments and better monitoring of drug safety across diverse populations.

At Lifebit, we’ve seen how powerful this approach can be. Our federated platforms enable large-scale compliant research and pharmacovigilance across biopharma organizations, allowing them to securely access biomedical and multi-omic data without compromising patient privacy.

Finance and Cybersecurity

The financial world has always been built on trust, but that trust has been tested by countless data breaches and privacy violations. Privacy-preserving AI offers a path forward that doesn’t require customers to simply hope their data stays safe.

Banks can now build fraud detection models from transaction data held by multiple institutions, using encrypted computation or federated learning so that no single bank needs to expose its customers’ sensitive financial details. This creates far more robust protection against financial crimes. The result? Better security for everyone, with no privacy trade-offs.

Credit risk assessment becomes fairer and more accurate when lenders can draw on richer datasets while preserving individual privacy. Instead of deciding on limited information, they can take encrypted data from various sources into account when making lending decisions.

Even cybersecurity gets a major upgrade. Security firms can train intrusion detection systems using encrypted network logs and threat intelligence from multiple organizations. This creates stronger defenses against cyberattacks without exposing the sensitive operational details that might reveal corporate vulnerabilities.

Emerging Frontiers: The Metaverse and Online Advertising

As our digital lives become more immersive, privacy challenges are growing rapidly. The metaverse promises amazing experiences, but it also means unprecedented data collection: everything from eye movements to emotional responses could be tracked.

Here’s where things get really interesting. With frameworks like Orion, metaverse privacy doesn’t have to be an oxymoron. AI can personalize your virtual experiences, moderate content, and even track movements within virtual spaces while keeping all your underlying data encrypted and private. Your virtual adventures truly stay yours.

Online advertising is perhaps the most controversial application, but also one with huge potential. Instead of the current model where your personal information gets passed around like a trading card, advertising platforms could process encrypted user data to deliver personalized ads. You get relevant content, advertisers reach their target audience, and your sensitive information never leaves your control.

This isn’t just about better technology—it’s about creating a more ethical digital economy where privacy and innovation work together instead of competing against each other.

Frequently Asked Questions about Secure AI

We know privacy-preserving AI can feel overwhelming at first. These questions come up all the time, so let’s break down the essentials in plain terms.

What is the difference between privacy-preserving AI and traditional AI?

Think of traditional AI like a detective who needs to examine every piece of evidence with a magnifying glass. The AI model requires direct access to your raw, unencrypted data to do its job. Whether it’s analyzing medical records, financial transactions, or personal photos, traditional AI systems need to “see” everything clearly to learn patterns and make predictions.

This creates obvious privacy risks. Your sensitive information sits exposed during processing, storage, and transfer. If someone gains unauthorized access, they can see everything the AI sees.

Privacy-preserving AI works more like a brilliant detective who can solve cases while looking through frosted glass. The AI can still extract valuable insights and make accurate predictions, but it never actually sees your original, sensitive data. Instead, it works with encrypted data, anonymized information, or distributed pieces that individually reveal nothing meaningful.

The magic is that you keep powerful AI capabilities without sacrificing your privacy, usually in exchange for some extra computation or a small loss of accuracy. It’s about as close as you can get to having your cake and eating it too.

Is Fully Homomorphic Encryption the only method for privacy-preserving AI?

Definitely not! While FHE gets a lot of attention for being the “holy grail” of cryptography, it’s just one tool in a growing toolkit of privacy techniques.

Federated Learning keeps your data at home while still training powerful AI models. Instead of sending your sensitive information to a central server, only the learning updates get shared. It’s like a group of chefs sharing cooking techniques without revealing their secret ingredients. This approach works particularly well for mobile apps and multi-institutional collaborations.
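Here is a minimal sketch of the federated averaging (FedAvg) pattern described above, using a one-parameter linear model so it fits in a few lines. Each hypothetical client runs a few steps of gradient descent on its own data. The server only ever receives the resulting weight and the client’s sample count, and it averages the weights in proportion to dataset size. Real deployments train far larger models and often add secure aggregation or differential privacy, because raw updates can still leak information.

```python
def local_train(w, data, lr=0.1, steps=5):
    """Full-batch gradient descent on mean squared error for y ≈ w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_averaging(client_datasets, rounds=20, w=0.0):
    for _ in range(rounds):
        # Each client trains locally; only (weight, sample count) leaves the client.
        results = [(local_train(w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in results)
        # Server: average the client weights, weighted by how much data each used.
        w = sum(w_i * n for w_i, n in results) / total
    return w

if __name__ == "__main__":
    # Hypothetical private datasets held by two hospitals (true relation: y = 3x).
    hospital_a = [(1.0, 3.0), (2.0, 6.0)]
    hospital_b = [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)]
    print(round(federated_averaging([hospital_a, hospital_b]), 4))  # 3.0
```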

Differential Privacy adds carefully calculated noise to query results or to the training process, so the output reveals almost nothing about whether any one individual’s data was included, while still allowing meaningful analysis. Think of it as adding just enough static to a radio signal so you can’t make out individual voices, but you can still hear the overall music.
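The classic way to calibrate that noise is the Laplace mechanism. For a counting query, one person joining or leaving the data changes the answer by at most 1 (the query’s sensitivity). Adding noise drawn from a Laplace distribution with scale 1/ε therefore gives ε-differential privacy, and a smaller ε means more noise and stronger privacy. The sketch below is for illustration only; production systems should use a vetted library, because naive floating-point noise sampling has known weaknesses. The patient records are hypothetical.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """epsilon-DP count via the Laplace mechanism (sensitivity of a count is 1)."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

if __name__ == "__main__":
    rng = random.Random(42)  # seeded only so the demo is repeatable; not for real DP
    patients = [{"age": a} for a in (34, 61, 72, 45, 80, 66, 29)]
    noisy = private_count(patients, lambda p: p["age"] > 60, epsilon=0.5, rng=rng)
    print(f"noisy count of patients over 60: {noisy:.2f}")  # true count is 4
```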

Secure Multi-Party Computation lets multiple organizations work together on AI projects without anyone seeing each other’s private data. It’s like solving a puzzle where everyone contributes pieces, but no one sees the complete picture until the very end.
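Adding secret-shared values is easy. The clever part of secure multi-party computation is multiplying them. The sketch below uses additive secret sharing over a prime field with Beaver multiplication triples, one textbook approach among several. It assumes a trusted dealer hands out the triples and that all parties follow the protocol honestly (the semi-honest setting). Every party is simulated in one process for readability, and the inputs are hypothetical.

```python
import secrets

P = 2**61 - 1  # prime modulus; all share arithmetic is mod P

def share(x, n_parties=2):
    """Split x into n random shares that sum to x mod P."""
    shares = [secrets.randbelow(P) for _ in range(n_parties - 1)]
    shares.append((x - sum(shares)) % P)
    return shares

def reconstruct(shares):
    return sum(shares) % P

def add_shares(xs, ys):
    """Addition is local: each party adds its own two shares."""
    return [(a + b) % P for a, b in zip(xs, ys)]

def beaver_triple(n_parties=2):
    """Trusted dealer (assumption): shares of random a, b and c = a*b."""
    a, b = secrets.randbelow(P), secrets.randbelow(P)
    return share(a, n_parties), share(b, n_parties), share(a * b % P, n_parties)

def mul_shares(xs, ys, triple):
    a_sh, b_sh, c_sh = triple
    # Parties open d = x - a and e = y - b. Because a and b are uniformly random
    # and used only once, d and e reveal nothing about x or y.
    d = reconstruct([(x - a) % P for x, a in zip(xs, a_sh)])
    e = reconstruct([(y - b) % P for y, b in zip(ys, b_sh)])
    # x*y = c + d*b + e*a + d*e, computed on shares; one party adds the public d*e.
    z = [(c + d * b + e * a) % P for a, b, c in zip(a_sh, b_sh, c_sh)]
    z[0] = (z[0] + d * e) % P
    return z

if __name__ == "__main__":
    x_shares = share(120)  # party A's private input, split between both parties
    y_shares = share(35)   # party B's private input
    print(reconstruct(add_shares(x_shares, y_shares)))                   # 155
    print(reconstruct(mul_shares(x_shares, y_shares, beaver_triple())))  # 4200
```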

Each method has its sweet spot. Federated Learning excels for mobile and distributed scenarios, while FHE provides the strongest mathematical guarantees for cloud computing. Often, the most robust solutions combine multiple techniques for layered protection.

Is privacy-preserving AI ready for widespread use?

The landscape is changing rapidly, and we’re witnessing privacy-preserving AI transition from research labs to real-world applications. Breakthroughs like the Orion framework are game-changers because they solve the practical problems that kept these technologies locked away in academic papers.

Just a few years ago, running a simple AI model on encrypted data would take hours or days. Today, we’re seeing performance improvements that make these systems practical for real business needs. Organizations are no longer asking “if” they should adopt privacy-preserving techniques, but “how” and “when.”

The momentum is building across industries. Healthcare organizations are using these techniques to collaborate on drug discovery without sharing patient data. Financial institutions are detecting fraud across encrypted transaction networks. Government agencies are analyzing sensitive information while maintaining citizen privacy.

At Lifebit, we’re seeing this shift firsthand. Our partners increasingly want to operationalize advanced privacy techniques rather than just experiment with them. The technology has matured to the point where it’s becoming a competitive advantage rather than a research curiosity.

Are there still challenges? Absolutely. Integrating these systems into existing infrastructure takes careful planning, and encrypted computation is still slower than working on plaintext, even as performance keeps improving. But the fundamental question has shifted from “Can we do this?” to “How quickly can we implement this?”

The future of AI is undeniably private, and that future is arriving faster than most people realize.

Conclusion: The Future of Secure and Accessible AI

We’re standing at a pivotal moment in the evolution of artificial intelligence. The development of frameworks like Orion isn’t just a technical achievement—it’s a bridge between two worlds that seemed impossible to connect. On one side, we have the incredible potential of AI to solve humanity’s greatest challenges. On the other, we have our fundamental right to privacy and data security.

For too long, these felt like opposing forces. Organizations had to choose: innovate with AI or protect privacy. Thanks to breakthroughs in privacy-preserving AI, that choice is becoming a thing of the past.

The ripple effects of making advanced cryptographic techniques like FHE accessible extend far beyond the tech world. When individuals know their personal data stays truly private—even while contributing to AI-driven breakthroughs—they’re more willing to participate in research that could save lives. Cancer patients might share genomic data for drug discovery. Financial institutions might collaborate on fraud detection without exposing customer information.

This shift is already building deeper trust in AI systems. As these technologies become woven into our daily lives, from healthcare to finance to entertainment, trust becomes the foundation everything else is built on. Privacy-preserving AI demonstrates a commitment to ethical data handling that goes beyond compliance: it shows respect for human dignity.

Perhaps most exciting is how this opens up collaboration that was previously impossible. Healthcare institutions that couldn’t share patient data due to privacy regulations can now work together on encrypted datasets. Pharmaceutical companies can pool clinical trial information to accelerate drug development. Government agencies can collaborate on public health initiatives without compromising citizen privacy.

At Lifebit, we’ve seen how transformative this can be. Our federated platform operationalizes these advanced privacy techniques, turning theoretical possibilities into practical solutions. We’re not just building technology; we’re creating the infrastructure for a future where breakthrough discoveries happen safely and securely.

Our Trusted Research Environment solutions represent what’s possible when cutting-edge privacy technology meets real-world needs. By enabling secure, real-time access to global biomedical data while maintaining the highest privacy standards, we’re helping researchers make discoveries that were simply impossible before.

The future of AI isn’t just about making machines smarter. It’s about making them trustworthy, ethical, and accessible to everyone. That future is privacy-preserving AI, and it’s closer than you might think.

More info about our Trusted Research Environment solutions.