The Complete Guide to Target Identification Analytics

Why Drug Development Costs Billions—And How Analytics Can Change That

Target identification analytics uses computational methods and AI to pinpoint the molecular entities (proteins, genes, RNA) that cause disease and can be modified by drugs.

Key Components:

- Data Integration: Combines genomics, proteomics, metabolomics, and real-world clinical data.

- Analytical Methods: Employs computational biology, machine learning, and high-throughput screening.

- Validation Process: Confirms targets with lab experiments before starting drug development.

- Primary Goal: Slash the 58% failure rate in late-stage clinical trials by finding better targets early.

Why It Matters:

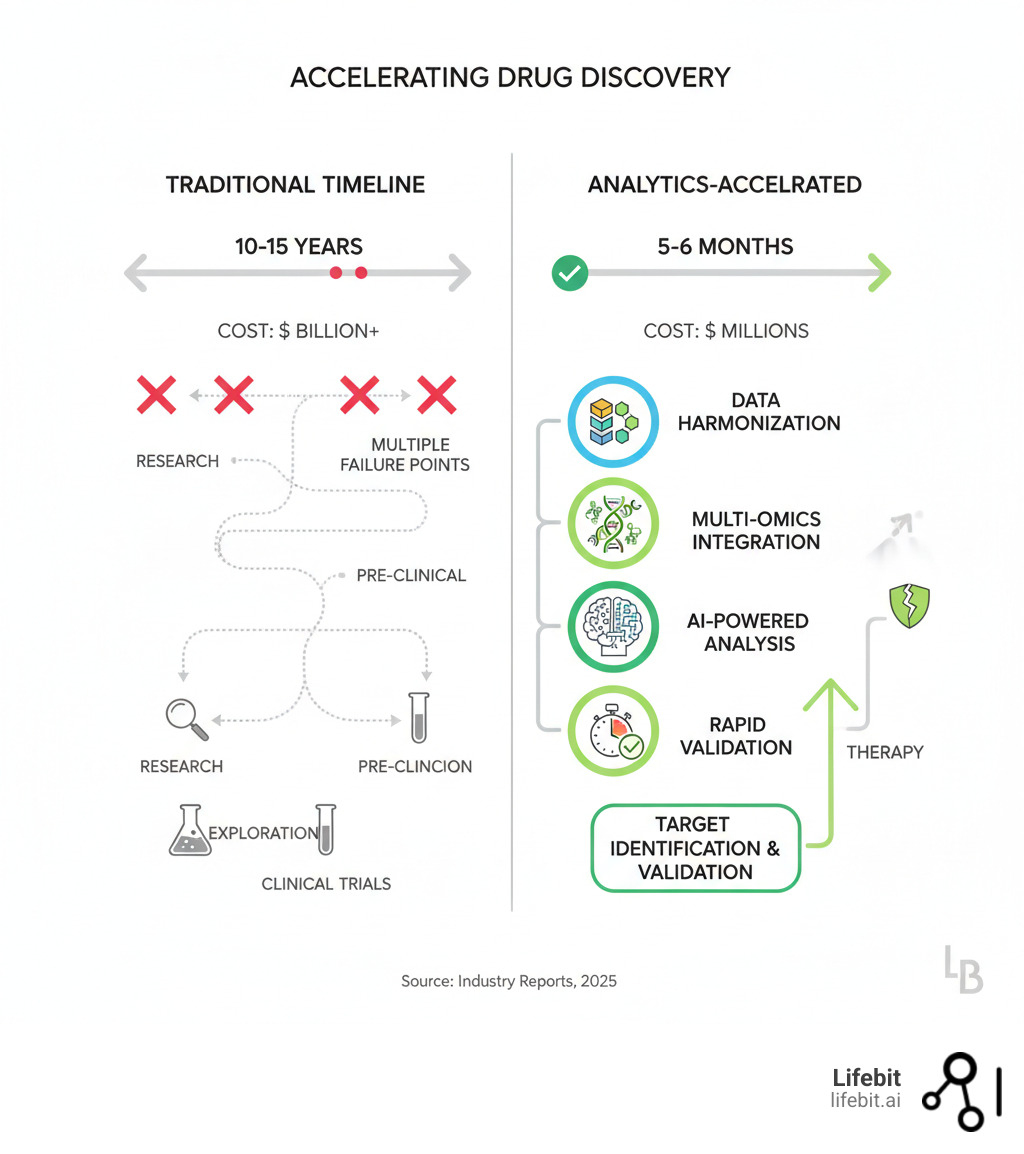

- A new drug costs between $161 million and $4.54 billion to bring to market.

- Well-characterized targets can shorten development cycles from years to just 5-6 months.

- First-in-class drugs capture up to 82% of market value.

The pharmaceutical industry faces a brutal reality: most drug candidates fail late in development, wasting billions. The core problem is often poor target selection at the start.

Traditional methods relied on intuition and limited data. Today, target identification analytics transforms this by harmonizing massive datasets—from genomics to real-world evidence—and using AI to predict which molecular targets will work in patients. This means faster cures, more personalized therapies, and a more efficient path from lab to bedside.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We build federated data platforms that help pharma and public sector clients accelerate target identification analytics in secure environments. With 15 years in computational biology and AI, including contributions to Nextflow, I’ve seen how harmonized data and advanced analytics can collapse research timelines from years into months.

Why 58% of Drugs Fail—And How to Beat the Odds

When disease strikes, it’s like a critical malfunction in your body’s complex biological network. Target identification analytics is how we find that exact point of failure—a rogue protein, a faulty gene—and determine if we can fix it with a drug.

The challenge is immense. We’re analyzing thousands of interacting proteins, genes, and RNA molecules. The goal isn’t just to find molecules involved in a disease, but to pinpoint which ones drive it and can be influenced by a therapeutic agent.

Instead of relying on intuition, we use computational methods to analyze massive datasets and identify targets with the highest likelihood of success. This data-driven approach helps pharmaceutical companies invest resources where they’ll have the greatest impact.

Cut the 58% Late-Stage Failure Rate

A sobering reality: more than 58% of drug candidates fail in late-stage clinical trials, wasting billions of dollars and delaying life-saving treatments. These failures are predominantly split between two causes: lack of efficacy (the drug doesn’t work) and unforeseen safety issues (the drug is harmful). Both roads often lead back to a single origin point: poor target selection.

The primary culprit is choosing a target with a weak or misunderstood link to the disease. If a drug is designed to modulate a protein that is merely correlated with a disease rather than causing it, the therapeutic effect will be minimal or non-existent. This is the leading cause of Phase II and Phase III efficacy failures. Traditional methods, often relying on limited preclinical models that don’t fully recapitulate human disease, can easily select these suboptimal targets.

Target identification analytics changes this equation. By integrating human multi-omics data and clinical evidence, it builds a stronger, data-driven case for a target’s causal role in disease. This process, known as target validation, is crucial. It involves confirming that modulating the target will produce the desired therapeutic outcome in humans. By focusing on well-characterized targets with clear, causal links to disease, we dramatically improve the predictability of clinical outcomes. This leads to shorter drug development cycles, a significant reduction in late-stage failures, and improved drug safety by identifying potential off-target effects early.

Instead of discovering five years and hundreds of millions of dollars into development that a target is irrelevant or unsafe, you gain that critical knowledge upfront. This “fail fast, fail cheap” philosophy allows research teams to pivot quickly, reallocating precious resources to targets with a genuine probability of becoming successful, life-changing therapies.

Pioneer First-in-Class Therapies and Precision Medicine

Beyond improving efficiency, target identification analytics is the engine for true innovation. By sifting through complex biological data to find previously overlooked or “undruggable” targets, it opens entirely new frontiers. This enables the development of first-in-class therapies that create new treatment paradigms for previously intractable diseases.

These are the breakthroughs that offer hope to patients with rare cancers, neurodegenerative disorders like Alzheimer’s and Parkinson’s, or monogenic diseases such as cystic fibrosis, where the development of CFTR modulators was a direct result of pinpointing the fundamental genetic and protein-level defect. By moving beyond well-trodden biological pathways, analytics uncovers novel intervention points that can fundamentally alter a disease’s course.

The commercial advantages are substantial. First-in-class drugs, by definition, face no competition upon launch. They establish the standard of care and often dominate the market, capturing up to 82% of market value compared to later “me-too” competitors. This market leadership not only generates significant revenue but also builds a powerful brand reputation and funds the next wave of R&D innovation.

Furthermore, precise target identification is the cornerstone of personalized therapies. By understanding which molecular targets drive disease in specific patient subpopulations, we can move away from a one-size-fits-all model. This involves developing treatments tailored to an individual’s genetic profile, tumor mutations, or other biomarkers. This approach often involves co-developing a companion diagnostic, a test that identifies patients most likely to respond to a therapy. The result is a more effective and safer healthcare system: delivering the right drug to the right patient at the right time.

The Data-Driven Methods That Pinpoint Winning Drug Targets

Modern target identification analytics replaces guesswork with a systematic process. It combines vast datasets, computational methods, and lab techniques to find the precise molecular switches that can treat a disease.

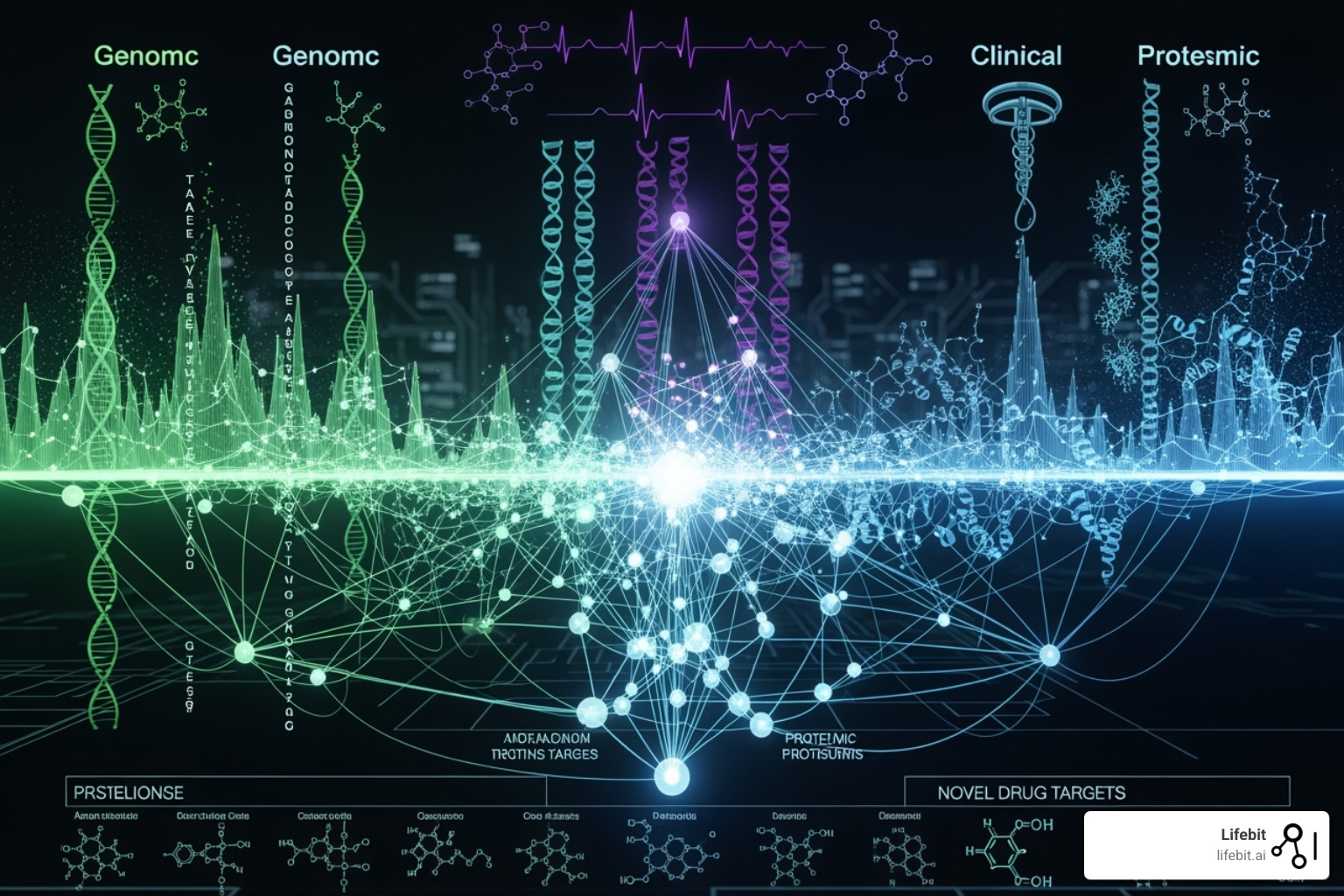

From Data Chaos to Actionable Insight: The Multi-Omics Foundation

Every breakthrough in modern drug discovery starts with diverse, high-quality data that paints a complete, multi-layered picture of disease biology. Integrating these “omics” layers is key to moving from correlation to causation.

- Genomics: This is the foundation, revealing the genetic blueprint. Techniques like Genome-Wide Association Studies (GWAS) analyze the genomes of thousands of individuals to identify genetic variants associated with a higher risk of disease. For example, identifying variants in the APOE gene linked to Alzheimer’s disease has focused research on its role. Whole-genome sequencing can pinpoint specific disease-driving mutations in rare diseases or cancer.

- Transcriptomics: This layer shows which genes are actively being transcribed into RNA, providing a dynamic snapshot of cellular activity. RNA-sequencing (RNA-seq) compares gene expression levels between healthy and diseased tissues, highlighting entire pathways that are up- or down-regulated. A gene that is highly overexpressed in tumor tissue compared to healthy tissue becomes a strong candidate for an oncology target.

- Proteomics: Since most drugs target proteins, this layer is critical. It involves studying the entire set of proteins—their structures, functions, modifications, and interactions. Mass spectrometry-based proteomics can quantify the abundance of thousands of proteins, revealing which ones are dysregulated in a disease state. This provides direct evidence of the molecular machinery gone awry.

- Metabolomics: This examines the small-molecule chemicals (metabolites) involved in cellular processes. By profiling metabolites, we can see the downstream functional consequences of genetic and protein-level changes, identifying disruptions in metabolic pathways that could be targeted.

- Real-world data (RWD): Data from electronic health records (EHRs), insurance claims, and patient registries provides invaluable context on how diseases manifest and progress in large, diverse populations. This helps validate findings from the lab in a real-world setting.

- Clinical trial data and scientific literature: This existing body of knowledge provides crucial context, helping researchers build on previous successes and avoid past failures.

This data arrives in a chaotic mix of formats, from different labs, with varying standards. Data harmonization is the non-negotiable first step to standardize and integrate these disparate sources. Without clean, analysis-ready data, even the most powerful AI is flying blind.
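To make the harmonization step concrete, here is a minimal sketch of mapping records from two sources onto one shared schema. Everything in it is an assumption for illustration: the alias table, the choice of ng/mL as the common unit, the field names, and the `harmonize` helper are hypothetical, and a production pipeline would use curated ontologies and a common data model rather than hand-written dictionaries.

```python
from dataclasses import dataclass

# Hypothetical alias table for illustration; a real pipeline would rely on a
# curated ontology or identifier-mapping resource.
GENE_ALIASES = {"apoe": "APOE", "apo-e": "APOE", "tnf": "TNF", "tnf-alpha": "TNF"}

# Conversion factors into one illustrative common unit (ng/mL).
TO_NG_PER_ML = {"ng/ml": 1.0, "ug/ml": 1000.0, "pg/ml": 0.001}


@dataclass(frozen=True)
class Measurement:
    sample_id: str
    gene: str
    value_ng_per_ml: float
    source: str


def harmonize(record: dict, source: str) -> Measurement:
    """Map one raw record onto the shared schema, failing loudly on unknowns."""
    gene_key = str(record["gene"]).strip().lower()
    unit_key = str(record["unit"]).strip().lower()
    if gene_key not in GENE_ALIASES:
        raise ValueError(f"unmapped gene identifier: {record['gene']!r}")
    if unit_key not in TO_NG_PER_ML:
        raise ValueError(f"unknown unit: {record['unit']!r}")
    return Measurement(
        sample_id=str(record["sample"]).strip(),
        gene=GENE_ALIASES[gene_key],
        value_ng_per_ml=float(record["value"]) * TO_NG_PER_ML[unit_key],
        source=source,
    )


# Two made-up sources that spell genes, units, and sample IDs differently.
lab_a = [{"sample": "S1", "gene": "ApoE", "value": "2.5", "unit": "ug/mL"}]
lab_b = [{"sample": " S2 ", "gene": "TNF-alpha", "value": 800, "unit": "pg/mL"}]

harmonized = [harmonize(r, "lab_a") for r in lab_a]
harmonized += [harmonize(r, "lab_b") for r in lab_b]
for row in harmonized:
    print(row)
```

Raising an error on an unmapped identifier or unit, rather than passing the value through, is a deliberate choice in this sketch: silent pass-through is how inconsistent records reach downstream models.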

The Analytical Arsenal: From High-Throughput Screening to Genetic Perturbation

Once data is harmonized, a powerful arsenal of analytical techniques is deployed to sift through the noise and pinpoint promising targets.

Computational and systems biology are used to build comprehensive models of biological networks. This allows researchers to simulate how components interact and predict how the system will react to the disruption of a specific node (the potential target). Statistical analysis, particularly methods like differential expression analysis, identifies statistically significant differences between patient and control groups.
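As a rough illustration of differential expression analysis, the sketch below compares made-up expression values for two placeholder genes between patient and control samples. It reports a log2 fold change and an exact permutation p-value. The gene names, values, thresholds, and pseudocount are assumptions, and the permutation test stands in for the dedicated statistical models and multiple-testing correction that real RNA-seq workflows rely on.

```python
from itertools import combinations
from math import log2
from statistics import mean


def log2_fold_change(disease, control, pseudocount=1.0):
    """Log2 ratio of mean expression; the pseudocount avoids division by zero."""
    return log2((mean(disease) + pseudocount) / (mean(control) + pseudocount))


def permutation_p_value(disease, control):
    """Two-sided exact permutation p-value for the difference in group means."""
    pooled = list(disease) + list(control)
    n = len(disease)
    total = sum(pooled)
    observed = abs(mean(disease) - mean(control))
    extreme = count = 0
    # Enumerate every way of relabeling n samples as "disease".
    for idx in combinations(range(len(pooled)), n):
        group_sum = sum(pooled[i] for i in idx)
        diff = abs(group_sum / n - (total - group_sum) / (len(pooled) - n))
        extreme += diff >= observed - 1e-12
        count += 1
    return extreme / count


# Made-up values: (patient samples, control samples) per placeholder gene.
expression = {
    "GENE_A": ([120, 135, 128, 140], [30, 28, 35, 31]),
    "GENE_B": ([50, 47, 52, 49], [48, 51, 50, 49]),
}

results = {
    gene: (log2_fold_change(d, c), permutation_p_value(d, c))
    for gene, (d, c) in expression.items()
}
# Illustrative thresholds only; real analyses also correct for multiple testing.
candidates = [g for g, (lfc, p) in results.items() if abs(lfc) >= 1 and p < 0.05]
print(results)
print(candidates)
```

With only four samples per group, the smallest achievable p-value is 2/70, which is one reason real studies need larger cohorts before a gene is treated as a credible candidate.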

More specialized experimental and computational techniques provide deeper insights:

- High-Throughput Screening (HTS) uses robotics and automated liquid handling to test millions of chemical compounds against a specific biological target in a miniaturized format (like microtiter plates). This can rapidly identify “hits”—molecules that interact with the target.

- Genetic Screening (e.g., CRISPR-Cas9 knockout, or CRISPR activation using a catalytically inactive Cas9) allows for the systematic knockout or activation of genes across the genome. By observing which genetic perturbations replicate a disease phenotype or, conversely, rescue it, researchers can directly link genes to function and identify critical targets.

- Chemical proteomics uses specialized chemical probes to “fish out” the protein targets of a compound from a complex cellular lysate, confirming drug engagement and identifying potential off-targets.

- Pharmacophore models are computational 3D models that define the essential structural features a molecule must have to bind to a target receptor. These are invaluable for virtual screening and optimizing lead compounds.

- Activity-based protein profiling (ABPP) and mass spectrometry are used to profile the functional state of enzymes and quantify protein changes in disease states with high precision.

The real power comes from an iterative cycle of combining these methods—using computational predictions to guide experiments, and then feeding experimental results back to refine the models.
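For the high-throughput screening step, a minimal sketch of plate-level quality control and hit calling might look like the following. The well signals, compound names, and the 50% inhibition cutoff are made up for illustration. The Z'-factor is a widely used assay-quality statistic, and values above roughly 0.5 are commonly taken as a sign that the controls separate well.

```python
from statistics import mean, stdev


def z_prime(positive, negative):
    """Z'-factor: assay quality from the separation of the control wells."""
    spread = 3 * (stdev(positive) + stdev(negative))
    return 1 - spread / abs(mean(positive) - mean(negative))


def percent_inhibition(signal, neg_mean, pos_mean):
    """Scale a raw signal so the negative control is 0% and the positive 100%."""
    return 100 * (neg_mean - signal) / (neg_mean - pos_mean)


# Made-up plate readings: negative controls show no inhibition (high signal),
# positive controls show full inhibition (low signal).
negative_controls = [1000, 980, 1020, 1010, 990]
positive_controls = [100, 110, 90, 105, 95]
compound_wells = {"cmpd_001": 950, "cmpd_002": 400, "cmpd_003": 130}

quality = z_prime(positive_controls, negative_controls)
neg_mean, pos_mean = mean(negative_controls), mean(positive_controls)
inhibition = {
    name: percent_inhibition(signal, neg_mean, pos_mean)
    for name, signal in compound_wells.items()
}
# Illustrative cutoff: call a hit at 50% inhibition or more.
hits = [name for name, pct in inhibition.items() if pct >= 50]

print(f"Z'-factor: {quality:.2f}")
print(hits)
```

Checking assay quality before calling hits matters because a noisy plate produces "hits" that will not reproduce in follow-up experiments.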

From Hypothesis to Certainty: The Gauntlet of Target Validation

Identifying a promising target is just the start. Before committing hundreds of millions of dollars to clinical development, that target must be rigorously validated. This is the process of target validation, a critical gauntlet where many promising but ultimately flawed candidates are filtered out.

Validation is a careful, multi-stage progression of experiments designed to build confidence in a target’s role.

In vitro techniques (in test tubes or cell cultures) are the first line of defense. They are fast and cost-effective for answering basic questions: Does a drug candidate bind to its intended target? Does this binding produce a measurable cellular response (e.g., inhibiting an enzyme, blocking a signaling pathway)? Assays like reporter gene assays can measure changes in gene expression, while the Cellular Thermal Shift Assay (CETSA) provides powerful evidence of drug-protein engagement within living cells.

However, cells in a dish are a simplified model. The next crucial step is in vivo validation using animal models. These experiments aim to confirm that modulating the target produces a therapeutic effect in a complex, living organism. For example, a drug targeting a protein involved in tumor growth would be tested in mice with implanted human tumors (xenograft models). In some cases, “humanized” mouse models, which are genetically engineered to express a human gene or have a human-like immune system, provide even more reliable predictors of clinical outcomes.

This rigorous, multi-step validation process acts as an insurance policy against late-stage failure, ensuring that only targets with the highest probability of translating into effective human therapies are advanced into the costly clinical trial phase.

How AI is Revolutionizing Target Identification

Traditional drug discovery is like searching for a needle in a haystack. AI is the super-intelligent assistant that scans the entire field in minutes and tells you exactly where to look. That’s what AI and machine learning are doing for target identification analytics.

These technologies are redefining what’s possible, making previously “undruggable” targets reachable. AI excels at seeing patterns humans miss, connecting dots across millions of data points, and generating hypotheses in a fraction of the time.

Predictive modeling is at the heart of this revolution. Machine learning analyzes relationships between genes, proteins, and diseases with incredible precision. Network analysis reveals vulnerabilities in disease pathways, while Natural Language Processing (NLP) extracts insights from millions of scientific papers. The impact is measurable: what once took years can now happen in months.

Find Targets in Months, Not Years, with Predictive AI

This is where AI truly shines, acting as a powerful hypothesis-generation engine.

- Deep learning: Neural networks can digest vast, heterogeneous multi-omics data to identify subtle, complex disease signatures that conventional statistical analysis can miss. For instance, Graph Neural Networks (GNNs) are well suited to analyzing protein-protein interaction networks, predicting how a drug might affect the entire system, not just a single protein. Convolutional Neural Networks (CNNs), best known for image recognition, can be applied to high-content cellular imaging data to identify phenotypic changes caused by disease or drug treatment.

- QSAR (Quantitative Structure-Activity Relationship) analysis: This long-established modeling technique has been strengthened by modern machine learning. It predicts a compound’s biological activity (e.g., how strongly it binds to a target) based on its chemical structure. The process involves generating hundreds of molecular descriptors (features describing the molecule’s size, charge, shape, and hydrophobicity) and using them to train a model. This allows for the rapid in silico screening of virtual libraries containing millions of potential drug candidates, prioritizing the most promising ones for lab synthesis and testing.

- AI-driven network analysis: Biology is a network. AI excels at mapping and analyzing these complex biological networks (e.g., gene regulatory networks, metabolic pathways). By identifying “hub” proteins, “bottleneck” nodes, or feedback loops within a disease-specific network, AI can uncover non-obvious targets that may exert master control over an entire disease cascade. Targeting such a node can be far more effective than targeting a downstream, peripheral player.
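The idea of "hub" and "bottleneck" nodes can be illustrated with two classical graph measures: degree (how many partners a protein has) and betweenness centrality (how many shortest paths run through it). The sketch below computes both on a tiny, invented interaction network using Brandes' algorithm. The node names and edges are hypothetical, and this is a plain graph calculation, not a machine learning model.

```python
from collections import deque

# Hypothetical interaction network: HUB has many partners, BRIDGE connects
# the HUB neighborhood to a separate cluster (P4, P5).
edges = [("P1", "HUB"), ("P2", "HUB"), ("P3", "HUB"), ("HUB", "BRIDGE"),
         ("BRIDGE", "P4"), ("BRIDGE", "P5"), ("P4", "P5")]

adj = {}
for a, b in edges:
    adj.setdefault(a, set()).add(b)
    adj.setdefault(b, set()).add(a)


def betweenness(adj):
    """Unnormalized betweenness centrality (Brandes) for an undirected graph."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        # Breadth-first search from s, counting shortest paths (sigma).
        stack, queue = [], deque([s])
        preds = {v: [] for v in adj}
        sigma = {v: 0 for v in adj}
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating path dependencies.
        delta = {v: 0.0 for v in adj}
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was counted from both endpoints.
    return {v: c / 2 for v, c in score.items()}


degree = {v: len(neighbors) for v, neighbors in adj.items()}
centrality = betweenness(adj)
ranked = sorted(adj, key=lambda v: centrality[v], reverse=True)
print(ranked[:2], degree["HUB"], centrality["HUB"], centrality["BRIDGE"])
```

In this toy network BRIDGE has fewer partners than HUB yet still carries every path between the two clusters, which is the kind of non-obvious node that degree alone would underrate.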

The speed gains are transformative. Faster target identification allows research teams to move from raw data to a validated target in a fraction of the time. AI’s unparalleled ability for pattern recognition in large datasets allows scientists to test more hypotheses computationally, fail faster and cheaper, and prioritize the most promising paths with a high degree of data-driven confidence.

Overcoming the “Black Box” Problem with Explainable AI

A critical challenge with advanced AI models is that they can operate as “black boxes,” providing highly accurate predictions without a clear explanation of their reasoning. In drug discovery, where lives and billions of dollars are at stake, “because the algorithm said so” is an unacceptable justification for advancing a target.

This valid concern about interpretability and replicability has spurred the development of Explainable AI (XAI). XAI encompasses a set of techniques designed to make AI models transparent and understandable to human experts. For example, methods like SHAP (SHapley Additive exPlanations) can be applied to a model’s prediction for a specific target. SHAP assigns an importance score to each input feature (e.g., a specific gene’s expression level, a patient’s clinical parameter), showing exactly which pieces of evidence pushed the model toward its conclusion. This allows a biologist to see if the model’s reasoning aligns with known biological principles.
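To show what a Shapley-style attribution looks like, the sketch below computes exact Shapley values for a toy target-scoring function by enumerating every feature ordering. The scoring function, feature names, and baseline are invented for illustration. This brute-force approach only works for a handful of features; the SHAP library uses approximations to scale, and its API is not used here.

```python
from itertools import permutations
from math import factorial


def target_score(x):
    """Toy model: two additive terms plus an expression-druggability interaction."""
    return (0.5 * x["expression"]
            + 0.3 * x["genetic_evidence"]
            + 0.2 * x["expression"] * x["druggability"])


def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    features = list(instance)
    contrib = {f: 0.0 for f in features}
    for order in permutations(features):
        current = dict(baseline)
        previous = model(current)
        for f in order:
            current[f] = instance[f]   # switch feature f from baseline to actual
            updated = model(current)
            contrib[f] += updated - previous
            previous = updated
    n_orders = factorial(len(features))
    return {f: total / n_orders for f, total in contrib.items()}


# Hypothetical candidate target and an all-zero reference point.
instance = {"expression": 2.0, "genetic_evidence": 1.0, "druggability": 1.0}
baseline = {"expression": 0.0, "genetic_evidence": 0.0, "druggability": 0.0}

phi = shapley_values(target_score, instance, baseline)
print(phi)
print(sum(phi.values()), target_score(instance) - target_score(baseline))
```

The attributions add up to the difference between the prediction and the baseline, and the interaction term is split evenly between the two features involved, which is what lets a biologist read them as "how much each piece of evidence moved the score."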

Beyond explanation, the goal is to establish causality. Causal inference methods go a step further than standard machine learning, which is excellent at finding correlations. These methods aim to distinguish true cause-and-effect relationships from mere associations. For example, Mendelian Randomization uses natural genetic variation in a population as an instrumental variable to determine if a potential target (like the level of a certain protein) is causally linked to a disease outcome. This provides much stronger evidence that modulating the target will have a therapeutic effect.
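A common way to turn this idea into a number is the Wald ratio: divide a variant's effect on the disease by its effect on the exposure (here, a protein level), then pool several variants with inverse-variance weighting. The sketch below uses invented summary statistics and a first-order standard error. It assumes the variants are valid, independent instruments, which real analyses must check carefully.

```python
from math import sqrt


def wald_ratio(beta_exposure, beta_outcome, se_outcome):
    """Per-variant causal estimate with a first-order standard error."""
    estimate = beta_outcome / beta_exposure
    std_error = se_outcome / abs(beta_exposure)
    return estimate, std_error


def ivw_estimate(variants):
    """Fixed-effect inverse-variance weighted average of the Wald ratios."""
    ratios = [wald_ratio(*v) for v in variants]
    weights = [1 / se ** 2 for _, se in ratios]
    pooled = sum(w * est for w, (est, _) in zip(weights, ratios)) / sum(weights)
    return pooled, sqrt(1 / sum(weights))


# Made-up summary statistics per variant:
# (effect on protein level, effect on disease risk, SE of the disease effect)
variants = [
    (0.10, 0.050, 0.010),
    (0.20, 0.090, 0.020),
    (0.15, 0.080, 0.015),
]

effect, se = ivw_estimate(variants)
ci = (effect - 1.96 * se, effect + 1.96 * se)
print(f"causal effect per unit of protein: {effect:.3f} (95% CI {ci[0]:.3f} to {ci[1]:.3f})")
```

A confidence interval that excludes zero, as in this made-up example, is the kind of signal that would support the protein as a causal candidate, subject to sensitivity analyses for pleiotropy.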

Building trust in AI-driven results requires a symbiotic relationship between computational power and biological wisdom. At Lifebit, our federated AI platform is designed to create a transparent, auditable pipeline. Researchers can scrutinize how algorithms arrive at their conclusions and how AI-generated predictions align with established biology. Every AI-generated hypothesis is still treated as just that—a hypothesis—which must then be rigorously validated through experimentation. We aren’t replacing scientists; we’re augmenting them with computational superpowers. This powerful combination of machine intelligence and human expertise is the engine that will deliver the next generation of breakthrough therapies.

Beyond Pharma: How Target Analytics Drives Success Across Industries

The core principle of target identification analytics—systematically pinpointing critical points in a complex system—transcends industry boundaries. The same thinking that finds a cancer target can also identify a cyber threat or a military vulnerability.

Drug Discovery and Life Sciences

This is the primary domain of target identification analytics, where better target selection translates directly into therapies that save patients’ lives and opens new avenues of research in the most challenging disease areas.

- Oncology: Analytics is revolutionizing cancer treatment by moving beyond cytotoxic chemotherapies to targeted therapies. It helps identify novel targets on tumor cells or in the tumor microenvironment. This has led to breakthroughs like immune checkpoint inhibitors targeting PD-1/PD-L1, which unleash the patient’s own immune system to fight cancer. It is also cracking the code of once “undruggable” targets like KRAS, a common cancer-driving mutation, leading to new classes of drugs.

- Neurodegenerative disorders: For complex, slow-progressing diseases like Alzheimer’s and Parkinson’s, analytics helps untangle the intricate web of genetic and environmental factors. By integrating genomic, proteomic, and imaging data, researchers can identify and validate targets like tau protein and amyloid-beta in Alzheimer’s or alpha-synuclein in Parkinson’s, pursuing intervention points that could slow, halt, or even reverse disease progression.

- Inflammatory and Autoimmune Diseases: In diseases like rheumatoid arthritis or Crohn’s disease, the immune system mistakenly attacks the body. Analytics helps find the precise “sweet spot”—a target that can dampen the harmful inflammatory response while preserving the immune system’s ability to fight infection. This has led to highly successful biologic drugs targeting cytokines like TNF-alpha and interleukins.

- Precision Medicine: This is the ultimate goal. Analytics allows us to stratify patient populations and move beyond a one-size-fits-all approach. By identifying the right target for a specific patient’s disease subtype—based on their unique genetic profile or tumor mutations—we can deliver highly personalized and effective treatments.

This work is intrinsically linked to and accelerates biomarker discovery. A well-validated target often serves as a powerful biomarker, a measurable indicator that can be used for early diagnosis, patient stratification in clinical trials, and monitoring treatment response.

Cybersecurity and Defense

The military and cybersecurity sectors face a similar challenge: identifying critical vulnerabilities in complex, interconnected systems.

In military target development, analysts prioritize enemy capabilities or infrastructure for disruption, a process that mirrors drug discovery. High-Value Target (HVT) prioritization relies on the same data analysis and pattern recognition skills.

In cybersecurity, threat actor identification and vulnerability scanning use network analysis to find malicious actors and system weaknesses, much like we search for druggable pockets in proteins. Anomaly detection algorithms that spot cyberattacks use the same statistical methods that help us find aberrant biological signals. The language differs (“threat vectors” instead of “disease pathways”), but the analytical framework is the same.

The Problems to Overcome: Data, Bias, and Governance

Despite its immense promise, deploying target identification analytics at scale presents significant challenges that must be addressed.

- Data Integration and Standardization: The “garbage in, garbage out” principle applies. Harmonizing massive, multimodal data from different labs, hospitals, and countries—each with its own formats, protocols, and quality levels—is a monumental technical hurdle. It requires the adoption of Common Data Models (like OMOP CDM) and standardized ontologies to ensure that “blood pressure” means the same thing everywhere.

- Time and resource intensive: The initial setup is not trivial. It requires significant investment in secure cloud infrastructure, a team of expert data scientists and bioinformaticians, and robust data governance frameworks. The cost of generating high-quality multi-omics data at scale is also substantial.

- Data and algorithmic bias: This is a critical ethical and scientific challenge. AI models are trained on data, and if that data is not representative of the global population, the models will be biased. For example, a large percentage of genomic data comes from individuals of European ancestry. An AI model trained on this data may identify targets or predict drug responses that are less relevant or even incorrect for individuals of African or Asian descent, exacerbating health inequities.

- Patient privacy and data security: The data fueling these analytics is among the most sensitive personal information in existence. Protecting patient privacy is paramount. This requires robust governance and strict compliance with regulations like GDPR in Europe and HIPAA in the US. A single data breach can have devastating consequences. This is where Privacy-Enhancing Technologies (PETs) like federated learning become essential. At Lifebit, our federated platform allows AI models to be trained on distributed data sources without the raw data ever leaving its secure, local environment. The model “travels to the data,” not the other way around, ensuring security and governance are built-in from the ground up.
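The "model travels to the data" idea can be sketched with a generic federated averaging loop. Each site improves a shared model on its own records and returns only the updated parameter and a sample count, and a coordinator averages those updates. This is an illustrative toy with a one-parameter linear model and invented site data. It is not a description of any particular platform's implementation, and it omits the encryption, auditing, and access controls a real deployment needs.

```python
def local_update(weight, data, learning_rate=0.01, steps=5):
    """Run a few gradient steps for y = weight * x on one site's private data."""
    for _ in range(steps):
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    # Only the parameter and the sample count leave the site, never the records.
    return weight, len(data)


def federated_average(updates):
    """Combine site updates, weighting each by its number of samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Made-up (x, y) records held at two hypothetical sites.
sites = {
    "hospital_a": [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)],
    "hospital_b": [(1.5, 3.0), (2.5, 5.1)],
}

global_weight = 0.0
for _ in range(50):  # communication rounds
    updates = [local_update(global_weight, data) for data in sites.values()]
    global_weight = federated_average(updates)

print(f"shared model weight after training: {global_weight:.3f}")
```

The coordinator in this sketch never sees an individual record, only one number and one count per site per round, which is the property that makes the pattern attractive for sensitive clinical and genomic data.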

The KPIs That Matter: How to Measure Real-World Impact

To justify the investment and ensure that analytics is delivering on its promise, we must track concrete Key Performance Indicators (KPIs).

- Time to Target Validation: This measures the speed of the early pipeline. How quickly can the organization move from a broad hypothesis to a fully validated target ready for a drug discovery campaign? Analytics aims to compress this timeline from years to months.

- Reduction in Late-Stage Failures: This is the ultimate bottom-line metric. A measurable drop in the ~58% failure rate in Phase II and Phase III trials is the clearest proof of better target selection. This should be tracked specifically for failures due to lack of efficacy.

- Number of Novel Targets Entering the Portfolio: This quantifies innovation. How many new, first-in-class targets are being identified and advanced, versus pursuing “me-too” drugs for existing targets? This is a leading indicator of future market leadership.

- Cost per Validated Target: A direct measure of R&D efficiency. By “failing faster and cheaper” on poor targets and accelerating good ones, the overall cost to get a single validated target into the pipeline should decrease significantly.

- Predictive Accuracy of In Silico Models: How often do the computational predictions made by AI models hold up when tested in wet lab experiments? A high accuracy rate builds trust and demonstrates the value of the computational platform.

- Translational Success Rates: This measures the success of moving from preclinical to clinical stages. What percentage of targets validated in animal models succeed in Phase I human trials? Improving this rate is a key indicator that preclinical models are becoming more predictive of human biology.

Rigorously monitoring these KPIs is essential to demonstrate ROI and prove that this technology-driven approach is delivering what matters most: better, safer, and faster medicines for patients.
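As a minimal sketch of how a few of these KPIs could be computed from a portfolio log, consider the example below. The record fields, target names, dates, and spend figures are all invented, and the in silico metric shown is precision (the share of predicted targets that were confirmed in the lab), which is only one of several reasonable definitions of predictive accuracy.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class TargetRecord:
    name: str
    nominated: date
    validated: Optional[date]   # None if the target was dropped
    predicted_hit: bool         # did the in silico model flag it?
    confirmed_in_lab: bool      # did wet-lab experiments confirm it?
    spend_usd: float


def portfolio_kpis(records):
    validated = [r for r in records if r.validated is not None]
    predicted = [r for r in records if r.predicted_hit]
    return {
        "median_days_to_validation": median(
            (r.validated - r.nominated).days for r in validated
        ),
        # Spend on dropped targets still counts toward the cost of the winners.
        "cost_per_validated_target": sum(r.spend_usd for r in records) / len(validated),
        "in_silico_precision": sum(r.confirmed_in_lab for r in predicted) / len(predicted),
    }


# Hypothetical portfolio log.
records = [
    TargetRecord("TARGET_1", date(2023, 1, 10), date(2023, 7, 9), True, True, 1_000_000),
    TargetRecord("TARGET_2", date(2023, 3, 1), date(2023, 12, 26), True, True, 1_500_000),
    TargetRecord("TARGET_3", date(2023, 2, 15), None, True, False, 500_000),
]

kpis = portfolio_kpis(records)
print(kpis)
```

Including the spend on dropped targets in the cost per validated target keeps the metric honest: a program that abandons poor targets early should see that number fall over time.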

Conclusion: The Future is Targeted, Precise, and AI-Driven

The era of target identification analytics is here, fundamentally changing how we develop new medicines. We’ve moved from staggering costs and a 58% late-stage failure rate to an alternative powered by AI and harmonized data. This new approach collapses timelines from years to months, reduces costs, and gets treatments to patients faster.

AI is turning “undruggable” targets into viable opportunities by uncovering patterns in massive multi-omic datasets that were previously invisible.

But none of this works without the right foundation. High-quality, harmonized data is the bedrock of modern drug discovery. Without access to integrated datasets, even the most sophisticated AI is powerless.

This is why we built Lifebit’s federated AI platform. The future of drug discovery depends on secure, real-time access to global biomedical data and the power to analyze it. Our platform, which includes our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer), delivers compliant, harmonized data with built-in AI/ML and federated governance.

The goal is to find the right targets with precision and speed, reducing waste and accelerating breakthroughs. We’re not waiting for this future to arrive. We’re building it, one validated target at a time.

Ready to accelerate your drug discovery initiatives with next-generation target identification analytics? Learn how our federated AI platform can transform your research at Lifebit: Pharma Target Identification & Translation.