Detailed Guide to Trusted Research Environments: Security Explained

The Health Data Dilemma: Unlock Insights Without 6-Month Delays or Security Risks

Trusted Research Environments (TREs) are the answer to one of modern medicine’s most urgent dilemmas: how to unlock life-saving insights from sensitive health data without compromising patient privacy. Here’s what you need to know:

How TREs Secure Global Health Data Sharing:

- Data Never Moves – Researchers access data remotely in a locked-down environment; sensitive information stays put.

- Five-Layer Security Framework – Safe People, Safe Projects, Safe Settings, Safe Data, and Safe Outputs protect every step.

- Controlled “Airlock” System – Only approved, non-disclosive results can exit the environment after rigorous checks.

- Full Audit Trails – Every action is logged and monitored in real-time to detect suspicious activity.

- Regulatory Compliance – Built-in adherence to GDPR, HIPAA, and other global data protection laws.

Healthcare generates 30% of the world’s data, yet traditional sharing is dangerously slow—agreements can take six months or longer, a critical delay for patients. Meanwhile, 66% of medical researchers cite data sensitivity as the top barrier to collaboration.

The stakes are immense. Precision medicine’s progress is blocked by data silos and security fears. Pharma companies face a “once-in-a-century opportunity” with AI but can’t access the necessary datasets. Public health agencies need real-time insights but can’t move data across borders.

Trusted Research Environments (TREs)—also called Secure Data Environments—solve this. They are highly secure computing environments where approved researchers remotely analyze sensitive data without ever moving it. The data stays in its fortress; only aggregated, non-identifiable results can leave after rigorous checks.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We build federated AI platforms that power secure health data analysis for leading organizations globally, which makes Trusted Research Environments central to our mission. My 15 years in computational biology have shown me that the right infrastructure turns impossible research challenges into tractable problems.

The Digital Fortress: How to Analyze Global Health Data Without Moving It

Picture a digital fortress where the world’s most sensitive health data—genomic sequences, electronic health records, medical imaging—lives locked safely inside, while researchers globally can still analyze it. That’s a Trusted Research Environment (TRE).

You may also hear them called Secure Data Environments (SDEs) or Data Safe Havens. The name varies, but the mission is constant: enable life-saving research without compromising patient privacy.

The magic of TREs lies in one principle: data immobility. The sensitive information never leaves its secure environment. Instead of downloading massive, risky datasets, researchers come to the data, accessing it remotely within a controlled digital space. This flips traditional data sharing on its head, eliminating the security risks of laptops, emails, and USB drives full of patient information.

Why does this matter? Healthcare generates a tsunami of complex, unstructured data. The bare sequence of a single human genome occupies roughly 750 MB, and raw sequencing files are far larger. At 750 MB each, one million genomes already come to about 750 TB; at that scale, traditional sharing methods become impossible to secure.

TREs provide the secure, remote access needed to analyze this data at scale. They enable researchers in London, Boston, and Singapore to collaborate on the same dataset without ever moving it. Built to align with regulations like GDPR and HIPAA, they give organizations confidence that their research is compliant.

This is why TREs are more than a technical solution: they represent a fundamental shift. Instead of choosing between scientific progress and patient privacy, TREs let us have both. As validated by foundational research on secure computing environments, TREs are essential infrastructure for modern medical research.

The 5-Layer Security Framework That Makes Health Data Sharing Safe

When people ask how TREs work in practice, I point to the Five Safes framework. Developed by the UK Office for National Statistics, it’s widely treated as the gold standard for governing secure data access because it addresses risk holistically across five domains: people, projects, settings, data, and outputs. That breadth is a large part of why it’s so widely adopted.

Think of it as five interlocking shields. A weakness in one compromises the entire system, as a TRE’s security is only as strong as its weakest Safe.

Safe People: Only Trusted Researchers Get In

The most advanced firewall is useless if you give the keys to an untrustworthy individual. The Safe People principle ensures every researcher is vetted, trained, and accountable. This human-centric security layer involves several checks:

- Accreditation and Vetting: Researchers must complete a formal accreditation process. This includes mandatory training (required by 85% of TREs) on data privacy laws (like GDPR and HIPAA), information security, and research ethics. Most TREs (76%) also enforce institutional vetting, meaning the researcher’s home institution must vouch for their credentials.

- Legally Binding Agreements: Researchers sign legally enforceable contracts (used by 79% of TREs) that outline their responsibilities and the severe penalties for misuse, which can range from immediate access revocation and professional sanctions to civil and criminal prosecution.

- Principle of Least Privilege: Access is not a free-for-all. Using Role-Based Access Control (RBAC), TREs enforce a strict principle of least privilege, granting a researcher only the minimum data access required to complete their specific, approved project. This prevents unauthorized exploration of the dataset.
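
The least-privilege check described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular TRE implements RBAC: the `ProjectGrant` and `Researcher` classes, the `can_read` function, and the project and field names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProjectGrant:
    """Data fields one approved project may read (hypothetical model)."""
    project_id: str
    allowed_fields: frozenset


@dataclass
class Researcher:
    name: str
    accredited: bool  # passed training and institutional vetting
    grants: dict = field(default_factory=dict)  # project_id -> ProjectGrant


def can_read(researcher: Researcher, project_id: str, data_field: str) -> bool:
    """Deny by default; allow only accredited users on explicitly granted fields."""
    if not researcher.accredited:
        return False
    grant = researcher.grants.get(project_id)
    return grant is not None and data_field in grant.allowed_fields


# Hypothetical example: a cardiac project that was granted two fields only.
grant = ProjectGrant("cardiac-outcomes", frozenset({"ecg", "ejection_fraction"}))
alice = Researcher("alice", accredited=True, grants={grant.project_id: grant})

print(can_read(alice, "cardiac-outcomes", "ecg"))                  # granted field
print(can_read(alice, "cardiac-outcomes", "psychiatric_history"))  # never granted
```

The design choice worth noting is the default: anything not explicitly granted is refused, so forgetting to configure a field fails closed rather than open.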

Safe Projects: Only Ethical, High-Value Research Allowed

Even a trusted researcher must have a legitimate, ethically sound reason for accessing sensitive data. The Safe Projects principle ensures data is used for projects with a clear potential for public benefit that outweighs any residual privacy risks. Every research proposal is rigorously reviewed by a Data Access Committee (DAC), which often includes clinicians, ethicists, lawyers, and, crucially, patient and public representatives. The guiding principle is proportionality. For example:

- Approved Project: A proposal to analyze genomic and clinical data from 10,000 cancer patients to identify novel biomarkers for predicting treatment response would likely be approved. The public benefit is immense, and the research question is specific.

- Rejected Project: A vague proposal to “explore correlations in the health dataset” would be rejected as a “fishing expedition.” A request from a commercial entity to access data for marketing purposes would be unequivocally denied. This gatekeeping function ensures the social license to use patient data is respected.

Safe Settings: The Digital Fortress

Safe Settings refers to the secure computing environment itself—the technical fortress where data is analyzed and never leaves. Modern TREs are typically built on secure cloud platforms like Azure or AWS, configured with multiple layers of defense:

- Full Audit Trails: Every single action—every login, file opened, and command run—is logged and monitored in real-time. Automated systems flag suspicious activity (e.g., attempts to access unauthorized data) for immediate investigation by a security team.

- Environmental Lockdown: The researcher’s virtual workspace is heavily restricted to prevent data exfiltration. Features like disabled copy-paste, blocked USB ports, and restricted internet access ensure data cannot be moved out through unauthorized channels.

- Network Isolation: The environment is segregated from the public internet using Virtual Private Clouds (VPCs) and strict firewall rules, creating a secure perimeter that is extremely difficult to breach.

Safe Data: Reducing Re-identification Risk

Even within the fortress, steps are taken to protect patient identities. The Safe Data principle involves treating the data to minimize re-identification risk. TREs typically use pseudonymization, where direct identifiers (name, address) are replaced with a secure token. The key linking the token back to the individual is stored separately and securely by the data custodian. This allows for data linkage (e.g., connecting a patient’s genomic data to their clinical record) without exposing their identity to the researcher. Furthermore, data minimization is applied, ensuring researchers only see the specific data fields they need. A project on cardiac outcomes, for instance, would not be given access to a patient’s psychiatric history.
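
One common way to derive such tokens is keyed hashing. The sketch below uses HMAC-SHA256 from Python’s standard library; it is an assumption for illustration, since nothing above says which tokenization method any given TRE uses. The key value, the record layout, and the `pseudonymize` and `strip_and_tokenize` helpers are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would be held by the data custodian,
# stored separately from the data, and never hard-coded.
SECRET_KEY = b"demo-key-held-by-the-data-custodian"


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Derive a stable token from a direct identifier using a keyed hash."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_and_tokenize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with the direct identifier replaced by a token."""
    cleaned = {k: v for k, v in record.items() if k != "patient_id"}
    cleaned["token"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Two hypothetical source datasets that describe the same patient.
genomic = strip_and_tokenize({"patient_id": "patient-0001", "variant": "example-variant"}, SECRET_KEY)
clinical = strip_and_tokenize({"patient_id": "patient-0001", "diagnosis": "example-diagnosis"}, SECRET_KEY)

# The same identifier and key always give the same token, so the records
# can be linked without the researcher ever seeing the identifier.
print(genomic["token"] == clinical["token"])
print("patient_id" in genomic)
```

Because the token depends on the secret key, someone without the key cannot recompute it from a known identifier, which is why the key has to stay with the custodian.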

Safe Outputs: No Accidental Data Leaks

The final shield is Safe Outputs. Researchers never export raw data, only aggregated results. Before any result can leave the TRE, it must pass through a secure “airlock” for disclosure review. This process involves:

- Statistical Disclosure Control (SDC): This is a set of methods to prevent re-identification from summary data. For example, a rule might state that no table cell can represent fewer than 10 individuals. If an output shows results for only three patients, it would be blocked.

- Human-in-the-Loop Review: A telling statistic: 0% of interviewed TRE operators believe software alone can replace human judgment in this process. Automated checks catch obvious risks, but trained disclosure officers review every output request. They look for subtle risks that software might miss, such as deductive disclosure (where an attacker could combine multiple approved outputs to re-identify someone). This human oversight is the non-negotiable last line of defense.
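
The small-cell rule mentioned above is easy to express in code. The following is a minimal sketch using the threshold of 10 from the example; the function names and the sample table are hypothetical, and real disclosure control involves more than this single rule (for instance, suppressing additional cells so that hidden values cannot be recovered from totals).

```python
THRESHOLD = 10  # minimum number of individuals a table cell may represent


def passes_threshold_rule(table: dict, threshold: int = THRESHOLD) -> bool:
    """True only if every cell count meets the minimum threshold."""
    return all(count >= threshold for count in table.values())


def suppress_small_cells(table: dict, threshold: int = THRESHOLD) -> dict:
    """Replace counts below the threshold with None so they cannot be released."""
    return {
        cell: (count if count >= threshold else None)
        for cell, count in table.items()
    }


# Hypothetical output table: patients per treatment group.
requested_output = {"group_a": 42, "group_b": 3, "group_c": 17}

print(passes_threshold_rule(requested_output))
print(suppress_small_cells(requested_output))
```

A check like this catches the obvious cases automatically; the subtler risks, such as combining several approved outputs, are what the human reviewer is there for.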

Inside the Airlock: The Technical Blueprint for Zero-Leak Data Security

The genius of a TRE is its core principle: researchers come to the data, not the other way around. This inverts the traditional, high-risk model of downloading sensitive datasets onto local machines, which is a security nightmare. Instead, the data remains stationary within a secure digital fortress, and approved researchers are granted remote access to a controlled workspace to conduct their analysis.

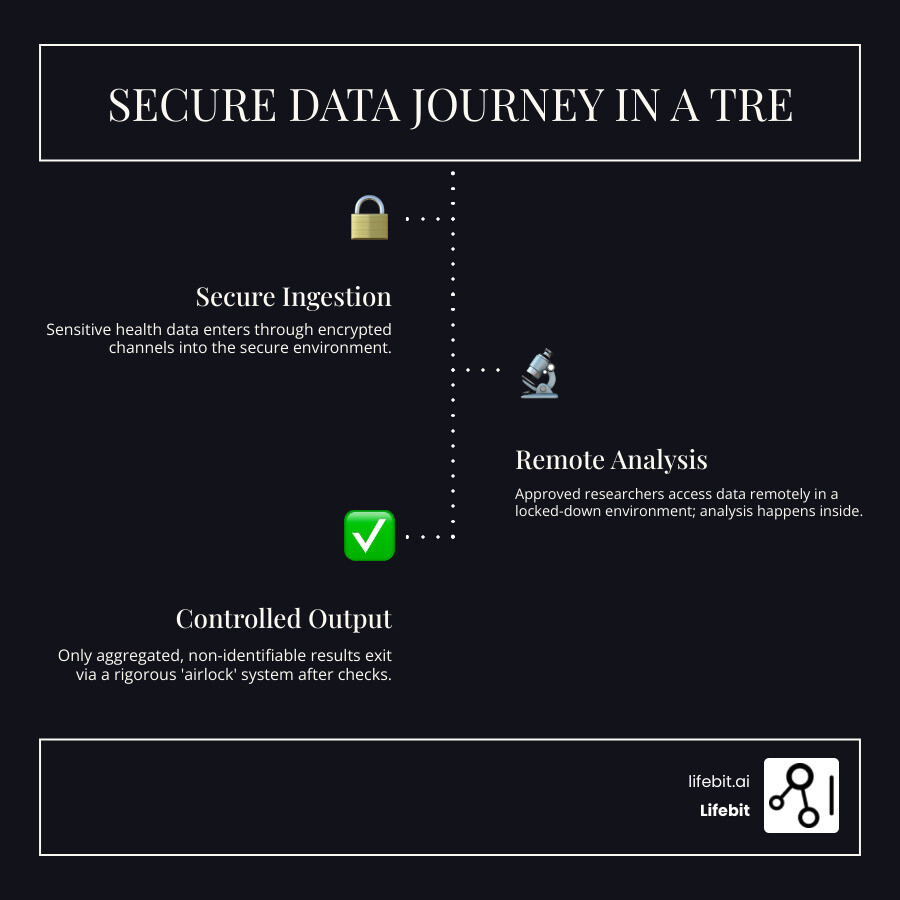

The Secure Data Journey Inside a TRE

Let’s follow the data’s journey from ingestion to insight, illustrating the security controls at each stage:

- Secure Ingestion and Quarantine: Data enters through encrypted channels (e.g., SFTP) into a segregated “quarantine zone.” Here, it undergoes automated checks for malware, format validation, and integrity. This ensures that corrupted or malicious files cannot compromise the core research environment.

- Harmonization and Preparation: Raw data is cleaned, structured, and de-identified. Direct personal identifiers are stripped and replaced with secure cryptographic tokens (pseudonymization). Datasets are often mapped to a Common Data Model (CDM), such as OMOP, to standardize format and terminology. This enables powerful, large-scale analysis and linkage across disparate sources without exposing patient identities to the researcher.

- Analysis in a Locked-Down Workspace: An approved researcher logs into a dedicated, isolated virtual workspace. The data itself is never downloaded; the researcher’s tools (like RStudio or Jupyter notebooks) run within the secure environment, and their queries are executed against the data in place. The workspace has disabled copy-paste to the local machine, no internet access (or highly restricted access), and is under constant monitoring.

- Controlled Output Review (The Airlock): When analysis is complete, the researcher cannot simply export their work. They must submit a formal request to export their results—never the raw data. These outputs enter the “airlock” for rigorous security checks before release.
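
For the quarantine step at the start of this journey, the format and integrity checks might look something like the sketch below. It assumes the data provider sends a SHA-256 checksum alongside each file; the allow-list of file suffixes and the `check_quarantined_file` function are hypothetical, and malware scanning is deliberately left out.

```python
import hashlib
import hmac

# Hypothetical allow-list of file types the environment accepts.
ALLOWED_SUFFIXES = (".csv", ".vcf", ".parquet")


def check_quarantined_file(filename: str, payload: bytes, expected_sha256: str) -> list:
    """Return a list of problems; an empty list means the file may leave quarantine."""
    problems = []
    if not filename.lower().endswith(ALLOWED_SUFFIXES):
        problems.append("unexpected file type")
    actual = hashlib.sha256(payload).hexdigest()
    # compare_digest avoids leaking how many characters matched.
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        problems.append("checksum mismatch")
    return problems


payload = b"patient_token,measurement\nabc123,7.2\n"
declared = hashlib.sha256(payload).hexdigest()  # checksum supplied by the sender

print(check_quarantined_file("cohort.csv", payload, declared))
print(check_quarantined_file("cohort.csv", payload + b"tampered", declared))
```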

Key Security Technologies and Protocols

A TRE’s digital fortress is an architecture built on layers of proven security technologies:

- Role-Based Access Control (RBAC): This enforces the principle of least privilege with granular precision. For example, a researcher on a genomics project might be granted read-only access to chromosome 1 data and anonymized clinical outcomes, but be blocked from seeing patient demographics. RBAC ensures users only see and do what is strictly necessary for their approved project.

- Multi-Factor Authentication (MFA): A non-negotiable baseline for secure access. MFA requires a second form of verification (like a code from a mobile app) to prove the user’s identity and prevent unauthorized access from compromised credentials.

- End-to-End Encryption: Data is protected at every stage. This includes encryption at rest (using standards like AES-256 for stored data), encryption in transit (using protocols like TLS to secure data moving over a network), and, increasingly, encryption in use. This emerging field, known as confidential computing, uses secure enclaves to isolate data and code even while it is being processed in memory, protecting it from a compromised operating system or cloud administrator.

- Continuous Auditing and Monitoring: Every action is logged and fed into a Security Information and Event Management (SIEM) system, creating an immutable audit trail. Automated alerts are configured to detect anomalous behavior in real-time, such as a user attempting to access data outside their project’s scope, allowing security teams to respond to potential threats instantly.

- Pre-planned Incident Response: A robust TRE has a tested and rehearsed incident response plan. This ensures clear procedures to contain threats, preserve data integrity, and maintain operational continuity in the event of a security incident.
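
The idea of an audit trail that cannot be quietly altered can be illustrated with a hash chain, where each log entry includes the hash of the one before it. This is a teaching sketch of one tamper-evidence technique, not the API of any SIEM product; `append_event`, `verify_chain`, and the sample events are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a log entry body in a stable, key-sorted JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_event(log: list, user: str, action: str) -> None:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"user": user, "action": action, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or removed entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {"user": entry["user"], "action": entry["action"], "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_event(audit_log, "alice", "login")
append_event(audit_log, "alice", "open file: cohort.csv")
append_event(audit_log, "alice", "run query")
print(verify_chain(audit_log))

audit_log[1]["action"] = "open file: something-else.csv"  # simulated tampering
print(verify_chain(audit_log))
```

A chain like this does not stop someone from editing the log, but it makes the edit detectable, which is the property auditors and regulators care about.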

The “Airlock” System: Stopping Data Leaks Before They Happen

The airlock is the critical final checkpoint that embodies the “Safe Outputs” principle. When a researcher requests to export findings, the results are held in a secure area for a two-stage review:

- Automated Scanning: Software first scans the output for obvious disclosure risks, such as small cell counts in a table (e.g., a cell representing fewer than 10 individuals) or direct identifiers.

- Manual Review: Because none of the TRE operators interviewed believe automation alone is sufficient, a trained data officer then performs a final manual review. They apply Statistical Disclosure Control (SDC) principles to spot subtle risks. For instance, if a researcher tries to export a graph showing a single individual as an outlier, the request would be denied. The reviewer would advise the researcher to aggregate the data to obscure the individual’s identity. Throughout this process, one rule is absolute: no raw or potentially identifiable data leaves the environment. Ever. This is how the TRE model becomes a concrete, trustworthy reality.
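
The two-stage review can be modeled as a small state machine in which nothing is released without both an automated pass and an explicit human decision. The sketch below is a simplified illustration; the `Status` values, the `OutputRequest` class, and both functions are hypothetical rather than taken from any real airlock implementation.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    REJECTED_AUTOMATED = "rejected by automated scan"
    AWAITING_HUMAN = "awaiting manual review"
    REJECTED_HUMAN = "rejected by reviewer"
    RELEASED = "released"


@dataclass
class OutputRequest:
    cell_counts: dict              # table cell -> number of individuals
    contains_identifiers: bool     # flag set by an upstream identifier scan
    status: Status = Status.PENDING


def automated_scan(request: OutputRequest, threshold: int = 10) -> Status:
    """Stage 1: block obvious risks; otherwise queue the request for a human."""
    small_cells = any(count < threshold for count in request.cell_counts.values())
    if request.contains_identifiers or small_cells:
        request.status = Status.REJECTED_AUTOMATED
    else:
        request.status = Status.AWAITING_HUMAN
    return request.status


def human_review(request: OutputRequest, approved: bool) -> Status:
    """Stage 2: only a reviewer's decision can release an output."""
    if request.status is not Status.AWAITING_HUMAN:
        raise ValueError("manual review only follows a passed automated scan")
    request.status = Status.RELEASED if approved else Status.REJECTED_HUMAN
    return request.status


request = OutputRequest(cell_counts={"group_a": 42, "group_c": 17}, contains_identifiers=False)
print(automated_scan(request))
print(human_review(request, approved=True))
```

Note that there is no code path from `PENDING` straight to `RELEASED`: passing the automated scan only earns a place in the reviewer’s queue.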

From 6 Months to Weeks: How TREs Slash Research Timelines and Risk

TREs are not a theoretical concept; they are actively changing how pharmaceutical companies, biotechs, and public health agencies work. Research timelines that once stretched over six months are collapsing to weeks as data access negotiations are replaced by immediate, secure collaboration.

Key Benefits for Researchers and Organizations

- Faster Insights: Researchers gain insights in weeks, not months, by working directly where approved data lives, eliminating download and transfer delays.

- Improved Reproducibility: Every analytical step is logged in an audit trail, making it easy to verify findings for publications or regulatory review.

- Seamless Global Collaboration: Teams in the UK, USA, Israel, and Singapore can work on the same dataset in a single secure environment, breaking down geographic silos.

- Lower Costs and Complexity: Organizations consolidate security, compliance, and access controls into a single governed TRE, reducing operational overhead.

- Built-in Compliance: With frameworks designed to meet GDPR, HIPAA, and other regulations, compliance becomes part of the workflow, not a hurdle.

Use Cases: TREs Powering Medical Breakthroughs

The proof is in the projects TREs enable:

- Genomics England: Provides secure access to over 135,000 whole genomes, enabling diagnoses for rare diseases and new insights into cancer genetics.

- Rare Disease Research: Initiatives like the FDA-funded RDCA-DAP and BeginNGS use TREs to securely pool fragmented patient data, accelerating the search for cures.

- Pandemic Response: The UK’s Secure Research Service supported a study of 46 million adults in the NHS Digital TRE, enabling rapid assessment of vaccine effectiveness and long COVID patterns.

- Clinical Trial Data Sharing: The Yale University Open Data Access (YODA) Project uses a TRE to give global researchers secure, no-cost access to data from hundreds of pharmaceutical trials.

These large-scale, real-world implementations are powering life-saving research that would be impossible without the secure foundation TREs provide.

Beyond the Bottleneck: How Federated, AI-Ready TREs Solve Today’s Data Challenges

While TREs represent a massive leap forward, first-generation TREs have revealed new challenges that are shaping the solutions of tomorrow.

Current Challenges and Limitations

Despite their power, current TREs face real bottlenecks:

- Access Delays: Rigorous approval processes, while necessary, can still stretch to six months or more.

- Scale Challenges: Nearly half of TREs (45%) struggle to scale computing and storage for petabyte-scale datasets like the UK Biobank (20+ PB).

- Interoperability Issues: The proliferation of different TREs has created new, secure silos, hindering cross-institutional research.

- AI/ML Workflow Support: Security features can complicate AI development. Only 23% of TREs permit exporting trained AI models, a major bottleneck for iterating on new findings.

The Future: Federated, AI-Ready, and Scalable

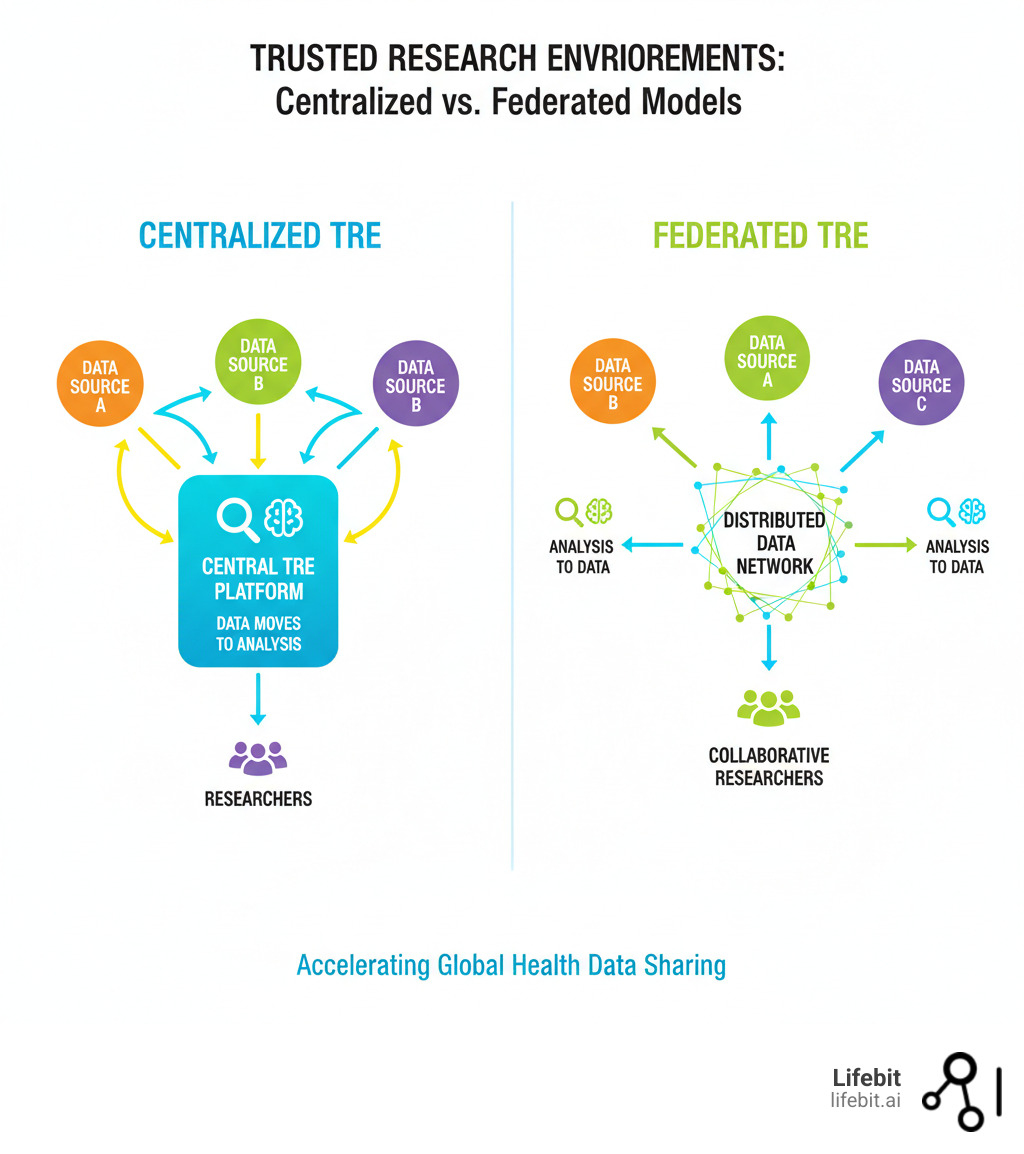

The next generation of TREs is evolving from centralized models to federated ecosystems. In a federated model, the analysis moves to the data, not the other way around. This eliminates risky data transfers and respects data sovereignty, as data custodians retain full control.

This shift is supported by policy initiatives like the European Health Data Space (EHDS), which aims to create a network of interconnected TREs. Researchers could run analyses across multiple countries without any raw data crossing borders.

This new paradigm is powered by Privacy-Enhancing Technologies (PETs) like federated learning, where AI models are trained on distributed data without it ever being pooled. Only the model updates are shared, which helps build GDPR compliance into the architecture rather than bolting it on afterward.
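
To make the idea concrete, here is a deliberately tiny, single-round sketch of federated averaging in plain Python. Each site fits a one-parameter model on its own data and shares only the fitted parameter and its sample count. The site data, `local_fit`, and `federated_average` are hypothetical, and real federated learning runs many rounds and typically adds protections such as secure aggregation.

```python
def local_fit(xs: list, ys: list) -> tuple:
    """Runs inside one site: least-squares slope for y = w * x, plus sample count."""
    w = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return [w], len(xs)


def federated_average(updates: list) -> list:
    """Runs at the coordinator: average parameters, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(params[i] * n for params, n in updates) / total for i in range(dim)]


# Hypothetical data held at three sites; it never leaves these variables' "sites".
site_data = {
    "london": ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]),
    "boston": ([1.0, 4.0], [2.0, 8.0]),
    "singapore": ([2.0, 5.0, 6.0, 7.0], [4.0, 10.0, 12.0, 14.0]),
}

# Only (parameters, sample_count) pairs cross site boundaries.
updates = [local_fit(xs, ys) for xs, ys in site_data.values()]
global_model = federated_average(updates)
print(global_model)
```

Weighting by sample count means a site with more patients has proportionally more influence on the shared model, without that site revealing any individual record.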

Preparing for AI and New Data Types

The pharmaceutical industry’s “once-in-a-century opportunity” with AI, as reported by McKinsey in The Times, requires infrastructure that can keep pace. Next-gen TREs must support the full AI lifecycle—training, validation, and deployment—with integrated GPU acceleration and streamlined, secure model export pathways. They must also be able to unify multi-modal data (genomics, imaging, EHRs) into coherent, analyzable datasets. The future is about building infrastructure intelligent enough to deliver both security and speed.

The Social License: Why Your TRE Fails Without Public Trust and Transparent Governance

Here’s a hard truth we’ve learned building secure health data platforms: the most sophisticated technology is useless if people don’t trust you with their data. That trust sits at the core of every successful TRE.

Technology is the backbone, but trust is earned through transparent governance and public engagement. Without this “social license to operate,” a TRE will fail to get the data needed for breakthrough research.

The Role of Governance and Oversight

Governance provides the human guardrails around the technology. Key components include:

- Independent Oversight Committees: Multidisciplinary teams, including patient representatives, who scrutinize every data access request to ensure it serves the public good and justifies any privacy risk.

- Radical Transparency: Successful TREs publish clear policies on how decisions are made, what security is in place, and who is using the data for what. This openness builds confidence.

- Legal Compliance: A foundation of adherence to complex regulations like GDPR and HIPAA is essential, as highlighted in Prof Ben Goldacre’s independent review for the UK government.

Building Public Trust Through Engagement

Patient and Public Involvement and Engagement (PPIE) means making decisions with patients, not about them. The most effective TREs embed public contributors directly into their governance committees. As shown in examples of public contributors shaping TRE services, these members ask crucial questions that researchers might miss, making the entire system stronger and more trustworthy.

Openness builds trust in ways security certifications alone never can. At Lifebit, we know governance and technology must work hand-in-hand. Our federated AI platform enables the transparent, accountable collaboration that makes secure data sharing possible at scale, because the future of health research depends on earning the public’s trust.

Conclusion

The path to faster medical breakthroughs doesn’t have to sacrifice patient privacy. TREs bridge the gap between sensitive data and the researchers who can unlock its life-saving insights.

We’ve moved from risky data transfers and six-month delays to secure environments where research happens in weeks. The Five Safes framework and technologies like the “airlock” system provide a robust foundation for this trust.

Now, the field is evolving from centralized models toward federated architectures where analysis travels to the data. This next generation of AI-ready, interconnected TREs—powered by Privacy-Enhancing Technologies and supported by initiatives like the European Health Data Space—is essential for realizing the pharmaceutical industry’s “once-in-a-century opportunity” with AI.

Technology alone is not enough. Governance, transparency, and public engagement are critical for earning the trust that makes this all possible.

The future of research depends on agile, scalable, and interconnected TREs. At Lifebit, our federated AI platform powers this next-generation secure research. Our Trusted Research Environment, combined with our Trusted Data Lakehouse and R.E.A.L. layer, enables global health data sharing that is both secure and impactful—delivering real-time insights while safeguarding patient privacy.

See how Lifebit enables secure, global health data sharing with our federated Trusted Research Environments.