Database Lake: Unleash Data Potential in 2025

Understanding the Database Lake

What is a database lake?

It’s a modern data architecture that brings the best of two worlds together:

- The vast, low-cost storage of a data lake for all data types (structured, semi-structured, unstructured, binary).

- The reliable, governed features of a traditional database, including ACID transactions, schema enforcement, and data quality.

This hybrid approach helps organizations manage and analyze massive, diverse datasets efficiently.

If you’ve heard terms like “data lake,” “data warehouse,” and “data lakehouse,” you might be wondering where a database lake fits in. It’s the evolutionary answer to handling today’s explosion of data, combining flexibility with crucial reliability. This powerful, unified platform overcomes the limitations of older systems.

I’m Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit. My 15+ years in computational biology and AI, especially with large genomic datasets, have shown me the transformative power of a well-managed database lake. My work has consistently focused on building secure, compliant platforms for federated data analysis, enabling advanced insights in challenging fields like biomedicine.

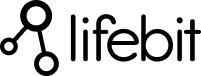

Data Architectures Explained: From Warehouses to Lakes

Before we dive deeper into the nuances of a database lake, it’s helpful to understand the data architectures that paved its way. Think of it as the family tree of data storage: each generation building upon the last, learning from its ancestors’ strengths and weaknesses.

Data Warehouse: The Structured Repository

The data warehouse was the original standard for enterprise data analysis, pioneered by figures like Bill Inmon and Ralph Kimball. It serves as a central repository for clean, curated, and structured data drawn from various operational systems (like CRM or ERP). Optimized for business intelligence (BI) and reporting, its primary goal is to provide a “single source of truth.” Data warehouses employ a schema-on-write approach, where data is carefully structured and validated before being loaded. This is done through robust ETL (Extract, Transform, Load) pipelines that cleanse, conform, and integrate data, ensuring high data quality and fast performance for SQL queries. However, this architecture has significant limitations in the modern data era. Its rigid schema makes it slow and costly to adapt to new data sources or changing business requirements. Furthermore, traditional data warehouses tightly couple storage and compute resources, meaning you must scale both together. This leads to high costs, as you pay for peak compute capacity even when it’s idle, and scaling storage for massive datasets becomes prohibitively expensive.

Data Lake: The Raw Data Ocean

The data lake emerged as a direct response to the limitations of the data warehouse, designed to handle the sheer volume, velocity, and variety of big data. The term was coined by James Dixon. A data lake is a vast, centralized repository that stores all data types in their raw, native format: unstructured (images, videos), semi-structured (JSON, XML), and structured. Built on low-cost object storage, it uses a schema-on-read approach, meaning structure is applied only when the data is queried, not before it’s stored. This paradigm shift offers immense flexibility and low-cost scalability, making it the preferred environment for data scientists and AI/ML engineers. They can use more modern ELT (Extract, Load, Transform) processes, loading raw data first and transforming it as needed for exploratory analysis. However, this unregulated freedom is its greatest weakness. Without robust governance, metadata management, and quality controls, a data lake can quickly degenerate into a “data swamp”: a chaotic, undocumented repository where data is duplicated, its origins are unknown, and its quality is questionable, rendering it useless for reliable analysis and decision-making. Furthermore, querying raw files directly can be slow and inefficient without the optimization layers found in databases.
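
To make the contrast with the warehouse concrete, here is a minimal Python sketch of schema-on-write versus schema-on-read. The `SCHEMA` mapping, the function names, and the sample records are hypothetical and chosen only for illustration; real warehouses and lakes apply structure in far more sophisticated ways.

```python
import json

# Hypothetical schema for illustration: column name -> required Python type.
SCHEMA = {"id": str, "amount": int}


def write_validated(table: list, record: dict) -> None:
    """Schema-on-write: reject a record before storing it if it does not match."""
    if set(record) != set(SCHEMA):
        raise ValueError(f"unexpected columns: {sorted(record)}")
    for column, expected in SCHEMA.items():
        if not isinstance(record[column], expected):
            raise ValueError(f"{column} must be {expected.__name__}")
    table.append(record)


def read_with_schema(raw_lines: list) -> list:
    """Schema-on-read: store anything, apply structure only at query time."""
    rows = []
    for line in raw_lines:
        doc = json.loads(line)
        try:
            rows.append({"id": str(doc["id"]), "amount": int(doc["amount"])})
        except (KeyError, TypeError, ValueError):
            continue  # malformed records are only discovered when queried
    return rows


# Warehouse-style: the record is checked at load time.
warehouse: list = []
write_validated(warehouse, {"id": "a1", "amount": 42})

# Lake-style: raw lines are stored as-is and interpreted at read time.
lake = ['{"id": "a1", "amount": "42"}', '{"id": "a2"}', '{"note": "free text"}']
print(read_with_schema(lake))
```

Note how the lake happily stores the second and third records, and the reader has to decide what to do with them. That silent decision is exactly where a data swamp starts.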

For a deeper dive into the distinctions, explore the Top Five Differences between Data Warehouses and Data Lakes.

The Rise of the Data Lakehouse

The data lakehouse emerged as a hybrid model designed to resolve the fundamental trade-off between data warehouses and data lakes. It aims to deliver the best of both worlds: the low-cost, scalable, and flexible storage of a data lake combined with the reliability, performance, and governance features of a data warehouse. This is achieved by implementing a transactional metadata layer directly on top of open-format files in the data lake’s object storage. This layer introduces critical database-like capabilities that were previously missing from the lake.

Key features include:

- ACID Transactions: Bringing the reliability of traditional databases (Atomicity, Consistency, Isolation, Durability) to the data lake, ensuring data integrity for critical operations.

- Data Governance and Quality: A transactional layer and schema enforcement prevent the “data swamp” problem, enabling better data management, lineage tracking, and access control.

- Unified Workloads: It supports everything from BI and reporting to ML and AI on a single platform, eliminating data silos and complex pipelines.

By bringing structure and reliability to the lake, the data lakehouse directly addresses the “data swamp” problem while preserving the flexibility that modern data workloads require. It creates a single, unified platform that can serve both traditional BI reporting and advanced AI/ML development, eliminating the need for complex, costly, and redundant data pipelines between a separate lake and warehouse. This evolution leads directly to what we at Lifebit call the database lake. We explore this concept further in our article, What is a Data Lakehouse.
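
One way to picture a transactional metadata layer is as an append-only commit log that sits next to the data files. The sketch below is an illustration under simplifying assumptions, not the protocol of any particular table format: the `_log` directory, the `commit` and `live_files` helpers, and the file names are hypothetical, and a local temporary directory stands in for object storage.

```python
import json
import os
import tempfile


def commit(table_dir: str, version: int, add: list, remove: list) -> bool:
    """Try to publish one commit; return False if another writer took this version."""
    log_dir = os.path.join(table_dir, "_log")
    os.makedirs(log_dir, exist_ok=True)
    path = os.path.join(log_dir, f"{version:08d}.json")
    try:
        # Mode "x" fails if the file exists, so each version has exactly one winner.
        with open(path, "x") as f:
            json.dump({"add": add, "remove": remove}, f)
    except FileExistsError:
        return False
    return True


def live_files(table_dir: str) -> set:
    """Replay the log in order to compute which data files make up the table now."""
    log_dir = os.path.join(table_dir, "_log")
    files: set = set()
    for name in sorted(os.listdir(log_dir)):
        with open(os.path.join(log_dir, name)) as f:
            entry = json.load(f)
        files |= set(entry["add"])
        files -= set(entry["remove"])
    return files


table = tempfile.mkdtemp()
commit(table, 0, add=["part-a.parquet", "part-b.parquet"], remove=[])
# A multi-file rewrite becomes visible all at once, as a single log entry.
commit(table, 1, add=["part-c.parquet"], remove=["part-a.parquet", "part-b.parquet"])
# A second writer targeting the same version loses the race and must retry.
conflict = commit(table, 1, add=["part-d.parquet"], remove=[])
print(sorted(live_files(table)), conflict)
```

Because readers only trust files named in the log, a half-finished write never becomes visible, and a writer that targets an already claimed version simply fails and can retry. A production implementation would also need to handle partially written log entries and storage systems that lack an exclusive-create operation.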

Here’s a quick comparison of these architectures:

| Feature | Data Warehouse | Data Lake | Data Lakehouse (Database Lake) |
|---|---|---|---|
| Data Types | Structured, some semi-structured | All types: structured, semi-structured, unstructured, binary | All types: structured, semi-structured, unstructured, binary |
| Schema | Schema-on-write (predefined) | Schema-on-read (flexible) | Schema-on-read with schema enforcement/evolution |
| Cost | Higher (expensive for large scale) | Lower (cost-effective, scalable) | Lower (cost-effective, scalable) |
| Primary Users | Business analysts, BI professionals | Data scientists, ML engineers, data engineers | All users: analysts, scientists, engineers |
| Reliability | High (ACID, governed) | Low (risk of “data swamp”) | High (ACID, governed) |
| Flexibility | Low (rigid for new data) | High (handles any data) | High (flexible, but with structure) |
| Use Cases | Operational reporting, dashboards | Exploratory analytics, ML model training | BI, ML, AI, real-time analytics, data science |

The Emergence of the Database Lake: A Modern Hybrid

Now that we’ve charted the evolution of data architectures, let’s zoom in on the star of our show: the database lake. While often used interchangeably with “data lakehouse,” we like to emphasize the “database” aspect to highlight the crucial reliability and transactional capabilities it brings to the vastness of a data lake. It’s not just a lake; it’s a lake you can truly depend on, like a reliable database.

What is a Database Lake?

A database lake is the evolution of data architecture, combining the massive, low-cost storage of a data lake with the reliability of a traditional database. It solves the challenge of managing diverse, raw data while ensuring it remains trustworthy and useful. This is achieved by adding an intelligent management layer on top of raw object storage.

This architecture brings core database functionality directly to your data lake. Key features include:

- ACID Transactions: Guarantees of Atomicity, Consistency, Isolation, and Durability ensure data integrity, which was a critical missing piece in early data lakes.

- Schema Enforcement and Evolution: It provides the flexibility to adapt data structures as requirements change without costly migrations, while still enforcing rules when quality is paramount.

- Data Quality and Governance: Robust tools for metadata management, data lineage, and access control prevent “data swamps” and ensure compliance.

- Decoupled Storage and Compute: Storage and compute resources can be scaled independently, creating a highly efficient and cost-effective model for modern data needs.
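
Schema enforcement and evolution from the list above can be sketched in a few lines of Python. This is a toy in-memory model under our own assumptions (the `Table` class, `SchemaError`, and the column names are hypothetical), meant only to show the behavior: writes that violate the schema are rejected, while adding a column does not require rewriting rows that are already stored.

```python
class SchemaError(ValueError):
    """Raised when a write or schema change violates the table's schema."""


class Table:
    """Toy table that enforces a schema on write and allows additive evolution."""

    def __init__(self, schema: dict):
        self.schema = dict(schema)  # column name -> Python type
        self.rows: list = []

    def append(self, row: dict) -> None:
        unknown = set(row) - set(self.schema)
        if unknown:
            raise SchemaError(f"unknown columns: {sorted(unknown)}")
        for column, value in row.items():
            if value is not None and not isinstance(value, self.schema[column]):
                raise SchemaError(f"{column} expects {self.schema[column].__name__}")
        self.rows.append(row)

    def add_column(self, name: str, type_: type) -> None:
        """Evolve the schema without touching rows that are already stored."""
        if name in self.schema:
            raise SchemaError(f"column {name} already exists")
        self.schema[name] = type_

    def read(self) -> list:
        # Older rows lack newer columns; fill them with None at read time.
        return [{c: row.get(c) for c in self.schema} for row in self.rows]


t = Table({"sample_id": str, "reads": int})
t.append({"sample_id": "s1", "reads": 100})
t.add_column("tissue", str)  # no rewrite of the existing row
t.append({"sample_id": "s2", "reads": 250, "tissue": "liver"})
print(t.read())
```

The design choice worth noticing is that evolution happens in metadata only: old rows stay untouched and simply read back with a null in the new column.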

This approach represents what experts have called a new big data architecture, one that’s built for today’s data realities.

Key Characteristics of a Database Lake

A database lake combines several powerful capabilities into one cohesive system:

- ACID transactions: This foundational feature ensures every data operation is reliable. Atomicity guarantees that transactions are all-or-nothing; a multi-step update either fully completes or fails entirely, preventing partial updates. Consistency ensures that any transaction brings the database from one valid state to another. Isolation ensures that concurrent transactions do not interfere with each other, as if they were run sequentially. Durability guarantees that once a transaction is committed, it will remain so, even in the event of a system failure. For a database lake, this means you can reliably mix streaming data appends with large-scale batch updates without corrupting data.

- Time travel (Data Versioning): This practical feature allows you to access any previous version of your data by querying a specific timestamp or version number. It’s invaluable for auditing data changes, fixing accidental bad writes or deletes by reverting a table to a previous state, and ensuring reproducible machine learning experiments by pinning a model to an exact version of the dataset it was trained on.

- Open formats: A database lake is built on open-source file formats like Apache Parquet and ORC. This is crucial for preventing vendor lock-in. Your data remains in a standard format on your own cloud storage, accessible to a wide and growing ecosystem of query engines and tools (like Spark, Presto, and Trino) without needing proprietary drivers or complex export processes.

- Unified batch and streaming: It seamlessly handles both historical batch data and real-time data streams. This eliminates the need for complex, hard-to-maintain Lambda architectures that required separate pipelines for batch and stream processing. With a database lake, streaming data is simply treated as a series of incremental transactions, making it immediately available for querying alongside historical data.

- Schema evolution: You can flexibly evolve your data schema over time without rewriting existing datasets or causing downtime for data pipelines. For example, you can easily add new columns, change data types, or rename fields. The system handles the changes gracefully, ensuring that older data remains queryable alongside new data conforming to the updated schema.

- Performance Optimizations: Unlike a standard data lake, a database lake incorporates performance-enhancing features directly into its metadata layer. Techniques like data skipping (using file-level statistics to avoid reading irrelevant data), Z-ordering (a multi-dimensional clustering technique), and data compaction (rewriting small files into larger, optimized ones) can dramatically accelerate query performance, making it competitive with traditional data warehouses for BI workloads.

- Support for all data types: A database lake handles structured, semi-structured, unstructured, and binary data, which is essential for fields like biomedical research that rely on connecting diverse sources.
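
Two of the characteristics above, time travel and data skipping, are easier to grasp with a small model. The Python sketch below assumes a simplified design of our own: every commit keeps a full snapshot of the file list, and each file carries min/max statistics for a single filter column. The `DataFile` and `VersionedTable` names, the file names, and the numeric ranges are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    name: str
    min_value: int  # smallest value of the filter column in this file
    max_value: int  # largest value of the filter column in this file


class VersionedTable:
    """Each commit stores a full snapshot (a tuple of files); old ones are kept."""

    def __init__(self):
        self.snapshots: list = [()]  # version 0 is the empty table

    def commit(self, add=(), remove=()) -> int:
        current = self.snapshots[-1]
        removed = set(remove)
        new = tuple(f for f in current if f.name not in removed) + tuple(add)
        self.snapshots.append(new)
        return len(self.snapshots) - 1  # the new version number

    def files_to_scan(self, low: int, high: int, version: int = -1) -> list:
        """Time travel via `version`; data skipping via min/max statistics."""
        snapshot = self.snapshots[version]
        # A file can be skipped when its value range cannot overlap [low, high].
        return [f.name for f in snapshot if f.max_value >= low and f.min_value <= high]


t = VersionedTable()
v1 = t.commit(add=[DataFile("jan.parquet", 1, 31), DataFile("feb.parquet", 32, 59)])
# An accidental bad write replaces both files in version 2.
v2 = t.commit(add=[DataFile("bad.parquet", 1, 59)], remove=["jan.parquet", "feb.parquet"])

print(t.files_to_scan(40, 45))              # latest version
print(t.files_to_scan(40, 45, version=v1))  # as of v1; jan.parquet is skipped
```

Querying as of `v1` still sees the original files, which is what makes audits, rollbacks, and reproducible training runs possible. The range check avoids opening files that cannot contain matching rows.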

Avoiding the “Data Swamp”: The Governance Advantage

Early data lakes often became unusable “data swamps.” The database lake prevents this with robust governance features that bring maturity to data management, as highlighted in research on The growing importance of big data quality.

- Enforceable Data Quality: ACID transactions and schema enforcement provide the foundation for high-quality data. You can define and enforce data quality rules (e.g., a column cannot contain nulls, a value must be within a certain range) as constraints on your tables. Data that fails these checks can be quarantined, logged, or rejected, preventing bad data from polluting the lake and downstream analytics.

- Metadata Management and Data Catalog: A comprehensive data catalog is central to a database lake. It automatically captures technical metadata (schemas, data types, partitions) and allows users to add rich business context (descriptions, ownership, tags). This transforms raw files into well-documented, findable assets. Integrated data lineage tracks how data is created and transformed, providing transparency and building trust among users.

- Fine-Grained Access Controls: You can implement precise security policies to ensure sensitive information is protected while democratizing access to non-sensitive data. Access can be controlled at the table, row, and even column level. This is critical for compliance with regulations like GDPR and HIPAA, allowing organizations to confidently share data with authorized users while masking or redacting sensitive columns like personally identifiable information (PII).
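
As a rough illustration of the first and third points, the Python sketch below quarantines rows that fail quality rules and returns a column-masked view of the rows that pass. Everything here is a simplifying assumption for illustration: the `RULES` mapping, the `ingest` and `masked_view` helpers, the age range, and the `"***"` redaction marker are hypothetical, not features of any specific product.

```python
# Hypothetical rules for illustration: column name -> predicate that must hold.
RULES = {
    "patient_id": lambda v: v is not None,
    "age": lambda v: v is not None and 0 <= v <= 120,
}


def ingest(rows: list) -> tuple:
    """Split incoming rows into accepted rows and quarantined (row, failures) pairs."""
    accepted, quarantined = [], []
    for row in rows:
        failed = [col for col, ok in RULES.items() if not ok(row.get(col))]
        if failed:
            quarantined.append((row, failed))  # kept aside with the reason
        else:
            accepted.append(row)
    return accepted, quarantined


def masked_view(rows: list, allowed_columns: set) -> list:
    """Column-level access control: redact every column the caller may not see."""
    return [
        {col: (val if col in allowed_columns else "***") for col, val in row.items()}
        for row in rows
    ]


good, bad = ingest([
    {"patient_id": "p1", "age": 54},
    {"patient_id": None, "age": 30},   # fails the not-null rule
    {"patient_id": "p3", "age": 400},  # fails the range rule
])
print(masked_view(good, allowed_columns={"age"}))
print(bad)
```

Keeping the failure reasons next to each quarantined row makes it possible to fix and replay bad data later instead of silently dropping it.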

By following best practices on How to avoid a data swamp, organizations can ensure their database lake remains a valuable, productive asset.

Applications and Use Cases in the Real World

The true power of a database lake really shines when you see it in action. By bringing together all your data, no matter its type or size, into one reliable and highly scalable platform, it unlocks incredible possibilities for advanced analytics and transforms how organizations operate across various industries.

How a Database Lake Powers Advanced Analytics

A database lake is the ideal launchpad for modern analytics, machine learning, and AI. It creates the perfect environment for:

- Machine Learning Models: Data scientists can train more accurate models by using the massive, diverse datasets available in the lake, from unstructured images to structured tables.

- Predictive Analytics: By combining historical data with real-time information (e.g., from IoT devices), organizations can build sophisticated models to predict future outcomes, from customer demand to equipment maintenance.

- AI Development: The ability to store and process vast volumes of varied data provides the essential fuel for building complex AI applications.

- Data Science Exploration: The “schema-on-read” flexibility allows data scientists to freely explore raw data, fostering creative experimentation and new discoveries.

Bringing these data sources together improves customer interactions, drives R&D innovation, and increases operational efficiencies. For more on how this data analysis works across distributed sources, check out our insights on Federated Data Analysis.

Industry Spotlight: Finance, Retail, and Manufacturing

The database lake architecture is not limited to one sector; its benefits are changing operations across the board.

- Financial Services: In finance, it powers real-time fraud detection by analyzing transaction streams against historical customer behavior. It also enables complex risk modeling and algorithmic trading by providing a unified platform for both historical market data and live data feeds.

- Retail and E-commerce: Retailers use database lakes to build comprehensive “Customer 360” profiles. By unifying online clickstream data, in-store purchase history, social media sentiment, and loyalty program data, they can deliver hyper-personalized marketing campaigns and product recommendations.

- Manufacturing and IoT: On the factory floor, a database lake can ingest and analyze real-time sensor data from machinery (IoT). This enables predictive maintenance, instantly spotting anomalies that signal potential equipment failure, reducing downtime, minimizing waste, and improving product quality.
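
To give a flavor of the anomaly spotting mentioned in the manufacturing example, here is a minimal Python sketch that flags sensor readings far from the mean of the preceding window. The rolling z-score is our own choice of technique for illustration, and the window size, threshold, and vibration values are hypothetical; real predictive maintenance models are typically much richer.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window: int = 5, threshold: float = 3.0) -> list:
    """Return indexes of readings far from the mean of the preceding window."""
    recent = deque(maxlen=window)  # holds only the last `window` readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Skip flat windows (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 5.0, 1.0]
print(detect_anomalies(vibration))
```

In a database lake, the same logic could run over a stream of newly committed sensor readings while the historical data stays available for retraining.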

Use Cases in Life Sciences and Research

At Lifebit, the database lake is the heart of our secure, real-time platform. For sensitive, vast, and diverse biomedical and multi-omic data, this architecture is essential.

- Genomic Data Analysis: A database lake can store and manage massive genomic datasets, including raw sequencing files (BAM, CRAM), variant calls (VCF), and associated clinical phenotypes. This allows researchers to perform large-cohort studies that were previously computationally prohibitive, querying across thousands of samples to link genetic variants to disease and accelerate biomarker discovery.

- Real-World Evidence (RWE): It simplifies the ingestion, harmonization, and analysis of diverse RWE from sources like electronic health records (EHRs), patient registries, insurance claims, and medical images. The flexible schema can handle both the structured data (lab results) and unstructured data (clinician’s notes), enabling a holistic view of patient journeys to inform drug development and health outcome studies.

- Pharmacovigilance: By ingesting real-time data from clinical trials, social media, and published literature, a database lake powers AI-driven safety surveillance. This allows pharmaceutical companies to move beyond reactive reporting and proactively detect adverse event signals faster and more accurately.

- Clinical Trial Optimization: Unifying data from trial management systems, wearable devices, and genomic assays helps optimize every stage of a clinical trial. It can accelerate patient recruitment by identifying eligible cohorts from RWE, monitor patient adherence through wearable data, and enable adaptive trial designs that respond to incoming data in near real-time.

Our platform, with its Trusted Research Environment, harnesses the database lake to enable secure, compliant, and federated data analysis. This allows researchers to collaborate and gain insights without compromising data privacy. To learn more, explore the Application of Data Lakehouses in Life Sciences.

Frequently Asked Questions about Data Lakes and Lakehouses

It’s totally normal to have questions about these evolving data architectures! The world of data is always growing and changing, and with new terms like database lake popping up, it can sometimes feel a bit like trying to navigate a new city without a map. Let’s clear up some common points and get you oriented.

What is the main difference between a data lake and a data lakehouse?

A traditional data lake is a flexible, low-cost repository for raw data of any format. However, its lack of built-in governance and transactional capabilities creates a risk of it becoming a disorganized “data swamp” with poor data quality. Its “schema-on-read” approach can also lead to inconsistencies.

A data lakehouse (or database lake) improves the data lake by adding a management layer that provides the reliability, governance, and performance of a database. It introduces crucial features like ACID transactions and schema enforcement directly on top of low-cost storage. This prevents the “data swamp” problem, combining the flexibility of a lake with the trustworthiness of a warehouse.

What technologies are used to build a database lake?

Building a database lake involves several key technologies working together:

- Cloud Object Storage: Services like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage provide scalable, durable, and cost-effective storage for vast amounts of data.

- Open File Formats: Formats like Apache Parquet and Apache ORC efficiently store and compress data for faster analytics, while preventing vendor lock-in.

- Transactional Metadata Layer: This is the core component that adds database-like capabilities. Technologies like Delta Lake, Apache Hudi, or Apache Iceberg enable ACID transactions, schema evolution, and data versioning (time travel) on top of object storage.

- Processing and Query Engines: Engines like Apache Spark are used for large-scale data processing. Query engines like Presto, Starburst, or Amazon Athena allow users to run standard SQL queries directly on the data in the lake.

Together, these technologies create a unified, high-performance, and reliable data platform.

Can a database lake handle real-time data?

Yes, and this is a key advantage. The database lake architecture excels at unifying historical batch data with real-time streaming data in a single system. This eliminates the need for separate, complex pipelines for streaming analytics.

The transactional layer (e.g., Delta Lake) supports continuous data ingestion and updates, enabling real-time analytics and ML applications directly on the lake. For Lifebit, this is essential for use cases like real-time pharmacovigilance monitoring, where immediate insights can save lives. It’s the backbone of our platform’s ability to deliver secure, real-time insights from sensitive biomedical data.

Conclusion: Unlocking Your Data’s Full Potential

The evolution from data warehouses and data lakes to the database lake marks a significant step forward in data management. This architecture is not just a buzzword; it’s a practical solution that combines the scale and low cost of a data lake with the reliability and governance of a database.

By unifying workloads and democratizing data, the database lake empowers an entire organization. Business analysts get trustworthy data for reporting, while data scientists gain frictionless access to raw data for exploration and model building. This provides the crucial AI-readiness foundation needed for modern machine learning initiatives, removing the barriers of poor data quality and siloed systems.

At Lifebit, we’ve witnessed how this architecture transforms biomedical research. For sensitive genomic, clinical, and real-world data, a platform must be both secure and flexible. Our approach lets researchers unlock the full value of their data by combining the scale of a data lake with the governance of a database, all while ensuring the highest standards of security and compliance.

The future of data architecture is here. Whether you’re analyzing multi-omic datasets or building the next breakthrough in personalized medicine, the database lake provides the solid foundation to turn data into discoveries.

Ready to see what this could mean for your organization? Discover our Trusted Data Lakehouse and let’s explore how we can help you navigate the exciting possibilities of modern data management.