Why Federated Data Analysis is Revolutionizing Healthcare Research

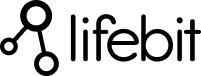

Federated data analysis is a privacy-preserving approach that enables organizations to analyze distributed datasets without moving sensitive data from its original location. Instead of centralizing data, computational code travels to where the data resides, processes it locally, and returns only aggregated results.

Quick Answer for “Federated Data Analysis”:

- What it is: A method where analysis code goes to data locations rather than moving data to a central server

- How it works: Code runs locally at each data site, only sharing computed results and statistics

- Key benefit: Enables large-scale analysis while keeping sensitive data within organizational boundaries

- Best for: Healthcare, genomics, and other domains with strict privacy requirements

- Main difference: Unlike traditional analytics, raw data never leaves its original secure environment

The need for this approach has never been more urgent. Research shows that 97% of hospital data goes unused, largely due to privacy concerns and regulatory barriers. Meanwhile, patients of non-European ancestries face significantly higher rates of “variant of uncertain significance” results in genetic testing – a disparity that could be addressed through larger, more diverse datasets.

Traditional centralized data analysis creates a fundamental tension: researchers need large, diverse datasets to generate meaningful insights, but healthcare organizations face strict legal, ethical, and technical barriers to sharing sensitive patient information. Federated analysis resolves this by bringing computation to the data rather than data to computation.

During the COVID-19 pandemic, federated genomics projects successfully analyzed data from nearly 60,000 individuals across multiple institutions without compromising patient privacy. Similarly, federated approaches have enabled breakthrough studies in rare disease research and cancer genomics by connecting previously isolated datasets.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve pioneered genomics and biomedical data platforms that make federated data analysis accessible to healthcare organizations worldwide. Through my 15+ years in computational biology and health-tech, I’ve seen how federated approaches can unlock the full potential of healthcare data while maintaining the highest security and compliance standards.

What Is Federated Data Analysis and How Does It Work?

Federated data analysis is like having a team of researchers who can work together without ever meeting in the same room. Instead of gathering everyone’s data in one place, the analysis travels to where each dataset lives, does its work locally, and brings back only the insights you need.

This approach works with horizontally partitioned datasets – imagine five hospitals that all track the same patient information, but for different groups of people. Rather than asking each hospital to ship their sensitive patient records to a central location, federated analysis sends the research questions to each hospital’s secure environment.

Here’s how it works: A research team writes their analysis code once, and a central orchestration system distributes this code to each participating hospital. The code runs behind each hospital’s existing firewalls, processes the local data, and returns only statistical summaries – never the raw patient information.

The beauty lies in result aggregation. Each hospital returns statistics like “30% improvement in patient outcomes” or “average treatment duration: 14 days.” The central system combines these statistics to create comprehensive insights without anyone seeing another organization’s sensitive data.
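
The flow described above (write the code once, run it at each site, combine only the summaries) can be sketched in a few lines. This is a toy, in-memory illustration rather than a real orchestrator: the `SITE_DATA` records, the site names, and the function names are all made up for the example.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for each hospital's private records.
# In a real federation these rows would never leave the site.
SITE_DATA: Dict[str, List[dict]] = {
    "hospital_a": [{"treatment_days": 12}, {"treatment_days": 16}],
    "hospital_b": [
        {"treatment_days": 10},
        {"treatment_days": 14},
        {"treatment_days": 18},
    ],
}


def local_summary(rows: List[dict]) -> dict:
    """Runs inside a site and returns only aggregate statistics."""
    days = [r["treatment_days"] for r in rows]
    return {"n": len(days), "sum_days": sum(days)}


def run_federated(analysis: Callable[[List[dict]], dict]) -> List[dict]:
    """Toy orchestrator: 'sends' the analysis to every site, collects summaries."""
    return [analysis(rows) for rows in SITE_DATA.values()]


def aggregate_mean(summaries: List[dict]) -> float:
    """Combines per-site counts and sums into one global mean."""
    total_n = sum(s["n"] for s in summaries)
    total_days = sum(s["sum_days"] for s in summaries)
    return total_days / total_n


summaries = run_federated(local_summary)
print(aggregate_mean(summaries))  # average treatment duration across both sites
```

The central side only ever sees `n` and `sum_days` for each site, never the individual rows.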

Technical Components of Federated Systems

The orchestration layer serves as the brain of federated systems, managing workflow distribution, monitoring execution status, and coordinating result collection. Modern orchestrators use container technologies like Docker and Kubernetes to ensure analysis code runs consistently across different computing environments, whether on-premises servers or cloud infrastructure.

Data connectors provide standardized interfaces to different data sources. A hospital might store patient records in Epic, research data in REDCap, and genomic data in specialized databases. Federated systems use adapter patterns to normalize these diverse sources without requiring organizations to change their existing infrastructure.

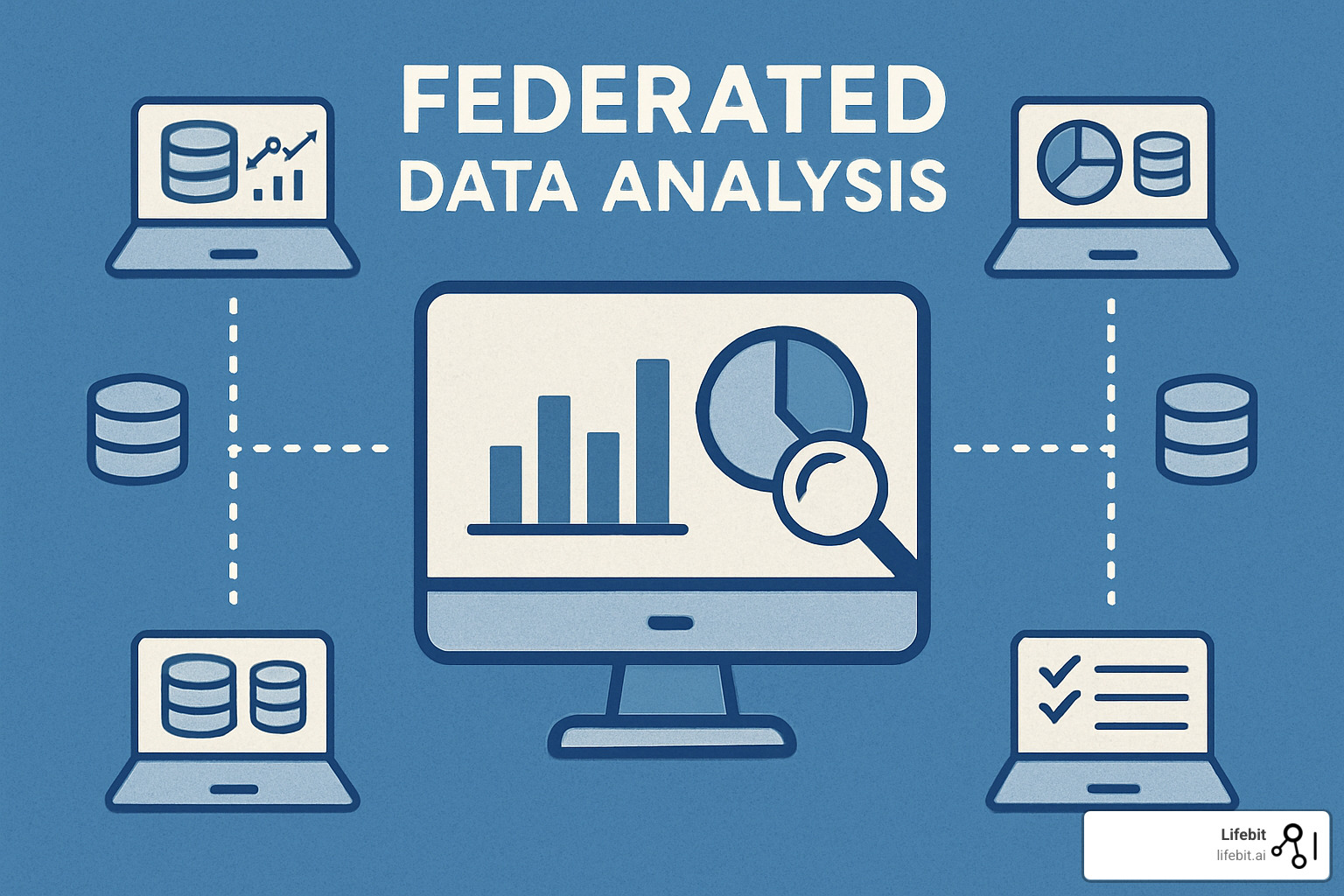

Security gateways enforce access controls and audit trails. Every analysis request passes through authentication layers that verify user permissions, log activities, and ensure compliance with organizational policies. These gateways can integrate with existing identity providers like Active Directory or SAML systems.

Result harmonization engines handle the complex task of combining outputs from different sites. When one hospital returns results in JSON format and another uses CSV, the harmonization layer converts everything to consistent formats before final aggregation.
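
As a rough illustration of that harmonization step, the sketch below parses one JSON payload and one CSV payload into the same record type before aggregation. The field names (`site`, `n`, `mean_age`) and the payloads are assumptions made for the example, not a format any particular platform prescribes.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import List


@dataclass
class SiteResult:
    site: str
    n: int
    mean_age: float


def _to_result(record: dict) -> SiteResult:
    # Coerce types explicitly: CSV values arrive as strings, JSON values do not.
    return SiteResult(
        site=str(record["site"]),
        n=int(record["n"]),
        mean_age=float(record["mean_age"]),
    )


def from_json(payload: str) -> List[SiteResult]:
    return [_to_result(r) for r in json.loads(payload)]


def from_csv(payload: str) -> List[SiteResult]:
    return [_to_result(r) for r in csv.DictReader(io.StringIO(payload))]


json_payload = '[{"site": "hospital_a", "n": 120, "mean_age": 54.2}]'
csv_payload = "site,n,mean_age\nhospital_b,80,61.5\n"

results = from_json(json_payload) + from_csv(csv_payload)
print(results)
```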

Federated Data Analysis vs. Centralised Analytics

The difference between federated and centralized approaches is like house visits versus dinner parties. In centralized analytics, everyone brings their data to one location. In federated data analysis, the analysis visits each dataset where it lives.

This local data residency approach solves several problems. Data movement costs can be astronomical with large genomic datasets or medical imaging files. A single whole-genome sequence generates 100-200 GB of data, and moving thousands of these files across networks becomes prohibitively expensive. More importantly, keeping data within its original security perimeter means organizations don’t have to expand their compliance boundaries.

Centralized approaches also create data staleness problems. By the time sensitive healthcare data gets copied, cleaned, and integrated into central repositories, it may be weeks or months old. Federated systems can access real-time data, enabling applications like pharmacovigilance that require immediate detection of adverse drug reactions.

Governance complexity multiplies in centralized systems. When data from multiple organizations sits in one location, determining access rights, audit responsibilities, and liability becomes a legal nightmare. Federated approaches maintain clear data ownership boundaries.

Federated Analysis, Federated Learning & Federated Analytics

Federated Learning focuses on training AI models across distributed datasets by sharing model updates like gradients rather than raw data. Google’s Gboard keyboard uses federated learning to improve autocorrect without sending your typing data to Google’s servers. In healthcare, federated learning trains diagnostic AI models on medical images from multiple hospitals without centralizing patient scans.
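
One commonly used way to combine model updates is federated averaging (FedAvg), where the coordinator averages each site's parameters weighted by how many samples that site trained on. The sketch below assumes that rule and uses plain lists as stand-ins for model parameters; real systems may aggregate gradients or use other rules.

```python
from typing import List, Tuple


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Sample-weighted average of parameter vectors.

    Each update is (parameters, number_of_local_samples).
    """
    total_samples = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [
        sum(params[i] * n for params, n in updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical sites: the second trained on three times as many samples,
# so its parameters pull the average further.
site_updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
global_params = federated_average(site_updates)
print(global_params)
```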

Federated Analytics handles basic statistical computations, sharing statistics only. Think of calculating average patient age across multiple hospitals – each site computes its local average and patient count, and the central system combines these into a count-weighted global average without seeing individual patient ages.
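
Combining site averages correctly needs each site's patient count as well as its mean, because a plain average of averages over-weights small sites. A minimal sketch with made-up numbers:

```python
from typing import List, Tuple


def global_mean(site_stats: List[Tuple[int, float]]) -> float:
    """Count-weighted mean from per-site (patient_count, mean_age) pairs."""
    total_n = sum(n for n, _ in site_stats)
    return sum(n * mean for n, mean in site_stats) / total_n


def naive_mean(site_stats: List[Tuple[int, float]]) -> float:
    """Unweighted average of site means, shown only for comparison."""
    return sum(mean for _, mean in site_stats) / len(site_stats)


# Hypothetical sites: 100 patients averaging 40 years, 300 averaging 60 years.
stats = [(100, 40.0), (300, 60.0)]
print(global_mean(stats), naive_mean(stats))
```

The two numbers differ whenever sites differ in size, which is the usual case in practice.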

Federated Data Analysis is the comprehensive umbrella covering both approaches while extending to complete research workflows. It includes complex statistical modeling, survival analysis, genome-wide association studies, and other sophisticated analyses that require multiple iterations and intermediate results.

The distinction matters for privacy analysis. Federated learning’s iterative model updates can potentially leak more information than single-shot statistical queries, requiring different privacy-preserving techniques.

Typical Workflow & Architecture

The orchestration server acts like a project manager, coordinating all components. When researchers submit requests, it validates privacy requirements, packages analysis code into containers, and distributes them to participating compute nodes. Results travel back through secure channels, and the result merger combines outputs into meaningful global insights.

Workflow engines like Nextflow or Cromwell have been adapted for federated environments, enabling complex multi-step analyses that might involve quality control, statistical analysis, and visualization phases. Each step can run at different sites depending on data availability and computational requirements.

Monitoring dashboards provide real-time visibility into federation health. Administrators can track which sites are online, monitor resource utilization, and identify bottlenecks. Researchers can see analysis progress and estimated completion times across all participating sites.

Audit systems maintain detailed logs of all activities for compliance and troubleshooting. These logs capture who requested what analysis, when it ran, which data was accessed, and what results were returned – all while maintaining privacy protections.

Why Choose Federation in Healthcare & Genomics?

Healthcare sits on a treasure trove of data that could revolutionize patient care – yet 97% of hospital data goes unused, not because it lacks value, but because sharing it safely feels nearly impossible.

The barriers are real: GDPR in Europe and HIPAA in the United States create complex compliance requirements. Cross-border transfer restrictions kill promising international research projects. Meanwhile, patients suffer from knowledge gaps that could be filled if we could safely connect these isolated data islands.

Federated data analysis cuts through these barriers by keeping data within each organization’s existing security walls. Instead of wrestling with data transfer agreements, researchers can access insights from distributed datasets while respecting every privacy law and organizational policy.

The financial benefits are equally compelling. Copying and storing massive genomic datasets costs millions. Federation eliminates these duplication costs while dramatically reducing environmental impact.

Closing Diversity Gaps and Reducing VUS Rates

Patients from non-European ancestries face much higher rates of “variant of uncertain significance” results in genetic testing. These VUS results leave families in limbo – they know they carry a genetic variant, but doctors can’t determine if it increases disease risk.

The root cause is simple: most genetic databases are heavily skewed toward European populations. When patients of African, Asian, or Indigenous ancestry get genetic testing, there isn’t enough data to confidently classify their variants.

BRCA1/BRCA2 studies show what’s possible. These genes link to hereditary breast and ovarian cancer, but variant interpretation varies dramatically across populations. Federated approaches can connect genetic databases across continents without moving patient records. Early projects suggest this could boost sample sizes by 10-fold or more, dramatically improving variant classification for underrepresented populations.

Legal, Ethical & Environmental Drivers

GDPR’s principle of data minimization actively encourages processing data where it lives rather than creating copies. Cross-border transfer bans in some regions make federation the only viable path for international collaboration. Countries can participate in global studies while maintaining complete data sovereignty.

The environmental case is strong too. Scientific research on sustainable bioinformatics demonstrates that federated approaches substantially reduce the carbon footprint of large-scale research by eliminating redundant data storage and transfer infrastructure.

Trust Models, Security Risks & Privacy-Preserving Technologies

When it comes to federated data analysis, security isn’t just about building higher walls – it’s about creating smart systems that protect sensitive data while enabling groundbreaking research. The challenge: how do you enable hospitals in different countries to collaborate on cancer research without any patient data ever leaving its home institution?

The foundation starts with choosing the right trust model. Centralised trust relies on a single trusted coordinator – simpler to manage but creates a single point of failure. Decentralised mesh networks distribute trust across multiple nodes, increasing resilience but making governance more complex. Most real-world systems use hybrid approaches, combining centralized coordination with decentralized trust for sensitive operations.

Threat Landscape for Federated Systems

Membership inference attacks analyze aggregated results to determine whether specific individuals participated in a study. Property inference attacks attempt to extract sensitive population characteristics from research outputs. Poisoning attacks involve malicious participants submitting false data to skew findings. Reconstruction attacks try to reverse-engineer individual data points from aggregated results.

Toolbox of Mitigations

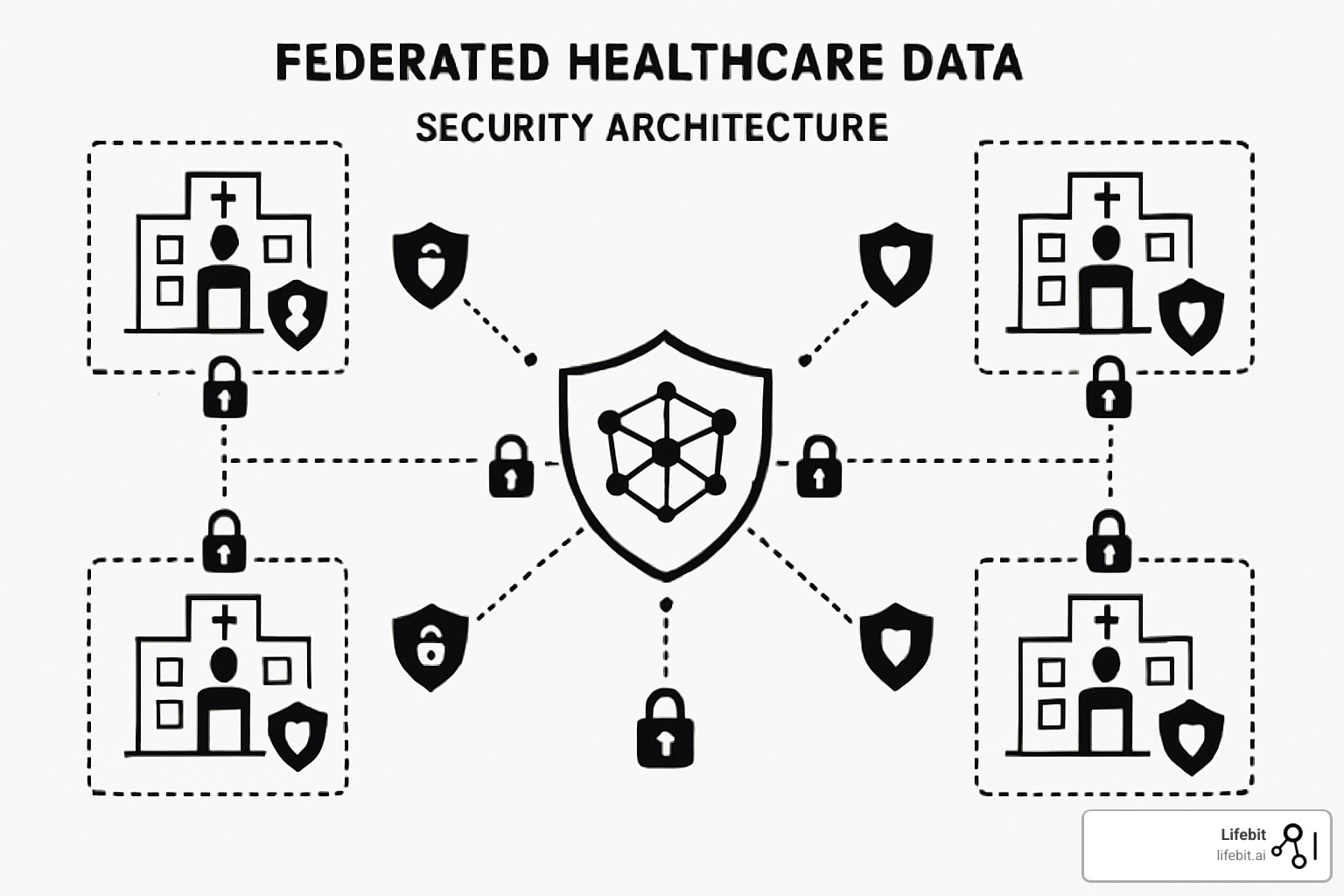

Secure multiparty computation (SMC) lets several parties jointly compute a function over their inputs without revealing those inputs to one another. Homomorphic encryption allows computations to run directly on encrypted data, which stays encrypted throughout processing. Differential privacy adds carefully calibrated noise to prevent individual-level information leakage while preserving statistical utility.
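
To make "carefully calibrated noise" concrete, here is a minimal sketch of the Laplace mechanism applied to a count query. It assumes a sensitivity of 1 (one person changes a count by at most 1), and the `epsilon` value is illustrative. Production systems would also track a privacy budget and use a vetted library rather than this hand-rolled sampler.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Releases a count with noise scaled to sensitivity / epsilon."""
    sensitivity = 1.0  # assumption: adding or removing one person shifts the count by 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only so the example is repeatable
print(private_count(true_count=100, epsilon=1.0, rng=rng))
```

Smaller `epsilon` values mean more noise and stronger privacy; larger values mean less noise and more accurate results.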

Secure aggregation uses cryptographic techniques to mask individual contributions, ensuring only combined results are visible. Trusted execution environments like Intel SGX provide hardware-based isolation for sensitive computations.
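
The masking idea behind secure aggregation can be shown with pairwise masks that cancel in the sum. This is a simulation under simplifying assumptions: the masks are generated in one place with Python's non-cryptographic `random` module, whereas real protocols derive them through key agreement between sites and handle participants that drop out.

```python
import random
from typing import Dict

MODULUS = 2**32  # all arithmetic is done modulo this value


def mask_inputs(values: Dict[str, int], rng: random.Random) -> Dict[str, int]:
    """Adds a shared random mask to one site of each pair, subtracts it from the other."""
    sites = sorted(values)
    masked = dict(values)
    for i, a in enumerate(sites):
        for b in sites[i + 1:]:
            mask = rng.randrange(MODULUS)  # stand-in for a secret shared by a and b
            masked[a] = (masked[a] + mask) % MODULUS
            masked[b] = (masked[b] - mask) % MODULUS
    return masked


def secure_sum(masked: Dict[str, int]) -> int:
    """The masks cancel pairwise, so only the total is recoverable."""
    return sum(masked.values()) % MODULUS


site_counts = {"hospital_a": 10, "hospital_b": 20, "hospital_c": 12}
masked_counts = mask_inputs(site_counts, random.Random(1))
print(secure_sum(masked_counts))
```

Each masked value on its own looks random to the coordinator. Only the combined total is meaningful.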

The DataSHIELD platform demonstrates how these techniques work together, providing R-based federated analysis with built-in privacy protections that researchers can use without needing cryptography expertise.

Modern federated systems layer these protections. No single technique is perfect, but combining multiple approaches creates robust defenses that withstand sophisticated attacks while enabling large-scale research that saves lives.

Implementation Roadmap: From Data Harmonisation to Production-Grade Federated Data Analysis

Building successful federated data analysis systems requires orchestrating components across different locations. The journey starts with data harmonisation – getting all participants to agree on formats and standards before analysis begins.

Healthcare data makes this challenging. One hospital might use FHIR standards, another relies on legacy systems, and genomics labs work with VCF files or Phenopackets. Standards like OMOP Common Data Model for clinical data and GA4GH standards for genomics are making large-scale federated research possible.

Modern federated platforms rely on Trusted Research Environments (TREs) and infrastructure-as-code approaches that make deployment predictable and secure. These containerized workflows ensure analysis runs consistently whether processing data in London, New York, or Tokyo.

Comprehensive Data Harmonisation Strategies

Semantic harmonisation goes beyond simple format conversion. Medical terminologies vary dramatically – one system might code diabetes as “E11.9” using ICD-10, while another uses “250.00” from ICD-9, and a third uses SNOMED codes. Terminology mapping services like UMLS (Unified Medical Language System) provide crosswalks between different coding systems.
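
A crosswalk can be pictured as a lookup from (coding system, code) to a shared concept. The sketch below uses the two codes mentioned above and a made-up internal label, `diabetes_mellitus`. It is not UMLS output, and real mappings are often one-to-many rather than this tidy.

```python
from typing import Dict, Optional, Tuple

# Hypothetical crosswalk table; the concept label is invented for illustration.
CROSSWALK: Dict[Tuple[str, str], str] = {
    ("ICD-10", "E11.9"): "diabetes_mellitus",
    ("ICD-9", "250.00"): "diabetes_mellitus",
}


def to_common_concept(system: str, code: str) -> Optional[str]:
    """Returns the shared concept for a source code, or None if unmapped."""
    return CROSSWALK.get((system, code))


print(to_common_concept("ICD-10", "E11.9"))
print(to_common_concept("ICD-9", "250.00"))
print(to_common_concept("ICD-10", "Z99.9"))  # unmapped codes surface as None
```

Returning `None` for unmapped codes, rather than guessing, lets a site report its mapping coverage before any analysis runs.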

Temporal harmonisation addresses how different systems handle time. Electronic health records might timestamp events differently – admission time, registration time, or first vital signs. Genomics data includes collection dates, sequencing dates, and analysis dates. Federated systems need temporal alignment strategies to ensure meaningful comparisons.

Unit standardisation prevents subtle errors that could invalidate research. Laboratory values might be reported in different units (mg/dL vs mmol/L for glucose), different scales (Celsius vs Fahrenheit), or different reference ranges. Automated unit conversion with validation checks catches these issues before analysis begins.
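
A minimal sketch of automated unit conversion with a validation check. The glucose factor follows from glucose's molar mass (about 180.16 g/mol, so 1 mmol/L is about 18.016 mg/dL). The function names are made up for the example, and rejecting unknown units outright is one possible validation policy among several.

```python
GLUCOSE_MG_DL_PER_MMOL_L = 18.016  # derived from a molar mass of ~180.16 g/mol


def glucose_to_mmol_l(value: float, unit: str) -> float:
    """Normalises a glucose reading to mmol/L, rejecting unknown units."""
    if unit == "mmol/L":
        return value
    if unit == "mg/dL":
        return value / GLUCOSE_MG_DL_PER_MMOL_L
    raise ValueError(f"unsupported glucose unit: {unit!r}")


def temperature_to_celsius(value: float, unit: str) -> float:
    """Normalises a temperature to degrees Celsius."""
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unsupported temperature unit: {unit!r}")


print(round(glucose_to_mmol_l(90.08, "mg/dL"), 2))
print(round(temperature_to_celsius(98.6, "F"), 1))
```

Failing loudly on an unrecognised unit is deliberate: a silently unconverted value is the kind of subtle error this step exists to catch.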

Quality harmonisation establishes minimum data quality thresholds. A site with 90% missing data for key variables shouldn’t carry the same weight in an analysis as a site with complete data. Data quality scorecards help researchers understand the reliability of federated results.
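
One simple form a quality scorecard could take is a completeness score for each site, compared against a minimum threshold. The 0.8 threshold, the variable names, and the records below are illustrative assumptions, not recommended values.

```python
from typing import Dict, List, Optional


def completeness(rows: List[Dict[str, Optional[float]]], key_variables: List[str]) -> float:
    """Fraction of key-variable cells that are present (not None)."""
    total_cells = len(rows) * len(key_variables)
    if total_cells == 0:
        return 0.0
    present = sum(1 for r in rows for k in key_variables if r.get(k) is not None)
    return present / total_cells


def eligible_sites(scores: Dict[str, float], min_completeness: float = 0.8) -> List[str]:
    """Sites whose completeness meets the agreed threshold."""
    return sorted(s for s, score in scores.items() if score >= min_completeness)


site_rows = {
    "hospital_a": [{"age": 50.0, "bmi": 24.1}, {"age": 61.0, "bmi": 29.3}],
    "hospital_b": [{"age": 47.0, "bmi": None}, {"age": None, "bmi": None}],
}
scores = {s: completeness(rows, ["age", "bmi"]) for s, rows in site_rows.items()}
print(scores, eligible_sites(scores))
```

The same score could instead be used to down-weight a site rather than exclude it. Which policy applies is a governance decision.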

Step-by-Step Deployment Checklist

Successful deployment requires methodical approaches. Governance agreements clearly define responsibilities and procedures. Your metadata catalog becomes the window into your federation without exposing sensitive information – detailed enough to be useful but vague enough to preserve privacy.

Phase 1: Foundation Building involves establishing legal frameworks, technical standards, and governance structures. Data sharing agreements must address liability, intellectual property, publication rights, and withdrawal procedures. Technical specifications define minimum infrastructure requirements, security standards, and interoperability protocols.

Phase 2: Pilot Implementation starts with synthetic or de-identified data to validate technical components without privacy risks. Proof-of-concept analyses demonstrate value while identifying integration challenges. User training programs prepare researchers and IT staff for new workflows.

Phase 3: Production Deployment introduces real data with comprehensive monitoring and support systems. Gradual rollout strategies start with simple analyses before progressing to complex workflows. Performance benchmarking establishes baseline metrics for system optimization.

Phase 4: Scale and Optimization expands to additional sites and use cases. Automated onboarding processes reduce the friction of adding new participants. Advanced analytics capabilities like real-time monitoring and AI-driven insights become available.

Containerised workflows ensure analysis code runs consistently across different environments. Monitoring systems maintain trust and compliance, showing participants that the system works as promised and data remains secure.

Technical implementation focuses on secure channels between nodes using enterprise-grade encryption, while identity and access management ensures only authorized users access the system. Validation with synthetic data catches problems before affecting real research.

Advanced Technical Architecture Considerations

Load balancing strategies become critical as federations scale. Some sites might have powerful computing clusters while others run on modest servers. Adaptive scheduling algorithms can distribute computational load based on site capabilities and current utilization.

Fault tolerance mechanisms handle inevitable infrastructure failures. When a site goes offline mid-analysis, the system needs graceful degradation strategies that continue with available sites while maintaining statistical validity.
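
Graceful degradation might look like the sketch below: collect whatever results arrive, record which sites failed, and refuse to report anything if too few sites responded. The `min_sites` rule is an assumed policy for illustration. Whether a partial result is statistically valid depends on the analysis.

```python
from typing import Callable, Dict, List, Tuple


def collect_results(
    site_calls: Dict[str, Callable[[], dict]], min_sites: int
) -> Tuple[Dict[str, dict], List[str]]:
    """Runs each site call, tolerating connection failures up to a limit."""
    results: Dict[str, dict] = {}
    failed: List[str] = []
    for name, call in site_calls.items():
        try:
            results[name] = call()
        except ConnectionError:
            failed.append(name)  # keep going with the remaining sites
    if len(results) < min_sites:
        raise RuntimeError(
            f"only {len(results)} of {len(site_calls)} sites responded; need {min_sites}"
        )
    return results, failed


def offline_site() -> dict:
    raise ConnectionError("site unreachable")


calls = {
    "hospital_a": lambda: {"n": 10},
    "hospital_b": offline_site,
    "hospital_c": lambda: {"n": 5},
}
results, failed = collect_results(calls, min_sites=2)
print(sorted(results), failed)
```

Reporting `failed` alongside the results lets researchers see exactly which sites a given estimate covers.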

Version control systems manage analysis code and dependencies across distributed environments. Reproducibility frameworks ensure that analyses can be repeated months later with identical results, even as underlying software components evolve.

Network optimization reduces latency and bandwidth requirements. Compression algorithms minimize data transfer, while caching strategies avoid redundant computations. Edge computing approaches can pre-process data at local sites to reduce coordination overhead.

Overcoming Practical Barriers

Bandwidth limits mean every byte counts when coordinating across multiple sites. Compression strategies can reduce network traffic by 80-90% for typical genomics workflows. Incremental synchronization only transfers changes rather than complete datasets.
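
As a small illustration of compressing what travels between sites, the sketch below serialises a summary to JSON and compresses it with `zlib` from the standard library. The payload is made up and highly repetitive, so its compression ratio says nothing about real genomics workloads.

```python
import json
import zlib


def pack(summary: dict) -> bytes:
    """Serialises a result summary and compresses it for transfer."""
    raw = json.dumps(summary, sort_keys=True).encode("utf-8")
    return zlib.compress(raw, level=9)


def unpack(blob: bytes) -> dict:
    """Reverses pack(): decompress, then parse the JSON."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Hypothetical summary payload with a repetitive structure.
summary = {"site": "hospital_a", "allele_counts": [0, 1, 2] * 1000}
raw_size = len(json.dumps(summary, sort_keys=True).encode("utf-8"))
packed = pack(summary)
print(raw_size, len(packed))
```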

Non-IID data – where different sites have systematically different populations – can produce misleading results without careful handling. Population stratification techniques account for demographic differences, while meta-analysis approaches can identify and adjust for site-specific effects.
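
One standard meta-analysis tool for this is fixed-effect inverse-variance pooling, with Cochran's Q as a heterogeneity check: a large Q relative to the number of sites suggests site-specific effects that a single pooled estimate would hide. The sketch assumes each site returns an effect estimate and its standard error, and the numbers are invented.

```python
import math
from typing import List, Tuple


def pool_fixed_effect(estimates: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Inverse-variance pooling of (estimate, standard_error) pairs.

    Returns (pooled_estimate, pooled_standard_error, cochran_q).
    """
    weights = [1.0 / se**2 for _, se in estimates]
    total_weight = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    q = sum(w * (est - pooled) ** 2 for w, (est, _) in zip(weights, estimates))
    return pooled, pooled_se, q


# Two hypothetical sites reporting the same kind of effect with equal precision.
pooled, pooled_se, q = pool_fixed_effect([(1.0, 1.0), (3.0, 1.0)])
print(pooled, round(pooled_se, 4), q)
```

When Q signals substantial heterogeneity, a random-effects model or stratified analysis is usually a better fit than the fixed-effect pool shown here.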

Stakeholder training gets overlooked but is crucial. Researchers need to learn new ways of thinking about data analysis, while IT teams must understand new security requirements. Training programs should include hands-on workshops, documentation libraries, and ongoing support channels.

The complexity of controller-processor roles under GDPR creates genuine legal challenges that require clear contractual frameworks. Legal playbooks help organizations navigate these relationships, while privacy impact assessments identify and mitigate compliance risks.

Change management addresses organizational resistance to new approaches. Champion networks within participating organizations can advocate for federated approaches and help colleagues adapt to new workflows. Success metrics demonstrate value and build momentum for broader adoption.

Real-World Success Stories & Future Directions

The theoretical promise of federated data analysis is becoming practical reality through pioneering implementations across healthcare and genomics.

CanDIG (Canadian Distributed Infrastructure for Genomics) connects genomic datasets across Canadian provinces while respecting local data governance. Australian Genomics implements federated approaches for rare disease research, with their federated variant classification system helping reduce variants of uncertain significance.

The Personal Health Train initiative in Europe demonstrates federated analysis at scale, enabling researchers to send analysis “trains” to distributed data “stations” across multiple countries. During the pandemic, federated genomics projects analyzed host factors in severe COVID-19 across nearly 60,000 individuals from multiple institutions.

Pharmaceutical companies increasingly adopt federated approaches for drug discovery and safety surveillance through secure analytics “datalands” that enable pre-competitive research collaboration.

Emerging Standards & Community Initiatives

The Global Alliance for Genomics and Health (GA4GH) leads standards development for federated genomics. The Matchmaker Exchange demonstrates federated discovery in action, enabling clinicians to search for patients with similar rare genetic variants across databases without accessing underlying patient data.

The UK’s Genome UK vision explicitly calls for federated approaches, recognizing that centralized models cannot scale to population-level genomics while maintaining public trust. Open-source tools like DataSHIELD and emerging standards accelerate adoption across the community.

The Road Ahead for Federated Data Analysis

The future lies in automation and intelligence. AI-driven query planners will optimize workflows across federated networks, automatically selecting efficient algorithms and privacy techniques. Real-time analytics represents the next frontier – pharmacovigilance systems that detect adverse drug reactions across healthcare systems in real time could save lives.

Policy harmonization will be crucial for global federations. While technical solutions exist for cross-border analysis, regulatory frameworks remain fragmented.

At Lifebit, we’re pioneering next-generation capabilities through our federated AI platform. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) components deliver real-time insights while maintaining the highest security standards.

Frequently Asked Questions about Federated Data Analysis

How is federated data analysis different from meta-analysis?

Meta-analysis works with published results from completed studies – odds ratios, confidence intervals, p-values – but you can’t dig deeper into underlying patterns. Federated data analysis provides access to individual-level data patterns while keeping data in original locations. This enables sophisticated analyses like joint modeling that accounts for population structure and complex statistical analyses impossible with only summary statistics.

For genetic studies, meta-analysis might combine published odds ratios from different populations, but federated analysis can perform joint modeling accounting for ancestry-specific patterns and environmental interactions – approaching the analytical power that would be available if all data were centralized.

Can federated methods handle vertically partitioned datasets?

Federated data analysis works most naturally with “horizontally partitioned” datasets – where organizations have the same information types about different people. Vertically partitioned scenarios (different organizations having different data types about the same individuals) are much trickier, requiring specialized privacy-preserving record linkage techniques.

While possible using sophisticated cryptographic approaches, the computational costs are significant and privacy analysis becomes complex. Most practical federated systems focus on horizontal partitioning, though hybrid approaches for linking genomic and clinical data are emerging.

What level of IT infrastructure does my organisation need?

Infrastructure requirements are usually more modest than expected. Data providers need secure compute environments (cloud or on-premises), decent network connectivity, and the ability to run containerized applications – capabilities most organizations already have.

Federation coordinators need more sophisticated setups including orchestration servers, monitoring systems, and identity management. But even these requirements are manageable with modern cloud platforms. What matters more is ensuring your setup meets security and compliance requirements for your specific use case.

Many organizations start with cloud-based solutions that scale as programs mature. The key is not over-engineering from the beginning – start with what you need and grow as you learn.

Conclusion

The transformation of healthcare research is happening now, and federated data analysis is leading the charge. We’re witnessing a fundamental shift from “move data to analysis” to a smarter “bring analysis to data” model that respects privacy while opening incredible possibilities.

When 97% of hospital data sits unused because of sharing barriers, we’re missing chances to save lives. When diverse patients get uncertain genetic results at higher rates due to database limitations, we see the real cost of current restrictions.

The technology has moved beyond experiments into production systems making real differences. From 60,000 individuals contributing to COVID-19 genomics research through federated approaches to rare disease patients getting diagnoses through systems like Matchmaker Exchange, federation proves its worth daily.

Success requires thoughtful planning around data harmonization, clear governance frameworks, and proper training. It’s about building trust between organizations and creating systems that work for real people in real situations.

At Lifebit, we’ve seen how the right approach turns challenges into opportunities. Our federated AI platform brings together secure computation environments, real-time analytics, and comprehensive governance tools. Organizations that invest in both technical infrastructure and human elements succeed.

Looking ahead, AI-driven query optimization will make federated systems smarter. Real-time analytics will enable faster responses to health threats. As policies evolve to support cross-border collaboration, we’ll see global federations tackling humanity’s biggest health challenges together.

Organizations embracing federated data analysis today aren’t just preparing for tomorrow – they’re actively shaping it. They’ll lead the next generation of medical research, where privacy and progress work hand in hand.

Ready to see what’s possible? Learn more about our secure federated platform and discover how we’re turning federated analysis promises into practical reality for healthcare organizations worldwide. The future of collaborative healthcare research is here, and it’s more accessible than you think.