Next Generation Sequence Testing Made Simple

Next Generation Sequence Testing: Essential 2025 Guide

Why Next-Generation Sequencing is Revolutionizing Modern Healthcare

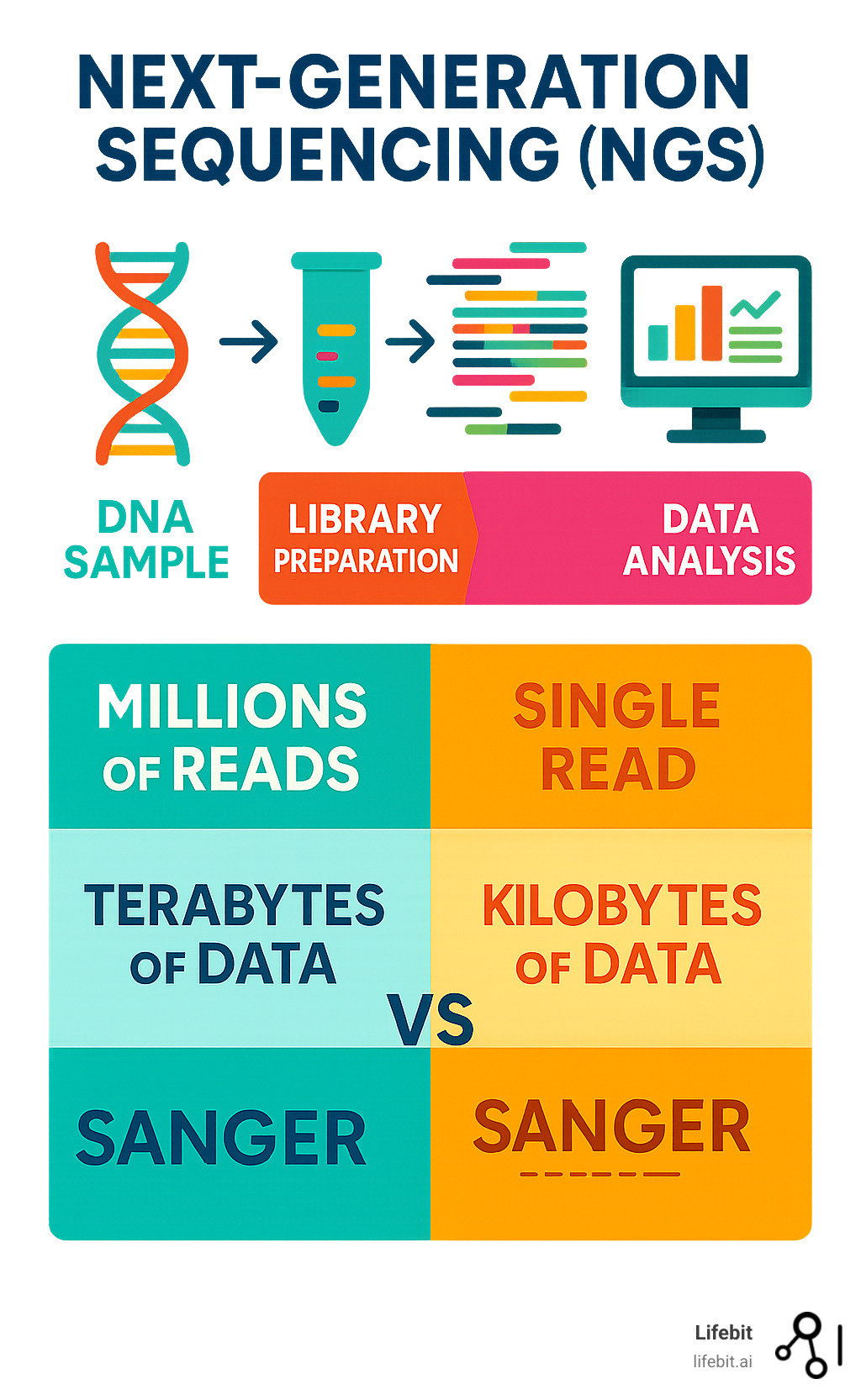

Next generation sequence testing is a technology that reads millions of DNA fragments simultaneously, making genetic testing faster, more affordable, and more comprehensive than ever before. Thanks to this massive parallelism, it can analyze an entire genome in days rather than months. The high-throughput approach has driven a 96% decrease in cost-per-genome, enabling applications from cancer diagnosis to rare disease detection.

Unlike traditional Sanger sequencing, which analyzes one DNA fragment at a time, NGS technology processes millions in parallel. This breakthrough enables comprehensive cancer panels that guide treatment decisions and population-scale studies that reveal new disease mechanisms. The technology fragments DNA, adds special adapters, and sequences all fragments simultaneously, generating massive amounts of genetic data.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. With over 15 years in computational biology and genomics, my focus is on making complex genomic data accessible and actionable. At Lifebit, we help organizations harness the power of next generation sequence testing through secure, federated data analysis platforms.


What is Next-Generation Sequencing (NGS)?

Imagine reading millions of pages of a book simultaneously instead of one by one. That’s what Next-Generation Sequencing (NGS) does with DNA and RNA. Also known as massively parallel sequencing or high-throughput sequencing, NGS determines the exact order of nucleotides (A, T, C, and G) by reading millions of DNA pieces at once.

This breakthrough allows us to study entire genomes and analyze gene expression, changing everything from cancer treatment to rare disease research. What once took years and cost billions can now be done in days for a fraction of the price.

How NGS Differs from Traditional Sequencing

To appreciate next generation sequence testing, compare it to its predecessor, Sanger sequencing. Developed in the 1970s, Sanger’s method was brilliant but could only read one DNA fragment per reaction. It’s incredibly accurate and still used for validation, but it’s like reading a library one book at a time.

| Feature | Sanger Sequencing | Next-Generation Sequencing (NGS) |
|---|---|---|
| Throughput | Low (single fragment per reaction) | Ultra-high (millions to billions of fragments per run) |
| Cost | High per base for large-scale projects | Significantly lower per base (96% decrease in cost-per-genome) |
| Speed | Slow (days for individual genes) | Rapid (whole genomes in days, targeted panels in hours) |
| Accuracy | Very high (gold standard for validation) | High, with deep coverage providing robust variant detection |
| Scalability | Limited to small regions or single genes | Highly scalable, from targeted panels to whole genomes |
| Sample Input | Higher requirements | Lower input requirements |
| Sensitivity | Needs a variant allele frequency of roughly 20% or more | Detects variants down to about 1-5% allele frequency, lower with deep sequencing |

The Human Genome Project illustrates the difference. Using Sanger sequencing, it took over 10 years and nearly $3 billion. Today, NGS can sequence a genome in days for under $1,000. The fundamental difference is scalability: Sanger excels at small regions, but modern genetics demands the scale that only massively parallel sequencing provides.

You can read more about the foundational work that made this possible in this scientific research on early sequencing methods.

The Core Principle: Massive Parallelism

The secret to NGS is massive parallelism. Instead of sequencing one long DNA strand, NGS breaks it into millions of smaller, overlapping fragments.

The process begins with library preparation, where special adapter sequences are attached to each fragment. The adapters let fragments bind to the sequencer and can carry index sequences that act as molecular barcodes.

Next, clonal amplification copies each fragment many times, forming a cluster of identical molecules. This is necessary to generate a signal strong enough for the sequencing machine to detect.

Finally, all amplified fragments are sequenced simultaneously on a flow cell. As each base is added, the machine detects a signal and records the sequence. This parallel processing has dramatically increased speed and reduced costs, democratizing genetic research and enabling clinicians to offer comprehensive genetic testing faster than ever before.

The Core of Next Generation Sequence Testing: Workflow and Analysis

Next generation sequence testing is a sophisticated process that turns a biological sample into digital insights. The workflow has four main stages: DNA/RNA extraction, library preparation, sequencing, and data analysis, with quality control at every step.

Step 1: Library Preparation

Library preparation is a crucial multi-step process that readies genetic material for sequencing. It ensures the DNA or RNA is in a format that the sequencing machine can read.

First, long DNA or RNA strands are fragmented into smaller, manageable pieces, typically ranging from 100 to 800 base pairs. This can be achieved through physical methods like sonication (using sound waves) or enzymatic methods using transposases, which simultaneously cut the DNA and insert adapter sequences.
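To make the fragmentation step concrete, here is a minimal Python sketch. It assumes breakpoints fall uniformly at random along the molecule, which is a simplification of sonication or enzymatic shearing; the function names `fragment` and `size_select` are hypothetical, and the 100-800 bp window mirrors the range quoted above.

```python
import random

def fragment(seq_len: int, mean_frag: int, rng: random.Random) -> list[tuple[int, int]]:
    """Cut the interval [0, seq_len) at random breakpoints.

    Returns half-open (start, end) fragments that tile the whole molecule.
    Uniform breakpoints are an assumption; real shearing is not perfectly random.
    """
    n_breaks = max(seq_len // mean_frag - 1, 0)
    cuts = sorted(rng.sample(range(1, seq_len), n_breaks))  # distinct cut positions
    bounds = [0] + cuts + [seq_len]
    return list(zip(bounds[:-1], bounds[1:]))

def size_select(frags: list[tuple[int, int]], lo: int = 100, hi: int = 800) -> list[tuple[int, int]]:
    """Keep only fragments whose length lies within [lo, hi] base pairs."""
    return [(s, e) for s, e in frags if lo <= e - s <= hi]

rng = random.Random(42)               # fixed seed for a reproducible example
frags = fragment(100_000, 300, rng)   # hypothetical 100 kb molecule, ~300 bp mean
kept = size_select(frags)
print(f"{len(frags)} fragments, {len(kept)} pass size selection")
```

Because every copy of the genome in a sample is sheared independently, fragments from different copies overlap, which is what later allows overlapping reads to cover each position many times.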

Next, in adapter ligation, special DNA sequences called adapters are attached to both ends of each fragment. These adapters are critical: they contain sequences that allow the DNA fragment to bind to the sequencer’s flow cell, act as a priming site for the sequencing reaction, and include index sequences (sample barcodes). These indexes allow multiple samples to be pooled together in a single sequencing run (a process called multiplexing) and later identified bioinformatically, dramatically increasing throughput and reducing costs.
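Identifying pooled samples afterwards is called demultiplexing. The sketch below assigns each read to the sample whose index it matches, tolerating one mismatch to absorb sequencing errors in the index itself. The sample names, index sequences, and the one-mismatch threshold are assumptions for illustration, not the behavior of any particular vendor tool.

```python
from collections import defaultdict

def hamming(a: str, b: str) -> int:
    """Count mismatched positions between two equal-length strings."""
    return sum(x != y for x, y in zip(a, b))

def demultiplex(reads, sample_indexes, max_mismatches=1):
    """Sort (index, sequence) reads into per-sample bins.

    A read is assigned only if exactly one sample index lies within
    max_mismatches; ambiguous or unmatched reads go to "undetermined".
    """
    bins = defaultdict(list)
    for index, seq in reads:
        hits = [name for name, idx in sample_indexes.items()
                if len(idx) == len(index) and hamming(idx, index) <= max_mismatches]
        bins[hits[0] if len(hits) == 1 else "undetermined"].append(seq)
    return dict(bins)

# Hypothetical 8 bp indexes for two pooled samples.
samples = {"patient_A": "ACGTACGT", "patient_B": "TGCATGCA"}
reads = [
    ("ACGTACGT", "TTAGGC"),   # exact match to patient_A
    ("ACGTACGA", "GGCATT"),   # one mismatch, still patient_A
    ("TGCATGCA", "CCATGA"),   # patient_B
    ("NNNNNNNN", "AAAAAA"),   # unreadable index
]
print(demultiplex(reads, samples))
```

Index sets are normally designed so that every pair differs at several positions, which is what makes a one-mismatch tolerance unambiguous.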

For some applications, target enrichment is used to focus the sequencing power on specific genes or regions of interest. This is highly efficient for clinical testing where only a known set of disease-related genes needs to be analyzed. The two main methods are hybrid-capture, which uses biotinylated RNA or DNA probes to pull down the desired DNA fragments, and amplicon-based approaches, which use PCR to selectively amplify the target regions. Hybrid-capture is often preferred for larger panels because it gives more uniform coverage and is less prone to allele dropout, which can occur in amplicon methods when a variant sits under a PCR primer.
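A common way to judge how well enrichment worked is the on-target rate: the share of aligned reads that land in the intended regions. This sketch computes it with a brute-force overlap check on half-open (chromosome, start, end) intervals. The coordinates are invented, and a production pipeline would use an indexed interval structure rather than scanning every target for every read.

```python
def overlaps(a, b):
    """True if two (chrom, start, end) half-open intervals share at least one base."""
    return a[0] == b[0] and a[1] < b[2] and b[1] < a[2]

def on_target_rate(read_intervals, targets):
    """Fraction of aligned reads overlapping at least one target region."""
    if not read_intervals:
        return 0.0
    hits = sum(any(overlaps(r, t) for t in targets) for r in read_intervals)
    return hits / len(read_intervals)

# Hypothetical capture targets and aligned read positions (0-based, half-open).
targets = [("chr7", 1000, 1200), ("chr7", 5000, 5300)]
reads = [("chr7", 990, 1140), ("chr7", 1150, 1300),
         ("chr7", 3000, 3150), ("chr12", 1000, 1150)]
print(on_target_rate(reads, targets))
```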

Finally, many workflows use PCR amplification to make many copies of each adapter-ligated fragment, enriching for correctly prepared molecules and producing enough library to load onto the sequencer; some whole-genome protocols are PCR-free to avoid amplification bias. The choice of library preparation method is tailored to the specific application, such as whole genome sequencing, whole exome sequencing, targeted gene panels, or RNA sequencing.

Step 2: Sequencing

Modern NGS platforms read millions of DNA fragments simultaneously. The prepared library is loaded onto a flow cell, a glass slide with lanes coated in oligonucleotides that are complementary to the library adapters. Here, clonal amplification occurs, where each individual fragment is amplified into a distinct cluster of identical molecules. In Illumina platforms, this is done via bridge amplification.

Most NGS systems, including those from Illumina, use sequencing by synthesis (SBS) chemistry. In each cycle, all four nucleotides (A, T, C, G) are flowed across the cell together, each fluorescently labeled so it can be distinguished and each carrying a reversible terminator. A polymerase adds one complementary nucleotide to each growing strand, and the terminator prevents further additions. High-resolution cameras then image the flow cell, recording the fluorescent signal of millions of clusters at once to reveal which base each cluster just incorporated. The terminator and fluorescent tag are then cleaved, and the cycle repeats. Other technologies exist, such as Ion Torrent’s semiconductor sequencing, which detects the release of a hydrogen ion (a change in pH) upon nucleotide incorporation.

Finally, base calling software analyzes the series of images from the sequencing run, converting the light signals from each cluster into a DNA sequence (a “read”) and assigning a quality score (a Phred or Q-score) to each base. A Q30 score, for example, indicates a 1 in 1,000 chance of an incorrect base call, representing 99.9% accuracy.
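The Phred scale is logarithmic: Q = -10 × log10(p), where p is the probability that the base call is wrong. The short sketch below converts in both directions and prints the error rate and accuracy for a few common thresholds, which shows why each extra 10 points means a tenfold drop in errors.

```python
import math

def phred_to_error(q: float) -> float:
    """Probability that a base call with Phred quality q is wrong: 10^(-q/10)."""
    return 10 ** (-q / 10)

def error_to_phred(p: float) -> float:
    """Phred quality for an error probability p: Q = -10 * log10(p)."""
    return -10 * math.log10(p)

for q in (10, 20, 30, 40):
    p = phred_to_error(q)
    print(f"Q{q}: error rate {p:g}, accuracy {100 * (1 - p):.2f}%")
```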

Step 3: Data Analysis and Interpretation

The sequencing process generates terabytes of raw data that require a complex bioinformatics pipeline to become actionable clinical insights.

Primary processing is the base calling step described above, which converts the instrument’s raw signals into base calls with quality scores. After the reads are demultiplexed by sample index, they are written to FASTQ files, which contain the DNA sequence reads and their corresponding quality scores.
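FASTQ is a plain-text format with four lines per read: a header starting with `@`, the sequence, a `+` separator, and a quality string with one character per base. A minimal parser might look like the sketch below, assuming unwrapped records and the widely used Phred+33 encoding (quality = ASCII code minus 33). `read_fastq` and `FastqRecord` are hypothetical names; established libraries such as Biopython's `SeqIO` handle edge cases this sketch ignores.

```python
import io
from typing import Iterator, NamedTuple, TextIO

class FastqRecord(NamedTuple):
    name: str
    seq: str
    quals: list[int]  # Phred scores, one per base

def read_fastq(handle: TextIO, offset: int = 33) -> Iterator[FastqRecord]:
    """Yield records from a four-line-per-record FASTQ stream.

    Quality characters are decoded as ord(char) - offset (Phred+33 by default).
    """
    while True:
        header = handle.readline().rstrip("\n")
        if not header:
            return  # end of input
        seq = handle.readline().rstrip("\n")
        plus = handle.readline().rstrip("\n")
        qual = handle.readline().rstrip("\n")
        if not header.startswith("@") or not plus.startswith("+") or len(seq) != len(qual):
            raise ValueError(f"malformed FASTQ record near {header!r}")
        yield FastqRecord(header[1:], seq, [ord(c) - offset for c in qual])

example = "@read1\nACGT\n+\nII#5\n@read2\nGGTA\n+\nIIII\n"
for rec in read_fastq(io.StringIO(example)):
    print(rec.name, rec.seq, rec.quals, "lowest:", min(rec.quals))
```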

Secondary analysis involves cleaning the data and performing read alignment. This is a massive computational puzzle where each short DNA read is mapped to its correct location on a human reference genome (e.g., GRCh38). This process generates a Sequence Alignment/Map (SAM) file or its compressed binary equivalent (BAM). After alignment, variant calling algorithms scan the aligned reads to identify differences (variants) between the sample DNA and the reference genome. This can identify single nucleotide polymorphisms (SNPs), small insertions or deletions (indels), copy number variations (CNVs), and larger structural variants (SVs). The output is a Variant Call Format (VCF) file, which lists all identified variants.
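The core idea of variant calling can be shown with a pileup: stack the aligned reads, count the bases observed at each reference position, and flag positions where a non-reference base is common enough. The sketch below is deliberately naive. It assumes reads are already aligned without indels, ignores base and mapping qualities, and uses arbitrary depth and allele-fraction thresholds; real callers such as GATK HaplotypeCaller or DeepVariant model these factors statistically.

```python
from collections import Counter, defaultdict

def call_snvs(reference, aligned_reads, min_depth=10, min_vaf=0.2):
    """Naive SNV caller over ungapped alignments.

    aligned_reads holds (start, sequence) pairs with 0-based starts and no indels.
    A call is made where a non-reference base reaches min_vaf of the depth.
    """
    pileup = defaultdict(Counter)  # position -> counts of each observed base
    for start, seq in aligned_reads:
        for offset, base in enumerate(seq):
            pos = start + offset
            if pos < len(reference):
                pileup[pos][base] += 1
    calls = []
    for pos in sorted(pileup):
        depth = sum(pileup[pos].values())
        if depth < min_depth:
            continue
        ref_base = reference[pos]
        for alt, n in pileup[pos].items():
            if alt != ref_base and n / depth >= min_vaf:
                calls.append((pos, ref_base, alt, n, depth))
    return calls

reference = "ACGTACGTAC"
# Half the reads carry an A>G change at position 4, as a heterozygous variant would.
aligned = [(0, "ACGTACGTAC")] * 6 + [(0, "ACGTGCGTAC")] * 6
print(call_snvs(reference, aligned))  # (position, ref, alt, alt reads, depth)
```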

Tertiary analysis is the final and most critical step for clinical applications. Each variant in the VCF file is annotated with information from public and private genetic databases (e.g., dbSNP, gnomAD, ClinVar, OMIM) to determine its frequency in the population and any known disease associations. Filtering algorithms then help prioritize variants most likely to impact health. Finally, clinical geneticists and bioinformaticians interpret these variants according to established guidelines, such as those from the American College of Medical Genetics and Genomics (ACMG), classifying them as Pathogenic, Likely Pathogenic, Variant of Uncertain Significance (VUS), Likely Benign, or Benign.
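One early filtering step is easy to sketch: after annotation, drop variants that are common in the general population, since a frequent variant is unlikely to cause a rare disease. The code below reads VCF data lines (tab-separated, with key=value pairs in the INFO column) and keeps PASS variants below a frequency cutoff. The INFO key `POP_AF` and the 1% cutoff are hypothetical; annotation tools name this field differently, and the sketch assumes one ALT allele per line. Population frequency is only one piece of evidence in ACMG-style classification.

```python
def parse_info(info: str) -> dict:
    """Turn an INFO string like 'DP=30;POP_AF=0.2;FLAG' into a dict (flags map to True)."""
    out = {}
    for item in info.split(";"):
        if not item or item == ".":
            continue
        key, sep, value = item.partition("=")
        out[key] = value if sep else True
    return out

def rare_variants(vcf_lines, max_pop_af=0.01, af_key="POP_AF"):
    """Yield (chrom, pos, ref, alt) for passing variants rarer than max_pop_af.

    af_key is a hypothetical INFO field added during annotation; variants
    absent from the population database are kept for review.
    """
    for line in vcf_lines:
        if line.startswith("#"):
            continue  # meta-information and header lines
        chrom, pos, _id, ref, alt, _qual, filt, info = line.rstrip("\n").split("\t")[:8]
        if filt not in ("PASS", "."):
            continue
        af = parse_info(info).get(af_key)
        if af is None or af is True or float(af) < max_pop_af:
            yield chrom, int(pos), ref, alt

vcf = [
    "##fileformat=VCFv4.2",
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO",
    "chr1\t100\t.\tA\tG\t50\tPASS\tDP=30;POP_AF=0.2",     # common: dropped
    "chr1\t200\t.\tC\tT\t60\tPASS\tDP=25;POP_AF=0.0001",  # rare: kept
    "chr2\t300\t.\tG\tA\t10\tLowQual\tDP=5",              # failed a filter
    "chr2\t400\t.\tT\tC\t70\tPASS\tDP=40",                # not in database: kept
]
print(list(rare_variants(vcf)))
```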

At Lifebit, we understand this complexity. Our Trusted Research Environment helps researchers and clinicians manage these large-scale analyses securely and efficiently, turning raw data into clinical insights.

The Power of NGS: Advantages and Real-World Applications

The arrival of next generation sequence testing has changed biological research and medical diagnosis, allowing us to see genetic details that were previously invisible.

What are the primary benefits of next generation sequence testing?

The advantages of NGS are transformative:

- High throughput: Instead of analyzing one gene at a time, NGS examines millions or billions of DNA fragments simultaneously, enabling everything from targeted panels to whole genome analysis in a single run.

- Cost-effectiveness: The falling cost of DNA sequencing has been dramatic, with a 96% decrease per genome, making large-scale analysis accessible. A genome that once cost billions to sequence can now be done for under $1,000, democratizing genomics for research and clinical use.

- Speed: Research and clinical timelines have shrunk from months or years to days or weeks. This rapid turnaround can be life-changing for patients with aggressive cancers or newborns with acute genetic disorders.

- High sensitivity: When DNA is sequenced to high depth (deep sequencing), NGS can detect genetic variants at very low frequencies (less than 1%). This is crucial for applications like detecting circulating tumor DNA in a blood sample or identifying low-level mosaicism.

- Lower sample requirements: Meaningful genetic information can be extracted from small or degraded samples, such as a few drops of blood, a small tissue biopsy, or even formalin-fixed, paraffin-embedded (FFPE) tissue commonly stored in pathology labs.
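Why depth matters for sensitivity can be sketched with a binomial model: if a variant is present in a fraction `vaf` of DNA molecules and a position is read `depth` times, the number of variant-supporting reads is roughly binomial. The sketch below computes the chance of seeing at least a minimum number of supporting reads. The 1% allele fraction and five-read threshold are assumptions, and the model ignores sequencing errors and the limited number of input molecules, so it is optimistic compared with a validated limit of detection.

```python
from math import comb

def detection_probability(depth: int, vaf: float, min_alt_reads: int) -> float:
    """P(at least min_alt_reads variant reads) when alt reads ~ Binomial(depth, vaf)."""
    p_fewer = sum(comb(depth, k) * vaf**k * (1 - vaf) ** (depth - k)
                  for k in range(min_alt_reads))
    return 1 - p_fewer

for depth in (30, 100, 1000):
    p = detection_probability(depth, vaf=0.01, min_alt_reads=5)
    print(f"{depth}x coverage, 1% variant: detection probability {p:.3f}")
```

The same 1% variant that is essentially invisible at a typical 30x germline depth is detected most of the time at 1000x, which is why ctDNA assays sequence so deeply.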

Key Applications in Research and Genomics

The versatility of next generation sequence testing has led to its adoption across biology:

- Whole Genome Sequencing (WGS) provides the most comprehensive view of the genetic code, capturing genes, regulatory regions, and non-coding DNA. It is the gold standard for discovering novel disease-causing genes and is essential for identifying large structural variations like translocations and inversions. Large-scale population studies like the UK Biobank and the All of Us Research Program use WGS to link genetic variation to health and disease on an unprecedented scale.

- Whole Exome Sequencing (WES) focuses on the protein-coding regions (the exome), which harbor about 85% of known disease-causing mutations. It offers a cost-effective yet powerful alternative for many clinical questions, particularly in ending the “diagnostic odyssey” for individuals with rare genetic disorders.

- Transcriptomics (RNA-Seq) reveals which genes are active in a cell and at what level, providing a dynamic snapshot of cellular function. It is used to discover novel transcripts, identify differential gene expression between healthy and diseased states, and detect gene fusions (e.g., BCR-ABL in chronic myeloid leukemia) that are potent cancer drivers and drug targets.

- Epigenomics studies chemical modifications that regulate gene activity without changing the DNA sequence itself. Methods like Bisulfite-Seq map DNA methylation patterns, which can silence tumor suppressor genes in cancer, while ChIP-Seq identifies where proteins bind to DNA to control gene expression.

- Metagenomics sequences all genetic material in a complex sample, such as from the human gut or an environmental source. This reveals the diversity and functional capacity of microbial communities, linking gut dysbiosis to conditions like inflammatory bowel disease, obesity, and even the efficacy of cancer immunotherapies.

How is next generation sequence testing applied in personalized medicine?

NGS is the engine driving healthcare from a one-size-fits-all model to genetically informed, personalized care.

- Oncology: Comprehensive cancer panels analyze hundreds of genes simultaneously from a tumor biopsy. If an NGS test on a non-small cell lung cancer patient identifies an ALK gene fusion, they can be treated with a highly effective ALK inhibitor. Liquid biopsy, which analyzes circulating tumor DNA (ctDNA) in blood, allows doctors to monitor treatment response, detect cancer recurrence non-invasively by tracking minimal residual disease (MRD), and identify new mutations that confer drug resistance.

- Rare Disease Diagnosis: For patients, often children, with complex, multi-system disorders, WES or WGS can end years of inconclusive testing. By screening all candidate genes at once, these tests can identify a single de novo mutation responsible for the condition, providing a definitive diagnosis, informing prognosis, and guiding management.

- Pharmacogenomics: Genetic information is used to predict patient responses to specific drugs. For example, NGS can test for variants in the CYP2D6 gene, which affects how individuals metabolize many common drugs, including codeine. An “ultrarapid metabolizer” converts codeine to morphine unusually fast and is at risk of morphine toxicity, while a “poor metabolizer” may receive no pain relief. This information helps clinicians select the right drug and dose from the start, avoiding adverse reactions.

- Carrier Screening: NGS-based expanded carrier screening allows prospective parents to be tested for hundreds of recessive genetic conditions (like cystic fibrosis or spinal muscular atrophy) with a single test. This provides crucial information for family planning and reproductive decisions.
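To illustrate the pharmacogenomics example, the sketch below translates a CYP2D6 diplotype into a metabolizer phenotype using an activity-score approach: each allele contributes a function value, duplicated alleles count once per copy, and the summed score falls into a phenotype band. The allele values and score bands are assumptions modeled on CPIC-style tables and are illustrative only; a real tool should load them from the current CPIC tables. Calling the diplotype itself from sequence data is the harder part, because of the neighboring CYP2D7 pseudogene and copy-number changes, so the sketch starts from an already-called diplotype.

```python
# Illustrative allele activity values (assumed, CPIC-style).
ALLELE_ACTIVITY = {"*1": 1.0, "*2": 1.0, "*4": 0.0, "*5": 0.0, "*10": 0.25, "*41": 0.5}

def allele_activity(allele: str) -> float:
    """Activity value for one allele; a suffix like 'x2' means duplicated copies."""
    name, _, copies = allele.partition("x")
    return ALLELE_ACTIVITY[name] * (int(copies) if copies else 1)

def metabolizer_phenotype(diplotype: str) -> str:
    """Map a diplotype such as '*1/*4' to a phenotype via its summed activity score."""
    score = sum(allele_activity(a) for a in diplotype.split("/"))
    if score == 0:
        return "poor metabolizer"
    if score < 1.25:
        return "intermediate metabolizer"
    if score <= 2.25:
        return "normal metabolizer"
    return "ultrarapid metabolizer"

for dip in ("*4/*4", "*10/*41", "*1/*2", "*1x2/*1"):
    print(dip, "->", metabolizer_phenotype(dip))
```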

This ability to tailor treatments to a patient’s unique genetic profile is the core of personalized medicine. The clinical applications of NGS in cancer are a prime example of this shift. At Lifebit, our federated platform helps organizations securely analyze the massive datasets generated by NGS to advance these applications.

Navigating Challenges and the Future of Sequencing

The power of next generation sequence testing comes with significant challenges, but the field is evolving rapidly with exciting solutions on the horizon.

Current Challenges and Limitations

The massive data output and complexity of NGS create several hurdles:

- Data Storage and Computational Costs: A single human genome sequenced at 30x coverage can generate 100-200 gigabytes of data. Storing and processing terabytes or petabytes of this information for large cohorts requires significant investment in high-performance computing infrastructure and cloud storage, along with strategies for data compression and long-term archiving.

- The Bioinformatics Bottleneck: There is a shortage of bioinformaticians with the skills to design, validate, and run complex analysis pipelines. This creates a bottleneck between data generation and clinical interpretation. Standardizing pipelines with workflow languages like Nextflow and deploying them on scalable cloud platforms are key to overcoming this challenge.

- Variant Interpretation: Identifying a genetic variant is now routine; determining whether it is clinically significant is hard. A large proportion of identified variants are classified as Variants of Uncertain Significance (VUS), creating diagnostic uncertainty for clinicians and anxiety for patients. International consortia like ClinGen are working to systematically curate evidence to reclassify VUS, but it remains a major challenge.

- Lab Setup and Quality Management: Establishing a clinical NGS facility requires specialized equipment, dedicated lab space to prevent contamination, and strict quality management systems (QMS) to ensure the accuracy and reproducibility of results. In the US, such labs typically operate under CLIA regulations and often hold CAP accreditation.

- Ethical, Legal, and Social Implications (ELSI): Widespread genetic testing raises profound ethical questions regarding data privacy, genetic discrimination (in employment or insurance), the return of incidental findings, and ensuring equitable access to testing across diverse populations.
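The storage figures in the first challenge above can be sanity-checked with back-of-the-envelope arithmetic. FASTQ stores roughly two bytes per sequenced base (one for the base, one for its quality character) plus a header per read. Every parameter in this sketch (genome size, read length, header size, and a 3:1 compression ratio) is an assumption, so the output is an order-of-magnitude estimate rather than a measurement.

```python
def fastq_size_gb(genome_size_bp=3.1e9, coverage=30, read_length=150,
                  header_bytes=60, compression_ratio=3.0):
    """Rough (uncompressed, compressed) FASTQ size in GB for one genome.

    Per read: sequence and quality strings (one byte per base each), a header
    of header_bytes including '@', plus the '+' line and four newlines.
    """
    n_reads = genome_size_bp * coverage / read_length
    bytes_per_read = 2 * read_length + header_bytes + 5
    raw_gb = n_reads * bytes_per_read / 1e9
    return raw_gb, raw_gb / compression_ratio

raw_gb, gz_gb = fastq_size_gb()
print(f"one 30x genome: ~{raw_gb:.0f} GB raw, ~{gz_gb:.0f} GB compressed")
print(f"10,000-genome cohort: ~{10_000 * gz_gb / 1000:.0f} TB compressed")
```

Scaling the single-genome estimate to a cohort shows how quickly population studies reach hundreds of terabytes before any aligned or derived files are counted.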

Emerging Trends and the Future of NGS

Despite these challenges, the future of next generation sequence testing is bright, driven by continuous innovation:

- Long-Read Sequencing: While traditional NGS uses short reads (150-300 bp), newer technologies from companies like Pacific Biosciences (PacBio) and Oxford Nanopore Technologies (ONT) generate reads tens of thousands of base pairs long. These long reads are transformative for assembling complete genomes de novo, resolving complex, repetitive genomic regions that are invisible to short reads, and identifying large structural variants.

- Single-Cell Analysis: Applying NGS to individual cells is revolutionizing our understanding of tissue heterogeneity. Single-cell RNA sequencing (scRNA-Seq) can deconstruct a tumor into its constituent cell types (cancer cells, immune cells, fibroblasts), revealing cellular interactions, developmental trajectories, and mechanisms of drug resistance at an unprecedented resolution.

- AI and Machine Learning: Smart algorithms are being integrated across the NGS workflow. AI models like Google’s DeepVariant improve the accuracy of variant detection. Furthermore, machine learning is being used to predict the pathogenicity of variants from sequence data alone (e.g., AlphaMissense) and to integrate multi-omics data to discover novel biomarkers and drug targets.

- Multi-omics Integration: The future lies in combining genomics with other data types like transcriptomics (gene expression), proteomics (protein levels), and metabolomics (metabolite profiles) to create a holistic picture of biological systems. For example, integrating genomic risk factors with proteomic and metabolic changes in a patient can provide a much deeper understanding of disease progression than any single data type alone. The article “Modern Multiomics: Why, How, and Where to Next?” explores this in more depth.
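Read-length differences like the short-read versus long-read contrast above are usually summarized with N50: the length L such that reads (or assembled contigs) of length L or longer contain at least half of all sequenced bases. This metric is not mentioned in the original text; the sketch below computes it, and the read lengths in the example are invented.

```python
def n50(lengths):
    """Length L such that sequences of length >= L hold at least half of all bases."""
    ordered = sorted(lengths, reverse=True)
    half = sum(ordered) / 2
    running = 0
    for length in ordered:
        running += length
        if running >= half:
            return length
    return 0  # empty input

short_reads = [150] * 1000                      # hypothetical short-read run
long_reads = [15_000, 30_000, 8_000, 50_000]    # hypothetical long-read run
print("short-read N50:", n50(short_reads))
print("long-read N50:", n50(long_reads))
```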

These advances, along with more accessible benchtop sequencers and user-friendly cloud-based analysis platforms, are making NGS faster, cheaper, and more insightful, paving the way for its routine use in personalized and preventative medicine.

Frequently Asked Questions about NGS

When exploring next generation sequence testing, many organizations have similar questions about cost, time, and accuracy. Here are answers to the most common queries.

How much does an NGS test cost?

The cost of NGS has fallen dramatically, with a 96% decrease in cost-per-genome since its introduction. Today’s prices vary based on several factors:

- Test Type: Whole Genome Sequencing (WGS) is the most comprehensive and expensive option. Targeted gene panels, which examine a specific set of genes, are more budget-friendly.

- Depth of Coverage: This refers to how many times each genetic letter is read. Higher coverage provides better sensitivity for detecting rare variants but increases the cost. A 30x coverage is standard for many applications, while cancer liquid biopsies may require 1000x or more.
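Depth of coverage follows from simple arithmetic. Under the Lander-Waterman model, mean coverage is C = N × L / G for N reads of length L over a target of G bases, and in the idealized case the fraction of bases receiving no reads at all is about e^(-C). This sketch applies both formulas; the 3.1 Gb genome size, 150 bp reads, and a hypothetical 1 Mb liquid-biopsy panel are assumptions, and real runs need extra reads to offset duplicates, off-target reads, and uneven coverage.

```python
import math

def reads_needed(coverage: float, target_bp: float, read_length: int) -> int:
    """Reads needed for mean coverage C over target_bp bases: N = C * G / L."""
    return math.ceil(coverage * target_bp / read_length)

def fraction_uncovered(coverage: float) -> float:
    """Idealized Lander-Waterman (Poisson) estimate of bases with zero reads: e^(-C)."""
    return math.exp(-coverage)

wgs_reads = reads_needed(30, 3.1e9, 150)           # 30x whole genome
panel_reads = reads_needed(1000, 1_000_000, 150)   # 1000x on a hypothetical 1 Mb panel
print(f"30x WGS: {wgs_reads:,} reads of 150 bp")
print(f"1000x panel: {panel_reads:,} reads of 150 bp")
print(f"idealized fraction uncovered at 5x: {fraction_uncovered(5):.4f}")
```

The comparison shows why deep panels stay affordable: 1000x over a small target needs far fewer reads than 30x over a whole genome.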

How long does it take to get NGS results?

The turnaround time for next generation sequence testing is typically days to weeks. The timeline depends on:

- Sample Preparation: Usually 1-3 days. Challenging samples like FFPE tissue may take longer.

- Sequencing Run Time: Varies from hours for targeted panels on benchtop sequencers to several days for whole genomes on high-throughput platforms.

- Data Analysis: This is often the longest phase, taking from a few days to over a week. Automated, cloud-based analysis pipelines can significantly speed up this step.

For urgent clinical cases, some labs offer expedited results in 24-48 hours.

Is NGS more accurate than Sanger sequencing?

NGS and Sanger sequencing are complementary technologies that excel in different ways.

- Sanger sequencing is the gold standard for accuracy when reading a single DNA fragment. It is often used to confirm critical findings from an NGS test.

- Next generation sequence testing achieves high accuracy through depth of coverage. By reading each genetic position many times, it can confidently identify variants. This statistical power gives NGS a key advantage: detecting low-frequency variants.

While Sanger typically requires a variant to be present at an allele frequency of at least about 20%, NGS can detect variants at 1-5% allele frequency, and lower with deep sequencing. This sensitivity is crucial for applications like cancer monitoring. In practice, labs often use NGS for broad screening and Sanger for targeted validation, combining the strengths of both technologies.

Conclusion

Next generation sequence testing has fundamentally changed how we understand life. By sequencing millions of DNA fragments simultaneously, it has become the backbone of modern genetics and medicine. The 96% decrease in cost-per-genome has democratized genetic analysis, making it a powerful tool for researchers and clinicians worldwide.

Today, NGS is revolutionizing healthcare. It enables personalized cancer treatments, provides diagnoses for rare genetic conditions, and helps researchers uncover new drug targets. This is precision medicine in action.

The future is even more exciting. Long-read sequencing, single-cell analysis, and AI are pushing the boundaries of what’s possible. The integration of genomics with other multi-omics data is creating a more complete picture of human biology.

Of course, challenges in data management, analysis, and interpretation remain. At Lifebit, we understand these problems. Our federated AI platform helps organizations securely access, analyze, and interpret complex biomedical data at scale, breaking down the barriers that slow down groundbreaking research. Our Trusted Research Environment, Trusted Data Lakehouse, and R.E.A.L. platform components empower biopharma, governments, and public health agencies to harness the power of genomic data.

The future of next generation sequence testing is a world where genomic insights are a routine part of healthcare, enabling preventative and truly personalized medicine. This is the promise of precision medicine, powered by this remarkable technology.
