In-Depth Guide to Federated Analytics

Federated Analytics: Ultimate Guide 2025

Why Federated Analytics is Revolutionizing Data Science

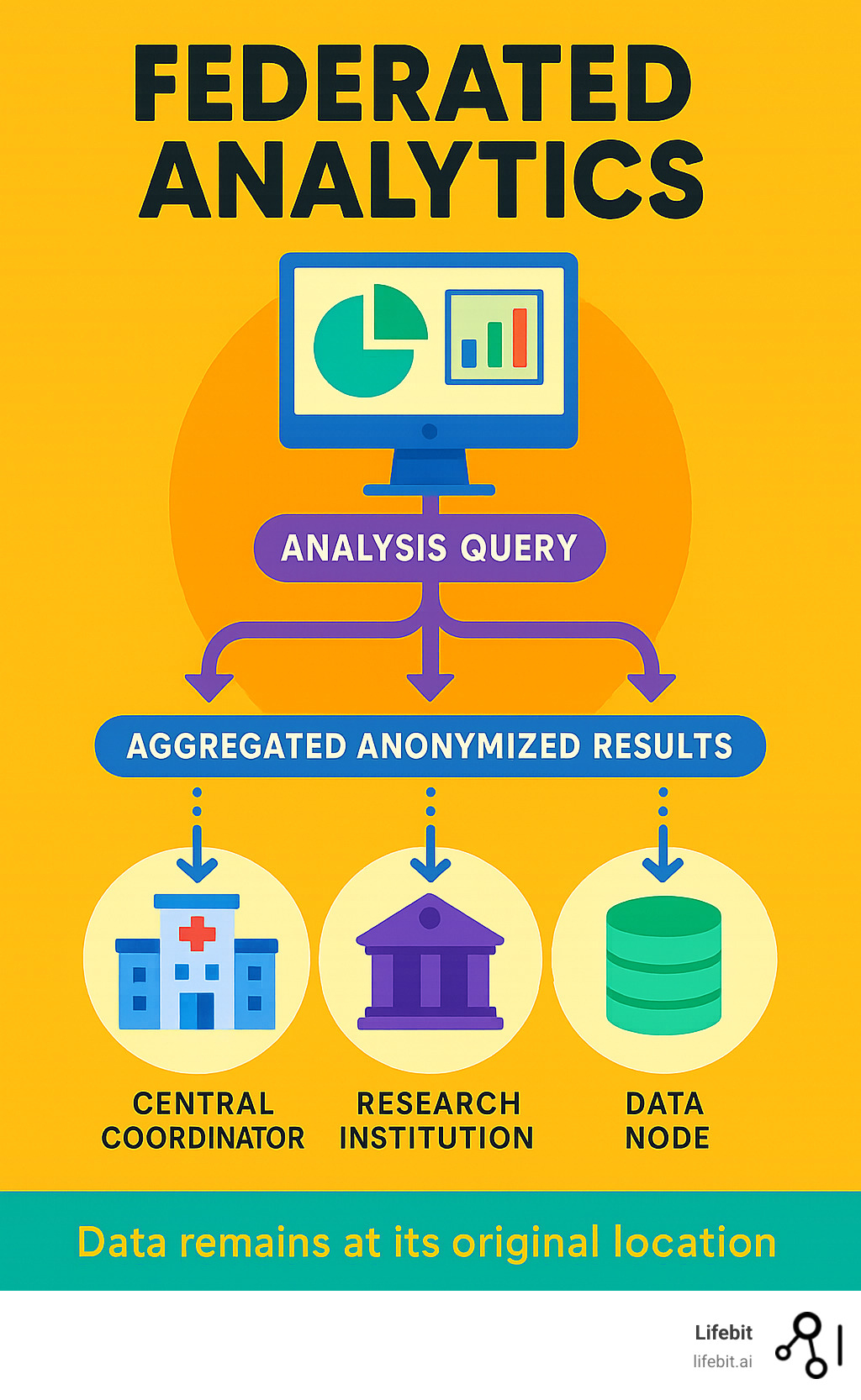

Federated analytics is an approach to analyzing data across multiple distributed sources without centralizing it. Instead of moving data, the analysis travels to the data, enabling collaboration while keeping sensitive information secure and private.

Key characteristics of federated analytics:

- Data stays put – Analysis code travels to distributed datasets, not the other way around

- Privacy-first – Only aggregated, anonymized results are shared between parties

- Collaborative – Multiple organizations can participate without exposing raw data

- Secure – Each data source maintains its own security controls and governance

- Efficient – Eliminates costly and risky data transfers between organizations

The need is urgent. The World Economic Forum estimates 97% of all hospital data goes unused, largely due to privacy concerns and regulatory restrictions. Traditional analysis, which requires centralizing data, creates significant privacy risks, regulatory hurdles, and massive infrastructure costs.

This problem is acute in healthcare, where genomic and clinical trial data are siloed by regulations like GDPR and HIPAA. Data sharing agreements alone can take six months or more to finalize, delaying critical research.

Federated analytics solves this by changing the question from “How do we move data safely?” to “How do we analyze data where it lives?” This paradigm shift is transforming fields from drug discovery to cybersecurity.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. For over 15 years, I’ve pioneered federated analytics solutions in genomics and biomedical research, focusing on making secure, collaborative analysis accessible to organizations worldwide without compromising data sovereignty.

What is Federated Analytics and How Does It Differ from Traditional Analysis?

Think of federated analytics like a postal service for data analysis. Instead of bringing everyone to a central meeting (centralized analysis), you send the agenda to each person and collect their responses. This way, you gain insights without anyone leaving their secure workspace.

This approach flips traditional analysis on its head, moving from a “data-to-code” model (pulling data to a central location) to a “code-to-data” model (sending analysis to the data). It’s like a traveling scientist who visits each lab and shares only the final results.

Each organization retains data sovereignty, controlling who can access their information and how. This privacy by design approach aligns with regulations like GDPR and HIPAA because raw data never leaves its secure environment.

To see the stark differences, let’s compare traditional and federated approaches:

| Parameter | Traditional Centralized Analytics | Federated Analytics |
|---|---|---|
| Data Movement | Raw data is collected and moved to a central repository. | Data remains in its original location; analysis code moves to data. |
| Privacy | Higher risk of privacy breaches due to data aggregation. | Improved privacy as raw data is never exposed or transferred. |
| Security | Centralized data stores are single points of failure, vulnerable to breaches. | Distributed data reduces the risk of large-scale breaches; local security maintained. |
| Data Sovereignty | Data owners relinquish direct control once data is centralized. | Data owners retain full control and governance over their data. |
| Scalability | Can be limited by the capacity and cost of central storage and compute. | Highly scalable as computation is distributed across local nodes. |
| Regulatory Compliance | Complex and challenging, requiring extensive legal agreements for data transfer. | Simplifies compliance by keeping data within jurisdictional boundaries. |
| Collaboration | Difficult due to data sharing agreements and privacy concerns. | Facilitates secure collaboration across multiple organizations. |

For organizations dealing with sensitive data, this difference is game-changing. You can learn more about the technical details in our Federated Data Analysis guide.

Core Principles and Benefits of Federated Analytics

The core principle of federated analytics is data minimization: only aggregated, anonymized results travel between organizations, while raw data stays locked down. This improves security by design. Instead of creating a single data honeypot, information remains distributed, reducing the attack surface while each organization maintains its own security controls.

This principle unlocks several key benefits:

- Increased Statistical Power: By accessing previously unreachable datasets, researchers can achieve the sample sizes needed for statistically significant findings. For example, a study on a rare genetic disorder might only have a dozen patients at a single hospital, which is not enough for a powerful analysis. By federating the analysis across 20 hospitals globally, researchers can analyze a cohort of hundreds, dramatically increasing the chances of identifying the genetic variants responsible. One study showed a 10-fold sample size increase yielded 100-fold more findings.

- Accelerated Time-to-Insight: Traditional data sharing can involve months or even years of negotiating legal agreements, followed by complex and time-consuming data transfer and ingestion processes. Federated analytics bypasses this bottleneck. Once a federated network and its governance are established, new analyses can be executed in days or hours, allowing organizations to respond to urgent questions, like those posed during a pandemic, at speed.

- Reduced Costs and Complexity: Centralizing massive datasets is incredibly expensive. It involves costs for data transfer (which can run into the millions for petabyte-scale genomic data), redundant storage, and the powerful central computing infrastructure needed to process it. Federation eliminates these transfer and central storage costs, leveraging the existing compute resources at each local data node.

- Enhanced Cross-Organizational Collaboration: Federation provides a neutral, secure framework for collaboration between competing or highly regulated entities, such as pharmaceutical companies, hospitals, or government agencies. It builds trust by ensuring no party has to relinquish control over its valuable data assets, making previously impossible collaborations feasible.

These principles are particularly powerful in genomics research, as we explore in our Federated Architecture in Genomics article.

The Challenge of Data Silos in Modern Research

The challenge of data silos is immense. An estimated 97% of all hospital data goes unused, trapped by a combination of regulatory, technical, and organizational barriers. Healthcare data fragmentation is rampant, with patient records, genomic sequences, and clinical trial results living in separate, incompatible systems. For example, The Cancer Genome Atlas Programme contains 2.5 petabytes of data, a treasure trove that is difficult to combine with other similar datasets globally.

These silos are not just a healthcare problem; they exist in every industry and manifest in several forms:

- Organizational Silos: Data is often segregated by department (e.g., R&D, marketing, sales) with different goals and little incentive to share. In a hospital setting, the radiology department’s imaging data is often completely disconnected from the pathology lab’s genomic data.

- Technical Silos: Data is stored in different formats, databases, or legacy systems that cannot communicate with one another. This lack of interoperability makes it technically difficult to combine and analyze data even within a single organization.

- Geographical and Regulatory Silos: Data is often bound by national or regional privacy laws, such as GDPR in Europe or HIPAA in the US. These regulations impose strict rules on where data can be stored and how it can be transferred, effectively creating legal boundaries that prevent centralization.

Accessing this information is hampered by regulatory hurdles and by data sharing agreements that can take over six months to finalize. This slows down research, delays patient treatments, and prevents businesses from gaining a holistic view of their operations. Our federated biomedical data platform is designed to break down these silos, enabling collaboration in days, not months, while respecting data security.

How Federated Analytics Works: Technology and Infrastructure

Imagine a conductor leading an orchestra where musicians play from their own homes. The conductor sends the sheet music (analysis code), each musician plays their part, and sends back a summary (aggregated results). This is the essence of federated analytics.

In a federated analytics architecture, a central coordinator sends a query to multiple data nodes (e.g., hospitals, research labs). Each node runs the analysis locally on its private data. The raw data never moves. Only aggregated, privacy-preserving results are sent back to the coordinator, who combines them to generate global insights.
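The coordinator-and-nodes flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `DataNode` class, the node names, and the `ages` field are all hypothetical stand-ins, and a real deployment would add authentication, transport security, and output checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class DataNode:
    """A hypothetical data node; `ages` stands in for private local records."""
    name: str
    ages: List[float]

    def run(self, query: Callable[[List[float]], Dict[str, float]]) -> Dict[str, float]:
        # The query executes locally; only its aggregate output is returned.
        return query(self.ages)


def local_sum_and_count(values: List[float]) -> Dict[str, float]:
    """Analysis code shipped to each node: returns aggregates, never rows."""
    return {"sum": float(sum(values)), "count": float(len(values))}


def federated_mean(nodes: List[DataNode]) -> float:
    """Coordinator: send the query to every node, then combine the aggregates."""
    partials = [node.run(local_sum_and_count) for node in nodes]
    total = sum(p["sum"] for p in partials)
    count = sum(p["count"] for p in partials)
    return total / count


nodes = [
    DataNode("hospital_a", [34, 51, 47]),
    DataNode("hospital_b", [62, 58]),
    DataNode("lab_c", [29, 41, 39, 55]),
]
print(federated_mean(nodes))  # global mean computed from per-node sums and counts
```

Each node returns a sum and a count rather than a local mean, so the coordinator can compute the exact global mean even when the nodes hold different numbers of records.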

Key Technological Components for Implementing Federated Analytics

A robust federated analytics system is a sophisticated ecosystem built on several key technological pillars:

- Trusted Research Environments (TREs): These are highly secure computing environments that act as the local data nodes in a federated network. Data is placed within the TRE, and researchers are given access to analyze it within that secure space, but they cannot move it. The TRE ensures that data never leaves its protective walls, making it the foundational building block for secure, federated analysis.

- Secure Aggregation Protocols: These are cryptographic techniques that allow the central coordinator to compute a collective result (like a sum or average) from the individual results of each node, without being able to see any individual node’s contribution. Techniques like Secure Multi-Party Computation (SMPC) enable multiple parties to jointly compute a function over their inputs while keeping those inputs private. This adds a powerful mathematical guarantee of privacy to the architectural separation of data.

- Data Standards and Common Data Models (CDMs): For a federated query to work, it must be able to run successfully on diverse, heterogeneous datasets. Data standards and CDMs, like the Observational Medical Outcomes Partnership (OMOP) model in healthcare, provide a solution. They harmonize disparate datasets into a common format and terminology. This ensures that a query like “find the average age of patients with type 2 diabetes” means the same thing and can be executed correctly at every node in the network.

- APIs (Application Programming Interfaces): APIs act as the contractual agreement for communication within the federated network. They define the precise protocols for how the central coordinator sends analysis queries and how the local nodes return results. Well-defined APIs ensure that different systems, potentially built by different vendors, can interoperate seamlessly and securely within the federation.

- Workflow Managers: Real-world data analysis is rarely a single query. It often involves complex, multi-step pipelines. Workflow managers (like Nextflow or Cromwell) orchestrate these entire processes, managing the distribution of each step of the analysis, collecting intermediate results, and ensuring the overall analytical pipeline runs efficiently and correctly across the distributed nodes.

- Confidential Computing & Trusted Execution Environments (TEEs): This represents the cutting edge of security. TEEs are hardware-based secure enclaves (like Intel SGX) that isolate code and data during processing. In a federated context, this means the analysis code can run in a protected environment on the local node, encrypted and invisible even to the node’s owner. This provides an unprecedented level of assurance that the data is only being used for the approved computation.
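To make the secure aggregation idea from the list above more concrete, here is a toy sketch of additive masking: each pair of nodes shares a random mask that one adds and the other subtracts, so the masks cancel in the sum while each individual upload looks random. The node names, the modulus, and the seeded random generator are assumptions for illustration; real protocols derive the shared masks through key exchange and handle node dropouts.

```python
import random
from typing import Dict, List

MODULUS = 2**61 - 1  # arithmetic is done modulo a large number (an assumption)


def pairwise_masks(node_ids: List[str], seed: int) -> Dict[str, int]:
    """Give each node a mask such that all masks sum to zero modulo MODULUS.

    A seeded RNG stands in for the pairwise key agreement a real protocol uses.
    """
    rng = random.Random(seed)
    masks = {node_id: 0 for node_id in node_ids}
    for i, a in enumerate(node_ids):
        for b in node_ids[i + 1:]:
            shared = rng.randrange(MODULUS)
            masks[a] = (masks[a] + shared) % MODULUS  # a adds the shared mask
            masks[b] = (masks[b] - shared) % MODULUS  # b subtracts the same mask
    return masks


def masked_upload(value: int, mask: int) -> int:
    """What a node sends: its local value hidden behind its mask."""
    return (value + mask) % MODULUS


def aggregate(uploads: List[int]) -> int:
    """Coordinator: sum the masked uploads; the masks cancel out."""
    return sum(uploads) % MODULUS


local_counts = {"node_a": 120, "node_b": 75, "node_c": 310}
masks = pairwise_masks(list(local_counts), seed=42)
uploads = [masked_upload(v, masks[n]) for n, v in local_counts.items()]
print(aggregate(uploads))  # 505
```

The coordinator recovers the total (505) but only ever sees masked values, which is the property the secure aggregation bullet describes.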

For more detailed information on how we build and use these secure environments, explore our resources on Federated Trusted Research Environments.

Federated Analytics vs. Federated Learning: What’s the Difference?

Though often confused, federated analytics and federated learning are distinct but related concepts. Both follow the “code-to-data” principle, keeping raw data local, but their goals differ.

- Federated Learning focuses on collaboratively training a shared machine learning model. The process involves sending a model to each data node, where it learns from the local data. Instead of sending data back, each node sends updated model parameters (weights and biases) to the central server, which aggregates them to improve the shared model. The goal is to produce a single, highly accurate predictive model.

- Federated Analytics focuses on statistical analysis and business intelligence. It is used to compute aggregate statistics (such as counts, sums, averages, or frequency histograms) from distributed data. The goal is not to train a model, but to generate descriptive insights and answer specific questions about the collective dataset. For example, federated analytics might answer, “What is the average age of patients with a specific biomarker across 10 hospitals?”

In short, federated learning builds smarter predictive models, while federated analytics reveals descriptive data patterns. The two are often complementary; for instance, federated analytics could be used first to understand a dataset’s characteristics before federated learning is used to train a predictive model on it.
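The contrast shows up clearly in what the coordinator aggregates. The sketch below is illustrative only: `federated_histogram` merges per-node counts (an analytics result), while `federated_average` combines per-node model weights, weighted by local sample count, in the style of federated averaging. The function names and example values are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def federated_histogram(local_histograms: List[Dict[str, int]]) -> Dict[str, int]:
    """Federated analytics: merge per-node counts into one global histogram."""
    merged: Counter = Counter()
    for hist in local_histograms:
        merged.update(hist)
    return dict(merged)


def federated_average(updates: List[Tuple[List[float], int]]) -> List[float]:
    """Federated learning: average model weights, weighted by local sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Analytics: how often does each (hypothetical) diagnosis code occur overall?
print(federated_histogram([{"A": 3, "B": 1}, {"A": 2, "C": 4}]))

# Learning: combine weight vectors from two nodes with 10 and 30 samples.
print(federated_average([([1.0, 2.0], 10), ([3.0, 4.0], 30)]))  # [2.5, 3.5]
```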

The Role of Trusted Research Environments (TREs)

Trusted Research Environments (TREs) are the secure foundations for federated analytics, especially when dealing with sensitive data. A TRE is a secure computing system where approved researchers can remotely access and analyze sensitive data. They are designed around the internationally recognized “Five Safes” framework to ensure data is used responsibly:

- Safe People: Researchers are trained, vetted, and approved, ensuring they are trustworthy and have the skills to handle sensitive data.

- Safe Projects: Every analysis proposal is reviewed by a Data Access Committee to ensure it is ethical, in the public interest, and scientifically valid.

- Safe Settings: The TRE itself is a robust, secure technical environment with strong access controls and auditing to prevent unauthorized access or data leakage.

- Safe Data: Data is de-identified or anonymized to the greatest extent possible before being made available for analysis, minimizing privacy risks.

- Safe Outputs: All results are checked before they can be removed from the TRE. This “airlock process” ensures that no sensitive or disclosive information leaves the environment, allowing only aggregated, non-identifiable results to be exported.
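One common ingredient of a Safe Outputs check is small-count suppression: any cell in a results table that covers too few people is withheld. The sketch below assumes a threshold of 5, which is a hypothetical value; each TRE sets its own rules, and real airlock reviews typically combine automated checks like this with human review.

```python
from typing import Dict, Optional

MIN_CELL_COUNT = 5  # hypothetical disclosure threshold; real TREs set their own


def airlock_check(
    counts: Dict[str, int], threshold: int = MIN_CELL_COUNT
) -> Dict[str, Optional[int]]:
    """Return the table with any cell below the threshold suppressed (None)."""
    return {
        group: (count if count >= threshold else None)
        for group, count in counts.items()
    }


results = {"age_40_49": 120, "age_50_59": 87, "age_90_plus": 3}
print(airlock_check(results))
# {'age_40_49': 120, 'age_50_59': 87, 'age_90_plus': None}
```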

The historical challenge was analyzing data across multiple TREs. Federation solves this by creating a network of TREs that can collaborate without centralizing data. Initiatives like DARE UK are pioneering this cross-TRE collaboration, proving its feasibility and security at a national scale.

Learn more about our comprehensive approach at Federated Trusted Research Environment.

Real-World Applications and Use Cases

The beauty of federated analytics lies in how it’s already changing industries across the globe, from life-saving medical research to the apps we use every day. Let’s explore how this technology is making a real difference in the world.

Healthcare and Genomics

Healthcare is a prime field for federated analytics, where it addresses massive data volumes and strict privacy rules.

- COVID-19 Research: During the pandemic, federation enabled international consortiums to rapidly analyze clinical and genomic data from patients across continents. This allowed researchers to identify genetic risk factors for severe disease in near real-time, without the delays of traditional data sharing agreements.

- Rare Diseases: For conditions affecting few people, no single hospital has enough data for meaningful research. Federation allows researchers to virtually combine patient cohorts from across the globe. This provides the statistical power needed to identify rare genetic markers, understand disease progression, and discover potential therapeutic targets.

- Population Genomics: Large-scale projects like the Genomics England 100,000 Genomes Project use federated methods to run genome-wide association studies (GWAS) on diverse populations. A GWAS scans entire genomes to find variants associated with a disease. Federation is critical for this work, as it allows for the inclusion of diverse ancestral populations, improving the equity and accuracy of findings. The Global Alliance for Genomics and Health (GA4GH) promotes standards to enable this global collaboration.

- Real-World Evidence (RWE) Analysis: Pharmaceutical companies need to understand how their drugs perform in the real world, outside of controlled clinical trials. Federated analytics allows them to analyze real-world data (RWD) directly from hospital electronic health record systems. This helps them monitor drug safety (pharmacovigilance) and effectiveness across diverse patient populations without ever accessing sensitive patient records.

Our work in Federated Technology in Population Genomics shows how we’re making these critical analyses accessible to researchers worldwide.

Cybersecurity

In the world of cybersecurity, threats are often distributed and subtle. Federated analytics helps security teams get a unified view of threats across scattered environments (cloud, on-prem, hybrid) without the risk and cost of centralizing all security logs. Instead of moving terabytes of log data to a central SIEM (Security Information and Event Management) system, analysts can run federated queries against data where it resides. This helps them detect sophisticated, low-and-slow attacks or Advanced Persistent Threats (APTs) that might not trigger alerts in a single environment but show a clear pattern when viewed collectively. Standards like the Open Cybersecurity Schema Framework (OCSF) provide a common language for security data, making these federated queries more powerful and effective.
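As a rough illustration of the low-and-slow case, the sketch below has each environment count failed logins per source address locally, then lets the coordinator flag addresses that stay under every local alert threshold but stand out once the counts are combined. The field names (`src_ip`, `outcome`), the thresholds, and the example addresses are assumptions, not OCSF attribute names.

```python
from collections import Counter
from typing import Dict, List

LOCAL_ALERT_THRESHOLD = 10   # hypothetical per-environment alert threshold
GLOBAL_ALERT_THRESHOLD = 15  # hypothetical threshold on the combined count


def local_failed_logins(events: List[dict]) -> Dict[str, int]:
    """Runs inside one environment: count failed logins per source address."""
    counts = Counter(e["src_ip"] for e in events if e["outcome"] == "failure")
    return dict(counts)


def low_and_slow(per_env: Dict[str, Dict[str, int]]) -> List[str]:
    """Coordinator: flag sources that look quiet locally but not in aggregate."""
    totals: Counter = Counter()
    for counts in per_env.values():
        totals.update(counts)
    return sorted(
        ip
        for ip, total in totals.items()
        if total >= GLOBAL_ALERT_THRESHOLD
        and all(c.get(ip, 0) < LOCAL_ALERT_THRESHOLD for c in per_env.values())
    )


per_env = {
    "cloud": {"203.0.113.7": 6, "198.51.100.2": 2},
    "on_prem": {"203.0.113.7": 7},
    "hybrid": {"203.0.113.7": 5, "198.51.100.9": 12},
}
print(low_and_slow(per_env))  # ['203.0.113.7']
```

The address with 12 failures in a single environment would already trip a local alert, so the federated query adds value specifically for the source that spreads 18 failures across three environments.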

Finance and Insurance

Financial institutions operate under strict regulations and fierce competition, making data sharing nearly impossible. Federated analytics provides a breakthrough solution for collaboration on common challenges.

- Collaborative Fraud Detection: Individual banks only see a small piece of a complex fraud scheme. By using federated analytics, a consortium of banks can share insights on fraudulent transaction patterns without revealing any customer data. A query could identify a new type of money laundering typology that is only visible when analyzing transactions across multiple institutions, allowing all members to update their detection models simultaneously.

- Credit Risk Modeling: A global bank can use federated analytics to build more robust credit risk models by analyzing lending data from different countries. This allows them to incorporate regional economic trends and borrower behaviors without violating data residency laws like GDPR, leading to more accurate risk assessments and fairer lending decisions.

Consumer Technology and Beyond

You likely use federated analytics daily. It powers features on your smartphone while keeping your data private.

- Keyboard Predictions: Your phone’s keyboard learns your personal slang and typing style locally on your device to suggest the next word. It contributes anonymized insights about new words or phrases to a central server, which are aggregated with insights from millions of other users to improve the overall prediction model for everyone, all without your private messages ever leaving your phone.

- Song Recognition: Your device can identify a song by processing a snippet of audio locally. If it’s a new or unrecognized song, it might contribute an anonymized feature print of the audio to a global database, helping to improve the recognition service for all users. This method improved recognition accuracy by 5% across devices.

- Smart Replies: Messaging apps learn from your common responses on-device to provide relevant, one-tap reply suggestions. These learnings can be aggregated in a privacy-preserving way to improve the smart reply feature for all users without the app’s developer ever reading your private conversations.

These examples show we no longer have to choose between useful features and privacy.

Challenges, Governance, and the Future of Federation

While federated analytics opens incredible doors for data collaboration, it’s not a magic bullet. Like any groundbreaking technology, it comes with its own set of challenges that we need to tackle to truly harness its potential.

Overcoming Challenges and Limitations

Key challenges in implementing federated analytics include:

- Data Heterogeneity: Data from different sources often has varying formats, schemas, and quality. Harmonizing this data to a Common Data Model (like OMOP) is a crucial prerequisite for federation, but it can be a significant undertaking requiring substantial data engineering and domain expertise at each participating site.

- Communication Latency: In a federated system, every query must travel across a network to each node, and the results must travel back. This introduces communication overhead. While this is manageable for batch analyses that run overnight, it can be a challenge for interactive, real-time queries where analysts expect immediate responses.

- Result Accuracy and Bias: Working only with aggregated data risks losing important nuances. More importantly, data across nodes is often not independent and identically distributed (non-IID); for example, one hospital’s patient population may be much older than another’s. In that case, simple pooling or averaging can give statistically biased or misleading results, and in extreme cases a trend seen at every site can reverse in the combined data (Simpson’s paradox). Advanced federated algorithms are needed to account for and correct this heterogeneity.

- Computational Overhead at the Edge: While federation removes the need for a massive central compute cluster, it requires each participating organization to have adequate local computing resources to run analyses. This can be a barrier for smaller institutions or those with limited IT infrastructure and personnel.

- Lack of Standardization: While standards for data models and communication are emerging, universal frameworks for federated governance, security protocols, and legal agreements are still evolving. This can make setting up a new federation a complex, bespoke process.
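The aggregation bias mentioned under Result Accuracy and Bias is easy to reproduce. In the sketch below, two hypothetical sites see very different case mixes; treatment A has the higher success rate at each site, yet treatment B looks better once the counts are naively pooled. The numbers are illustrative only.

```python
from typing import Dict, Tuple

# (successes, patients) per site and treatment; illustrative numbers only
site_results: Dict[str, Dict[str, Tuple[int, int]]] = {
    "site_mild_cases": {"A": (81, 87), "B": (234, 270)},
    "site_severe_cases": {"A": (192, 263), "B": (55, 80)},
}


def site_rate(site: str, treatment: str) -> float:
    """Success rate for one treatment at one site."""
    successes, patients = site_results[site][treatment]
    return successes / patients


def pooled_rate(treatment: str) -> float:
    """Naive pooling: add up successes and patients across all sites."""
    successes = sum(r[treatment][0] for r in site_results.values())
    patients = sum(r[treatment][1] for r in site_results.values())
    return successes / patients


for site in site_results:
    print(site, round(site_rate(site, "A"), 3), round(site_rate(site, "B"), 3))
print("pooled", round(pooled_rate("A"), 3), round(pooled_rate("B"), 3))
```

Here A wins at both sites but B wins in the pooled figures, because B was used mostly on mild cases. A federated analysis that stratifies or adjusts by site avoids drawing the wrong conclusion.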

Despite these problems, the field is rapidly evolving. Our research on Privacy Preserving Statistical Data Analysis on Federated Databases directly addresses many of these complex challenges.

Governance Frameworks and Ethical Considerations

Effective governance is built on trust and is arguably more critical than the technology itself. A strong governance framework is what makes a federation sustainable. Key pillars include:

- Data Access Policies: A central body, often a Data Access Committee (DAC), must establish and enforce clear, transparent rules defining who can query the data, what types of analyses are permitted, and under what conditions. This ensures data is used for its intended purpose.

- Ethical Oversight: For research involving human data, an independent ethics committee must review all project proposals to ensure they are ethically sound, protect participant privacy, and have a clear potential for public benefit.

- Transparency and Accountability: Every query and computation within the federation must be logged in a secure, immutable audit trail. This transparency allows all participants to verify that data is being used appropriately and provides a clear record for accountability. Some future systems may even use blockchain for this purpose.

- Benefit Sharing: Frameworks must be established to ensure that all contributors to the federation benefit from the findings. This includes fair arrangements for intellectual property, publication authorship, and ensuring that insights are shared back with the patient and public communities who provided the data.
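For the audit trail described under Transparency and Accountability, one simple way to make tampering detectable is to chain log entries by hash, so that changing an earlier record invalidates every later one. This is a minimal sketch with hypothetical record fields; it does not cover signing, secure storage, or replication across participants.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder hash for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append(log: List[dict], record: dict) -> None:
    """Add a record, linking it to the entry before it."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log: List[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


audit_log: List[dict] = []
append(audit_log, {"user": "researcher_1", "query": "count by age band"})
append(audit_log, {"user": "researcher_2", "query": "mean biomarker level"})
print(verify(audit_log))  # True
```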

These governance considerations are the bedrock that makes federated analytics sustainable and trustworthy. Our work on Federated Data Governance explores these critical frameworks in detail.

The Next Frontier: Confidential Federated Analytics

The next frontier is confidential federated analytics, which combines the distributed nature of federation with the hardware-level security of confidential computing and Trusted Execution Environments (TEEs). TEEs are secure enclaves within a processor (like Intel SGX or AMD SEV) that keep data and code confidential and tamper-resistant, even from the host system’s administrator. This provides two transformative guarantees:

- Protection in Use: Data remains encrypted and isolated even while it is being processed. This protects against malicious insiders at the data location or compromised infrastructure.

- Verifiability through Attestation: TEEs support a process called remote attestation, where the central coordinator can cryptographically verify that the correct, unaltered analysis code is running inside the secure enclave before sending any queries. This provides hardware-backed cryptographic evidence that the data will only be used for the approved computation.
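The core check behind attestation can be illustrated with a simplified stand-in: the coordinator holds the expected measurement (a hash) of the approved analysis code and only proceeds if the node reports the same value. This sketch deliberately omits what makes real attestation trustworthy, namely a measurement produced and signed by the hardware; the function names and the example query are hypothetical.

```python
import hashlib
import hmac

APPROVED_ANALYSIS = b"SELECT COUNT(*) FROM patients WHERE biomarker = 'X'"


def measure(code: bytes) -> str:
    """Stand-in for an enclave measurement: a SHA-256 digest of the code."""
    return hashlib.sha256(code).hexdigest()


def coordinator_accepts(reported: str, expected: str) -> bool:
    """Release queries only if the reported measurement matches the approved one."""
    return hmac.compare_digest(reported, expected)


expected = measure(APPROVED_ANALYSIS)
print(coordinator_accepts(measure(APPROVED_ANALYSIS), expected))              # True
print(coordinator_accepts(measure(APPROVED_ANALYSIS + b" -- x"), expected))   # False
```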

This combination offers some of the strongest privacy guarantees available today, protecting data at rest, in transit, and now in use. This technical advancement, detailed in this Technical whitepaper on confidential federated analytics, represents the future of secure data collaboration that does not depend on trusting any single party.

Frequently Asked Questions about Federated Analytics

Here are answers to some of the most common questions about adopting federated analytics.

How does federated analytics ensure data privacy?

Privacy is ensured through a multi-layered defense-in-depth strategy:

- Data Stays Local: The foundational principle is that raw, individual-level data never leaves its secure source environment. Only the analysis code travels to the data, not the other way around.

- Aggregated Results: Only summary insights (e.g., counts, averages, model parameters) that are mathematically aggregated and do not contain individual-level information are shared between the participating nodes.

- Privacy-Enhancing Technologies (PETs): Advanced cryptographic and statistical methods add further layers of protection. Secure aggregation allows collective results to be computed without any party, including the central coordinator, seeing individual contributions. Differential privacy adds calibrated statistical noise to the aggregated results, which mathematically bounds how much any single individual’s data can influence the output, and therefore how much can be inferred about that individual from it.
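As a small illustration of the differential privacy layer, the sketch below applies the Laplace mechanism to a count. It assumes a counting query (sensitivity 1) and a hypothetical privacy budget `epsilon`; production systems should rely on a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only so the example is repeatable
print(dp_count(true_count=100, epsilon=1.0, rng=rng))
```

A smaller `epsilon` means more noise and stronger privacy; a larger one means more accurate results and weaker privacy.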

These battle-tested techniques, often used in combination, make federated analytics suitable for the most sensitive healthcare and genomic data.

Is federated analytics slower than traditional analytics?

This question requires a nuanced answer. A single federated query might have higher latency than a query on a centralized database due to network communication. However, the overall time-to-insight is often dramatically faster. Traditional methods require months or even years of legal negotiations and massive data transfers before analysis can even begin. Federated analytics bypasses these delays, allowing research to start in days. Furthermore, for computationally intensive tasks, federation leverages the distributed computing power of all participating nodes, effectively creating a parallel supercomputer. This can make large-scale analyses significantly faster than running them on a single, overloaded central server.

What is required to set up a federated analytics system?

Setting up a federated system requires a combination of technology, governance, and people:

- Technology: This includes a central coordinating server to manage queries, standardized software deployed at each data node, and agreed-upon data models (e.g., OMOP) and APIs to ensure technical interoperability.

- Governance: A clear and robust governance framework is the most critical component. This is a legal and ethical layer that includes agreements on data access rights, ethical oversight procedures, publication policies, and benefit sharing. This framework builds the trust necessary for successful and sustainable collaboration.

- People: Successful federation requires buy-in and participation from various stakeholders, including IT staff at each node to maintain the system, data stewards to ensure data quality, and a central committee to oversee governance.

Once these components are in place, running subsequent analyses becomes remarkably straightforward.

How does federated analytics handle data quality issues?

Data quality is a shared responsibility in a federated network. The principle is “garbage in, garbage out.” Federated analytics itself does not automatically clean data. However, it provides the tools to assess and monitor data quality across the network. For example, federated queries can be run to generate data quality reports, check for missing values, or identify outliers across all participating sites without moving the raw data. This allows the central coordinator to identify quality issues, but the responsibility for remediating the data ultimately lies with the local data owner at each node.
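A federated data quality check might look like the sketch below: each site computes its own row count and missing-value counts, and the coordinator only sees those summaries. The field names, site names, and the 20% threshold are hypothetical.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[float]]


def local_quality_report(records: List[Record], fields: List[str]) -> Dict[str, int]:
    """Runs at each site: row count plus missing-value count per field."""
    report = {"rows": len(records)}
    for field in fields:
        report[f"missing_{field}"] = sum(1 for r in records if r.get(field) is None)
    return report


def flag_sites(
    reports: Dict[str, Dict[str, int]], field: str, max_missing_rate: float
) -> List[str]:
    """Coordinator: list sites whose missing rate for a field is too high."""
    return sorted(
        site
        for site, rep in reports.items()
        if rep["rows"] and rep[f"missing_{field}"] / rep["rows"] > max_missing_rate
    )


site_a = [{"age": 54.0}, {"age": None}, {"age": 61.0}, {"age": 47.0}]
site_b = [{"age": 38.0}, {"age": 72.0}]
reports = {
    "site_a": local_quality_report(site_a, ["age"]),
    "site_b": local_quality_report(site_b, ["age"]),
}
print(flag_sites(reports, "age", max_missing_rate=0.2))  # ['site_a']
```

The coordinator learns that site_a has a gap to fix, but fixing it remains the job of the local data owner.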

Can federated analytics be used for real-time analysis?

It depends on the definition of “real-time.” While federated analytics is generally not suited for applications requiring sub-second responses due to network latency, it can support near-real-time use cases. For example, a federated query to monitor for a security threat or a disease outbreak across a network of hospitals could be run every few minutes or hourly. This is much faster than traditional methods and can be considered “operational” or “near-real-time.” The feasibility depends on the complexity of the query, the size of the network, and the performance of the communication channels.

Conclusion: A New Paradigm for Collaborative, Secure Data Science

Federated analytics is reshaping collaborative research by shifting the paradigm from collecting data to analyzing it where it lives. This is more than a technical upgrade; it’s a reimagining of data science.

By addressing the challenge that 97% of hospital data goes unused, federated analytics creates a vital bridge between data protection and scientific progress, turning missed opportunities into potential breakthroughs.

The benefits are clear: privacy-first analysis, improved collaboration, accelerated discovery, and greater operational efficiency. It eliminates lengthy data-sharing negotiations and costly data transfers, allowing researchers to focus on insights, not logistics.

From COVID-19 research to rare disease studies, the applications show that federated analytics is already delivering real-world impact.

The future is federated. As data volumes and privacy regulations grow, analyzing distributed data will become essential. Emerging technologies like confidential computing will provide even greater security and verifiability.

At Lifebit, we’re not just observing this change; we’re actively driving it. Our next-generation federated AI platform enables secure, real-time access to global biomedical and multi-omic data, with built-in capabilities for harmonization, advanced AI/ML analytics, and federated governance. We power large-scale, compliant research and pharmacovigilance across biopharma, governments, and public health agencies because we believe that data’s potential should be unlocked safely and responsibly.

The future of data science is federated, and it’s already here. We’re helping organizations harness their distributed data while upholding the highest privacy standards.

Ready to explore how federated analytics can transform your research? Learn more about Lifebit’s approach to Federation and discover how we make secure data collaboration a reality.