AI and Drug Safety – A Match Made in Pharma Heaven

Why Drug Safety AI is Changing Modern Pharmacovigilance

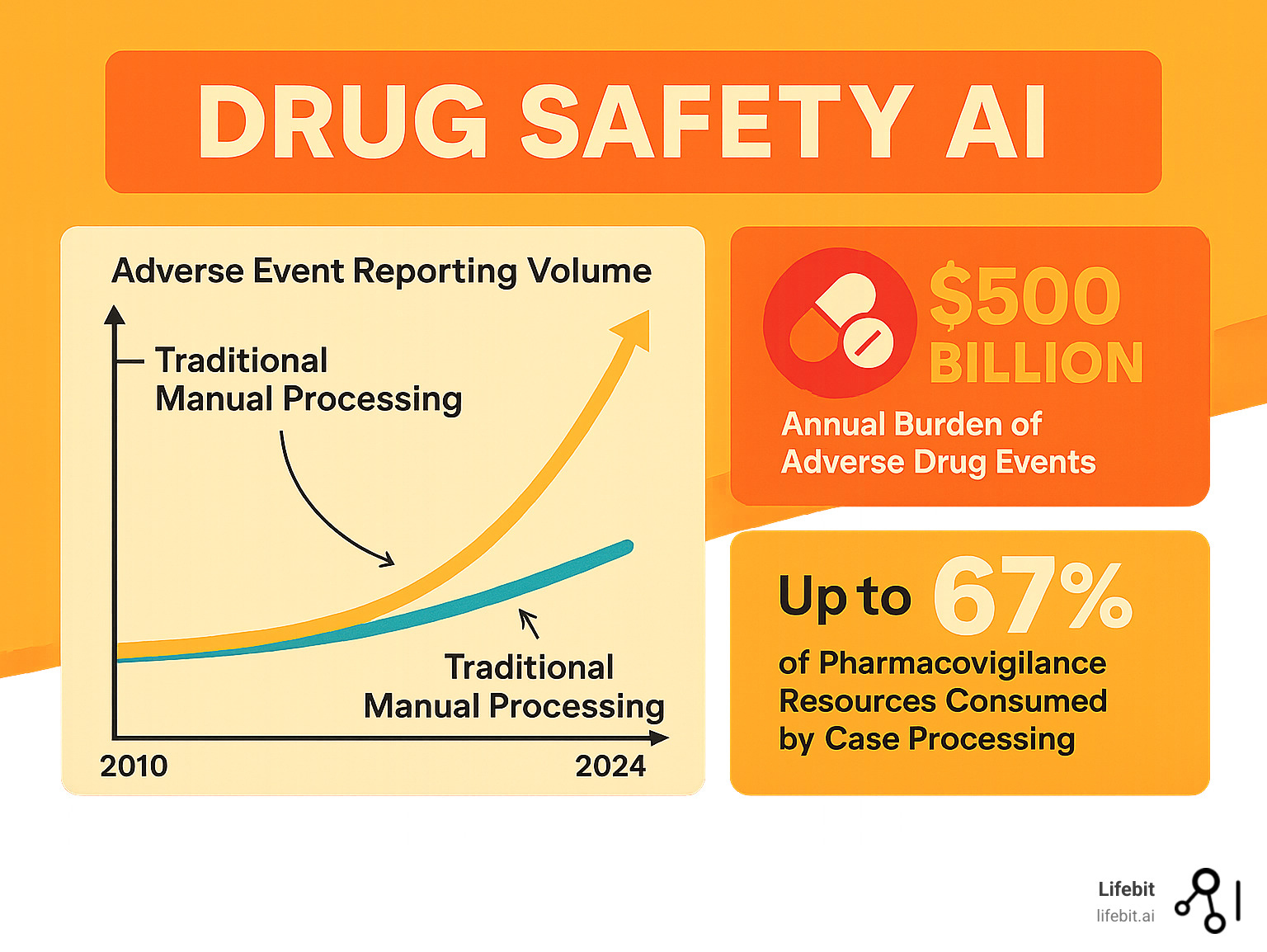

Drug safety AI is revolutionizing how pharmaceutical companies and regulators monitor adverse drug reactions. With adverse event volumes growing 20% annually and underreporting rates as high as 94%, traditional manual methods can no longer handle the scale of modern drug safety surveillance.

Drug safety AI automates case intake 10x faster than manual review, detects safety signals months earlier, and integrates diverse data sources like spontaneous reports, EHRs, and social media. This is critical: case processing consumes up to two-thirds of pharmacovigilance resources, and adverse drug events cost over $500 billion annually and rank as the fourth leading cause of death in the United States.

Current pharmacovigilance systems miss critical signals due to manual bottlenecks and data fragmentation. Regulatory agencies like the FDA are now actively seeking AI solutions through programs like the Emerging Drug Safety Technology Program (EDSTP), and early adopters report significant efficiency gains.

I’m Maria Chatzou Dunford, CEO of Lifebit. I’ve spent over 15 years developing AI and genomics platforms for secure, federated analysis of biomedical data. My work focuses on how drug safety AI can transform pharmacovigilance while upholding the highest standards of regulatory compliance and data privacy.

The Ticking Clock: Why Pharmacovigilance Is at a Breaking Point

Every day, thousands of adverse event reports flood pharmacovigilance teams. The volume grows by about 20% annually, but the teams processing them do not. This isn’t just an operational challenge; it’s a direct threat to patient safety. Adverse drug reactions are the fourth leading cause of death in the U.S. and cost the healthcare system over $500 billion annually.

Modern medicine’s complexity, with patients often taking multiple medications (polypharmacy), creates a web of potential interactions that is nearly impossible to track manually. Individual responses to drugs vary based on genetics, lifestyle, and health conditions, making the puzzle even harder to solve with traditional methods. This is why drug safety AI has become essential.

The Challenge of Manual Processing

Case processing consumes up to two-thirds of most pharmacovigilance teams’ resources. This means skilled drug safety experts spend most of their time on data entry and administrative tasks instead of analyzing complex safety signals. This repetitive work not only wastes expertise but also introduces human error. While experts are buried in paperwork, potential safety signals sit undetected in a growing backlog, delaying the identification of critical patterns that affect patient safety.

The Hidden Dangers of Underreporting and Data Silos

A shocking statistic reveals a median underreporting rate of 94% in spontaneous reporting systems. For every 100 adverse events, only about six are reported. The data we do have is scattered across disparate systems like the FDA’s FAERS database and the WHO’s VigiBase, as well as electronic health records, claims data, and social media. This fragmentation means we often detect safety signals months or years after they first appear. Real-world data from EHRs and wearables holds immense promise, but without AI to integrate and analyze these sources, its value remains largely untapped.

Changing the Pharmacovigilance Lifecycle with Drug Safety AI

Drug safety AI offers a step-by-step approach to improving pharmacovigilance, starting with targeted solutions that deliver immediate value. This pragmatic roadmap leads to end-to-end augmentation of safety processes while maintaining strict standards for regulatory compliance. The goal is not to replace human expertise but to amplify it by removing the tedious tasks that keep experts from critical analysis.

Today’s Wins: Automating the Front Lines

The biggest wins from drug safety AI are currently at the beginning of the pharmacovigilance process, where data first enters the system. These automations deliver immediate ROI by reducing manual labor and accelerating case processing times.

- Case intake and triage: AI can rapidly sort through a deluge of adverse event reports from emails, faxes, and call center notes. It automatically identifies the four minimum criteria for a valid report (patient, reporter, drug, event) and flags serious cases for immediate human review based on regulatory definitions. Pfizer’s early pilots, launched in 2014, proved that AI could reliably handle these crucial decisions, achieving high accuracy and freeing up human experts to manage ambiguous or complex cases.

- Deduplication: In a global system, the same adverse event may be reported by a patient, their doctor, and a hospital, creating multiple records. The WHO-Uppsala Monitoring Centre’s vigiMatch algorithm uses sophisticated probabilistic matching to process about 50 million report pairs per second, identifying not just exact duplicates but also near-duplicates with minor variations—a task far beyond human capability that is essential for data integrity.

- Case validity and seriousness assessment: AI applies consistent logic based on ICH E2B guidelines across thousands of reports to determine if an event is serious (e.g., resulting in death, hospitalization, or disability). This reduces the significant inter-reviewer variability common in manual assessment, leading to more consistent and reliable safety data.

- Data entry automation: This is one of the most impactful applications. Natural language processing (NLP) models read unstructured narratives from reports and automatically extract and code key information—such as medical terms, dates, dosages, and lab values—into structured fields compliant with safety database formats like E2B(R3). This can reduce the time for a single case from hours to minutes, dramatically shrinking backlogs and accelerating the flow of information into analytical systems.
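To make the intake and triage steps above concrete, here is a minimal sketch in Python. It assumes a hypothetical `IntakeReport` record with made-up field names, checks the four minimum criteria, and routes serious cases to human review. The seriousness set is an illustrative subset of the outcomes named above, not a complete regulatory definition.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative subset of seriousness outcomes (assumption, not a full definition).
SERIOUS_OUTCOMES = {"death", "hospitalization", "disability"}


@dataclass
class IntakeReport:
    """Hypothetical intake record; field names are assumptions."""
    patient: Optional[str] = None
    reporter: Optional[str] = None
    drug: Optional[str] = None
    event: Optional[str] = None
    outcome: Optional[str] = None


def missing_minimum_criteria(report: IntakeReport) -> list[str]:
    """Return which of the four minimum criteria are absent."""
    required = ("patient", "reporter", "drug", "event")
    return [name for name in required if not getattr(report, name)]


def triage(report: IntakeReport) -> str:
    """Route a report as 'invalid', 'serious' (human review), or 'routine'."""
    if missing_minimum_criteria(report):
        return "invalid"
    if (report.outcome or "").lower() in SERIOUS_OUTCOMES:
        return "serious"
    return "routine"


report = IntakeReport("P-001", "physician", "amoxicillin", "rash", "Hospitalization")
print(triage(report))
```

In practice the fields would be filled by an NLP extraction step rather than typed in by hand; the routing logic is the part that stays rule-based and auditable.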

Tomorrow’s Frontiers: Augmenting Expert Decisions

The future of drug safety AI lies in augmenting human expertise, where AI acts as a tireless research assistant with superhuman pattern recognition, enabling deeper and more proactive safety analysis.

- Medical review: Instead of just presenting a flat report, AI can create a dynamic patient timeline, visualizing the sequence of drug administration, adverse events, lab results, and comorbidities. It can summarize complex cases, highlight potential drug-drug interactions from a patient’s medication history, and flag suspicious timelines for medical reviewers, allowing them to grasp the full clinical context in seconds.

- Signal management: Moving beyond simple frequency counts, AI can spot subtle signals early. Using unsupervised learning techniques like topic modeling or clustering, it can analyze millions of cases to group reports based on a constellation of symptoms, patient demographics, and concomitant medications. This can reveal novel safety syndromes or identify at-risk subpopulations that traditional methods would miss.

- Aggregate safety reporting: Creating complex periodic reports like PBRERs/PSURs and DSURs is a resource-intensive process. AI is changing this by automatically gathering relevant cases, calculating incidence rates, generating tables and charts, and even drafting initial narrative sections for medical writers and safety physicians to review, edit, and interpret. This shifts the focus from manual compilation to strategic analysis.

- Automated follow-up queries: When reports are missing critical information, AI can identify the specific data gaps (e.g., “The dose of concomitant medication X is missing” or “The outcome of the event is not specified”). It can then generate personalized, natural-language follow-up questions to send to the original reporter, improving data completeness and speeding up case completion.

- Benefit-risk assessment: The ultimate goal is a dynamic, living benefit-risk profile for every drug. AI can integrate safety data, clinical trial efficacy outcomes, and real-world evidence on effectiveness to continuously update our quantitative understanding of a drug’s performance. This transforms pharmacovigilance from a reactive, compliance-driven function to a proactive, value-adding component of lifecycle management.
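The automated follow-up idea in the list above can be sketched with simple templates. This is a simplified stand-in for the natural-language generation described there: the field names and question wording are hypothetical, and a production system would derive them from its own case schema.

```python
# Hypothetical fields and question templates (assumptions for illustration).
FOLLOW_UP_TEMPLATES = {
    "dose": "What dose of {drug} was the patient taking?",
    "onset_date": "On what date did the {event} begin?",
    "outcome": "What was the outcome of the {event}?",
}


def follow_up_questions(case: dict) -> list[str]:
    """Return one question for each tracked field that is missing or empty."""
    drug = case.get("drug") or "the suspect drug"
    event = case.get("event") or "event"
    return [
        template.format(drug=drug, event=event)
        for field, template in FOLLOW_UP_TEMPLATES.items()
        if not case.get(field)
    ]


case = {"drug": "amoxicillin", "event": "rash", "dose": "50mg", "onset_date": None}
for question in follow_up_questions(case):
    print(question)
```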

Powering Intelligent Safety: Data Sources, NLP, and Interoperability

Modern drug safety AI acts as a sophisticated translator and detective, understanding complex medical language from diverse sources to spot patterns humans might miss. This interconnected approach allows us to move beyond traditional safety reports, which capture as little as 6% of actual adverse events, and tap into the rich, heterogeneous mix of real-world healthcare data.

Leveraging a World of Data for Deeper Insights

Getting a complete picture of drug safety requires looking at all the digital footprints left by patients and providers. Each source has unique strengths and challenges.

- Spontaneous Reporting Systems (SRSs) like the FDA’s FAERS and the WHO’s VigiBase remain the foundation of pharmacovigilance. While invaluable for generating hypotheses, they suffer from significant underreporting and reporting biases (e.g., the Weber effect, where reporting is higher for newly marketed drugs).

- Electronic Health Records (EHRs) and Claims Data offer the complete patient journey, including diagnoses, lab results, procedures, and prescriptions. This longitudinal data provides critical clinical context. EHR text-mining algorithms have achieved 73% sensitivity in detecting adverse drug reactions from unstructured clinical notes, but the challenge lies in integrating this with structured data fields and claims information to build a coherent patient history.

- The Scientific Literature contains decades of medical knowledge. AI can scan millions of abstracts and full-text articles in minutes to find mechanistic evidence linking a drug to a biological pathway that could explain an observed adverse event, helping to validate a new signal.

- Social Media and Patient Forums like Reddit or PatientsLikeMe have become valuable sources of early, patient-centric signals. While noisy, these platforms capture the patient’s own words and experiences. BERT-based models have achieved an F-score of 0.89 in detecting adverse drug reactions from Twitter posts, demonstrating the potential to filter signal from noise.

- Drug Labels contain systematic, regulator-approved safety information. Projects like Cedars-Sinai’s OnSIDES have extracted data from over 47,000 labels to create a searchable, computable resource that AI can use to quickly check if a detected event is already a known side effect.

- Wearable Data from smartwatches and other sensors could revolutionize safety monitoring by providing continuous, objective physiological data. This could enable the detection of subtle changes like arrhythmias, altered sleep patterns, or reduced mobility after a patient starts a new medication, offering a new frontier for proactive signal detection.

The primary challenge is making these diverse sources work together. This requires sophisticated clinical data interoperability. Modern health data standards like FHIR (Fast Healthcare Interoperability Resources) are crucial for enabling seamless data exchange. AI platforms must not only ingest data from these varied sources but also perform careful data harmonization to map different formats and terminologies into a unified analytical framework.
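As a rough illustration of harmonization, the sketch below maps two differently shaped inputs into one common record. Both input layouts and all field names are hypothetical; real spontaneous report extracts and FHIR resources are considerably richer than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyRecord:
    """Unified analytical record (hypothetical schema)."""
    source: str
    patient_id: str
    drug: str
    event: str


def _clean(text: str) -> str:
    """Trim whitespace and lowercase so values compare across sources."""
    return text.strip().lower()


def from_spontaneous_report(row: dict) -> SafetyRecord:
    # Assumed flat layout with 'case_id', 'drugname', and 'reaction' keys.
    return SafetyRecord("spontaneous", str(row["case_id"]),
                        _clean(row["drugname"]), _clean(row["reaction"]))


def from_ehr_note(note: dict) -> SafetyRecord:
    # Assumed nested layout with 'mrn', 'medication.name', and 'finding'.
    return SafetyRecord("ehr", str(note["mrn"]),
                        _clean(note["medication"]["name"]), _clean(note["finding"]))


a = from_spontaneous_report({"case_id": 1001, "drugname": " Amoxicillin ", "reaction": "Rash"})
b = from_ehr_note({"mrn": "A-77", "medication": {"name": "amoxicillin"}, "finding": "rash "})
print(a.drug == b.drug and a.event == b.event)
```

Once every source lands in the same shape, downstream steps such as deduplication and signal detection can treat them uniformly.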

How AI Understands Clinical Language

Most safety information exists in unstructured, human language. AI uses several layers of Natural Language Processing (NLP) to interpret it with precision.

- Named Entity Recognition (NER) is the first step. It acts like a highlighter, automatically identifying key information in text. For example, in the sentence, “Patient developed a severe rash after taking 50mg of amoxicillin twice daily,” NER would identify ‘severe rash’ as an Adverse Event, ‘amoxicillin’ as a Drug, ’50mg’ as a Dosage, and ‘twice daily’ as the Frequency.

- Relation Extraction then connects the dots. It identifies the grammatical and semantic connections between the entities NER has found. In the example above, it would link the two as a suspected cause and effect: ‘amoxicillin’ is the suspected cause of the ‘severe rash’. Confirming causality still requires assessment, but this link is critical for distinguishing adverse events from a patient’s pre-existing conditions and other incidental medical information mentioned in a narrative.

- Normalization to Standards translates the extracted information into a common, computable language. A patient might report “feeling blue,” a doctor might write “depressed mood,” and a forum post might say “I’m so down.” Normalization maps all of these variations to a single, standardized medical terminology like MedDRA (e.g., the Preferred Term ‘Depression’). Similarly, drug names are normalized using RxNorm, and clinical findings are mapped to SNOMED CT. The UMLS (Unified Medical Language System) acts as a master thesaurus, connecting these vocabularies and allowing AI to understand relationships across different coding systems.

This multi-stage language processing pipeline transforms scattered, unstructured information into the structured, harmonized data that powers advanced safety analytics, enabling drug safety AI to detect critical signals that might otherwise remain hidden in the text.
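The shape of that pipeline can be shown with a deliberately tiny, dictionary-based sketch. Real systems use trained NER models and licensed terminologies; the lexicons below are toy assumptions, and the normalized labels are plain strings rather than real MedDRA or RxNorm codes.

```python
import re

# Toy lexicons for illustration only (assumptions, not real terminology content).
DRUG_LEXICON = {"amoxicillin", "ibuprofen"}
EVENT_SYNONYMS = {"rash": "Rash", "skin eruption": "Rash"}
DOSE_PATTERN = re.compile(r"\b\d+(?:\.\d+)?\s?(?:mg|mcg|g|ml)\b", re.IGNORECASE)


def extract_entities(text: str) -> dict:
    """Step 1 (NER stand-in): find drug, event, and dose mentions."""
    lowered = text.lower()
    drugs = sorted(d for d in DRUG_LEXICON if re.search(rf"\b{re.escape(d)}\b", lowered))
    events = sorted(phrase for phrase in EVENT_SYNONYMS if phrase in lowered)
    doses = DOSE_PATTERN.findall(text)
    return {"drugs": drugs, "events": events, "doses": doses}


def normalize_events(events: list[str]) -> list[str]:
    """Step 3 (normalization stand-in): map surface phrases to one label."""
    return sorted({EVENT_SYNONYMS[e] for e in events})


note = "Patient developed a severe rash after taking 50mg of amoxicillin twice daily"
entities = extract_entities(note)
print(entities, normalize_events(entities["events"]))
```

Relation extraction is omitted here because it genuinely needs a model; a lookup table cannot tell whether the rash followed the drug or predated it.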

Beyond the Status Quo: Advanced Signal Detection and Real-World Impact

Traditional pharmacovigilance relies on disproportionality analysis (DPA), a statistical method that scans safety databases for drug-event pairs reported more frequently than expected. Methods like the Proportional Reporting Ratio (PRR) and Reporting Odds Ratio (ROR) are workhorses of signal detection, but they are like using a magnifying glass when a microscope is needed. DPA is prone to biases, can be confounded by factors like age and co-medications, and struggles to identify complex interactions. Drug safety AI moves beyond these simple counts to sophisticated causal inference and knowledge graphs, helping us understand not just that an event is happening, but why.

This shift is profound. By accounting for complex confounding variables, machine learning can identify safety concerns 7-22 months earlier than traditional methods, a lead time that can translate into protecting thousands of patients from preventable harm.
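For reference, the two disproportionality measures named above come from a simple 2x2 table of report counts. The sketch below uses the standard definitions; the counts in the example are invented purely to show the arithmetic.

```python
def prr(a: int, b: int, c: int, d: int) -> float:
    """Proportional Reporting Ratio.

    a: reports with the drug and the event
    b: reports with the drug and any other event
    c: reports with other drugs and the event
    d: reports with other drugs and any other event
    """
    return (a / (a + b)) / (c / (c + d))


def ror(a: int, b: int, c: int, d: int) -> float:
    """Reporting Odds Ratio from the same 2x2 table."""
    return (a * d) / (b * c)


# Invented counts for illustration only.
print(round(prr(20, 80, 100, 9800), 2), ror(20, 80, 100, 9800))
```

Both numbers describe reporting patterns only. Neither adjusts for age, co-medications, or reporting bias, which is the gap the machine learning approaches in this section aim to close.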

Enhancing Signal Detection with Machine Learning

Machine learning models can simultaneously analyze hundreds of variables, including patient demographics, medical history, lab values, and concomitant medications, to isolate a drug’s specific effect. A recent model achieved an AUC of 0.95 on spontaneous reporting data, significantly outperforming traditional methods. Key metrics provide a multi-faceted view of performance:

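- AUC (Area Under the Curve) measures how well a model ranks true signals above non-signals: it is the probability that a randomly chosen true signal scores higher than a randomly chosen non-signal. A value of 0.5 is no better than chance, while 0.95 indicates excellent discriminatory power.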

- Precision and Recall offer a practical trade-off. High precision ensures that when the model flags a signal, it’s very likely to be a real one (reducing false alarms), while high recall ensures the model finds most of the real signals present in the data (reducing missed events).

- Lead-time versus label changes is a crucial real-world metric, quantifying how much earlier the AI detected a problem compared to when the official drug label was updated.
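These three metrics are straightforward to compute. The sketch below implements them in plain Python on invented labels, scores, and dates; a real evaluation would use a curated reference set of known signals.

```python
from datetime import date


def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Precision and recall for binary labels (1 = true signal)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def auc(y_true: list[int], scores: list[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def lead_time_days(detected_on: date, label_changed_on: date) -> int:
    """Days between model detection and the official label update."""
    return (label_changed_on - detected_on).days


# Invented data for illustration only.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.4, 0.2]
flags = [1 if s >= 0.5 else 0 for s in scores]
print(auc(labels, scores), precision_recall(labels, flags))
print(lead_time_days(date(2021, 1, 15), date(2021, 10, 15)))
```

The pairwise AUC loop is quadratic in the number of examples, which is fine for a demonstration but not for large datasets.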

Drug safety AI also excels at detecting complex drug-drug interactions and employs causal inference methods to move beyond correlation. Techniques like propensity score matching pair treated and untreated patients with similar measured characteristics, approximating the balance of a randomized controlled trial in observational data and helping to separate a drug’s effect from measured confounders. Furthermore, knowledge graphs provide mechanistic plausibility. These vast networks connect drugs, genes, proteins, pathways, and diseases. When AI detects a new signal (e.g., Drug X is associated with liver injury), it can query the knowledge graph to see if Drug X is known to modulate a biological pathway implicated in liver function, adding a powerful layer of evidence.
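The knowledge graph query described above amounts to a path search. Here is a minimal sketch using breadth-first search over a tiny, entirely invented graph; "Drug X", "Enzyme A", and "Pathway P" are placeholders, not real biology.

```python
from collections import deque

# Invented toy graph: each node lists the nodes it is known to affect.
KNOWLEDGE_GRAPH = {
    "Drug X": ["Enzyme A"],
    "Enzyme A": ["Pathway P"],
    "Pathway P": ["Liver injury"],
    "Drug Y": ["Receptor B"],
    "Receptor B": [],
}


def find_path(graph: dict, start: str, goal: str, max_hops: int = 4):
    """Return the shortest path from start to goal within max_hops, else None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        if len(path) - 1 >= max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


print(find_path(KNOWLEDGE_GRAPH, "Drug X", "Liver injury"))
print(find_path(KNOWLEDGE_GRAPH, "Drug Y", "Liver injury"))
```

A found path is supporting evidence of plausibility, not proof; the hop limit keeps the search from returning long, tenuous chains.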

Proven Use Cases in Drug Safety AI

The real-world impact of drug safety AI is already visible across the industry:

- Intake automation systems are now achieving over 90% accuracy in extracting key data from unstructured reports, reducing manual case processing time by 50-70% and allowing teams to reallocate skilled experts to high-value analysis.

- Large-scale deduplication, exemplified by the WHO-UMC’s vigiMatch algorithm, processes 50 million report pairs per second to ensure the integrity of the world’s largest safety database—a foundational task for all subsequent analysis.

- The FDA’s Sentinel System demonstrates the power of active, large-scale safety surveillance. By running queries across a distributed network of over 300 million patient records, it has conducted hundreds of analyses to evaluate safety concerns for vaccines and therapeutics, including critical monitoring during the COVID-19 pandemic.

- Label mining projects like Cedars-Sinai’s OnSIDES have created comprehensive, computable databases of known side effects from over 47,000 drug labels. This allows AI to automatically triage new signals, prioritizing novel events that are not yet documented.

- Predictive toxicology models are being used pre-clinically to forecast a compound’s potential for toxicity based on its molecular structure. This helps de-risk drug candidates early in development, preventing unsafe drugs from ever reaching human trials and saving millions in downstream costs.
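To give a feel for the deduplication use case above, here is a simplified sketch of score-based record matching. It is not the vigiMatch algorithm: the field weights and threshold are invented, and a real probabilistic matcher would estimate them from data.

```python
from itertools import combinations

# Invented weights: agreement on more specific fields counts for more.
FIELD_WEIGHTS = {"drug": 2.0, "event": 2.0, "onset_date": 3.0, "age": 1.0, "sex": 0.5}


def match_score(r1: dict, r2: dict) -> float:
    """Add a field's weight on agreement, subtract it on disagreement."""
    score = 0.0
    for field, weight in FIELD_WEIGHTS.items():
        v1, v2 = r1.get(field), r2.get(field)
        if v1 is None or v2 is None:
            continue  # missing data is neither evidence for nor against
        score += weight if v1 == v2 else -weight
    return score


def likely_duplicates(reports: list[dict], threshold: float = 6.0) -> list[tuple]:
    """Return id pairs whose match score reaches the threshold."""
    return [
        (a["id"], b["id"])
        for a, b in combinations(reports, 2)
        if match_score(a, b) >= threshold
    ]


reports = [
    {"id": 1, "drug": "amoxicillin", "event": "rash", "onset_date": "2024-03-01", "age": 54, "sex": "F"},
    {"id": 2, "drug": "amoxicillin", "event": "rash", "onset_date": "2024-03-01", "age": None, "sex": "F"},
    {"id": 3, "drug": "amoxicillin", "event": "rash", "onset_date": "2024-05-10", "age": 31, "sex": "M"},
]
print(likely_duplicates(reports))
```

Comparing every pair is quadratic, so systems at global scale need blocking or indexing to limit which pairs are scored at all.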

This shift toward real-time, intelligent pharmacovigilance represents a fundamental change in how we approach drug safety—from a reactive burden to a proactive, data-driven advantage that saves lives.

Building Trustworthy AI: Governance, Validation, and Regulatory Strategy

Implementing drug safety AI is about creating systems that regulators, clinicians, and patients can trust. In a field where decisions impact human lives, AI must be transparent, accountable, and fair.

Governance and Human-in-the-Loop Oversight

The golden rule is that humans must always remain in control. AI should augment, not replace, human expertise. This human-in-the-loop approach prevents “automation bias” and ensures clinical accountability remains with qualified experts.

Successful AI governance requires clear operating models with defined roles and responsibilities. Robust processes for change control, documentation, and audit trails are critical in regulated environments. Every AI decision, model version, and human intervention must be recorded to explain how the system works. Finally, having failure modes and fallback procedures is essential for maintaining continuous safety surveillance.
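One possible way to record decisions so they can be audited later is an append-only log in which each entry carries a hash of the previous one. The sketch below is an illustration under assumptions, not a validated design: the field names and the 0.90 review threshold are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.90  # assumed cut-off; below this a human must review
GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON form of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_audit_entry(log, case_id, model_version, ai_decision, confidence, reviewer=None):
    """Append one decision record, chained to the previous entry's hash."""
    entry = {
        "case_id": case_id,
        "model_version": model_version,
        "ai_decision": ai_decision,
        "confidence": confidence,
        "needs_human_review": confidence < REVIEW_THRESHOLD,
        "reviewer": reviewer,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)  # digest is computed before 'hash' is added
    log.append(entry)
    return entry


def verify_chain(log) -> bool:
    """Return False if any entry was altered or the chain order was broken."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


log = []
append_audit_entry(log, "CASE-1", "v1.2.0", "serious", 0.62)
append_audit_entry(log, "CASE-2", "v1.2.0", "non-serious", 0.97, reviewer="reviewer-01")
print(verify_chain(log))
```

The low-confidence entry is flagged for human review automatically, and any later edit to a stored entry makes `verify_chain` fail.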

Our AI-enabled data governance solutions are designed to support these requirements, providing the controls and transparency that regulated industries demand.

Validation and Regulatory Engagement for Drug Safety AI

Regulatory approval for drug safety AI requires a risk-based Computer Software Assurance (CSA) approach focused on critical thinking. The key is demonstrating real-world performance through rigorous testing against gold standard datasets. Model version control is crucial for tracking a system’s evolution over time.

Explainability is also vital. Techniques like SHAP and LIME help us look inside the “black box” of AI, showing which factors influenced a prediction and giving experts the context to validate AI outputs. Proactive engagement with regulators through programs like the FDA’s Emerging Drug Safety Technology Program (EDSTP) is essential for shaping the evolving regulatory landscape and ensuring solutions meet requirements.
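SHAP builds on Shapley values, which split a prediction among the input features. The sketch below computes exact Shapley values by brute force for a toy risk score; it does not use the `shap` library, and the model, feature names, and coefficients are invented for illustration.

```python
from itertools import permutations


def shapley_values(predict, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features switch from baseline to instance."""
    features = list(instance)
    totals = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)
        previous = predict(current)
        for f in order:
            current[f] = instance[f]
            value = predict(current)
            totals[f] += value - previous
            previous = value
    return {f: total / len(orderings) for f, total in totals.items()}


def toy_risk(x: dict) -> float:
    """Invented risk score with an interaction between two features."""
    return (0.1 + 0.3 * x["interacting_drug"] + 0.2 * x["elderly"]
            + 0.25 * x["interacting_drug"] * x["elderly"]
            + 0.15 * x["renal_impairment"])


patient = {"interacting_drug": 1, "elderly": 1, "renal_impairment": 0}
reference = {"interacting_drug": 0, "elderly": 0, "renal_impairment": 0}
print(shapley_values(toy_risk, patient, reference))
```

The contributions sum to the difference between the patient's score and the reference score, and the interaction term is shared equally between the two features involved. Brute force only works for a handful of features, which is why practical tools rely on approximations.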

Ensuring Privacy, Security, and Fairness

Handling sensitive patient data requires bulletproof privacy and security measures that comply with regulations like HIPAA and GDPR. Traditional data de-identification may not be sufficient, as AI can potentially re-identify individuals from anonymized data. More sophisticated approaches are needed.

Bias detection and mitigation are critical. If training data isn’t diverse, AI models may miss safety signals in underrepresented groups, creating health equity issues. Regular bias audits and diverse dataset curation are essential for building fair systems.

Federated learning offers a powerful solution to privacy concerns. Instead of centralizing data, this approach brings the algorithm to the data, allowing models to be trained across institutions without moving raw patient information. Our platforms are built on these principles, enabling secure collaboration while maintaining the highest standards of data protection.
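The core idea can be shown with a minimal federated averaging sketch. Each site takes a training step on its own data, and only the resulting model weight, never the patient records, is sent back to be averaged. The one-parameter model and the data are invented for illustration; real deployments add secure aggregation, access controls, and far richer models.

```python
def local_update(w: float, xs: list[float], ys: list[float], lr: float = 0.05) -> float:
    """One gradient-descent step on mean squared error for y = w * x."""
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    return w - lr * grad


def federated_average(sites: list[tuple], rounds: int = 20, w: float = 0.0) -> float:
    """Average local updates, weighting each site by its sample count."""
    total = sum(len(xs) for xs, _ in sites)
    for _ in range(rounds):
        updates = [(local_update(w, xs, ys), len(xs)) for xs, ys in sites]
        w = sum(w_i * n for w_i, n in updates) / total
    return w


# Invented data: both sites follow y = 2 * x, but neither shares raw values.
site_a = ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
site_b = ([1.0, 2.0], [2.0, 4.0])
print(round(federated_average([site_a, site_b]), 3))
```

Only `w` crosses institutional boundaries in this loop; the `xs` and `ys` lists stay inside `local_update`, which is the property that makes the approach attractive for sensitive health data.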

Frequently Asked Questions about AI in Pharmacovigilance

Here are answers to common questions about the role of drug safety AI in pharmacovigilance.

How does AI handle the complexity of unstructured data like doctor’s notes or social media posts?

AI uses Natural Language Processing (NLP) to make sense of messy, human language. Named Entity Recognition (NER) identifies key terms like drug names and symptoms. Contextual understanding with transformer models like BERT helps the AI grasp nuances crucial for accurate assessment; these models have achieved F-scores of 0.89 in detecting adverse drug reactions on Twitter. The AI then maps these concepts to standardized terminologies like MedDRA and SNOMED CT, allowing for comparison across different data sources. For social media, sentiment analysis can help prioritize signals based on the user’s emotional tone.

Will AI replace pharmacovigilance professionals?

No. Drug safety AI augments pharmacovigilance professionals; it does not replace them. Currently, up to two-thirds of PV resources are spent on repetitive tasks like data entry and triage. AI excels at automating this high-volume, rule-based work, freeing human experts to focus on complex tasks that require their irreplaceable skills, such as signal investigation, risk management strategy, and benefit-risk assessment. The human-in-the-loop approach is essential, as clinical accountability must always rest with human experts who provide the judgment and strategic thinking no algorithm can replicate.

How can we trust the “black box” decisions of an AI model in a regulated environment?

Trust is built through transparency, validation, and governance. Explainable AI (XAI) methods like SHAP and LIME allow us to understand an AI model’s reasoning by showing which factors influenced a decision. Before deployment, models undergo rigorous validation against gold standards to ensure accuracy and reliability. Continuous performance monitoring ensures the AI performs as expected over time. Most importantly, human-led governance means every critical decision receives human review. Collaboration with regulators through programs like the FDA’s Emerging Drug Safety Technology Program helps establish best practices, and clear documentation for audits ensures every aspect of the AI system is transparent and accountable.

Conclusion

We are at a pivotal moment in pharmacovigilance. Traditional manual methods are overwhelmed by exponential data growth, with adverse event volumes climbing 20% annually. This is a direct threat to patient safety.

Drug safety AI is the solution. It transforms the pharmacovigilance lifecycle by automating intake, detecting signals months earlier, and integrating diverse data sources. The evidence is compelling: machine learning models are achieving AUCs of 0.95 and detecting safety concerns 7-22 months earlier than traditional methods.

Crucially, AI amplifies human expertise; it does not replace it. By handling repetitive tasks, AI frees experts to focus on complex signal investigations and nuanced clinical judgments. The path forward requires a focus on trust and governance, including human-in-the-loop oversight, rigorous validation, and privacy-preserving approaches like federated learning.

At Lifebit, our federated AI platform is built to address these challenges. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) deliver secure, compliant, and powerful drug safety insights. We enable organizations to harness their data’s full potential while maintaining the highest standards of privacy and governance.

Embracing these technologies responsibly will create a future where drug safety monitoring is more accurate, timely, and effective at protecting patients worldwide.
