Cohort Chronicles: Mastering Prospective and Retrospective Studies

End the Guesswork: Prove Causality and Quantify Risk with Prospective Cohort Analysis

Prospective cohort analysis is a research method where investigators identify a group of individuals (a cohort) who do not yet have the outcome of interest, measure their exposure to various factors, and then follow them forward in time to observe who develops the outcome. This forward-looking approach is one of the most powerful tools in observational research for establishing whether exposures cause specific health outcomes.

Key characteristics of prospective cohort analysis:

- Forward-looking: Participants are enrolled before the outcome occurs and followed into the future. The study progresses in the same direction as time.

- Outcome-free at baseline: A critical requirement is that no subjects have the disease or condition being studied when the study begins. This ensures a clean starting point for observing new (incident) cases.

- Exposure-based groups: Participants are typically classified and grouped by exposure status (e.g., exposed vs. unexposed) at the start of the study, allowing for direct comparison.

- Longitudinal follow-up: Data is collected at multiple points over time, which can span months, years, or even decades, to track changes in health status and other variables.

- Incidence measurement: This design allows for the direct calculation of incidence—the rate of new cases—in each exposure group, enabling the quantification of risk through metrics like risk ratios and rate ratios.

- Temporality: Because exposure is measured before the outcome occurs, the design establishes that the exposure precedes the outcome, a necessary (though not sufficient) criterion for inferring a causal relationship.

This design sits high in the hierarchy of evidence for observational studies because it minimizes recall bias and allows researchers to establish the correct sequence of events—a critical requirement for demonstrating causality.

When you’re investigating the etiology of diseases, studying multiple health outcomes from a single exposure, or examining rare exposures in large populations, prospective cohort analysis becomes invaluable. The Framingham Heart Study, which has followed participants and their offspring in the town of Framingham, Massachusetts, since 1948, exemplifies this approach’s power. It was this study that popularized the concept of a “risk factor” and provided the first major evidence linking high blood pressure and high cholesterol to an increased risk of heart disease. Over the decades, it has revealed fundamental connections between smoking, cholesterol types (HDL vs. LDL), physical activity, and cardiovascular events, transforming preventive medicine.

For pharmaceutical companies, public health agencies, and regulatory bodies, the challenge has evolved beyond study design to execution at a global scale. Many now work with siloed datasets distributed across institutions, countries, and continents. Modern prospective cohort analysis requires harmonizing these diverse data sources, which often use different coding systems (e.g., ICD-9 vs. ICD-10), measurement units, and data formats. It also demands strict adherence to participant privacy under regulations like GDPR and HIPAA, which often makes centralizing the data impractical or legally impossible. The solution lies in enabling real-time analytics across federated environments, bringing the analysis to the data without moving or compromising sensitive information.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where I’ve spent over 15 years building computational tools and platforms that enable large-scale prospective cohort analysis across genomic and biomedical datasets. My work focuses on making federated data analysis accessible for researchers conducting observational studies while maintaining the highest standards of data security and regulatory compliance.

Choose the Right Study Design in 5 Minutes: Save Months and Millions

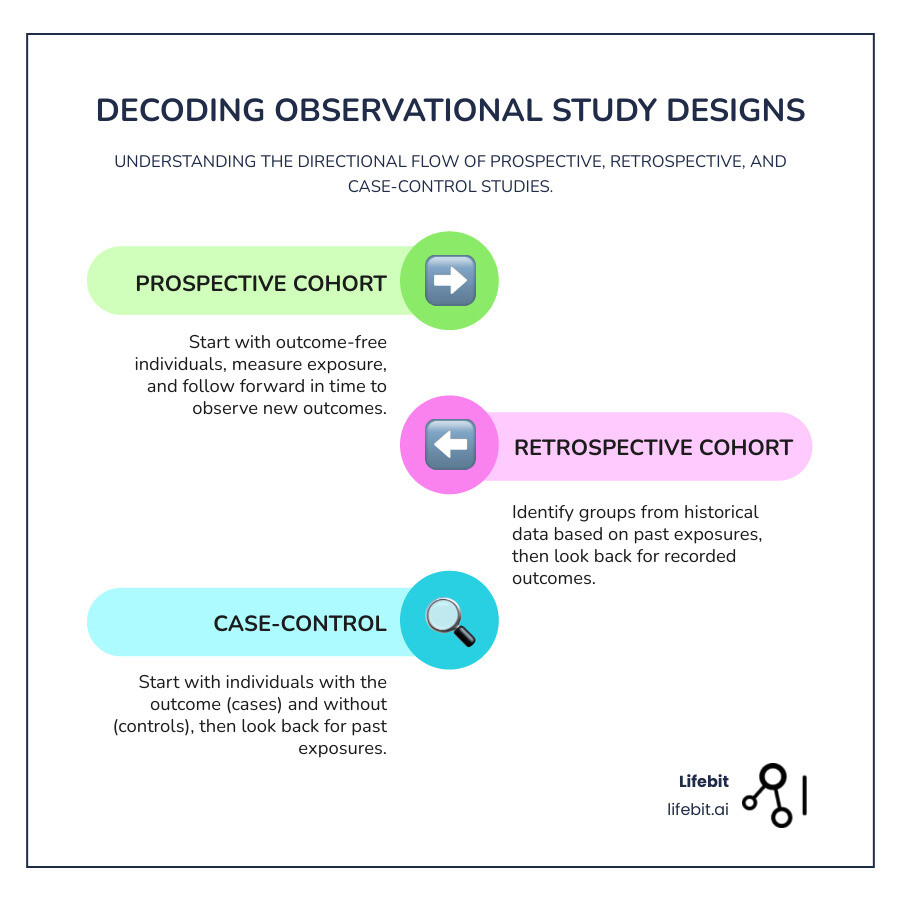

If you’ve ever felt confused by the terminology around observational studies, you’re not alone. The words “prospective,” “retrospective,” and “case-control” get thrown around a lot, but understanding what they actually mean—and more importantly, how they differ—is essential for choosing the right approach for your research question.

All three designs fall under the umbrella of observational studies, meaning researchers observe what happens without intervening or manipulating variables. But the way they approach time and data collection? That’s where things get interesting.

Prospective Cohort Studies: Looking to the Future

Think of a prospective cohort study as setting up camp at the starting line and watching the race unfold. You identify a group of people—your cohort—who don’t yet have the outcome you’re interested in studying. Maybe you’re investigating whether a certain dietary pattern leads to diabetes, or whether workplace exposure to chemicals increases cancer risk.

At the beginning of the study, you measure each participant’s exposure status and other relevant characteristics. Then comes the waiting game. You follow this group forward in time, checking in at regular intervals—monthly, annually, or even over decades. As time passes, you record who develops the outcome and who doesn’t.

This forward-looking, longitudinal design is what makes prospective cohort analysis so powerful. Because you’re measuring exposure before the outcome occurs, you can be confident about the sequence of events. The exposure genuinely came first. This temporal relationship is one of the key building blocks for establishing causality, which is why prospective cohorts sit high in the evidence hierarchy.

Retrospective Cohort Studies: Uncovering the Past

Now imagine you have access to detailed historical data—employment records from a factory, medical charts from a hospital system, or insurance databases spanning decades. A retrospective cohort study lets you travel back in time through these existing records to identify your cohort and determine their exposure status at some point in the past.

You’re still following a cohort forward in time, but you’re doing it through historical documentation rather than in real time. You identify when people were exposed (e.g., when they started working in a specific department), then trace through the records to see when the outcome occurred. The outcome has already happened by the time you begin your study, but the logic remains the same: compare outcomes in the exposed versus the unexposed.

The beauty of this approach? Speed and cost. You don’t need to wait years for outcomes to develop. But there’s a trade-off. You’re limited by whatever data was recorded at the time, and the quality and completeness of those records can vary wildly. If the information you need wasn’t documented, you’re out of luck.

Case-Control Studies: A Different Approach

Case-control studies flip the script entirely. Instead of starting with exposure and waiting for the outcome, you start with the outcome and work backward.

You identify people who already have the disease or condition you’re studying—these are your “cases.” Then you select a comparable group of people without the condition—your “controls.” Once you have both groups, you look back in time to compare their past exposures, often through interviews or records. Did the cases have higher exposure rates to the risk factor you’re investigating compared to the controls?

This design is remarkably efficient for rare diseases. If you’re studying a condition that affects only 1 in 10,000 people, you’d need to follow an enormous prospective cohort to capture enough cases. With a case-control study, you can directly identify those rare cases and compare them to controls, saving tremendous time and resources. The classic 1950 study by Doll and Hill, one of the first to link smoking to lung cancer, used a case-control design.

The downside? Because you’re often asking people to recall past exposures, recall bias can creep in. Someone who’s been diagnosed with cancer might remember chemical exposures differently than someone who hasn’t. And because you’re selecting cases and controls separately, selection bias is a real concern.
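To make the case-versus-control comparison concrete, here is a minimal Python sketch of the usual case-control calculation: the exposure odds ratio with a log-based 95% confidence interval. The counts and the function name `odds_ratio` are invented for illustration and do not come from any real study.

```python
import math

def odds_ratio(cases_exposed, cases_unexposed, controls_exposed, controls_unexposed):
    """Return the exposure odds ratio and an approximate 95% confidence interval."""
    a, b, c, d = cases_exposed, cases_unexposed, controls_exposed, controls_unexposed
    estimate = (a * d) / (b * c)
    # Standard error of log(OR) from the four cell counts (Woolf method)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(estimate) - 1.96 * se_log)
    upper = math.exp(math.log(estimate) + 1.96 * se_log)
    return estimate, lower, upper

# Hypothetical counts: 60 of 100 cases were exposed, 30 of 100 controls were exposed
or_estimate, or_lower, or_upper = odds_ratio(60, 40, 30, 70)
print(f"OR = {or_estimate:.2f} (95% CI {or_lower:.2f} to {or_upper:.2f})")
```

Because cases and controls are sampled separately, the table supports an odds ratio but not a direct incidence estimate.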

Hybrid Designs: The Best of Both Worlds?

To balance the strengths and weaknesses of these classic designs, researchers sometimes use hybrid approaches, most notably within large, ongoing prospective cohorts.

- Nested Case-Control Study: This design is a case-control study conducted within a cohort study. As the cohort is followed over time, all individuals who develop the disease of interest become the “cases.” For each case, one or more “controls” are selected from the members of the cohort who were still disease-free at the exact time the case was diagnosed. This matching on time (and often other factors) is a key feature. The primary advantage is efficiency. Instead of analyzing expensive stored biological samples (like blood or tissue) for the entire cohort, you only need to analyze them for the cases and the selected controls, dramatically reducing costs and lab work while retaining the benefits of prospectively collected data.

- Case-Cohort Study: This is another variation conducted within a cohort. Like a nested case-control study, it uses all individuals who develop the disease as cases. However, the comparison group is different. Instead of selecting unique controls for each case, a single comparison group, called the “subcohort,” is randomly selected from the entire cohort at the very beginning of the study. This subcohort represents the person-time experience of the full cohort. A major advantage is that the same subcohort can be used as the comparison group for multiple different case groups (e.g., for studying heart disease, cancer, and diabetes within the same cohort), making it highly efficient for studying multiple outcomes.

These hybrid designs offer a cost-effective way to leverage the massive investment of a prospective cohort study, especially when dealing with expensive biological or genetic analyses.
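The time-matched control selection used in a nested case-control study (often called risk-set sampling) can be sketched in a few lines of Python. This is an illustrative simplification: the record layout (`id`, `time`, `event`), the function name, and the toy cohort are all assumptions, and real studies usually also match on factors such as age and sex.

```python
import random

def risk_set_sample(cohort, controls_per_case=2, seed=0):
    """For each case, draw controls from members still disease-free at the case's event time."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    cases = sorted((p for p in cohort if p["event"]), key=lambda p: p["time"])
    matched = []
    for case in cases:
        # Risk set: everyone whose follow-up extends beyond this case's diagnosis time.
        # A person who becomes a case later is still eligible as a control now.
        risk_set = [p for p in cohort if p["time"] > case["time"]]
        k = min(controls_per_case, len(risk_set))
        controls = rng.sample(risk_set, k)
        matched.append((case["id"], [c["id"] for c in controls]))
    return matched

# Hypothetical cohort: 'time' is years until diagnosis (event=True) or end of follow-up
cohort = [
    {"id": 1, "time": 2.0, "event": True},
    {"id": 2, "time": 5.0, "event": False},
    {"id": 3, "time": 3.5, "event": True},
    {"id": 4, "time": 1.0, "event": False},
    {"id": 5, "time": 6.0, "event": False},
    {"id": 6, "time": 4.0, "event": False},
]
matched_sets = risk_set_sample(cohort)
print(matched_sets)
```

Only the cases and their sampled controls would then need stored samples analyzed, which is where the cost savings come from.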

| Feature | Prospective Cohort Study | Retrospective Cohort Study | Case-Control Study |
|---|---|---|---|
| Starting Point | Exposure status (groups free of outcome) | Exposure status (groups from historical records) | Outcome status (cases with outcome, controls without) |
| Timeline | Forward in time (exposure measured, then outcome observed) | Historical (exposure and outcome have both already occurred; the cohort is traced forward through existing records) | Backward in time (outcome already occurred, then past exposures are assessed) |
| Key Strength | Establishes temporality, minimizes recall bias, allows incidence calculation, good for multiple outcomes from one exposure | Faster, less expensive, good for rare exposures, uses existing data | Efficient for rare diseases, less time-consuming than cohort studies |
| Key Weakness | Costly, time-consuming, inefficient for rare diseases, potential for loss to follow-up | Limited by data quality, prone to information bias, recall bias (less than case-control), confounding | Prone to recall bias, selection bias, cannot calculate incidence rates directly, typically studies one outcome |

The choice between these designs isn’t about which is “best”—it’s about which is best for your specific research question. Are you studying a rare disease or a common one? Do you have access to high-quality historical data? Do you need to establish that exposure definitively preceded the outcome? Can you wait years for results, or do you need answers now?

Understanding these differences helps you design stronger studies and interpret published research more critically. And when you’re working with federated datasets across multiple institutions—where data lives in different formats, under different governance structures—choosing the right design becomes even more critical to your success.

Plug Your Cohort Leaks Now: The Retention Playbook to Cut Attrition and Save Your Study

Think of a prospective cohort analysis as planning a cross-country road trip. You wouldn’t just hop in the car and hope for the best—you’d map your route, pack supplies, and plan for the unexpected. The same principle applies here, except instead of miles, we’re tracking years (sometimes decades), and our passengers are the participants whose health journeys we’re documenting in real time.

Participant Selection: Building Your Cohort

Every great study begins with finding the right people. In prospective cohort analysis, we start by defining our target population—the specific group we want to study. This could be a general population cohort (like the residents of a specific town) or a special exposure cohort (like workers in a particular industry or survivors of a natural disaster). We then develop clear inclusion and exclusion criteria to determine who is eligible. This isn’t about being picky; it’s about precision and ensuring the study can answer the research question.

The golden rule? Everyone we enroll must be free of the outcome we’re investigating. If we’re studying heart disease, we can’t include people who already have it. This seems obvious, but it’s what makes the design work—we need a clean starting line to measure the development of new (incident) disease.

We typically divide our cohort into exposed and unexposed groups based on the risk factor we’re examining. Studying whether coffee consumption affects cognitive decline? We’d recruit coffee drinkers and non-coffee drinkers from the same community, making sure they’re otherwise similar in age, education, and health status. This comparison is what allows us to isolate the effect of our exposure.

Probability sampling methods (like simple random, stratified, or cluster sampling) help ensure our findings aren’t just relevant to the specific people we studied, but can be generalized to the broader population. A crucial step is calculating the required sample size. This isn’t a guess; it’s a statistical calculation based on factors like the expected incidence of the outcome in the unexposed group, the smallest effect size (e.g., risk ratio) the study aims to detect, the desired statistical power (typically 80% or 90%), and the significance level (alpha, usually 0.05). Underpowered studies are a waste of resources, as they may fail to detect a real association that exists. This is why prospective cohorts often require hundreds, thousands, or even hundreds of thousands of participants.
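As a rough illustration of that calculation, the sketch below uses the standard normal-approximation formula for comparing two proportions with equal-sized exposed and unexposed groups. The function name and the example inputs are hypothetical, and the result ignores loss to follow-up and confounder adjustment, so treat it as a starting point rather than a final number.

```python
import math
from statistics import NormalDist

def cohort_sample_size(p_unexposed, risk_ratio, power=0.80, alpha=0.05):
    """Approximate participants needed PER GROUP to detect a given risk ratio."""
    p0 = p_unexposed
    p1 = p0 * risk_ratio  # expected cumulative incidence in the exposed group
    if not 0 < p1 < 1:
        raise ValueError("implied incidence in the exposed group must be between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p0 + p1) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p0 * (1 - p0) + p1 * (1 - p1))
    ) ** 2
    return math.ceil(numerator / (p1 - p0) ** 2)

# Hypothetical inputs: 5% incidence in the unexposed, smallest risk ratio of interest 1.5
n_per_group = cohort_sample_size(p_unexposed=0.05, risk_ratio=1.5)
print(n_per_group)
```

Lowering the expected incidence or the detectable risk ratio drives the required number up quickly, which is why rare outcomes push cohorts into the tens or hundreds of thousands.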

Data Collection and Follow-Up: The Longitudinal Engine

Once we’ve assembled our cohort, the real work begins. At baseline (day zero of our study), we collect comprehensive data on everything that might matter: exposure status, demographic information, lifestyle habits, medical history, and potential confounding variables. This can include self-reported data from validated questionnaires (e.g., food frequency questionnaires), objective physical measurements (blood pressure, BMI, spirometry), biological samples (blood, urine, tissue for genomic, proteomic, or metabolomic analysis), and linkage to external records (electronic health records, cancer registries, death certificates). This upfront effort pays massive dividends because we’re capturing information before anyone develops the outcome, which dramatically reduces recall bias.

Then comes the longitudinal follow-up—the heart of any prospective study. We track these same individuals forward in time, checking in at regular intervals (monthly, annually, or somewhere in between, depending on the outcome). Each follow-up captures new data: Has their exposure status changed? Have they developed the outcome? What other life changes might influence their risk?

The challenge that keeps researchers up at night? Loss to follow-up. People move, change phone numbers, lose interest, or pass away. When participants drop out, we lose valuable data and introduce attrition bias—the people who leave might be systematically different from those who stay, which can skew our results. To combat this, smart study teams employ a multi-pronged retention strategy. Beyond collecting multiple contact methods (home phone, mobile, email, a trusted friend or relative), successful cohorts build a sense of community. This can involve sending regular newsletters, birthday cards, providing personalized feedback on non-critical health metrics (with ethical approval), and creating participant advisory boards. Making follow-up as convenient as possible (e.g., online questionnaires, flexible appointment times) is also key. Studies like the Canadian Longitudinal Study on Aging and the UK Biobank have perfected these retention strategies, maintaining contact with vast numbers of participants over many years.

When attrition is unavoidable, researchers can use statistical techniques to mitigate its impact. Methods like inverse probability of censoring weighting (IPCW) can give more weight in the analysis to individuals who remain in the study but share characteristics with those who dropped out, helping to correct for potential attrition bias.
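The weighting idea can be shown with a deliberately simplified Python sketch. It assumes a single follow-up point and estimates the probability of staying in the study within strata of one baseline characteristic; real IPCW analyses typically model that probability with regression on many covariates, often updated over time. The field names, function name, and data are hypothetical.

```python
from collections import defaultdict

def ipc_weights(participants, stratum_key):
    """Weight each retained participant by 1 / P(retained | stratum)."""
    enrolled = defaultdict(int)
    retained = defaultdict(int)
    for p in participants:
        enrolled[p[stratum_key]] += 1
        if p["retained"]:
            retained[p[stratum_key]] += 1
    # Only retained participants get a weight, so retained[...] is never zero here
    return {
        p["id"]: enrolled[p[stratum_key]] / retained[p[stratum_key]]
        for p in participants
        if p["retained"]
    }

# Hypothetical cohort: half of the smokers dropped out, no non-smokers did
participants = [
    {"id": 1, "smoker": True, "retained": True, "outcome": True},
    {"id": 2, "smoker": True, "retained": True, "outcome": False},
    {"id": 3, "smoker": True, "retained": False, "outcome": None},
    {"id": 4, "smoker": True, "retained": False, "outcome": None},
    {"id": 5, "smoker": False, "retained": True, "outcome": False},
    {"id": 6, "smoker": False, "retained": True, "outcome": False},
    {"id": 7, "smoker": False, "retained": True, "outcome": True},
    {"id": 8, "smoker": False, "retained": True, "outcome": False},
]
weights = ipc_weights(participants, "smoker")
observed = [p for p in participants if p["retained"]]
naive_risk = sum(p["outcome"] for p in observed) / len(observed)
weighted_risk = (
    sum(weights[p["id"]] * p["outcome"] for p in observed)
    / sum(weights[p["id"]] for p in observed)
)
print(naive_risk, weighted_risk)
```

Each retained smoker stands in for two enrolled smokers, so the weighted cohort has the same composition as the one originally enrolled. The correction only works if dropout is explained by the characteristics used to build the weights.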

When is This Design the Right Choice?

Prospective cohort analysis isn’t the right tool for every research question, but when it fits, nothing else comes close. It’s your best bet when you’re investigating disease etiology—figuring out what actually causes health conditions, especially those that develop slowly over time. Want to understand how occupational exposures lead to respiratory disease twenty years down the line? Prospective cohort design is your answer.

Unlike case-control studies that zoom in on a single outcome, cohort studies let you explore multiple outcomes from one exposure. That coffee study? You could simultaneously track cognitive decline, cardiovascular events, cancer incidence, and mortality—all from the same cohort. This efficiency makes cohort studies incredibly valuable for understanding the full health impact of exposures.

The real superpower of prospective designs is establishing temporality. Because we measure exposure first and then watch for outcomes, we can confidently say the exposure preceded the disease. This temporal sequence is essential for making causal arguments. Did smoking cause lung cancer, or did early lung changes cause people to smoke more? Because exposure is recorded before the disease appears, the prospective design can rule out this kind of reverse causation far more convincingly than designs that reconstruct exposure afterward.

These studies are foundational in epidemiology and public health research. The National Cancer Institute’s article on The Power of Cohorts in Cancer Research highlights how these designs have uncovered complex relationships between genetics, environmental exposures, and cancer development—insights that would be nearly impossible to capture any other way.

For organizations working with large-scale biomedical data across multiple institutions, the challenge shifts from study design to execution. Modern prospective cohort analysis demands platforms that can harmonize diverse data sources, maintain participant privacy across jurisdictions, and enable real-time analytics without centralizing sensitive information—precisely the challenges federated analysis platforms are built to solve.

Prospective Cohorts: A Simple ROI Test to Avoid Multi-Million-Dollar Mistakes

Every research design comes with trade-offs. Prospective cohort analysis offers some of the strongest evidence in observational research, but it demands significant resources, patience, and strategic planning. Understanding both sides of this equation helps you decide when this design is worth the investment.

Key Advantages of Prospective Cohort Analysis

The real magic of prospective cohort analysis lies in its ability to watch events unfold in real time. Because you’re measuring exposure before anyone develops the outcome, you can confidently say that the exposure came first. This ability to establish temporality sets prospective cohorts apart from cross-sectional studies (where everything is measured at once) and case-control studies (where you’re reconstructing the past after the outcome has occurred).

Another powerful advantage is minimizing recall bias. When you collect exposure data at baseline, before anyone knows who will develop the disease, you largely avoid the problem of people remembering their past differently based on their health outcomes. In prospective studies, you’re recording behaviors and exposures as they happen, often through objective measurements rather than relying on memory.

This forward-looking approach also lets you calculate incidence rates directly, which is crucial for quantifying risk. There are two key measures of incidence:

- Cumulative Incidence (Risk): This is the proportion of people who develop the outcome during a specified time period. It’s calculated as: (Number of new cases) / (Number of disease-free individuals at the start). It’s a simple measure of average risk over that period.

- Incidence Rate (or Incidence Density): This is a true rate that measures how quickly new cases arise in the population, accounting for the fact that people are followed for different lengths of time. It’s calculated as: (Number of new cases) / (Total person-time at risk). Person-time is the sum of the time each participant remained disease-free and under observation.
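Here is a small Python sketch of both incidence measures, using a made-up five-person cohort. The function names and follow-up records are assumptions chosen only to keep the arithmetic easy to check.

```python
def cumulative_incidence(new_cases, at_risk_at_start):
    """Proportion of initially disease-free people who develop the outcome."""
    return new_cases / at_risk_at_start

def incidence_rate(new_cases, person_time):
    """New cases per unit of person-time at risk."""
    return new_cases / person_time

# Hypothetical records: (years observed while disease-free, developed the outcome?)
follow_up = [(5.0, False), (2.5, True), (5.0, False), (1.0, False), (4.0, True)]

cases = sum(1 for _, event in follow_up if event)
person_years = sum(years for years, _ in follow_up)

risk = cumulative_incidence(cases, len(follow_up))
rate = incidence_rate(cases, person_years)
print(f"risk = {risk:.2f}, rate = {rate:.3f} per person-year")
```

The participant observed for only one year contributes a full person to the cumulative incidence denominator but just one year to the person-time denominator, which is why the rate copes better with unequal follow-up.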

From these measures, you can calculate ratios that compare the exposed and unexposed groups. A Risk Ratio (Relative Risk) is the ratio of the cumulative incidence in the exposed group to that in the unexposed group. A Rate Ratio is the ratio of the incidence rates. A rate ratio of 5.2, for example, means the outcome occurs at 5.2 times the rate in the exposed group compared with the unexposed group. These concrete numbers give clinicians and policymakers actionable information.
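The two ratios can be computed as follows. This is a minimal sketch with invented counts (the second example is chosen so the rate ratio comes out at 5.2), and the confidence intervals use the common large-sample log-scale approximation rather than anything specific to a particular study.

```python
import math

def _log_scale_ci(estimate, se_log, z=1.96):
    """Approximate 95% confidence interval computed on the log scale."""
    return (
        math.exp(math.log(estimate) - z * se_log),
        math.exp(math.log(estimate) + z * se_log),
    )

def risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp):
    """Ratio of cumulative incidences, exposed vs. unexposed."""
    estimate = (cases_exp / n_exp) / (cases_unexp / n_unexp)
    se_log = math.sqrt(1 / cases_exp - 1 / n_exp + 1 / cases_unexp - 1 / n_unexp)
    return estimate, _log_scale_ci(estimate, se_log)

def rate_ratio(cases_exp, person_time_exp, cases_unexp, person_time_unexp):
    """Ratio of incidence rates, exposed vs. unexposed."""
    estimate = (cases_exp / person_time_exp) / (cases_unexp / person_time_unexp)
    se_log = math.sqrt(1 / cases_exp + 1 / cases_unexp)
    return estimate, _log_scale_ci(estimate, se_log)

# Hypothetical: 30 cases among 1,000 exposed vs. 10 cases among 1,000 unexposed
rr, rr_ci = risk_ratio(30, 1000, 10, 1000)
# Hypothetical: 26 cases in 5,000 person-years vs. 10 cases in 10,000 person-years
irr, irr_ci = rate_ratio(26, 5000, 10, 10000)
print(f"risk ratio = {rr:.1f}, rate ratio = {irr:.1f}")
```

An interval that excludes 1 suggests the difference between groups is unlikely to be due to chance alone, although it says nothing about confounding.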

One often-overlooked benefit is the ability to study multiple outcomes from a single exposure. Once you’ve invested in following a cohort, you can track not just one disease but several. The British Doctors Study, initiated in 1951, followed nearly 35,000 male British doctors and was one of the first studies to provide convincing statistical proof of the link between smoking and lung cancer. But it didn’t stop there; it also quantified the association between smoking and dozens of other outcomes, including heart disease, stroke, and various other cancers.

Major Limitations and Challenges

But let’s be honest: prospective cohort analysis isn’t easy, and it’s definitely not cheap. The high cost is usually the first obstacle. You’re recruiting potentially thousands of participants, tracking them for years, managing massive amounts of data, and maintaining regular contact. Personnel costs, data infrastructure, and lab analyses can be staggering.

The time-consuming nature compounds this challenge. If you’re studying a disease with a long latency period—like the relationship between a dietary pattern and cancer risk—you might wait 10, 20, or even 30 years for enough cases to analyze. This makes prospective cohorts unsuitable when you need answers quickly.

Perhaps the most frustrating limitation is that prospective cohorts are inefficient for rare diseases. Even if you follow 10,000 people for a decade, you might only see a handful of cases of a very rare condition, leaving you with insufficient statistical power. This is precisely when case-control studies shine.

Loss to follow-up introduces attrition bias. If the people who drop out differ systematically from those who stay (perhaps they’re sicker or have adopted healthier habits), your results become skewed. Maintaining high retention rates requires constant effort.

Then there’s the perpetual challenge of confounding variables. A confounder is a third factor that is associated with both the exposure and the outcome, is not itself a consequence of the exposure, and can distort the true relationship. For example, if you find that coffee drinkers have a higher rate of heart disease, the real cause might be that coffee drinkers are also more likely to smoke. Researchers must control for confounding through several methods:

- In the design phase:

  - Restriction: Limiting study participants to a group with a specific characteristic (e.g., only non-smokers) to eliminate that factor as a confounder. This can limit the generalizability of the findings.

  - Matching: For each exposed participant, selecting one or more unexposed participants with matching key characteristics (e.g., age, sex). This can be complex to implement.

- In the analysis phase:

  - Stratification: Analyzing the exposure-outcome relationship within different levels (strata) of the confounding variable (e.g., analyzing smokers and non-smokers separately).

  - Multivariable Modeling: Using statistical models like Cox proportional hazards regression to simultaneously adjust for many potential confounders, allowing you to estimate the independent effect of the exposure.
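To see stratification at work on the coffee-and-smoking example, the sketch below compares a crude risk ratio with a Mantel-Haenszel summary risk ratio pooled across smoking strata. The counts are invented so that coffee has no effect within either stratum, and the dictionary keys and function names are assumptions.

```python
def crude_risk_ratio(strata):
    """Risk ratio after collapsing all strata into a single table."""
    exp_cases = sum(s["exp_cases"] for s in strata)
    exp_n = sum(s["exp_n"] for s in strata)
    unexp_cases = sum(s["unexp_cases"] for s in strata)
    unexp_n = sum(s["unexp_n"] for s in strata)
    return (exp_cases / exp_n) / (unexp_cases / unexp_n)

def mantel_haenszel_risk_ratio(strata):
    """Summary risk ratio that weights each stratum-specific comparison."""
    numerator = sum(
        s["exp_cases"] * s["unexp_n"] / (s["exp_n"] + s["unexp_n"]) for s in strata
    )
    denominator = sum(
        s["unexp_cases"] * s["exp_n"] / (s["exp_n"] + s["unexp_n"]) for s in strata
    )
    return numerator / denominator

# Hypothetical heart disease counts; "exposed" means coffee drinker
strata = [
    # Smokers: 10% risk whether or not they drink coffee
    {"exp_cases": 80, "exp_n": 800, "unexp_cases": 20, "unexp_n": 200},
    # Non-smokers: 2% risk whether or not they drink coffee
    {"exp_cases": 4, "exp_n": 200, "unexp_cases": 16, "unexp_n": 800},
]
crude = crude_risk_ratio(strata)
adjusted = mantel_haenszel_risk_ratio(strata)
print(f"crude RR = {crude:.2f}, smoking-adjusted RR = {adjusted:.2f}")
```

In this toy data the crude comparison makes coffee look harmful only because most coffee drinkers smoke. Once smokers and non-smokers are compared separately, the apparent association disappears.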

Finally, information bias (or measurement error) can creep in. This systematic error in measuring exposure or outcome can be non-differential (the error is the same across study groups), which usually biases the results toward the null (making an association seem weaker), or differential (the error differs between groups), which is more serious as it can skew results in any direction. For example, if a researcher is aware of a participant’s exposure status, they might scrutinize them more closely for the outcome, leading to observer bias.
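The pull toward the null from non-differential error is easy to verify with expected counts. The sketch below assumes exposure is misclassified with the same sensitivity and specificity regardless of outcome. All numbers and the function name are hypothetical.

```python
def observed_risk_ratio(n_exp, risk_exp, n_unexp, risk_unexp, sensitivity, specificity):
    """Expected risk ratio when exposure is misclassified equally in cases and non-cases."""
    cases_exp = n_exp * risk_exp
    cases_unexp = n_unexp * risk_unexp
    # People and cases who end up LABELED as exposed
    labeled_exp_n = sensitivity * n_exp + (1 - specificity) * n_unexp
    labeled_exp_cases = sensitivity * cases_exp + (1 - specificity) * cases_unexp
    # People and cases who end up LABELED as unexposed
    labeled_unexp_n = (1 - sensitivity) * n_exp + specificity * n_unexp
    labeled_unexp_cases = (1 - sensitivity) * cases_exp + specificity * cases_unexp
    return (labeled_exp_cases / labeled_exp_n) / (labeled_unexp_cases / labeled_unexp_n)

# Hypothetical truth: 20% risk in 1,000 exposed vs. 10% risk in 1,000 unexposed
true_rr = observed_risk_ratio(1000, 0.20, 1000, 0.10, sensitivity=1.0, specificity=1.0)
# Same cohort measured with an imperfect exposure questionnaire
biased_rr = observed_risk_ratio(1000, 0.20, 1000, 0.10, sensitivity=0.8, specificity=0.9)
print(f"true RR = {true_rr:.2f}, observed RR = {biased_rr:.2f}")
```

With perfect measurement the function returns the true risk ratio. With imperfect but outcome-independent measurement the observed ratio sits closer to 1, which makes a real association look weaker than it is.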

Despite these challenges, when you’re tackling important questions about disease etiology, need to establish clear temporal relationships, or want to explore multiple health outcomes from a common exposure, prospective cohort analysis remains one of the most powerful tools in your research arsenal.