Beginner’s Guide to AI-Automated Airlocks

Analyze EHR and Genomics in Real Time Without Moving Data: Why You Need an AI-Automated Airlock Now

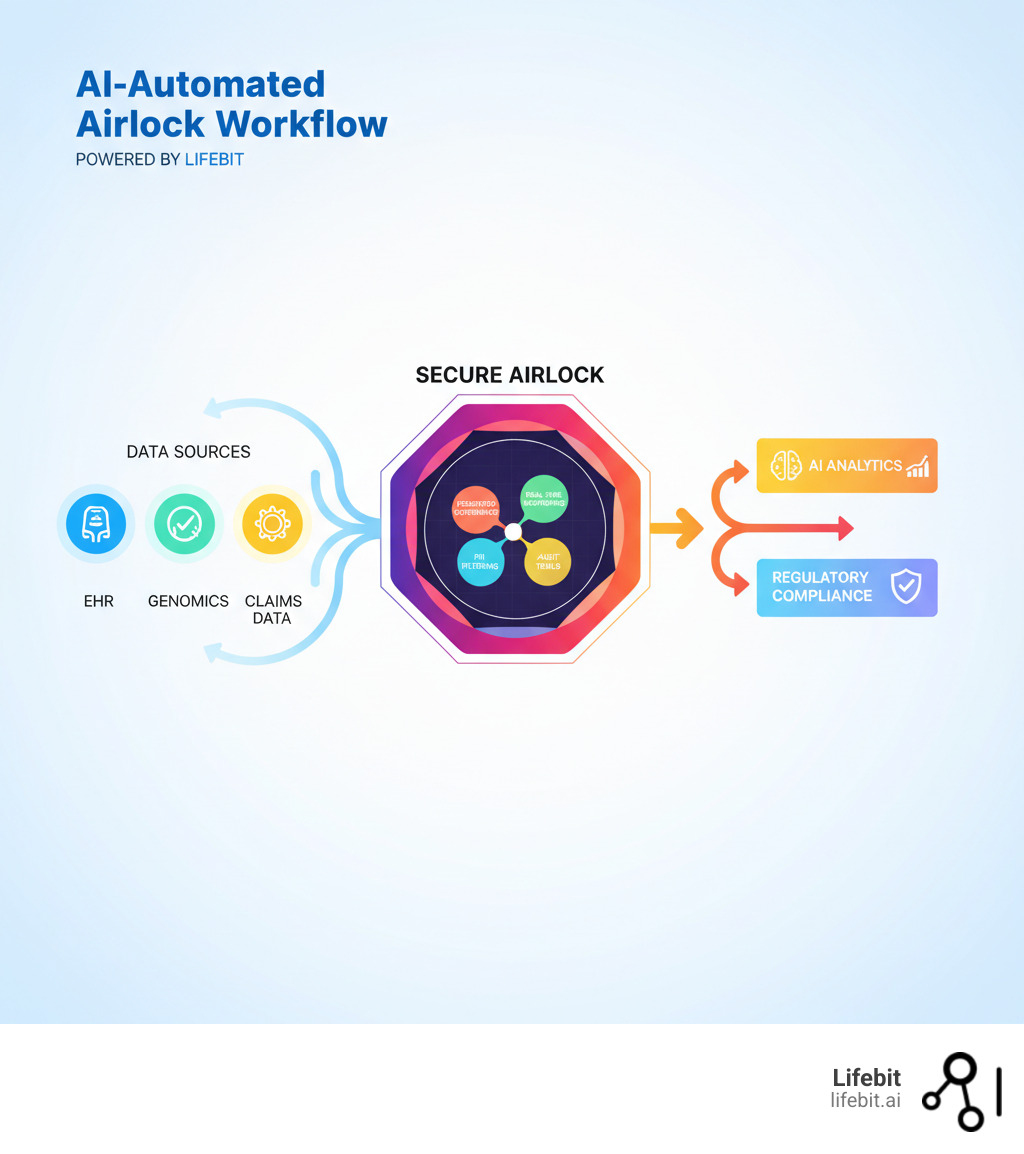

An AI-Automated Airlock is a secure gateway that enables controlled, compliant access to sensitive biomedical and multi-omic data for AI and machine learning analytics without exposing raw patient information. In healthcare, it acts as a critical intermediary that allows researchers, regulators, and pharma teams to analyze distributed datasets in real time while maintaining strict data governance, privacy, and security standards.

Key purposes of AI-Automated Airlocks:

- Secure Data Access: Enable AI models to analyze sensitive health data without transferring or exposing it

- Federated Governance: Support distributed analysis across multiple institutions while enforcing unified compliance policies

- Real-Time Analytics: Power pharmacovigilance, cohort analysis, and evidence generation without data movement

- Regulatory Compliance: Meet stringent requirements for data protection (such as GDPR and HIPAA) and clinical safety

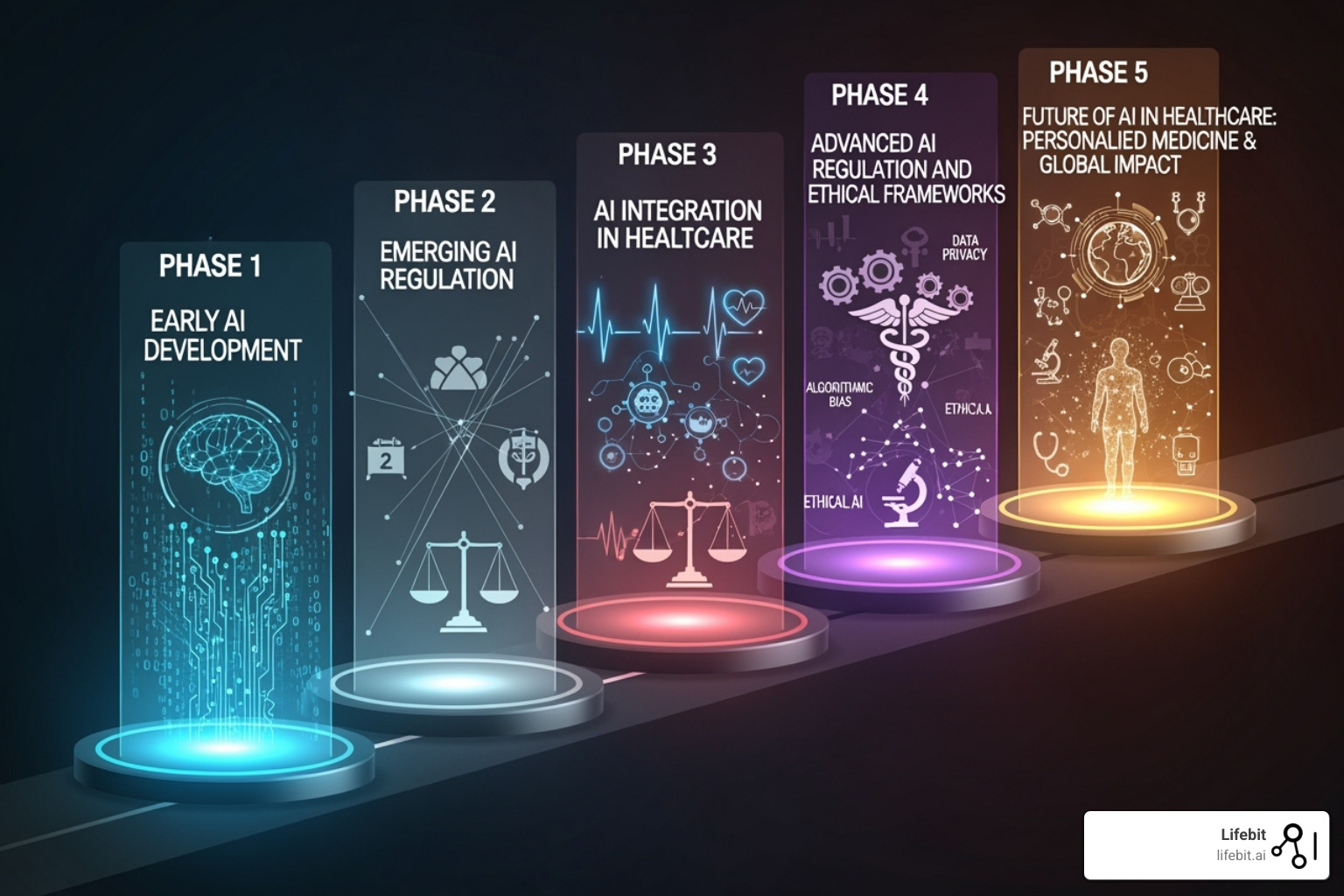

The term “AI Airlock” has also emerged in the UK regulatory context. In Spring 2024, the UK’s Medicines and Healthcare products Regulatory Agency (MHRA) launched the first AI Airlock regulatory sandbox, a controlled testing environment where AI as a Medical Device (AIaMD) products undergo real-world evaluation before reaching patients. This program addresses critical challenges like explainability, hallucinations in AI outputs, and post-market surveillance for continuously evolving AI models.

Both concepts share a common goal: creating secure, controlled environments where AI can be tested, monitored, and deployed safely in healthcare settings.

The stakes are high. Forty companies applied to the MHRA’s pilot program; only five were accepted, with four completing case studies. These tests revealed that existing regulatory frameworks struggle with AI’s unique characteristics: models that learn and change after deployment, synthetic data that lacks clear validation rules, and the silent risk of clinician over-reliance on AI recommendations.

For global pharma leaders, public health agencies, and regulatory bodies managing siloed EHR, claims, and genomics datasets, the challenge is twofold. First, how do you enable real-time, federated analytics across distributed data sources without compromising security or compliance? Second, how do you ensure that AI models, whether used for drug safety surveillance or clinical decision support, remain trustworthy as they evolve?

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent over 15 years building federated genomics and biomedical data platforms that power secure, compliant AI-Automated Airlock environments for precision medicine and drug discovery. In this guide, I’ll walk you through what AI-Automated Airlocks are, how they work, and why they’re essential for the future of healthcare AI.

MHRA AI Airlock: Test AIaMD in the Real World Before Patients—What You Need to Pass

How do you approve a medical AI that learns and changes after you’ve signed off on it? Traditional drug and device approvals don’t prepare regulators for algorithms that evolve in real time, potentially “hallucinate” false diagnoses, or develop biases you can’t see until they’ve already affected patients.

The UK decided to tackle this head-on. The Medicines and Healthcare products Regulatory Agency (MHRA) launched the AI Airlock, a regulatory sandbox specifically designed for AI as a Medical Device (AIaMD). Think of it as a controlled testing ground where AI meets real-world medicine under close supervision—before any patient is at risk.

This isn’t a typical approval process. The AI Airlock represents a fundamental shift from reactive compliance to proactive, collaborative oversight. Instead of waiting for problems to emerge after launch, regulators work alongside developers to identify and solve issues during development. It’s agile regulation for an agile technology. This approach mirrors the iterative cycles of modern software development, replacing the rigid, ‘waterfall’ style of traditional medical device certification with a dynamic process of continuous dialogue, testing, and adaptation. This is essential for a technology that is, by its very nature, not static.

The initiative brings together the MHRA, the NHS AI Lab, and the Department of Health and Social Care (DHSC) in a unique partnership, with each entity playing a critical role:

- The MHRA acts as the core regulatory authority, providing the legal framework, safety oversight, and ultimate decision-making power. Their primary goal within the Airlock is to learn how to evolve existing regulations to be fit-for-purpose for AI.

- The NHS AI Lab serves as the clinical implementation partner. It provides the crucial link to the real world, offering access to clinical expertise, anonymised data for testing, and the practical environment of the NHS. Their focus is ensuring that AI tools are not only safe but also clinically useful, effective, and capable of integrating into complex hospital workflows.

- The DHSC provides the high-level strategic direction, policy support, and funding. They ensure the AI Airlock initiative aligns with national healthcare priorities, such as improving diagnostic efficiency, reducing administrative burdens on clinicians, and ultimately delivering better patient outcomes.

This tripartite collaboration ensures that regulatory frameworks stay grounded in real clinical needs while keeping pace with rapid innovation. The question they’re asking isn’t just “Is this AI safe?” but “How do we build regulatory systems that can keep AI safe as it evolves?”

For those building AI-Automated Airlock platforms like ours at Lifebit, this regulatory evolution matters deeply. The technical infrastructure we create for secure, federated data access must align with how regulators think about AI safety and governance. The insights from the MHRA’s program are shaping what “secure by design” means in practice. You can explore the program’s objectives and early findings in this detailed post: Exploring AI in Healthcare: Insights from the AI Airlock Pilot.

The Pilot Program: From 40 Applicants to 4 Case Studies

The numbers tell a story of selectivity and rigor. When the pilot program was announced, forty companies applied. Only five made it through the initial screening. After running from September 2023 to April 2024, four pioneering companies completed full case studies.

This wasn’t about excluding good ideas. It was about focusing limited regulatory resources on the hardest problems—the ones that would teach regulators the most about AI’s unique challenges. Each company brought a different piece of the puzzle, from generative AI for clinical documentation to diagnostic algorithms, and together, they stress-tested the boundaries of current regulatory frameworks.

The pilot generated over 40 recommendations that will shape how medical AI gets approved and monitored in the UK and potentially worldwide. These weren’t theoretical; they came from real developers hitting real regulatory walls and working through them with regulator support. The trade-off for this intense scrutiny was direct access to regulatory expertise and the chance to help write the rules that will govern their industry. For a comprehensive look at what the pilot uncovered, the AI Airlock Sandbox Pilot report provides remarkable detail.

Core Mission: De-Risking AI Before It Reaches Patients

The core mission is simple: test AI in healthcare within controlled environments before patients are exposed to any risk. The AI Airlock uses a layered testing approach, moving AI products through simulation, virtual testing, and finally real-world clinical settings—each stage adding realism while maintaining strict safety controls. It’s like a flight simulator for medical AI, allowing developers and regulators to see how the system behaves under pressure without real-world consequences.

This represents a fundamental departure from traditional regulatory pathways, which were built for static devices. AI learns and adapts, and the AI Airlock acknowledges this reality by creating space for continuous learning and adaptation within the regulatory process itself. Regulators act as active participants, helping developers solve safety challenges during development, not after a product has launched. This proactive approach provides a roadmap for companies building the secure data access platforms, federated analytics systems, and real-time monitoring tools that will power the next generation of healthcare AI.

40 Regulator-Backed Fixes for Medical AI: Stop Hallucinations, Bias, and Drift Now

The AI Airlock pilot didn’t just test products—it stress-tested the entire regulatory framework. What emerged was clear: traditional medical device regulations simply weren’t built for AI’s unique nature.

Unlike a pacemaker, AI models aren’t static. They learn, evolve, and can confidently produce outputs that are completely wrong—what the industry calls “hallucinations.” These characteristics created a perfect storm of regulatory challenges that the pilot program tackled head-on.

The four case studies each wrestled with different aspects of this complexity. Through their experiences, the pilot generated over 40 concrete recommendations, revealing gaps in everything from explainability standards to post-market surveillance. Issues like data bias, model drift, and the silent risk of clinician over-reliance on AI recommendations came sharply into focus. It became evident that we can’t simply apply old rules to new technology; AI demands a fundamentally different approach to safety and oversight.

Addressing Explainability and ‘Hallucinations’

Accuracy matters, but trust matters more. Clinicians in the pilot often preferred a slightly less accurate AI if they could understand why it made a certain recommendation. This explainability challenge, also known as Explainable AI (XAI), runs deep. It’s not just about fostering trust; it’s a critical safety mechanism that allows clinicians to verify outputs, helps developers debug models, and provides a crucial tool for auditing and identifying hidden biases. When an AI flags a potential issue, doctors need to understand its reasoning to have confidence in the output. The pilot confirmed that one-size-fits-all explanations don’t work; they must be tailored to the clinical context and user. For example, some XAI techniques like LIME (Local Interpretable Model-agnostic Explanations) can highlight which pixels in a mammogram most influenced a model’s ‘malignant’ classification, while others like SHAP (SHapley Additive exPlanations) can quantify the impact of different lab results on a sepsis risk score, giving clinicians actionable insights.
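To make the SHAP idea concrete, here is a minimal sketch of exact Shapley attribution for a toy risk score. It does not use the SHAP library; it averages each feature's marginal contribution over every feature ordering, which is the definition SHAP approximates for larger models. The lab values, weights, and the `risk_score` function are hypothetical and chosen purely for illustration, not taken from any clinical model.

```python
from itertools import permutations

# Hypothetical lab values for one patient and for a reference "typical" patient.
patient = {"lactate": 4.0, "heart_rate": 120.0, "wbc": 15.0}
baseline = {"lactate": 1.0, "heart_rate": 80.0, "wbc": 8.0}

# Hypothetical weights for a toy additive score (not a clinical model).
WEIGHTS = {"lactate": 0.10, "heart_rate": 0.005, "wbc": 0.02}


def risk_score(values):
    """Toy risk score: a weighted sum of lab values."""
    return sum(WEIGHTS[name] * value for name, value in values.items())


def shapley_values(model, x, reference):
    """Exact Shapley values: average marginal contribution over all orderings."""
    names = list(x)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(reference)  # start from the reference patient
        previous = model(current)
        for name in order:
            current[name] = x[name]  # switch one feature to the patient's value
            score = model(current)
            totals[name] += score - previous
            previous = score
    return {name: total / len(orderings) for name, total in totals.items()}


attributions = shapley_values(risk_score, patient, baseline)
for name, value in sorted(attributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.3f}")
```

The attributions sum to the gap between the patient's score and the reference score, which is what lets a clinician read them as "how much each lab result moved the risk". Enumerating every ordering only works for a handful of features; real tools rely on approximations.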

Then there’s the hallucination problem, a critical risk for generative AI in healthcare. To combat this, participants tested adaptive AI tools guided by clinical protocols to keep outputs grounded in reality. This often involves a technique called Retrieval-Augmented Generation (RAG), where the AI is required to base its output on information retrieved from a curated, trusted knowledge base—such as the latest NICE guidelines or approved clinical trial data—drastically reducing the risk of fabricating information. The pilot also highlighted the need for clear validation rules for synthetic data. While synthetic data can help augment limited datasets and train models on rare conditions, it carries the risk of perpetuating hidden biases or failing to represent real-world edge cases. Regulators will demand robust validation to prove that synthetic data accurately reflects the statistical properties of the target population. One practical solution that emerged was traffic-lighting systems, which flag AI outputs by confidence level (red, yellow, green) to ensure human oversight remains central in high-stakes scenarios.
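A traffic-light flag of the kind described above can be sketched in a few lines. The thresholds (0.90 and 0.70) and the function names are assumptions made for this example; in practice they would be set and validated per clinical use case.

```python
def traffic_light(confidence, green_at=0.90, yellow_at=0.70):
    """Map a model confidence in [0, 1] to a red/yellow/green flag.

    The default thresholds are hypothetical, not values from the pilot.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= green_at:
        return "green"
    if confidence >= yellow_at:
        return "yellow"
    return "red"


def needs_human_review(confidence):
    """Anything that is not green is routed to a clinician."""
    return traffic_light(confidence) != "green"


labels = [traffic_light(c) for c in (0.97, 0.82, 0.40)]
print(labels)
```

Keeping the routing rule separate from the labelling rule makes it easy to tighten oversight (for example, reviewing every output in a high-stakes setting) without touching the thresholds.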

Reinventing Post-Market Surveillance for Evolving AI

Post-market surveillance proved to be the thorniest problem of all. Traditional medical devices are predictable; AI is not. Models can drift over time as they encounter new data, causing performance to shift. An AI designed to learn continuously is essentially a different device six months after deployment, a reality that current regulatory frameworks are not equipped to handle.

This is where the pilot identified the most urgent needs for change:

- Continuous Monitoring for Model Drift: Models degrade. Data drift occurs when the input data changes (e.g., a hospital gets a new brand of MRI scanner with different image properties). Concept drift occurs when the relationship between inputs and outputs changes (e.g., a new treatment protocol alters the typical progression of a disease). The pilot concluded that continuous, real-time monitoring of model performance against real-world clinical data must become the standard.

- Real-Time Observability Dashboards: To manage drift, regulators and developers need early warning systems. The recommendation is to build real-time dashboards that track key metrics like AI inference values, data quality, and statistical shifts in performance, allowing for proactive intervention before patient safety is compromised.

- Predetermined Change Control Plans (PCCPs): Borrowing from the FDA’s forward-thinking framework, the pilot emphasized the need for PCCPs. A PCCP is a ‘regulatory blueprint’ submitted by the developer before marketing the device. It details the specific, anticipated modifications the AI is designed to make, the methodology for implementing and validating those changes, and an assessment of their impact, all within a controlled and pre-approved plan. This allows AI to evolve without needing a full re-submission for every update.
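One common way to put a number on data drift is the Population Stability Index (PSI), which compares the distribution of a feature in live data against a reference window. The sketch below is an illustration under stated assumptions, not a method prescribed by the pilot: the bin count, the 0.25 alert level (a widely used rule of thumb), and the sample data are all hypothetical.

```python
import math


def population_stability_index(reference, live, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    if not reference or not live:
        raise ValueError("both samples must be non-empty")
    low, high = min(reference), max(reference)
    width = (high - low) / bins
    if width == 0:
        width = 1.0  # degenerate reference: everything falls in one bin

    def proportions(sample):
        counts = [0] * bins
        for value in sample:
            index = int((value - low) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(count / len(sample), 1e-6) for count in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


# Hypothetical image-intensity feature before and after a scanner change.
reference_window = [i / 100 for i in range(100)]
shifted_window = [0.5 + i / 200 for i in range(100)]

psi_stable = population_stability_index(reference_window, reference_window)
psi_shifted = population_stability_index(reference_window, shifted_window)
ALERT_LEVEL = 0.25  # rule-of-thumb threshold, an assumption for this sketch
print(psi_stable, psi_shifted > ALERT_LEVEL)
```

A dashboard would compute a score like this per feature on a schedule and raise an alert when it crosses the agreed level. Concept drift needs a different check, because it only shows up when predictions are compared against confirmed outcomes.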

The pilot also revealed that existing NHS incident reporting systems aren’t equipped to capture AI-specific issues. A clinician is unlikely to report ‘model drift’ as an adverse event. Likewise, the subtle influence of automation bias—the tendency for clinicians to over-rely on an automated system and lower their own vigilance—is a significant risk that current systems don’t track. For instance, a radiologist, accustomed to an AI tool that is 99% accurate, might subconsciously miss a rare or atypical fracture that the AI also fails to flag—a mistake a human alone might have caught. The bottom line is that we need AI-specific post-market surveillance guidance, and the AI Airlock has shown that a shift from static compliance to dynamic oversight is not only possible but necessary.

Run AI Where the Data Lives: How an AI-Automated Airlock Lets You Analyze EHR and Genomics Without Moving Data

While the MHRA’s AI Airlock tackles regulatory oversight, the term AI-Automated Airlock also describes a technical solution: a secure data gateway that is changing how we work with sensitive health information. The fundamental challenge is giving AI models access to the rich biomedical data they need without compromising patient privacy or violating compliance rules.

Traditional approaches require copying data to a central location, which is risky, expensive, and often legally restricted. An AI-Automated Airlock offers a smarter solution. It acts as an intelligent intermediary, allowing AI models to analyze sensitive data in real time without the raw information ever leaving its secure home. Imagine a physical airlock on a spacecraft; it lets you move between two environments without compromising either one. Our AI-Automated Airlock does exactly that for data, creating a controlled gateway where computation meets information safely.

Its core purposes are to enable secure data access, enforce federated governance across institutions, power real-time analytics without data movement, and ensure regulatory compliance with standards like GDPR. For organizations managing siloed health records, claims, and genomics data, this infrastructure is essential for generating timely insights while data stays protected.

The Federated Paradigm: A Revolution in Data Security and Collaboration

At Lifebit, our AI-Automated Airlock is central to our federated AI platform. It solves the problem of running sophisticated analytics on sensitive data that legally cannot move. The answer is to bring the computation to the data, not the other way around. This federated paradigm represents a fundamental shift away from outdated, high-risk centralized models.

- The Old Way (Centralized): Data from multiple sources (hospitals, research centers) is physically copied, transferred, and pooled into a single, massive central database. This approach is fraught with risk. It creates a single, high-value target for cyberattacks. The process of transferring data is expensive, time-consuming, and creates immense legal and compliance overhead, and it can conflict with cross-border transfer restrictions under GDPR in Europe or with country-specific regulations that forbid patient data from leaving national borders.

- The New Way (Federated): Instead of moving data, the AI models and analytical queries ‘travel’ to the data. The analysis runs locally within each data owner’s secure, private environment. The AI-Automated Airlock then acts as the control point, inspecting the results before they leave. Only privacy-preserving, aggregated insights—such as a statistical correlation, a model parameter, or an anomaly alert—are allowed to return to the researcher. No raw or patient-level data ever moves or is exposed. This approach is secure by design, inherently compliant with data residency laws, and enables global collaboration on a scale that was previously impossible.
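The federated flow described above can be sketched as three steps: run the query locally, let the airlock check the result, and pool only what was released. Everything here is a simplified illustration rather than Lifebit's implementation; the site names, the `MIN_COHORT_SIZE` threshold, and the record layout are hypothetical.

```python
MIN_COHORT_SIZE = 10  # hypothetical disclosure threshold for small cohorts
ALLOWED_KEYS = {"n", "events"}  # only these aggregate fields may leave a site


def make_records(n_patients, n_events):
    """Build synthetic patient-level records for the example."""
    return [{"adverse_event": i < n_events} for i in range(n_patients)]


# Patient-level data stays inside each site's own environment.
SITES = {
    "hospital_a": make_records(120, 6),
    "hospital_b": make_records(80, 2),
    "clinic_c": make_records(4, 1),
}


def run_local_query(records):
    """Runs where the data lives and returns aggregates only."""
    return {"n": len(records), "events": sum(r["adverse_event"] for r in records)}


def airlock_release(result):
    """Release a result only if it is aggregate-only and covers enough patients."""
    if set(result) != ALLOWED_KEYS:
        return None  # unexpected fields could carry patient-level data
    if result["n"] < MIN_COHORT_SIZE:
        return None  # small cohorts risk re-identification
    return result


released = {}
for site, records in SITES.items():
    checked = airlock_release(run_local_query(records))
    if checked is not None:
        released[site] = checked

total_n = sum(r["n"] for r in released.values())
total_events = sum(r["events"] for r in released.values())
pooled_rate = total_events / total_n
print(sorted(released), total_n, pooled_rate)
```

The researcher sees only the pooled counts. The four-patient clinic is suppressed at the airlock, so its contribution never leaves the site.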

Our platform enables this through a suite of integrated components:

- Trusted Research Environment (TRE): This is the highly secure, locked-down virtual workspace where analysis occurs. Approved researchers are granted access to the TRE, but they cannot download, copy, or export raw data. Every action is logged in an immutable audit trail. The AI-Automated Airlock functions as the secure exit point for this environment, ensuring only permissible results get out.

- Trusted Data Lakehouse (TDL): This is the advanced storage and management layer that sits within the secure perimeter. It combines the scalability of a data lake (capable of handling raw, unstructured data like genomic sequences or medical images) with the powerful management and query features of a data warehouse (for structured data like EHRs). Crucially, the TDL is where data harmonization occurs, transforming disparate source formats (e.g., HL7 v2 messages or FHIR resources) into a consistent model, such as the OMOP Common Data Model, that AI can readily use.

- Real-time Evidence & Analytics Layer (R.E.A.L.): This is the ‘intelligence engine’ that sits on top of the federated network. It’s designed for continuous, operational analytics, not just one-off research projects. For example, a pharmaceutical company could use R.E.A.L. to continuously monitor a cohort of patients on a new drug across multiple hospitals globally, receiving privacy-preserving alerts on efficacy trends or potential safety signals in near-real-time—a pharmacovigilance process that used to take months or years.
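The audit trail mentioned for the TRE can be approximated with a hash chain, where each entry stores the hash of the one before it. This is a minimal sketch with hypothetical field names, not a description of any particular product. It also makes tampering detectable rather than impossible, so a real system would store the chain somewhere the writer cannot rewrite.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Deterministic SHA-256 over the entry's fields."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(log, actor, action):
    """Append an entry that commits to the previous entry's hash."""
    previous_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "prev_hash": previous_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Return True only if every entry is intact and correctly linked."""
    previous_hash = GENESIS_HASH
    for entry in log:
        body = {key: entry[key] for key in ("actor", "action", "prev_hash")}
        if entry["prev_hash"] != previous_hash or entry["hash"] != _digest(body):
            return False
        previous_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "researcher_17", "ran cohort query")
append_entry(audit_log, "airlock", "released aggregate result")
print(verify(audit_log))
```

Editing any earlier entry changes its hash, which breaks the link stored in the next entry, so `verify` fails from that point on.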

Key Features of an AI-Automated Airlock Platform

An effective AI-Automated Airlock platform must make working with sensitive health data simpler, safer, and more transparent. Key capabilities include:

- Unified Data Access & Harmonization: Provides a single interface for working with diverse AI models and distributed data sources (EHR, genomics, claims). It automatically transforms disparate information into consistent formats (e.g., the OMOP Common Data Model) that AI models can use, dramatically reducing research timelines from months to days.

- Integrated Security & Governance: Implements a zero-trust architecture where no user or system is trusted by default. It actively filters personally identifiable information, blocks malicious code, and enforces policy-driven controls. Advanced techniques like differential privacy can be applied to add calibrated random noise to results, which limits how much any aggregated output can reveal about a single individual. Role-based permissions ensure users only access data relevant to their approved purpose.

- Full Observability & Audit Trails: Creates an unbroken, immutable record of every interaction—who accessed what data, which AI model ran, and what the results were. This granular auditability is critical for regulatory compliance (e.g., GxP in pharma development) and for debugging and improving models over time.

- Scalability, Performance & Cost Management: Built on a cloud-native architecture, the system can scale seamlessly from small research projects to massive, multi-national programs involving petabytes of data. It provides central control over resource usage and expenses, ensuring projects run efficiently and within budget.

These features work together to create an environment where innovation can happen safely. AI models get the data they need to improve patient outcomes, while privacy, security, and compliance remain uncompromised.
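As an illustration of the differential privacy point, the sketch below applies the Laplace mechanism to a count query. A count changes by at most 1 when one patient is added or removed, so the noise scale is 1/epsilon. The function name and the epsilon value are assumptions made for the example, and this floating-point version is for explanation only; production systems should use a vetted differential privacy library.

```python
import random


def dp_count(true_count, epsilon, rng):
    """Return a count with Laplace noise calibrated to sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centred on zero with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)  # fixed seed so the example is repeatable
noisy = dp_count(128, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means more accurate results and weaker privacy. Choosing it is a governance decision as much as a technical one.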

Phase 2: 7 AI Tools Under Scrutiny—What the UK AI Airlock Will Demand Next

The MHRA AI Airlock isn’t a one-off project. It’s the beginning of a fundamental shift in how we regulate AI in healthcare. The pilot program proved that regulatory sandboxes can work, and now the real work begins: scaling those lessons into a dynamic framework that keeps pace with AI’s rapid evolution.

Phase 2 is already underway, running through the 2024-2025 financial year. This expansion represents a deeper commitment to understanding AI’s complexities, building on the pilot’s foundation to tackle even more challenging regulatory questions. The broader goal is to create a regulatory environment where innovation thrives without compromising patient safety. By fostering collaboration among regulators, healthcare providers, and developers, the AI Airlock is charting a course toward safer, more effective AI deployment.

What Technologies Are Being Tested Next?

Phase 2 casts a wider net, bringing seven additional AI technologies into the fold. These are practical solutions addressing real bottlenecks in healthcare delivery, and each presents its own regulatory hurdles. The areas covered include:

- AI-powered clinical note taking: The challenge here is not just transcription accuracy but semantic understanding. How does a regulator certify an AI that summarizes a complex patient-doctor conversation? The risk of ‘hallucinating’ a symptom or omitting a critical detail is significant. Validation requires new methods beyond simple word-error rates, focusing on clinical content fidelity and the system’s safeguards against generating false information.

- Advanced cancer diagnostics: For these tools, the primary regulatory hurdle is managing model drift and ensuring equitable performance. A model trained on images from one hospital’s scanners may perform differently on images from another. Furthermore, how does the model adapt to new discoveries in cancer pathology without requiring a full re-certification each time? This is where robust PCCPs become essential.

- Eye disease detection tools: The key concern is data bias. Many genetic eye conditions have different prevalence and presentation across ethnic groups. A model trained predominantly on one population may fail catastrophically on another. Regulators will demand rigorous proof of fairness and evidence that the training data is representative of the entire target patient population.

- Obesity treatment support systems: These systems, often involving continuous feedback loops with the patient via an app, present a unique challenge for long-term monitoring. How do you measure the safety and efficacy of an AI that provides adaptive lifestyle advice over years? The regulatory focus will be on the robustness of the underlying behavioral science, the safety of the recommendations, and the mechanisms for detecting and reporting adverse outcomes over a long time horizon.

Each of these technologies will further test the regulatory frameworks for evolving AI, post-market surveillance, and the practical implementation of Predetermined Change Control Plans. For those wanting to dig deeper, the MHRA hosted a comprehensive webinar covering the pilot results and Phase 2 plans: Review the AI Airlock webinar — Pilot and Phase 2, which took place on 19 June 2024.

Global Impact and Recommendations for AI Developers

The AI Airlock isn’t just reshaping UK regulation—it’s sending ripples across the global healthcare AI landscape. Its practical, evidence-based approach is providing valuable insights for other major regulatory bodies, such as the FDA in the US and agencies within the EU. The MHRA’s findings on PCCPs and real-world performance monitoring, for example, provide a practical template that could inform the FDA’s ‘Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan’ and the implementation of the EU’s AI Act for high-risk medical devices.

For developers, the message is unmistakable: the old playbook no longer works. The key takeaways are:

- Dynamic compliance is the new reality. AI systems require continuous post-market surveillance with built-in monitoring for drift and performance degradation.

- Explainability must be baked in from day one. It is no longer an optional feature but a core safety requirement.

- Rigorous validation for synthetic data is now essential. Developers must be prepared to prove their synthetic data is a fair and accurate representation of reality.

- Human-AI interaction design is a critical frontier. Mitigating automation bias and ensuring clinicians remain in control requires thoughtful design and user training.

Perhaps most importantly, collaboration with regulators is no longer optional. Developers who proactively engage with initiatives like the AI Airlock will not only de-risk their products but also help shape future guidance, positioning themselves ahead of the curve. The UK MHRA is actively building a global network to foster international collaboration toward harmonized regulation, which promises safer AI deployment, faster innovation, and better patient outcomes worldwide.

Platforms like ours at Lifebit, with our AI-Automated Airlock capabilities, provide the federated infrastructure essential for creating these secure research environments, enabling the very innovation the regulatory AI Airlock seeks to govern safely.

Bottom Line: Ship Safer Medical AI with an AI-Automated Airlock, or Get Left Behind

We stand at a pivotal moment in healthcare. The integration of AI into medicine isn’t a distant future; it’s happening now, and the decisions we make today will shape patient care for generations to come.

The UK’s AI Airlock has shown us something remarkable: that proactive, collaborative regulation can keep pace with rapid technological advancement. From explainability challenges to the thorny problem of AI hallucinations, from post-market surveillance to the subtle dangers of automation bias, the pilot program has tackled head-on what many thought were unsolvable regulatory puzzles. The journey from 40 applicants to four groundbreaking case studies has generated over 40 recommendations that will reshape how we think about medical AI oversight.

But regulatory frameworks alone aren’t enough. We also need the technical infrastructure to support safe, compliant AI innovation at scale.

This is where the concept of an AI-Automated Airlock becomes transformative. By enabling secure, federated access to sensitive biomedical data without ever exposing raw patient information, we can open up the full potential of AI-driven discovery while maintaining the highest standards of privacy and compliance. Real-time analytics, continuous monitoring, and federated governance aren’t just technical features; they’re the foundation of trust that patients, clinicians, and regulators need.

Both approaches, regulatory oversight and secure technical infrastructure, must work in harmony. The MHRA’s sandbox provides the testing ground and regulatory learning; platforms like the one offered by Lifebit provide the federated infrastructure essential for creating these secure, compliant research environments, enabling the very innovation the AI Airlock seeks to regulate safely. Together, they create an ecosystem where innovation and safety aren’t competing priorities, but complementary goals.

The path forward is clear. We need AI that explains its reasoning. We need continuous surveillance that catches problems before they reach patients. We need secure data environments that enable collaboration without compromise. Most importantly, we need to build trust with every algorithm, every test, and every deployment.

At Lifebit, we’re committed to this vision. We believe that trustworthy AI in medicine isn’t just possible; it’s within reach. The technology exists. The regulatory frameworks are evolving. What we need now is the collective will to put patient safety and data security at the heart of every innovation.

The future of healthcare AI is being built today, one secure airlock at a time.