The Future is Now: Top AI Solutions for Pharmacovigilance

90% of Adverse Drug Events Go Unreported. Here’s How AI Fixes It.

AI-driven pharmacovigilance solutions are changing how pharmaceutical companies, regulatory agencies, and public health organizations detect, assess, and prevent adverse drug reactions. Here’s what you need to know:

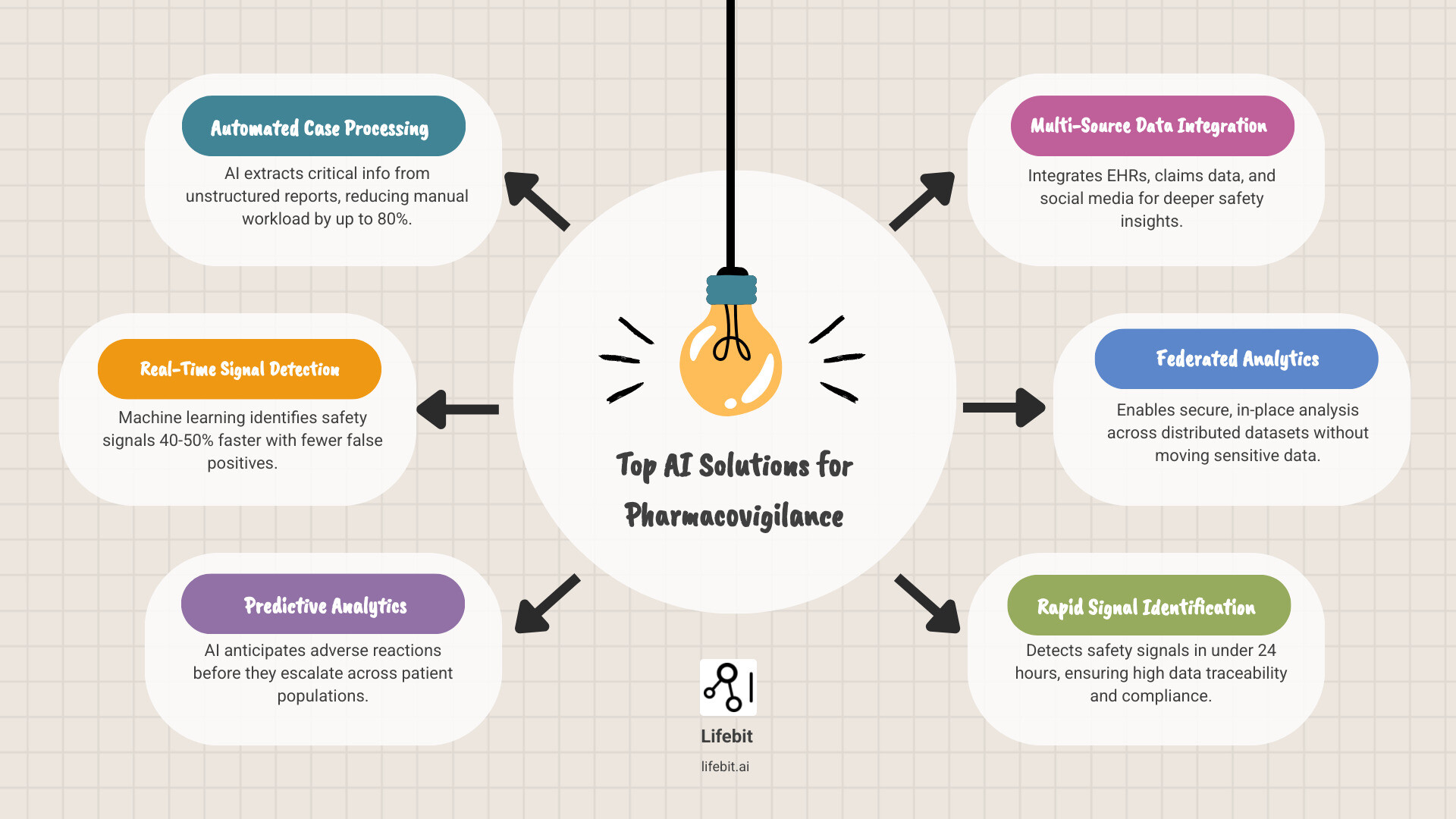

Top AI Solutions for Pharmacovigilance:

- Automated Case Processing – AI extracts critical information from unstructured reports, reducing manual workload by up to 80%

- Real-Time Signal Detection – Machine learning speeds up signal evaluation and can cut false-positive signals by 40-50%

- Predictive Analytics – AI anticipates adverse reactions before they escalate across patient populations

- Multi-Source Data Integration – Platforms like the FDA’s Sentinel Initiative analyze electronic health records, claims data, and social media

- Federated Analytics – Secure, in-place analysis across distributed datasets without moving sensitive patient data

The statistics are stark: over 90% of adverse drug events go unreported through official channels, while case processing consumes up to two-thirds of a company’s pharmacovigilance budget. With 15-day reporting deadlines for serious reactions in many jurisdictions, the pressure is immense.

Traditional pharmacovigilance methods can’t keep pace. The exponential growth in data—from electronic health records to social media posts—has created a data tsunami that manual review was never designed to handle. Less than 5% of adverse events reach official reporting channels, while the vast majority hide in free-text emails, phone calls, and online conversations.

Artificial intelligence is the game-changer. AI transforms drug safety from reactive to proactive by using natural language processing to understand unstructured text and machine learning to spot hidden patterns. It’s not just about speed; it’s a fundamental shift in detection, analysis, and response.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent over 15 years building federated data platforms that enable secure, real-time analysis across siloed healthcare datasets. Our work with public sector institutions and pharmaceutical organizations has shown us how AI-driven pharmacovigilance solutions can detect safety signals in under 24 hours while maintaining 100% data traceability and compliance. The future of drug safety isn’t about working harder; it’s about working smarter with AI that respects data privacy while delivering unprecedented insights.

Cut Case Processing Time by 80%? How AI Transforms PV Workflows

Pharmacovigilance (PV), as defined by the European Medicines Agency (EMA), is the science and activities relating to the detection, assessment, understanding, and prevention of adverse effects or any other medicine-related problem. Traditional PV systems are overwhelmed by the volume and variety of data from sources like electronic health records (EHRs), literature, and social media. AI steps in to manage this “data tsunami,” improving efficiency and consistency while reducing costs.

Automating Case Intake and Processing

One of the most impactful applications of AI in pharmacovigilance is automating case intake and processing. This has traditionally been a highly manual, labor-intensive process where PV specialists spend the majority of their time on data entry and quality checks rather than on scientific analysis.

AI significantly reduces the manual workload in data collection and entry, improving checks for completeness and validity. AI platforms, for example, have drastically cut processing times, with some companies reducing case processing from days to hours. This efficiency gain is crucial when facing exponential growth in adverse event (AE) reports, driven by new communication channels and larger patient populations.

AI-driven data extraction methods streamline individual case safety report (ICSR) processing by accurately extracting essential elements such as patient details, adverse reactions, and medication information. This is powered by Natural Language Processing (NLP), a cornerstone AI technology for PV. Since most safety data is unstructured text—like physician notes, patient emails, or social media posts—NLP enables AI to read and interpret this text at scale, automatically extracting relevant information.

Specifically, NLP models use techniques like Named Entity Recognition (NER) to identify and classify key terms, such as drug names, dosages, symptoms (coded to MedDRA terminology), and patient demographics. Following this, Relation Extraction models determine the relationships between these entities—for instance, confirming that a specific drug caused a particular adverse event, rather than just being mentioned in the same report. This structured output can then be used to auto-populate safety databases, minimizing human error and freeing up experts for verification and analysis.

Consider the variability in reporting formats: a patient might describe an adverse event in a free-text email, a doctor in a structured EHR note, or a researcher in a scientific publication. AI, particularly advanced NLP models, can handle this variability. By leveraging robust algorithms, these systems can parse diverse textual inputs, normalize terminology (e.g., recognizing that “Tylenol” and “acetaminophen” refer to the same substance), and extract the necessary data points, overcoming a significant hurdle for PV teams. Our goal is to ensure that critical information, regardless of its original format, is captured accurately and efficiently.
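The normalization step can be illustrated with a deliberately small sketch. It assumes a hand-written synonym table and a hypothetical helper named `normalize_drug_mentions`; neither comes from a real coding dictionary, and a production system would rely on curated vocabularies and trained NLP models rather than a regular expression.

```python
import re

# Hypothetical synonym table: brand or informal name -> normalized substance name.
DRUG_SYNONYMS = {
    "tylenol": "acetaminophen",
    "paracetamol": "acetaminophen",
    "acetaminophen": "acetaminophen",
    "advil": "ibuprofen",
    "ibuprofen": "ibuprofen",
}

# One case-insensitive pattern that matches any known name as a whole word.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, DRUG_SYNONYMS)) + r")\b",
    flags=re.IGNORECASE,
)


def normalize_drug_mentions(text: str) -> list[str]:
    """Return the normalized substances mentioned in text, in first-seen order."""
    found: list[str] = []
    for match in _PATTERN.finditer(text):
        substance = DRUG_SYNONYMS[match.group(1).lower()]
        if substance not in found:
            found.append(substance)
    return found


report = "Patient took Tylenol, then acetaminophen again, and later Advil."
print(normalize_drug_mentions(report))
```

Because both “Tylenol” and “acetaminophen” map to the same key, the report above yields one entry for that substance instead of two, which is the behavior a safety database needs before counting cases.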

Enhancing Signal Detection and Surveillance

Beyond case processing, AI greatly improves signal detection, surveillance, and adverse drug reaction (ADR) reporting automation. AI techniques like data mining and automated signal detection have expedited safety signal identification, moving the field from a reactive to a proactive stance.

AI handles the enormous volume and variety of data sources, enabling continuous, automated surveillance. Traditional signal detection relies on disproportionality analysis (DPA), using statistical measures like Proportional Reporting Ratios (PRR) or Reporting Odds Ratios (ROR) to find drug-event pairs that are reported more frequently than expected. While useful, these methods are prone to false positives, can be slow to detect signals for new drugs, and struggle to identify complex patterns, such as interactions between multiple drugs or risks confined to specific patient subgroups (e.g., those with a particular comorbidity).
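To make the disproportionality measures concrete, here is a minimal sketch of PRR and ROR computed from a 2x2 table of report counts. The variable names `a`, `b`, `c`, `d` and the example counts are assumptions chosen for illustration; they are not drawn from any real safety database.

```python
def prr(a: int, b: int, c: int, d: int) -> float:
    """Proportional Reporting Ratio.

    a: reports with the drug and the event
    b: reports with the drug, without the event
    c: reports with other drugs and the event
    d: reports with other drugs, without the event
    """
    return (a / (a + b)) / (c / (c + d))


def ror(a: int, b: int, c: int, d: int) -> float:
    """Reporting Odds Ratio for the same 2x2 table."""
    return (a * d) / (b * c)


# Hypothetical counts for one drug-event pair.
a, b, c, d = 20, 980, 100, 98_900
print(f"PRR = {prr(a, b, c, d):.2f}")
print(f"ROR = {ror(a, b, c, d):.2f}")
```

Both measures compare how often the event appears with the drug against how often it appears with everything else. Small values of `a` make the ratios unstable, which is one reason these methods generate false positives and are slow for newly launched drugs.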

AI-driven signal detection platforms use more sophisticated pattern recognition. Machine learning algorithms can analyze thousands of variables simultaneously, uncovering subtle correlations that DPA would miss. For instance, leading AI-driven signal detection platforms have been shown to accelerate signal evaluation by approximately 80% and achieve a 40-50% reduction in false positive signals. This allows PV professionals to focus their limited time and resources on investigating genuine safety concerns rather than chasing statistical noise.

We can also leverage AI to monitor social media, forums, and other online channels for pharmacovigilance signals. Patients often share their experiences on platforms like Twitter or health forums using informal language, slang, or abbreviations. AI agents equipped with specialized NLP models can decode this informal language to identify potential ADRs, providing a real-world “patient voice” that traditional systems often miss. Similarly, AI significantly improves medical literature monitoring by automatically scanning thousands of publications for mentions of a drug and associated adverse reactions, flagging relevant articles for expert review.

Revolutionizing Causality Assessment

Causality assessment—determining if a drug caused an adverse event—is one of PV’s biggest challenges. Traditional methods, such as the Naranjo scale or expert opinion, are often slow, subjective, and can lead to inconsistent assessments between different evaluators.

AI-driven approaches, particularly Bayesian networks, are changing this process. These are probabilistic graphical models that represent variables and their conditional dependencies. In PV, a Bayesian network can model the relationship between a drug, a patient’s clinical characteristics, and an adverse event. It provides a quantitative score for the likelihood of a causal relationship, integrating evidence from multiple sources—the case report itself, pharmacological knowledge, and data from similar cases. This reduces subjectivity and ensures more consistent, evidence-based assessments.
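The idea of turning evidence into a quantitative causality score can be sketched with a plain Bayes update in odds form. This is far simpler than a full Bayesian network (it treats each finding as independent), and the prior and likelihood ratios below are hypothetical values chosen only to show the arithmetic.

```python
def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior probability of causation with independent likelihood ratios."""
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


# Hypothetical evidence. Each value is
# P(finding | drug caused the event) / P(finding | drug did not cause it).
evidence = {
    "plausible time to onset": 3.0,
    "positive dechallenge": 4.0,
    "alternative cause present": 0.5,
}

p = posterior_probability(prior=0.10, likelihood_ratios=list(evidence.values()))
print(f"Posterior probability of causation: {p:.2f}")
```

Findings with a ratio above 1 push the score toward causation and those below 1 pull it away, so two evaluators given the same inputs arrive at the same number. A real network would also model dependencies between findings instead of multiplying them independently.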

For example, the Porto Pharmacovigilance Centre implemented an expert-defined Bayesian network that reduced causality assessment times from days to hours. The system showed high concordance with clinical evaluators (over 87% for ‘Probable’ causality). This demonstrates how AI can augment human expertise. These models are not static; they can be continuously updated with new cases, allowing them to learn and improve their accuracy over time, further reducing bias and strengthening the reliability of the assessment.

Predict Adverse Reactions Before They Happen: AI, RWE, and Proactive Safety

Generating Actionable Real-World Evidence (RWE)

The true power of AI-driven pharmacovigilance solutions emerges when we integrate diverse data sources to generate real-world evidence. This is about connecting the dots between electronic health records (EHRs), insurance claims, clinical narratives, patient-generated data from wearables, and even genomic data to understand how drugs perform in the real world.

Clinical trials use controlled environments and select, often healthier, patient populations. Real-world evidence captures the complexity of how drugs perform in diverse, real-world populations with multiple health conditions (comorbidities) and concurrent medications, revealing safety patterns that trials inevitably miss. AI is the engine that makes generating RWE at scale feasible.

The FDA’s Sentinel Initiative exemplifies this approach. This system uses automated algorithms to proactively monitor medical product safety across massive healthcare databases. By analyzing EHRs and insurance claims in real-time, Sentinel can identify potential safety signals as they emerge in actual patient populations. This represents a shift from waiting for reports to actively seeking out signals where they occur. To manage this data diversity, initiatives often rely on a Common Data Model (CDM), such as the OMOP CDM, which standardizes the format and terminology of disparate datasets. This allows analytical tools to be run consistently across different data sources, a critical step for scalable RWE generation.

AI makes this possible by fusing multiple data types. Machine learning models can simultaneously analyze structured data like lab results and diagnosis codes alongside unstructured clinical notes and patient-reported outcomes. The result is a comprehensive, longitudinal view of drug safety and efficacy across diverse patient populations that is impossible to achieve manually.

Predictive Modeling for Proactive Safety

Predictive models are the future of PV, allowing us to anticipate adverse drug reactions (ADRs) and drug-drug interactions before they happen. This shifts the focus from reacting to safety issues to proactively identifying high-risk individuals for early intervention.

These models analyze complex patterns across patient demographics, genetics, comorbidities, concomitant medications, and pharmacology to forecast safety risks. For example, machine learning models like Random Forests, Gradient Boosting Machines, and Deep Neural Networks have achieved accuracy rates of over 88% in predicting ADRs in older inpatients. A provider could use such a model to see that a patient with a specific genetic marker, taking drug X, and having elevated creatinine levels is at a 90% higher risk of kidney injury. This allows them to adjust the prescription, increase monitoring, or choose an alternative treatment for that patient.
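As a rough illustration of how patient features combine into a risk estimate, the sketch below uses a logistic model, which is a simpler technique than the Random Forests or neural networks mentioned above. The coefficients, feature names, and intercept are entirely hypothetical and not fitted to any data.

```python
import math

# Hypothetical coefficients for illustration only; not fitted to any data.
INTERCEPT = -3.0
COEFFICIENTS = {
    "has_genetic_marker": 1.2,
    "on_drug_x": 0.8,
    "elevated_creatinine": 1.0,
}


def predicted_risk(features: dict[str, bool]) -> float:
    """Logistic model: risk = 1 / (1 + exp(-(intercept + sum of active coefficients)))."""
    score = INTERCEPT + sum(
        weight for name, weight in COEFFICIENTS.items() if features.get(name, False)
    )
    return 1.0 / (1.0 + math.exp(-score))


baseline = predicted_risk({})
high_risk = predicted_risk(
    {"has_genetic_marker": True, "on_drug_x": True, "elevated_creatinine": True}
)
print(f"baseline risk:  {baseline:.3f}")
print(f"high-risk case: {high_risk:.3f}")
```

The gap between the two printed values is the kind of signal a provider could act on. In practice the coefficients would be learned from validated data and the model would need the explainability and monitoring discussed elsewhere in this article.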

The advantages of predictive models extend beyond individual patient care. They enable pharmaceutical companies and regulators to spot emerging safety patterns across entire populations, identifying vulnerable subgroups that may require labeling changes or risk mitigation strategies. This moves us from reactive pharmacovigilance to proactive, personalized patient protection.

However, many advanced AI models are “black boxes,” delivering predictions without explaining their reasoning. This opacity is a major hurdle in a field where regulatory scrutiny and human safety are paramount. Experts need to understand the ‘why’ behind an AI’s conclusion, not just accept it on faith. That’s why continuous model validation and the development of Explainable AI (XAI) solutions—systems that can articulate their reasoning in human-understandable terms—are critical for building trust and meeting regulatory expectations. Furthermore, models must be monitored for “model drift,” where performance degrades over time as real-world data patterns change, requiring periodic retraining and revalidation.

Key Considerations for Selecting AI-Driven Pharmacovigilance Solutions

Choosing the right AI solution requires careful evaluation. Here are the key factors:

- Data Extraction and NLP: The solution must handle unstructured text from diverse sources like patient emails, call center notes, social media, and scientific literature. Its NLP capabilities should be robust enough to understand context, normalize medical terminology (e.g., to MedDRA), and handle multiple languages. Ask vendors about the accuracy of their extraction models and the languages they support.

- Model Transparency and Explainability (XAI): For regulatory compliance and user trust, prioritize solutions offering explainability. Your team must be able to understand and validate the AI’s conclusions. Ask vendors: Can the system show which part of a source document led to a specific conclusion? Can it quantify the factors contributing to a risk score? This is non-negotiable for high-stakes decisions.

- Scalability and Performance: Your solution must be architected to handle exponential growth in adverse event reports and accommodate new, large-scale data sources (like genomics or RWE) without performance degradation. It should be cloud-native or have a clear path to scaling resources as your data volume grows.

- System Integration: The AI tool is useless if it doesn’t integrate seamlessly with your existing safety databases (like Oracle Argus or ARISg), reporting platforms, and other IT systems. Look for solutions with well-documented APIs and support for interoperability standards like FHIR to ensure a smooth workflow and avoid creating new data silos.

- Vendor Validation and Support: Assess the vendor’s track record, their model validation processes, and their plan for ongoing support and model maintenance. Ask critical questions: What datasets were your models trained and validated on? How do you measure and mitigate bias? How do you handle model updates and revalidation? For smaller companies, partnering with specialized providers or Contract Research Organizations can distribute costs while providing access to top-tier, pre-validated AI.

Your AI Implementation Roadmap: From ROI and Regulation to Team Buy-In

Balancing Automation with Human Expertise

AI solutions don’t replace PV teams; they empower them. Successful “human-in-the-loop” models let AI handle the routine, high-volume, and repetitive tasks like data extraction, duplicate detection, and initial case triage. This frees PV professionals for complex interpretation, critical thinking, and expert judgment on high-stakes cases where human nuance is irreplaceable.

This shift turns team members from “document processors to safety strategists.” This transition requires thoughtful change management and investment in upskilling. User-friendly systems and comprehensive training are essential to ensure adoption. It’s critical to address job security concerns head-on by demonstrating how AI eliminates tedious, low-value work, allowing staff to focus on more rewarding analytical challenges like signal investigation, risk-benefit analysis, and causality assessment. When teams see AI as a tool that augments their expertise and enhances their professional value, they are far more likely to embrace it. New roles may emerge, such as AI model validators who audit system performance and PV data scientists who interpret complex model outputs.

Assessing Costs vs. Return on Investment (ROI)

Implementing AI requires a significant upfront investment in software licensing, cloud infrastructure, system integration, and employee training, which can be daunting for any organization.

However, the long-term operational cost reductions and risk mitigation benefits often dwarf the initial investment. The ROI is compelling. AI can reduce manual case processing workloads by up to 80%, cutting processing times from days to hours. Identifying safety signals in under 24 hours instead of weeks allows for early intervention, preventing patient harm and avoiding costly product recalls, litigation, or regulatory penalties.

For larger pharmaceutical companies with high case volumes, the ROI can be realized within 12-24 months. Smaller companies with lower case volumes may find that Contract Research Organizations (CROs) are invaluable partners, allowing them to access state-of-the-art AI capabilities through a subscription model without bearing the full infrastructure and maintenance costs.

When calculating ROI for PV automation, consider these key factors in detail:

- Upfront costs: This includes not just AI software licenses but also IT infrastructure upgrades (e.g., cloud computing resources), professional services for integration with existing safety databases, costs for validating the system to meet GVP standards, and comprehensive training programs for the PV team.

- Long-term savings: The most obvious saving is reduced manual labor for case processing. However, you should also model savings from faster signal detection (reducing the impact of safety issues), improved data quality (less rework), and streamlined regulatory reporting (avoiding non-compliance fines).

- Strategic benefits (Hidden ROI): These are harder to quantify but immensely valuable. They include reduced regulatory risk (fewer warning letters and a better relationship with health authorities), improved compliance posture, enhanced patient safety, and a significant competitive advantage in bringing safer drugs to market faster by de-risking development earlier.

Model your ROI over a 3-5 year period to capture both the immediate tangible efficiency gains and the long-term, harder-to-quantify strategic benefits like reduced risk and improved patient safety.
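A simple way to start that model is to compute payback time and multi-year ROI from a handful of inputs. The sketch below is a back-of-the-envelope calculation with hypothetical figures; the function names and all amounts are assumptions, and it ignores discounting and the harder-to-quantify strategic benefits.

```python
def payback_months(upfront_cost: float, annual_saving: float, annual_running_cost: float) -> float:
    """Months until cumulative net savings cover the upfront investment."""
    monthly_net = (annual_saving - annual_running_cost) / 12
    if monthly_net <= 0:
        raise ValueError("net savings must be positive for the investment to pay back")
    return upfront_cost / monthly_net


def roi(upfront_cost: float, annual_saving: float, annual_running_cost: float, years: int) -> float:
    """Net benefit over the period divided by the upfront investment."""
    net_benefit = (annual_saving - annual_running_cost) * years - upfront_cost
    return net_benefit / upfront_cost


# Hypothetical figures, in any one currency.
upfront, saving, running = 1_000_000, 900_000, 300_000

print(f"payback: {payback_months(upfront, saving, running):.0f} months")
for years in (3, 5):
    print(f"{years}-year ROI: {roi(upfront, saving, running, years):.0%}")
```

Running the same functions with a lower `annual_saving` shows why lower case volumes stretch the payback period, which is the situation smaller companies face.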

Navigating Regulatory and Validation Problems

The regulatory landscape for AI in pharmacovigilance is complex and evolving rapidly. As of 2025, no PV-specific AI regulations exist; instead, general provisions like Good Pharmacovigilance Practices (GVP) apply, meaning rigorous validation is non-negotiable. Any AI system used in a GVP context must be fit for purpose, and its performance must be documented and verifiable.

In the USA, the FDA’s CDER Emerging Drug Safety Technology Program (EDSTP) and draft guidance on AI suggest a risk-based model: the greater the AI’s influence on decisions, the higher the level of scrutiny and validation required. The EU AI Act is stricter, classifying most healthcare AI as high-risk. This designation mandates robust risk management systems, high-quality training data, detailed technical documentation, full traceability of decisions, and stringent human oversight.

Transparency and explainability are central to regulatory acceptance. This is why Lifebit prioritizes Explainable AI (XAI). Our platform ensures 100% data traceability, allowing every AI output—from an extracted data point to a predicted risk score—to be traced back to its source data and the model’s reasoning. This enables regulators and internal auditors to verify the logic, not just trust a black-box output.

Model validation processes must be thorough and continuous. A one-time validation at implementation is insufficient. Continuously learning AI models present an ongoing validation challenge, as does the phenomenon of “concept drift,” where a model’s accuracy degrades as real-world data evolves. Organizations must establish clear internal thresholds and documentation for when model updates or performance degradation triggers revalidation. This requires a robust post-market monitoring plan for the AI itself.
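One common way to put a number on such a threshold is the Population Stability Index (PSI), sketched below. PSI measures how far the distribution of an input or model score has shifted from a reference period, which is one observable symptom of drift rather than a direct measure of accuracy. The bin proportions and the 0.2 trigger are assumptions for illustration; the actual threshold belongs in your validation plan.

```python
import math


def population_stability_index(
    expected: list[float], actual: list[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as proportions that each sum to 1."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp to eps so empty bins do not cause division by zero or log(0).
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of model scores per bin at validation time vs. this month.
reference = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

REVALIDATION_THRESHOLD = 0.2  # assumed rule-of-thumb trigger, not a regulatory value
psi = population_stability_index(reference, current)
print(f"PSI = {psi:.3f}, revalidate: {psi > REVALIDATION_THRESHOLD}")
```

Logging this value on a schedule gives auditors a documented trail of when the model's inputs moved and what action followed.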

Finally, data privacy and security are mandatory. Regulations like GDPR in Europe and HIPAA in the USA impose strict rules on handling patient data. Federated learning is a game-changer for data privacy. It brings the analysis to the data, rather than moving sensitive patient information to a central location. Our platform uses this approach to enable analysis across multiple hospital or health system sites without sharing the underlying data, delivering powerful insights with ironclad privacy protection.

The Future PV Team: Data Scientists, Ethicists, and Global Strategists

The Evolving Role of the PV Professional

The role of the PV professional is undergoing a profound evolution. As AI automates repetitive tasks like data entry, duplicate checking, and literature screening, experts are shifting from manual processing to strategic analysis, clinical interpretation, and high-level decision-making.

This shift makes PV professionals more valuable, not obsolete. The future requires hybrid skill sets blending deep clinical and regulatory expertise with strong data science proficiency. Professionals will not need to be expert coders, but they must possess sufficient data literacy to understand AI models, critically evaluate their outputs, oversee system performance, and provide the nuanced clinical interpretation that algorithms cannot. They must be able to ask the right questions of the data and effectively communicate complex, data-driven findings to diverse stakeholders, from regulators to clinicians.

The future of the PV workforce is about AI-human collaboration, where technology amplifies human expertise, allowing teams to manage vastly more data with greater accuracy and insight. This partnership enables PV professionals to be more efficient, insightful, and ultimately more effective at their core mission: protecting patient safety.

Addressing Challenges for SMBs and Underserved Regions

While transformative, advanced AI is harder to adopt for small and mid-sized biopharma companies (SMBs). The upfront investment in technology and talent is harder to justify with lower case volumes, as the ROI takes longer to realize. Furthermore, SMBs often lack the in-house data science and AI validation expertise required for successful implementation.

Contract Research Organizations (CROs) and specialized technology vendors are essential partners for SMBs. They democratize access to AI by distributing technology costs across multiple clients. CROs can offer flexible engagement models, from providing AI as a service (SaaS) for case processing to managing the entire PV function. This allows SMBs to leverage cutting-edge AI capabilities, manage implementation risk, and tap into specialized data science and regulatory expertise without the heavy upfront investment.

AI also holds enormous potential for improving pharmacovigilance in underserved regions, which often face unique challenges. AI-powered tools can extract safety data from handwritten notes, process reports in local languages, and support offline or SMS-based reporting systems where internet connectivity is scarce. National deployments show what is possible at scale: Singapore’s Active Surveillance System for Adverse Reactions to Medicines and Vaccines (ASAR) is one example of a nationwide AI-powered system. However, global harmonization efforts are crucial to ensure regulatory frameworks are flexible enough to support such implementations and that models are trained on data representative of local populations.

Ethical, Legal, and Data Privacy Considerations

AI in pharmacovigilance carries significant ethical and legal weight. Handling sensitive patient data and making critical safety decisions requires careful attention to several key principles:

- Data Governance: Organizations must establish clear ownership, accountability, and ethical use frameworks for all data handled by AI. This includes defining who is responsible for AI model performance and how to address errors or unintended consequences.

- Algorithmic Bias: AI models can inherit and amplify biases present in their training data. For example, if a model is trained primarily on data from a specific demographic group, it may perform poorly and produce inaccurate risk assessments for under-represented populations, potentially exacerbating health disparities. Mitigation requires a conscious effort to use representative data, employ fairness-aware machine learning techniques (like re-weighting or adversarial debiasing), and continuously audit models for biased outcomes across different subgroups.

- Explainability (XAI): The “black-box” nature of some complex AI models is a significant problem in a regulated field. XAI techniques make AI decisions transparent and interpretable, allowing human experts to understand, question, and trust the outputs. This is not just a technical feature but a prerequisite for regulatory acceptance and responsible use.

- Data Privacy: Strict adherence to regulations like GDPR and HIPAA is fundamental. This requires robust data anonymization, pseudonymization, and secure data handling protocols to protect patient confidentiality at all times.
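The subgroup audit mentioned under Algorithmic Bias can be sketched as a per-group recall check: of the true adverse events in each group, how many did the model flag? The group labels and records below are made up for illustration, and `recall_by_group` is a hypothetical helper rather than part of any library.

```python
from collections import defaultdict


def recall_by_group(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """Recall per subgroup from (group, true_label, predicted_label) rows with 0/1 labels."""
    hits: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                hits[group] += 1
    # Groups with no true positives are omitted because recall is undefined for them.
    return {group: hits[group] / positives[group] for group in positives}


# Hypothetical audit sample.
sample = [
    ("group_a", 1, 1),
    ("group_a", 1, 1),
    ("group_b", 1, 1),
    ("group_b", 1, 0),
    ("group_b", 0, 0),
]
print(recall_by_group(sample))
```

A large gap between groups is a prompt to look at how well each group is represented in the training data before trusting the model's risk assessments for it.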

Federated learning is a key technological solution that directly addresses the tension between data access and privacy. It allows AI models to train on decentralized data without ever moving sensitive patient information from its secure source environment (e.g., a hospital’s server). Only aggregated, anonymous model updates are shared. Lifebit’s federated AI platform is built on this principle, enabling secure, collaborative analysis across distributed datasets while ensuring 100% privacy and compliance.
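The aggregation step can be illustrated with a minimal federated averaging sketch. It assumes each site has already trained locally and sends back only its model weights and a sample count; the two-site example and the function name are assumptions, and this is a generic illustration rather than a description of any particular platform.

```python
def federated_average(site_updates: list[tuple[list[float], int]]) -> list[float]:
    """Average per-site model weights, weighting each site by its local sample count."""
    if not site_updates:
        raise ValueError("at least one site update is required")
    total_samples = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in site_updates:
        if len(weights) != dim:
            raise ValueError("all sites must send weight vectors of the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_samples
    return averaged


# Hypothetical updates: (locally trained weights, number of local records).
hospital_a = ([0.2, 1.0], 100)
hospital_b = ([0.4, 0.0], 300)

global_weights = federated_average([hospital_a, hospital_b])
print(global_weights)
```

Only the weight vectors and counts cross site boundaries here; the patient records used to produce them never leave the hospital. Real deployments typically add further safeguards, such as secure aggregation, on top of this basic scheme.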

These ethical, legal, and privacy considerations are not obstacles but essential guardrails for responsible innovation. By building AI with these principles in mind, we can harness its transformative power while maintaining the public and regulatory trust that pharmacovigilance demands.

Conclusion: Building a Smarter, Safer Future for Drug Safety

The shift to an AI-powered future is a complete reimagining of patient safety. We’ve seen how AI-driven pharmacovigilance solutions automate case processing, sharpen signal detection, and enable proactive safety monitoring through predictive analytics and real-world evidence.

The results are measurable: efficiency gains of up to 80% in case processing, faster signal evaluation with 40-50% fewer false positives, and the ability to analyze previously inaccessible data sources. Most importantly, the focus is shifting from reactive crisis management to proactive risk prevention.

Challenges like investment costs, evolving regulations, and ethical considerations around data privacy and bias remain. However, these are not roadblocks but guideposts for responsible innovation.

What’s needed now are platforms that can handle this complexity, integrating diverse data sources without compromising patient privacy while maintaining the highest standards of security, compliance, and explainability.

Lifebit’s platform is built for this new era. Our federated AI approach keeps data secure at its source while enabling analysis across boundaries. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) provide the compliant infrastructure for real-time, AI-driven safety surveillance. We empower pharmaceutical companies, regulators, and public health organizations to meet modern pharmacovigilance demands.

The future of drug safety is being written now—a future where AI augments human expertise, signals are detected in hours, and proactive prevention is the priority.

Discover how to implement secure, AI-driven pharmacovigilance and join us in building a smarter, safer future for drug safety. Because when it comes to protecting patients, every hour matters—and the tools to make a difference are here today.