Unlocking Unified Data: An AI Blueprint for Seamless Harmonization

Why AI for Data Harmonization Is Now Critical for Every Data-Driven Organization

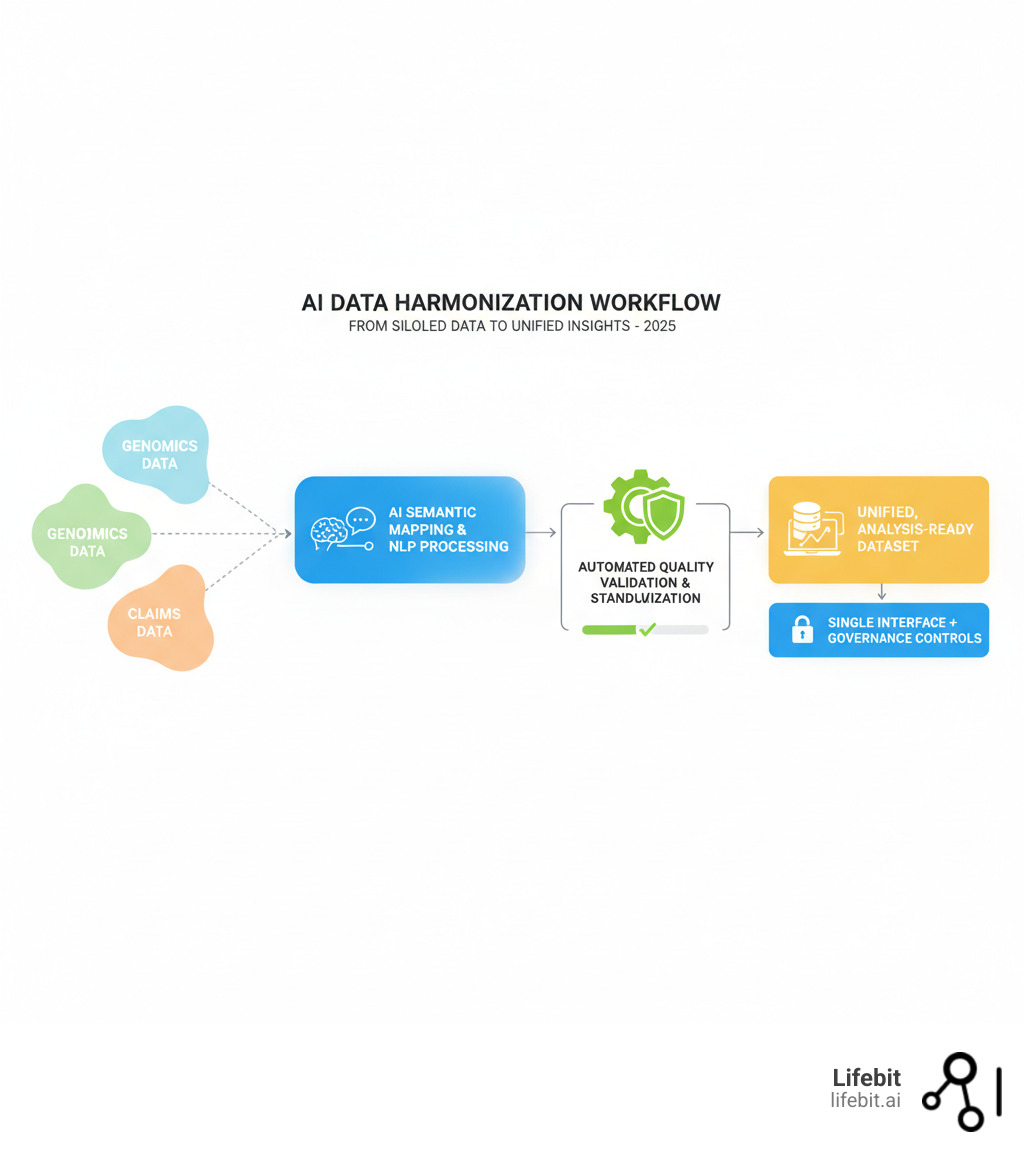

AI for data harmonization transforms how organizations unify disparate data sources, automatically resolving inconsistencies that traditionally required months of manual effort. Here’s what you need to know:

Quick Answer: How AI Enables Data Harmonization

- Semantic Understanding – Machine learning models use NLP to understand context and meaning across different labeling conventions, even in multiple languages.

- Automated Mapping – AI-powered tools automatically identify corresponding fields across data sources without rigid rule-based programming.

- Scalable Processing – Models adapt to new data patterns and handle millions of records without proportional increases in manual effort.

- Few-Shot Learning – Techniques like SetFit achieve 92%+ accuracy with as few as 8 training samples per class.

- Real-Time Adaptation – ML systems continuously learn from harmonization decisions and improve over time.

Organizations face a painful reality: data silos, inconsistent formats, and manual ETL processes that consume weeks of analyst time. A single pharmaceutical company might have patient data in 15 different formats. The cost is staggering: delayed insights, compliance risks, and unreliable AI models trained on poorly harmonized data.

Traditional rule-based approaches can’t keep pace. They require constant manual updates, break when encountering unexpected variations, and fail completely at semantic matching, such as recognizing that “patientid” in one system and “subjectidentifier” in another refer to the same concept.

AI changes everything. Machine learning models adapt to diverse labeling conventions, use Natural Language Processing to understand context, and reduce harmonization time from months to days. They get smarter with every dataset they process.

As CEO and Co-founder of Lifebit, my work on platforms like Nextflow has shown me how AI-powered harmonization unlocks insights that were previously impossible to access across secure biomedical environments.

This guide walks you through the practical application of AI to your data harmonization challenges—from zero-shot approaches requiring no labeled data to supervised methods achieving mission-critical accuracy.

What is Data Harmonization and Why Traditional Methods Are Obsolete

Imagine trying to have a conversation where everyone speaks a different dialect. One person says “customerid,” another says “clientcode.” They mean the same thing, but communication breaks down. That’s what happens with your data.

Data harmonization is the process of creating a common language for your data, aligning disparate sources into a consistent format you can analyze with confidence. It’s more than just data integration (gathering data in one place) or data standardization (enforcing uniform formats like YYYY-MM-DD). Harmonization reconciles the semantic differences—the actual meaning. It understands that “customerid” and “clientcode” refer to the same concept. This semantic alignment is what makes data truly ready for analytics and AI.

The Limitations of Traditional Methods

For decades, organizations relied on manual Extract, Transform, Load (ETL) processes. In today’s complex data landscape, they are obsolete.

- Rigidity and Brittleness: Rule-based ETL requires a handwritten rule for every variation. If a source system changes a column name, the entire pipeline breaks, requiring manual intervention. This creates a fragile system in a constant state of repair.

- Inability to Scale: Manual processes that work for five data sources crumble under fifty. The effort grows faster than the number of sources, because each new source has to be reconciled against every variation already in the pipeline. What took a week now takes months, and critical business opportunities slip away while you wait for data to be ready.

- Semantic Blindness: A rule-based system can’t infer that “patientid” and “subjectidentifier” mean the same thing without being explicitly told. It fails with nuanced text, synonyms, abbreviations, and multiple languages, which is where the most valuable insights are often hidden.

- High Hidden Costs: The reliance on manual processes consumes an estimated 80% of a data analyst’s time, diverting expensive talent from high-value analysis to low-value data janitorial work. This translates to massive opportunity costs and project delays.
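To make the brittleness concrete, here is a minimal sketch of a rule-based mapper. The `RULES` table and the `map_column` helper are hypothetical names invented for this illustration, not part of any real pipeline. The point is only that a label nobody wrote a rule for falls straight through.

```python
# Hypothetical hand-written rules: every source label needs its own entry.
RULES = {
    "customerid": "customer_id",
    "clientcode": "customer_id",
    "patientid": "patient_id",
}

def map_column(label):
    """Return the target field for a source label, or None if no rule exists."""
    return RULES.get(label.strip().lower())

known = map_column("ClientCode")          # covered by a rule
renamed = map_column("client_code")       # a renamed column: no rule, no mapping
unseen = map_column("subjectidentifier")  # a synonym nobody anticipated

print(known, renamed, unseen)
```

Only the first lookup succeeds. The other two need someone to notice the gap and add a rule by hand, which is the maintenance burden described above.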

The AI Advantage

AI for data harmonization changes the game by understanding, adapting, and learning at scale.

- Adaptive Learning: Instead of rigid rules, machine learning models learn the underlying patterns from your data. They adapt to new labeling conventions and improve over time with minimal human intervention.

- Contextual Understanding: Through Natural Language Processing (NLP), AI grasps the semantics behind labels, even across languages. It recognizes that “customerid” and “clientcode” are the same based on context, not just a pre-programmed dictionary.

- Reduced Manual Effort: AI automates the repetitive, tedious tasks that consumed weeks of analyst time, freeing your experts for higher-value work. Harmonization projects that took months now happen in days.

The Critical Role of Harmonization in Building Modern AI

Deploying AI on unharmonized data is a recipe for disaster, a classic case of “garbage in, garbage out” (GIGO). AI models are only as good as the data they’re trained on. Poorly harmonized data introduces noise, contradictions, and hidden biases, leading to unreliable, inaccurate, or even harmful predictions. This is especially true for the latest generation of AI:

- Large Language Models (LLMs) and RAG: These models require clean, consistent data to provide accurate answers. If an LLM is fed conflicting information from unharmonized sources, it is far more likely to hallucinate or return incorrect answers, eroding user trust.

- Agentic AI: Autonomous AI agents that perform tasks across multiple systems rely on a coherent understanding of the data landscape. If they can’t reliably interpret data from different sources, their actions will be ineffective.

Harmonization is also foundational for building a single source of truth (SSOT)—a unified, trusted view of your organization’s most critical data. This becomes the bedrock for reliable business intelligence, AI-driven decision-making, and auditable regulatory compliance. At Lifebit, our federated AI platform, with its Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), is designed to deliver this SSOT for real-time insights.

Harmonized data is foundational for reliable analytics. Without it, dashboards show conflicting numbers, research is questionable, and strategic decisions are made on shaky ground. For a deeper dive, resources like A General-Purpose Data Harmonization Framework offer valuable insights.

Key Technologies Powering AI Harmonization

Several breakthroughs make AI harmonization possible at scale:

- Natural Language Processing (NLP): Gives AI the ability to understand semantics and context, interpreting the meaning of labels like “patient identifier” and “subject ID.”

- Vector Embeddings: These numerical representations capture semantic meaning, allowing AI to find relationships between terms that look different on the surface. They map words to a mathematical space where distance equates to semantic similarity.

- Sentence Transformers: Tools like Sentence Transformers generate high-quality embeddings that encode the full context of a label, enabling machines to “understand” data with a nuance that was previously impossible.

- Machine Learning Algorithms: Clustering and classification models tie everything together, learning from patterns to automate the reconciliation process at a massive scale.

Tools like LinkTransformer for deep record linkage integrate these technologies into standard data wrangling workflows, making AI for data harmonization accessible to a broader range of teams.
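The idea that distance in embedding space stands for semantic similarity can be shown in a few lines. This is a minimal sketch: the three-dimensional vectors below are made up for illustration, whereas a real embedding model would produce vectors with hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up toy "embeddings" (assumption: not the output of any real model).
patient_identifier = [0.9, 0.1, 0.2]
subject_id = [0.8, 0.2, 0.1]
invoice_total = [0.1, 0.9, 0.3]

sim_related = cosine_similarity(patient_identifier, subject_id)
sim_unrelated = cosine_similarity(patient_identifier, invoice_total)
print(round(sim_related, 3), round(sim_unrelated, 3))
```

The two identifier vectors point in nearly the same direction and score close to 1, while the unrelated label scores much lower. Harmonization tools rank candidate mappings by this kind of score.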

A Practical Guide to AI for Data Harmonization

The beauty of AI for data harmonization is its flexibility. You can start with zero labeled data and scale your approach as your needs grow. Let’s walk through the three main methodologies we use at Lifebit, which form a maturity curve for any organization.

Unsupervised (Zero-Shot) Harmonization

When you have no labeled training data, unsupervised (or zero-shot) harmonization is the fastest way to start. It leverages pre-trained models like those from Sentence Transformers that already possess a general understanding of language.

The process is simple: you define your target schema (the “golden” labels), convert those labels into vector embeddings, and store them in a fast search index like FAISS. When a new, messy label arrives, it’s also converted into an embedding, and the index is searched for the most semantically similar harmonized label, measured by cosine similarity. This entire process is automated and requires no manual labeling.
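The steps above can be sketched end to end in plain Python. Two loud assumptions: the `embed` function is a toy character n-gram counter standing in for a pre-trained Sentence Transformer, and a brute-force scan over a dictionary stands in for a FAISS index. The golden labels are hypothetical. The toy embedding only captures spelling overlap, so it shows the mechanics of the lookup, not the semantic power of a real model.

```python
import math
from collections import Counter

def embed(label, n=3):
    """Toy embedding: counts of character n-grams (stand-in for a real model)."""
    text = "".join(ch for ch in label.lower() if ch.isalnum())
    padded = f"  {text} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))

def cosine(a, b):
    """Cosine similarity between two sparse n-gram count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Step 1: embed the target schema ("golden" labels) once and keep it as the index.
GOLDEN = ["patient_id", "date_of_birth", "diagnosis_code"]
INDEX = {label: embed(label) for label in GOLDEN}

def harmonize(messy_label):
    """Step 2: embed the incoming label and return the closest golden label."""
    query = embed(messy_label)
    best = max(INDEX, key=lambda label: cosine(query, INDEX[label]))
    return best, cosine(query, INDEX[best])

match, score = harmonize("Diag Code")
print(match, round(score, 2))
```

With this toy embedding, a label such as “subjectidentifier” would not land on `patient_id`, because the two share almost no characters. Closing that gap is exactly what a pre-trained language model contributes.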

This approach is immediate and scalable, but its accuracy is moderate (around 43-53% in our research). It’s perfect for initial exploration, getting a rough map of your data, and automating the most obvious mappings, but it struggles with highly nuanced or domain-specific distinctions.

Few-Shot Learning for Rapid, High-Accuracy Results

What if you could achieve over 92% accuracy with just 8-10 labeled examples per category? That’s the promise of few-shot learning with models like SetFit, which bridges the gap between speed and precision.

SetFit treats harmonization as a classification problem. You provide a handful of examples showing how messy labels map to standardized categories (e.g., “patientnum” and “subjectid” both map to Patient_ID). The model then uses a contrastive learning approach to fine-tune a Sentence Transformer, teaching it the specific nuances of your data domain. This adapted model can then classify new, unseen labels with high efficiency and accuracy.
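The contrastive step is easier to picture with a small sketch of how training pairs can be built from a handful of labeled examples. This is an illustration of the pairing idea only, not SetFit's actual code; the example labels and the `build_pairs` helper are hypothetical, and no model is fine-tuned here.

```python
from itertools import combinations

# Hypothetical few-shot examples: messy label -> standardized category.
EXAMPLES = [
    ("patientnum", "Patient_ID"),
    ("subjectid", "Patient_ID"),
    ("dob", "Birth_Date"),
    ("birthdate", "Birth_Date"),
]

def build_pairs(examples):
    """Pair up every two examples; target 1.0 if same category, else 0.0."""
    pairs = []
    for (text_a, cat_a), (text_b, cat_b) in combinations(examples, 2):
        pairs.append((text_a, text_b, 1.0 if cat_a == cat_b else 0.0))
    return pairs

pairs = build_pairs(EXAMPLES)
positives = [p for p in pairs if p[2] == 1.0]
negatives = [p for p in pairs if p[2] == 0.0]
print(len(pairs), len(positives), len(negatives))
```

Four labeled examples already yield six training pairs, and the count grows quickly as examples are added. That multiplication is one reason a few labels per class can go a long way.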

This is ideal when you have limited labeling resources but need rapid deployment with high accuracy. A key advantage is multilingual support; SetFit handles labels in multiple languages without requiring separate models, which is essential for our global work across Europe, the USA, Canada, and Singapore. While it requires some upfront labeling, the payoff in speed and accuracy makes this our go-to recommendation for most organizations.

Supervised Harmonization

When accuracy is non-negotiable—for regulatory submissions, clinical decision support, or core financial reporting—supervised harmonization is the gold standard. This approach requires a substantial labeled dataset but delivers the highest possible precision and robustness.

Supervised models treat harmonization as an “extreme classification” problem, learning from thousands of examples to pick up on subtle patterns that simpler approaches miss. They can also incorporate additional features like the data type of a column, sample data values, or other metadata to make more informed decisions. This is critical in complex domains like multi-omic data integration, where context is everything.
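As a rough sketch of what "additional features" might look like in practice, the snippet below turns a column name and a few sample values into a feature dictionary. The feature names, the `infer_kind` heuristics, and the sample column are all assumptions made for illustration; a production system would use a richer set and feed it to a trained classifier, which is out of scope here.

```python
import re

def infer_kind(value):
    """Classify one raw string value as 'integer', 'date', or 'text'."""
    if re.fullmatch(r"-?\d+", value):
        return "integer"
    if re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return "date"
    return "text"

def column_features(name, values):
    """Describe a column by its name tokens, inferred type, and value statistics."""
    non_null = [str(v) for v in values if v not in (None, "")]
    kinds = {infer_kind(v) for v in non_null}
    return {
        "name_tokens": re.findall(r"[a-z]+|\d+", name.lower()),
        "inferred_type": kinds.pop() if len(kinds) == 1 else "mixed",
        "null_rate": 1 - len(non_null) / len(values) if values else 0.0,
        "distinct_ratio": len(set(non_null)) / len(non_null) if non_null else 0.0,
    }

features = column_features("visit_dt", ["2021-03-04", "2021-03-09", None, "2021-03-04"])
print(features)
```

A cryptic name like `visit_dt` says little on its own, but combined with an inferred date type it becomes much easier to place in the target schema.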

The investment in creating and maintaining a large labeled dataset is significant, requiring a dedicated process for annotation and quality control. However, for mission-critical applications where errors carry real financial or safety consequences, this investment pays for itself through superior reliability and auditability.

Comparing AI Harmonization Approaches

| Approach | Data Requirement | Typical Accuracy | Best For |
|---|---|---|---|
| Unsupervised (Zero-Shot) | None | Moderate | Initial analysis, no labels available |
| Few-Shot | Minimal (e.g., 8-10 samples/class) | High | Limited labeling resources, rapid deployment |
| Supervised | Large Labeled Dataset | Very High | Mature systems, mission-critical accuracy |

Most organizations start with zero-shot exploration, move to few-shot learning for rapid improvements, and build toward supervised models for their most critical workflows. These approaches complement each other as your data maturity evolves.

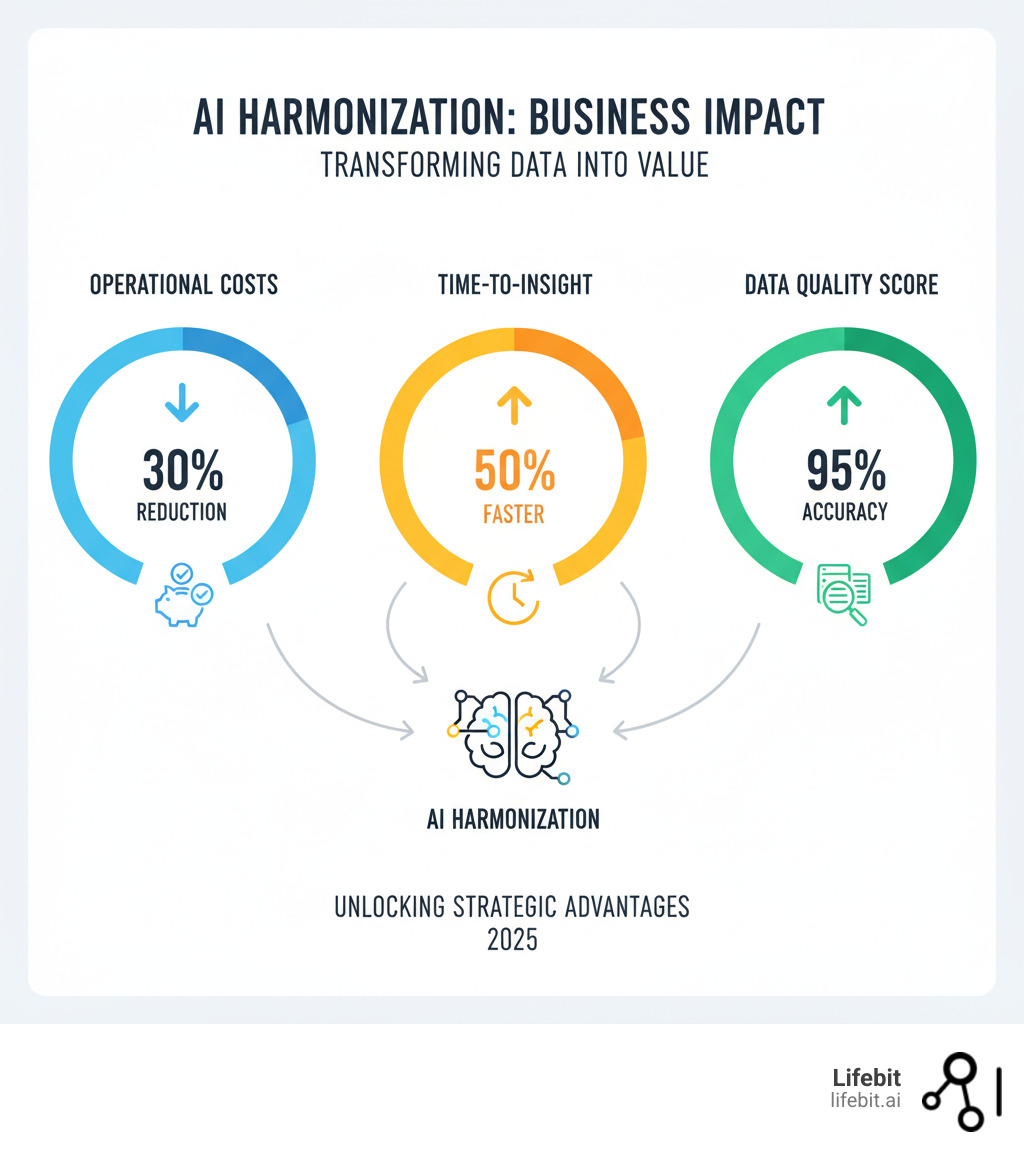

The Strategic Payoff: Real-World Wins and Business Benefits

Investing in AI for data harmonization isn’t just about cleaner data; it’s about unlocking strategic advantages that drive innovation and a competitive edge. When data becomes a harmonized, analysis-ready asset, you transform how you operate.

Tangible Business Benefits

- Dramatic Cost Reduction: Automate manual tasks, freeing data teams to focus on analysis instead of spending 80% of their time on data cleaning and preparation.

- Improved Operational Efficiency: Create a single source of truth that streamlines processes, eliminates errors caused by conflicting data, and improves cross-functional collaboration.

- Accelerated Innovation & AI Readiness: Fuel AI models with clean, unified data to get data-driven products to market faster. Move from “we need six months to prepare data” to “we can start building tomorrow.”

- Superior Decision-Making: Gain confidence in your data to make faster, more informed strategic choices, from budget allocation to market strategy, based on a complete and accurate picture of the business.

To see how Lifebit enables these outcomes, read more about achieving real-time evidence and analytics through our R.E.A.L. platform.

Real-World Applications of AI for Data Harmonization

The impact of AI for data harmonization is already changing industries.

- Biomedical Research: Harmonizing multi-omic data (genomics, proteomics, clinical records from EHRs) is critical for breakthrough discoveries. AI is used to map proprietary data formats to industry standards like OMOP and FHIR, making massive datasets interoperable. The NIH Common Fund Data Ecosystem (CFDE) is pioneering these approaches to make complex biomedical datasets findable, accessible, interoperable, and reusable (FAIR). Our platform supports secure, real-time access to these datasets across federated environments, enabling critical analysis without compromising security.

- Pharmacovigilance: Drug safety reports arrive in dozens of languages and formats from regulatory bodies, clinical notes, and social media. AI-driven harmonization automatically extracts and maps drug names (brand, generic, misspelled) and adverse event terms to a standardized medical dictionary like MedDRA. This transforms chaos into actionable intelligence, enabling faster identification of adverse drug reactions and improving patient safety.

- Oil and Gas: A leading US company harmonized inconsistent material master data from different ERP systems across global centers. AI was used to analyze and classify 150,000 materials into standardized codes, understanding that “1/2 inch steel bolt” and “bolt, steel, .5in” were the same item. This improved inventory management, optimized procurement, and led to significant cost savings.

- E-commerce: Harmonization creates a 360-degree customer view by unifying data from CRMs, social media, and transaction histories. By reconciling different customer identifiers and interaction logs, AI enables true personalization, leading to more effective marketing campaigns and increased customer lifetime value.

- Financial Services: Harmonizing transaction data, customer profiles, and third-party watchlists is mission-critical for robust fraud detection and Anti-Money Laundering (AML) compliance. AI helps create a single, comprehensive customer risk profile from disparate sources, reducing false positives and enabling institutions to spot sophisticated financial crime patterns more effectively.

These are not future possibilities; they are happening now, delivering measurable ROI to organizations that have adopted AI-powered data harmonization.

Your Implementation Blueprint: Best Practices & Overcoming Problems

Moving from strategy to execution is where many harmonization initiatives stumble. A structured approach can deliver measurable results within 90 days. Here’s what we’ve learned helping biopharma, government, and research institutions harmonize millions of records.

Best Practices for a Successful Strategy

- Start with clear objectives. Don’t harmonize for its own sake. Define the specific business pain point and set measurable targets, like “reduce duplicate records by 80%” or “cut harmonization time from 12 to 2 weeks.”

- Establish Data Governance. A harmonization project is the perfect catalyst to formalize governance. Create a centralized data dictionary, define ownership for critical data domains, and establish clear policies for quality and access. This ensures your efforts are sustainable.

- Ensure cross-departmental collaboration. Bring IT, data science, compliance, and business end-users together from day one. This ensures the solution is technically sound, compliant, and practically useful.

- Start small and prove value fast. Begin with a focused 60-90 day pilot project on a single, high-pain data domain. A concrete win with 2-3 data sources creates internal champions and builds momentum for expansion.

- Let AI do the heavy lifting (with a human in the loop). Automate 80-90% of the mapping work with AI, but implement a workflow where human experts review and approve low-confidence suggestions. This hybrid approach ensures accuracy and continuously improves the model.

- Monitor continuously and adapt. Data is dynamic. Implement continuous monitoring and quality checks, and track AI model performance to ensure your system evolves with your data landscape.
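The human-in-the-loop practice above comes down to a routing rule on model confidence. Here is a minimal sketch, assuming each AI suggestion arrives as a (source label, target label, confidence) tuple; the 0.80 threshold, the `route` helper, and the sample suggestions are illustrative assumptions, not recommended values.

```python
REVIEW_THRESHOLD = 0.80  # assumed cut-off; tune it against reviewed samples

def route(suggestions, threshold=REVIEW_THRESHOLD):
    """Split suggestions into an auto-approved list and a human review queue."""
    approved, review = [], []
    for source, target, confidence in suggestions:
        bucket = approved if confidence >= threshold else review
        bucket.append((source, target, confidence))
    return approved, review

# Hypothetical model output for three incoming labels.
suggestions = [
    ("patientnum", "Patient_ID", 0.97),
    ("subj", "Patient_ID", 0.62),
    ("dob", "Birth_Date", 0.91),
]

approved, review = route(suggestions)
print(len(approved), len(review))
```

Decisions made in the review queue can then be fed back as new labeled examples, which is how the hybrid workflow improves the model over time.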

Navigating the Challenges of AI for Data Harmonization

- Semantic Conflicts: AI is powerful, but it still needs human guidance for ambiguous cases where context is everything (e.g., does “lead time” mean order-to-ship or order-to-delivery?). Plan for a hybrid, human-in-the-loop approach where experts handle the trickiest distinctions.

- Data Quality Debt: AI can’t perform miracles on fundamentally bad data. Perform data profiling to identify and quantify quality issues first. Address critical problems at the source, then use AI to maintain consistency going forward.

- Organizational Resistance: Change is hard. Combat resistance with strong leadership, early wins from your pilot, and by showing how AI amplifies human expertise rather than replacing it. Frame it as a tool that frees analysts for more interesting, high-impact work.

- Technical Complexity: Integrating legacy systems and managing vast data volumes is challenging. A modern, scalable data platform is essential to provide the connectors and infrastructure to handle this complexity.

- Security and Compliance: In regulated industries like healthcare and finance, security is non-negotiable. Your system must comply with GDPR, HIPAA, and other regulations. Privacy-Enhancing Technologies (PETs) are key. Our Trusted Research Environment and federated governance model were designed to meet these stringent requirements without sacrificing speed or centralizing sensitive data.

- The Future Demands Real-Time Adaptation: Batch processing is becoming a relic. Harmonization must become a continuous, streaming process. Advanced AI systems can detect schema changes in source data and adapt automatically, ensuring your data remains analysis-ready in real time.
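Detecting a schema change is the first step toward adapting to it. The sketch below compares two snapshots of a source's columns and reports what changed. The snapshot format (a plain column-to-type dictionary), the `diff_schema` helper, and the sample columns are assumptions for illustration; a real platform would read this metadata from the source system.

```python
def diff_schema(previous, current):
    """Compare two {column: type} snapshots of a source and report what changed."""
    shared = set(previous) & set(current)
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "retyped": sorted(col for col in shared if previous[col] != current[col]),
    }

# Hypothetical snapshots taken one day apart.
yesterday = {"patientid": "string", "dob": "date", "weight": "integer"}
today = {"subjectidentifier": "string", "dob": "date", "weight": "float"}

drift = diff_schema(yesterday, today)
print(drift)
```

A column that disappears at the same moment a new one appears is a likely rename, so the new label can be passed to the matching model for a suggested mapping instead of breaking the pipeline.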

Conclusion: The Future is Unified

The evidence is clear: AI for data harmonization is no longer experimental—it’s a business necessity. Traditional methods can’t keep up. AI-driven approaches, from zero-shot to fully supervised models, deliver the accuracy and scale required today. The technology works, and the business case is proven.

Data is the strategic foundation of your competitive advantage. The companies leading their markets have unified their data and are extracting insights their competitors can’t see.

The future of harmonization is already taking shape: LLM agents handling complex semantic mapping, real-time processing becoming standard, and platforms automatically adapting to schema changes.

At Lifebit, we’re building this future. Our federated AI platform provides the tools for modern data harmonization:

- Trusted Research Environment (TRE): For secure, compliant collaboration.

- Trusted Data Lakehouse (TDL): For unified data storage and access.

- R.E.A.L. (Real-time Evidence & Analytics Layer): For instant insights and AI-driven safety surveillance.

Our platform creates a single source of truth that enables federated analysis across secure environments without moving sensitive data. It turns months of manual work into days of automated processing, all while meeting the strictest compliance requirements for partners across five continents.

Your data holds answers to your most critical questions. But you can only unlock them if you can access, trust, and analyze that data as a unified whole.