Keep Calm and Centralize: Clinical Trial Monitoring Explained


Why Centralized Monitoring Systems Are Revolutionizing Clinical Trials

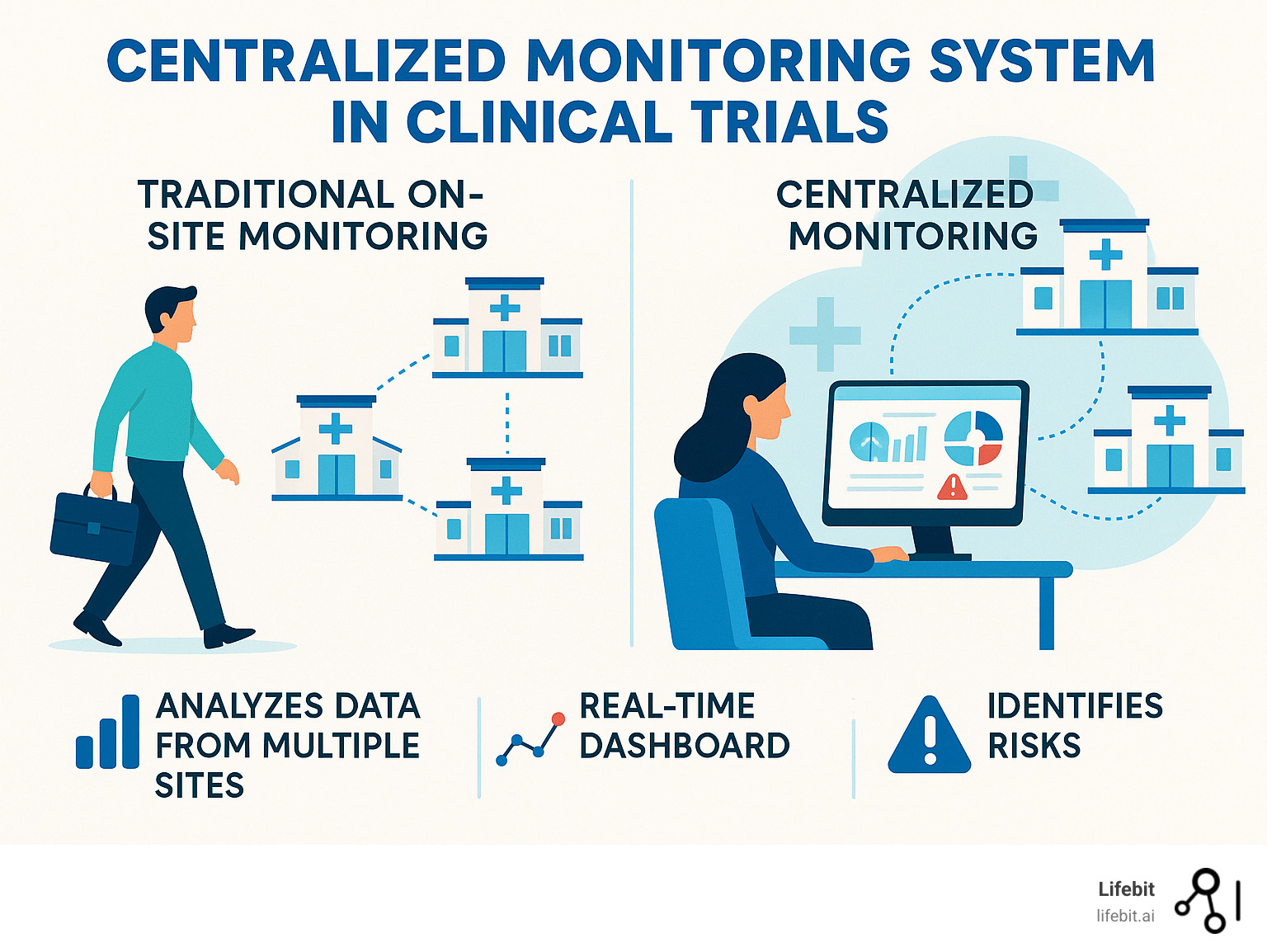

A centralized monitoring system in clinical trials is a remote evaluation approach that analyzes data from multiple trial sites in real time to identify risks, ensure data quality, and improve patient safety. It relies on key components such as Statistical Data Monitoring (SDM), Key Risk Indicators (KRIs), and Quality Tolerance Limits (QTLs) to detect anomalies and track performance.

This approach offers significant benefits:

- Reduces monitoring costs

- Improves data quality through real-time anomaly detection

- Enables faster risk identification and response

- Makes on-site visits more targeted and efficient

The clinical trial industry faces immense pressure. Traditional monitoring methods are struggling with modern data volumes, as Phase III trials now generate 3.6 million data points on average. With monitoring representing up to 25% of trial costs and 80% of trials facing costly delays, a new solution is needed.

Centralized monitoring provides this data-driven solution. Instead of relying on expensive on-site visits, it uses advanced analytics to monitor trial data continuously. The evidence is encouraging: in one analysis, 83% of sites showed improved data quality scores after risk signals were identified and resolved.

As Maria Chatzou Dunford, CEO of Lifebit, I’ve seen how these systems transform data silos into actionable insights. The future belongs to platforms that can analyze diverse datasets securely, turning complex information into progress.


What is Centralized Monitoring and Why is it a Game-Changer?

Instead of sending monitors on expensive trips for manual data checks, imagine watching real-time analytics identify potential issues from a central location. That’s the power of centralized monitoring systems in clinical trials.

Traditional research relies on Clinical Research Associates (CRAs) traveling to sites, a process that can consume up to one-third of a trial’s budget. This cost is driven by flights, hotels, and the significant personnel time required for on-site Source Data Verification (SDV), the manual cross-referencing of case report form entries against source documents. Yet its return on investment is surprisingly low. A landmark study by TransCelerate BioPharma found that only 3.7% of electronic data capture (EDC) data is corrected after initial entry, and only 1.1% of the data is corrected as a direct result of SDV. Sponsors are spending a fortune to find a very small number of errors, many of which may not even be critical.

Enter centralized monitoring. The FDA describes it as a remote evaluation carried out by sponsor personnel or representatives at a location other than the sites where the clinical investigation is being conducted. This approach uses statistical analysis to spot trends, outliers, and systemic problems across all trial sites simultaneously, turning raw data into a live surveillance feed of trial health.

This represents a fundamental shift toward risk-based approaches, a philosophy championed by regulatory bodies worldwide. Instead of applying the same intensive, and often inefficient, monitoring everywhere, resources are intelligently focused where data suggests they’re needed most. It’s a proactive, data-driven strategy that is revolutionizing trial oversight.

Centralized vs. Traditional On-Site Monitoring

Traditional monitoring is showing its age in our data-rich world. Here’s how centralized monitoring systems in clinical trials compare:

| Aspect | Traditional On-Site Monitoring | Centralized Monitoring System |
|---|---|---|
| Cost | High, due to travel, personnel time, and extensive manual SDV. Monitoring can account for up to 25% of total trial costs. | Lower, because on-site visits become less frequent and narrower in scope; resources shift to data analysis and programming. |
| Efficiency | Lower: time-consuming visits and reactive problem-solving. Extensive SDV yields few corrections, since only 3.7% of EDC data is corrected after initial entry. | Higher: real-time data access, proactive issue detection, and more targeted interventions. |
| Data Quality | Relies on manual checks; can miss systemic issues or subtle data patterns such as digit preference or rounding errors. | Improved through statistical analysis that identifies outliers and inconsistencies across sites; in one analysis, 83% of sites showed improved data quality scores after risk signals were addressed. |
| Scalability | Challenging and expensive to scale across many sites or large trials. | Highly scalable; numerous sites can be monitored simultaneously from a central location. |
| Risk Detection Speed | Slower: issues are often identified only during periodic visits, delaying interventions. | Faster: continuous analysis allows early detection of trends and anomalies. |
| Patient Safety Focus | Primarily site-specific checks; less efficient at identifying broader safety signals across the trial. | Proactive identification of safety trends across all sites, allowing quicker responses to potential risks. |
| Regulatory Alignment | Increasingly outdated as the sole approach. Regulators such as the FDA and EMA now expect and encourage risk-based approaches (ICH E6(R2)). | Aligned with modern regulatory expectations (ICH E6(R2)/(R3)) for proactive quality and risk management. |

The contrast is clear: centralized monitoring spots problems as they develop, rather than after they’ve happened.

Key Benefits: Enhancing Efficiency, Quality, and Safety

Implementing a centralized monitoring system in clinical trials transforms trial oversight, creating a more intelligent, responsive, and safer research environment.

- Cost Reduction & Smarter Resource Allocation: The most immediate benefit is a dramatic reduction in travel costs. Consider a trial where sites traditionally receive quarterly visits. A centralized system might identify that 70% of sites are performing well, allowing their on-site visits to be reduced to once a year. The remaining 30% receive targeted, data-driven visits to address specific issues. This reallocates the budget from routine travel to high-impact activities like improved data analysis and site support where it’s truly needed.

- Improved Data Quality: By analyzing 100% of the data stream rather than samples, centralized systems detect subtle, systemic errors that manual SDV often misses. These include digit preference (e.g., a site where blood pressure readings consistently end in 0 or 5), unusual rounding patterns, and impossible data combinations. In one analysis, 83% of sites had improved Data Inconsistency Scores once risk signals were addressed. This comprehensive approach yields more robust and reliable datasets for final analysis.

- Improved Patient Safety: Continuous, cross-site monitoring of safety data allows for the rapid identification of emerging risks. For example, a slight increase in a specific adverse event (AE) at a single site might not seem alarming. But a centralized system can detect a small but consistent increase in that same AE across multiple sites, revealing a potential safety signal related to the investigational product that would otherwise have been missed until much later. This enables faster investigation and intervention, protecting patients across the entire trial.

- Early Issue Detection & Resilience: Centralized systems act as an early warning system for operational issues. A sudden drop in data entry rates at one site could signal staff turnover, while inconsistent lab values across sites might point to an equipment calibration problem. This capability proved crucial during the COVID-19 pandemic: when travel to sites was restricted, organizations with established centralized monitoring were far better placed to maintain oversight of their trials and safeguard data integrity and patient safety.

- Improved Collaboration: Real-time data access breaks down silos and fosters a more collaborative, proactive culture. When a central monitor identifies a potential issue, they can share a dashboard with the site’s CRA and coordinator instantly. Instead of waiting weeks for the next on-site visit, the team can review the data together, diagnose the root cause, and implement a corrective action within days. This transforms the relationship between sponsor and site from one of periodic auditing to continuous partnership.
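The cross-site safety example above can be made concrete with a small calculation. The sketch below is a minimal illustration, assuming a hypothetical expected rate of 0.10 events per patient for one AE term, ten hypothetical sites with 40 patients each, and a simple Poisson model for event counts; a real safety analysis would also account for exposure time, site heterogeneity, and multiple testing. It shows why a modest excess that looks unremarkable at any single site can become a clear signal once counts are pooled centrally.

```python
import math


def poisson_upper_tail(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam), computed as 1 - P(X <= k - 1)."""
    term = math.exp(-lam)  # P(X = 0)
    cdf = 0.0
    for i in range(k):
        cdf += term
        term *= lam / (i + 1)  # recurrence: P(X = i + 1) = P(X = i) * lam / (i + 1)
    return max(0.0, 1.0 - cdf)


# Hypothetical inputs: expected AE rate and per-site counts for one AE term.
EXPECTED_AES_PER_PATIENT = 0.10
sites = {f"site_{i:02d}": {"patients": 40, "events": 6} for i in range(1, 11)}

# Site-by-site view: each site sees 6 events where about 4 were expected.
per_site_p = {
    name: poisson_upper_tail(s["events"], EXPECTED_AES_PER_PATIENT * s["patients"])
    for name, s in sites.items()
}

# Centralized view: pool the same counts across all sites.
observed_total = sum(s["events"] for s in sites.values())
expected_total = EXPECTED_AES_PER_PATIENT * sum(s["patients"] for s in sites.values())
pooled_p = poisson_upper_tail(observed_total, expected_total)

print(f"smallest single-site p-value: {min(per_site_p.values()):.3f}")
print(f"pooled p-value ({observed_total} observed vs {expected_total:.0f} expected): {pooled_p:.4f}")
```

Each site’s excess (p of about 0.21) would not raise concern on its own, while the pooled excess of 60 events against 40 expected is unlikely under the assumed baseline rate (p below 0.01).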

This change is philosophical as well as operational, moving the industry from reactive monitoring to proactive quality management.

The Core Components of a Centralized Monitoring System in Clinical Trials

A centralized monitoring system in clinical trials acts as a sophisticated oversight engine for your study. It relies on three powerful, complementary components: Statistical Data Monitoring (SDM), Key Risk Indicators (KRIs), and Quality Tolerance Limits (QTLs).

These pillars work in concert to transform raw clinical data into actionable intelligence, creating a comprehensive safety net through robust risk management and data-driven methodologies. Think of them as three layers of defense: SDM is the wide-net surveillance, KRIs are the targeted tripwires for known risks, and QTLs are the ultimate guardrails for the entire study.

This proactive approach continuously analyzes trial data, identifying potential issues before they escalate into serious problems. This represents a fundamental shift from reactive problem-solving to preventive quality management.

Statistical Data Monitoring (SDM)

Statistical Data Monitoring (SDM) is an unsupervised, hypothesis-generating approach. “Unsupervised” means it uses advanced statistical algorithms to find anomalies and inconsistencies across all trial data without being told what to look for. It learns the “normal” patterns within the data and then flags any site or data point that deviates significantly. This makes it invaluable for uncovering unknown or unexpected risks.
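As a minimal illustration of the unsupervised idea, the sketch below compares each site’s mean for one variable against the spread of all site means using a robust (median and MAD based) z-score, so that a single extreme site cannot hide by inflating the average. This is one simple technique among many, not a description of any particular SDM product; the site names, temperature values, and the 3.5 cutoff (a common rule of thumb for modified z-scores) are assumptions.

```python
import statistics

MODIFIED_Z_CUTOFF = 3.5  # common rule of thumb for modified z-scores


def robust_site_z_scores(site_values: dict[str, list[float]]) -> dict[str, float]:
    """Score each site's mean against the median of all site means, scaled by the MAD."""
    site_means = {site: statistics.fmean(vals) for site, vals in site_values.items()}
    center = statistics.median(site_means.values())
    mad = statistics.median(abs(m - center) for m in site_means.values())
    scale = 1.4826 * mad  # makes the MAD comparable to a standard deviation for normal data
    if scale == 0:
        return {site: 0.0 for site in site_means}
    return {site: (m - center) / scale for site, m in site_means.items()}


# Hypothetical body-temperature readings (degrees C); site G may have an uncalibrated thermometer.
temperatures = {
    "A": [36.7, 36.8, 36.9],
    "B": [36.6, 36.8, 37.0],
    "C": [36.9, 36.7, 36.8],
    "D": [36.5, 36.9, 36.7],
    "E": [37.0, 36.9, 36.8],
    "F": [36.8, 36.6, 36.7],
    "G": [37.6, 37.7, 37.5],
}

z_scores = robust_site_z_scores(temperatures)
flagged = {site for site, z in z_scores.items() if abs(z) > MODIFIED_Z_CUTOFF}
print(f"sites flagged for review: {sorted(flagged)}")
```

The median and MAD are used instead of the mean and standard deviation because the outlying site would otherwise pull both toward itself and weaken its own signal.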

SDM is particularly effective at identifying:

- Data Fabrication or Sloppiness: It can spot patterns that are “too perfect” or lack the natural randomness of real data. For example, it might apply tests like Benford’s Law to numerical data to detect non-random digit distributions, or it might flag a site where repeated measurements (like heart rate) show impossibly low variance, suggesting data was copied and pasted rather than genuinely measured.

- Equipment or Procedural Issues: By comparing data distributions across sites, SDM can flag a site whose measurements are consistently higher or lower than all others, suggesting an uncalibrated thermometer, a malfunctioning scale, or a misunderstanding of a measurement procedure.

- Systematic Protocol Deviations: It can catch subtle but consistent errors, such as a site that always performs a specific assessment on the wrong day of the visit schedule.
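Digit preference is one of the easier SDM checks to sketch. The example below, a minimal illustration rather than a production test, counts the last digit of each integer reading and computes a chi-square goodness-of-fit statistic against a uniform distribution of terminal digits. It assumes readings are recorded as integers with enough spread that each final digit should be roughly equally likely; the site data are hypothetical, and 27.877 is the chi-square critical value for 9 degrees of freedom at alpha = 0.001.

```python
from collections import Counter

CHI2_CRITICAL_DF9_ALPHA_0001 = 27.877  # chi-square critical value, 9 df, alpha = 0.001


def terminal_digit_chi2(readings: list[int]) -> float:
    """Chi-square statistic comparing terminal-digit counts with a uniform expectation."""
    counts = Counter(abs(r) % 10 for r in readings)
    expected = len(readings) / 10
    return sum((counts[d] - expected) ** 2 / expected for d in range(10))


# Hypothetical systolic blood pressure readings (mmHg) from two sites.
site_a = [120 + 5 * (i % 20) for i in range(100)]  # every value ends in 0 or 5
site_b = list(range(110, 210))  # every terminal digit appears equally often

for name, readings in {"site_a": site_a, "site_b": site_b}.items():
    stat = terminal_digit_chi2(readings)
    verdict = "possible digit preference" if stat > CHI2_CRITICAL_DF9_ALPHA_0001 else "no signal"
    print(f"{name}: chi2 = {stat:.1f} -> {verdict}")
```

Real blood pressure devices and protocols sometimes round legitimately, so a flag like this is a prompt for investigation, not evidence of misconduct.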

The Data Inconsistency Score (DIS) is a key metric from SDM. It is a composite score that quantifies how far a site’s overall data patterns deviate from the trial-wide norm. A high DIS does not automatically mean fraud, but it is a clear signal for the central monitor to investigate further. In one analysis, 83% of sites showed an improved DIS after study teams addressed the risk signals that SDM identified.
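The exact DIS formula is not given here, so the sketch below uses a swapped-in, openly simpler technique to illustrate the general idea of a composite score: it combines several per-test p-values for one site with Fisher’s method and reports -log10 of the combined p-value, so larger scores mean stronger evidence of atypical data. Fisher’s method assumes the tests are independent, which checks run on the same site’s data often are not; the function names, site names, and p-values are hypothetical.

```python
import math


def fisher_combined_p(p_values: list[float]) -> float:
    """Combine independent p-values with Fisher's method.

    The statistic X = -2 * sum(ln p) follows a chi-square distribution with 2k
    degrees of freedom under the null; for even degrees of freedom the upper
    tail has the closed form exp(-X/2) * sum_{i=0}^{k-1} (X/2)^i / i!.
    """
    half_stat = -sum(math.log(p) for p in p_values)  # X / 2
    term, tail_sum = 1.0, 0.0
    for i in range(len(p_values)):
        tail_sum += term
        term *= half_stat / (i + 1)
    return math.exp(-half_stat) * tail_sum


def composite_site_score(p_values: list[float]) -> float:
    """Hypothetical composite score: -log10 of the Fisher-combined p-value."""
    return -math.log10(fisher_combined_p(p_values))


# Hypothetical p-values from three SDM tests (e.g., site means, variability, digit preference).
site_tests = {
    "site_07": [0.01, 0.02, 0.03],
    "site_12": [0.50, 0.40, 0.70],
}
scores = {site: composite_site_score(ps) for site, ps in site_tests.items()}
for site, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{site}: composite score {score:.2f}")
```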

Key Risk Indicators (KRIs)

While SDM performs broad, unsupervised surveillance, Key Risk Indicators (KRIs) are pre-defined, supervised metrics that monitor specific, known risk factors that could impact patient safety, data integrity, or trial timelines. They are the targeted alarms for the risks you identified as most critical during your initial risk assessment.

KRIs act as an early warning system, tracking metrics across different categories:

- Operational KRIs: Screen failure rates, patient enrollment velocity, patient withdrawal rates. A sudden spike in screen failures might signal a misunderstanding of eligibility criteria.

- Data Quality KRIs: Data query rates, time from patient visit to data entry, number of protocol deviations. High query rates from a specific site often point to training or data entry issues.

- Safety KRIs: Adverse event (AE) and serious adverse event (SAE) reporting rates, time from AE onset to reporting. High variance in reporting rates between sites could indicate underreporting or inconsistent assessment.

Setting KRI thresholds is a critical step. They shouldn’t be arbitrary; they are typically defined using statistical analysis of historical trial data, industry benchmarks, or by establishing a baseline during the first few months of the trial. When a site’s performance on a KRI crosses a pre-defined threshold (e.g., its query rate is two standard deviations above the trial average for three consecutive weeks), an alert is triggered. This targeted nature is highly effective: data shows that 83% of site KRIs improved when study teams closed the associated risk signals.
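The example threshold rule above (query rate more than two standard deviations above the trial average for three consecutive weeks) can be sketched as follows. This is a minimal illustration under stated assumptions: query rates are hypothetical values in queries per 100 data points, the trial average and sample standard deviation are recomputed each week across all sites, and the function name and data layout are invented for this example.

```python
import statistics


def kri_alerts(
    weekly_rates: list[dict[str, float]], n_sd: float = 2.0, run_length: int = 3
) -> dict[str, int]:
    """Return {site: week number (1-based) on which its alert first fires}."""
    streaks: dict[str, int] = {}
    alerts: dict[str, int] = {}
    for week, rates in enumerate(weekly_rates, start=1):
        values = list(rates.values())
        threshold = statistics.fmean(values) + n_sd * statistics.stdev(values)
        for site, rate in rates.items():
            # Extend the run of consecutive above-threshold weeks, or reset it to zero.
            streaks[site] = streaks.get(site, 0) + 1 if rate > threshold else 0
            if streaks[site] >= run_length and site not in alerts:
                alerts[site] = week
    return alerts


# Hypothetical weekly query rates (queries per 100 data points) for eight sites.
baseline = {"S1": 2.0, "S2": 2.1, "S3": 1.9, "S4": 2.0, "S5": 2.2, "S6": 1.8, "S7": 2.0}
week_1 = {**baseline, "S8": 2.1}
spike = {**baseline, "S8": 6.0}  # S8's query rate jumps from week 2 onward

print(kri_alerts([week_1, spike, spike, spike]))  # {'S8': 4}
print(kri_alerts([week_1, spike, spike]))  # {}
```

Because the flagged site is included in the weekly mean and standard deviation, a single extreme site inflates its own benchmark; a leave-one-out baseline or a robust scale estimate is a common alternative when only a few sites are active.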

Quality Tolerance Limits (QTLs)

Quality Tolerance Limits (QTLs) are the ultimate safety net. They are study-level thresholds that define the acceptable upper and lower limits for specific, critical errors that could compromise the trial’s integrity or patient safety. This concept is a cornerstone of the ICH E6 (R2) guidelines on quality management, which mandate a proactive, risk-based approach to quality.

Unlike KRIs, which monitor site performance, QTLs monitor the overall health of the entire study. They focus on systemic issues by tracking critical-to-quality factors such as:

- Primary Endpoint Integrity: The percentage of patients with missing or unevaluable primary endpoint data.

- Protocol Compliance: The overall percentage of major protocol deviations that could impact patient safety or data integrity.

- Patient Discontinuation: The overall rate of patient withdrawal from the study.

A breached QTL is a major event that signals a systemic, study-level issue requiring an immediate and strategic response. For example, if the QTL for “percentage of patients missing primary endpoint data” is set at 5% and the trial-wide rate hits 5.1%, it triggers a formal escalation. A cross-functional team would immediately launch a root cause analysis to determine why this is happening across the study. The investigation might reveal that the protocol’s endpoint collection procedure is too burdensome for patients. The response would be a formal Corrective and Preventive Action (CAPA) plan, which could involve a protocol amendment, retraining all sites, and notifying regulatory authorities.
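The QTL example above reduces to a study-level rate compared against predefined limits. The sketch below is a minimal illustration, assuming hypothetical patient counts and treating a value strictly beyond a limit as a breach; the class and field names are invented for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class QualityToleranceLimit:
    parameter: str
    lower_pct: Optional[float] = None  # breach if the observed rate falls below this
    upper_pct: Optional[float] = None  # breach if the observed rate rises above this

    def evaluate(self, numerator: int, denominator: int) -> tuple[float, bool]:
        """Return the observed study-level rate (%) and whether it breaches a limit."""
        rate_pct = 100.0 * numerator / denominator
        above = self.upper_pct is not None and rate_pct > self.upper_pct
        below = self.lower_pct is not None and rate_pct < self.lower_pct
        return rate_pct, above or below


missing_endpoint_qtl = QualityToleranceLimit("patients missing primary endpoint data", upper_pct=5.0)
rate, breached = missing_endpoint_qtl.evaluate(numerator=51, denominator=1000)  # hypothetical counts
if breached:
    print(f"QTL breached: {rate:.1f}% {missing_endpoint_qtl.parameter}; escalate for root cause analysis")
```

A lower limit is included because, for some parameters, an implausibly low rate (for example, almost no discontinuations) can itself point to a data problem.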

A Practical Guide to Implementing Centralized Monitoring

Implementing a centralized monitoring system in clinical trials is more about smart planning and strategic change management than sheer technical complexity. The key is shifting your team’s mindset from a reactive, site-by-site audit culture to a proactive, data-driven oversight model. Success hinges on a well-defined plan, cross-functional collaboration, and an iterative approach.

Start by getting stakeholders—from clinical operations to biostatistics and site managers—excited about the benefits, such as catching critical issues earlier and making on-site visits more productive and meaningful. Your monitoring plan is the blueprint for success, defining the risk assessment strategy, the specific mix of monitoring activities (centralized, remote, and on-site), and how they will work together in a cohesive system.

Best Practices for a Successful Centralized Monitoring System

- Start with cross-functional teams: True success requires a fusion of expertise. Involve data managers, biostatisticians, clinical monitors (CRAs), medical monitors, and site managers from the very beginning. This ensures that the selected KRIs are clinically relevant, statistically sound, and operationally feasible. This collaborative approach also builds buy-in and shared ownership of the process.

- Conduct thorough risk assessment and planning: This is the foundation of your entire strategy. Use a structured tool such as TransCelerate’s Risk Assessment and Categorization Tool (RACT) to identify and prioritize risks to patient safety and data integrity. For each high-priority risk, define how it will be monitored. This “quality by design” approach, consistent with the risk-based quality management expected under ICH E6(R2), ensures your monitoring efforts are focused on what truly matters.

- Define KRIs and QTLs carefully: Avoid the temptation to monitor everything. An overwhelming number of indicators creates noise and leads to alert fatigue. Start with a focused set of 10-20 critical indicators tied directly to the highest risks identified in your assessment. Use resources like SOCRA’s suggested variables for guidance, but tailor them to your specific protocol, therapeutic area, and study phase.

- Select technology wisely: Choose solutions that integrate seamlessly with your existing systems, especially your Electronic Data Capture (EDC) platform. Key features to look for include interactive dashboards, automated alerting, robust statistical analysis tools, and clear audit trails. You can start with existing tools like SAS or R and evolve to more sophisticated, dedicated risk-based monitoring (RBM) platforms over time.

- Maintain clear documentation: A well-documented risk management plan, monitoring plan, and issue resolution workflows are crucial for regulatory inspections and team alignment. Document the rationale for each KRI and QTL, the defined thresholds, and the escalation path for any triggered alert. This creates a transparent and defensible process.

- Provide continuous training and support: Your team needs to be confident in the new process. Conduct regular workshops on how to interpret statistical outputs, investigate signals, and use the technology platform. Create a culture where central monitors feel empowered to act as data detectives, not just report generators.

- Take an iterative approach: Don’t aim for perfection on day one. Pilot your centralized monitoring system on a smaller or less complex study to identify process gaps and refine your KRIs. Collect feedback from the team and sites, and use it to improve the system before a full-scale rollout.

- Focus on critical data and processes: The goal is not to replicate 100% SDV remotely. It’s to shift focus from verifying every single data point to protecting the critical data and processes that ensure patient safety and the integrity of the study’s primary endpoints. This is a more efficient and effective use of resources.
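Several of the practices above (linking every indicator to an assessed risk, keeping the set focused, and documenting rationale, thresholds, and escalation paths) can be checked mechanically. The sketch below is a hypothetical structure, not a standard format or any vendor’s schema: risk IDs stand in for entries in a RACT-style assessment, and the 20-indicator ceiling mirrors the upper end of the range suggested above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndicatorDefinition:
    name: str
    kind: str  # "KRI" (site-level) or "QTL" (study-level)
    risk_id: str  # hypothetical ID of the assessed risk this indicator monitors
    rationale: str
    threshold: str  # human-readable threshold rule
    escalation_path: str


def validate_monitoring_plan(
    indicators: list[IndicatorDefinition], assessed_risk_ids: set[str], max_indicators: int = 20
) -> list[str]:
    """Return documentation gaps; an empty list means no gaps were found."""
    problems: list[str] = []
    if len(indicators) > max_indicators:
        problems.append(f"{len(indicators)} indicators defined; risk of alert fatigue")
    for ind in indicators:
        if ind.kind not in {"KRI", "QTL"}:
            problems.append(f"{ind.name}: unknown kind {ind.kind!r}")
        if ind.risk_id not in assessed_risk_ids:
            problems.append(f"{ind.name}: not linked to an assessed risk")
        for field_name in ("rationale", "threshold", "escalation_path"):
            if not getattr(ind, field_name).strip():
                problems.append(f"{ind.name}: missing {field_name}")
    # Every assessed high-priority risk should be monitored by at least one indicator.
    covered = {ind.risk_id for ind in indicators}
    for risk_id in sorted(assessed_risk_ids - covered):
        problems.append(f"risk {risk_id}: no indicator monitors it")
    return problems


risks = {"R1", "R2"}
query_rate_kri = IndicatorDefinition(
    name="Query rate",
    kind="KRI",
    risk_id="R1",
    rationale="High query rates point to training or data entry issues.",
    threshold="> trial mean + 2 SD for 3 consecutive weeks",
    escalation_path="Central monitor -> site CRA -> study manager",
)
missing_endpoint_qtl = IndicatorDefinition(
    name="Missing primary endpoint data",
    kind="QTL",
    risk_id="R2",
    rationale="",  # deliberately blank to show a documentation gap
    threshold="> 5% of patients",
    escalation_path="Cross-functional QTL review -> CAPA",
)

for problem in validate_monitoring_plan([query_rate_kri, missing_endpoint_qtl], risks):
    print(problem)
```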

Common Challenges and How to Overcome Them

- Data Privacy Concerns: Centralizing data analysis raises valid privacy questions. Overcome this by implementing robust technical and administrative controls, including strong data encryption (in transit and at rest), strict role-based access controls, and regular security audits. For highly sensitive data, federated approaches, where data remains at its source and only aggregated, non-identifiable insights are shared, offer a powerful solution for both security and analysis.

- Data Integration: Clinical trial data often lives in multiple, siloed systems (EDC, CTMS, labs, ePRO, wearables). The challenge is creating a single, unified view. Address this by using modern integration tools with robust APIs and by standardizing on data formats (like CDISC) where possible. A dedicated data integration layer can harmonize these diverse sources without introducing errors, providing a clean dataset for analysis.

- Site Resistance: Sites may perceive centralized monitoring as “big brother” or fear it will replace their trusted CRA relationship. Overcome this with clear communication. Emphasize that the goal is to reduce their burden from routine, time-consuming audit visits and make necessary on-site visits more focused, collaborative, and productive. Frame it as a partnership to improve quality, not a new form of policing.

- Perceived Complexity: The statistical terminology can be intimidating. Frame centralized monitoring as an extension of what clinical professionals already do: risk management. The core concepts—identifying risks, setting thresholds, and investigating deviations—are familiar. Focus training on the practical application and interpretation of the data, not on the complex statistical theory behind it.

- Resource Allocation and Skill Gaps: You will need team members with data analysis skills. However, many experienced CRAs and data managers have strong foundational abilities. Invest in targeted training to upskill your existing team in areas like statistical literacy, root cause analysis, and data visualization. You may need to hire or consult with a biostatistician, but the entire team can become proficient in using the system.

- Ensuring Data Integrity: While centralized systems are designed to improve data integrity, they are not infallible. The key is to establish clear, documented processes for what happens when the system identifies an issue. Define the workflow for investigating, escalating, and resolving signals, and ensure every action is documented in an audit trail. This closed-loop process ensures that insights from the system lead to tangible improvements in data quality.
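The closed-loop workflow described above can be modeled as a small state machine in which every status change is appended to an audit trail. The status names, allowed transitions, and record fields below are assumptions for illustration; a validated system would also need tamper-evident storage and user authentication, which this sketch omits.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical lifecycle: which status changes are permitted from each status.
ALLOWED_TRANSITIONS: dict[str, set[str]] = {
    "open": {"investigating"},
    "investigating": {"escalated", "resolved"},
    "escalated": {"resolved"},
    "resolved": {"closed", "investigating"},  # reopen if the corrective action does not hold
    "closed": set(),
}


@dataclass
class RiskSignal:
    signal_id: str
    description: str
    status: str = "open"
    audit_trail: list[dict[str, str]] = field(default_factory=list)

    def transition(self, new_status: str, user: str, note: str) -> None:
        """Change status only along an allowed path, recording who, when, and why."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(f"{self.signal_id}: cannot move from {self.status} to {new_status}")
        self.audit_trail.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "from": self.status,
            "to": new_status,
            "note": note,
        })
        self.status = new_status


signal = RiskSignal("SIG-001", "Query rate KRI above threshold at site S8")
signal.transition("investigating", user="central.monitor", note="Reviewing query listings with the site CRA")
signal.transition("resolved", user="site.cra", note="Coordinator retrained on eCRF completion")
signal.transition("closed", user="central.monitor", note="Query rate back within threshold")

try:
    signal.transition("investigating", user="central.monitor", note="Attempt to reopen a closed signal")
    rejected_message = ""
except ValueError as err:
    rejected_message = str(err)

for entry in signal.audit_trail:
    print(entry["from"], "->", entry["to"], "|", entry["user"], "|", entry["note"])
print(rejected_message)
```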

The Future is Now: AI, Machine Learning, and the Evolution of Centralized Monitoring

The centralized monitoring system in clinical trials is rapidly evolving, driven by the transformative power of artificial intelligence (AI) and machine learning (ML). These technologies are moving trial oversight beyond descriptive analytics (what happened?) and into the fields of predictive (what will likely happen?) and prescriptive analytics (what should we do about it?).

AI and ML act as powerful force multipliers, processing vast and complex datasets to spot subtle trends, predict future problems, and recommend optimal interventions. This represents a massive leap forward, promising a future of semi-autonomous trial oversight characterized by greater efficiency, accuracy, and proactive risk management.

How AI and ML Improve a Centralized Monitoring System

- Improved Efficiency and Automation: AI algorithms can automate thousands of routine data checks, cross-referencing data across different forms and sources to flag inconsistencies in seconds. This frees up human monitors to focus their expertise on complex, high-stakes problem-solving and strategic decision-making rather than manual data review.

- Predictive Risk Modeling: This is where ML truly shines. By training models on historical data from previous trials, systems can predict which sites are most likely to encounter specific problems. For example, an ML model could analyze site characteristics and early performance data to predict that a site is 80% likely to fall behind on enrollment targets in the next quarter, enabling proactive support and intervention before the problem occurs.

- Natural Language Processing (NLP) for Unstructured Data: A vast amount of critical trial information is locked in unstructured, free-text fields like adverse event narratives, physician notes, or site communication logs. NLP algorithms can scan this text to identify unreported adverse events, inconsistencies between a narrative and structured data, or emerging site sentiment issues. In one case, an ML algorithm using NLP improved the quality and consistency of AE documentation by 25% by flagging incomplete or ambiguous narratives for review.

- Anomaly Detection at Scale: While traditional statistical monitoring is good at finding outliers, AI excels at finding complex, multi-dimensional patterns in massive datasets that are invisible to the human eye. This is crucial for detecting sophisticated fraud, subtle equipment drift across multiple parameters, or complex protocol deviations involving interactions between several variables.

- Dynamic Monitoring Plan Optimization: AI can move us beyond static monitoring plans. An AI-powered system can continuously analyze trial complexity, site performance, and emerging risks to recommend a dynamic, optimized mix of centralized, remote, and on-site monitoring activities for each site, updated in real-time. This ensures that monitoring resources are always deployed in the most efficient and effective way possible.

- Real-time Insights: AI-powered systems provide constant, 24/7 surveillance of a trial’s risk profile. Instead of monthly or quarterly risk reports, study managers can have a live, continuously updated view of trial health, allowing for instant alerts and agile adjustments to the monitoring strategy.
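To make the predictive-modeling idea concrete, the sketch below trains a plain logistic regression by batch gradient descent on a handful of hypothetical historical sites, using two assumed features (early enrollment as a fraction of target, and screen failure rate) to estimate the probability that a site falls behind. It is a deliberately small, dependency-free illustration of one possible model, not a recommended feature set or a validated predictor; real models need far more data, proper validation, and calibration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(
    X: list[list[float]], y: list[int], lr: float = 0.5, epochs: int = 3000
) -> tuple[list[float], float]:
    """Fit weights and bias by minimizing average log loss with batch gradient descent."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical historical sites: [early enrollment / target, screen failure rate]
X_hist = [
    [0.2, 0.50], [0.3, 0.45], [0.4, 0.40], [0.5, 0.35],  # fell behind (label 1)
    [1.0, 0.15], [1.1, 0.10], [1.2, 0.15], [1.3, 0.10],  # stayed on track (label 0)
]
y_hist = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train_logistic_regression(X_hist, y_hist)
new_sites = {"site_struggling": [0.35, 0.425], "site_on_track": [1.15, 0.125]}
for name, features in new_sites.items():
    print(f"{name}: P(falls behind) = {predict_proba(weights, bias, features):.2f}")
```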

The Role of Federated Learning in Secure AI

At Lifebit, we are pioneering this revolution with our federated AI platform, which is purpose-built to power the next generation of centralized monitoring systems in clinical trials. A key challenge in applying AI is accessing large, diverse datasets without compromising patient privacy or data security. Our federated approach solves this. Instead of moving sensitive data to a central server for analysis, our platform sends the AI algorithms to the data’s secure location. The analysis happens behind the institution’s firewall within a secure Trusted Research Environment (TRE), and only the aggregated, non-identifiable results are returned. This allows powerful AI/ML models to be trained on global biomedical data while the data itself remains safe and compliant. Components like our R.E.A.L. (Real-time Evidence & Analytics Layer) leverage this federated architecture to deliver AI-driven insights, making it the ideal foundation for a secure, intelligent, and predictive centralized monitoring system.
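The core idea of sending the analysis to the data can be illustrated without any machine learning. In the sketch below, which is a generic federated-analytics pattern and not Lifebit’s implementation, each node computes a count, mean, and sum of squared deviations inside its own environment, suppresses the result if it covers too few patients, and shares only that summary; a coordinator then merges the summaries with the standard pairwise formula for combining means and variances. The node names, values, and the minimum cell size of 5 are assumptions. Federated learning of models extends the same principle by exchanging model updates instead of summary statistics.

```python
import statistics
from dataclasses import dataclass
from functools import reduce
from typing import Optional

MIN_CELL_SIZE = 5  # hypothetical disclosure-control rule


@dataclass(frozen=True)
class LocalSummary:
    n: int
    mean: float
    m2: float  # sum of squared deviations from the local mean


def summarize_locally(values: list[float]) -> Optional[LocalSummary]:
    """Runs inside a node's secure environment; only this summary leaves the node."""
    if len(values) < MIN_CELL_SIZE:
        return None  # too few records to release safely
    mean = statistics.fmean(values)
    return LocalSummary(len(values), mean, sum((v - mean) ** 2 for v in values))


def merge(a: LocalSummary, b: LocalSummary) -> LocalSummary:
    """Combine two summaries exactly, without access to the underlying records."""
    n = a.n + b.n
    delta = b.mean - a.mean
    mean = a.mean + delta * b.n / n
    m2 = a.m2 + b.m2 + delta ** 2 * a.n * b.n / n
    return LocalSummary(n, mean, m2)


# Hypothetical systolic blood pressure values held at three nodes (never pooled in practice).
nodes = {
    "node_a": [120.0, 130.0, 125.0, 140.0, 135.0],
    "node_b": [118.0, 122.0, 128.0, 131.0, 127.0, 124.0],
    "node_c": [150.0, 155.0],  # below the minimum cell size, so it is suppressed
}

released = [s for s in (summarize_locally(v) for v in nodes.values()) if s is not None]
combined = reduce(merge, released)
sample_variance = combined.m2 / (combined.n - 1)
print(f"n = {combined.n}, mean = {combined.mean:.2f}, variance = {sample_variance:.2f}")
```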

Frequently Asked Questions about Centralized Monitoring

When teams consider adopting centralized monitoring systems in clinical trials, a few key questions consistently arise. Here are answers to the three most common concerns.

What is the difference between centralized monitoring and remote monitoring?

The terms are often used interchangeably, but they have distinct meanings. Remote monitoring is a broad term for any monitoring activity done without being physically at the site, such as reviewing documents electronically.

Centralized monitoring is a specific type of remote monitoring that involves the aggregate analysis of data from multiple sites. It uses statistical methods to identify cross-site trends, outliers, and systemic risks. For example, reviewing case report forms from one site is remote monitoring; analyzing enrollment patterns across all 50 sites to find outliers is centralized monitoring. The power of centralized monitoring lies in these cross-site insights.

Does centralized monitoring completely replace on-site visits?

No. Centralized monitoring complements on-site visits, making them smarter and more targeted, rather than replacing them. The goal is a hybrid, risk-based approach.

On-site visits remain essential for:

- Building strong site relationships and trust.

- Conducting hands-on training for complex procedures.

- Investigating serious issues flagged by centralized monitoring, such as potential data fabrication.

- Verifying essential documents and investigational product accountability.

The change is a shift from routine, calendar-based visits to focused, data-driven visits triggered by specific needs identified centrally.

What skills does a central monitor need?

While new skills are needed, many core competencies transfer from traditional monitoring. A central monitor needs a blend of expertise:

- Clinical Trial and GCP Knowledge: A strong foundation in trial processes and Good Clinical Practice (GCP) is non-negotiable.

- Data Management Expertise: Understanding data collection, cleaning, and validation is critical.

- Statistical Literacy: A PhD is not required, but understanding concepts such as the normal distribution and p-values is necessary to interpret data and spot outliers.

- Analytical and Problem-Solving Skills: Central monitors must act like detectives, forming hypotheses about data patterns and working with sites to resolve issues.

- Communication Skills: The ability to explain statistical findings to non-statisticians and collaborate with diverse stakeholders is vital.

Many experienced clinical research professionals already possess these foundational skills and can be upskilled with targeted training.

Conclusion

The clinical trial landscape is changing. As studies grow more complex and data volumes explode, traditional monitoring methods are no longer sufficient. The shift to proactive quality management through centralized monitoring systems in clinical trials is transforming how the industry protects patient safety and data integrity.

We’ve seen how these systems change the game. By combining Statistical Data Monitoring (SDM), Key Risk Indicators (KRIs), and Quality Tolerance Limits (QTLs), we move from reactive to proactive oversight, addressing issues as they emerge.

The results are compelling: in one analysis, 83% of sites showed improved data quality scores when risk signals were addressed, producing more reliable data for regulatory submissions.

The future of clinical trials is data-driven. AI and machine learning are enabling predictive analytics that can flag issues before they become problems, while federated approaches allow us to analyze sensitive data securely.

This evolution is about improving patient outcomes through smarter, more efficient research. When we detect safety signals faster and ensure data quality more effectively, patients, sponsors, and sites all benefit.

Data-driven decision making is essential for modern clinical research. Organizations that adopt centralized monitoring today will lead tomorrow’s medical breakthroughs.

Ready to see how federated analytics can transform your clinical trial oversight? Explore our platform for advanced clinical trial analytics and discover how Lifebit’s secure, real-time data platform can help you unlock the full potential of your research data.