Secure Your Data Lakehouse: Principles for Robust Governance

Why Most Data Lakehouses Fail—And How to Fix Yours Fast

Data lakehouse governance is what makes your data platform a competitive advantage instead of a compliance nightmare. Here’s what you need to know.

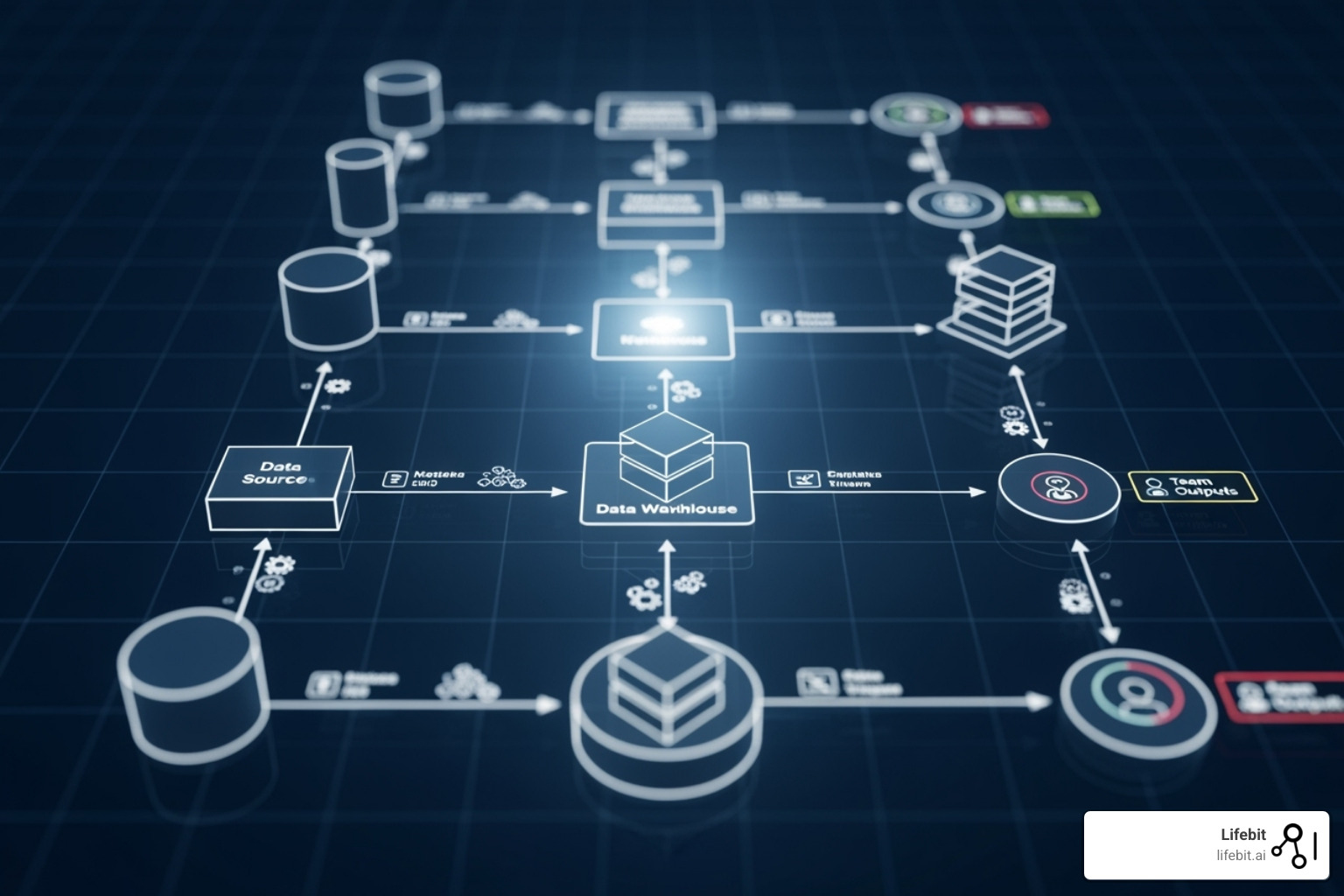

Key Components of Data Lakehouse Governance:

- Unified Management: A central catalog and single source of truth.

- Security & Access Control: Fine-grained permissions and audit trails for compliance (HIPAA, GDPR).

- Data Quality: Automated validation and lineage tracking.

- AI Governance: Model lifecycle management and bias detection.

The numbers are stark: nearly 70% of organizations struggle with unified data management, leading to security gaps and failed analytics. Data teams waste 80% of their time finding and preparing data, leaving just 20% for analysis.

The reality is that data sprawl kills productivity. Poor governance turns your lakehouse into a data swamp where nothing can be trusted.

The good news? A well-governed lakehouse delivers unified access to trustworthy data, faster insights, and bulletproof compliance. Strong governance boosts efficiency, cuts costs, and democratizes data securely.

I’m Maria Chatzou Dunford, CEO of Lifebit. With over 15 years of experience building secure platforms for biomedical data, I’ve seen how critical data lakehouse governance is—from healthcare compliance to global federated analytics.

The 3 Pillars Every Data Lakehouse Needs to Stay Secure and Compliant

Does your data team waste Monday morning hunting for the right dataset, only to question if it’s clean, current, or compliant? This chaos is expensive. Data lakehouse governance isn’t complicated; it rests on three pillars that replace data archaeology with confident analysis.

These principles determine whether your teams can work with confidence or spend their days playing data detective.

Unify Data and AI Management—End Duplication and Guesswork

Imagine a Google-like experience for finding data in your organization. That’s what unified data and AI management delivers. Instead of hunting through folders or asking colleagues, everything lives in a central catalog.

This isn’t a file browser; it’s a rich repository for all your data, AI models, notebooks, and dashboards, complete with the business context you need. When someone searches for customer data, they see what’s in each dataset, when it was last updated, and who owns it. For example, a retail analyst looking to understand customer churn can search the catalog and instantly discover three key datasets: sales_transactions, marketing_engagement, and customer_support_tickets. The catalog provides descriptions, quality scores, and contact information for the data steward of each, turning a week of data hunting into a ten-minute task.
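To make the catalog idea concrete, here is a minimal in-memory sketch in Python. The `CatalogEntry` fields, the keyword-scoring rule, and the steward addresses (on the reserved example.com domain) are hypothetical. A production catalog sits on a metadata service with real indexing, but the shape of the lookup is the same: search terms in, ranked datasets with their business context out.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata the catalog keeps for one dataset (fields are illustrative)."""
    name: str
    description: str
    steward: str          # contact for the responsible data steward
    quality_score: float  # assumed 0.0-1.0, fed by automated quality checks
    last_updated: str     # ISO-8601 date of the latest refresh
    tags: set[str] = field(default_factory=set)


class DataCatalog:
    """Toy in-memory catalog: register datasets, then search them by keyword."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, query: str) -> list[CatalogEntry]:
        terms = query.lower().split()
        scored = []
        for entry in self._entries.values():
            text = " ".join([entry.name, entry.description, *entry.tags]).lower()
            matches = sum(term in text for term in terms)
            if matches:
                scored.append((matches, entry.quality_score, entry))
        # Most matching terms first; break ties by quality score.
        scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
        return [entry for _, _, entry in scored]


catalog = DataCatalog()
catalog.register(CatalogEntry("sales_transactions", "Order-level sales history",
                              "ana@example.com", 0.97, "2024-05-01", {"customer", "churn"}))
catalog.register(CatalogEntry("marketing_engagement", "Email and campaign interactions",
                              "li@example.com", 0.91, "2024-05-02", {"customer", "churn"}))
catalog.register(CatalogEntry("customer_support_tickets", "Support cases by customer",
                              "sam@example.com", 0.88, "2024-04-30", {"churn"}))
catalog.register(CatalogEntry("warehouse_inventory", "Stock levels by SKU",
                              "raj@example.com", 0.95, "2024-05-01", {"logistics"}))

for hit in catalog.search("customer churn"):
    print(hit.name, hit.quality_score, hit.steward, hit.last_updated)
```

Breaking ties by quality score is one reasonable choice; a real catalog might also weight recency or usage counts.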

By eliminating data sprawl, you get a single source of truth. No more duplicate datasets with conflicting numbers or teams building the same model twice. When you govern AI assets like features and models alongside data, your machine learning teams find what they need as easily as your analysts find reports.

The result? Teams analyze instead of hunt. Trust replaces guesswork. Everyone works from the same reliable foundation. To understand how this architecture comes together, check out What is a Data Lakehouse?

Unify Data and AI Security—Stop Leaks Before They Start

Security must be baked in, not bolted on. Unified security means managing who can access what from a single control center. Set rules once, and they apply everywhere. Giving the marketing team access to customer demographics but not personal identifiers takes a few clicks, not a week-long project. This is often achieved with Attribute-Based Access Control (ABAC), where policies are dynamic. For instance, a single policy could state, “Allow access to patient_records if the user’s role is ‘Researcher’ AND the project’s purpose is ‘Approved Cancer Study’ AND the data’s sensitivity is ‘Anonymized’.” This is far more scalable and precise than managing thousands of static, role-based permissions.
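Here is a minimal sketch of how an ABAC rule like the one above could be evaluated, in Python. The attribute names, the dictionary policy format, and the `is_allowed` helper are illustrative assumptions, not any product's policy language. Two properties carry over to real systems: access is denied by default, and a single rule covers every user whose attributes match.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    """Attributes describing who is asking, why, and for what."""
    user_role: str
    project_purpose: str
    resource: str
    data_sensitivity: str


# Each policy names a resource and the attribute values that must ALL hold.
# Values mirror the patient_records example in the text.
POLICIES = [
    {
        "resource": "patient_records",
        "require": {
            "user_role": "Researcher",
            "project_purpose": "Approved Cancer Study",
            "data_sensitivity": "Anonymized",
        },
    },
]


def is_allowed(ctx: AccessContext, policies=POLICIES) -> bool:
    """Default-deny: allow only if some policy for the resource matches every attribute."""
    for policy in policies:
        if policy["resource"] != ctx.resource:
            continue
        if all(getattr(ctx, attr) == value
               for attr, value in policy["require"].items()):
            return True
    return False


print(is_allowed(AccessContext("Researcher", "Approved Cancer Study",
                               "patient_records", "Anonymized")))
```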

This is critical for privacy-sensitive work. With health data or financial records, mistakes are career-ending. Privacy by design makes these protections automatic, not optional.

Compliance becomes manageable. Whether you’re navigating HIPAA, GDPR, or other regulations, a unified security model ensures you’re covered. Trying to piece together compliance across scattered systems is a nightmare.

When auditors come knocking, you have complete visibility into who accessed what data and when. Every interaction is tracked and ready for review. Organizations working with sensitive data know this is mission-critical. Learn more about our approach in Data Security in Nonprofit Health Research.

Enforce Data Quality—Trust Every Number, Every Time

What if your biggest business decisions are based on wrong data? Poor data quality is the silent killer of analytics. If your data is garbage, your insights will be too.

Data quality means having the right numbers. Is your customer data accurate? Are financial reports consistent? Is real-time data actually real-time?

Automated data quality is key. Instead of hoping someone checks for problems, your pipelines have built-in validation that catches issues before they spread. These checks can include tests for completeness (e.g., customer_id must not be null), uniqueness (no duplicate order_id values), validity (email addresses must match a standard format), and timeliness (data must be refreshed within the last 24 hours). It’s like a spell-check for your data, constantly running in the background and flagging or quarantining bad data before it pollutes your analytics.
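The four checks above can be sketched as a single validation pass that routes failing rows to quarantine instead of letting them flow downstream. This Python version is an assumption-laden illustration: the column names come from the text, while the simple email regex, the 24-hour default, and the `validate` function itself are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# Deliberately simple email check; real validators are more nuanced.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(rows, now, max_age=timedelta(hours=24)):
    """Split rows into (clean, quarantined); quarantined rows carry their failure reasons."""
    clean, quarantined = [], []
    seen_order_ids = set()
    for row in rows:
        errors = []
        if row.get("customer_id") is None:                # completeness
            errors.append("completeness: customer_id is null")
        if row.get("order_id") in seen_order_ids:         # uniqueness
            errors.append("uniqueness: duplicate order_id")
        seen_order_ids.add(row.get("order_id"))
        if not EMAIL_RE.match(row.get("email") or ""):    # validity
            errors.append("validity: malformed email")
        if now - row["updated_at"] > max_age:             # timeliness
            errors.append("timeliness: not refreshed within max_age")
        if errors:
            quarantined.append((row, errors))
        else:
            clean.append(row)
    return clean, quarantined


now = datetime(2024, 5, 2, 12, 0, tzinfo=timezone.utc)
rows = [
    {"order_id": 1, "customer_id": 10, "email": "a@example.com",
     "updated_at": now - timedelta(hours=1)},
    {"order_id": 1, "customer_id": None, "email": "not-an-email",
     "updated_at": now - timedelta(days=3)},
]
clean, quarantined = validate(rows, now)
for row, reasons in quarantined:
    print(row["order_id"], reasons)
```

Keeping every failure reason, rather than stopping at the first, makes quarantined rows much easier to triage.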

Standards matter. When everyone uses the same definitions and formats, integration is smooth. No more debates about what a term means across departments.

Data quality isn’t a one-time fix; it’s an ongoing process of profiling, cleansing, and monitoring. When you get this right, people start trusting your data. Executives stop questioning every number, and analysts find insights instead of validating basics. Your AI models perform better because they’re trained on reliable information.

Organizations tackling complex data integration challenges know this firsthand. Find practical approaches in Data Harmonization: Overcoming Challenges.

Build a Governance Framework That Actually Works: The 5 Must-Have Components

Governance isn’t a barrier; it’s the infrastructure that enables speed and safety. Data lakehouse governance is about building a system that lets your teams move fast while staying compliant. These five components are the essential building blocks for secure, self-service analytics.

Data Cataloging and Metadata Management—Find and Understand Data Instantly

A data catalog ends the frustrating hunt for data. It’s a centralized brain storing technical details (schemas), business context (definitions, ownership), and operational insights (usage patterns, quality scores). This is where the roles of Data Owners and Data Stewards become critical. A Data Steward, a subject-matter expert from the business, is responsible for curating their domain’s assets in the catalog, adding clear definitions and usage examples to bridge the gap between raw data and business meaning.

When users can find what they need, productivity soars. They search for “customer churn” and instantly see relevant datasets with descriptions and quality indicators. AI-generated comments can explain complex tables, while rich metadata provides the context to use data confidently.

This enables semantic consistency. When “customer” means the same thing everywhere, analysis becomes reliable. The catalog also becomes your governance command center, automatically identifying sensitive data, tracking compliance, and managing access policies. This metadata-driven approach makes enforcing rules feel effortless.
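At its core, automatic identification of sensitive data is often pattern matching. This hypothetical Python sketch tags a column when most sampled values match a known pattern, and falls back to column-name hints otherwise. The patterns, hint list, and 80% threshold are assumptions; commercial classifiers use far richer detectors and context.

```python
import re

# Illustrative value detectors; the first pattern that matches well enough wins.
VALUE_PATTERNS = {
    "EMAIL": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "US_SSN": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}
# Column-name fragments that suggest personal data even when values don't match.
NAME_HINTS = ("name", "email", "phone", "ssn", "dob", "address")


def classify_columns(table, sample_size=100, threshold=0.8):
    """Return {column: tag} for columns that look sensitive."""
    tags = {}
    for column, values in table.items():
        sample = [str(v) for v in values[:sample_size] if v is not None]
        for tag, pattern in VALUE_PATTERNS.items():
            hits = sum(bool(pattern.match(v)) for v in sample)
            hit_rate = hits / len(sample) if sample else 0.0
            if hit_rate >= threshold:
                tags[column] = tag
                break
        else:  # no value pattern matched well enough; try the column name
            if any(hint in column.lower() for hint in NAME_HINTS):
                tags[column] = "PII_BY_NAME"
    return tags


table = {
    "customer_email": ["a@example.com", "b@example.org"],
    "ssn": ["123-45-6789", "987-65-4321"],
    "full_name": ["Ada Lovelace", "Alan Turing"],
    "order_total": [120.5, 80.0],
}
print(classify_columns(table))
```

The resulting tags are what downstream masking and access policies would key on.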

At Lifebit, we’ve built our platform around making data instantly findable and understandable. Explore our approach to open-source governance in our platform design.

Fine-Grained Access Control and Security—Lock Down What Matters

Giving users all-or-nothing data access is a recipe for disaster. Fine-grained access control means getting surgical with permissions.

We implement this through role-based controls that align with your organization and attribute-based access that considers context—who you are, what you’re accessing, and where you’re located. Modern governance platforms manage this through policy-as-code, where rules are defined in a human-readable language (like YAML) and managed in a Git repository. This makes your security posture version-controlled, auditable, and easy to update.

The real power comes from row-level security and column masking. You can share one customer dataset where different teams see different views, all automated. Sales might see everything, while marketing sees only demographics and finance sees only transaction amounts.
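The sketch below combines policy-as-code with row-level security and column masking. It is a Python illustration under stated assumptions: the policy is JSON rather than YAML only so it needs no third-party parser, and the team names, the `customer_id` join key granted to every team, the mask token, and the `governed_view` helper are all hypothetical.

```python
import json

# Policy-as-code: in practice this might be a YAML file reviewed in Git; JSON keeps
# the sketch dependency-free. Team names, columns, and filters are hypothetical.
POLICY_DOC = """
{
  "customers": {
    "sales":      {"columns": "*", "row_filter": null},
    "marketing":  {"columns": ["customer_id", "age_band", "region"], "row_filter": null},
    "finance":    {"columns": ["customer_id", "amount"], "row_filter": null},
    "emea_sales": {"columns": "*", "row_filter": {"region": "EMEA"}}
  }
}
"""
POLICIES = json.loads(POLICY_DOC)
MASK = "****"


def governed_view(table, rows, team):
    """Apply row-level security, then column masking, for one team's view of a table."""
    policy = POLICIES.get(table, {}).get(team)
    if policy is None:
        return []  # default-deny: teams without a policy see nothing
    visible = policy["columns"]
    row_filter = policy["row_filter"] or {}
    view = []
    for row in rows:
        # Row-level security: keep only rows that match every filter attribute.
        if any(row.get(col) != val for col, val in row_filter.items()):
            continue
        # Column masking: keep every column but hide values the team may not see.
        view.append({col: val if visible == "*" or col in visible else MASK
                     for col, val in row.items()})
    return view


rows = [
    {"customer_id": 1, "name": "Ada", "age_band": "30-39", "region": "EMEA", "amount": 120.5},
    {"customer_id": 2, "name": "Bo", "age_band": "40-49", "region": "APAC", "amount": 80.0},
]
for team in ("sales", "marketing", "finance", "emea_sales"):
    print(team, governed_view("customers", rows, team))
```

Because the policy is data, changing who sees what is a reviewed edit to one document rather than a code change in every consuming tool.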

These controls adapt in real-time as roles and data classifications change, eliminating IT tickets and manual updates. The system just works, protecting sensitive information while enabling legitimate access. This approach is central to our Trusted Operational Governance Airlock.

Data Lineage—Prove Where Data Came From and Who Touched It

Data without lineage is untrustworthy. It’s like food without an ingredients label.

Data lineage tells the complete story of your data’s journey—from source systems through every change until it appears in a report or AI model.

This matters for several reasons. For compliance with regulations like GDPR, lineage provides an instant audit trail. For impact analysis, you can immediately see which downstream reports will be affected by a change in a data pipeline. For example, before changing a column name in a source table, a developer can use the lineage graph to see it will impact three key executive dashboards and two production ML models, allowing them to coordinate the change instead of causing unexpected failures. For root cause analysis, you can trace unexpected numbers back to the exact change that caused the issue.
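Impact analysis is essentially a graph traversal: start at the asset being changed and walk every edge downstream. In the hypothetical Python sketch below, the asset names and edges are invented to mirror the example above (one source column feeding three executive dashboards and two models). Real lineage graphs are captured by the platform rather than hand-written.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets that read from it.
LINEAGE = {
    "raw.orders.cust_id": ["silver.orders", "silver.customers"],
    "silver.orders": ["gold.revenue_daily", "ml.churn_features"],
    "silver.customers": ["gold.customer_360"],
    "gold.revenue_daily": ["dashboard:exec_revenue", "dashboard:exec_kpis"],
    "gold.customer_360": ["dashboard:exec_customers"],
    "ml.churn_features": ["model:churn_v3", "model:ltv_v1"],
}


def downstream(node, graph=LINEAGE):
    """Breadth-first walk returning every asset that depends on `node`."""
    seen, queue = set(), deque([node])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


impacted = downstream("raw.orders.cust_id")
dashboards = sorted(a for a in impacted if a.startswith("dashboard:"))
models = sorted(a for a in impacted if a.startswith("model:"))
print(dashboards, models)
```

Root cause analysis is the same walk in the opposite direction: reverse the edges and traverse upstream from the report showing the unexpected number.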

Most importantly, lineage builds trust. When analysts see how their data was created, they gain confidence in their insights. We capture lineage automatically as data flows through your lakehouse, creating a living documentation system. This is fundamental to our best practices for data and AI governance.

Auditing and Monitoring—Catch Issues Before They Become Breaches

Effective auditing and monitoring catch security incidents before they become headlines. This is your early warning system for unusual behavior.

Automated audit logs capture everything: who accessed what data, when, and what queries they ran. Real-time monitoring watches for suspicious activities as they happen, such as a user downloading unusually large datasets at 3 AM or accessing new data types. A mature governance strategy integrates this with automated alerting. For instance, a query that attempts to access a restricted column could trigger an immediate alert to a security channel in Slack, allowing for instant investigation.
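The two example rules above, restricted-column access and large off-hours pulls, can be sketched as checks over individual audit-log events. In this Python sketch, the column names, row threshold, business-hours window, and `AuditEvent` shape are hypothetical. The `send` parameter stands in for whatever posts to your security channel; no real Slack API is used here.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical rule settings; tune to your own baseline to limit false alarms.
RESTRICTED_COLUMNS = {"patients.ssn", "patients.diagnosis"}
MAX_ROWS_OFF_HOURS = 100_000
BUSINESS_HOURS = range(7, 20)  # 07:00-19:59 local time


@dataclass
class AuditEvent:
    user: str
    timestamp: datetime
    columns: set[str]
    rows_returned: int


def alerts_for(event: AuditEvent, send=print) -> list[str]:
    """Apply simple rules to one audit-log event and send one alert per violation."""
    found = []
    touched = event.columns & RESTRICTED_COLUMNS
    if touched:
        found.append(f"{event.user} queried restricted columns {sorted(touched)}")
    off_hours = event.timestamp.hour not in BUSINESS_HOURS
    if off_hours and event.rows_returned > MAX_ROWS_OFF_HOURS:
        found.append(f"{event.user} pulled {event.rows_returned:,} rows at {event.timestamp:%H:%M}")
    for message in found:
        send(message)  # e.g. a function that posts to your security chat channel
    return found


alerts_for(AuditEvent("jdoe", datetime(2024, 5, 2, 3, 0),
                      {"patients.ssn", "patients.age"}, 2_000_000))
```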

Monitoring isn’t just for security. It also helps you optimize performance and understand usage patterns. Which datasets are most popular? Where are the bottlenecks? These insights help you improve the platform continuously.

Compliance checks run automatically in the background, ensuring access permissions and data retention rules are properly enforced. The key is to balance vigilance with usability, providing comprehensive monitoring without overwhelming your team with false alarms.

Future-Proof Your Lakehouse: Govern AI, Embrace Open Standards, and Scale

Your data lakehouse is more than a data repository; it’s your AI foundation. But data lakehouse governance must evolve beyond traditional data management. To future-proof your investment, you must govern AI models and complex workflows across multiple clouds and vendors.

Govern AI and ML Assets—No More Black Box Models

AI governance requires rigorous model lifecycle management. Every model needs the same oversight as your most sensitive data, tracking everything from feature engineering through training, deployment, and retirement. This includes governing the feature store, where standardized features are stored, shared, and versioned to ensure consistency across models.

Reproducibility is essential for trust and compliance. When a model’s decision is questioned, you must be able to recreate the exact conditions that led to the outcome. We log datasets, parameters, code versions, and even the containerized compute environment for every training run so you can always trace what happened and why.
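One way to make a training run traceable is to record its inputs in a manifest and hash that manifest into a single fingerprint. This is a minimal Python sketch, assuming the dataset is small enough to hash in memory; the commit hash and image digest are placeholders, and `run_manifest` is a hypothetical helper, not a specific tracking tool's API.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def run_manifest(dataset: bytes, params: dict, code_commit: str, image_digest: str) -> dict:
    """Capture the inputs of one training run plus a fingerprint over all of them."""
    manifest = {
        "dataset_sha256": sha256_hex(dataset),  # content hash of the exact training data
        "params": params,                       # hyperparameters
        "code_commit": code_commit,             # e.g. a Git commit hash
        "container_image": image_digest,        # e.g. a container image digest
    }
    # Canonical JSON (keys sorted at every level) so identical inputs always hash identically.
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["run_fingerprint"] = sha256_hex(canonical)
    return manifest


# Placeholder values for illustration only.
manifest = run_manifest(b"id,label\n1,0\n2,1\n", {"lr": 0.01, "epochs": 5},
                        "3f2c9e1", "sha256:placeholder-digest")
print(json.dumps(manifest, indent=2))
```

For large tables, hashing the raw bytes is impractical; recording the table format's snapshot or version identifier serves the same purpose.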

Moving away from black box models toward explainable AI is a game-changer. Using techniques like SHAP (Shapley Additive Explanations), we help you understand not just what your models predict, but why. This transparency is crucial for building stakeholder trust and meeting regulatory requirements.
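The idea behind SHAP is the Shapley value: each feature's credit is its average marginal contribution across all coalitions of the other features. The SHAP library approximates this efficiently; the Python sketch below is not its API. It computes exact Shapley values by brute force for a toy model, treating "absent" features as set to a baseline, which is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction of `model` at input `x`.

    A feature that is absent from a coalition is replaced by its `baseline` value.
    Every subset is enumerated, so this is only feasible for a few features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical toy risk model with an interaction between features 0 and 1.
def risk(v):
    return v[0] * v[1] + v[2]


print(shapley_values(risk, x=[2, 3, 1], baseline=[0, 0, 0]))
```

The two interacting features split their joint contribution equally, and the attributions always sum to the prediction minus the baseline prediction, which is what makes this kind of explanation auditable.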

Bias detection should run continuously, monitoring models for unfair outcomes. The last thing you want is an algorithm that has been discriminating against certain groups for months. The result is an AI system you can trust and defend. Our approach to AI-Enabled Data Governance shows how this integration creates more reliable and ethical AI.
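A simple continuous bias check compares how often a model makes positive decisions for each group. This Python sketch uses the disparate impact ratio: the lowest group's selection rate divided by the highest. The 0.8 default follows the widely cited "four-fifths" rule of thumb, which is a screening heuristic, not a universal legal test. The group labels and scoring batch are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    positives, totals = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_check(predictions, groups, threshold=0.8):
    """Return (ratio, alert): lowest group rate divided by highest, flagged below threshold."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest else 1.0
    return ratio, ratio < threshold


# One hypothetical scoring batch: group A approved 8/10, group B approved 4/10.
preds = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 6
groups = ["A"] * 10 + ["B"] * 10
ratio, alert = disparate_impact_check(preds, groups)
print(f"ratio={ratio:.2f}, alert={alert}")
```

Running a check like this on every scoring batch, rather than once at deployment, is what turns bias detection into monitoring.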

Open Formats and Interfaces—Stay Flexible, Avoid Lock-In

Vendor lock-in is expensive, trapping you when better solutions emerge. Smart organizations build their data lakehouses on open standards from day one.

Interoperability means your data works with the best tools available, today and tomorrow. By standardizing on open table formats like Delta Lake, Apache Iceberg, and Apache Hudi, you ensure your curated datasets remain accessible. All three provide ACID transactions, schema evolution, and time travel, but they track table state differently. Delta Lake records each commit as an ordered entry in a transaction log (the _delta_log directory) stored with the table. Iceberg maintains a tree of metadata files (table metadata, manifest lists, and manifests) and commits by atomically swapping a pointer in a catalog, a design intended to let many engines read and write the same tables consistently. Hudi organizes changes along a timeline of actions performed on the table.

This openness delivers immediate cost control. Accessing data directly from cloud storage with open formats helps you avoid proprietary platform fees and painful egress charges. Data longevity also becomes a real asset, as your investments compound instead of becoming stranded when you switch platforms.

Scale and Save—Build a Governance Model That Grows With You

The costliest governance mistake is building a system that can’t scale. Scalable governance manages more complexity without proportional overhead.

Decoupled storage and compute is the foundation for cost-effective growth. You scale each component independently, paying only for what you use. Spin up powerful compute for a massive AI training job, then scale down for routine reports.

On-demand scaling means your lakehouse breathes with your business, growing and shrinking automatically to keep costs aligned with value.

The real innovation is federated governance, which grants teams autonomy while maintaining central standards. This aligns closely with the data mesh paradigm, where a central platform team provides the infrastructure and global policies, but domain-oriented teams own their data as a product—including its quality, security, and documentation. Marketing can move fast with their data while following the same security rules as Finance. This prevents governance from becoming a bottleneck as you scale, turning it from a cost center into a competitive advantage.
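The "central standards, domain autonomy" rule can be expressed as a merge in which domains may tighten global policy but never loosen it. This Python sketch is hypothetical: the two policy fields, their values, and `effective_policy` are illustrative. Loosening attempts are reported rather than silently clamped so the platform team can see them.

```python
# Central (global) rules owned by the platform team; values are hypothetical.
GLOBAL_POLICY = {
    "masked_tags": frozenset({"PII"}),  # columns with these tags are always masked
    "max_retention_days": 365,
}


def effective_policy(domain_policy):
    """Merge a domain's policy with the global one: domains may tighten, never loosen.

    Returns (merged_policy, violations) so loosening attempts are reported, not hidden.
    """
    violations = []
    limit = GLOBAL_POLICY["max_retention_days"]
    requested = domain_policy.get("max_retention_days", limit)
    if requested > limit:
        violations.append(f"retention of {requested} days exceeds the global limit of {limit}")
    merged = {
        # Union: a domain can mask more tags, but cannot unmask global ones.
        "masked_tags": GLOBAL_POLICY["masked_tags"] | set(domain_policy.get("masked_tags", ())),
        # Minimum: a domain can keep data for less time, not more.
        "max_retention_days": min(requested, limit),
    }
    return merged, violations


marketing, _ = effective_policy({"masked_tags": {"CAMPAIGN_SECRET"}, "max_retention_days": 90})
finance, problems = effective_policy({"max_retention_days": 730})
print(marketing, finance, problems)
```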

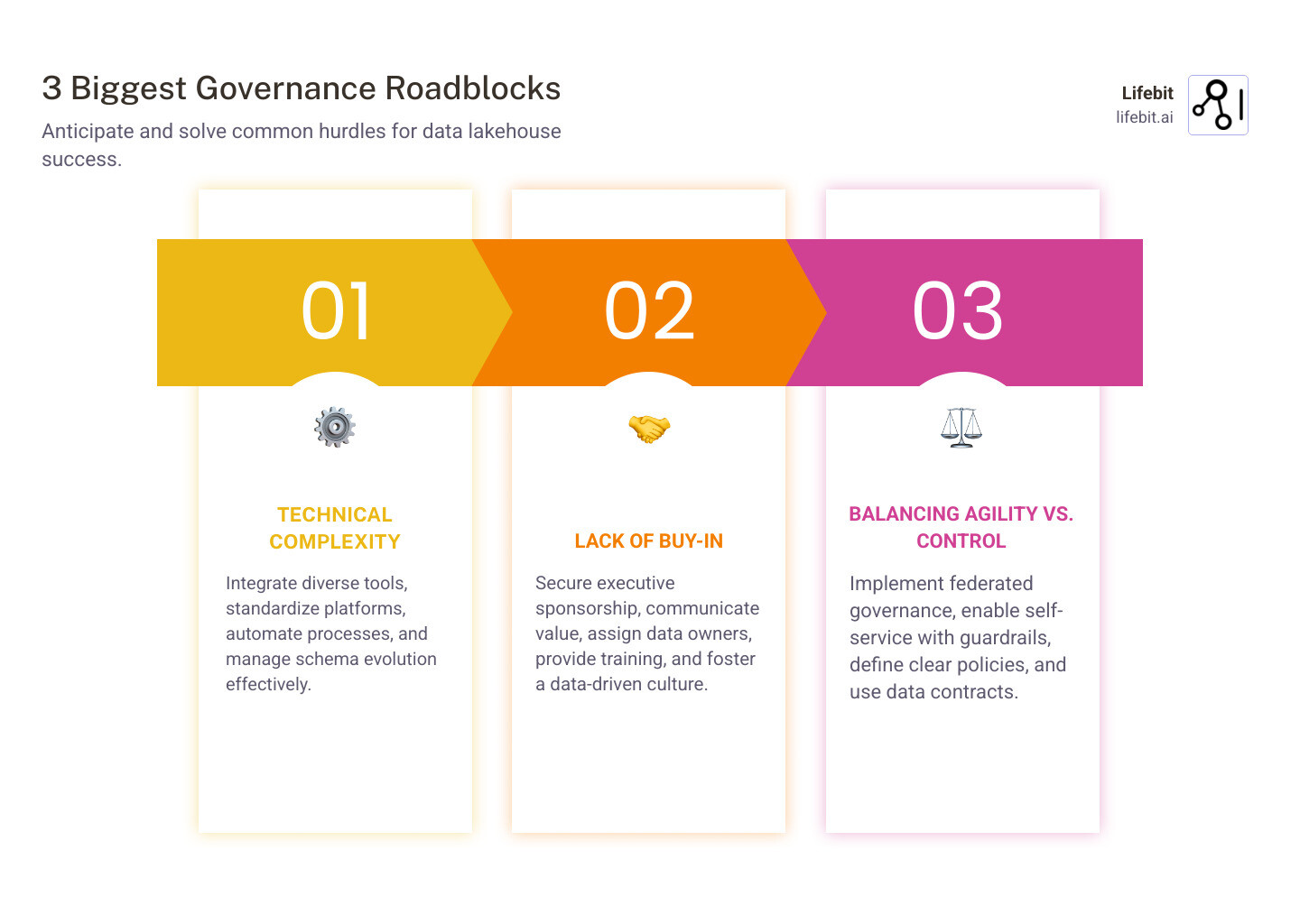

The 3 Biggest Data Lakehouse Governance Roadblocks—and How to Beat Them

Implementing data lakehouse governance is tough. Even with the best intentions, most organizations hit predictable walls that derail their initiatives. The good news? These roadblocks are beatable if you know what’s coming.

Technical and Architectural Problems

The technical complexity of a data lakehouse catches many teams off guard. The integration nightmare is often the first hurdle. Integrating diverse tools—each with its own security and metadata models—creates fragmented, unmanageable governance. A policy defined in Snowflake may not be respected in a connected BI tool.

Hybrid and multi-cloud environments add another layer of complexity, making it difficult to create a single pane of glass for governance. Then there’s schema evolution, the silent killer of data pipelines. As data structures change, downstream reports break and AI models produce garbage.
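A common defense against breaking schema changes is a compatibility check that runs before a new schema is published. This Python sketch is deliberately conservative and hypothetical: schemas are plain `{column: (type, nullable)}` dictionaries, any type change is flagged (even widenings that some table formats accept), and new non-nullable columns are flagged because existing rows and older writers cannot supply them.

```python
# Schemas map column name -> (type, nullable); types are plain strings here.
def breaking_changes(old, new):
    """List schema changes that could break existing downstream readers or writers."""
    problems = []
    for col, (old_type, _) in old.items():
        if col not in new:
            problems.append(f"dropped or renamed column: {col}")
        elif new[col][0] != old_type:
            problems.append(f"type change on {col}: {old_type} -> {new[col][0]}")
    for col, (_, nullable) in new.items():
        if col not in old and not nullable:
            problems.append(f"new non-nullable column: {col}")
    return problems


old = {"order_id": ("bigint", False), "customer_id": ("bigint", True), "amount": ("double", True)}
additive = {**old, "channel": ("string", True)}
risky = {"order_id": ("bigint", False), "cust_id": ("bigint", True), "amount": ("string", True)}
print(breaking_changes(old, additive))
print(breaking_changes(old, risky))
```

Additive, nullable columns pass; renames and type changes are surfaced before they reach downstream reports and models.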

Our solution is a unified platform approach that natively integrates governance across the entire data and AI lifecycle. Instead of bolting on governance, we design it in from the ground up. This means you define a policy once—like “mask all PII columns for users outside the US”—and the platform enforces it everywhere, whether the data is being queried in Databricks, visualized in Tableau, or used to train a model in SageMaker. Our systems handle schema evolution gracefully, maintaining backward compatibility while enabling progress.

Organizational and Cultural Resistance

Technology is the easy part; the real challenge is people. Even brilliant governance frameworks fail if nobody uses them, because governance is fundamentally a people problem.

Executive sponsorship is critical. When leadership views governance as a business imperative, not a “nice-to-have,” teams follow suit. Without it, you get expensive shelf-ware.

Resistance to change is natural. Asking people to adopt new policies feels like a burden. To overcome this, focus on outcomes, not policies. Communicate the value: faster insights, reduced risk, and better decisions. Establish clear roles and responsibilities. A Data Owner (e.g., a VP of Marketing) is accountable for a data domain, while a Data Steward (e.g., a senior marketing analyst) is responsible for its day-to-day quality, definition, and access management. This clarity creates accountability and empowers the people closest to the data.

Most importantly, secure executive sponsorship early and make it visible. When people see leadership actively using governed data, adoption follows.

Balancing Governance with Agility

The key dilemma is balancing data democratization with security and control. Get it wrong, and you kill innovation.

Overly strict governance creates bottlenecks, frustrating users and encouraging workarounds that bypass security. But too much freedom is equally dangerous, leading to data exposure, poor-quality analyses, and compliance violations.

The answer is to enable freedom within boundaries. We implement federated governance models where central policies set the standards, but individual data domains have autonomy. Think of it as setting speed limits and rules of the road, rather than controlling every turn of the wheel.

Self-service analytics with built-in guardrails is the secret weapon. For example, a data scientist can use a self-service portal to discover and request access to a customer_transactions dataset. The request is automatically routed to the Data Owner for approval. Once approved, the platform grants access, but with policies automatically applied: PII columns are masked, and row-level security filters the data to only show anonymized records. The scientist gets the data they need in minutes, not weeks, and the organization’s data remains secure and compliant. This federated approach distributes the governance load while maintaining consistency, empowering teams while protecting what matters most. Our Federated Data Governance approach shows how this works in practice.
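The request-and-approve flow described above can be sketched as a small state machine in Python. The dataset metadata, owner and requester identifiers, and guardrail names are hypothetical; the point it illustrates is that approval comes only from the Data Owner and that a grant is always issued together with the dataset's guardrails.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


# Hypothetical catalog metadata: each dataset's Data Owner and its mandatory guardrails.
DATASETS = {
    "customer_transactions": {
        "owner": "vp_marketing",
        "guardrails": ["mask_pii_columns", "row_filter_anonymized_only"],
    },
}


@dataclass
class AccessRequest:
    requester: str
    dataset: str
    status: Status = Status.PENDING
    grant: Optional[dict] = None

    @property
    def approver(self) -> str:
        """Requests are routed to the dataset's Data Owner."""
        return DATASETS[self.dataset]["owner"]

    def decide(self, decided_by: str, approve: bool) -> None:
        if decided_by != self.approver:
            raise PermissionError(f"{decided_by} does not own {self.dataset}")
        if self.status is not Status.PENDING:
            raise ValueError("request has already been decided")
        self.status = Status.APPROVED if approve else Status.REJECTED
        if approve:
            # Access is only ever issued together with the dataset's guardrails.
            self.grant = {
                "principal": self.requester,
                "privilege": "read",
                "dataset": self.dataset,
                "policies": list(DATASETS[self.dataset]["guardrails"]),
            }


request = AccessRequest("data_scientist_1", "customer_transactions")
print("routed to:", request.approver)
request.decide("vp_marketing", approve=True)
print(request.status, request.grant)
```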

Your Top 3 Data Lakehouse Governance Questions, Answered

When organizations start their data lakehouse governance journey, the same questions come up. Here are the answers to the most common ones.

What are the core principles of data and AI governance in a data lakehouse?

Effective governance rests on three core principles:

- Unified Management: A central catalog for all data and AI assets, creating a single source of truth so assets are easily findable.

- Unified Security: Consistent, fine-grained access controls applied everywhere, from customer data to AI model outputs.

- Enforced Quality: Automated validation to ensure every piece of data is accurate, complete, and consistent.

Together, these principles create data assets that are trustworthy, secure, and findable—the foundation for everything from compliance to breakthrough insights.

How does a data lakehouse support both data democratization and strong governance?

This isn’t about compromise; it’s about combining centralized governance with self-service capabilities. A data lakehouse achieves this by providing a unified catalog that acts like a search engine, helping users find the data they need without IT bottlenecks.

Meanwhile, fine-grained access controls operate in the background. Row-level security might filter a dataset so managers only see their region’s data. Column masking might hide sensitive PII from analysts who don’t need it. To the user, these guardrails are invisible: they just see a clean, relevant dataset ready for analysis.

This approach democratizes access to data and analytics while maintaining stringent security and compliance. Your teams get the freedom to innovate, and you get the peace of mind that comes with bulletproof governance.

What is the role of data lineage in data lakehouse governance?

Data lineage provides a complete audit trail for your data, from source to consumption. This visibility is critical for governance because it solves major operational headaches.

It’s essential for:

- Compliance: Instantly prove data handling for regulations like HIPAA or GDPR, providing a complete audit trail.

- Impact Analysis: See exactly which downstream reports and AI models will be affected by a data change before it happens.

- Troubleshooting: Trace data anomalies back to their root cause in minutes, not days.

- Trust: Show users how their data was created and transformed, building their confidence in the insights they generate.

Without clear data lineage, you’re flying blind, hoping everything works but having no way to prove it or fix it when it doesn’t.

Conclusion: Turn Governance from a Bottleneck into Your Competitive Edge

Here’s the truth: data lakehouse governance isn’t a bottleneck; it’s an accelerator.

Proper governance transforms a chaotic data swamp into a high-performing engine that drives real business results. It flips the equation: instead of spending 80% of their time hunting for data, your teams spend 80% of their time generating insights.

The path forward is clear:

- Unify management to create a single source of truth.

- Layer on unified security to protect what matters without friction.

- Enforce data quality to make every number trustworthy.

- Govern AI assets with the same rigor as your data.

- Embrace open standards to stay flexible and future-ready.

Governance isn’t a one-time project; it’s an ongoing practice. The roadblocks are real, but they are beatable. With the right approach, you can turn technical complexity into an advantage and cultural resistance into alignment.

At Lifebit, we’ve spent years perfecting this balance. Our platform helps organizations build trust, drive results, and secure their future by making governance feel effortless. We believe that when you get governance right, you unlock the full potential of your data.

Ready to see what a truly governed data lakehouse can do? Find out how governance can become your competitive edge, not your constraint.