Navigating the Full Spectrum of End-to-End Drug Discovery.

Why End-to-End Drug Discovery is Changing Medicine

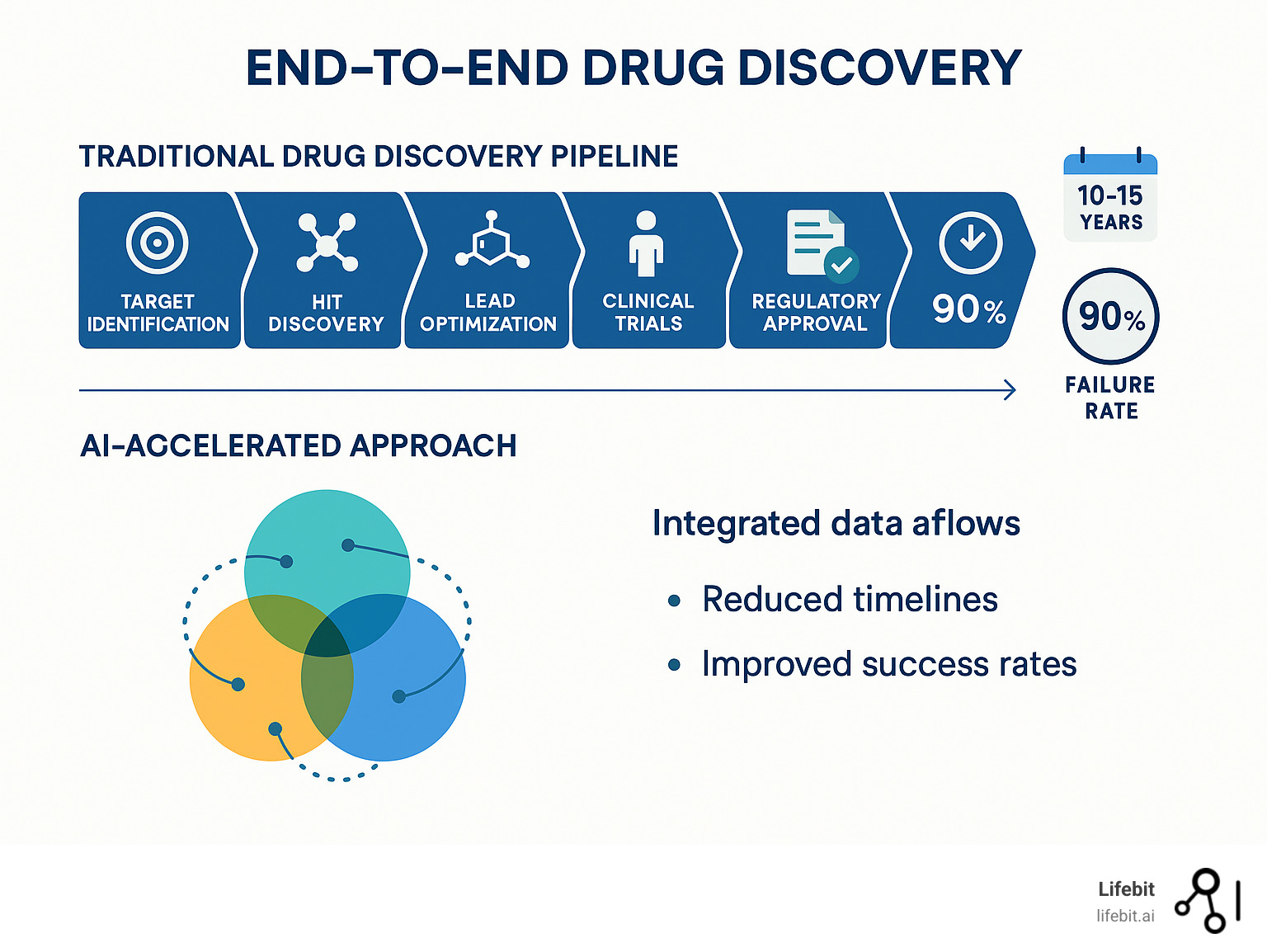

End-to-end drug discovery represents a shift from fragmented, siloed approaches to an integrated system that spans the entire therapeutic development pipeline. This comprehensive approach promises to address the pharmaceutical industry’s most pressing challenges: excessive costs, lengthy timelines, and disappointing success rates.

What is End-to-End Drug Discovery?

- Target identification – Finding disease-causing proteins or pathways

- Hit discovery – Identifying compounds that interact with targets

- Lead optimization – Improving compound properties for safety and efficacy

- Preclinical testing – Laboratory and animal studies

- Clinical trials – Human testing phases I, II, and III

- Regulatory approval – FDA/EMA review and market authorization

- Post-market surveillance – Ongoing safety monitoring

The traditional approach treats each stage independently, creating inefficiencies and knowledge gaps. An end-to-end system integrates artificial intelligence, machine learning, and advanced analytics across all phases, enabling continuous feedback loops and data-driven decision making.

As Sir Archibald Garrod observed over a century ago: “Every active drug is a poison, when taken in large enough doses; and in some subjects, a dose which is innocuous to the majority of people has toxic effects, whereas others show exceptional tolerance of the same drug.” This insight about chemical individuality remains central to modern drug discovery challenges.

The Current Reality:

- $2.6 billion average cost per approved drug

- 10-15 years typical development timeline

- 90% failure rate in clinical trials

- No improvement in timelines over the past decade

AI-powered platforms are beginning to change this landscape by exploring chemical spaces estimated to span 10³³ or more drug-like compounds, predicting molecular properties with increasing accuracy, and enabling autonomous experimental decision-making throughout the pipeline.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where I’ve spent over 15 years developing computational biology tools and federated data platforms that power precision medicine and end-to-end drug discovery. My work focuses on breaking down data silos and enabling secure, compliant analytics across the entire therapeutic development spectrum.


The Traditional Gauntlet: Challenges in the Drug Development Pipeline

Traditional drug development is a long, treacherous journey where nine out of ten travelers never reach their destination. For decades, the industry has used a linear, sequential approach, treating each stage from target validation to regulatory approval as a separate silo. This fragmentation means that critical knowledge gained during discovery is often lost or poorly translated when a candidate moves to preclinical, and preclinical findings may not fully inform the design of clinical trials. Each handoff is a potential point of failure and information degradation, making it a major bottleneck for medical innovation.

While this pipeline has produced life-saving medicines, its challenges are staggering, highlighting why end-to-end drug discovery is essential.

The Staggering Cost and Timeline

Bringing a single new drug to market costs an average of $2.6 billion, according to research on pharmaceutical R&D costs. The journey also demands 10 to 15 years, a timeline that hasn’t improved in the last decade despite other technological leaps.

This high-risk, high-cost environment creates a “Valley of Death” where promising early discoveries are abandoned due to overwhelming uncertainty and cost. The R&D productivity challenge means more spending doesn’t equal better results, driving an urgent need for smarter, integrated approaches.

Eroom’s Law: The Productivity Crisis

This predicament is famously captured by “Eroom’s Law”—Moore’s Law spelled backward. Coined in a 2012 Nature Reviews Drug Discovery article, it observes that despite decades of technological and scientific advances, the number of new drugs approved per billion US dollars spent on R&D has halved roughly every nine years since 1950. This counterintuitive trend, where innovation becomes slower and more expensive over time, underscores a deep-seated productivity crisis that brute-force spending cannot solve. It points to the increasing complexity of disease biology, stricter regulatory hurdles, and the exhaustion of ‘low-hanging fruit’ targets. An integrated end-to-end approach is seen as a primary strategy to reverse this trend.
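To make the halving claim concrete, here is a back-of-the-envelope sketch. It assumes a perfectly smooth exponential decline with a nine-year halving time, which real approval data will not follow exactly; the function name and the 1950 to 2010 window are illustrative choices, not figures from the original article.

```python
def eroom_relative_output(start_year: int, end_year: int, halving_years: float = 9.0) -> float:
    """Fraction of the start-year 'drugs approved per billion dollars' that remains
    by end_year, assuming output halves every `halving_years` (an idealized model)."""
    elapsed = end_year - start_year
    return 0.5 ** (elapsed / halving_years)


remaining = eroom_relative_output(1950, 2010)
print(f"Relative R&D efficiency in 2010 vs 1950: {remaining:.4f}")
print(f"Equivalent fold-decline: {1 / remaining:.0f}x")
```

Under that simplifying assumption, six decades of halving every nine years compounds to roughly a hundredfold drop in output per dollar, which is why incremental budget increases cannot close the gap.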

Why Most Drugs Fail: A Phase-by-Phase Breakdown

A heartbreaking 90% of drug candidates that enter clinical trials never reach patients. This high failure rate stems from several key issues, with attrition occurring at every stage:

- Preclinical Failure: A significant number of candidates are eliminated before they ever reach humans due to unforeseen toxicity or poor pharmacokinetic properties discovered in animal models.

- Phase I Failure (Safety in Humans): Of the drugs that enter Phase I to test for safety and dosage in a small group of healthy volunteers, approximately 37% fail.

- Phase II Failure (Efficacy in Patients): This is the largest hurdle, often called the ‘graveyard’ of drug development. Here, the drug is tested for efficacy in a larger group of patients. Nearly 70% of drugs fail at this stage, most commonly because they are not effective enough against the disease.

- Phase III Failure (Large-Scale Confirmation): Even after passing Phase II, drugs can fail in large-scale Phase III trials due to more subtle safety issues or a lack of superior efficacy compared to existing treatments. Around 42% of drugs entering this phase do not proceed to approval.
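The per-phase figures above can be chained together to see how they relate to the headline failure rate. This is a rough sketch that treats the three phases as independent and ignores the regulatory review step; the variable and function names are illustrative.

```python
# Approximate per-phase failure rates as quoted in the list above.
phase_failure = {"Phase I": 0.37, "Phase II": 0.70, "Phase III": 0.42}


def cumulative_success(failure_rates: dict) -> float:
    """Multiply the per-phase pass rates, assuming the phases are independent."""
    probability = 1.0
    for rate in failure_rates.values():
        probability *= 1.0 - rate
    return probability


overall = cumulative_success(phase_failure)
print(f"Chance of clearing all three phases: {overall:.1%}")
```

Multiplying 0.63 by 0.30 by 0.58 gives about 0.11, so the phase-level numbers are consistent with the roughly 90% overall failure rate cited earlier.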

Analysis of drug attrition rates confirms this has been a long-standing problem. Recognizing these challenges is the driving force behind the shift to end-to-end drug discovery systems, which promise a more predictable and successful path forward by using data to make better decisions and fail faster, cheaper, and earlier in the process.

The AI Revolution: Integrating Intelligence Across the Pipeline

Artificial intelligence is revolutionizing drug development by making sense of the overwhelming complexity of biological data. Machine learning, deep learning, and generative AI are essential tools for finding patterns and solutions that were previously invisible. AI is not just speeding up individual tasks; it’s changing how we approach end-to-end drug discovery by connecting insights across the entire pipeline.

AI’s Role in Early Discovery and Design

In the early stages, AI accelerates progress by analyzing massive biological datasets to uncover hidden connections between genes, proteins, and diseases.

- Target and biomarker discovery: AI processes genomics, proteomics, and patient data to spot novel disease-causing proteins and measurable indicators of drug response far faster than human researchers. This includes identifying novel targets that may have been overlooked by conventional research methods.

- Generative chemistry and de novo design: AI gets creative, designing entirely new molecules from scratch that are optimized for binding strength, safety, and absorption. These aren’t just random molecule generators. They employ sophisticated architectures like Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Transformers. For instance, a GAN might consist of two competing neural networks: a ‘Generator’ that proposes new molecular structures and a ‘Discriminator’ that tries to distinguish these AI-generated molecules from real ones. Through this competition, the Generator learns to design highly realistic and novel compounds optimized for specific properties.

- Virtual screening and ADMET prediction: Instead of physically testing millions of compounds, AI can computationally screen vast chemical spaces and predict how a drug will be absorbed, distributed, metabolized, and excreted, and whether it will be toxic (ADMET), weeding out poor candidates early. The scale of this task is astronomical. The chemical space of drug-like molecules is commonly estimated at around 10⁶⁰ compounds, far too many to synthesize and test physically. AI-powered virtual screening can navigate this space, evaluating billions of virtual compounds in a fraction of the time and cost of traditional high-throughput screening (HTS).
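To illustrate the idea of weeding out poor candidates computationally, the sketch below applies Lipinski's rule of five, a classic rule-based heuristic for oral drug-likeness. It is a deliberately simple stand-in rather than an AI model or a full ADMET predictor, and the compound names and descriptor values are made-up placeholders.

```python
from dataclasses import dataclass


@dataclass
class Compound:
    name: str
    mol_weight: float   # molecular weight in daltons
    logp: float         # octanol-water partition coefficient
    h_donors: int       # hydrogen-bond donors
    h_acceptors: int    # hydrogen-bond acceptors


def rule_of_five_violations(c: Compound) -> int:
    """Count how many of Lipinski's four thresholds a compound exceeds."""
    return sum([
        c.mol_weight > 500,
        c.logp > 5,
        c.h_donors > 5,
        c.h_acceptors > 10,
    ])


def screen(library, max_violations=1):
    """Keep compounds with at most `max_violations` rule-of-five violations."""
    return [c for c in library if rule_of_five_violations(c) <= max_violations]


# Hypothetical virtual library with invented descriptor values.
library = [
    Compound("cmpd_A", 320.4, 2.1, 2, 5),
    Compound("cmpd_B", 710.9, 6.3, 6, 12),
    Compound("cmpd_C", 480.0, 5.4, 1, 8),
]
kept = screen(library)
print([c.name for c in kept])
```

In a real pipeline the hand-written thresholds would typically be replaced or supplemented by learned property predictors, but the shape of the workflow stays the same: score every virtual compound cheaply, then send only the survivors to the lab.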

These advances are driven by sophisticated AI models, including cutting-edge agentic frameworks that can automatically execute complex drug discovery workflows.

How AI is Changing Clinical and Preclinical Phases

AI’s impact extends into the later stages of development, improving safety and efficacy.

- Predictive toxicology: AI models forecast potential safety issues earlier and more accurately, reducing reliance on animal testing and preventing dangerous compounds from reaching human trials.

- Patient stratification: By analyzing complex patient data, AI identifies subgroups most likely to benefit from a treatment, enabling personalized medicine and rescuing drugs that might have failed in a general population.

- Clinical trial optimization: AI helps design more efficient trials, predict recruitment challenges, and analyze real-world data from electronic health records to understand how drugs perform outside of controlled settings. This goes beyond simple logistics. AI algorithms can scan millions of electronic health records (EHRs) to identify patients who precisely match complex inclusion/exclusion criteria, a task that is slow and error-prone for humans. They can also predict which clinical trial sites will have the highest enrollment rates and lowest dropout rates. Furthermore, AI is enabling the use of ‘digital biomarkers’—data collected from smartphones or wearables—to monitor patient responses continuously and objectively, providing richer data than periodic clinic visits.

- Digital twins and endpoint prediction: Virtual models of patients allow for simulated treatment testing, while AI can predict long-term outcomes from early markers, potentially shortening trial durations.

What Makes an AI Approach a True End-to-End Drug Discovery System?

A true end-to-end drug discovery system is more than a collection of siloed AI tools; it’s an integrated orchestra. The key differences are:

- Integrated vs. Siloed: An integrated system ensures AI tools communicate and share insights across all stages, eliminating data loss between phases.

- Continuous Feedback Loops: Findings from later stages (like clinical trials) automatically inform and improve earlier stages (like molecule design), creating a system that learns and improves over time.

- Holistic Optimization: The entire pipeline is optimized for overall success, not just individual stage metrics. This means factors like manufacturability and clinical feasibility are considered from the very beginning.

This integrated approach, powered by advanced AI, ensures we find the right drug for the right patients at the right time.

The Reality Check: Progress, Challenges, and the Path Forward for End-to-End Drug Discovery

The journey of AI in drug discovery has seen both exciting breakthroughs and disappointing setbacks. While the promise remains enormous, it’s important to have a realistic view of where we stand today.

Recent headlines have told a mixed story. We’ve seen AI-designed drugs enter clinical trials, but some have struggled to prove their effectiveness in patients. This has led to some frustration, with observers noting that AI has yet to consistently deliver on its initial hype. However, these setbacks are not failures; they are crucial learning opportunities that teach us how to build better, more effective systems.

Key Benefits of a Unified End-to-End Drug Discovery Process

Despite these challenges, the potential of an integrated end-to-end drug discovery process remains game-changing.

- Accelerated timelines: AI can compress stages from years to months, shortening the 10-15 year slog.

- Reduced costs: By avoiding costly late-stage failures, AI can significantly slash the $2.6 billion price tag of drug development.

- Increased success rates: By weeding out problematic compounds early, AI aims to dramatically improve the current 10% clinical trial success rate.

- Novel chemistry exploration: Generative AI can design entirely new molecules in chemical spaces that are inaccessible to human intuition alone.

- Personalized medicine: AI can analyze complex patient data to help develop treatments tailored to specific genetic profiles or disease subtypes.

Learning from Setbacks and Managing Expectations

The mixed clinical results from early AI-designed drugs are teaching us vital lessons.

- Efficacy challenges: Predicting whether a drug will work in the messy, complex human body is far harder than predicting if it will bind to a target. This remains a major hurdle.

- The “black box” problem: AI models that can’t explain their reasoning create trust issues with scientists and regulators. The focus must be on transparent, interpretable AI.

- The need for experimental validation: AI predictions are educated guesses until they are proven in the lab. The goal is to make lab work smarter, not to eliminate it.

Here’s how traditional and AI-driven approaches compare on key metrics:

| Metric | Traditional Drug Discovery | AI-Driven End-to-End Drug Discovery (Goal) |
|---|---|---|
| Time | 10-15 years | Significantly accelerated (e.g., 5-7 years) |
| Cost | ~$2.6 billion per approved drug | Substantially reduced |
| Success Rate | ~10% clinical trial success rate | Increased (e.g., 20-30%+) |
| Key Challenge | High attrition, manual processes, data silos | Data quality & access, model interpretability, validation |

These challenges are not roadblocks but course corrections. They show us where to focus our efforts to build truly effective end-to-end drug discovery systems that can handle the complexity of real-world biology.

Building the Foundation: Data, Regulation, and Ethics

In end-to-end drug discovery, data is the fuel for the AI engine. AI models are only as good as the information they are trained on, which makes data quality, organization, and accessibility paramount. Alongside data challenges, we must also navigate a complex landscape of regulation and ethics.

The Data Dilemma: Fueling the AI Engine

Biomedical data is abundant but fragmented. Massive amounts of valuable information sit locked in disconnected systems.

- Multi-omics and Real-World Data: Integrating diverse datasets like genomics, proteomics, and real-world evidence from electronic health records is crucial for a complete picture of disease. However, this data is often messy, inconsistent, and stored in different formats.

- Data Silos and the FAIR Principles: A primary obstacle is that data is siloed across pharmaceutical companies, academic institutions, and government agencies. To combat this, the industry is increasingly adopting the FAIR Guiding Principles for data management: Findable (easy to discover), Accessible (retrievable by standard protocols), Interoperable (able to be combined with other data), and Reusable (well-described for future studies). Implementing FAIR principles is a foundational step for any serious AI-driven drug discovery effort.

- Federated Learning: This approach is a game-changer for overcoming data silos while respecting privacy. Instead of pooling all sensitive patient data into one massive central server, federated learning works by distributing the AI model itself. A master model is sent out to the secure environments of different hospitals or research institutions. The model trains locally on that institution’s private data, learning valuable patterns without the data ever leaving its secure perimeter. Only the model’s updated parameters—anonymized mathematical summaries of what it learned—are sent back to a central server. These updates are then aggregated to improve the master model, which is then sent out again for another round of training. This iterative process allows the AI to learn from a vast, diverse global dataset while upholding the strictest standards of data privacy and security.
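The training loop described in the federated learning item can be sketched in a few lines. This is a minimal, assumption-heavy illustration of federated averaging on a toy one-variable linear model; it is not a description of any particular platform's implementation, the three "sites" and their data are invented, and real deployments add secure aggregation, privacy safeguards, and far larger models.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Train a copy of the global model (w, b) on one site's private data
    using plain gradient descent on mean squared error."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Aggregate site parameters, weighting each site by its record count."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return (w, b)


# Toy private datasets held by three hypothetical sites; all follow y = 2x + 1.
sites = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(0.5, 2.0), (1.5, 4.0)],
]

global_model = (0.0, 0.0)
for round_number in range(50):
    # Each site trains locally; only parameters are returned, never raw records.
    updates = [local_update(global_model, data) for data in sites]
    global_model = federated_average(updates, [len(d) for d in sites])

print(f"Learned w={global_model[0]:.2f}, b={global_model[1]:.2f}")
```

No single site holds enough data to pin down the relationship quickly, yet the aggregated model settles close to w = 2 and b = 1 without any site sharing its records. Weighting by record count is one common design choice; other aggregation schemes exist.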

Our platform addresses these challenges with tools like the Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL). These provide secure environments for data harmonization and analysis, with built-in federated governance to enable work with global biomedical data while ensuring compliance.

Navigating Regulatory and Ethical Landscapes

As AI becomes central to drug discovery, regulators and researchers face new questions.

- Regulatory Adaptation: The FDA and EMA are working to determine how to evaluate drugs designed by algorithms. Recognizing this shift, regulatory bodies are proactively developing frameworks. The FDA, for example, has issued an ‘Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan.’ While focused on devices, its principles—such as the need for a ‘predetermined change control plan’ to manage continuously learning algorithms—are informing the conversation around AI in drug development. They are also exploring ‘regulatory sandboxes’ to allow for controlled testing of AI-driven submission packages.

- Transparency and Accountability: The “black box” nature of some AI models raises questions about accountability. If an AI-designed drug causes harm, who is responsible? Developing frameworks for interpretable AI (Explainable AI or XAI) is critical for building trust with scientists and regulators.

- Algorithmic Bias: AI models learn from historical data. If that data reflects biases, the AI will perpetuate them. For example, if an AI model for predicting drug toxicity is trained predominantly on data from clinical trials where participants were of European ancestry, it may fail to identify a toxicity risk that is specific to an African or Asian population. This could lead to the approval of a drug that is unsafe for certain groups. Actively curating diverse, representative datasets and developing methods to detect and mitigate bias in AI models are therefore not just technical challenges, but profound ethical imperatives.

- Data Governance and Privacy: Protecting patient privacy while making data Findable, Accessible, Interoperable, and Reusable (FAIR) is a critical balancing act. Strong data governance is essential.
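One simple way to detect the kind of algorithmic bias described above is to compare a model's sensitivity across population subgroups. The sketch below uses entirely synthetic labels and predictions and hypothetical group names; it shows one basic check among many fairness metrics, not a complete audit.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group, true_label, predicted_label), where 1 marks
    a toxic event. Returns the share of true toxic events caught per group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:
            positives[group] += 1
            hits[group] += int(predicted == 1)
    return {group: hits[group] / positives[group] for group in positives}


# Synthetic, made-up predictions purely for illustration.
records = [
    ("group_A", 1, 1), ("group_A", 1, 1), ("group_A", 1, 1), ("group_A", 1, 0), ("group_A", 0, 0),
    ("group_B", 1, 1), ("group_B", 1, 0), ("group_B", 1, 0), ("group_B", 1, 0), ("group_B", 0, 0),
]

recalls = recall_by_group(records)
gap = max(recalls.values()) - min(recalls.values())
for group, value in sorted(recalls.items()):
    print(f"{group}: recall = {value:.2f}")
print(f"Recall gap between groups: {gap:.2f}")
```

A large gap like this one would suggest the model misses toxicity signals in the under-represented group far more often, which is a prompt to revisit the training data before trusting the predictions.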

Our R.E.A.L. (Real-time Evidence & Analytics Layer) provides secure, compliant access to biomedical data, addressing these governance needs. The path forward requires collaboration between AI developers, pharma, regulators, and ethicists to build a new, trustworthy framework for medical research.

Frequently Asked Questions about AI in Drug Discovery

Here are answers to some common questions about AI’s role in end-to-end drug discovery.

How does AI identify new drug targets?

AI acts like a superhuman researcher, analyzing massive biological datasets—genomics, proteomics, patient records, and scientific literature—to spot patterns humans might miss. It can identify proteins or pathways that play unexpected roles in disease by finding correlations in the data at a scale and speed that is impossible to do manually. For example, it might notice a protein behaving differently in diseased tissue across thousands of samples. This amplifies human insight by providing a highly qualified starting point for research.

Can AI replace human scientists in drug discovery?

No. AI is a powerful partner, not a replacement. Think of it as the world’s most capable research assistant.

- What AI excels at: Automating repetitive tasks, analyzing massive datasets, identifying subtle patterns, and generating novel hypotheses.

- What humans bring: Creative problem-solving, strategic thinking, ethical judgment, and the intuition required for true breakthroughs.

The goal is a collaboration where AI handles the heavy computational lifting, freeing up scientists to focus on innovation and strategic decisions in end-to-end drug discovery.

What is the biggest challenge for AI in drug discovery today?

The biggest hurdle is the “garbage in, garbage out” problem. AI models are only as good as their training data, and accessing high-quality, comprehensive biomedical data is extremely difficult.

- Data Quality and Silos: Data needs to be accurate, well-annotated, and diverse. However, it is often locked in isolated silos across different institutions, preventing the creation of powerful, comprehensive models.

- Data Harmonization: Combining datasets from different sources is like solving a puzzle with mismatched pieces. It’s a major technical challenge.

This is why our federated AI platform is so critical. It enables secure analysis of global biomedical data within a Trusted Research Environment, breaking down data silos without compromising privacy. Solving these data challenges is the key to unlocking AI’s full potential in medicine.

Conclusion: The Dawn of a New, Integrated Era

We stand at a remarkable crossroads in medicine. The traditional drug development gauntlet – with its $2.6 billion price tag, decade-plus timelines, and crushing 90% failure rates – has pushed us to reimagine everything we know about bringing life-saving medicines to patients.

End-to-end drug discovery represents more than just technological advancement; it’s a fundamental shift in how we think about therapeutic development. Instead of treating each stage as an isolated silo, we’re creating an interconnected ecosystem where artificial intelligence learns, adapts, and improves across every phase of the pipeline.

The early clinical results from AI-designed drugs have been humbling, reminding us that even the most sophisticated algorithms can’t shortcut the complexity of human biology. But these setbacks aren’t failures – they’re invaluable lessons that are sharpening our focus on what truly matters: creating medicines that work in real patients, not just in computer models.

What excites me most is the emerging vision of “robot scientists” – autonomous systems that can hypothesize, design experiments, and analyze results with superhuman speed and precision. These aren’t replacing human creativity and insight, but amplifying them in ways we’re only beginning to understand.

The key to unlocking this potential lies in solving the data challenge. Without high-quality, harmonized, and accessible data flowing seamlessly across the entire pipeline, even the most brilliant AI models are working with one hand tied behind their back. This is why our work at Lifebit focuses so heavily on breaking down data silos and creating secure, federated platforms that enable real-time collaboration.

Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) aren’t just technical solutions – they’re the foundation for a new era of medicine. By enabling secure access to global biomedical data while maintaining privacy and compliance, we’re powering the kind of large-scale, integrated research that makes true end-to-end drug discovery possible.

The change happening in pharmaceutical research reminds me of the shift from handwritten letters to instant global communication. The fundamental goal remains the same – connecting people and sharing knowledge – but the speed, scale, and possibilities have been revolutionized.

We’re not just building better tools; we’re architecting a future where innovative medicines reach patients faster, more safely, and more affordably than ever before. The dawn of truly integrated end-to-end drug discovery isn’t a distant dream – it’s happening right now, one data connection at a time.

Discover a next-generation platform for end-to-end drug discovery and join us in building this future.