The Exchange Advantage: Unlocking Collaborative Data Sharing

Cut Breach Risk and Compliance Cost Now: Federated Data Exchange Keeps Data Local, Shares Only Insights

A federated data exchange platform allows organizations to analyze shared data without moving it from its source. Instead of collecting information into a risky central database, this approach keeps data local and secure.

- How it works: Data stays at its source. Only insights, queries, or model updates are shared across the network.

- The advantage: It boosts privacy, improves security, and simplifies regulatory compliance, enabling collaboration on sensitive data without direct sharing.

Data fuels modern innovation, but its exponential growth creates immense privacy and security challenges. Traditional centralized systems are slow, risky, and create data silos that block progress. This bottleneck stops important research and collaboration in its tracks.

As the CEO and Co-founder of Lifebit, I’ve spent over 15 years pioneering solutions in this field. My work focuses on changing global healthcare through federated platforms that provide secure, compliant access to diverse biomedical datasets.

End Silos and Risk Now: Deploy a Federated Data Exchange for Secure Collaboration

For decades, the playbook was to centralize data. This created data silos, security risks, and compliance headaches, forcing a choice between sharing data and risking exposure, or protecting it and missing breakthroughs.

There’s a better way. Instead of hoarding data, we can securely connect it where it lives. This shift from centralization to connection is what makes a federated data exchange platform so powerful.

Understanding the Federated Data Exchange Platform Model

A federated data exchange platform operates on a different philosophy: data stays where it is, under the control of its owner. This model is built on several foundational principles:

- Data Sovereignty: This is the core tenet. It means that the data owner—be it a hospital, a bank, or a research institution—retains absolute legal and operational control over their data. They define the rules of engagement, dictating who can access the data, for what purpose, and under what conditions. In a federated network, organizations don’t give up ownership; they gain a secure way to leverage their data assets for collaborative purposes.

- Decentralized Architecture: The architecture is inherently decentralized, with no central database that acts as a single point of failure or a high-value target for cyberattacks. Instead, data remains distributed across its original sources. This distributed nature not only enhances security but also improves resilience, as the failure of one node does not bring down the entire network.

- Compute-to-Data: When analysis is needed, the computation comes to the data. This is a paradigm shift from traditional models where massive datasets are moved to a central compute cluster. For example, to train an AI model on patient records from ten hospitals, the model’s algorithm travels to each hospital’s secure environment, learns from the local data, and only shares back aggregate insights or model updates. The sensitive patient records never move, never get copied, and are never exposed.

- Privacy by Design: This principle is woven into the fabric of the architecture. By ensuring raw data never leaves its secure perimeter and only aggregated, non-identifiable results are shared, the system inherently minimizes data exposure. It’s a direct application of the data minimization principle, a key component of modern privacy regulations like GDPR.

The differences are striking:

| Feature | Traditional Centralized Systems | Federated Data Exchange Platform |
|---|---|---|
| Data Location | All data copied/moved to a single, central repository. | Data remains at its source, distributed across participants. |
| Security Risk | Single point of failure; higher risk of large-scale breaches. | Distributed risk; breaches limited to individual nodes. |
| Scalability | Can become a bottleneck; complex to scale centrally. | Scales horizontally by adding more participant nodes. |
| Privacy | Data aggregation raises privacy concerns; requires strict access controls. | Data sovereignty; raw data never moves, enhancing privacy. |
| Control | Central entity owns/controls all data. | Data owners retain full control over their data at all times. |
| Interoperability | Often proprietary formats; requires complex integration. | Built on open standards; designed for seamless data exchange. |
| Compliance | Challenging to meet varied global regulations due to data movement. | Easier to comply with local regulations as data stays local. |

Core Benefits: Privacy, Security, and Interoperability

The advantages of a federated data exchange platform solve real-world problems:

- Improved Privacy: Since raw data never leaves its source, the risk of exposure is dramatically reduced. This is often augmented with other Privacy-Enhancing Technologies (PETs) like differential privacy, which adds carefully calibrated statistical noise to results to limit the risk of re-identification. Only aggregated results or model parameters travel across the network, which is a game-changer for healthcare, finance, and any industry dealing with sensitive information.

- Improved Security: A decentralized system eliminates the single, high-value target that attracts cyberattacks. The “blast radius” of a potential breach is contained to a single node, protecting the rest of the network. This distributed security model is far more robust and resilient than trying to defend a monolithic central data lake.

- Regulatory Compliance: With data staying local, it’s far easier to meet data protection, residency, and sovereignty requirements under regimes like GDPR in Europe, HIPAA in the US, LGPD in Brazil, and others worldwide. Data doesn’t cross borders unnecessarily, which dramatically simplifies the legal and compliance overhead associated with international data collaboration.

- Cost Savings: Centralizing data is expensive. It involves significant costs for data transmission (especially cloud egress fees), redundant storage, and the engineering effort to build and maintain complex data pipelines. A federated model reduces or eliminates these costs, allowing organizations to invest in analysis rather than data logistics.

- Fostering Trust: Trust is the currency of collaboration. When organizations know their data is secure and remains under their control, they are far more willing to participate in collaborative research and data-sharing initiatives. This trust unlocks innovation that would otherwise be impossible due to perceived risks, creating powerful network effects as more partners join.

- Semantic Interoperability: True collaboration requires that data from different sources can be understood and compared meaningfully. Federated platforms are designed to work with common data models and ontologies, translating disparate datasets into a common language. This ensures that the insights generated from the federated analysis are accurate, consistent, and actionable.
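To make "translating disparate datasets into a common language" concrete, here is a minimal sketch of harmonization running at each node before any federated query. Local column names and local codes are mapped onto a shared schema, and anything outside that schema is never exposed. The schema fields, the two hospitals, and both mapping tables are hypothetical illustrations. Real networks map to agreed common data models and terminologies such as SNOMED CT or LOINC, which are far richer than this.

```python
from typing import Dict, List

# Hypothetical per-node mappings: local column name -> common schema field.
COLUMN_MAP: Dict[str, Dict[str, str]] = {
    "hospital_a": {"AGE_YRS": "patient_age", "GENDER": "sex", "DX": "diagnosis"},
    "hospital_b": {"age": "patient_age", "sex_at_birth": "sex", "condition": "diagnosis"},
}

# Hypothetical value mappings per common field: local code -> shared code.
VALUE_MAP: Dict[str, Dict[str, str]] = {
    "sex": {"M": "male", "F": "female", "1": "male", "2": "female"},
    "diagnosis": {"T2D": "type_2_diabetes", "E11": "type_2_diabetes"},
}


def harmonize(node: str, records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Runs at the node: rename local columns and recode values into the common schema."""
    columns = COLUMN_MAP[node]
    harmonized = []
    for record in records:
        row: Dict[str, str] = {}
        for local_col, value in record.items():
            field = columns.get(local_col)
            if field is None:
                continue  # columns outside the common schema are not exposed
            row[field] = VALUE_MAP.get(field, {}).get(value, value)
        harmonized.append(row)
    return harmonized


a = harmonize("hospital_a", [{"AGE_YRS": "54", "GENDER": "F", "DX": "T2D"}])
b = harmonize("hospital_b", [{"age": "61", "sex_at_birth": "2", "condition": "E11", "ward": "3B"}])
print(a)  # [{'patient_age': '54', 'sex': 'female', 'diagnosis': 'type_2_diabetes'}]
print(b)  # [{'patient_age': '61', 'sex': 'female', 'diagnosis': 'type_2_diabetes'}]
```

The two sites use different column names and codes, yet they end up describing the same patient attributes in the same vocabulary. This is what lets a later federated analysis compare them meaningfully.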

At Lifebit, we’ve built our entire platform around these principles. You can learn more about our federated platform and how it delivers these benefits in real-world biomedical research and healthcare applications.

Build a Federated Data Exchange That Scales: The 8 Components You Need

Understanding the internal workings of a federated data exchange platform reveals a sophisticated orchestration of technologies designed for secure, private, and efficient data collaboration.

Key Components of a Federated Data Exchange Platform

A robust platform integrates several critical components:

- Data Nodes: These are the individual, secure environments where raw data is stored and processed locally. A node can be a server in an on-premise data center, a virtual private cloud (VPC) in a public cloud provider, or any environment controlled by the data owner. The key is that the data never leaves this secure perimeter.

- Connectors and APIs: These are the standardized interfaces that allow different systems to communicate without requiring custom, one-off integrations. They act as universal translators, often using REST APIs and adhering to industry standards like HL7 FHIR in healthcare or open banking standards in finance to ensure seamless and secure data queries.

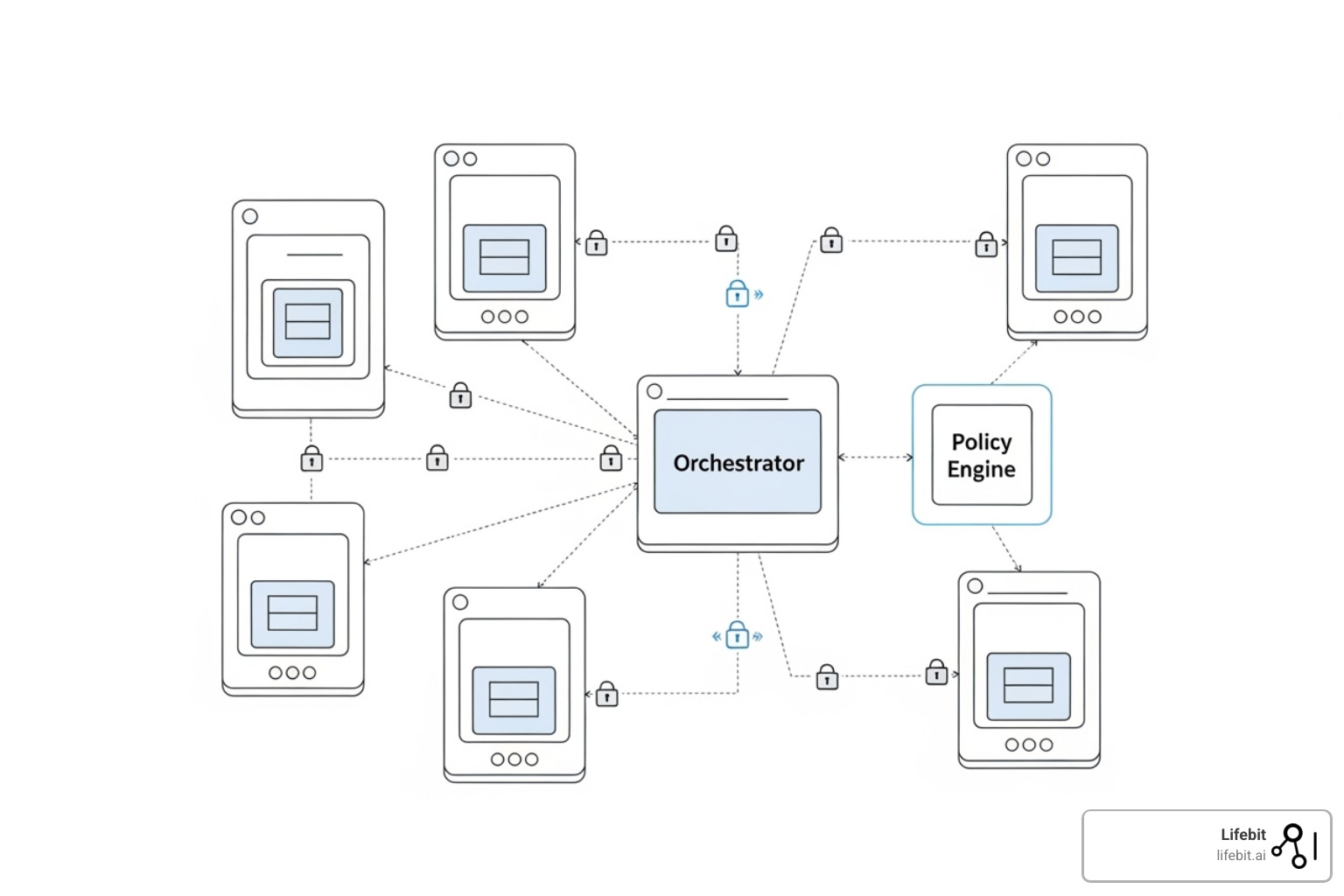

- Central Coordinator/Orchestrator: This is the network’s traffic controller. It manages the workflow of a federated analysis, such as distributing a machine learning model to the nodes, collecting the resulting updates, and coordinating the aggregation process. Crucially, the orchestrator never sees or touches the raw data; it only handles metadata and aggregated results.

- Policy Engine: This is the governance brain of the platform. It allows data owners to programmatically define and enforce granular access and usage policies. For example, a policy could state, “Allow Researcher X from Organization Y to run this specific statistical analysis on the de-identified records of patients over 50, and log all activity.” This ensures data owners retain full control.

- Identity and Access Management (IAM): This is the security checkpoint for the entire network. It handles user authentication (verifying who someone is) and authorization (what they are allowed to do), often integrating with existing enterprise systems like Active Directory. It enforces the principle of least privilege, ensuring users can only perform actions explicitly permitted by the policy engine.

- Secure Enclaves: For maximum security, computations can be run inside hardware-based secure enclaves, also known as Trusted Execution Environments (TEEs). Technologies like Intel SGX and AMD SEV create a completely isolated memory space where code and data are protected from the host system, meaning data remains encrypted and secure even while it’s being actively processed.

- Audit Logs: To build and maintain trust, every action on the network must be logged. Comprehensive, immutable audit logs provide a transparent, verifiable record of who did what, when, and where. These logs are often cryptographically signed to prevent tampering and are essential for compliance and security forensics.

- User Interfaces: The platform must be accessible to different types of users. This includes dashboards for data owners to manage their data and policies, interfaces for researchers to discover available datasets and submit analysis requests, and administrative consoles to monitor the health and security of the network.
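The policy engine and audit log work together: every request is checked against an owner-defined policy, and every decision, allowed or denied, is recorded. The minimal sketch below models the policy loosely on the example above ("Researcher X from Organization Y", "patients over 50"). The `Policy` and `Request` fields are hypothetical. The log is hash-chained, so an entry edited after the fact no longer matches its stored hash. Hash chaining is a simpler stand-in for the cryptographic signing mentioned above. A production system would also sign entries and anchor them outside the node's control, so that an attacker could not simply rewrite the whole chain.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Policy:
    """Hypothetical owner-defined rule, modeled on the example policy above."""
    researcher: str
    organization: str
    allowed_analysis: str
    min_age: int  # only records of patients older than this are in scope


@dataclass(frozen=True)
class Request:
    researcher: str
    organization: str
    analysis: str


class AuditLog:
    """Append-only log; each entry commits to the hash of the entry before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def append(self, event: Dict[str, str]) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        self.entries.append({"prev": prev, "event": body, "hash": digest})

    def verify(self) -> bool:
        """Recompute the chain; an edited entry no longer matches its stored hash."""
        prev = self.GENESIS
        for entry in self.entries:
            expected = hashlib.sha256((prev + entry["event"]).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True


def authorize(policy: Policy, req: Request, log: AuditLog) -> bool:
    """Policy engine check; every decision, allow or deny, is logged."""
    allowed = (
        req.researcher == policy.researcher
        and req.organization == policy.organization
        and req.analysis == policy.allowed_analysis
    )
    log.append({
        "researcher": req.researcher,
        "organization": req.organization,
        "analysis": req.analysis,
        "decision": "allow" if allowed else "deny",
    })
    return allowed


def in_scope(policy: Policy, records: List[Dict[str, int]]) -> List[Dict[str, int]]:
    """Restrict an authorized analysis to the rows the policy permits."""
    return [r for r in records if r["age"] > policy.min_age]
```

Logging denials as well as approvals matters: the audit trail should show attempted access, not only granted access.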

How It Works: From Delegated Computation to Federated Learning

The platform’s power lies in its ability to perform analysis without moving data. This is achieved through two key mechanisms.

Delegated computation is the core principle: the analysis moves to the data. An analyst sends a query or algorithm to the data owner’s environment. The computation runs locally within the secure data node, and only the aggregated or anonymized results are returned. For example, a researcher could calculate the average patient response to a drug across three hospitals. The query is sent to each hospital, which computes the sum of its patients’ responses and its patient count locally and returns only those two numbers. The central orchestrator then divides the combined sum by the combined count to get the overall, patient-weighted average, without ever seeing a single patient’s record.
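Averaging the hospitals' averages directly is only correct when every hospital has the same number of patients. A safe pattern is for each site to return its local sum and count and for the orchestrator to pool them. The minimal sketch below shows this. The response values are invented, and in a real deployment `local_summary` would run inside each node rather than in one process.

```python
from typing import List, Tuple


def local_summary(responses: List[float]) -> Tuple[float, int]:
    """Runs inside a hospital's node: returns only (sum, count), never the rows."""
    return sum(responses), len(responses)


def pooled_mean(summaries: List[Tuple[float, int]]) -> float:
    """Runs at the orchestrator: combines per-site summaries into the overall mean."""
    total = sum(s for s, _ in summaries)
    n = sum(c for _, c in summaries)
    if n == 0:
        raise ValueError("no records across sites")
    return total / n


# Hypothetical per-hospital responses; in practice these never leave each node.
site_data = [[2.0, 4.0], [6.0], [1.0, 1.0, 1.0, 1.0]]
summaries = [local_summary(rows) for rows in site_data]
print(pooled_mean(summaries))  # 16 / 7, roughly 2.2857
```

In this example, naively averaging the three site means (3.0, 6.0 and 1.0) would give about 3.33 and overweight the single-patient site.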

Federated learning is a specialized form of this for machine learning. It allows multiple organizations to collaboratively train a powerful, shared AI model without sharing any of their sensitive training data.

The process is an elegant cycle: a global model (e.g., a neural network) is sent to each data node. Each node then trains the model on its local data, creating an updated version of the model based on its unique information. It then sends back only the learned updates (model weights or gradients)—not the raw data. A central server performs model aggregation, typically using an algorithm like Federated Averaging (FedAvg), which combines these updates to create an improved global model. This new, smarter global model is then sent back to the nodes for another round of training. This cycle repeats, allowing the model to learn from the collective intelligence of the entire network while preserving the privacy of each participant. This approach is transformative for building AI in finance and healthcare, but it must account for challenges like non-IID (non-independent and identically distributed) data, where data distributions vary significantly between nodes.
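The aggregation step of this cycle fits in a few lines. In Federated Averaging, the server takes a mean of the nodes' model weights, weighting each node by its number of local training examples. The sketch below is a single-process simulation under illustrative assumptions. The "model" is a hypothetical one-parameter linear regression (fit y = w0 * x), local training is plain gradient descent, and the node datasets are invented toy values. In a real network, `local_train` would run inside each node, and only the returned weights would cross the network.

```python
from typing import List, Tuple

Weights = List[float]


def fed_avg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Federated Averaging: sample-size-weighted mean of node weight vectors."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def local_train(w: Weights, data: List[Tuple[float, float]],
                lr: float = 0.1, epochs: int = 20) -> Weights:
    """Toy local step: fit y = w0 * x by gradient descent on this node's data only."""
    w0 = w[0]
    for _ in range(epochs):
        grad = sum(2 * (w0 * x - y) * x for x, y in data) / len(data)
        w0 -= lr * grad
    return [w0]


# Hypothetical nodes whose private data all follow y = 3x.
nodes = [[(1.0, 3.0), (2.0, 6.0)], [(0.5, 1.5)], [(1.5, 4.5), (3.0, 9.0), (1.0, 3.0)]]
global_w: Weights = [0.0]
for _round in range(5):
    # Each node trains from the current global model; only (weights, n) is shared.
    updates = [(local_train(global_w, d), len(d)) for d in nodes]
    global_w = fed_avg(updates)
print(global_w)  # approaches [3.0] over the rounds
```

Weighting by sample count keeps a small node from having the same influence as a large one. With non-IID data, the weighting scheme and the number of local epochs become tuning decisions in their own right.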

For even greater privacy, advanced cryptographic techniques like Secure Multi-Party Computation (SMPC) and homomorphic encryption can be used. SMPC allows multiple parties to jointly compute a function over their inputs without revealing those inputs to each other. Homomorphic encryption allows computations to be performed directly on encrypted data. While powerful, these methods often come with significant computational overhead and are used for specific high-sensitivity use cases.
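SMPC is a family of protocols rather than a single algorithm. One of its simplest building blocks, additive secret sharing, is enough to compute a secure sum. Each party splits its private value into random shares that add up to the value modulo a large number and hands one share to every party. Only the combination of everyone's partial sums reveals the total. The sketch below simulates all parties in one process and rests on three assumptions: honest-but-curious participants, non-negative integer inputs, and a total that stays below the illustrative modulus `PRIME`. It is not the protocol of any particular platform.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # illustrative modulus (a Mersenne prime); must exceed any possible total


def share(value: int, n_parties: int) -> List[int]:
    """Split value into n random shares that sum to value modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values: List[int]) -> int:
    n = len(private_values)
    # Row i holds the shares party i sends out; party j receives column j.
    all_shares = [share(v, n) for v in private_values]
    # Each party adds only the shares it received; any single share looks uniformly random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing the partial sums reveals the total, not any individual input.
    return sum(partial_sums) % PRIME


print(secure_sum([120, 45, 300]))  # 465
```

Homomorphic encryption reaches a similar goal by a different route: the parties compute on ciphertexts. It is not sketched here.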

3 Industries Proving Federated Data Exchange Cuts Risk and Time-to-Insight

The value of a federated data exchange platform is demonstrated by its impact across major industries, proving that progress doesn’t require sacrificing privacy.

Revolutionizing Healthcare and Genomics

Healthcare is a prime example where federated data exchange is not just beneficial but essential. Hospitals, labs, and pharma companies hold vast amounts of sensitive patient data governed by strict regulations like HIPAA and GDPR. A federated data exchange platform allows them to collaborate without moving this sensitive patient data. This unlocks numerous breakthroughs:

- Accelerating Drug Discovery: Pharmaceutical companies can identify eligible patient cohorts for clinical trials across a global network of hospitals without any patient data leaving the hospital’s firewall. This drastically speeds up trial recruitment, one of the most significant bottlenecks in drug development.

- Improving Pharmacovigilance: After a drug is on the market, federation allows for real-time safety monitoring (pharmacovigilance). By analyzing treatment data from multiple hospital systems, regulators and manufacturers can detect rare adverse events much faster than with siloed data, improving patient safety.

- Generating Real-World Evidence (RWE): Federation enables the generation of robust real-world evidence on how treatments perform in diverse, real-world populations. This helps payers, providers, and patients make more informed decisions about care.

- Enhancing Diagnostic AI: AI models for medical imaging (e.g., detecting tumors in MRIs or pneumonia in X-rays) can be trained on diverse datasets from hospitals around the world. This leads to more accurate and robust models that are less prone to bias, all without sharing the sensitive images themselves.

The NHS Federated Data Platform is a national-scale example, designed to improve care coordination while maintaining strict patient privacy. In genomics, federation enables secure analysis of vast omics data for personalized medicine, guided by standards from the Global Alliance for Genomics & Health (GA4GH). During the pandemic, the Viral AI network processed 1.6 million SARS-CoV-2 genomes using federated principles, enabling rapid pandemic surveillance to track variants while respecting data sovereignty across borders.

Powering Smart Mobility and Logistics (CCAM)

The future of transportation, Connected, Cooperative and Automated Mobility (CCAM), relies on sharing real-time vehicle data between automakers, cities, and logistics firms. Much of this data is proprietary or personally identifiable. A federated platform creates a secure data-sharing ecosystem.

- Optimizing Urban Traffic Flow: Cities can analyze data from multiple ride-sharing companies, delivery services, and public transit systems to build predictive models for traffic congestion, optimizing signal timing and routing emergency services more effectively.

- Improving Supply Chain Optimization: Ports, shipping lines, trucking companies, and warehouses can share logistical data to create a real-time, end-to-end view of the supply chain. This allows them to predict delays, optimize container handoffs, and reduce idle time without revealing sensitive commercial information.

- Enabling Predictive Maintenance: Vehicle manufacturers can train predictive maintenance models on operational data from their entire fleet of cars without accessing any individual driver’s location or usage data. This allows them to predict part failures before they happen, improving safety and reliability.

The EU’s initiative for creating a common European mobility data space highlights how federation is foundational for smart mobility, allowing for critical applications like scenario-based validation for autonomous vehicles by testing them against simulated scenarios derived from real-world driving data from multiple sources.

Securing Finance and IoT

In finance, banks must collaborate to fight sophisticated financial crime but are bound by strict requirements such as the PCI DSS industry standard and customer privacy obligations. A federated data exchange platform provides the solution.

- Advanced Fraud Detection and AML: A consortium of banks can collaboratively train a machine learning model to detect complex fraud or money laundering schemes that span multiple institutions. Each bank trains the model on its local transaction data and shares only the learned patterns, not the raw transaction details. This allows them to identify suspicious activity that would be invisible to any single bank, preserving customer data privacy throughout.

- Robust Credit Risk Modeling: Lenders can build more accurate and equitable credit risk models by learning from pooled, anonymized insights across the industry, helping to reduce bias and improve access to credit.

In the Internet of Things (IoT), edge computing combined with federated principles allows billions of smart devices to perform real-time learning locally. A smart thermostat learns your preferences without sending your daily routine to a central server. In a smart factory, sensor data from machines across multiple production lines or even different factories can be used to train models that predict equipment failure or optimize energy consumption, all without exposing proprietary manufacturing processes. This approach minimizes data transmission, reduces latency, and respects privacy from the smart home to the factory floor.

Avoid Costly Missteps: Governance and Standards to Launch Your Federated Data Exchange Right

Implementing a federated data exchange platform requires thoughtful planning, strong governance, and a clear view of the obstacles. Success depends as much on organizational alignment and trust as it does on technology.

Governance Models and Business Sustainability

Clear governance is the bedrock of trust in a federated network. It defines the rules of the road, responsibilities, and decision-making processes. There is no one-size-fits-all model; the right choice depends on the participants and goals.

- Consortium Model: A group of peer organizations with shared goals (e.g., an alliance of pharmaceutical companies) jointly owns and manages the platform. This model is excellent for industry-specific challenges, as it aligns incentives and distributes costs. However, decision-making by committee can be slow.

- Peer-to-Peer Model: Participants interact directly through strong bilateral or multilateral contractual agreements. This approach offers high flexibility and is suitable for smaller, highly trusted partnerships. Its main drawback is that it scales poorly, as each new participant requires a new set of legal and technical integrations.

- Centralized Governance with Decentralized Data: A neutral, trusted third party (which could be a government body, a non-profit, or a commercial entity) establishes and enforces the rules for the network, but the data itself remains decentralized. This model, used in initiatives like the NHS Federated Data Platform, provides clear authority and standardized processes, which can accelerate adoption. Its success hinges on the neutrality and trustworthiness of the central governing body.

Transparent data usage agreements are the legal glue that holds these models together. For long-term success, a sustainable business model is also critical. Unlike selling raw data, federated platforms often focus on utility trading—creating value from shared insights, models, or services. This can be monetized through subscription fees for network access, pay-per-query charges, or licensing fees for collaboratively trained AI models.

The Role of European Initiatives and Open Standards

Europe has championed federated data approaches as a way to foster innovation while upholding its strong commitment to data sovereignty. Key initiatives include:

- Gaia-X: A project to build a federated, secure, and sovereign data infrastructure for Europe. It focuses on creating an ecosystem based on transparency, user control, and interoperability, moving away from dependency on a few large tech providers.

- Common European Data Spaces: These are sector-specific environments for trusted data sharing in key areas like health, mobility, and manufacturing. Supported by the Data Spaces Support Centre (DSSC), they aim to create a single market for data governed by common rules and standards.

These initiatives promote FAIR data principles (Findable, Accessible, Interoperable, Reusable) and push for interoperability standards. Standards from groups like the Global Alliance for Genomics & Health (GA4GH) and frameworks for a common European mobility data space are crucial for enabling seamless, large-scale collaboration.

Overcoming Implementation Problems

Implementing a federated platform involves real-world challenges that require careful planning:

- Technical Complexity: Building and maintaining a secure, performant distributed system requires specialized expertise in areas like cryptography, distributed computing, and MLOps. Managing issues like network latency and heterogeneous compute environments across nodes is non-trivial.

- Lack of Standardization: Inconsistent data formats, schemas, and terminologies across organizations are a major hurdle. A significant upfront effort in data harmonization, mapping disparate data sources to a Common Data Model (CDM), is often required before any analysis can begin.

- Building Trust: Technology alone is not enough. Convincing organizations to join a federated network requires establishing trust through transparent governance, legally sound Data Use and Access Agreements (DUAAs), immutable audit trails, and often, the involvement of a neutral technology partner.

- Scalability: While federated systems scale horizontally, managing a growing network of hundreds or thousands of nodes presents operational challenges. The central orchestrator must be designed to handle the load, and processes must be in place for onboarding, monitoring, and retiring nodes efficiently.

- Defining Clear Policies: Crafting data access and usage policies that satisfy all stakeholders while ensuring regulatory compliance is intricate. There is often a delicate balance between maximizing the utility of the data for analysis and enforcing strict privacy controls to prevent potential data leakage or re-identification.

Your Top 3 Federated Data Exchange Questions—Answered Fast

As organizations explore federated data exchange platforms, several common questions arise. Here are answers to the most frequent concerns.

What is the difference between data federation and federated learning?

Though the terms sound similar and are often used together, they describe different concepts that solve different problems:

- Data federation is about data access. It provides a virtual, unified view of data from multiple, distributed sources. It allows you to run a query against what looks like a single database, but the query is actually executed across the distributed sources in their native locations. Think of it as creating a universal library card catalog that shows you where every book is located and allows you to request information from them without moving the books.

- Federated learning is a machine learning technique for collaborative model training. Instead of moving data to a central location, the AI model travels to each data source, trains on the local data, and shares only the learned mathematical updates back to a central server for aggregation. To continue the analogy, this is like sending a researcher to each library to read the books and bring back only their notes. The notes are then combined into a single, comprehensive report without anyone having to move the original books.

A robust federated data exchange platform, like the one offered by Lifebit, can use both. Data federation helps researchers find and query relevant datasets across the network, while federated learning provides a privacy-preserving way to build powerful AI models from that distributed data.
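The "universal card catalog" idea behind data federation can be sketched as a thin layer that looks like one database to the caller while pushing each query down to every registered source. The `LocalSource` connector, the source names, and the record layout are hypothetical. In keeping with the privacy goals above, this sketch returns only per-source counts rather than rows. A real federation engine would also translate the query into each source's native query language.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
Predicate = Callable[[Record], bool]


class LocalSource:
    """Hypothetical connector wrapping one organization's data store."""

    def __init__(self, name: str, records: List[Record]) -> None:
        self.name = name
        self._records = records  # stays inside the source; never returned

    def count(self, predicate: Predicate) -> int:
        # The filter is evaluated where the data lives.
        return sum(1 for r in self._records if predicate(r))


class FederatedView:
    """Looks like one database to the caller; fans each query out to every source."""

    def __init__(self, sources: List[LocalSource]) -> None:
        self.sources = sources

    def count(self, predicate: Predicate) -> Dict[str, int]:
        per_source = {s.name: s.count(predicate) for s in self.sources}
        per_source["total"] = sum(per_source.values())
        return per_source


view = FederatedView([
    LocalSource("site_a", [{"age": 64, "dx": "T2D"}, {"age": 40, "dx": "T2D"}]),
    LocalSource("site_b", [{"age": 71, "dx": "T2D"}, {"age": 58, "dx": "asthma"}]),
])
print(view.count(lambda r: r["age"] > 50 and r["dx"] == "T2D"))
# {'site_a': 1, 'site_b': 1, 'total': 2}
```

The caller writes one query against one interface and never learns where individual records sit. That is the "single database" illusion data federation provides.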

Is a federated data exchange platform completely secure?

No system is 100% secure, but a federated data exchange platform represents a massive leap forward in security and resilience compared to centralized systems. By keeping data at its source, the architecture dramatically reduces the attack surface and contains the impact of a breach. If one node is compromised, the raw data held at the other nodes is not exposed by that breach.

However, security is a multi-layered, shared responsibility. It depends on:

- Architectural Security: The platform’s distributed design, use of secure enclaves, and end-to-end encryption.

- Cryptographic Privacy: The use of advanced techniques to protect against specific threats. For example, to mitigate model inversion attacks (where an attacker tries to reconstruct training data from model updates), platforms can incorporate differential privacy. This technique adds a carefully calibrated amount of statistical noise to the model updates. The noise places a provable bound on how much any single individual’s data can influence what is shared, which sharply limits the risk of re-identifying individual data points. The trade-off is some loss of accuracy in the global model, which must be balanced against the chosen privacy budget.

- Operational Security: The cybersecurity practices of all participants are critical. Strong access controls, regular security audits, and vigilant monitoring are essential.
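One common way to apply differential privacy to federated model updates is to clip each update to a maximum L2 norm and then add Gaussian noise scaled to that norm (the Gaussian mechanism). Clipping bounds any single contributor's influence, and the noise masks what remains. The sketch below applies both steps at the node, before the update leaves. `clip_norm` and `noise_multiplier` are illustrative parameters. Turning them into a formal (epsilon, delta) guarantee requires a privacy accountant, which is not shown. The seeded generator exists only to make the demo reproducible.

```python
import math
import random
from typing import List


def clip(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def privatize(update: List[float], clip_norm: float, noise_multiplier: float,
              rng: random.Random) -> List[float]:
    """Clip, then add N(0, (noise_multiplier * clip_norm)^2) noise to each coordinate."""
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clip(update, clip_norm)]


# Fixed seed for a reproducible demo only; in practice use an unpredictable
# source such as random.SystemRandom().
rng = random.Random(0)
noisy = privatize([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy)
```

Larger `noise_multiplier` values give stronger privacy but noisier global models. That is the accuracy trade-off mentioned above.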

At Lifebit, we build security into every layer, from hardware-level secure enclaves to comprehensive, immutable audit logs. A federated system is inherently more resilient, but it requires a culture of continuous vigilance among all partners.

How do you ensure data quality in a federated network?

Analyzing poor-quality data is worthless—garbage in, garbage out. In a federated network where you can’t directly inspect the raw data, ensuring quality requires a multi-layered approach built on trust and technology:

- Robust Governance and Standards: The network’s governing body must establish clear data quality standards that all participants agree to meet. This includes using common data models (CDMs) and standardized terminologies (e.g., SNOMED CT, LOINC in healthcare) to ensure data is consistent and comparable across the network.

- Validation at the Source: Before data is made available for federated analysis, it should pass through a “data quality firewall” at the source. This involves running automated scripts and manual checks at each data node to verify accuracy, completeness, and adherence to the agreed-upon format. This prevents low-quality data from ever entering a computation.

- Transparent Quality Metrics: The platform should generate and display metadata about the quality of each dataset (e.g., percentage of missing values, adherence to standards). This allows researchers to understand the landscape of the data they are analyzing and make informed decisions about which datasets to include.

- Quality-Aware Aggregation: In federated learning, advanced platforms can protect the integrity of the global model from low-quality data. During the aggregation step, the central server can weigh or exclude contributions from certain nodes based on their data quality metrics or the performance of their local model updates. This prevents a single source of poor data from corrupting the collaboratively trained model.
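The last two ideas, transparent quality metrics and quality-aware aggregation, can be combined in a small sketch. Each node computes a missing-value rate over its own data and reports only that number alongside its model update. The server then drops nodes above a threshold and weights the rest by sample count. The field names, the 0.2 threshold, and the exclude-then-weight rule are all assumptions for illustration. The text above allows either weighting or exclusion and does not prescribe a specific rule.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, Optional[float]]


def missing_rate(rows: List[Row], fields: List[str]) -> float:
    """Computed at the node: share of required fields that are empty or absent."""
    cells = len(rows) * len(fields)
    if cells == 0:
        return 1.0  # an empty dataset is treated as entirely missing
    missing = sum(1 for r in rows for f in fields if r.get(f) is None)
    return missing / cells


def quality_aware_avg(updates: List[Tuple[List[float], int, float]],
                      max_missing: float = 0.2) -> List[float]:
    """Average (weights, n_samples, missing_rate) updates, skipping low-quality nodes."""
    kept = [(w, n) for w, n, miss in updates if miss <= max_missing]
    if not kept:
        raise ValueError("no node met the quality threshold")
    total = sum(n for _, n in kept)
    dim = len(kept[0][0])
    return [sum(w[i] * n for w, n in kept) / total for i in range(dim)]


rows = [{"age": 50.0, "bmi": None}, {"age": 61.0, "bmi": 27.5}]
print(missing_rate(rows, ["age", "bmi"]))  # 0.25
# The second node (50% missing) is excluded: (1.0 * 10 + 3.0 * 30) / 40 = 2.5
print(quality_aware_avg([([1.0], 10, 0.05), ([100.0], 10, 0.5), ([3.0], 30, 0.1)]))  # [2.5]
```

Without the filter, the outlier update from the low-quality node would pull the global weight far from the other nodes' consensus.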

At Lifebit, our platform provides the tools and governance frameworks to ensure every analysis is built on a foundation of high-quality, trustworthy data.

Stop Building Bigger Silos: Launch a Federated Data Exchange and Cut Risk This Quarter

The era of hoarding data in isolated silos is ending. The future lies in a more powerful approach: collaboration without sacrificing control, privacy, or security. This is the promise of a federated data exchange platform.

Instead of building bigger, riskier databases, we can build bridges between organizations. This shift enables unprecedented data-driven insights across every sector. In healthcare, it accelerates life-saving research. In mobility, it optimizes transportation networks. In finance, it helps fight fraud collectively.

This new era of collaboration is built on trust. When organizations know their data is secure and under their control, secure partnerships flourish, and innovation accelerates.

At Lifebit, our next-generation federated AI platform is a bridge-building tool. We provide secure, real-time access to global biomedical data, empowering biopharma, governments, and public health agencies to collaborate with confidence. Our platform components, including the Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), deliver real-time insights and AI-driven analytics while keeping sensitive data exactly where it belongs.

The future of collaboration isn’t about who has the biggest database; it’s about who can work together most effectively and ethically. It’s about protecting privacy while driving progress.

Ready to explore how a federated data exchange platform can transform your organization’s data strategy? Learn how to implement a federated biomedical data platform with us. Let’s build those bridges together.