NGS Uncovered – The DNA Revolution You Need to Know

Next-generation sequencing: 2025 Revolution

Decoding the Blueprint of Life at Unprecedented Speed

Next-generation sequencing is a DNA analysis technology that reads millions of genetic fragments simultaneously, making it thousands of times faster and cheaper than traditional methods. This breakthrough transformed genetics, disease diagnosis, and personalized medicine.

Key facts about next-generation sequencing:

- Speed: Can sequence an entire human genome in hours instead of years

- Cost: Reduced sequencing costs from billions of dollars to under $1,000 per genome

- Scale: Processes millions of DNA fragments in parallel

- Applications: Used in cancer research, rare disease diagnosis, drug discovery, and population studies

- Data output: Generates massive amounts of genetic information requiring specialized analysis tools

NGS technology works by breaking DNA into small fragments, copying them millions of times, and reading each fragment simultaneously. This massively parallel approach creates unprecedented throughput that has revolutionized genomics research.

The impact is vast: pharmaceutical companies use it for drug discovery, regulatory agencies for safety assessments, and healthcare systems for faster diagnosis. However, this flood of data creates challenges in storage, analysis, and bioinformatics expertise, and many datasets remain siloed and underused.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, with over 15 years of experience in computational biology and genomics. I contributed to the Nextflow framework for NGS data analysis and now lead Lifebit’s efforts to democratize genomic insights with federated AI.


The Leap from Traditional to Next-Generation Sequencing

In the 1970s, Frederick Sanger’s “first-generation” sequencing was groundbreaking, allowing scientists to read the exact order of genetic letters (A, T, C, G) for the first time.

The Human Genome Project, launched in 1990, used this method to read all 3 billion letters of human DNA. The ambitious project took 13 years and cost nearly $3 billion, producing our first reference sequence of the human genome. It was a landmark achievement, but it also showed that traditional sequencing was too slow and expensive for large-scale work.

Then, in the mid-2000s, next-generation sequencing (NGS) arrived with a radically different, “massively parallel” approach. Instead of reading one DNA fragment at a time, NGS could read millions simultaneously, turning genetics into a high-speed, industrial operation.

The numbers are staggering: what took the Human Genome Project over a decade and billions of dollars can now be done in hours for under $1,000. This dramatic shift democratized genetic research.

| Feature | Sanger Sequencing | Next-Generation Sequencing (NGS) |
|---|---|---|
| Speed | Reads one DNA fragment at a time (slow) | Millions to billions of fragments simultaneously (fast) |
| Cost | High, billions of dollars for a whole human genome | Low, under $1,000 for a whole human genome |
| Throughput | Low, suitable for single genes or small regions | Extremely high, suitable for entire genomes or populations |
| Read Length | Long (500-1000 base pairs) | Short (typically 50-600 base pairs) |

The Evolution of Sequencing Generations

DNA sequencing has evolved through distinct generations:

First-generation Sanger sequencing used a precise “chain-termination” method, but it could only read one DNA piece at a time.

Second-generation sequencing (NGS) introduced massive parallelization, generating millions of short DNA reads (50-600 base pairs) simultaneously. This is like millions of people reading different pages of a book at once, with computers reassembling the story.

Third-generation sequencing (e.g., PacBio SMRT, Oxford Nanopore) addresses the short-read weakness of NGS by reading much longer DNA stretches. These platforms were initially less accurate, but their accuracy has improved rapidly, making them vital for complex genomic regions.

Sanger vs. NGS: A Head-to-Head Comparison

The difference is like a master craftsperson versus a modern factory.

Sanger sequencing is the precise craftsperson, producing accurate, long reads (up to 1,000 base pairs) for targeted work like confirming specific variants. However, it’s slow.

Next-generation sequencing is the factory, processing billions of shorter DNA fragments (50-600 base pairs) at once. The sheer volume of reads, combined with computational analysis, allows the original sequence to be reconstructed accurately from these pieces. The data output is staggering: a single NGS run can generate terabytes of data, enabling whole-genome sequencing and large-scale studies.

While Sanger has higher individual read accuracy, NGS achieves excellent overall accuracy through depth of coverage—reading each genetic position multiple times. This redundancy allows for confident sequence determination despite minor errors in individual reads.
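
A little arithmetic makes the effect of depth concrete. The Python sketch below makes two simplifying assumptions. First, every read has the same, independent per-base error rate. Second, a position is called by simple majority vote. Real variant callers use probabilistic models instead, so treat this as an illustration rather than a description of any specific tool. Average depth uses the Lander-Waterman relation C = L × N / G, where L is read length, N is the number of reads, and G is genome size.

```python
from math import comb


def mean_coverage(read_length: int, num_reads: int, genome_size: int) -> float:
    """Average sequencing depth C = L * N / G (Lander-Waterman)."""
    return read_length * num_reads / genome_size


def consensus_failure_bound(depth: int, per_read_error: float) -> float:
    """Upper bound on the chance that a simple majority vote over `depth`
    independent reads fails to return the true base at one position.

    If fewer than half the reads are wrong, the true base has a strict
    majority, so failure requires at least ceil(depth / 2) wrong reads.
    """
    min_wrong = (depth + 1) // 2  # ceil(depth / 2)
    return sum(
        comb(depth, k) * per_read_error**k * (1 - per_read_error) ** (depth - k)
        for k in range(min_wrong, depth + 1)
    )


# 600 million 150-bp reads over a 3-billion-base genome gives 30x depth.
print(mean_coverage(150, 600_000_000, 3_000_000_000))  # 30.0
for depth in (1, 5, 30):
    print(depth, consensus_failure_bound(depth, 0.01))
```

With a 1% per-read error rate, a single read is wrong 1% of the time. At 30x, the bound drops to the order of 10^-22, which is why deep coverage compensates for imperfect individual reads.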

How Next-Generation Sequencing Works: Technologies and Principles

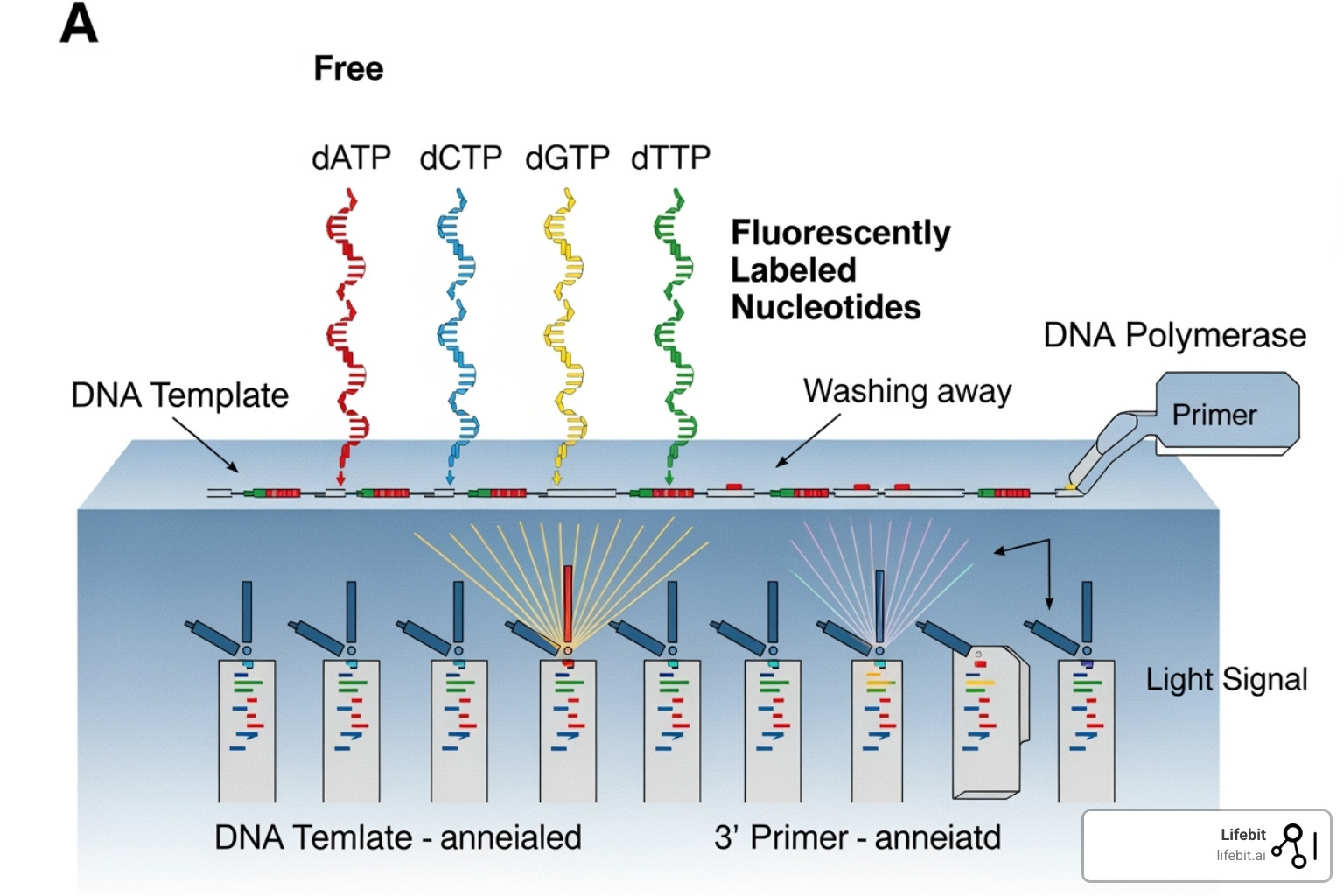

The magic behind next-generation sequencing is a process combining chemistry, engineering, and computation to decode millions of DNA fragments at once. The most widely used method is Illumina’s Sequencing by Synthesis (SBS).

Library preparation is the first step. DNA is fragmented into manageable pieces. Special ‘adapter’ sequences are attached to the ends, which allow the fragments to bind to the sequencing platform and act as starting points for copying.
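
A toy version of library preparation helps show why reads end up overlapping. The Python sketch below works under simplifying assumptions. It randomly cuts several copies of the same sequence into fragments and discards fragments below a minimum size, a crude stand-in for size selection. It then adds adapter sequences to both ends. The adapter strings and function names are hypothetical placeholders, not sequences or tools from any real kit.

```python
import random

# Hypothetical placeholder adapters; real kits use vendor-specific sequences.
ADAPTER_5P = "ACGTACGTAC"
ADAPTER_3P = "TGCATGCATG"


def fragment(dna: str, min_len: int, max_len: int, rng: random.Random) -> list[str]:
    """Cut `dna` into consecutive pieces with lengths drawn uniformly from
    [min_len, max_len]; the last piece may be shorter than min_len."""
    pieces = []
    start = 0
    while start < len(dna):
        size = rng.randint(min_len, max_len)
        pieces.append(dna[start:start + size])
        start += size
    return pieces


def prepare_library(dna: str, copies: int, min_len: int, max_len: int,
                    seed: int = 0) -> list[str]:
    """Fragment several copies of `dna` independently (so fragments from
    different copies overlap), size-select, and attach adapters."""
    rng = random.Random(seed)
    library = []
    for _ in range(copies):
        for piece in fragment(dna, min_len, max_len, rng):
            if len(piece) >= min_len:  # crude size selection
                library.append(ADAPTER_5P + piece + ADAPTER_3P)
    return library


genome = "ATTAGACCTGCCGGAATACGGTCA" * 10  # toy 240-bp sequence
library = prepare_library(genome, copies=3, min_len=30, max_len=60)
print(len(library), library[0])
```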

Next is cluster generation. The DNA library is loaded onto a flow cell, a glass slide whose surface is coated with short DNA strands that match the adapters. Fragments bind to this surface and are amplified in place into clusters of roughly a thousand identical copies, creating a signal strong enough to detect. A single flow cell holds millions of such clusters.

During Sequencing by Synthesis, all four fluorescently tagged nucleotides (A, T, C, G) are supplied in each cycle. Each carries a reversible terminator, so every DNA strand incorporates only one base per cycle, and each nucleotide type produces a distinct fluorescent signal. A camera records the color of each cluster, the terminator and dye are removed, and the cycle repeats, creating a ‘movie’ of colored lights that reveals the DNA sequence of each fragment.
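
The base-calling step can be sketched in a few lines. This Python example assumes a simplified four-dye readout, where each cluster yields one intensity per color channel per cycle. Some newer instruments use fewer channels and decode bases from combinations. The base with the brightest channel is called. A purity score, the brightest intensity divided by the sum of the two brightest, flags ambiguous cycles. This is similar in spirit to the “chastity” metric Illumina uses for filtering. The intensity values are made up for illustration.

```python
BASES = "ACGT"  # channel order assumed for this sketch


def call_bases(cycles: list[tuple[float, float, float, float]]) -> tuple[str, list[float]]:
    """Call one base per cycle from four channel intensities (A, C, G, T order).

    Returns the called sequence and, for each cycle, a purity score:
    brightest / (brightest + second brightest). Values near 1.0 mean one
    dye clearly dominated; values near 0.5 mean two dyes were about equal.
    """
    sequence = []
    purities = []
    for intensities in cycles:
        # Channel indices from brightest to dimmest (ties keep A, C, G, T order).
        ranked = sorted(range(4), key=lambda i: intensities[i], reverse=True)
        brightest = intensities[ranked[0]]
        second = intensities[ranked[1]]
        sequence.append(BASES[ranked[0]])
        total = brightest + second
        purities.append(brightest / total if total > 0 else 0.0)
    return "".join(sequence), purities


# Hypothetical intensities for one cluster over four cycles.
cluster = [
    (0.90, 0.05, 0.03, 0.02),
    (0.10, 0.80, 0.05, 0.05),
    (0.02, 0.03, 0.90, 0.05),
    (0.10, 0.10, 0.10, 0.70),
]
seq, purity = call_bases(cluster)
print(seq)  # ACGT
print([round(p, 2) for p in purity])
```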

Finally, in data analysis, the raw images are converted into millions of short DNA reads. These reads are then aligned to a reference genome or, for organisms without one, assembled from scratch (de novo). Both approaches use sophisticated algorithms and significant computing power, much like solving a massive jigsaw puzzle.
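
The jigsaw-puzzle analogy can be made concrete with a minimal greedy overlap assembler in Python. It repeatedly merges the two fragments with the longest suffix-prefix overlap. This is a textbook simplification chosen for readability, not the method of any particular tool. Production assemblers rely on overlap or de Bruijn graphs, and resequencing pipelines usually align reads to a reference instead. The reads below are invented.

```python
def overlap(a: str, b: str, min_overlap: int) -> int:
    """Length of the longest suffix of `a` that equals a prefix of `b`,
    or 0 if no such overlap reaches `min_overlap`."""
    for length in range(min(len(a), len(b)), min_overlap - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0


def _simplify(contigs: list[str]) -> list[str]:
    """Drop exact duplicates and any contig contained inside another."""
    unique = list(dict.fromkeys(contigs))
    return [c for c in unique if not any(c != other and c in other for other in unique)]


def greedy_assemble(reads: list[str], min_overlap: int = 3) -> list[str]:
    """Repeatedly merge the pair of contigs with the longest overlap."""
    contigs = _simplify(reads)
    while True:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_overlap)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            return contigs  # no overlaps left: remaining contigs stay separate
        merged = contigs[best_i] + contigs[best_j][best_len:]
        rest = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs = _simplify(rest + [merged])


reads = ["ATTAGACCTG", "AGACCTGCCGG", "CCTGCCGGAATAC"]  # invented reads
print(greedy_assemble(reads))  # ['ATTAGACCTGCCGGAATAC']
```

Greedy merging works on this clean example. It can still collapse or misjoin repeated sequences, which is the same weakness that short reads face in repetitive regions.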

For those interested in diving deeper into the technical details, you can find more information on Illumina sequencing technology.

Short-Read Sequencing Platforms

While Illumina’s Sequencing by Synthesis (SBS) dominates the short-read NGS landscape, other technologies also contributed to the revolution.

- Sequencing by Synthesis (SBS) is the gold standard due to its high accuracy (over 99% per base) for short DNA fragments.

- Pyrosequencing, an earlier method, detected light flashes upon nucleotide addition but struggled with repetitive sequences (homopolymers).

- Ion semiconductor sequencing detected pH changes instead of light, offering a label-free method but also facing challenges with repetitive sequences.

All these platforms excel at accuracy over short fragments, making them ideal for spotting single nucleotide variants.
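
Per-base accuracy figures such as “over 99%” are normally reported as Phred quality scores, Q = -10 · log10(P_error). Q20 means a 1-in-100 chance of error (99% accuracy), and Q30 means 1 in 1,000 (99.9%). The Python sketch below converts between the two and decodes a FASTQ quality line. It assumes the Phred+33 encoding used by modern Illumina FASTQ files, and the example quality string is made up.

```python
import math


def phred_to_error(q: float) -> float:
    """Error probability for a Phred quality score: P = 10^(-Q/10)."""
    return 10 ** (-q / 10)


def error_to_phred(p: float) -> float:
    """Phred quality score for an error probability: Q = -10 * log10(P)."""
    return -10 * math.log10(p)


def decode_qualities(quality_line: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string: each character encodes Q = ord(char) - offset."""
    return [ord(ch) - offset for ch in quality_line]


def expected_errors(quality_line: str) -> float:
    """Expected number of miscalled bases in a read (sum of error probabilities)."""
    return sum(phred_to_error(q) for q in decode_qualities(quality_line))


print(decode_qualities("II5+"))  # [40, 40, 20, 10]
print(expected_errors("II5+"))  # about 0.11
```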

Long-Read vs. Short-Read: A Comparative Look

Short-read sequencing is the cost-effective workhorse of genomics, excellent for detecting common genetic variations. However, it struggles with complex, repetitive genomic regions, much like assembling a jigsaw puzzle with many identical-looking pieces. It also struggles with large structural changes such as deletions or rearrangements.

Long-read sequencing technologies (e.g., SMRT, Nanopore) are game-changers for these challenges. They produce reads thousands or millions of base pairs long, spanning complex regions that confuse short-read methods. This makes them ideal for assembling new genomes, understanding complex gene fusions in cancer, and even detecting epigenetic modifications directly.
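
The repeat problem is easy to reproduce. In the Python sketch below, a toy reference contains the same 10-base segment twice. A short read that falls entirely inside the repeat matches two locations equally well. A longer read that spans the repeat and its unique flanking sequence matches only one. The sequences are invented, and exact string matching stands in for real alignment. Real alignment tolerates mismatches and reports ambiguity as a mapping quality score.

```python
# Toy reference: unique flank, repeat, unique flank, same repeat, unique flank.
REPEAT = "GCGCAATTGC"
REFERENCE = "TTTTT" + REPEAT + "AAAAA" + REPEAT + "CCCCC"


def exact_hits(reference: str, read: str) -> list[int]:
    """Return every start position where `read` occurs exactly in `reference`."""
    hits = []
    start = reference.find(read)
    while start != -1:
        hits.append(start)
        start = reference.find(read, start + 1)
    return hits


short_read = "CAATTG"            # lies entirely within the repeat
long_read = "TTTTGCGCAATTGCAA"   # spans the first repeat plus both flanks
print(exact_hits(REFERENCE, short_read))  # [8, 23]: ambiguous placement
print(exact_hits(REFERENCE, long_read))   # [1]: unique placement
```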

While historically having higher error rates, long-read accuracy has improved dramatically. The choice between short and long reads now depends more on the research question than technical limits. Short-read NGS is still the go-to for high-throughput analysis, but for solving complex genomic puzzles, long-read sequencing is an essential tool.

The Revolutionary Impact of NGS Across Scientific Fields

Next-generation sequencing didn’t just improve research; it transformed entire scientific fields. The leap was like switching from a horse-drawn carriage to a high-speed train, compressing research timelines from years to days.

The effects are extraordinary. Researchers can now study thousands of genes at once, doctors can base treatments on a patient’s genetic blueprint, and public health officials can track outbreaks in near real time. NGS is also foundational to personalized medicine, where treatments are tailored to an individual’s genetics. Its impact extends to agriculture, environmental science, and forensics, making it fundamental to modern biology.

Key Applications of Next-Generation Sequencing in Clinical Genetics

In clinical settings, next-generation sequencing has been a game-changer, providing definitive answers and proactive insights.

- Rare disease diagnosis: For many families, NGS has ended the painful “diagnostic odyssey,” which can involve years of specialist visits and inconclusive tests. Instead of a piecemeal approach, a single comprehensive test like whole-exome or whole-genome sequencing can screen thousands of genes simultaneously, providing answers in weeks. The Deciphering Developmental Disorders project is a prime example, having diagnosed thousands of children’s conditions by identifying causative mutations in genes previously unassociated with disease.

- Carrier screening: Carrier screening has become far more comprehensive. Prospective parents can now be screened for hundreds of recessive genetic conditions at once, providing a clearer picture of their reproductive risks and enabling better-informed family planning.

- Non-invasive prenatal testing (NIPT): NIPT represents a major advance in prenatal care. By analyzing fragments of fetal DNA circulating in the mother’s blood, it can accurately screen for chromosomal abnormalities like Down syndrome as early as nine weeks into pregnancy. This has significantly reduced the need for riskier invasive procedures like amniocentesis.

- Pharmacogenomics: Pharmacogenomics uses NGS to predict how an individual’s genetic makeup will affect their response to drugs. This allows doctors to move beyond a one-size-fits-all approach, selecting the right drug at the optimal dose from the start. This minimizes adverse reactions and avoids costly and ineffective trial-and-error prescribing, particularly in fields like psychiatry and cardiology.
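
The counting idea behind many NIPT screens can be sketched simply. A fetal trisomy 21 adds a small excess of chromosome 21 fragments to the cell-free DNA in the mother’s blood, so the share of sequencing reads that map to chromosome 21 rises slightly. The Python example below compares a test sample’s chromosome 21 read fraction with a reference set of unaffected pregnancies using a z-score. It flags values of 3 or more, a cutoff commonly used in published work. All numbers are hypothetical. Clinical tests also apply corrections, for example for GC content and fetal fraction, that this sketch omits.

```python
from statistics import mean, stdev


def chromosome_fraction(chrom_reads: int, total_reads: int) -> float:
    """Share of all mapped reads that map to one chromosome."""
    return chrom_reads / total_reads


def z_score(test_fraction: float, reference_fractions: list[float]) -> float:
    """How many standard deviations the test sample lies from the reference mean."""
    return (test_fraction - mean(reference_fractions)) / stdev(reference_fractions)


def flag_trisomy(z: float, threshold: float = 3.0) -> bool:
    """Flag a sample whose z-score reaches the (assumed) screening cutoff."""
    return z >= threshold


# Hypothetical chromosome 21 read fractions from unaffected pregnancies.
reference = [0.0130, 0.0131, 0.0129, 0.0130, 0.0132, 0.0128]

test = chromosome_fraction(137_000, 10_000_000)  # hypothetical test sample
z = z_score(test, reference)
print(round(z, 1), flag_trisomy(z))
```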

Advancements in Oncology

NGS has revolutionized oncology. Since cancer is fundamentally a disease of the genome, the ability to read a tumor’s complete genetic story has changed every aspect of cancer care, from diagnosis to treatment.

- Tumor profiling: Comprehensive tumor profiling is now routine for many cancers. By sequencing a panel of hundreds of cancer-related genes, oncologists can identify the specific mutations driving a tumor’s growth. This molecular fingerprint guides the use of targeted therapies, such as prescribing BRAF inhibitors for melanoma patients with a BRAF V600E mutation or Herceptin for breast cancer patients with HER2 gene amplification.

- Liquid biopsies: One of the most exciting developments is liquid biopsies. These simple blood tests detect and sequence circulating tumor DNA (ctDNA) shed by cancer cells into the bloodstream. As shown in research on circulating tumor DNA in breast cancer, this non-invasive method can be used to track treatment response, detect minimal residual disease (MRD) after surgery, and identify the emergence of drug-resistant mutations, often months before they are visible on imaging scans.

- Monitoring treatment response: By repeatedly sequencing a tumor’s DNA (either from tissue or liquid biopsies) over time, doctors can watch how a cancer evolves in response to therapy. This dynamic monitoring allows for the early detection of drug resistance, enabling clinicians to adjust treatment strategies and stay one step ahead of the disease.
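
Liquid biopsy numbers show why sequencing depth matters so much in oncology. The variant allele fraction (VAF) is the share of reads at a position that carry the mutation, and ctDNA mutations can sit at fractions well below 1%. The Python sketch below uses the binomial distribution to estimate the chance of seeing at least a minimum number of mutant reads at a given depth. It assumes independent reads and ignores sequencing errors and duplicate reads. The depths, fractions, and read threshold are illustrative assumptions, not parameters of any specific assay.

```python
from math import comb


def variant_allele_fraction(alt_reads: int, total_reads: int) -> float:
    """Share of reads at a position that carry the variant."""
    return alt_reads / total_reads


def detection_probability(depth: int, vaf: float, min_alt_reads: int) -> float:
    """P(at least `min_alt_reads` mutant reads) when each of `depth` reads
    independently carries the mutation with probability `vaf` (binomial model)."""
    p_fewer = sum(
        comb(depth, k) * vaf**k * (1 - vaf) ** (depth - k)
        for k in range(min_alt_reads)
    )
    return 1 - p_fewer


print(variant_allele_fraction(25, 5_000))  # 0.005, i.e. 0.5% VAF
for depth in (1_000, 10_000):
    # Chance of seeing at least 3 mutant reads for a 0.1% VAF mutation.
    print(depth, round(detection_probability(depth, 0.001, 3), 3))
```

Under these assumptions, a 0.1% VAF mutation is detected only about 8% of the time at 1,000x depth, but almost every time at 10,000x.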

Breakthroughs in Microbiology and Infectious Disease

NGS has provided an unprecedented, high-resolution view into the microbial world, transforming public health and clinical microbiology.

- Pathogen identification: In cases of severe or unusual infections, NGS enables rapid, unbiased pathogen identification. Instead of culturing for specific suspected microbes, which can take days or weeks, sequencing all the genetic material in a patient sample can identify the causative agent in hours, which is critical for treating sepsis and encephalitis.

- Outbreak tracing: NGS has become an indispensable tool for outbreak tracing. During the COVID-19 pandemic, rapid whole-genome sequencing allowed public health labs worldwide to track the emergence and spread of variants like Alpha, Delta, and Omicron in near real-time. This genomic surveillance provided crucial information for developing public health policies, assessing vaccine effectiveness, and understanding viral transmission chains with pinpoint accuracy, as was previously demonstrated in MRSA outbreak analysis.

- Antimicrobial resistance: NGS is a key weapon in the fight against antimicrobial resistance (AMR). It can quickly identify the specific resistance genes present in a bacterial infection, helping doctors choose an effective antibiotic and preventing the use of drugs that would be ineffective. On a larger scale, it allows hospitals and public health agencies to track the spread of “superbugs.”

- Metagenomics: Metagenomics involves sequencing all the DNA from an entire community of organisms in a given sample (e.g., from the human gut, soil, or ocean water). This has revolutionized our understanding of the human microbiome, revealing how the trillions of microbes living in and on our bodies influence everything from digestion and immunity to mental health.
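
Sequencing every fragment in a sample only helps if each read can be traced back to an organism. One widely used idea, behind classifiers such as Kraken, is to compare the short substrings (k-mers) of each read with an index built from reference genomes. The Python sketch below is a deliberately simplified version of that idea. It counts only k-mers unique to a single reference and assigns the read by majority vote. Real tools resolve shared k-mers taxonomically and use far larger databases. The organism names and sequences are hypothetical.

```python
from collections import Counter
from typing import Iterator, Optional


def kmers(seq: str, k: int) -> Iterator[str]:
    """All overlapping substrings of length k."""
    return (seq[i:i + k] for i in range(len(seq) - k + 1))


def build_index(references: dict[str, str], k: int) -> dict[str, set[str]]:
    """Map each k-mer to the set of reference organisms that contain it."""
    index: dict[str, set[str]] = {}
    for name, genome in references.items():
        for kmer in kmers(genome, k):
            index.setdefault(kmer, set()).add(name)
    return index


def classify(read: str, index: dict[str, set[str]], k: int) -> Optional[str]:
    """Assign a read to the organism with the most k-mers unique to it,
    or return None if no unique k-mer matches."""
    votes = Counter()
    for kmer in kmers(read, k):
        owners = index.get(kmer, set())
        if len(owners) == 1:  # ignore k-mers shared by several organisms
            votes[next(iter(owners))] += 1
    if not votes:
        return None
    return votes.most_common(1)[0][0]


# Hypothetical reference snippets; real databases hold thousands of genomes.
references = {
    "organism_A": "ACGTTGCAACGTTAGC",
    "organism_B": "TTGACCGGTAACCGTA",
}
index = build_index(references, k=5)
print(classify("GCAACGTTAG", index, k=5))  # organism_A
print(classify("GGTAACCGT", index, k=5))   # organism_B
print(classify("CCCCCCCC", index, k=5))    # None
```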

New Frontiers in Agriculture and Environmental Science

The impact of NGS extends beyond human health into the world around us.

- Agriculture: In agriculture, NGS accelerates crop and livestock improvement. Scientists can identify genetic markers associated with desirable traits like drought resistance, disease tolerance, or higher yield, enabling breeders to develop more resilient and productive varieties through marker-assisted selection. It is also used to analyze soil microbiomes to understand how microbes contribute to soil health and plant growth.

- Environmental Science: NGS is a powerful tool for monitoring biodiversity and ecosystem health. Using a technique called environmental DNA (eDNA), scientists can detect the presence of species—from rare fish to invasive insects—simply by sequencing DNA fragments found in samples of water, soil, or air. This non-invasive method provides a rapid and comprehensive snapshot of an ecosystem’s inhabitants.

Navigating the NGS Landscape: Advantages and Challenges

Working with next-generation sequencing offers superpowers in research and medicine, but these capabilities come with a unique and complex set of challenges.

The progress is remarkable: sequencing a human genome has dropped from billions of dollars over a decade to under $1,000 in hours. The high throughput, unprecedented speed, and comprehensive genomic coverage have democratized genomics. Furthermore, its high sensitivity for detecting variants and falling costs continue to accelerate research and its clinical applications.

The Advantages of NGS Technology

The benefits of next-generation sequencing continue to expand and redefine what is possible in biological science:

- Scalability for large projects: NGS is uniquely suited for massive-scale projects. It can analyze genomes from hundreds of thousands of individuals in population studies (like the UK Biobank), sequence entire microbial communities, or read out pooled screens covering thousands of genetic perturbations or DNA-barcoded drug compounds, turning previously painstaking experiments into industrial-scale operations.

- Detection of novel variants: Unlike older methods that could only find the specific genetic variants they were designed to look for, NGS performs unbiased sequencing. This allows it to reveal a wide range of genetic variation, including novel single nucleotide polymorphisms (SNPs), insertions, deletions, copy number variations, and, particularly with long reads, large-scale structural rearrangements.

- Quantitative analysis: NGS is not just qualitative; it is also highly quantitative. By counting the number of sequencing reads that map to a particular gene, researchers can precisely measure gene expression levels (in a method called RNA-Seq). This is invaluable for understanding gene regulation, cellular responses, and the molecular drivers of disease.

- Broad range of applications: The technology is a remarkably versatile tool. Its flexibility has led to a vast array of applications across clinical diagnostics (rare disease, oncology), basic research (functional genomics, evolution), public health (infectious disease surveillance), and applied sciences (agriculture, forensics), constantly opening up new avenues of inquiry.
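
The counting behind RNA-Seq needs one correction before genes can be compared. Longer transcripts collect more reads, and deeper runs collect more reads overall. Transcripts per million (TPM) is one common normalization that handles both. The Python sketch below assumes read counts per gene have already been computed. Gene names, counts, and lengths are hypothetical.

```python
def tpm(counts: dict[str, int], lengths_bp: dict[str, int]) -> dict[str, float]:
    """Transcripts per million.

    1. Divide each gene's read count by its length in kilobases (reads per kb).
    2. Scale so the per-kb rates of all genes sum to one million.
    """
    rates = {gene: counts[gene] / (lengths_bp[gene] / 1_000) for gene in counts}
    total = sum(rates.values())
    return {gene: rate / total * 1_000_000 for gene, rate in rates.items()}


counts = {"gene_A": 100, "gene_B": 100, "gene_C": 800}
lengths = {"gene_A": 1_000, "gene_B": 2_000, "gene_C": 4_000}
for gene, value in tpm(counts, lengths).items():
    print(gene, round(value))
```

Here gene_A and gene_B have the same raw read count. gene_A’s TPM is nonetheless twice gene_B’s, because gene_B is twice as long.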

Challenges in Implementing and Interpreting Next-Generation Sequencing Data

Despite its power, the widespread adoption of NGS has created significant hurdles that the scientific community is actively working to overcome:

- Data storage requirements: The output of NGS is enormous. A single sequencing run can generate several terabytes of raw data. Storing, managing, and archiving these massive datasets requires robust, scalable, and often expensive IT infrastructure, posing a major logistical and financial challenge for many institutions.

- Computational power needs: Processing raw NGS data into interpretable results is a computationally intensive task. Aligning reads to a reference genome, calling variants, and performing downstream analysis demands high-performance computing clusters or significant cloud computing resources, which can be a barrier to entry for smaller labs.

- Bioinformatic expertise: The demand for skilled bioinformaticians—scientists who can design analysis pipelines, manage large datasets, write custom scripts, and correctly interpret complex results—far outstrips the supply. This expertise gap is one of the most significant bottlenecks limiting the use of NGS.

- Data interpretation bottlenecks: Identifying millions of genetic variants in a genome is now the easy part; understanding their clinical significance is the major challenge. Distinguishing a pathogenic (disease-causing) mutation from a benign polymorphism requires extensive knowledge and the use of large-scale databases like ClinVar (which links variants to health status) and gnomAD (which provides variant frequencies across diverse populations). This interpretive step remains a time-consuming and highly specialized process.

- Ethical, Legal, and Social Implications (ELSI): Access to a person’s complete genetic blueprint raises profound ethical questions. Key issues include data privacy and the risk of re-identification from supposedly anonymous data; the potential for genetic discrimination in areas not fully covered by laws like the US Genetic Information Nondiscrimination Act (GINA), such as life or disability insurance; and the complexities of informed consent, especially regarding the use of data in future research and the handling of incidental findings (medically significant information discovered unintentionally).

- Variants of unknown significance (VUS): In clinical sequencing, it is common to find genetic changes whose impact on health is not yet understood. These VUS results create significant uncertainty for both clinicians and patients, often causing anxiety without providing a clear course of action. The ongoing effort to re-classify VUS as either pathogenic or benign as new evidence emerges is a major task for the clinical genetics community.
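
A back-of-the-envelope estimate in Python puts the storage figures in perspective. It computes the uncompressed FASTQ size for a single human genome, assuming about 3 billion bases, 30x coverage, 150-base reads, and read headers of roughly 60 characters. All of these are illustrative assumptions, and compression typically shrinks such files several-fold.

```python
def fastq_size_bytes(genome_size: int, coverage: float, read_length: int,
                     header_chars: int = 60) -> float:
    """Rough uncompressed FASTQ size in bytes.

    Each FASTQ record has four lines, each ending in a newline:
    header, bases, a '+' separator, and one quality character per base.
    """
    num_reads = genome_size * coverage / read_length
    bytes_per_record = (header_chars + 1) + (read_length + 1) + 2 + (read_length + 1)
    return num_reads * bytes_per_record


size = fastq_size_bytes(3_000_000_000, 30, 150)
print(f"{size / 1e9:.0f} GB")  # 219 GB
```

That is roughly 219 GB for one genome, before alignment files, variant calls, or backups are added. This is why a run covering many samples quickly reaches terabytes.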

Despite these problems, the NGS revolution continues, driven by innovative solutions in data management, cloud computing, and federated analysis that help overcome these barriers.

The Future is Now: Trends and Advancements in DNA Sequencing

The next-generation sequencing revolution is not slowing down; it is accelerating, with a pace of innovation that continues to produce breakthroughs that once seemed like science fiction.

The pursuit of the $100 genome goal continues to drive down costs, making large-scale and personalized genomics increasingly accessible. This economic shift is fundamental to moving healthcare from a reactive treatment model to one based on proactive, genetically informed prevention.

Improvements in long-read accuracy are closing the gap with short-read technologies. High-fidelity (HiFi) long reads now offer both length and precision, enabling researchers to assemble complete, gap-free genomes and resolve complex structural variants that were previously intractable.

Perhaps the most transformative trend is multi-omics integration. Researchers are no longer looking at the genome in isolation. Instead, they are combining genomics (the DNA blueprint), transcriptomics (which genes are active, via RNA-Seq), proteomics (which proteins are being made), and epigenomics (modifications that regulate gene activity) to build a holistic, high-definition movie of cellular life. This integrated approach provides a dynamic understanding of how healthy cells function and how disease develops.

AI and machine learning are becoming indispensable for making sense of the data tsunami. AI algorithms can spot complex patterns in massive datasets that are invisible to human analysts. For example, deep learning models like Google’s DeepVariant significantly improve the accuracy of variant calling from raw sequencing data, while other models can help predict the functional impact of a VUS, aiding in its classification.

The emergence of portable sequencing devices, like the handheld nanopore sequencers from Oxford Nanopore, brings genomic analysis out of the lab and directly to the point of need. This enables real-time sequencing for outbreak investigations in remote villages, environmental monitoring in the field, and even potential applications in space.

The Next Frontiers: Single-Cell and Spatial Genomics

Two of the most exciting new frontiers are pushing the resolution of genomics to its ultimate limits: the individual cell and its place in tissue.

- Single-cell sequencing: Traditional NGS analyzes tissue samples containing thousands or millions of cells, providing an ‘average’ view that masks cellular differences. Single-cell sequencing deconstructs this average, allowing researchers to analyze the genome, transcriptome, or epigenome of each individual cell. This has been revolutionary for understanding complex tissues like the brain and for identifying rare cell populations, such as a small cluster of drug-resistant cells within a tumor.

- Spatial genomics: Taking this a step further, spatial genomics adds positional context to sequencing data. Instead of creating a ‘smoothie’ from a tissue sample, technologies like spatial transcriptomics create a detailed map showing which genes are active in which specific locations within a slice of tissue. This allows researchers to study cells in their native environment, revealing how they interact with their neighbors and form complex structures. It is a game-changer for understanding tissue development, neurobiology, and the intricate architecture of the tumor microenvironment.
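
In many droplet-based single-cell RNA-Seq workflows, every read carries two short tags added during library preparation. A cell barcode identifies which cell the read came from, and a unique molecular identifier (UMI) marks the original RNA molecule. Counting distinct UMIs rather than raw reads turns a pile of reads into a per-cell gene count table and removes PCR duplicates. The Python sketch below assumes reads have already been parsed and assigned to genes. The barcodes and UMIs are hypothetical. Real pipelines also correct barcode errors and filter out empty droplets.

```python
from collections import defaultdict


def count_matrix(reads):
    """Build {cell_barcode: {gene: distinct UMI count}} from
    (cell_barcode, umi, gene) tuples. Reads sharing all three values are
    PCR copies of one molecule and are counted once."""
    umis_seen = defaultdict(set)
    for cell, umi, gene in reads:
        umis_seen[(cell, gene)].add(umi)
    matrix = defaultdict(dict)
    for (cell, gene), umis in umis_seen.items():
        matrix[cell][gene] = len(umis)
    return dict(matrix)


reads = [
    ("AAACCTG", "UMI01", "GAPDH"),
    ("AAACCTG", "UMI01", "GAPDH"),  # PCR duplicate of the read above
    ("AAACCTG", "UMI02", "GAPDH"),
    ("AAACCTG", "UMI03", "CD3E"),
    ("TTTGCAT", "UMI01", "GAPDH"),
]
print(count_matrix(reads))
# {'AAACCTG': {'GAPDH': 2, 'CD3E': 1}, 'TTTGCAT': {'GAPDH': 1}}
```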

These converging trends point to a future where deep, multi-layered genomic insights are seamlessly integrated into every aspect of healthcare and biological research. The primary challenge is shifting from data generation to the even greater task of extracting meaningful, actionable knowledge from these incredibly rich datasets to improve human health.

Conclusion: Using the Power of Genomic Data

Next-generation sequencing has transformed our understanding of life, evolving from the decade-long Human Genome Project to sequencing genomes in hours. It is now the backbone of modern biology and medicine.

We can now rapidly diagnose rare diseases, track cancer mutations in real-time, and trace outbreaks with precision. Next-generation sequencing is saving lives by enabling personalized medicine, with treatments tailored to an individual’s genetic blueprint.

NGS also enables massive population studies, revealing genetic influences on common diseases like diabetes and heart disease. These insights shape public health policy and preventive strategies by revealing patterns invisible in smaller studies.

The challenge, however, is that this data is useless without effective access and analysis. The terabytes of information from NGS require sophisticated, secure infrastructure, yet many datasets remain in silos, limiting their potential.

Advanced data platforms are crucial. The future of genomics depends on securely sharing and analyzing data across organizations while protecting privacy. We need systems that harmonize datasets, apply AI analytics, and enable global collaboration.

Lifebit’s federated AI platform addresses these needs. Our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) deliver real-time insights with top-tier security, enabling biopharma, governments, and public health agencies to unlock the full potential of their genomic data.

The DNA revolution is here and accelerating. Daily, next-generation sequencing provides insights that bring us closer to a world of preventative medicine, precisely targeted cancer treatments, and contained infectious diseases.

Find out how to accelerate your research with our Trusted Research Environment