On-Premise Meets Cloud: A Match Made in Data Heaven

On-premise cloud integration: Master It in 2025

Why On-Premise Cloud Integration is Reshaping Modern Data Architecture

On-premise cloud integration allows organizations to connect local infrastructure with cloud services, combining the security of on-premise systems with the scalability of the cloud. This hybrid approach is critical for maintaining control over sensitive data while accessing modern computing capabilities.

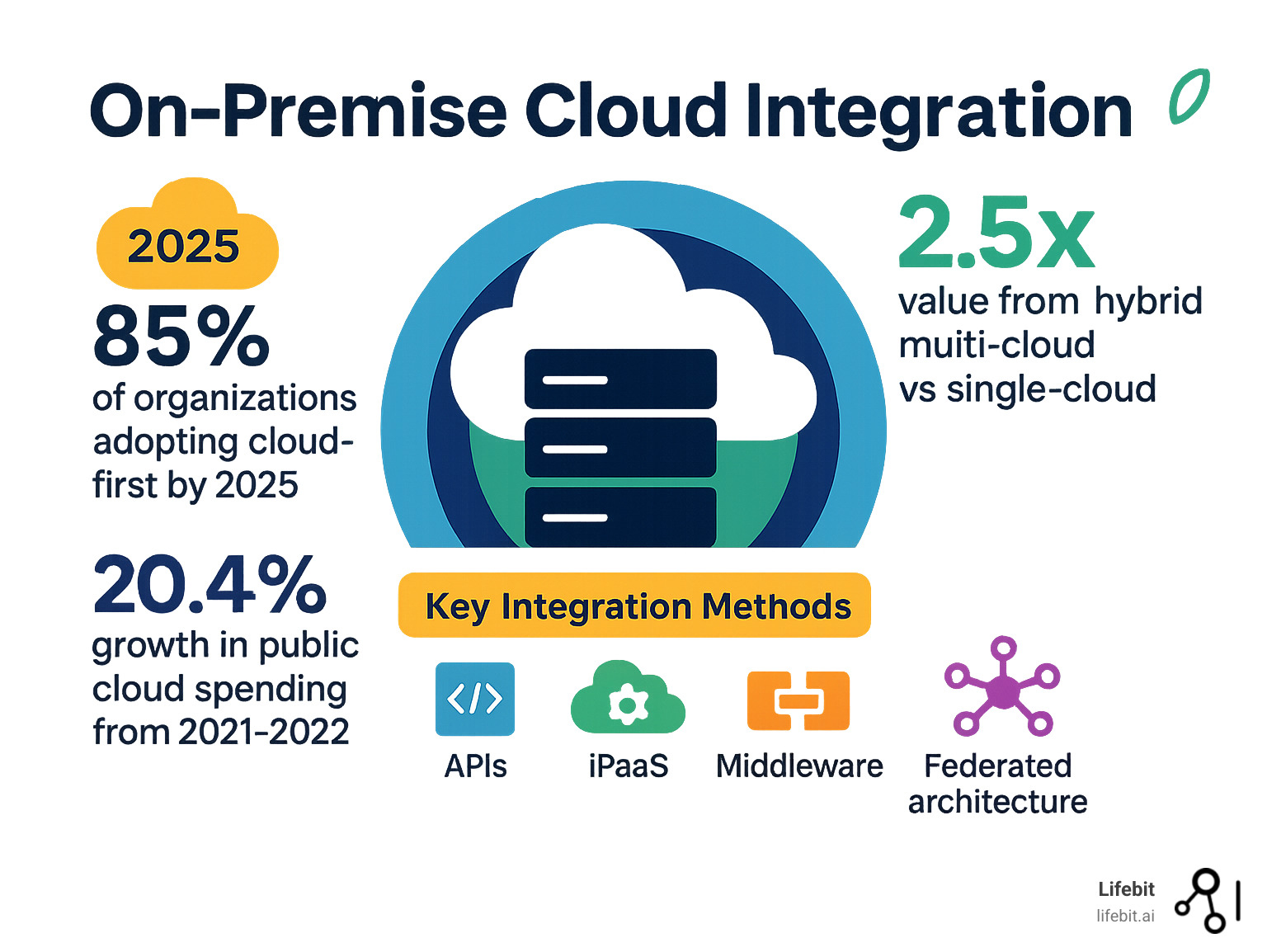

Key Integration Methods:

- APIs and Webhooks – Real-time data synchronization

- Integration Platform as a Service (iPaaS) – Centralized connection management

- Middleware Solutions – Bridge legacy and modern applications

- Federated Architecture – Analysis-to-data approach for sensitive data

Primary Benefits:

- Maintain data sovereignty

- Optimize resource utilization and reduce costs

- Enable secure, distributed collaboration

- Accelerate innovation without compromising compliance

Digital infrastructure has evolved rapidly, with research showing that companies gain up to 2.5 times the value from a hybrid multi-cloud approach compared to single-cloud strategies. Furthermore, 85% of organizations are expected to adopt cloud-first technology by 2025.

For organizations in pharma, the public sector, and other regulated fields, the challenge is connecting systems while ensuring compliance, data quality, and real-time analytics across silos. Traditional integration methods, which typically copy data into one central location, often fall short.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We pioneer federated on-premise cloud integration for genomics and biomedical data. With over 15 years in computational biology and health-tech, I’ve seen how the right integration strategy can transform global healthcare through secure, compliant data analysis.

The Best of Both Worlds: Understanding On-Premise, Cloud, and Hybrid

Choosing an IT infrastructure involves balancing control, flexibility, and cost. On-premise cloud integration combines on-premise and cloud deployment models into a hybrid architecture that can be more capable than either model on its own.

Each model has distinct characteristics:

| Feature | On-Premise Solutions | Cloud Solutions | Hybrid Solutions |
|---|---|---|---|
| Deployment | Installed and managed on local servers within the company’s physical premises. | Hosted and managed by a third-party provider, accessed over the internet. | Combination of on-premise servers and third-party cloud services, orchestrated to work together. |
| Cost Structure | High upfront capital expenditure (CapEx) for hardware, software licenses, and setup. Ongoing operational costs for maintenance, power, and cooling. | Lower upfront costs, primarily operational expenditure (OpEx) through subscription or pay-as-you-go models. | Mix of CapEx and OpEx. Potential for cost optimization but can be complex to manage and predict. |
| Control | Complete control over hardware, software, data, and security protocols. Full customization capabilities. | Limited control over underlying infrastructure; control extends to applications and configurations within the cloud environment. | Balanced control. Retain full control over on-premise assets while leveraging provider-managed cloud infrastructure. |
| Security | In-house security measures; perceived higher security for sensitive data due to physical control. Responsibility entirely on the organization. | Relies on provider’s security measures; shared responsibility model. Concerns about data ownership and potential breaches at scale. | Complex security model. Requires consistent policies across environments and secure connections between them. Responsibility is shared between the organization and the cloud provider. |
| Scalability | Limited by physical hardware capacity; scaling up requires significant investment, time, and manual effort. | Highly elastic and on-demand; resources can be scaled up or down quickly and automatically. | Best of both worlds. Scale on-premise workloads to the cloud on demand (cloud bursting). Highly flexible. |
| Flexibility | Less flexible for rapid changes; updates and new deployments can be slow and complex. | Highly flexible and agile; enables faster deployment of new services and applications. | High flexibility to place workloads in the optimal environment based on performance, cost, and security needs. |
| Maintenance | Requires dedicated in-house IT staff for patching, backups, troubleshooting, and infrastructure management. | Managed by the cloud provider; includes updates, security patches, and infrastructure SLAs. | Requires skilled IT teams to manage both on-premise infrastructure and cloud services, as well as the integration layer connecting them. |
| Disaster Recovery | Requires costly and complex in-house disaster recovery planning and redundant systems. | Often includes built-in disaster recovery options and data redundancy across multiple locations. | Enhanced DR capabilities. The cloud can be used as a cost-effective disaster recovery site for on-premise workloads. |

On-Premise: The Fortress of Control

With on-premise software, applications and data reside on servers within your own facilities. This model provides complete data sovereignty, which is often a legal requirement in regulated industries dealing with sensitive biomedical data. You have full control to customize security protocols and ensure legacy systems function as needed. This makes it the default choice for core legacy applications that are difficult to migrate, or for workloads with predictable processing demands where the hardware has already been paid for.

The main drawbacks are high upfront capital expenditure for hardware and software, plus the need for a dedicated IT team for maintenance. Expansion is also slower, as it requires purchasing and configuring new physical servers.

The Cloud: A Universe of Scalability

Cloud computing offers access to applications and data hosted on a provider’s servers via the internet. Its primary advantage is agility: computing resources can be scaled up or down in minutes. The pay-as-you-go model replaces large upfront capital costs with operational expenditure that tracks actual usage.

Cloud providers also take on infrastructure maintenance and typically offer built-in disaster recovery options. Cloud services come in three main models:

- Infrastructure-as-a-Service (IaaS) provides raw computing resources such as virtual machines and storage (e.g., Amazon EC2, Google Compute Engine).

- Platform-as-a-Service (PaaS) offers a development and deployment environment that abstracts away the underlying infrastructure (e.g., Heroku, AWS Elastic Beanstalk).

- Software-as-a-Service (SaaS) delivers ready-to-use applications over the internet (e.g., Salesforce, Office 365).

A key risk across all three is vendor lock-in, where migrating away from a provider becomes difficult and costly.

The model’s popularity is reflected in Gartner’s forecast that global end-user spending on public cloud services would grow 20.4% to $494.7 billion in 2022.

The Hybrid Cloud: Your Strategic Middle Ground

A hybrid cloud solution connects your on-premise infrastructure with private and public cloud services. This lets you keep sensitive data on-premise while using scalable cloud resources for development, testing, or peak loads. Common use cases include cloud bursting, where an application runs on-premise but ‘bursts’ into the public cloud when demand spikes, and tiered storage, where less frequently accessed data is moved to cheaper cloud storage. A hybrid setup also supports phased migration, letting organizations move workloads incrementally instead of through a disruptive ‘big bang’ cutover.
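The cloud-bursting pattern boils down to a placement policy. The sketch below is a minimal illustration under stated assumptions: the `Job` fields, the core-count capacity model, and the rule that sensitive jobs wait on-premise rather than burst are all hypothetical choices, not a standard scheduler API.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cpu_cores: int
    contains_sensitive_data: bool


def place_job(job: Job, free_onprem_cores: int) -> str:
    """Decide where one job runs (hypothetical policy for illustration)."""
    fits_locally = job.cpu_cores <= free_onprem_cores
    if job.contains_sensitive_data:
        # Sensitive workloads never leave local infrastructure in this policy;
        # if there is no room right now, they wait for on-premise capacity.
        return "on-premise" if fits_locally else "on-premise-queued"
    # Non-sensitive work runs locally while capacity allows and
    # "bursts" to the public cloud once the local cluster is full.
    return "on-premise" if fits_locally else "cloud"


def schedule(jobs, onprem_capacity: int) -> dict:
    """Place jobs in order, consuming on-premise cores as they are assigned."""
    free = onprem_capacity
    placements = {}
    for job in jobs:
        target = place_job(job, free)
        if target == "on-premise":
            free -= job.cpu_cores
        placements[job.name] = target
    return placements
```

In practice this decision is made by a workload scheduler or orchestrator; the point of the sketch is that data sensitivity is checked before capacity.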

This strategy improves business continuity and enables cost optimization. It is particularly relevant in healthcare, where Health Data Interoperability must balance access with security.

While managing a hybrid environment is more complex, requiring skilled teams to handle data flows and security policies, the strategic advantages often outweigh the challenges.

Crafting Your On-premise Cloud Integration Strategy

Developing an on-premise cloud integration strategy requires careful planning. Your plan must be rooted in business objectives, assess technical feasibility, identify risks, and include contingency plans. The goal is to create a durable foundation for your organization’s future.

Step 1: Define Goals and Choose Your Integration Method

First, clarify why you are integrating. Common goals include:

- Data analytics: Unifying scattered data for a complete operational view.

- Disaster recovery: Using the cloud as a secure backup for on-premise data.

- Application modernization: Connecting legacy systems to modern cloud applications.

- Workflow automation: Eliminating manual processes.

- Improved collaboration: Enabling seamless work across distributed teams.

With clear goals, you can select the right integration method.

- APIs (Application Programming Interfaces) are the modern standard for connecting applications. They define a set of rules for how software components interact. REST (Representational State Transfer) APIs, which are lightweight and use standard HTTP methods, are the most common choice for web and mobile applications. SOAP (Simple Object Access Protocol) APIs, with their formal WSDL contracts and WS-Security extensions, remain in use in some enterprise environments with strict security and reliability requirements. Webhooks are a form of ‘reverse API’: instead of the on-premise system repeatedly polling for changes, a cloud application pushes data to it as soon as a specific event occurs.

- Middleware is software that sits between applications (and between applications and the underlying operating system or network), providing shared services such as messaging, connectivity, and data translation. In integration, it functions as a translation layer. Message-Oriented Middleware (MOM), for example, uses message queues to enable asynchronous communication between disparate systems, so messages are still delivered if the recipient system is temporarily unavailable. Another type is the Enterprise Service Bus (ESB), which acts as a central hub for routing and transforming messages within a service-oriented architecture.

- Integration Platform as a Service (iPaaS) is a cloud-based solution that centralizes the management of integrations. Leading platforms like MuleSoft, Dell Boomi, and Zapier provide a graphical interface, pre-built connectors for hundreds of popular SaaS and on-premise applications, and tools for data mapping and transformation. This significantly accelerates development and reduces the need for custom code.

- Event-driven architecture is an approach where services communicate by producing and consuming events. An ‘event’ is a significant change in state, such as a new customer order or an updated inventory level. This decoupled approach is highly scalable and resilient, making it ideal for complex, distributed systems that need to respond to changes in real time.
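To make the webhook and event-driven patterns concrete, here is a minimal Python sketch of the receiving side on an on-premise system. It assumes the sender signs the raw request body with HMAC-SHA256 using a shared secret and that the JSON payload carries a `type` field; both are assumptions for illustration, since each provider documents its own signing scheme and payload format. `WEBHOOK_SECRET`, `on`, and `handle_webhook` are hypothetical names, and the in-process handler registry stands in for a real event broker.

```python
import hashlib
import hmac
import json
from collections import defaultdict

# Hypothetical shared secret agreed with the cloud application out of band.
WEBHOOK_SECRET = b"hypothetical-shared-secret"

# Tiny in-process event bus: event type -> list of handler functions.
_handlers = defaultdict(list)


def on(event_type):
    """Decorator that subscribes a handler to one event type."""
    def register(fn):
        _handlers[event_type].append(fn)
        return fn
    return register


def verify_signature(body: bytes, signature_hex: str) -> bool:
    """Check the HMAC-SHA256 signature sent alongside the raw body."""
    expected = hmac.new(WEBHOOK_SECRET, body, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature_hex)


def handle_webhook(body: bytes, signature_hex: str) -> int:
    """Return an HTTP-style status code for one incoming webhook request."""
    if not verify_signature(body, signature_hex):
        return 401  # reject unsigned or tampered payloads
    event = json.loads(body)
    # .get() on the defaultdict does not create entries for unknown types.
    for handler in _handlers.get(event.get("type"), []):
        handler(event)
    return 202  # accepted


# Example subscriber: records synced order IDs (stands in for a database write).
synced_orders = []


@on("order.created")
def sync_order(event):
    synced_orders.append(event["id"])
```

Wiring `handle_webhook` into an actual HTTP server, and processing events asynchronously so the sender gets a fast response, is left out to keep the example short.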

Step 2: Plan for Security and Regulatory Compliance

Integrating on-premise and cloud environments extends your security perimeter. Key security measures include:

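- Data encryption: Essential for data both in transit (using TLS, the successor to the deprecated SSL protocol, often combined with VPNs for site-to-site links) and at rest (for example, encrypted disks and databases).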

- Access controls: Implement multi-factor authentication and role-based permissions to ensure only authorized users can access sensitive information.

- Network security: Use firewalls, intrusion detection systems, and secure connections to defend against threats.
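The access-control measure can be sketched as a deny-by-default check that combines role-based permissions with an MFA requirement for higher-risk actions. The role names, permission strings, and `MFA_REQUIRED` set below are hypothetical; in a real deployment they would come from an identity provider or policy engine rather than being hard-coded.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"dataset:read"},
    "data_steward": {"dataset:read", "dataset:write"},
    "admin": {"dataset:read", "dataset:write", "user:manage"},
}

# Hypothetical set of permissions that require a completed second factor.
MFA_REQUIRED = {"dataset:write", "user:manage"}


@dataclass(frozen=True)
class Session:
    user: str
    roles: frozenset
    mfa_verified: bool


def is_authorized(session: Session, permission: str) -> bool:
    """Allow only if some role grants the permission and MFA rules are met."""
    granted = set()
    for role in session.roles:
        # Unknown roles grant nothing (deny by default).
        granted |= ROLE_PERMISSIONS.get(role, set())
    if permission not in granted:
        return False
    if permission in MFA_REQUIRED and not session.mfa_verified:
        return False  # sensitive action attempted without a second factor
    return True
```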

Compliance with regulations like GDPR and HIPAA is non-negotiable. Your integration must adhere to the same standards as your existing systems. For example, under GDPR, any integration involving the personal data of people in the EU must ensure that transfers outside the European Economic Area are legally protected, typically through an adequacy decision or mechanisms like Standard Contractual Clauses (SCCs). The integration must also support data subject rights, such as the right to access or erase data. For HIPAA in the US, integrations handling Protected Health Information (PHI) should encrypt that data, must keep audit logs of access to it, and require a Business Associate Agreement (BAA) with any cloud provider that stores or processes PHI on your behalf.
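HIPAA requires audit controls but does not prescribe how audit logs are built. One common technique for making a log tamper-evident is hash chaining, sketched below in Python: each entry stores the hash of the previous one, so altering any earlier record breaks verification. The `AuditLog` class and its fields are hypothetical, and a production system would persist entries to write-once storage or a SIEM rather than keep them in memory.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained access log (illustrative, in memory)."""

    def __init__(self):
        self.entries = []

    def record(self, user, action, resource, when=None):
        when = when or datetime.now(timezone.utc)
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": when.isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "prev_hash": prev_hash,
        }
        # The hash covers this entry's content plus the previous hash, so
        # editing or deleting an earlier entry breaks every later link.
        payload = json.dumps(entry, sort_keys=True).encode()
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode()
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```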

For sensitive biomedical data, Trusted Research Environments provide an established security framework. Following Health Data Standardisation practices helps integrated systems interoperate while meeting regulatory requirements.

Step 3: Analyze Cost Implications and TCO

Understanding the Total Cost of Ownership (TCO) is crucial. Consider both upfront and long-term costs. The objective is not to find the cheapest solution but to optimize your investment. A solution that automates manual work may have a higher initial cost but a better long-term ROI. Be mindful of cloud pricing models to avoid unexpected “bill shock” from data transfer or resource usage.

A detailed TCO analysis should model costs over a 3-5 year period and include:

- Direct Costs:

  - Cloud Services: Subscription fees (e.g., per user, per month), pay-as-you-go charges for compute, storage, and data egress (transferring data out of the cloud).

  - iPaaS/Middleware: Licensing or subscription costs for the integration platform.

  - Hardware: Any necessary upgrades to on-premise servers, network equipment, or security appliances.

  - Personnel: Salaries for developers, architects, and administrators.

- Indirect Costs:

  - Training: Costs to upskill the existing team or hire new talent with hybrid cloud expertise.

  - Migration: The one-time cost of moving data and refactoring applications.

  - Downtime: Potential business losses during migration or from integration failures.

  - Governance and Compliance: Costs associated with security audits and maintaining compliance.
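A TCO model along these lines can start as a simple calculation that adds one-time costs to recurring costs over the chosen horizon, optionally with year-on-year growth for usage-based items such as compute or data egress. The sketch below assumes that structure; `CostItem`, its fields, and the figures in the usage note are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    name: str
    one_time: float = 0.0       # e.g., migration, hardware upgrades, initial training
    annual: float = 0.0         # e.g., subscriptions, salaries, audits
    annual_growth: float = 0.0  # e.g., 0.10 if usage-based costs grow 10% a year


def total_cost_of_ownership(items, years):
    """One-time costs plus recurring costs (with optional growth) over `years`."""
    total = 0.0
    for item in items:
        total += item.one_time
        for year in range(years):
            # Year 0 costs `annual`; each later year is scaled by the growth rate.
            total += item.annual * (1 + item.annual_growth) ** year
    return total
```

With hypothetical figures of a 50,000 one-time migration, a 30,000 per year iPaaS subscription, and cloud usage starting at 20,000 per year and growing 10% annually, the three-year total comes to 206,200. Extending the model with a per-year breakdown makes it easier to compare against a status-quo baseline.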

A Step-by-Step Guide to Executing Your Integration

With a strategy in place, execution begins. This phase involves careful data migration, system testing, user training, and a well-planned go-live process. The goal is to ensure data integrity, manage roadblocks, and create a seamless user experience where data is accessible regardless of its location.

How to ensure seamless on-premise cloud integration

Data synchronization is the core of a successful on-premise cloud integration. To maintain data consistency, choose the right sync method:

- Real-time sync: Uses APIs or event-driven architectures for critical data that requires immediate updates, such as inventory levels or customer orders.

- Batch processing: Suitable for large volumes of less time-sensitive data, like nightly reports. Batches can run during off-peak hours and avoid per-record request overhead, but data is only as fresh as the most recent run.
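Batch synchronization is often implemented incrementally with a high-water mark: each run copies only rows changed since the previous run and then advances the mark. The function below is a minimal sketch of that idea, assuming each record carries an `updated_at` timestamp; the function name and record shape are hypothetical.

```python
from datetime import datetime


def incremental_batch(records, last_watermark: datetime):
    """Select records changed since the previous run and advance the watermark.

    `records` is an iterable of dicts with an 'updated_at' datetime. Against a
    database this would typically be a query filtering on updated_at.
    """
    changed = [r for r in records if r["updated_at"] > last_watermark]
    changed.sort(key=lambda r: r["updated_at"])  # apply changes in order
    # Keep the old watermark when nothing changed, so no window is skipped.
    new_watermark = changed[-1]["updated_at"] if changed else last_watermark
    return changed, new_watermark
```

With a strict `>` comparison, a row committed later but stamped with exactly the watermark time is skipped; systems that cannot rule this out often use `>=` with de-duplication, or a monotonically increasing change sequence instead of timestamps.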

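Master Data Management (MDM) is vital for establishing a single source of truth for core business data (e.g., customer or product information) across all systems. Also be prepared to address network latency between on-premise and cloud environments, which can degrade application performance. Mitigations include dedicated private connections (such as AWS Direct Connect or Azure ExpressRoute), caching frequently read data close to the applications that use it, and consolidating analytical workloads in a centralized repository such as a Data Warehouse so that queries do not repeatedly cross the network.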
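A core MDM task is merging the same entity from several systems into one “golden record” using survivorship rules. The sketch below assumes one simple rule, source priority: for each attribute, take the first non-empty value from the most trusted system. The source names and fields are hypothetical, and real MDM tools also handle record matching, fuzzy de-duplication, and recency-based rules.

```python
def build_golden_record(source_records, source_priority):
    """Merge one entity's records from several systems into a golden record.

    source_priority lists system names from most to least trusted; every
    record's 'source' must appear in it (list.index raises ValueError otherwise).
    """
    ranked = sorted(source_records, key=lambda r: source_priority.index(r["source"]))
    golden = {}
    for record in ranked:
        for key, value in record.items():
            if key == "source" or value in (None, ""):
                continue  # skip metadata and empty values
            # setdefault keeps the first value seen, i.e. the most trusted one.
            golden.setdefault(key, value)
    return golden
```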

Common challenges in on-premise cloud integration

Anticipating common challenges can prevent project delays:

- Data silos: Silos often persist when integration is treated as a purely technical task. Without corresponding business process alignment and a strong data governance framework that defines data ownership, standards, and quality rules, systems may be connected while the data remains functionally siloed and untrustworthy.

- Legacy system constraints: These systems may not have APIs, or their APIs may be poorly documented and inflexible. Integration might require specialized adapters, screen scraping techniques, or even direct database connections, all of which are brittle and create long-term maintenance burdens. A thorough assessment of the legacy system’s capabilities is critical during the planning phase.

- API management: As the number of APIs grows, organizations face ‘API sprawl.’ Without a centralized API gateway and management platform, it becomes difficult to enforce security policies, monitor performance, control access, and manage the API lifecycle (e.g., versioning and deprecation). This can lead to security vulnerabilities and inconsistent performance.

- Scalability bottlenecks: A common scenario is when a scalable, elastic cloud application floods an on-premise legacy database with more requests than it can handle, causing it to crash. Mitigating this requires careful architecture design, such as implementing a message queue to buffer requests and throttle the data flow to the on-premise system, or using caching layers to reduce the load.

- Complex maintenance: Managing a hybrid environment requires a ‘full-stack’ understanding. Teams need skills in on-premise infrastructure (servers, networking, storage), cloud platforms (AWS, Azure, GCP), containerization (Docker, Kubernetes), infrastructure-as-code (Terraform, Ansible), and integration technologies (APIs, iPaaS). This diverse skill set can be difficult and expensive to build and retain.

- Vendor lock-in: Heavy reliance on one provider’s proprietary services makes switching difficult and costly. Mitigation involves using open-source technologies and standards (like Kubernetes for container orchestration or standard SQL for databases) where possible. It also means designing applications with an abstraction layer between the application code and the cloud provider’s proprietary services, making it easier to migrate to another provider or bring a workload back on-premise if needed.
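The queue-and-throttle mitigation for scalability bottlenecks can be illustrated with a bounded buffer drained by a token bucket. In production this role is usually played by a message broker plus a rate-limited consumer; the in-process `ThrottledForwarder` class below is a hypothetical sketch of the logic only, with time passed in explicitly so the behaviour is easy to test.

```python
from collections import deque


class ThrottledForwarder:
    """Buffer requests bound for an on-premise system and release them at a
    limited rate using a token bucket. A real service would read the clock
    with time.monotonic() instead of receiving `now` as an argument.
    """

    def __init__(self, rate_per_sec, burst, max_buffer, send):
        self.rate = rate_per_sec    # sustained requests/second the backend tolerates
        self.capacity = burst       # short bursts allowed above the sustained rate
        self.tokens = float(burst)
        self.buffer = deque()
        self.max_buffer = max_buffer
        self.send = send            # callable that actually calls the backend
        self.last = 0.0

    def submit(self, request):
        """Queue a request; return False (backpressure) if the buffer is full."""
        if len(self.buffer) >= self.max_buffer:
            return False
        self.buffer.append(request)
        return True

    def drain(self, now):
        """Forward as many buffered requests as the token bucket allows."""
        elapsed = max(0.0, now - self.last)
        # Refill tokens for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        sent = 0
        while self.buffer and self.tokens >= 1:
            self.send(self.buffer.popleft())
            self.tokens -= 1
            sent += 1
        return sent
```

A `False` from `submit` is the backpressure signal: the cloud side should retry later rather than push more load onto the on-premise system.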

The Human Element: Change Management and User Training

A successful integration project is not just about technology; it’s about people. Employees may be resistant to new workflows or tools. A formal change management plan is essential to ensure smooth adoption.

- Communicate Early and Often: Clearly explain the ‘why’ behind the integration: the business benefits and how it will improve daily work. Address concerns and set realistic expectations.

- Involve Key Stakeholders: Include end-users from different departments in the planning and testing phases. Their feedback is invaluable for designing user-friendly workflows and building advocacy for the new system.

- Provide Comprehensive Training: Don’t just train users on how to use the new software; train them on the new business processes. Offer training in various formats (e.g., live workshops, on-demand videos, written guides) to accommodate different learning styles.

- Establish a Support System: Designate super-users or champions who can provide peer support. Ensure the IT help desk is prepared to handle questions and troubleshoot issues post-launch.

Best practices for monitoring and testing

Continuous monitoring and testing are essential for long-term success.

- Performance and load testing: Simulate real-world and peak traffic conditions to identify system limitations and breaking points before they impact users.

- Error logging and alerting: Implement automated alerts to detect and diagnose integration failures quickly.

- Key Performance Indicators (KPIs): Track integration health metrics, such as data sync latency and API success rates, alongside business-focused metrics that show whether the integration delivers its intended value.

- Automated monitoring: Use tools that provide end-to-end visibility across the hybrid environment with real-time dashboards and predictive analytics.
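As a sketch of automated alerting on integration KPIs, the function below computes an API success rate and a 95th-percentile sync latency from call records and reports any breached thresholds. The record format and the default thresholds (99% success, 5 seconds at p95) are assumptions for illustration; monitoring platforms provide these calculations out of the box.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_kpis(calls, max_p95_latency_s=5.0, min_success_rate=0.99):
    """Compute integration KPIs and list any breached thresholds.

    Each call is a dict like {'ok': bool, 'sync_latency_s': float}.
    """
    success_rate = sum(c["ok"] for c in calls) / len(calls)
    p95 = percentile([c["sync_latency_s"] for c in calls], 95)
    alerts = []
    if success_rate < min_success_rate:
        alerts.append(f"API success rate {success_rate:.1%} below {min_success_rate:.0%}")
    if p95 > max_p95_latency_s:
        alerts.append(f"p95 sync latency {p95:.1f}s above {max_p95_latency_s:.1f}s")
    return {"success_rate": success_rate, "p95_latency_s": p95, "alerts": alerts}
```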

A strong monitoring strategy provides confidence in your integrated environment and enables continuous improvement.

Advanced Integration: The Rise of Federated Architecture

For organizations handling highly sensitive data like genomic sequences or patient records, traditional on-premise cloud integration models may not be sufficient. This is where federated architecture provides an alternative: instead of moving data to the analysis, it brings the analysis to the data.

This approach is crucial for biomedical data, where privacy concerns and regulations often prohibit data movement. It solves the compliance challenges of moving patient data across borders or consolidating datasets from different institutions.

What is a federated approach to on-premise cloud integration?

A federated approach shifts from a “data-to-analysis” model to an “analysis-to-data” philosophy. Sensitive information remains in its secure on-premise or private cloud environment. Using federated learning and decentralized strategies, computations run where the data resides. Only aggregated results or models are shared, never the raw data itself.
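The “analysis-to-data” idea can be illustrated with pooled summary statistics: each site computes counts and sums locally, and only those aggregates travel to a coordinator that derives the global mean and standard deviation. This is federated analysis rather than federated learning (which shares model updates instead of statistics), and it is not a description of any particular platform’s implementation. The `MIN_CELL_SIZE` threshold is a hypothetical disclosure-control rule.

```python
import math
from dataclasses import dataclass

MIN_CELL_SIZE = 10  # hypothetical minimum number of records per released summary


@dataclass
class SiteSummary:
    """Aggregates a site is allowed to release (no raw rows)."""
    n: int
    total: float
    total_sq: float


def local_summary(values):
    """Runs inside each data owner's environment; only the summary leaves."""
    if len(values) < MIN_CELL_SIZE:
        raise ValueError("too few records to release a summary safely")
    return SiteSummary(len(values), sum(values), sum(v * v for v in values))


def pooled_mean_and_sd(summaries):
    """Runs at the coordinator; combines site summaries into global statistics."""
    n = sum(s.n for s in summaries)
    total = sum(s.total for s in summaries)
    total_sq = sum(s.total_sq for s in summaries)
    mean = total / n
    variance = (total_sq - n * mean * mean) / (n - 1)  # sample variance
    return mean, math.sqrt(variance)
```

The sum-of-squares formula is compact but can lose precision for large values; production code would typically combine per-site means and variances with a numerically stable pairwise update.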

This model is built on strong data governance, ensuring every computation is controlled and compliant. For example, a Federated Architecture in Genomics allows researchers to query distributed datasets without moving any DNA sequences. This respects data sovereignty, keeping data within its original jurisdiction while enabling collaborative research that would otherwise be impossible.

Benefits for Sensitive Data Ecosystems

Federated architecture offers game-changing advantages for sensitive data:

- Improved security: Because data never leaves its secure environment, the risk of exposure is greatly reduced. This is critical for genomic data, patient records, and proprietary research.

- Regulatory compliance: Because raw data does not move across borders, complying with complex regulations like GDPR and HIPAA becomes much simpler, and data residency requirements are met by design. Aggregated outputs still need review to ensure they cannot identify individuals.

- Collaborative research without moving data: Researchers can perform complex Federated Data Analysis on distributed datasets, enabling large-scale studies previously blocked by data sharing restrictions.

- Real-time insights: Processing data at its source avoids time-consuming bulk data transfers, delivering faster results.

Federated architecture is a strong fit for organizations that cannot fully adopt public cloud due to security or legacy constraints. Our Healthcare Data Management Platform and the Lifebit CloudOS Genomic Data Federation demonstrate how this approach enables secure access to global biomedical datasets without centralization, opening up collaborative possibilities beyond traditional integration.

Frequently Asked Questions about On-Premise and Cloud Integration

Here are answers to common questions about on-premise cloud integration, based on our experience with biopharma and public health organizations.

What are the biggest security risks when integrating on-premise and cloud environments?

The primary risks are data breaches during transit, misconfigured access controls, and insecure APIs that can be exploited by attackers. Another significant risk is an expanded attack surface. Every connection point between on-premise and cloud is a potential vulnerability. A major challenge is maintaining consistent security policies across both environments. To mitigate these risks, a robust strategy must include end-to-end encryption, strict identity and access management (IAM) with multi-factor authentication, and regular security audits and penetration testing.
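For the encryption-in-transit part of that strategy, here is a minimal Python sketch of client-side TLS settings for calls between on-premise and cloud components, using the standard `ssl` module. The function name and the optional private CA bundle are assumptions; mutual TLS, where the client also presents its own certificate via `load_cert_chain`, is a common addition not shown here.

```python
import ssl


def make_client_tls_context(ca_bundle=None):
    """Client-side TLS settings for on-premise <-> cloud connections."""
    # create_default_context turns on certificate verification and hostname
    # checking; ca_bundle may point at a private CA used for internal services.
    ctx = ssl.create_default_context(cafile=ca_bundle)
    # Refuse legacy protocol versions (SSL 3.0, TLS 1.0 and 1.1).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to `wrap_socket(sock, server_hostname=...)` or to any HTTP client that accepts an `SSLContext`.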

How do I choose the right integration tools for my business?

The right tool depends on your needs. For simple, point-to-point connections, custom APIs can be effective. For complex ecosystems with multiple applications (e.g., ERP, CRM, data warehouses), an Integration Platform as a Service (iPaaS) is invaluable. iPaaS solutions offer pre-built connectors, workflow automation, and centralized management, which simplifies development and reduces the learning curve for your team. When evaluating iPaaS solutions, consider factors like the breadth of their connector library, ease of use, scalability, and security certifications.

Can I integrate a legacy on-premise system with a modern cloud application?

Yes, this is a very common integration scenario and a key part of an application modernization strategy. It is achieved using middleware solutions or iPaaS platforms that act as a bridge. These tools translate data formats and communication protocols between the older system and the modern cloud application’s API. This allows you to leverage advanced cloud capabilities like AI and analytics on your historical data without replacing valuable legacy systems or disrupting established workflows.
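The translation step such a bridge performs can be sketched as an adapter that turns a fixed-width legacy export record into JSON for a cloud API. The record layout, field names, and JSON keys below are hypothetical; real legacy formats are defined by the system’s own documentation.

```python
import json
from datetime import datetime

# Hypothetical fixed-width layout: field -> (start, end) offsets, end exclusive.
LEGACY_LAYOUT = {
    "patient_id": (0, 8),
    "surname": (8, 28),
    "birth_date": (28, 36),  # stored as YYYYMMDD
    "ward_code": (36, 40),
}


def legacy_to_json(line: str) -> str:
    """Translate one fixed-width legacy record into a JSON document."""
    raw = {name: line[start:end].strip() for name, (start, end) in LEGACY_LAYOUT.items()}
    # strptime both parses and validates the legacy date; isoformat gives YYYY-MM-DD.
    birth = datetime.strptime(raw["birth_date"], "%Y%m%d").date().isoformat()
    return json.dumps({
        "patientId": raw["patient_id"],
        "surname": raw["surname"].title(),
        "birthDate": birth,
        "wardCode": raw["ward_code"] or None,  # blank legacy field -> null
    })
```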

What is the difference between middleware and iPaaS?

While both connect systems, their approach and scope differ. Middleware is typically software that you install and manage on-premise (though cloud-based versions exist). It’s often a more developer-centric tool, providing the underlying plumbing like message queues or an enterprise service bus (ESB) to build custom integrations. iPaaS, on the other hand, is a cloud-native, subscription-based service that provides a more complete, higher-level platform for integration. It includes a graphical interface, pre-built connectors, and management tools that are designed to be used by both developers and less-technical ‘citizen integrators,’ significantly speeding up the development of common integration patterns.

How do you manage data governance across on-premise and cloud systems?

Managing data governance in a hybrid environment is complex but critical. It requires a unified framework that applies consistent policies regardless of where data resides. Key practices include:

- Establish a Data Governance Council: A cross-functional team responsible for defining data policies, standards, and ownership.

- Create a Centralized Data Catalog: A tool that inventories all data assets across the hybrid landscape, documenting their location, lineage, quality, and classification (e.g., sensitive, public).

- Implement Master Data Management (MDM): As mentioned earlier, MDM creates a single, authoritative source for critical data entities like ‘customer’ or ‘product,’ preventing inconsistencies.

- Automate Policy Enforcement: Use tools to automatically apply data quality rules, access controls, and data masking policies across both on-premise and cloud environments.
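Automated policy enforcement can be sketched as a function that looks up each column’s classification in a catalog and masks values the caller is not cleared to see. The catalog entries, clearance levels, and `PSEUDONYM_KEY` below are hypothetical; note the deny-by-default choice of treating unclassified columns as sensitive.

```python
import hashlib
import hmac

# Hypothetical catalog entries: column name -> classification.
CATALOG = {
    "patient_id": "identifier",
    "email": "sensitive",
    "diagnosis_code": "sensitive",
    "region": "public",
}

# Hypothetical key; in practice it would come from a secrets manager.
PSEUDONYM_KEY = b"hypothetical-key-from-a-secrets-manager"


def mask_record(record: dict, clearance: str) -> dict:
    """Return a copy of `record` masked according to catalog classification.

    Clearance "restricted" may see sensitive columns; any other clearance sees
    only public ones. Identifiers are always replaced by a keyed pseudonym.
    """
    masked = {}
    for column, value in record.items():
        label = CATALOG.get(column, "sensitive")  # unclassified -> sensitive
        if label == "identifier":
            # Keyed hash: stable across runs (so joins still work) but cannot
            # be recomputed by someone who does not hold the key.
            digest = hmac.new(PSEUDONYM_KEY, str(value).encode(), hashlib.sha256)
            masked[column] = digest.hexdigest()[:16]
        elif label == "sensitive" and clearance != "restricted":
            masked[column] = "***"
        else:
            masked[column] = value
    return masked
```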

Conclusion

Effective on-premise cloud integration is not about choosing between on-premise and cloud, but about creating a harmonious hybrid strategy. This approach combines the control and security of on-premise systems with the scalability and flexibility of the cloud, offering a significant strategic advantage.

By blending these two worlds, you can future-proof your IT infrastructure and make better data-driven decisions. The key is a well-planned integration strategy that prioritizes security, data governance, and performance.

For organizations handling highly sensitive information, such as in genomics or public health, federated architecture is the next frontier. It enables secure analysis and collaboration across distributed data without compromising data sovereignty.

If you are facing complex biomedical data challenges, explore how a next-generation federated platform can solve your integration puzzles. Learn more about the Lifebit Federated Biomedical Data Platform and see how to bring your data ecosystem together while keeping every component secure and in its place.