The Secure Lab: Choosing Your Research Data Management Platform

Why Research Data Management Can Make or Break Your Research Program

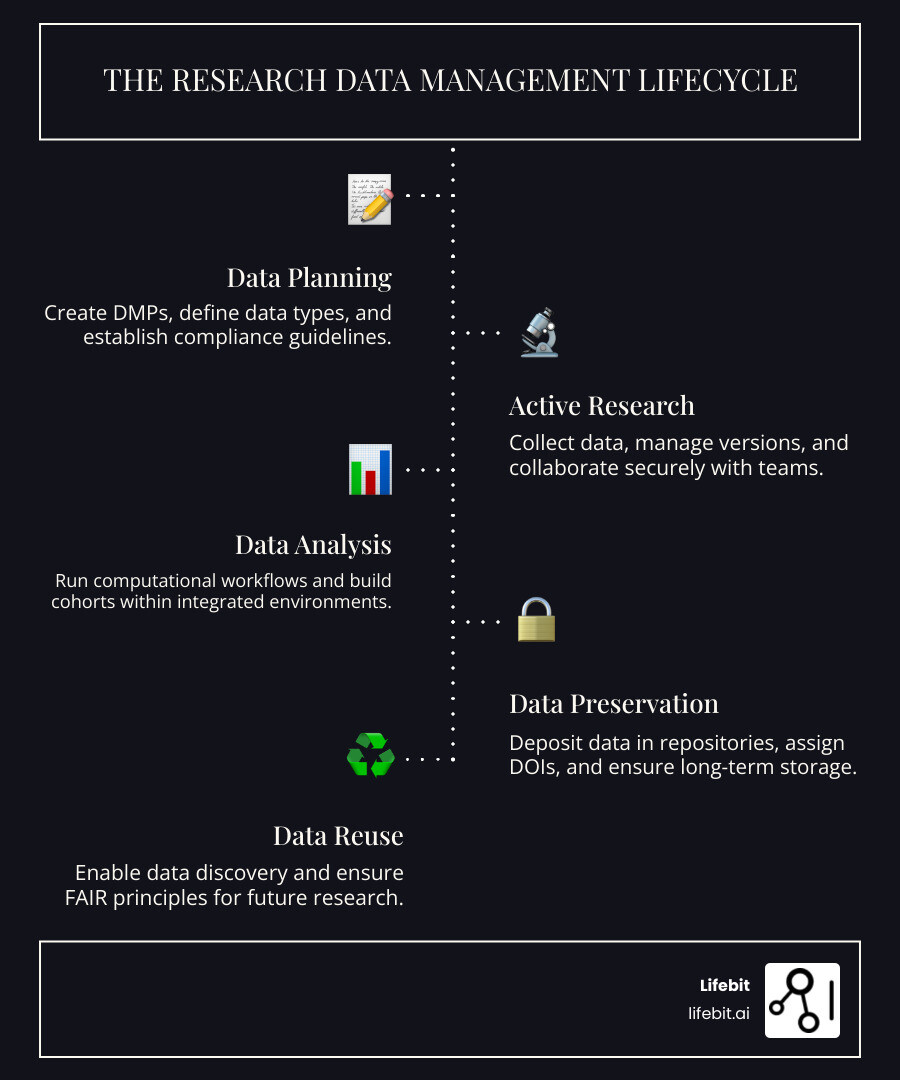

A research data management platform is a system for collecting, storing, organizing, analyzing, and preserving data throughout its lifecycle. These platforms are essential for meeting funder mandates, enabling secure collaboration, ensuring compliance, and making research reproducible.

Key capabilities of a modern research data management platform:

- Data Management Plans (DMPs): Tools to create funder-compliant plans.

- Secure Collaboration: Controlled access for teams across institutions.

- Analysis Environments: Integrated compute for R, Python, and other tools.

- Compliance Support: Built-in controls for HIPAA, Good Clinical Practice (GCP), and other policies.

- Data Sharing & Preservation: Repositories with persistent identifiers (DOIs).

Research data are the factual records that validate your findings. Without active management, data can be lost, become unusable, or prove impossible to reproduce, leading to retracted papers, failed audits, and lost funding. The landscape has shifted: Canada’s Tri-Agency Research Data Management Policy and the NIH’s Data Management and Sharing Plan requirements are just two examples of a global trend making sound data management mandatory.

Many teams struggle with fragmented tools and siloed datasets, turning collaboration into a security risk and publishing into a scramble. Modern research data management platforms solve this by unifying the entire data lifecycle in a single, secure environment. They connect data sources, automate compliance, and enable AI-powered analysis and collaboration.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We built a next-generation research data management platform for the life sciences, powering secure, federated analysis for leading pharma and public sector institutions. With over 15 years in computational biology and AI, I’ve seen how the right platform transforms research from chaotic to groundbreaking.

Why Your Research Is at Risk Without Proper Data Management

Your research data—microarray results, clinical observations, genomic sequences—are the factual records that validate your findings. They are a crucial research asset, standing between credibility and doubt. Today, nearly all research data is “born digital,” making it vulnerable in ways paper records never were. This digital data requires active management from study design to long-term reuse.

Without a coordinated approach, like a research data management platform, this scientific knowledge can be lost, and the public investments funding your research deliver far less value.

The High Cost of Poor RDM

Poor data practices create significant risks that can derail a research program. Consider a multi-year longitudinal study on a rare disease. Without a centralized platform, data is stored on individual researchers’ laptops and in a patchwork of cloud storage folders. When a key post-doc leaves the institution, their laptop is wiped, and a crucial subset of patient data is lost for good. The remaining data, stored in inconsistent formats with cryptic file names, is nearly impossible to integrate, leaving years of work and millions in funding unusable for the final analysis. This scenario is all too common and highlights several key risks:

- Lost data: Fragmented storage on personal devices, lack of automated backups, and corrupted files can erase years of work in an instant. This is almost always preventable with a managed system.

- Inefficient collaboration: When data is scattered across systems and emails, teams spend their time on digital archaeology (tracking down files, reconciling versions, and questioning data integrity) instead of science.

- Failed reproducibility: If other researchers cannot reproduce your findings because the data is poorly documented, inaccessible, or lost, trust in science erodes. This is fundamentally a data management problem.

- Retracted papers: Many retractions trace back to inadequate data management, version control failures, or an inability to verify original findings, damaging reputations and public trust.

- Funding risk: Funders like the Canadian Tri-Agencies and the NIH now mandate detailed Data Management and Sharing Plans. Non-compliance doesn’t just risk your current project; it puts your next grant at risk.

The “FAIR” Principles: Your Blueprint for Valuable Data

The solution to these risks is to make your data Findable, Accessible, Interoperable, and Reusable—the FAIR principles. This blueprint transforms data from a liability into a lasting scientific contribution. An RDM platform is the engine that brings these principles to life.

- Findable: Data and its metadata must be easy to locate for both humans and computers. This goes beyond simple file names. It means creating rich, machine-readable metadata (data about the data) and assigning globally unique and persistent identifiers like Digital Object Identifiers (DOIs). A good platform automates metadata creation and registers DOIs, making your dataset a citable research output that appears in data catalogs and search engines.

- Accessible: Data should be retrievable by authorized users through standard, open protocols (like HTTPS). This doesn’t always mean “open access.” For sensitive data, accessibility means having clear, secure authentication and authorization procedures. A platform manages this by defining roles and permissions, ensuring that only the right people can access the data under the right conditions, all while logging every access request.

- Interoperable: Data must be able to work with other datasets and analysis tools. This requires using standard formats, controlled vocabularies (like SNOMED CT for clinical terms), and common data models (like the OMOP Common Data Model for observational health data). An RDM platform can enforce the use of these standards on data ingress and provide tools to harmonize disparate datasets, preventing the massive, time-consuming data cleaning projects that stall so much research.

- Reusable: The ultimate goal is for data to be so well-documented and licensed that another researcher can confidently use it years later. This requires a clear data usage license (e.g., Creative Commons) and detailed provenance—a complete record of the data’s origin and all transformations it has undergone. A platform captures this provenance automatically, tracking every analysis, version, and change, providing the context needed for confident reuse.

When your data is FAIR, it becomes a valuable asset for the entire scientific community, multiplying the return on research investment.
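As a rough illustration of how the four principles translate into something a platform could check automatically, here is a minimal sketch. The `DatasetRecord` fields and the `fair_gaps` function are hypothetical names invented for this example, not part of any real metadata standard or product, and the DOI shown is a made-up placeholder.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DatasetRecord:
    """Hypothetical metadata record; field names are illustrative only."""
    title: str
    identifier: str = ""        # persistent identifier, e.g. a DOI
    access_protocol: str = ""   # e.g. "https"
    data_format: str = ""       # e.g. "csv"
    vocabulary: str = ""        # e.g. "SNOMED CT"
    license: str = ""           # e.g. "CC-BY-4.0"
    provenance: List[str] = field(default_factory=list)


def fair_gaps(record: DatasetRecord) -> List[str]:
    """Return a human-readable list of FAIR gaps found in the record."""
    gaps = []
    if not record.identifier:
        gaps.append("Findable: no persistent identifier")
    if not record.access_protocol:
        gaps.append("Accessible: no access protocol stated")
    if not (record.data_format and record.vocabulary):
        gaps.append("Interoperable: format or vocabulary missing")
    if not (record.license and record.provenance):
        gaps.append("Reusable: license or provenance missing")
    return gaps


# Example: a record with only a title fails all four checks.
draft_record = DatasetRecord(title="Rare disease cohort, wave 1")
complete_record = DatasetRecord(
    title="Rare disease cohort, wave 1",
    identifier="10.1234/example",  # placeholder DOI, not a real one
    access_protocol="https",
    data_format="csv",
    vocabulary="SNOMED CT",
    license="CC-BY-4.0",
    provenance=["exported from source system", "de-identified"],
)
```

A real platform would check far more than field presence (for example, whether the identifier resolves), but the shape of the check is similar: each principle becomes a testable condition on the metadata.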

Meeting Mandates: How RDM Platforms Ensure Compliance

Keeping up with research data mandates is a growing challenge. Funders worldwide have made it clear: effective research data management is no longer optional. It’s a requirement for research excellence and accountability.

For example, Canada’s Tri-Agency Research Data Management Policy requires institutions to have public RDM strategies and researchers to use Data Management Plans (DMPs) and deposit data into a recognized repository. The policy connects data management to broader principles of open access, ethical conduct, and Indigenous data sovereignty. Similarly, the U.S. National Institutes of Health (NIH) now requires a Data Management and Sharing (DMS) Plan for NIH-funded research that generates scientific data. The message is universal: funders expect robust strategies, detailed plans, and clear pathways for data deposit.

An NIH DMS Plan, for instance, must prospectively address six key elements, turning the plan into a concrete part of the research proposal:

- Data Type: Describing the types and amount of data to be generated, and which data will be preserved and shared.

- Related Tools, Software and/or Code: Identifying any specialized tools needed to access or manipulate the data.

- Standards: Specifying the data and metadata standards that will be applied.

- Data Preservation, Access, and Associated Timelines: Outlining the repository to be used and when and how long the data will be available.

- Access, Distribution, or Reuse Considerations: Describing any factors that might affect access, such as privacy or intellectual property protections.

- Oversight of Data Management and Sharing: Identifying who is responsible for ensuring the plan is followed.
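The six elements above lend themselves to a simple completeness check before submission. The sketch below is only an illustration: the `missing_elements` helper and the dictionary layout of the draft are assumptions made for this example, not an NIH-provided format or tool.

```python
# The six element names listed above, used as dictionary keys.
DMS_PLAN_ELEMENTS = (
    "Data Type",
    "Related Tools, Software and/or Code",
    "Standards",
    "Data Preservation, Access, and Associated Timelines",
    "Access, Distribution, or Reuse Considerations",
    "Oversight of Data Management and Sharing",
)


def missing_elements(plan):
    """Return the element names that have no text in the draft plan."""
    return [
        name for name in DMS_PLAN_ELEMENTS
        if not plan.get(name, "").strip()
    ]


# Hypothetical draft: one element filled in, one left as whitespace.
draft_plan = {
    "Data Type": "Whole-genome sequences and clinical observations.",
    "Standards": "   ",
}

for name in missing_elements(draft_plan):
    print(f"Still to write: {name}")
```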

The Role of a Research Data Management Platform in Compliance

A purpose-built research data management platform is essential for navigating these complex mandates. It moves compliance from a manual, error-prone checklist to an automated, integrated function of the research environment.

- Automated Reporting: Platforms can generate the compliance documents and reports funders require, saving countless hours of manual effort.

- Audit Trails: Every action—from data access to analysis to export—is logged in an immutable, timestamped record. This provides an irrefutable trail for audits, ensuring accountability with no scrambling or guesswork.

- Secure Environments: Trusted Research Environments (TREs) provide purpose-built, secure spaces where sensitive research can thrive, with robust controls protecting data while enabling collaboration.

- Access Controls: Granular, role-based permissions let you define precisely who can see, use, or modify data, which is non-negotiable for compliance with standards like HIPAA, GCP, and GDPR.
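To make the audit-trail and access-control ideas more concrete, here is a minimal sketch of a role-based permission check that writes every decision to a hash-chained log. The role names, the `AuditLog` class, and the `authorize` function are all hypothetical, and the approach shown (chaining SHA-256 hashes so that edits become detectable) is one common technique, not a description of how any particular platform implements it. Strictly speaking it makes the log tamper-evident rather than immutable.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "analyst": {"read", "analyze"},
    "data_manager": {"read", "analyze", "export"},
}


def _digest(body):
    """Hash an entry body deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry includes the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, user, action, allowed):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "user": user,
            "action": action,
            "allowed": allowed,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Return True if no entry has been altered or reordered."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


def authorize(log, user, role, action):
    """Check the role's permissions and log the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, action, allowed)
    return allowed
```

Denied requests are logged as well as granted ones, since an auditor usually wants to see attempted access, not just successful access.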

Planning for Success with Data Management Plans (DMPs)

A Data Management Plan is a living roadmap for your data’s journey. Modern DMP creation tools simplify this process. For instance, the DMP Assistant is a free, bilingual tool for Canadian researchers, and DMPTool is a popular equivalent in the U.S. These tools are designed for collaborative planning, allowing entire teams to contribute. They often include funder-specific templates that eliminate guesswork by aligning your plan with precise requirements, letting you focus on research while ensuring you check the right boxes.

The future, however, is machine-actionable DMPs (maDMPs). Instead of static PDF documents, maDMPs are structured data files (often JSON) that computers can read and act upon. This is a game-changer. An maDMP can communicate directly with other systems via APIs. For example, upon project approval, the maDMP could automatically:

- Provision a dedicated storage workspace within the RDM platform.

- Apply the access permissions defined in the plan.

- Pre-populate a metadata record in the chosen data repository.

- Set reminders for data deposit deadlines.

This integration turns the DMP from a bureaucratic hurdle into an active, intelligent component of the research infrastructure, automating tasks and ensuring the plan is actually followed.
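A minimal sketch of that idea: a plan stored as JSON is parsed and turned into a list of actions that downstream systems could carry out. The JSON field names, the project details, and the action names here are invented for illustration; they are not the field names of any published maDMP schema, and a real integration would call the relevant platform and repository APIs instead of returning tuples.

```python
import json
from datetime import date

# Hypothetical machine-actionable plan; all field names are illustrative.
MADMP_JSON = """
{
  "project": "rare-disease-cohort",
  "storage_gb": 500,
  "access": {"alice": "data_manager", "bob": "analyst"},
  "repository": "institutional-repository",
  "deposit_deadline": "2026-06-30"
}
"""


def plan_actions(madmp_text):
    """Translate a plan into an ordered list of actions for other systems."""
    plan = json.loads(madmp_text)

    # 1. Provision a workspace sized according to the plan.
    actions = [("provision_workspace", plan["project"], plan["storage_gb"])]

    # 2. Apply the access permissions defined in the plan.
    for user, role in sorted(plan["access"].items()):
        actions.append(("grant_access", user, role))

    # 3. Pre-populate a metadata record in the chosen repository.
    actions.append(("create_metadata_stub", plan["repository"], plan["project"]))

    # 4. Schedule a reminder for the deposit deadline (validated as a date).
    deadline = date.fromisoformat(plan["deposit_deadline"])
    actions.append(("schedule_reminder", deadline.isoformat()))

    return actions


for action in plan_actions(MADMP_JSON):
    print(action)
```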

The bottom line is that meeting mandates doesn’t have to be a burden. With the right platform and planning tools, compliance becomes an integrated, and even automated, part of your research workflow.

Choosing Your Research Data Management Platform: Essential Features

Choosing a research data management platform is like selecting the foundation for your entire research operation. The right choice accelerates collaboration and compliance; the wrong one leads to years of fighting fragmented tools and creates technical debt.

Here are the essentials that separate a transformative platform from a budgetary drain:

- Scalability: Your platform must handle data growth from gigabytes to petabytes without performance degradation. This applies to both storage and compute. At Lifebit, our platform was built to scale, handling over 150 terabytes of multimodal data.

- Security: Look for “security by design,” not security added as an afterthought. This includes encryption in transit and at rest, granular access controls, comprehensive audit trails, and proven compliance with regulations like HIPAA, GDPR, and 21 CFR Part 11.

- User Interface (UI) & Experience (UX): A platform should be intuitive for all users, from principal investigators to bioinformaticians. If your team needs extensive training just to upload a file or launch an analysis, it will hinder, not help, your research.

- Integration & Interoperability: The best platforms are not walled gardens. They use APIs to connect seamlessly with your existing ecosystem, including Electronic Lab Notebooks (ELNs), LIMS, statistical software, and code repositories (like Git), creating a unified research hub.

Key Features to Demand for the Entire Data Lifecycle

A comprehensive research data management platform must support the entire research journey. Evaluate potential solutions against these stage-specific capabilities:

- Planning & Design: Look for robust DMP creation tools with customizable, funder-specific templates and collaborative features for team-based planning. The ability to create machine-actionable DMPs is a key forward-looking feature.

- Active Research: This is where your team lives day-to-day. You need secure collaborative workspaces for real-time work, automatic version control for both data and code, and deep integration with Electronic Lab Notebooks to prevent manual data transfer errors and capture experimental context.

- Analysis: The platform must provide scalable compute environments for key languages like R, Python, and SAS. Crucially, it should support container technologies like Docker and Singularity. Containers let researchers package their analysis code together with its dependencies, so a workflow can be rerun in the same software environment years later, by anyone, on any system with a compatible container runtime. Look for features that allow you to build, share, and govern reusable pipelines to ensure consistency across projects.

- Preservation & Sharing: The journey doesn’t end with publication. The platform should connect to a spectrum of long-term data repositories, including institutional repositories (like DSpace), domain-specific ones (like GenBank), and generalist options (like Zenodo or Dryad). It must offer DOI minting for citation and provide granular access controls to balance open science with privacy. Robust search and discovery tools ensure your valuable data can be found and reused by the wider community.

Types of Research Data Management Platform Solutions

Not all platforms are built the same. Understanding the architecture is key to choosing the right fit for your organization’s needs.

- Integrated Platforms: These solutions aim to handle everything—data management, analysis, collaboration, and compliance—in a single, unified environment. The Lifebit platform takes this approach, eliminating the friction and security risks of moving data between disparate tools, which is critical for speed and governance in the life sciences.

- Institutional Solutions: These are platforms adopted or customized by research organizations for their communities. Often built on open-source foundations (like InvenioRDM or Dataverse), they are tailored to local policies and researcher needs, offering deep integration with institutional support systems and infrastructure.

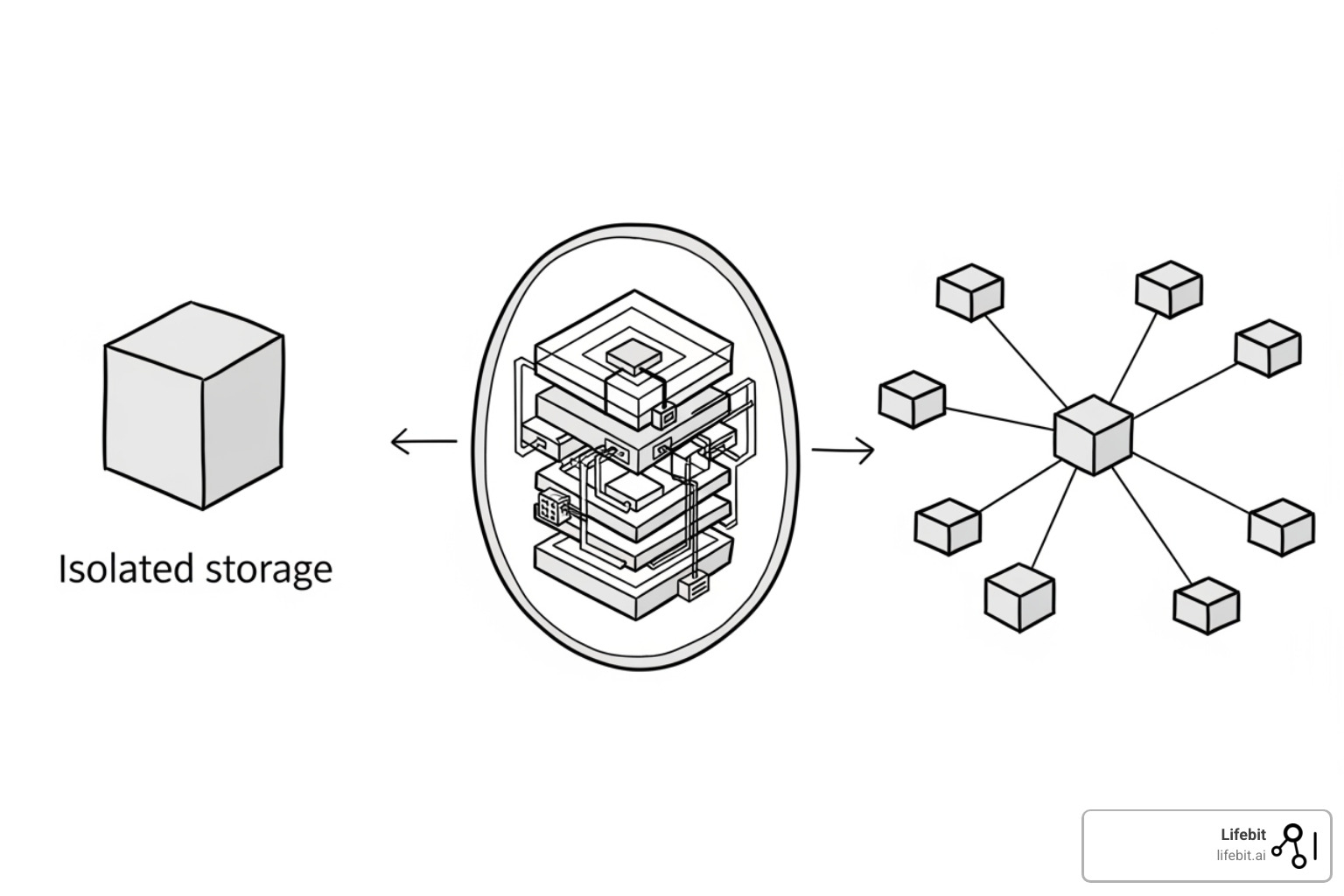

- Federated Environments: This approach brings the analysis to the data rather than moving the data itself. Our next-generation federated AI platform enables secure analysis of global biomedical data without compromising privacy or data ownership. For multi-institutional studies on sensitive clinical data, such as research spanning several hospital sites, federation is often the only viable path forward.

The Future is Now: AI, Federation, and Next-Generation RDM

Research data management is changing. We’re moving beyond better storage toward research data management platforms that apply AI to the data and connect datasets across institutions without moving the underlying records.

At Lifebit, our AI-native platform is built on this vision. Through our Trusted Research Environments (TREs) and federated learning capabilities, we enable biopharma, governments, and public health agencies to generate real-world evidence at unprecedented speed. Imagine analyzing patient data across dozens of hospitals without compromising privacy, building a complex patient cohort in minutes, or catching drug safety signals before they become public health crises. This isn’t the future—it’s happening now.

How AI is Revolutionizing Data Management and Analysis

Artificial Intelligence is now at the core of modern research data management platforms, solving problems that have plagued researchers for decades.

- AI-Powered Cohort Building: Traditionally a manual, months-long process, identifying the right patient population can now be done in minutes. Researchers can describe their needs in natural language, and AI automatically builds the cohort, ready for exploration.

- Automated Data Harmonization: Real-world biomedical data is notoriously messy. AI can automatically bridge the gaps between different data formats, from EHRs to multi-omics, and unify them into analysis-ready models. Our platform handles over 150 terabytes of multimodal data with this level of sophistication.

- AI-Driven Safety Surveillance: In pharmacovigilance, speed saves lives. AI systems can continuously monitor vast datasets to spot safety signals and anomalies that would take human analysts months to find, helping organizations stay ahead of potential issues.

- Natural Language Data Querying: Not every researcher is a coding expert. Modern platforms allow users to ask questions of their data in plain English, democratizing access to complex datasets and accelerating discovery.

The Power of Federation: Analyze Data Without Moving It

If AI is changing how we analyze data, federation is changing where. Instead of copying and shipping sensitive data across networks, our federated research data management platform sends the analysis to the data.

This approach neatly solves the problem of data silos. The analytical queries are sent to where the data resides, and only the aggregated, anonymized results return. The original data never leaves its secure environment. This transforms secure collaboration, allowing researchers from different institutions to work together on sensitive datasets without compromising security or governance.

For multi-institutional studies, this is revolutionary. Because each institution retains full control of its data while contributing to the larger research effort, federation reduces the need to negotiate a separate data sharing agreement for every analysis. Our platform’s federated governance models ensure data owners define access rules, building the trust necessary for large-scale collaboration. Federation respects data governance while enabling cutting-edge research, giving you the best of both worlds.
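The core pattern can be sketched in a few lines: each site computes a summary locally, and only those summaries travel to the coordinator. This is a simplified illustration under stated assumptions, not a description of Lifebit's implementation. The function names, the example values, and the minimum group size of 5 are all hypothetical, and small-count suppression on its own does not guarantee anonymity; production systems layer further disclosure controls on top.

```python
# Illustrative threshold: sites with fewer records than this return nothing.
MIN_CELL_COUNT = 5


def local_summary(values):
    """Runs inside the data holder's environment; returns aggregates only."""
    n = len(values)
    if n < MIN_CELL_COUNT:
        return None  # suppress small groups to reduce re-identification risk
    return {"n": n, "total": sum(values)}


def federated_mean(summaries):
    """Runs at the coordinator; sees summaries, never row-level data."""
    usable = [s for s in summaries if s is not None]
    n = sum(s["n"] for s in usable)
    if n == 0:
        return None
    return sum(s["total"] for s in usable) / n


# Hypothetical measurements held at three separate sites.
site_a = [50, 60, 70, 80, 90]
site_b = [40] * 10
site_c = [1, 2]  # too few records, so this site contributes nothing

summaries = [local_summary(site) for site in (site_a, site_b, site_c)]
pooled_mean = federated_mean(summaries)
```

Because a mean can be reconstructed exactly from per-site counts and sums, the coordinator gets the same answer it would have obtained by pooling the contributing sites' raw data, without ever receiving it.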

Frequently Asked Questions about Research Data Management Platforms

Navigating research data management can be confusing. Here are answers to some of the most common questions we hear from researchers.

What’s the difference between a data repository and an RDM platform?

Think of a data repository as a digital archive for finished research. It’s where you preserve and share completed datasets so they can be cited and found by others. A research data management platform, in contrast, is your active workspace for the entire project. It supports the full lifecycle, from planning and analysis in a secure, collaborative environment to the final deposit in a repository. A repository is the library shelf; the RDM platform is the entire lab.

How do RDM platforms handle sensitive data (e.g., clinical, genomic)?

Security for sensitive data is paramount. Modern research data management platforms use a multi-layered approach:

- Trusted Research Environments (TREs): These are highly secure, isolated computing environments designed specifically for sensitive data analysis, with strict controls on access and operations.

- Granular Access Controls: These define permissions at a very specific level, controlling who can see or use which data, even within a collaborative project.

- Robust Encryption & Audit Logs: Data is encrypted both at rest and in transit, and every interaction is recorded in an immutable log for accountability and compliance.

- Built-in Compliance: The platform architecture is designed from the ground up to comply with standards like HIPAA, GCP, and 21 CFR Part 11.

Are there free or open-source RDM platforms available?

Yes, the open science community has produced several valuable free and open-source tools. These platforms can offer excellent functionality for managing, sharing, and publishing research, including collaborative workspaces, version control, and data sharing features. For example, the DMP Assistant is a free, national tool in Canada for creating Data Management Plans. While they may not offer the same end-to-end, enterprise-grade security and AI features as a commercial platform, these open-source options are excellent starting points for building better data practices, especially for individual researchers or smaller institutions.

Conclusion: Build Your Research Future on a Secure Foundation

The journey from data chaos to research breakthroughs requires a fundamental shift in how we approach science. A robust research data management platform is the backbone of modern research, turning a compliance headache into a competitive advantage.

We’ve moved from a world of scattered datasets and funding risks to one of seamless collaboration, AI-powered insights from massive datasets, and the ability to analyze sensitive data without moving it. This is what next-generation platforms deliver today.

When your data is findable, accessible, interoperable, and reusable (FAIR), you multiply the impact of every research dollar. You enable new collaborations and build a foundation where reproducibility is the standard. Trends like AI, federation, and machine-actionable DMPs are accelerating this change.

At Lifebit, our platform unifies data, AI, and governance across the entire research lifecycle. From active research in secure workspaces to running scalable analyses and preserving final datasets, everything happens in one compliant ecosystem. Our Trusted Research Environment and federated technologies work together to deliver the real-time insights that drive breakthrough science.

The question is no longer whether to adopt comprehensive RDM—funder mandates have settled that. The question is whether you will choose a platform that merely ensures compliance, or one that propels your research forward.

Your research deserves a secure foundation built for the challenges and opportunities of modern science.

Take control of your research data with a next-generation platform.