Steps to Harmonize Your Data Like a Pro

Why Data Chaos Is Costing You Millions — And What You Can Do About It

Data harmonization comes down to five essential steps: define clear objectives, find and profile your data sources, map variables to a unified schema, validate the output for quality and accuracy, and document every change for compliance and reproducibility.

Quick Answer: The 5-Step Data Harmonization Process

- Define objectives — Identify business goals and which datasets need harmonization

- Find and profile — Catalog data sources, assess quality, and understand metadata

- Map and transform — Align syntax, structure, and semantics to a unified schema

- Validate and reconcile — Run quality checks, detect anomalies, and confirm accuracy

- Document and govern — Create audit trails, crosswalks, and metadata for ongoing compliance

Organizations lose an average of $13 million annually due to poor data quality. When your data is fragmented across disparate sources — different file formats, conflicting schemas, and inconsistent terminology — you can’t get a complete picture of your business performance. You miss opportunities. You make expensive mistakes. And your teams waste time reconciling data instead of analyzing it.

Data harmonization solves this problem. It transforms data chaos into a consistent, comparable, and analyzable asset by standardizing formats, aligning schemas, and reconciling meanings across all your sources. The result? Faster insights, better decisions, and the foundation for advanced analytics like AI and machine learning.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, with over 15 years of expertise in computational biology, AI, and biomedical data integration. Throughout my career, from building breakthrough tools at the Centre for Genomic Regulation to leading Lifebit’s federated data platform, I’ve seen what it takes to harmonize data at scale for pharmaceutical organizations and public sector institutions. In this guide, I’ll show you exactly how to harmonize your data like a pro, avoid costly pitfalls, and turn disparate datasets into a competitive advantage.


What is Data Harmonization and Why It Beats Traditional ETL

At its core, data harmonization is the process of standardizing and integrating data that comes from disparate fields, formats, and dimensions. Think of it as the ultimate upgrade to your data strategy. While many organizations rely on traditional integration, harmonization goes deeper, ensuring that data is not just “in the same room” but actually “speaking the same language.”

To understand why this matters, we need to look at the differences between standard integration, standardization, and the traditional ETL approach.

| Process | Primary Goal | Result |
|---|---|---|
| Data Integration | Consolidating data from multiple sources into a single view. | Data is co-located but may still be inconsistent in meaning. |
| Data Standardization | Enforcing consistent formats (e.g., date formats). | Data looks the same but might not represent the same concepts. |
| ETL (Extract, Transform, Load) | Technical movement and cleaning of data. | A pipeline for data transfer, often requiring repetitive manual fixes. |
| Data Harmonization | Reconciling syntax, structure, and semantics. | A unified, “ready-for-analysis” dataset where all variables are comparable. |

The problem with the traditional ETL approach is that it often treats data movement as a one-off technical task. Every time you bring in a new source, you have to build a new set of rules. This increases complexity and leads to higher chances of inaccuracy.

In contrast, data harmonization creates a centralized, “smart” data model. Instead of just moving data, we are building a foundation of accuracy and reliability. By using a centralized approach, the data becomes easier to operate, enabling teams to spend less time cleaning and more time driving actionable insights that fuel scientific breakthroughs and business growth.

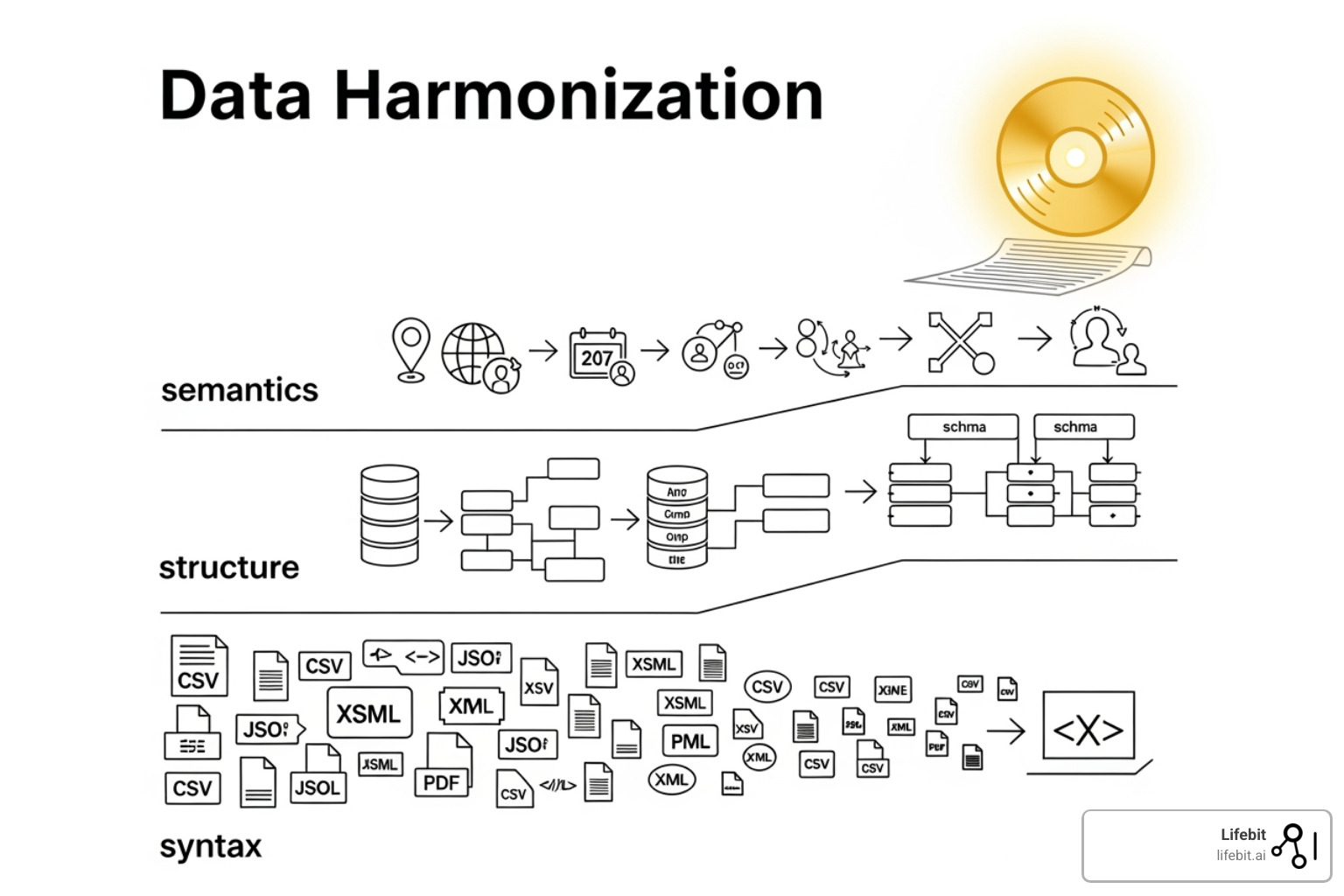

The Three Core Layers: Syntax, Structure, and Semantics

When we talk about how to do data harmonization, we are really talking about solving three distinct layers of “data friction.” If you ignore any of these, your “harmonized” dataset will still produce discordant results. Understanding these layers is critical for building a robust data pipeline that can scale with your organization’s needs.

- The Syntax Layer (Format): This is the surface level. It deals with file types and data representations. One dataset might be a `.CSV`, another a `.JSON`, and a third might be buried in an `SQL` database. Harmonization ensures these formats are reconciled into a single, usable type. This also includes character encoding (e.g., UTF-8 vs. ASCII) and compression formats that can hinder direct data access.

- The Structure Layer (Schema): This involves the architecture of the data. For example, one database might store a customer’s name in a single column (`Full_Name`), while another splits it into `First_Name` and `Last_Name`. We map these disparate structures to a single target schema. This layer also addresses the cardinality of relationships, ensuring that one-to-many or many-to-many relationships are handled consistently across all integrated sources.

- The Semantics Layer (Meaning): This is the most complex layer. It’s where we ensure that the meaning of the data is consistent. This is often referred to as the “Semantic Gap,” where the same term might have different definitions, or different terms might refer to the same concept.
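To make the first two layers concrete, here is a minimal sketch using only the Python standard library. The sample records, the `Country` column, and the target field names (`first_name`, `last_name`, `country`) are assumptions made up for illustration, and the name split is deliberately naive.

```python
import csv
import io
import json

# Hypothetical sample inputs: similar records in two formats and two schemas.
CSV_SOURCE = "Full_Name,Country\nAda Lovelace,UK\n"
JSON_SOURCE = '[{"First_Name": "Grace", "Last_Name": "Hopper", "Country": "US"}]'


def load_csv(text):
    """Syntax layer: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def load_json(text):
    """Syntax layer: parse JSON text into a list of dicts."""
    return json.loads(text)


def to_target_schema(record):
    """Structure layer: map either source layout onto one target schema."""
    if "Full_Name" in record:
        # Naive split on the first space; real names need more careful handling.
        first, _, last = record["Full_Name"].partition(" ")
    else:
        first, last = record["First_Name"], record["Last_Name"]
    return {"first_name": first, "last_name": last, "country": record["Country"]}


records = load_csv(CSV_SOURCE) + load_json(JSON_SOURCE)
harmonized = [to_target_schema(r) for r in records]
print(harmonized)
```

Once both sources share one format and one schema, the remaining work is semantic: deciding whether the values themselves mean the same thing.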

How to do data harmonization at the semantic level

Semantic harmonization is where the real magic happens. Terminology can be identical but mean completely different things. A classic example is the definition of “young adults.” One study might define this as ages 18-25, while another uses 18-30. If you simply merge these without semantic alignment, your analysis of disease incidence in “young adults” will be fundamentally flawed. This is particularly dangerous in longitudinal studies where definitions might shift over decades.

To achieve semantic harmony, we use several key data harmonization techniques:

- Ontologies and Controlled Vocabularies: In healthcare, we use standards like LOINC for lab codes and SNOMED CT for clinical terms. This ensures that “Heart Attack” in one hospital is mapped to “Myocardial Infarction” in another. Beyond healthcare, industries use ISO standards or industry-specific taxonomies (like the FIBO for finance) to ensure everyone is speaking the same conceptual language.

- Metadata and Data Dictionaries: We create comprehensive definitions for every variable, so every user knows exactly what “active customer” or “baseline glucose” means. This includes documenting the provenance of the data—where it came from, how it was collected, and any transformations it underwent before reaching the harmonization stage.

- Crosswalks and Mapping Tables: These show how a variable in Source A relates to the target variable in our unified model. For instance, a crosswalk might map a 5-point Likert scale in one survey to a 10-point scale in another using a documented rescaling rule, such as linear min-max rescaling.

At Lifebit, our platform automates much of this semantic mapping, using AI to suggest ontologies and identify conceptual overlaps that human eyes might miss. This reduces the manual burden on data stewards and minimizes the risk of human error in complex mapping tasks.
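As a rough illustration of the techniques listed above, the sketch below shows a tiny term crosswalk and a linear rescaling between two bounded scales. The crosswalk entries are hand-written placeholders rather than real ontology codes, and linear rescaling is only one possible rule; whether two Likert scales are truly comparable is a judgment call that the code cannot make for you.

```python
# Hypothetical crosswalk: local source terms -> target concept labels.
# A real project would map to ontology codes (e.g., SNOMED CT) taken from the
# official release, not to hand-written strings like these.
TERM_CROSSWALK = {
    "heart attack": "Myocardial Infarction",
    "mi": "Myocardial Infarction",
    "myocardial infarction": "Myocardial Infarction",
}


def map_term(source_term):
    """Return the target concept, or None so unmapped terms get reviewed."""
    return TERM_CROSSWALK.get(source_term.strip().lower())


def rescale(value, src_min, src_max, dst_min, dst_max):
    """Linear min-max rescaling from one bounded scale to another."""
    if not src_min <= value <= src_max:
        raise ValueError(f"{value} is outside the source scale")
    fraction = (value - src_min) / (src_max - src_min)
    return dst_min + fraction * (dst_max - dst_min)


print(map_term("Heart Attack"))   # Myocardial Infarction
print(rescale(3, 1, 5, 1, 10))    # 5.5
```

Returning `None` for unknown terms, instead of passing them through, keeps unmapped values visible to a data steward.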

Input vs. Output: Choosing Your Harmonization Strategy

There are two primary schools of thought when it comes to the timing of your harmonization efforts. Choosing the right one depends on whether you are starting a new project or dealing with decades of legacy data.

1. Input Harmonization (Prospective)

This is the “proactive” approach. You set the standards before the data is collected. You provide researchers or departments with strict protocols, standardized questionnaires, and predefined data entry formats.

- Pros: Extremely high data quality and comparability.

- Cons: Can be rigid and may face institutional resistance. It also doesn’t help with existing historical data.

2. Output Harmonization (Retrospective/Ex-Post)

This is the “reactive” approach, often used when researchers decide that common concepts across existing surveys or studies can be turned into a harmonized data file. You take what you already have and map it into a unified schema.

- Pros: Allows you to use vast amounts of historical data and disparate sources.

- Cons: Higher risk of information loss. If Source A is very granular and Source B is vague, the harmonized variable must often default to the “lowest common denominator” to remain accurate.
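The “lowest common denominator” effect is easy to see in a small sketch. Here we assume Source A records exact ages while Source B only records age bands; the band boundaries and record layout are invented for illustration.

```python
# Hypothetical bands used by the coarser source (Source B).
AGE_BANDS = [(18, 25), (26, 40), (41, 65)]


def age_to_band(age):
    """Collapse an exact age (Source A) into Source B's coarser bands."""
    for low, high in AGE_BANDS:
        if low <= age <= high:
            return f"{low}-{high}"
    return None  # outside every band: leave unmapped rather than guess


source_a = [{"id": "a1", "age": 23}, {"id": "a2", "age": 31}]
source_b = [{"id": "b1", "age_band": "18-25"}]

harmonized = (
    [{"id": r["id"], "age_band": age_to_band(r["age"])} for r in source_a]
    + [{"id": r["id"], "age_band": r["age_band"]} for r in source_b]
)
print(harmonized)
```

The exact ages from Source A are gone from the harmonized variable, which is why keeping the raw data alongside it matters.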

The Trade-off: Do you re-collect data or harmonize what you have? Re-collecting is expensive and time-consuming, but harmonizing existing data requires careful consideration of survey-level attributes like statistical weights and sampling designs. At Lifebit, we often recommend a hybrid approach, using retrospective harmonization for legacy data while establishing prospective standards for all new data flowing into our Trusted Data Lakehouse (TDL).

A Practical 5-Step Blueprint for How to Do Data Harmonization

Whether you are working in a global bank or a genomic research lab, the data harmonization cycle remains remarkably consistent. Here is our expanded 5-step blueprint designed to handle enterprise-scale data complexity:

Step 1: Define Objectives and Scope

Don’t harmonize for the sake of harmonizing. Identify the specific business or research questions you need to answer. Which datasets are essential? What level of granularity is required? Setting clear goals prevents “scope creep” and ensures you don’t waste resources on irrelevant variables. For example, if your goal is to analyze regional sales trends, you may not need to harmonize individual SKU-level data from every local warehouse immediately.

Step 2: Data Discovery and Profiling

You can’t fix what you don’t understand. Catalog your sources, assess their quality, and analyze their metadata. This involves:

- Null Analysis: Identifying how much data is missing and whether that missingness is random or systematic.

- Value Distribution: Checking if the data follows expected patterns (e.g., age should not be negative).

- Cardinality Checks: Understanding the uniqueness of data points to identify potential duplicate records.

Research shows that 20% of source variables in cross-national surveys contain at least one error, such as illegitimate or misleading values. Profiling helps you catch these before they infect your harmonized dataset.
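The three profiling checks above can be sketched in a few lines of plain Python. The sample rows, the column names, and the 0 to 120 age range are assumptions for illustration; a real profile would run against your own sources and thresholds.

```python
from collections import Counter

# Hypothetical sample rows; None marks a missing value.
rows = [
    {"patient_id": "p1", "age": 34},
    {"patient_id": "p2", "age": None},
    {"patient_id": "p2", "age": -5},
    {"patient_id": "p3", "age": 61},
]


def null_rate(rows, column):
    """Null analysis: share of rows where the column is missing."""
    return sum(r[column] is None for r in rows) / len(rows)


def out_of_range(rows, column, low, high):
    """Value distribution: non-missing values outside the expected range."""
    return [
        r[column]
        for r in rows
        if r[column] is not None and not low <= r[column] <= high
    ]


def duplicated_values(rows, column):
    """Cardinality check: values that occur more than once."""
    counts = Counter(r[column] for r in rows)
    return sorted(value for value, n in counts.items() if n > 1)


print(null_rate(rows, "age"))               # 0.25
print(out_of_range(rows, "age", 0, 120))    # [-5]
print(duplicated_values(rows, "patient_id"))  # ['p2']
```

Note that this only measures how much is missing; deciding whether the missingness is random or systematic still needs analysis against other variables.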

Step 3: Mapping and Transformation Logic

This is the “doing” phase. You apply your crosswalks and transformation rules. This step often involves complex logic:

- Unit Conversion: Standardizing miles to kilometers or Celsius to Kelvin.

- Date Standardization: Converting all dates to ISO 8601 format (YYYY-MM-DD) to avoid confusion between US and European formats.

- Categorical Alignment: Mapping “Male/Female” to “M/F” or numeric codes (1/2).

- Schema Mapping: Using the OMOP Common Data Model or similar frameworks to ensure health data is structured for global research. This allows for “federated” queries where the data stays in its original location but can be queried using a unified language.
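A minimal sketch of the first three transformation types might look like the following. The function names and the `SEX_CODES` crosswalk are hypothetical; the key design choice is that each source declares its own date format explicitly, because a string like `03/04/2021` cannot be disambiguated by inspection.

```python
from datetime import datetime

KM_PER_MILE = 1.609344  # exact, by definition of the international mile

# Hypothetical category crosswalk; the target codes are illustrative.
SEX_CODES = {"male": "M", "m": "M", "1": "M", "female": "F", "f": "F", "2": "F"}


def miles_to_km(miles):
    """Unit conversion: miles to kilometers."""
    return miles * KM_PER_MILE


def celsius_to_kelvin(celsius):
    """Unit conversion: degrees Celsius to kelvin."""
    return celsius + 273.15


def to_iso_date(text, source_format):
    """Parse with an explicit per-source format, then emit YYYY-MM-DD."""
    return datetime.strptime(text, source_format).date().isoformat()


def align_sex(value):
    """Return the target code, or None for values the crosswalk does not cover."""
    return SEX_CODES.get(str(value).strip().lower())


print(to_iso_date("03/04/2021", "%m/%d/%Y"))  # 2021-03-04 (US source)
print(to_iso_date("03/04/2021", "%d/%m/%Y"))  # 2021-04-03 (European source)
print(align_sex("Female"), align_sex(1))      # F M
```

Schema mapping to a model such as OMOP is a much larger exercise and is not attempted here.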

Step 4: Validation and Reconciliation

Run rigorous quality checks. Did the transformation preserve the integrity of the original data? Use anomaly detection to find outliers that might indicate a mapping error. We often establish “testing groups” of knowledgeable users to provide feedback on the analytic usefulness of the harmonized set. Where a mapping is meant to be reversible, validation should include “round-trip” testing, where you attempt to reconstruct the original data from the harmonized version to ensure no critical information was lost during transformation.
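One way to sketch a round-trip test is shown below, assuming the transformation is a simple one-to-one code mapping. The `FORWARD` crosswalk and function names are hypothetical, and the approach only applies to mappings designed to be reversible; a deliberately lossy mapping will fail it by construction.

```python
FORWARD = {"Male": "M", "Female": "F", "Unknown": "U"}  # hypothetical crosswalk


def invert(mapping):
    """Build the inverse mapping; fail if two sources share one target."""
    inverse = {}
    for source, target in mapping.items():
        if target in inverse:
            raise ValueError(
                f"{source!r} and {inverse[target]!r} both map to {target!r}"
            )
        inverse[target] = source
    return inverse


def round_trip_failures(values, mapping):
    """Return the original values not recovered after forward + inverse."""
    inverse = invert(mapping)
    failures = []
    for value in values:
        harmonized = mapping.get(value)
        if harmonized is None or inverse[harmonized] != value:
            failures.append(value)
    return failures


print(round_trip_failures(["Male", "Female", "male"], FORWARD))  # ['male']
```

Here the lowercase `male` is flagged because the crosswalk has no entry for it, which is exactly the kind of silent gap validation should surface.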

Step 5: Documentation and Governance

The most overlooked step. You must document the entire lifecycle. If a change isn’t documented, it’s not science—it’s a guess. This documentation serves as the “instruction manual” for any future analyst who uses the data.

Best practices for data harmonization documentation

To ensure your data remains a “single source of truth,” you need robust documentation:

- Reversible Transformations: Always keep a path back to the raw data. If a user identifies an issue, you must be able to re-transform without losing the original context.

- Detailed Crosswalks: Provide users with tables showing exactly how source variables were converted to target variables, including the logic used for any statistical weighting.

- Metadata Products: Include information on statistical weight construction. In an analysis of 1721 national surveys, 25% lacked information on how weights were built—don’t let your data be part of that statistic.

- Audit Trails: Maintain a record of who changed what and when for regulatory compliance, particularly important for GDPR and HIPAA-regulated environments.
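As a rough sketch of what one audit-trail record could contain, the example below captures who applied which rule, when, and what the value was before and after. The field names are assumptions rather than any regulatory standard, and in a regulated environment you would likely store a pointer to the raw record instead of the raw value itself.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(fields):
    """SHA-256 over the entry's fields in a stable key order."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def audit_entry(user, source_variable, target_variable, rule, raw_value, new_value):
    """Build one audit record for a single transformation."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "source_variable": source_variable,
        "target_variable": target_variable,
        "rule": rule,
        "raw_value": raw_value,  # kept so the change can be reversed
        "new_value": new_value,
    }
    # A content hash makes later edits to the entry detectable.
    entry["sha256"] = _digest(entry)
    return entry


def verify(entry):
    """Recompute the hash and compare it with the stored one."""
    fields = {k: v for k, v in entry.items() if k != "sha256"}
    return _digest(fields) == entry["sha256"]


entry = audit_entry("analyst_1", "sex", "sex_code", "Male/Female -> M/F", "Male", "M")
print(verify(entry))  # True
```

Keeping the raw value, or a reference to it, next to the new one is what makes the transformation reversible in practice.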

Real-World Impact: AI, Healthcare, and Advanced Analytics

Data harmonization isn’t just a technical exercise; it’s the engine behind modern innovation. When data “speaks the same language,” advanced analytics like AI and machine learning can finally reach their potential. Without harmonization, AI models often suffer from “garbage in, garbage out,” leading to biased or inaccurate predictions.

Healthcare and Life Sciences

Harmonization is a matter of life and death here. By harmonizing disparate electronic health records (EHRs), researchers can pool data from thousands of hospitals to find patterns in rare diseases that would be invisible in a single hospital’s dataset.

An excellent example is the National COVID Cohort Collaborative (N3C), which harmonized EHR data from over 70 institutions across the US. By using the OMOP Common Data Model, they created a unified dataset of over 6 million patients, enabling rapid scientific discovery during the pandemic. Furthermore, harmonizing multi-omics data (genomics, proteomics, and metabolomics) allows for a holistic molecular picture of disease, leading to more personalized medicine and targeted drug development.

Finance and Master Data Management (MDM)

In the financial sector, harmonization enables effective MDM. By creating a “golden record” of a customer across credit cards, mortgages, and savings accounts, banks can better manage risk and detect fraud. Regulations like BCBS 239 actually mandate this kind of comprehensive risk data aggregation. Harmonization allows banks to see the “total exposure” of a client across different global branches, which is essential for preventing systemic financial crises.

Manufacturing and Retail

In manufacturing, harmonizing IoT sensor data from different factory floors enables predictive maintenance—fixing a machine before it breaks. This requires harmonizing data from different equipment manufacturers who use different proprietary protocols. In retail, unifying data from social media, email campaigns, and web analytics allows for a 360-degree customer view. This “omnichannel” harmonization helps brands understand the customer journey from the first click to the final purchase, optimizing supply chains and personalizing the shopping experience in real-time.

Frequently Asked Questions about Data Harmonization

What are common pitfalls, such as quota sampling or processing errors?

One of the biggest pitfalls is quota sampling. In survey research, quota sampling can destroy comparability because it is a non-probability method and does not yield a random, representative sample. Another major issue is ignoring survey-level attributes. If you harmonize the variables but forget to include the statistical weights or the sampling design, your aggregated results will be statistically biased. Finally, watch out for processing errors: illegitimate values or “hidden” missing data can easily slip through if your validation step isn’t rigorous. For example, a “99” might mean “Missing” in one dataset but be a valid age in another.
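The “99” problem is usually handled by recording missing-value codes per source rather than globally. A minimal sketch, assuming two hypothetical surveys whose codebooks disagree:

```python
# Hypothetical per-source missing-value codes, taken from each source's codebook.
MISSING_CODES = {
    "survey_a": {"age": {99, -1}},
    "survey_b": {"age": set()},  # in this source 99 is a real age
}


def clean_value(source, variable, value):
    """Return None when the value is a documented missing code for that source."""
    if value in MISSING_CODES.get(source, {}).get(variable, set()):
        return None
    return value


print(clean_value("survey_a", "age", 99))  # None
print(clean_value("survey_b", "age", 99))  # 99
```

Applying one global rule such as “99 means missing” would silently delete real 99-year-olds from the second survey.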

How do you measure the success and quality of a harmonization project?

We look at several key metrics:

- Completeness: What percentage of the “essential” constructs were successfully harmonized? (Using tools like DataSHaPER, researchers found that 64% of essential constructs could be harmonized completely or partially across 53 studies).

- Usability: How quickly can a data scientist produce a report from the harmonized set vs. the raw sources? A successful project should reduce data preparation time by 50-70%.

- Statistical Power: Has the harmonization increased the sample size enough to find significant effects that were invisible in individual datasets?

- Accuracy: The rate of reconciled anomalies during the validation phase. A high rate of anomalies found and fixed indicates a rigorous process.

What is the difference between data harmonization and data virtualization?

While data harmonization focuses on standardizing the content and meaning of data, data virtualization is a technology that allows you to retrieve and manipulate data without needing technical details about its source or where it is physically stored. You can use virtualization to access data for harmonization, but virtualization alone does not solve the problem of inconsistent schemas or conflicting semantics.

How does data harmonization impact GDPR and data privacy?

Harmonization can actually improve GDPR compliance by providing a clearer view of where personal data resides across an organization. By standardizing how “Personally Identifiable Information” (PII) is labeled and stored, organizations can more easily fulfill “Right to be Forgotten” requests and ensure that data usage aligns with the original consent provided by the user. However, the process of harmonization itself must be done within a secure environment, such as a Trusted Research Environment (TRE), to ensure that sensitive data is not exposed during the transformation phase.

Conclusion

Data harmonization is no longer just a “nice-to-have” technical skill; it is a strategic necessity. When data volume is exploding, the organizations that win will be the ones that can turn that volume into a harmonious, usable asset. By following our 5-step blueprint and focusing on the layers of syntax, structure, and semantics, you can stop losing millions to poor data quality and start driving the insights that define the future.

At Lifebit, we are committed to making this process seamless. Our federated AI platform, featuring the Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), provides the secure, scalable infrastructure you need to harmonize even the most complex multi-omic and clinical datasets.

Ready to turn your data chaos into a competitive advantage?