The AI Revolution: Accelerating Drug Development with Intelligent Systems

Why Drug Discovery Is Broken, and How AI Can Fix It Now

AI-powered drug discovery is changing how new medicines reach patients. In reported cases it has taken candidates into Phase II trials in as little as 18 months, against a traditional timeline of more than a decade. It could also cut costs from billions of dollars to millions and improve success rates that traditionally hover around 5%.

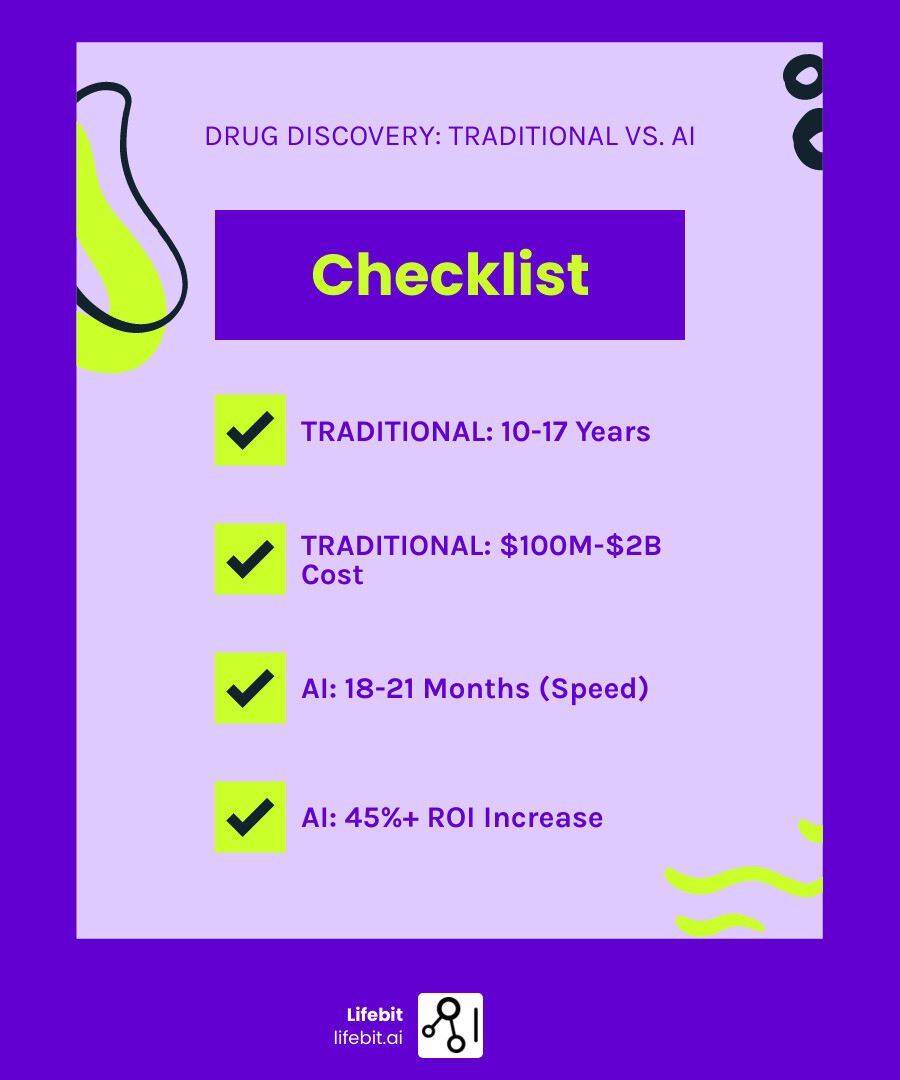

The traditional drug discovery process is extraordinarily difficult, slow, and expensive. It can take 10 to 17 years and cost anywhere from $100 million to $2 billion to bring a single drug to market. With treatments existing for only about 500 of the 7,000 known rare diseases, the need for a new approach is urgent.

AI is that new approach. For instance, AI has been used to identify new uses for existing drugs, advancing them to Phase II clinical trials in just 18 months. In other cases, AI platforms have screened candidates for rare genetic disorders in a similar timeframe. One fully AI-generated drug for idiopathic pulmonary fibrosis went from concept to Phase IIa trials in just a few years, with the initial discovery stage completed in only 21 days.

How? AI removes much of the uncertainty. Instead of relying on trial-and-error, AI analyzes massive biological and chemical datasets to predict how molecules will interact with target proteins, designing optimized drug candidates with unprecedented speed and accuracy.

For pharma leaders and public sector organizations, the challenge is accessing and analyzing diverse, siloed datasets (EHR, genomics, claims data) in secure, compliant environments. Real-time insights and federated analytics are essential to keeping pace with this revolution.

I’m Dr. Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We’ve spent over a decade building federated data platforms that enable secure, real-time AI-powered drug discovery. My background in computational biology and AI has positioned me at the intersection of precision medicine and data-driven pharmaceutical innovation, helping organizations unlock their data’s full potential without compromising security.

Cut 10+ Years and Billions: How AI Transforms Drug Discovery from Gamble to Science

The pharmaceutical industry has long been plagued by a broken process. It costs between $100 million and $2 billion to bring a single new drug to market over a 10- to 17-year timeline. Even after passing Phase I clinical trials, a drug candidate has a mere 5% chance of reaching patients. This is an inefficient gamble with astronomical stakes, often relying on serendipitous discoveries and slow, trial-and-error optimization.

This is where AI-powered drug discovery steps in. By investing in AI, the pharmaceutical industry could increase its return on investment by more than 45%. AI is fundamentally reshaping drug R&D, making it faster, more cost-effective, and more successful.

AI removes much of the uncertainty of traditional methods. Instead of blindly testing millions of compounds, AI algorithms intelligently predict promising candidates, analyze complex biological interactions, and optimize molecular properties before they are even synthesized. This shift from a gamble to a science accelerates timelines, improves success rates, and brings desperately needed treatments to patients faster than ever before.

The Power Trio: Data, Algorithms, and Computation at the Heart of AI-Driven Drug Discovery

At the core of every successful AI-powered drug discovery initiative lies a powerful triumvirate: data, algorithms, and computation. These are the fuel, engine, and accelerator driving the R&D process.

Data is our fuel. It’s the lifeblood that nourishes AI models. This includes vast and diverse biological and chemical datasets. For instance, genomics provides the genetic blueprint, transcriptomics (e.g., RNA-seq data) reveals which genes are active in a disease state, proteomics identifies the proteins at work, and phenomics captures the observable traits of a cell or organism. We also incorporate chemical data like ADME (Absorption, Distribution, Metabolism, and Excretion) profiles and de-identified patient data from electronic health records (EHRs). The quality, breadth, and integration of this multi-omics data are paramount, as an AI system is only as intelligent and unbiased as the data it consumes.

Algorithms are our engine. These sophisticated mathematical models process data, identify patterns, and make predictions. They range from classic machine learning to cutting-edge deep learning architectures designed to understand complex biological interactions and generate novel chemical structures.

Computation is our accelerator. Processing petabytes of data with intricate algorithms demands immense computational power. Advancements in graphics processing units (GPUs), which excel at the parallel calculations required by deep learning, have been a game-changer. Alongside GPUs, specialized hardware like Google’s Tensor Processing Units (TPUs) further accelerate model training. The rise of cloud computing platforms (like AWS, Google Cloud, and Azure) provides the critical, on-demand high-performance computing (HPC) infrastructure needed to train complex models and run massive simulations without prohibitive upfront investment in physical supercomputers.

Machine Learning and Deep Learning: The Brains Behind the Breakthroughs

Machine Learning (ML) and Deep Learning (DL) are the primary architects of these breakthroughs.

Machine Learning (ML) empowers computers to learn from data without explicit programming. In AI-powered drug discovery, ML models excel at tasks like predictive screening, sifting through millions of compounds to identify those most likely to interact with a biological target.

Deep Learning (DL), a more advanced subset of ML, uses multi-layered neural networks to automatically learn features from high-dimensional, complex data. This enables end-to-end learning without extensive human intervention. DL applications are already transforming areas like computer vision (analyzing microscopy images) and Natural Language Processing (NLP) (extracting insights from scientific literature). In drug discovery, DL models can design novel molecules from scratch (de novo drug design) or predict a protein’s 3D structure from its amino acid sequence, making them indispensable for identifying potent drug candidates at unprecedented speed.

Essential AI Algorithms and Their Real-World Uses

AI-powered drug discovery draws on a variety of algorithms, each suited to specific tasks:

- Supervised Learning: Trains models on labeled data, where the ‘right answer’ is known. Applications: Predicting a molecule’s binding affinity to a target protein, forecasting ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties, or classifying whether a compound is likely to be toxic. For example, a model trained on thousands of known drug-target pairs can predict whether a new candidate molecule will bind to a specific cancer-related protein, assigning it a probability score.

- Unsupervised Learning: Finds hidden patterns and structures in unlabeled data. Applications: Clustering chemically similar compounds to identify novel scaffolds, identifying previously unknown disease subtypes from patient gene expression data, or simplifying high-dimensional datasets for visualization and further analysis.

- Reinforcement Learning (RL): Learns to make optimal decisions through trial and error by rewarding desired outcomes. Applications: De novo drug design, where an ‘agent’ (the algorithm) iteratively generates and refines molecular structures within a ‘chemical space’ environment. The agent receives a ‘reward’ for creating molecules that meet predefined criteria (e.g., high potency, low toxicity, good synthesizability), effectively learning to design ideal drugs.

- Convolutional Neural Networks (CNNs): Powerful for recognizing spatial features in grid-like data. Applications: Analyzing 2D and 3D molecular structures to predict properties, identifying active binding sites on proteins from their 3D structural data, and interpreting high-content microscopy images to assess a drug’s effect on cells.

- Recurrent Neural Networks (RNNs): Designed to process sequential data, where order matters. Applications: Generating novel molecular structures represented as sequences (like SMILES strings), analyzing time-series data from clinical trials or wearable sensors, and understanding the sequence-based language of DNA and proteins.

- Generative Adversarial Networks (GANs): Two competing neural networks, a Generator and a Discriminator, that are trained against each other to create new, realistic data. Applications: Generating novel chemical compounds with desired properties. The Generator creates new molecules, while the Discriminator (trained on real molecules) tries to tell if they are real or fake. This adversarial process pushes the Generator to create increasingly plausible and effective drug candidates. A GAN-based approach was famously used to complete an AI drug discovery challenge, generating novel molecules in just 21 days.

- Graph Neural Networks (GNNs): Specialized for processing graph data. Since molecules are inherently graphs (atoms as nodes, bonds as edges), GNNs are a natural fit. Applications: Predicting drug-target interactions with high accuracy by learning directly from the molecular graph, analyzing complex biological networks (like protein-protein interaction networks), and predicting molecular properties more accurately than methods that don’t consider the graph structure.

- Natural Language Processing (NLP): Enables computers to understand and process human language. Applications: Systematically analyzing millions of scientific papers, patents, and clinical trial reports to extract relationships between genes, diseases, and compounds. This helps identify potential drug targets, uncover disease-relevant pathways, and stay ahead of emerging research.
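To make the graph view of a molecule concrete, here is a minimal sketch of a single message-passing step, the basic operation behind GNNs. It is an illustration only: the two-element atom features are hypothetical, there are no learned weights, and a real model would stack several such steps with trainable parameters.

```python
# Toy molecular graph for ethanol's heavy atoms (C-C-O); hydrogens omitted.
# Node features are hypothetical: [is_carbon, is_oxygen].
atoms = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0]}
bonds = [(0, 1), (1, 2)]  # undirected edges


def build_neighbors(num_atoms, bonds):
    """Return an adjacency list for an undirected molecular graph."""
    neighbors = {i: [] for i in range(num_atoms)}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    return neighbors


def message_passing_step(features, neighbors):
    """Update each atom by adding the sum of its neighbors' features to its own."""
    updated = {}
    for atom, own in features.items():
        total = list(own)
        for nb in neighbors[atom]:
            total = [t + f for t, f in zip(total, features[nb])]
        updated[atom] = total
    return updated


def readout(features):
    """Sum all atom vectors into one molecule-level vector."""
    dims = len(next(iter(features.values())))
    return [sum(vec[d] for vec in features.values()) for d in range(dims)]


neighbors = build_neighbors(len(atoms), bonds)
hidden = message_passing_step(atoms, neighbors)
molecule_vector = readout(hidden)
print(hidden)
print(molecule_vector)
```

After one step, each atom's vector reflects its immediate chemical neighborhood; the molecule-level vector is what a downstream property predictor would consume.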

From Target to Treatment: How AI Accelerates Every Step of the R&D Pipeline

The traditional drug discovery pipeline is a marathon fraught with bottlenecks. With AI-powered drug discovery, we’re transforming this arduous journey into an efficient, intelligent process. AI is an end-to-end catalyst, reshaping R&D from initial target identification to clinical trial acceleration.

By integrating AI, we can streamline complex processes and make data-driven decisions that were previously impossible. Let’s dig into how AI is making a difference at each critical juncture.

AI in Target Discovery and Validation

Identifying the right biological target is the most crucial first step. AI revolutionizes this stage by sifting through mountains of biological data to pinpoint disease-causing mechanisms. We leverage AI to build intricate biomedical knowledge graphs. These are constructed by using NLP to mine millions of scientific articles, patent databases, and clinical trial records, creating a vast, interconnected map of biology. For example, a knowledge graph might link ‘Gene X’ to ‘Protein Y,’ show that ‘Protein Y’ is overexpressed in ‘Pancreatic Cancer,’ and also reveal that ‘Compound Z’ has been shown to inhibit a similar protein family, instantly generating a testable therapeutic hypothesis that would have been nearly impossible for a human to uncover. AI can also analyze population-level genomic data from sources like Genome-Wide Association Studies (GWAS) to find genetic variants strongly associated with disease susceptibility, pointing directly to causal biological pathways and potential targets.
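The knowledge-graph example above can be sketched as a handful of triples plus a shortest-path search. This is a toy under stated assumptions: the entity names come from the example, "Protein Family F" and the relation labels are hypothetical, and a production graph would hold millions of edges extracted by NLP rather than four typed by hand.

```python
from collections import deque

# Hypothetical triples mirroring the example in the text.
triples = [
    ("Gene X", "encodes", "Protein Y"),
    ("Protein Y", "overexpressed_in", "Pancreatic Cancer"),
    ("Protein Y", "member_of", "Protein Family F"),
    ("Compound Z", "inhibits", "Protein Family F"),
]


def build_graph(triples):
    """Index triples as an undirected adjacency map that keeps relation labels."""
    graph = {}
    for head, relation, tail in triples:
        graph.setdefault(head, []).append((relation, tail))
        graph.setdefault(tail, []).append((f"inverse_{relation}", head))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search; returns the shortest chain of (entity, relation, entity)."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, relation, nxt)]))
    return None


graph = build_graph(triples)
hypothesis = find_path(graph, "Compound Z", "Pancreatic Cancer")
for step in hypothesis:
    print(step)
```

The returned chain links the compound to the disease through the protein family, which is the kind of testable hypothesis the paragraph describes.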

Perhaps AI’s most groundbreaking contribution has been in protein structure prediction. Resolving a protein’s 3D structure is essential for understanding drug binding but was historically slow and expensive. DeepMind’s AlphaFold models have transformed this landscape, achieving groundbreaking accuracy with predictions that now cover nearly the entire human proteome. This near-complete structural dataset enables large-scale virtual screening and the discovery of novel drug-target interactions.

AI-Driven Molecule Design: Opening New Chemical Space

Once a target is identified, the next challenge is designing a molecule to interact with it. The chemical space of possible small molecules is astronomical (estimated at 10^60). For small molecule drug discovery, AI excels in molecular generation. Using techniques like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), we can design novel molecules de novo with a specific property profile. These generative models can intelligently explore the vast chemical space to invent compounds optimized for potency, selectivity, and low toxicity, moving far beyond the limitations of screening existing compound libraries.

Peptide-based drug discovery is another area where AI is making significant strides. Peptides, short chains of amino acids, offer a compelling middle ground between small molecules and large biologics, often providing higher specificity and lower toxicity. However, they have traditionally been hampered by poor stability and cell permeability. AI-driven approaches are overcoming these hurdles, enabling the design and selection of potent peptide drug candidates at unprecedented speed. Machine learning models can design peptides from scratch, optimizing their therapeutic properties with high accuracy, often leveraging structural prediction tools like AlphaFold to ensure they fold correctly and bind to their target.

In virtual screening, AI-powered drug design employs both Structure-Based (SBVS) and Ligand-Based (LBVS) methods. SBVS predicts interactions using the target’s 3D structure, a field revitalized by deep learning. LBVS operates on the principle that structurally similar compounds have similar biological activities, and AI is greatly enhancing its predictive power.
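The similarity principle behind LBVS is often expressed with the Tanimoto coefficient over molecular fingerprints. The sketch below assumes fingerprints are already available as sets of "on" bits; the compound names and bit values are made up, and in practice a cheminformatics toolkit would compute the fingerprints from real structures.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two sets of fingerprint bits."""
    if not fp_a and not fp_b:
        return 0.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def rank_library(query_fp, library):
    """Sort library compounds by similarity to a known active, highest first."""
    scored = [(name, tanimoto(query_fp, fp)) for name, fp in library.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical fingerprints: each integer stands for a substructure bit that is "on".
known_active = {1, 4, 7, 9, 12}
library = {
    "cmpd_A": {1, 4, 7, 9, 13},
    "cmpd_B": {2, 3, 5},
    "cmpd_C": {1, 4, 12},
}

for name, score in rank_library(known_active, library):
    print(f"{name}: {score:.2f}")
```

Compounds that share more substructure bits with the known active rise to the top of the list and would be prioritized for testing.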

Synthetic Route Planning

A brilliant molecule is useless if it cannot be made in a lab. AI also assists in synthetic route planning. AI platforms can analyze a target molecule’s structure and work backward, predicting the most efficient step-by-step sequence of chemical reactions to synthesize it. This saves medicinal chemists weeks or even months of trial-and-error experimentation, dramatically accelerating the journey from digital design to physical compound.
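Working backward from a target can be pictured as a recursive search that stops when every branch ends in a purchasable starting material. The sketch below is deliberately abstract: the rule table and molecule labels are placeholders rather than real chemistry, and it returns the first feasible route instead of ranking routes by efficiency as a real planner would.

```python
# Hypothetical reaction rules: product -> list of alternative precursor sets.
# The labels are placeholders, not real chemistry.
RULES = {
    "target": [["intermediate_1", "reagent_A"], ["intermediate_2"]],
    "intermediate_1": [["reagent_B", "reagent_C"]],
    "intermediate_2": [["rare_precursor"]],
}
PURCHASABLE = {"reagent_A", "reagent_B", "reagent_C"}


def plan_route(molecule, depth=0, max_depth=5):
    """Work backward from a molecule; return a list of reaction steps or None."""
    if molecule in PURCHASABLE:
        return []  # nothing to synthesize
    if depth >= max_depth or molecule not in RULES:
        return None  # dead end
    for precursors in RULES[molecule]:
        steps = []
        for precursor in precursors:
            sub_route = plan_route(precursor, depth + 1, max_depth)
            if sub_route is None:
                break
            steps.extend(sub_route)
        else:
            # Every precursor is reachable: record this reaction last.
            return steps + [(tuple(precursors), molecule)]
    return None


route = plan_route("target")
for inputs, product in route:
    print(" + ".join(inputs), "->", product)
```

The steps come out in forward order, so a chemist would read the list top to bottom as the sequence of reactions to run.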

Smarter, Faster Clinical Trials with AI

The clinical trial phase is the longest, most expensive, and riskiest part of drug development. AI is now deployed to make these trials smarter, faster, and more likely to succeed.

We’re using AI for better patient stratification and recruitment. By analyzing patient data—including EHRs, genomic profiles, and even medical images—AI can identify subgroups most likely to respond to a treatment. For example, in an oncology trial, an AI model might analyze CT scans and biomarker data to find a small subset of patients whose tumors have a specific signature, allowing for a smaller, faster, and more successful trial focused only on those ‘hyper-responders.’ AI-driven patient-trial matching platforms have been shown to lead to 35% faster enrollment in some oncology trials by automatically connecting eligible patients with relevant studies.

Optimizing Trial Operations and Monitoring

Beyond recruitment, AI is streamlining the operational side of clinical trials. It can optimize site selection by predicting which hospitals and clinics will enroll patients most effectively. During the trial, AI can monitor for adverse events in real-time by analyzing streams of data from wearable sensors or patient diaries, allowing for earlier intervention. These tools also help ensure data integrity by automatically flagging anomalies or potential errors in data collection, improving the overall quality and reliability of trial results.

AI also helps predict clinical trial results and identify biomarkers. Predictive models can analyze preclinical and early-phase data to forecast the probability of success in later, more expensive phases. This allows companies to identify potential safety (ADMET) concerns early and terminate unpromising projects, saving billions in potential late-stage failures. AI also facilitates drug repurposing by identifying new uses for existing drugs, as seen with sildenafil (Viagra) being explored for Alzheimer’s disease after AI models identified its potential impact on Alzheimer’s-related proteins. A more recent example is Baricitinib, an arthritis drug that was rapidly identified by an AI platform as a potential treatment for COVID-19 and later received FDA approval for that use. The concept of in silico clinical trials, using computer models to complement traditional trials, is also gaining traction, promising further efficiencies.

The Data Bottleneck: The #1 Threat to AI Drug Discovery, and How to Solve It

While the promise of AI-powered drug discovery is immense, its potential hinges on a critical factor: data. High-performance algorithms are useless without vast quantities of high-quality, relevant, and accessible data to learn from. This is where the revolution currently faces its most significant bottlenecks.

The Challenge of Data Silos, Security, and IP

One of the main challenges is the complex interplay of data sharing, security, and intellectual property (IP) concerns. Pharmaceutical companies, hospitals, and research institutions hold massive amounts of proprietary data that is incredibly valuable for training AI models. However, this data is often locked away in secure ‘silos’ due to its commercial sensitivity and patient privacy regulations (like GDPR and HIPAA). Companies are reluctant to share this IP, and moving patient data is a compliance nightmare, creating information barriers that hinder collective progress. Only a fraction of this data ever enters the public domain.

Furthermore, a successful fusion of wet-lab and dry-lab experiments is paramount. We need a continuously improving feedback loop between experimental results and AI platforms. Without this seamless integration, AI models risk operating in a vacuum, detached from the biological reality they aim to model.

Data Quality, Standardization, and the Hidden Cost of “Positive-Only” Results

The biggest hurdle for AI is often the quality and consistency of the data. Key issues include:

- Inconsistent experimental methods: Different labs using different equipment, reagents, and protocols can introduce ‘batch effects’ into the data. This technical noise can make it impossible to perform “apples-to-apples comparisons” and can mislead AI models, which might learn patterns from the experimental artifacts rather than the underlying biology.

- Bias toward publishing only positive results: The scientific community’s tendency to report only successful experiments creates a dangerously distorted view of the biological landscape. AI algorithms trained on such data will learn a skewed reality, full of false promise. Negative results—knowing what doesn’t work—are just as important for teaching an AI what to avoid, preventing it from wasting resources on known dead ends.

Forging Solutions: From Standardization to Federated Learning

To overcome these issues, several solutions are being implemented:

- Standardize reporting and methods: Initiatives like The Human Cell Atlas are working to establish standardized protocols for running and reporting experiments.

- Data certification platforms: Endeavors like Polaris, a benchmarking platform for drug discovery, aim to help clean up and standardize datasets for machine learning by introducing certification for high-quality data.

- Generating “avoid-ome” data: Some researchers are actively generating data on problematic proteins and interactions to provide AI with a more complete picture, including what not to pursue.

- Federated Learning: A Solution for Data Silos: To overcome the data sharing and privacy hurdles, a new approach called Federated Learning is gaining prominence. Instead of pooling sensitive data in a central location, the AI model is sent directly to the data where it resides (e.g., inside a hospital’s or pharma company’s firewall). The model trains locally on this private data, and only the anonymized mathematical learnings (updated model weights) are sent back to a central server to be aggregated. This allows multiple organizations to collaborate on building a more powerful, robust AI model without ever exposing or moving their proprietary or sensitive data, ensuring both IP protection and regulatory compliance.
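The federated training loop described in the last item can be sketched with a one-parameter model. This is a minimal illustration that assumes weighted averaging of site weights as the aggregation rule; the site names, datasets, learning rate, and round counts are all hypothetical, and real deployments add secure aggregation, governance, and far larger models.

```python
def local_update(weight, data, lr=0.05, epochs=20):
    """Run gradient descent on one site's private data for the model y = weight * x."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(site_weights, site_sizes):
    """Combine site weights, weighting each site by its number of samples."""
    total = sum(site_sizes)
    return sum(w * n for w, n in zip(site_weights, site_sizes)) / total


# Hypothetical private datasets; both follow y = 3x, and never leave their site.
sites = {
    "hospital_a": [(1.0, 3.0), (2.0, 6.0)],
    "pharma_b": [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
}

global_weight = 0.0
for round_number in range(10):
    # Each site trains locally, starting from the current global model.
    updates = [local_update(global_weight, data) for data in sites.values()]
    sizes = [len(data) for data in sites.values()]
    # Only the trained weights and sample counts reach the server.
    global_weight = federated_average(updates, sizes)

print(round(global_weight, 3))
```

The server in this sketch only ever sees one number per site per round, yet the shared model recovers the slope that both private datasets follow.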

Bridging Biology and AI for Real-World Results

For AI-powered drug discovery to deliver on its promise, we must create a symbiotic, iterative relationship between “wet lab” biology and “dry lab” computation. This is often called an active learning loop:

- Prediction: The AI model analyzes all available data and makes a highly targeted prediction (e.g., ‘Of the one million possible compounds, these 10 are most likely to bind to our target protein’).

- Experimentation: Experimental biologists in the wet lab synthesize and test only those 10 promising compounds, a far more manageable task than traditional high-throughput screening.

- Feedback: The results of these experiments—both the successes and the failures—are digitized and fed back into the AI system.

- Refinement: The AI model retrains on this new data, updating its understanding and becoming smarter and more accurate for the next cycle of predictions.

This closed-loop process ensures that AI models are continuously grounded in biological reality, leading to predictions that are not only statistically powerful but also interpretable and experimentally actionable.
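The four steps of the loop can be simulated in a few lines. Everything here is a stand-in: the `assay` function plays the role of the wet lab, the nearest-neighbor `predict` function is a deliberately simple surrogate model, and the one-dimensional compound library is hypothetical.

```python
def assay(x):
    """Stand-in for a wet-lab experiment; the true activity peaks at x = 0.7."""
    return -(x - 0.7) ** 2


def predict(x, labeled):
    """Toy surrogate model: reuse the measured activity of the nearest tested compound."""
    nearest = min(labeled, key=lambda known: abs(known - x))
    return labeled[nearest]


# Hypothetical library: 101 compounds described by a single descriptor in [0, 1].
pool = [i / 100 for i in range(101)]
labeled = {x: assay(x) for x in (0.0, 0.5, 1.0)}  # initial experiments

for cycle in range(5):
    untested = [x for x in pool if x not in labeled]
    # Prediction: rank untested compounds by the surrogate's score.
    ranked = sorted(untested, key=lambda x: predict(x, labeled), reverse=True)
    # Experimentation: test only the top 3 candidates.
    batch = ranked[:3]
    # Feedback and refinement: new results change the next round of predictions.
    for x in batch:
        labeled[x] = assay(x)

best = max(labeled, key=labeled.get)
print(len(labeled), best)
```

Only 18 of the 101 compounds are ever "tested", and failures are recorded alongside successes, so each cycle steers the next batch away from regions that measured poorly.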

Beyond the Hype: Real-World AI Success Stories and What’s Next for Pharma

The narrative around AI often oscillates between hype and skepticism, but in AI-powered drug discovery, the real-world successes are steadily accumulating, moving beyond theoretical potential to tangible clinical progress.

While no AI-discovered drug has yet completed the full journey to receive approval from the U.S. Food and Drug Administration (U.S. FDA), this milestone is anticipated within the next few years. In the meantime, several AI-driven pharmaceutical companies have successfully accelerated candidates into advanced clinical phases, proving the technology’s value.

A landmark example is Insilico Medicine’s INS018_055, a drug for idiopathic pulmonary fibrosis (IPF). It was the first drug with both an AI-discovered target and an AI-generated molecular design to enter Phase II clinical trials, a monumental step for the field.

Exscientia, a pioneer in the space, has used its platform to advance multiple AI-designed molecules into clinical trials for oncology and psychiatry, consistently demonstrating its ability to shorten preclinical timelines from an average of 4-5 years to less than 12 months.

Recursion Pharmaceuticals leverages a unique, scaled approach, using robotics and machine learning to run millions of biological experiments weekly. This creates massive, proprietary datasets that fuel their AI platform to map biology and identify new drug candidates for rare and common diseases.

In the realm of biologics, AbCellera famously demonstrated the speed of its AI-powered antibody discovery platform by helping to develop bamlanivimab, a treatment for COVID-19, in a fraction of the typical time.

These examples are just the tip of the iceberg. Numerous AI-enabled drug development pipelines are entering clinical phases globally, demonstrating that the technology has reached a new level of maturity.

The integration of AI in Computer-Aided Drug Design (CADD) will continue to revolutionize drug R&D, making future drug discovery efforts faster, more cost-effective, and more successful. Looking ahead, the future of AI-powered drug discovery promises:

- Personalized Medicine at Scale: AI will enable the design of drugs customized to an individual’s genetic makeup. This could evolve into the creation of ‘digital twins’—complex computational models of a patient’s unique biology—allowing researchers to simulate a drug’s efficacy and safety for that specific person before they ever take it.

- AI in Biologics and Advanced Therapies: While much of the focus has been on small molecules, AI is making significant inroads into more complex biologics. This includes designing highly stable and specific antibodies, optimizing the delivery vectors for gene therapies, and even engineering cell therapies like CAR-T for better efficacy and reduced side effects.

- An Evolving Regulatory Landscape: Recognizing the transformative potential of AI, regulatory bodies like the FDA and European Medicines Agency (EMA) are actively developing new frameworks for evaluating and approving drugs developed using AI/ML. They are collaborating with industry leaders to establish standards for model validation, transparency, and reproducibility, paving the way for broader adoption and trust.

- Higher ROI: With increased efficiency and success rates, the pharmaceutical industry could see a return on investment increase of more than 45%.

- Faster Cures: The acceleration of timelines means that treatments for currently intractable diseases could reach patients much sooner.

The journey is long, but the trajectory is clear: AI is not just a tool; it’s a partner in the quest for new medicines, pushing the boundaries of pharmaceutical innovation.

Conclusion: The New Era of Medicine Starts Now—Are You Ready?

We stand on the threshold of a new era in medicine, one where AI-powered drug discovery is no longer a futuristic concept but a present-day reality. The statistics speak for themselves: dramatically faster timelines, higher success rates, and a tangible reduction in the astronomical costs of bringing life-saving drugs to market. The days of relying on serendipity and painstaking manual optimization are fading, replaced by intelligent systems that innovate with unprecedented precision.

However, realizing this transformative potential hinges on addressing the foundational challenge: secure access to and intelligent analysis of vast, diverse, and often siloed biomedical data. This is where we, at Lifebit, come in. We understand that unlocking the full power of AI requires a robust, compliant, and collaborative data ecosystem. Our federated AI platform provides exactly that, enabling secure, real-time access to global biomedical data. With built-in capabilities for harmonization, advanced AI/ML analytics, and federated governance, Lifebit powers large-scale, compliant research across biopharma, governments, and public health agencies.

The future of pharmaceuticals is here, driven by AI. It’s a future of accelerated breakthroughs and personalized treatments. The question is no longer “if” AI will transform drug discovery, but “how quickly” we can embrace its full potential. With the right data infrastructure, we can collectively usher in this new era of medicine.