Data Integrity in Healthcare: Why It Matters More Than Ever

Your $13 Million Blind Spot: How Data Errors Are Silently Killing Patients and Profits

What is data integrity in health care? It’s the unwavering accuracy, completeness, consistency, and reliability of patient information throughout its entire lifecycle. From the moment a nurse records a patient’s vitals to the instant a researcher queries a decade-old dataset, the information must remain truthful and trustworthy. In simple terms, it means your patient data tells the truth, the whole truth, and nothing but the truth—no matter how many times it’s copied, transferred, or analyzed.

This isn’t just an IT buzzword; it’s the bedrock of modern medicine. Poor integrity introduces risk at every step of the patient journey and undermines the foundation of evidence-based practice. To truly grasp its importance, we must dissect its core components:

- Accuracy: The data must correctly represent real-world facts. This goes far beyond a correct diagnosis. It means the dosage of a high-risk medication like insulin is recorded as 10 units, not 100. It means the genetic marker for a targeted cancer therapy is identified as a BRAF V600E mutation, not a similar but ineffective variant. Inaccurate data can be catastrophic; a misplaced decimal point can be fatal, and a misidentified genetic marker can lead to prescribing a multi-thousand-dollar drug that offers no benefit and potential harm. Accuracy is the difference between precision medicine and medical roulette.

- Completeness: All necessary information must be present. A missing allergy to penicillin isn’t a small oversight; it’s a direct trigger for a potential anaphylactic shock. An incomplete medication history, failing to list a patient’s use of blood thinners, can lead to fatal drug interactions during emergency surgery. Beyond clinical data, completeness extends to social determinants of health (SDOH). Is the patient’s housing status recorded? Lack of stable housing can severely impact treatment adherence for chronic conditions like diabetes, but without this data point, a clinician may wrongly assume the patient is non-compliant rather than in need of social support.

- Consistency: Data must remain uniform and semantically coherent across all systems. A patient’s name must be “John B. Smith” everywhere—not “J. Smith” in billing, “John Smith” in the lab system, and “Jonathan Smith” in radiology. These inconsistencies spawn duplicate records, fragmenting a patient’s history and creating a high risk of misidentification. This extends to clinical codes; if one department uses ICD-10 for diagnoses and another uses SNOMED CT without proper mapping, it becomes impossible to get a unified view of a patient’s conditions. This semantic inconsistency corrupts analytics, biases AI models, and can lead to the wrong person receiving the wrong treatment.

- Timeliness: Information must be up-to-date and available when needed. A sepsis alert is useless if the critical lab result that triggers it arrives hours after the patient has gone into septic shock. In the ICU, a 15-minute delay in receiving updated blood gas results can mean the difference between proactive intervention and irreversible organ damage. Timeliness is about closing the gap between data capture and clinical action, ensuring that insights from patient data are delivered at the speed of care.

- Validity: Data must conform to required formats, rules, and standards. On a basic level, this means a date of birth must be in a DD/MM/YYYY format, not free text. But true validity goes deeper. It involves value set validation (e.g., a diagnosis code must be a valid, non-deprecated ICD-10 code) and cross-field validation (e.g., a procedure date cannot be before the patient’s date of birth). Without robust validity checks, systems can accept nonsensical data, leading to silent computational errors that corrupt reports and clinical decision support alerts down the line.
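The three kinds of validity check described above (format, value set, and cross-field) can be sketched in a few lines of Python. This is a minimal illustration, not a production rule engine: the field names, the `validate_record` helper, and the three-code value set are assumptions made for the example, and a real system would load the full, current ICD-10 release.

```python
from datetime import date, datetime

# Hypothetical value set for illustration only; a real system would load
# the current, non-deprecated ICD-10 code list from a terminology service.
VALID_DIAGNOSIS_CODES = {"E11.9", "I10", "J45.909"}


def parse_date(text: str) -> date:
    """Parse a DD/MM/YYYY string; raises ValueError for anything else."""
    return datetime.strptime(text, "%d/%m/%Y").date()


def validate_record(record: dict) -> list[str]:
    """Return a list of validation problems (an empty list means it passed)."""
    problems = []
    parsed = {}

    # Format validation: both dates must be DD/MM/YYYY, not free text.
    for field in ("date_of_birth", "procedure_date"):
        try:
            parsed[field] = parse_date(str(record.get(field, "")))
        except ValueError:
            problems.append(f"{field}: not a valid DD/MM/YYYY date")

    # Value set validation: the code must belong to the allowed set.
    if record.get("diagnosis_code") not in VALID_DIAGNOSIS_CODES:
        problems.append("diagnosis_code: not in the allowed value set")

    # Cross-field validation: a procedure cannot precede the date of birth.
    if len(parsed) == 2 and parsed["procedure_date"] < parsed["date_of_birth"]:
        problems.append("procedure_date: earlier than date_of_birth")

    return problems
```

Returning a list of problems rather than raising on the first failure lets a capture form show every issue at once, which tends to shorten the correction loop.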

What great integrity looks like in practice:

- One patient, one record: a trusted master patient identity that eliminates duplicates and links every encounter, lab result, and imaging study to the right person, creating a longitudinal patient record.

- Standardized vocabularies and models (like OMOP) across sources so terms, units, and codes mean the same thing everywhere—enabling reliable analytics and AI.

- Built-in validation at the point of capture (range checks, mandatory fields, unit normalization) and at every interface to catch errors before they propagate through the data ecosystem.

- End-to-end provenance and lineage so you can trace data from its source to its use, seeing who captured what, when, how it changed, and which analyses or reports used it.

- Near-real-time data availability for safety-critical workflows (e.g., early warning scores, medication reconciliation) where minutes matter, with monitored service-level agreements (SLAs) for data latency.
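As a rough sketch of what validation at the point of capture might look like, the snippet below normalizes a glucose reading to a single unit and rejects values that are missing a known unit or fall outside a plausibility window. The function name and the window bounds are assumptions for illustration; the bounds are not clinical reference ranges.

```python
# Conversion factors to a canonical unit (mg/dL).
# 1 mmol/L of glucose is about 18.016 mg/dL (molar mass of roughly 180.16 g/mol).
GLUCOSE_TO_MG_DL = {"mg/dL": 1.0, "mmol/L": 18.016}

# Hypothetical plausibility window used only to catch entry errors;
# it is NOT a clinical reference range.
PLAUSIBLE_GLUCOSE_MG_DL = (10.0, 2000.0)


def normalize_glucose(value: float, unit: str) -> float:
    """Convert a glucose reading to mg/dL, rejecting unknown units and implausible values."""
    if unit not in GLUCOSE_TO_MG_DL:
        raise ValueError(f"unknown or missing glucose unit: {unit!r}")
    mg_dl = value * GLUCOSE_TO_MG_DL[unit]
    low, high = PLAUSIBLE_GLUCOSE_MG_DL
    if not low <= mg_dl <= high:
        raise ValueError(f"implausible glucose value: {mg_dl:.1f} mg/dL")
    return round(mg_dl, 1)
```

Storing one canonical unit means downstream analytics never have to guess whether a "6" is mmol/L or mg/dL.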

Why It Matters More Than Ever: The Staggering Cost of Bad Data

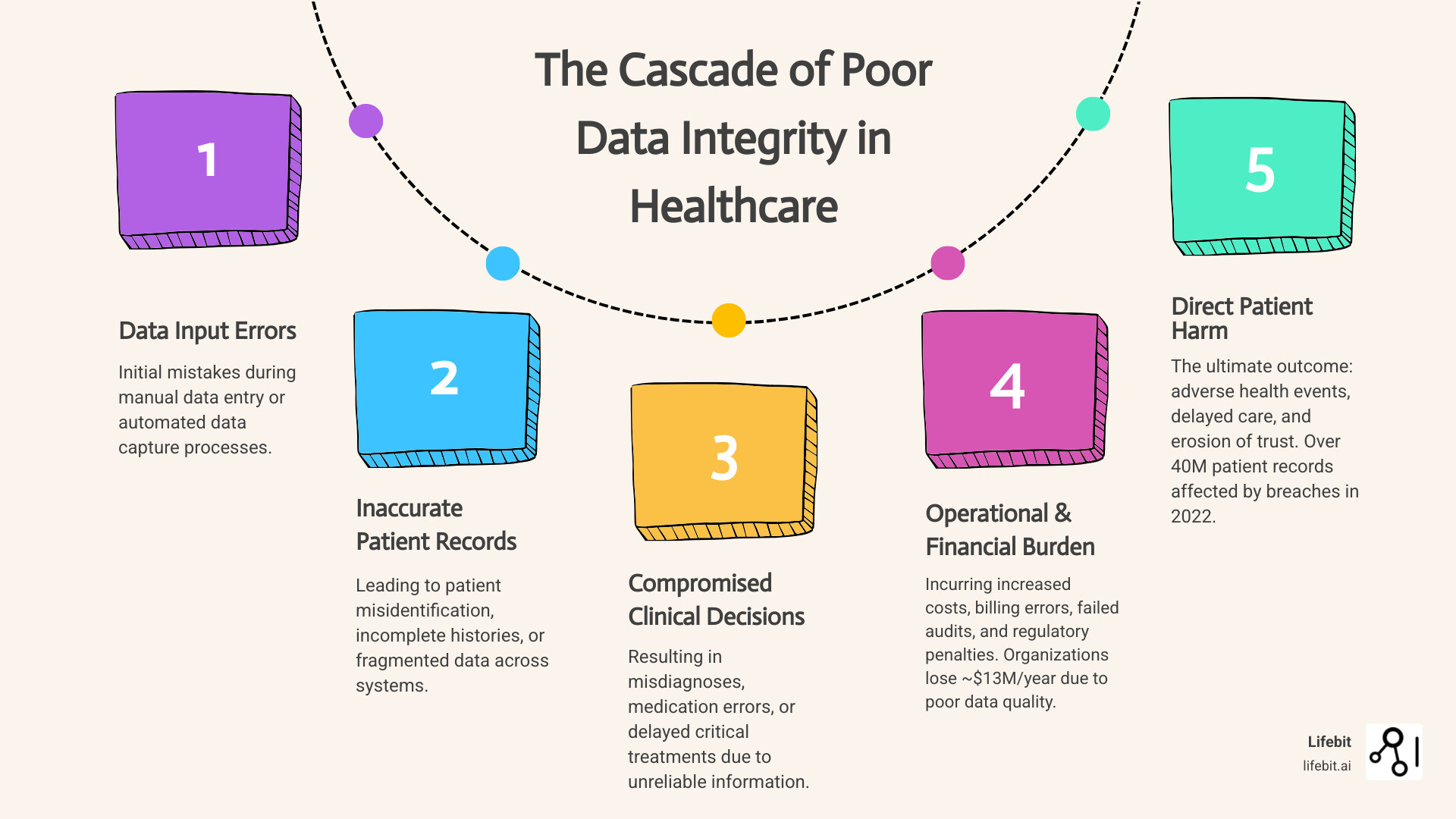

The consequences of failure are staggering. According to research from Gartner, organizations lose an average of nearly $13 million per year due to poor data quality. This isn’t just a number on a spreadsheet; it’s money diverted from hiring more nurses, buying new MRI machines, or funding critical research. This financial drain stems from multiple sources:

- Operational Inefficiency: Staff spend countless hours manually correcting errors, chasing missing information, and reconciling conflicting records.

- Wasted Resources: Inaccurate data leads to repeated lab tests and imaging scans, increasing costs and unnecessarily burdening patients.

- Revenue Cycle Disruption: Billing errors from incorrect patient information or coding lead to denied claims, delayed payments, and significant administrative overhead.

- Compliance and Legal Risks: Poor data integrity can lead to failed audits, regulatory fines (e.g., under HIPAA when a misidentified record exposes one patient’s information to another), and increased exposure to malpractice lawsuits.

On the human side, the cost is infinitely higher. While healthcare data breaches affecting over 40 million patient records in 2022 made headlines, the silent threat of inaccurate data leads to misdiagnoses, medication errors, delayed treatment, and preventable patient deaths every single day.

This problem is escalating as healthcare organizations drown in a data tsunami from an ever-expanding universe of sources:

- Electronic Health Records (EHRs): The core clinical repository, containing a mix of structured fields (diagnoses, medications) and unstructured notes (physician narratives, discharge summaries) that are notoriously difficult to standardize.

- Genomic and Multi-omic Data: Massive files from sequencers (VCF, BAM) that hold the key to precision medicine but require specialized bioinformatics pipelines to ensure accuracy and proper annotation.

- Medical Imaging (PACS): Petabytes of data from MRIs, CT scans, and X-rays, where metadata integrity (correct patient ID, date, and laterality) is as critical as the image itself.

- Wearables and IoT Devices: A continuous stream of real-world data on heart rate, activity levels, and glucose, which offers immense potential but poses challenges in validation and integration with clinical records.

- Claims and Administrative Data: A primary source for health economics and outcomes research, but often lacks the clinical granularity needed for deep analysis, and its accuracy is tied to billing incentives.

This information is often siloed across incompatible platforms, creating a fragmented and dangerously incomplete picture of the patient. A single typo in a medication dosage, a duplicate patient record, or a delayed lab result can cascade into life-threatening consequences.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. Before founding Lifebit, I spent over 15 years in computational biology and precision medicine, where I witnessed how promising clinical trials for life-saving drugs were delayed or derailed by simple data inconsistencies. I saw brilliant researchers unable to access vital datasets because they were locked in incompatible hospital systems. This wasn’t a theoretical problem; it was a roadblock to medical progress. Lifebit was founded to solve this exact challenge: to build the technological bridges that allow secure analysis of sensitive data where it resides, preserving both its integrity and privacy. Our federated data platforms apply these data integrity principles at global scale, enabling pharma, regulatory bodies, and public health institutions to analyze diverse datasets securely without compromising accuracy or compliance.

To make that possible in the real world, Lifebit’s next-generation federated AI platform brings the analysis to the data, not the other way around. With components like the Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer), organizations can:

- Harmonize multi-omic, clinical, and real-world datasets without moving raw patient data, mapping them to common data models for consistent analysis.

- Enforce federated governance and access controls consistently across hybrid, multi-institution environments, ensuring rules are applied at the source.

- Run advanced AI/ML and pharmacovigilance analytics in place, maintaining full integrity, provenance, and reproducibility from source to insight.

Quick self-check for leaders:

- Can you quantify your duplicate patient rate and the time-to-correct across your core systems?

- What percentage of medication orders fail initial validation due to missing units, contraindications, or outdated patient allergy lists?

- How long does it take for critical lab results to surface in frontline care pathways and decision support tools—and is that latency monitored as a key performance indicator?

Data Integrity vs. Data Security: Why Your “Secure” System Is Still a Major Risk

When we ask what is data integrity in health care, the answer boils down to a single, powerful word: trust.

Can a clinician trust that the patient data in the EHR is accurate enough to base a life-or-death decision on? Can a pharmacist trust that the medication list is complete, with no missing drugs or outdated dosages? Can a researcher trust that the data from multiple hospitals is consistent enough to yield valid insights? Can an executive trust that the data is reliable enough to meet regulatory requirements and justify strategic investments?

This trust is the invisible thread holding the entire healthcare ecosystem together. When it frays, the consequences are immediate and severe. Think of a patient record not just as a file, but as a patient’s digital twin—a story containing their demographics, medical history, genomic data, test results, and treatment plans. Every chapter must be precise, because clinicians read this story to make critical decisions. Imagine a single error: a nurse transcribes a blood sugar reading as “180” instead of “108.” The EHR, trusting the data, flags it as dangerously high. An automated insulin dose is recommended. The doctor, under pressure, approves it. The patient, whose blood sugar was actually normal, now faces a severe hypoglycemic event. The story took a wrong turn because one detail was false.

Now imagine a research and AI scenario: three hospitals contribute oncology data to build a predictive model, but one site uses a different coding system and stage definitions for tumors. The model “learns” conflicting truths, underperforms for certain patient groups, and quietly embeds bias into care pathways. The data were secure—but they weren’t trustworthy.

The ALCOA+ Framework: A Regulatory Mandate for Trust

To prevent these failures, data integrity must span the entire data lifecycle. This isn’t just a best practice; it’s a regulatory expectation expressed through the widely recognized ALCOA+ principles. This framework, referenced in data integrity guidance from bodies like the FDA and EMA, provides a practical checklist for trustworthy data.

Data must be:

- Attributable: All data must be traceable to its source. This means knowing who recorded the data (or what system generated it), and exactly when it was recorded. In a legal or clinical investigation, being able to see that Dr. Evans entered a specific diagnosis on a specific date and time is non-negotiable. Attributability requires robust user authentication and detailed, immutable audit logs.

- Legible: Data must be readable and understandable for its entire lifespan, which can be decades. In the digital age, this means more than just avoiding bad handwriting. It means the data must be stored in durable formats that won’t become obsolete, and all associated metadata, context, and definitions must be preserved alongside it. A cryptic code from a retired system is illegible and therefore useless.

- Contemporaneous: The data must be recorded at the time the event or observation occurred. Recording all of a patient’s vitals at the end of a long shift from memory or scribbled notes dramatically increases the risk of error. Contemporaneous recording, enabled by point-of-care devices and mobile EHR access, ensures that the data accurately reflects the moment in time it describes.

- Original: The data must be the source data itself or a certified true copy. This principle focuses on preserving the primary evidence. When data is migrated, transformed, or copied, a clear, verifiable trail must exist to link the new version back to the original record. This prevents unverified or altered data from being mistaken for the source of truth.

- Accurate: The data must be a correct reflection of the real-world event or observation. It should be free from errors, whether from manual entry, instrument malfunction, or processing glitches. Accuracy is achieved through a combination of well-trained staff, calibrated equipment, and automated validation checks (e.g., flagging a body temperature of “9.8” instead of “98.6”).
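One common way to make an audit log tamper-evident, in support of the Attributable principle, is to chain entries together with hashes so that altering any earlier entry invalidates everything after it. The sketch below assumes an in-memory list and hypothetical helper names (`append_entry`, `verify_chain`); it shows the idea rather than a compliant implementation, and a real deployment would also need write-once storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], user: str, action: str, detail: dict) -> dict:
    """Append a who/what/when entry that is linked to the previous entry's hash."""
    entry = {
        "user": user,
        "action": action,
        "detail": detail,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)  # hash is computed before the key is added
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Note that this makes tampering detectable, not impossible; immutability in the strict sense depends on where and how the log is stored.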

The “+” extends these core principles to address the complexities of modern data systems:

- Complete: Data should not be missing critical elements. This includes not just the data itself, but the metadata that gives it context. A complete record includes the audit trail (Attributable), is understandable (Legible), and captures the full story. An incomplete record can be as misleading as an inaccurate one.

- Consistent: The data must be recorded in a consistent manner throughout the process. This includes chronological consistency (e.g., a discharge date cannot be before an admission date) and consistency in terminology and formatting across the entire data lifecycle. Consistent data is predictable and reliable for analysis.

- Enduring: Data must be stored in a way that ensures it lasts for its required lifespan. This involves durable storage media, defined archiving policies, and periodic checks to ensure data hasn’t been corrupted or lost over time, which is critical for longitudinal research and legal requirements.

- Available: The data must be accessible whenever it is needed. Data that is accurate but locked in an inaccessible silo or on a system that is down is of no use. Availability requires robust system infrastructure, disaster recovery plans, and appropriate access controls to ensure clinicians and researchers can get the data they need, when they need it.
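The "periodic checks" mentioned under Enduring are often implemented as fixity checks: store a checksum when a file is archived, then recompute it on a schedule. The following is a minimal sketch under the assumption that the archive keeps a manifest mapping relative file names to SHA-256 digests; the manifest layout and function names are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large archives do not load into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_corrupted(manifest: dict[str, str], root: Path) -> list[str]:
    """Return files that are missing or whose checksum differs from the manifest."""
    failures = []
    for relative_name, expected in manifest.items():
        target = root / relative_name
        if not target.is_file() or sha256_of(target) != expected:
            failures.append(relative_name)
    return failures
```

Running a check like this on a schedule turns silent corruption into an alert while a clean backup copy is still likely to exist.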

Operationalizing Data Integrity: From Principles to Practice

Adhering to ALCOA+ requires a deliberate strategy that combines technology, governance, and process.

- Establish Robust Master Data Management (MDM): The cornerstone is a Master Patient Index (MPI) that uses a combination of deterministic (matching on exact fields like SSN) and probabilistic (using algorithms to score the likelihood of a match based on name, DOB, address) matching to create a single source of truth for patient identity. This is the only way to reliably link records and prevent dangerous fragmentation of a patient’s history.

- Enforce Immutable Audit Trails and Data Provenance: Every creation, modification, or deletion of data must be logged in a secure, unchangeable audit trail that captures the ‘who, what, when, and why’ of the change. This is a core requirement for regulations like the FDA’s 21 CFR Part 11 and is essential for data forensics and establishing trust.

- Implement Strong Data Governance and Standardization: A formal data governance council, composed of clinical, IT, and business leaders, must be established. This body is responsible for defining data policies, assigning data ownership, and mandating the use of controlled vocabularies and industry standards like HL7 FHIR, LOINC, and SNOMED CT to ensure data is consistent and interoperable.

- Automate Validation and Quality Monitoring: Data integrity cannot rely on manual checks alone. Automated rules should be built into data capture forms and system interfaces to validate data at the point of entry. Furthermore, continuous data quality monitoring dashboards should be used to track key metrics (e.g., duplicate record rate, missing data fields) and generate alerts when anomalies are detected.
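To make the deterministic-plus-probabilistic matching idea concrete, here is a simplified sketch. It treats an exact SSN match as decisive and otherwise falls back to a weighted string-similarity score across name, date of birth, and address. The weights, the threshold, and the field names are assumptions for illustration, and the weighted score is a stand-in for a properly calibrated probabilistic model rather than what any particular MPI product does.

```python
from difflib import SequenceMatcher

# Hypothetical weights and threshold; a real MPI would tune these
# against labeled match/non-match pairs.
WEIGHTS = {"name": 0.5, "dob": 0.3, "address": 0.2}
MATCH_THRESHOLD = 0.85


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in [0, 1]; missing values never count as a match."""
    a, b = a.lower().strip(), b.lower().strip()
    if not a or not b:
        return 0.0
    return SequenceMatcher(None, a, b).ratio()


def match_score(rec_a: dict, rec_b: dict) -> float:
    # Deterministic rule: identical, non-empty SSNs are treated as a match.
    if rec_a.get("ssn") and rec_a.get("ssn") == rec_b.get("ssn"):
        return 1.0
    # Probabilistic-style rule: weighted similarity over demographic fields.
    return sum(
        weight * similarity(rec_a.get(field, ""), rec_b.get(field, ""))
        for field, weight in WEIGHTS.items()
    )


def is_same_patient(rec_a: dict, rec_b: dict) -> bool:
    return match_score(rec_a, rec_b) >= MATCH_THRESHOLD
```

In practice, scores just below the threshold are usually routed to a human data steward for review rather than being auto-merged or auto-rejected.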

The Critical Distinction: Data Integrity vs. Data Security

This brings us to a crucial distinction: data integrity is not the same as data security. While often confused, they solve two different, though related, problems:

| Feature | Data Integrity | Data Security |
|---|---|---|
| Primary Focus | Ensuring data is accurate and trustworthy | Protecting data from unauthorized access |
| Key Question | “Is this information correct and complete?” | “Who can see or modify this information?” |
| Main Threat | Human error, system failures, data corruption | Hackers, breaches, unauthorized users |
| Example Problem | A patient’s blood type is recorded incorrectly | An unauthorized person accesses patient records |

Think of it this way: Data security builds the fortress walls; data integrity ensures the intelligence reports inside those walls are correct. You could have an impenetrable fortress (perfect security), but if you’re protecting false intelligence (poor integrity), your decisions will be catastrophic. The two are deeply intertwined. A security breach (e.g., a ransomware attack) can destroy data integrity by making data unavailable or corrupting it. Conversely, a user with legitimate access (no security breach) could accidentally or maliciously alter data, causing an integrity failure.

Both are non-negotiable, but organizations often over-index on security controls such as firewalls while neglecting the silent, corrosive threat of poor integrity. The stakes couldn’t be higher. You cannot achieve the promise of precision medicine, AI-driven diagnostics, or value-based care on a foundation of flawed data. This is why data integrity in health care has become a top priority for any organization serious about patient safety, operational excellence, and future innovation.

Fast-Start Actions to Close the Integrity Gap

- Baseline and Scorecard: Baseline data quality on your top five patient safety data elements (e.g., identifiers, allergies, medications, critical labs, problem lists) and publish internal scorecards to create visibility and accountability.

- Set and Monitor SLAs: Establish and monitor Service Level Agreements (SLAs) for data latency and error correction times in safety-critical workflows. Treat data quality as a clinical KPI.

- Target Duplicate Records: Launch a targeted duplicate-record reduction initiative with clear ownership, executive sponsorship, and weekly burn-down metrics.

- Adopt a Federated Approach: For cross-institution analytics, adopt a federated, compute-to-data pattern so sensitive data stays in place—preserving security and provenance—while insights flow.

- Empower a Governance Council: Stand up or empower a data governance council with the authority to define data standards, assign data stewards, and arbitrate data-related decisions.
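For the SLA action above, a latency check can start out very simply. The sketch below assumes each lab result carries two timestamps (when it was resulted and when it became visible to clinicians) and compares the 95th-percentile latency to a target. The 15-minute target and the function name are assumptions for illustration, not recommended values.

```python
from datetime import datetime, timedelta
from statistics import quantiles

# Hypothetical SLA target for safety-critical lab results.
SLA_P95 = timedelta(minutes=15)


def latency_report(events: list[tuple[datetime, datetime]]) -> dict:
    """Summarize latency for (resulted_at, visible_at) pairs; needs at least two events."""
    latencies = [(visible - resulted).total_seconds() for resulted, visible in events]
    # With n=20 the last cut point is the 95th percentile.
    p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    return {
        "count": len(latencies),
        "p95_seconds": p95,
        "sla_met": p95 <= SLA_P95.total_seconds(),
    }
```

Tracking a high percentile rather than the average keeps a handful of badly delayed results from hiding behind many fast ones.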

Learn more about how secure clinical data practices complement data integrity efforts to protect both the accuracy and confidentiality of patient information.