Why Federated Learning is the Secret Sauce for Private Medical Data

Stop Data Silos: Why Healthcare’s Most Valuable Data Never Leaves Home

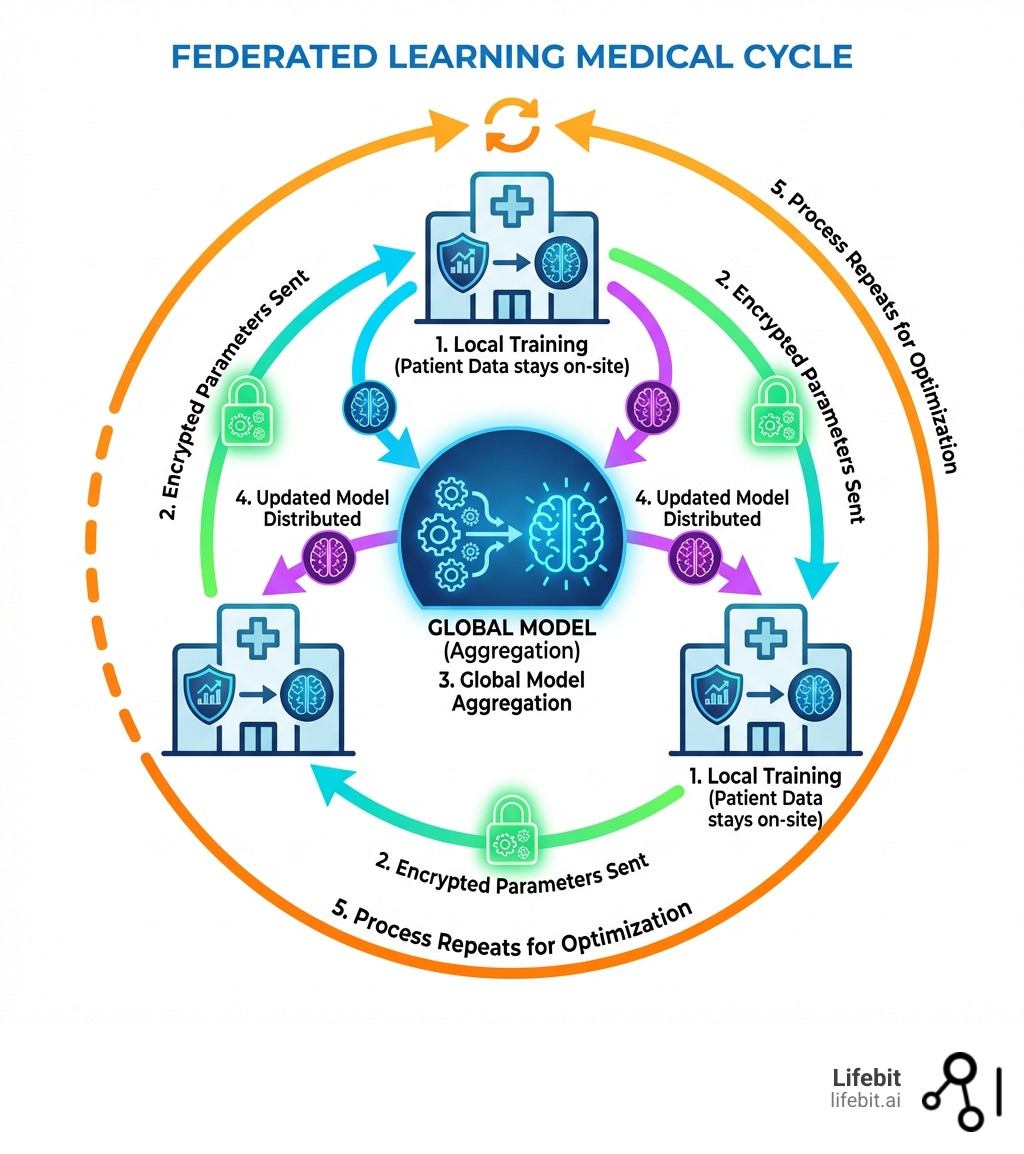

Federated learning is transforming how hospitals, pharmaceutical companies, and research institutions collaborate on AI models without sharing sensitive patient data. Instead of centralizing records in one location, federated learning (FL) trains machine learning models directly where the data lives, across multiple sites, and then aggregates only the learned model updates, not the raw information. This approach addresses healthcare’s biggest paradox: how to build powerful AI while keeping patient privacy intact.

Key benefits of federated learning in medicine:

- Privacy-first collaboration – Raw patient data never leaves its original hospital or institution

- Regulatory compliance – Supports GDPR, HIPAA, and other data protection requirements by design

- Better AI models – Trains on diverse, real-world datasets from multiple sites without centralization

- Faster research – Reduces lengthy data-sharing negotiations and legal bottlenecks

- Reduced costs – No need to move, store, or duplicate massive healthcare datasets

Consider the MELLODDY consortium: 10 pharmaceutical companies pooled insights from over 10 million molecules and 1 billion assays to accelerate drug discovery, without exposing their proprietary compound libraries. During COVID-19, two French hospitals built a severity prediction model in just 2 months using federated learning, analyzing CT scans and clinical data to guide treatment decisions while respecting patient privacy. These aren’t theoretical experiments. They’re production systems saving time, money, and lives.

As Maria Chatzou Dunford, CEO of Lifebit and a pioneer in genomics and federated data platforms, I’ve spent over 15 years helping organizations unlock the power of distributed biomedical data. Federated learning systems are central to our mission: enabling secure, compliant AI that works across borders and institutions without compromising patient trust.


Why Federated Learning Is the Future of Privacy in Medical Research

In the old days of medical research, if you wanted to train an AI to spot a rare lung disease, you had to gather thousands of X-rays into one giant central database. We call this “Centralized Data Sharing” (CDS). It sounds simple, but in practice, it’s a nightmare. Hospitals are (rightfully) protective of patient privacy, and moving that data across borders or even between cities triggers a mountain of paperwork and security risks. Furthermore, the sheer volume of high-resolution medical imaging data makes the bandwidth costs of centralization prohibitive for many smaller institutions.

Federated learning frameworks flip the script. Instead of bringing the data to the model, we bring the model to the data.

Think of it like a group of chefs writing a secret cookbook. Instead of sharing their secret family recipes in one kitchen, each chef stays in their own restaurant. They practice a technique, like “how to make the fluffiest souffle,” and then they only share their notes on what worked. The secret recipes never leave their respective kitchens, but the collective cookbook becomes world-class. In technical terms, the “notes” are the gradients or weights of the neural network, which are mathematically aggregated to improve the global model without ever exposing the underlying patient records.
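To make the “notes” idea concrete, here is a minimal Python sketch of a federated training loop. It assumes a toy one-parameter linear model and three hypothetical sites: each site runs a few steps of gradient descent on its own data and returns only its updated weight and sample count, and the server combines them with a sample-weighted average (the FedAvg rule). Real frameworks apply the same pattern to millions of neural-network weights.

```python
"""Toy federated averaging: each hypothetical site fits y = w * x locally
and shares only its weight and sample count, never its raw records."""

def local_train(w, data, lr=0.01, epochs=50):
    """Plain gradient descent on mean squared error for y = w * x,
    starting from the current global weight. Runs entirely on-site."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(global_w, sites):
    """Broadcast global_w, train at every site, then average the returned
    weights, weighted by each site's number of samples."""
    total = sum(len(d) for d in sites)
    updates = [(local_train(global_w, d), len(d)) for d in sites]
    return sum(w * n for w, n in updates) / total

# Hypothetical data held by three sites; all of it follows y = 3x.
sites = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
    [(2.5, 7.5)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, sites)
print(round(w, 3))
```

After a few rounds the shared weight approaches the slope present in every site's data, even though the server never saw a single data point.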

This matters because of “Eroom’s Law.” You might have heard of Moore’s Law (computers getting faster), but Eroom’s Law is the opposite: drug discovery is becoming slower and more expensive over time despite better technology. The inflation-adjusted cost of developing a new drug doubles approximately every nine years. Federated learning helps break down the data silos that slow us down. In a multi-institution brain tumor segmentation study, for example, federated models reached 99% of the quality of a model trained on centrally pooled data. We get most of the brainpower of a global dataset with far fewer privacy headaches. This efficiency is critical for rare diseases, where no single hospital has enough cases to train a robust model, but a network of 50 hospitals might have thousands.

How Federated Learning Compares to Traditional Methods

| Feature | Centralized Data Sharing (CDS) | Federated Learning (FL) |
|---|---|---|
| Data Location | Moved to a central server | Stays at the source (hospital/lab) |
| Privacy Risk | High (data can be intercepted or leaked) | Low (only model updates are shared) |
| Regulatory Burden | Massive (HIPAA/GDPR transfers) | Minimal (data stays in situ) |
| Model Quality | Gold standard | Matches CDS within ~1% |
| Collaboration | Difficult between competitors | Enables “Coopetition” |
| Network Usage | High (transferring raw datasets) | Low (transferring model weights) |

Advanced Security in Federated Learning Medical Frameworks

We don’t just stop at “not moving the data.” To harden these systems further, we add privacy-enhancing “spices” so that even the model updates don’t accidentally reveal patient secrets. This is crucial because of “inference attacks,” in which a sophisticated attacker tries to reverse-engineer the original data from the model’s updates.

- Differential Privacy: This adds a bit of mathematical “noise” to the model updates. It’s like blurring a photo just enough so you can tell it’s a person, but you can’t tell who it is. This prevents “re-identification attacks” by ensuring that the presence or absence of a single patient’s data doesn’t significantly change the model’s output.

- Secure Aggregation: This ensures the central server only sees the sum of all updates, never the individual contribution from a single hospital. Using multi-party computation (MPC), the server can calculate the average of 100 hospitals’ updates without ever knowing what Hospital A or Hospital B specifically sent.

- Homomorphic Encryption: A fancy way of saying we can perform math on data while it’s still encrypted. This allows the central server to aggregate model weights without ever decrypting them, providing an extra layer of mathematical certainty.
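Differential privacy and secure aggregation can be sketched together. The Python below is a toy illustration, not a production protocol: each hypothetical hospital clips its update and adds Gaussian noise (the core step of differentially private training), then adds pairwise random masks that cancel out when the server sums everything. Real secure aggregation derives each mask from a pairwise key agreement, works with integers modulo a large number, and handles sites that drop out. The noise level here is arbitrary and is not calibrated to any particular privacy budget.

```python
import random

def clip_and_noise(update, clip_norm, sigma, rng):
    """Differential-privacy-style step: scale the update so its L2 norm is at
    most clip_norm, then add Gaussian noise with std sigma * clip_norm."""
    norm = sum(v * v for v in update) ** 0.5
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale + rng.gauss(0.0, sigma * clip_norm) for v in update]

def pairwise_masks(n_clients, dim, seed):
    """For each pair (i, j) with i < j, draw one random mask: client i adds it
    and client j subtracts it, so every mask cancels in the sum. A single
    seeded RNG stands in for the pairwise shared secrets a real protocol uses."""
    rng = random.Random(seed)
    masks = [[0.0] * dim for _ in range(n_clients)]
    for i in range(n_clients):
        for j in range(i + 1, n_clients):
            m = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] += m[k]
                masks[j][k] -= m[k]
    return masks

# Hypothetical updates from three hospitals.
raw = [[0.2, -0.1, 0.4], [0.1, 0.3, -0.2], [-0.3, 0.2, 0.1]]
rng = random.Random(0)
private = [clip_and_noise(u, clip_norm=1.0, sigma=0.01, rng=rng) for u in raw]
masks = pairwise_masks(len(private), dim=3, seed=42)
masked = [[v + m for v, m in zip(u, mk)] for u, mk in zip(private, masks)]

# The server sees only `masked`. Each row looks like random noise, but the
# per-coordinate sums match the sums of the privatized updates.
aggregate = [sum(col) for col in zip(*masked)]
expected = [sum(col) for col in zip(*private)]
```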

At Lifebit, we take this a step further with our Lifebit Trusted Research Environment (TRE). We provide a secure “airlock” where researchers can work with data without ever being able to download or “leak” the raw files. It’s about data minimization—using the absolute least amount of information necessary to get the job done. This environment supports the entire lifecycle of a federated project, from initial data discovery to the final deployment of the trained AI model.

Solve the Privacy Paradox: How FL Secures Global Healthcare Data

The “Privacy Paradox” is the idea that we want better medicine (which requires data) but we also want total privacy (which hides data). Federated learning medical solutions solve this by ensuring that individual-level data never leaves its institution of origin. This creates a “win-win” scenario where researchers get the scale they need while patients maintain the confidentiality they deserve.

This is a game-changer for compliance. In Europe, the GDPR (General Data Protection Regulation) is incredibly strict about how health data is handled, particularly regarding “data sovereignty” and the right to be forgotten. In the US, HIPAA sets the bar for protected health information (PHI). Gartner predicted that by 2022, 50% of the world’s population would be covered by such laws. Traditional data sharing is becoming legally difficult, and in some cases impossible, because a data breach can carry multi-million dollar fines and serious reputational damage.

Privacy-first health research with federated learning isn’t just a buzzword; it’s a technical necessity. When we use federated learning, we aren’t “sharing data”; we are “sharing insights.” This satisfies the legal requirement for data residency—keeping data within its home country or even its home building. This is particularly important for international consortia where data cannot legally cross borders, such as between the EU and China or the US.

Real-World Applications of Federated Learning Medical Systems

We aren’t just talking about the future; this is happening right now across the five continents we serve. The scale of these projects demonstrates that FL is ready for prime time.

- The MELLODDY Project: This is the ultimate example of “coopetition.” Ten giant pharma companies—usually fierce rivals including Amgen, Bayer, and Novartis—used the MELLODDY project to train drug discovery models on their combined libraries of 10 million molecules. They all got better at finding drugs, but none of them saw each other’s secret chemical structures. This project proved that FL could protect intellectual property as effectively as it protects patient privacy.

- The EXAM Study: One of the largest federated learning studies to date, the EXAM (electronic medical record (EMR) chest X-ray AI model) study involved 20 institutes across the globe. They collaborated to build an AI model that predicts the future oxygen requirements of COVID-19 patients from vital signs, laboratory data, and chest X-rays. The model, EXAM, builds on an earlier model called CORISK and was trained on data from North and South America, Europe, and Asia. It achieved a 16% improvement in average AUC across the participating sites and a 38% average increase in generalizability compared with models trained at a single site on that site’s data.

- Glioblastoma Detection: Researchers trained an FL model across 71 sites on 6 continents to delineate brain tumor boundaries in MRI scans. The result? A 33% improvement in delineating the surgically targetable tumor compared with a model trained only on publicly available data. This is vital because glioblastoma is a rare and aggressive cancer; no single hospital has enough data to account for all the variations in tumor shape and location.

- Prostate Cancer Segmentation: A study across three institutions used FL to segment prostate cancer in MRIs, achieving a Dice coefficient (a measure of overlap between the predicted and reference segmentations, where 1.0 means perfect overlap) of 0.889 and outperforming models trained locally. This demonstrates that FL can handle complex 3D imaging tasks as well as simple tabular data.

- Rare Disease Diagnostics: In the field of genomics, FL is being used to identify rare genetic variants. By connecting genomic databases across different countries, researchers can find the “needle in the haystack”—the second or third patient in the world with a specific mutation—without violating national data protection laws.
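The Dice coefficient used to score segmentation models like the prostate study is simple to compute: twice the overlap divided by the total size of both masks. A minimal Python sketch, assuming binary masks stored as flat lists of 0/1 voxels (a 3D MRI mask can be flattened first):

```python
def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks given as flat
    sequences of 0/1 voxels. Returns 1.0 when both masks are empty."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    return 1.0 if total == 0 else 2.0 * intersection / total

# Toy 1-D "segmentation": 4 voxels predicted, 4 in the reference, 3 overlap.
pred  = [0, 1, 1, 1, 1, 0, 0, 0]
truth = [0, 0, 1, 1, 1, 1, 0, 0]
print(dice_coefficient(pred, truth))  # 0.75
```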

Horizontal vs. Vertical: Choosing the Right FL Framework

Not all medical data is organized the same way, which is why we use different types of federated learning architectures. Choosing the right one depends on how the data is partitioned across institutions.

- Horizontal Federated Learning (HFL): This is the most common. Imagine two hospitals that both collect the same types of data (like blood pressure, age, and BMI) but for different patients. We use HFL to combine these “horizontal” slices into a bigger, more diverse dataset. This is ideal for general disease prediction models where the feature set is standardized across the medical community.

- Vertical Federated Learning (VFL): This is for when different organizations have different types of data on the same patients. For example, a hospital has a patient’s medical records, but a local pharmacy has their medication history, and an insurance company has their long-term health outcomes. VFL lets us link these features together using “Private Set Intersection” (PSI) to match patients without either party seeing the other’s raw data. This provides a 360-degree view of patient health that was previously impossible to achieve.

- Federated Transfer Learning (FTL): This is the “advanced mode.” It’s used when the datasets don’t overlap much in patients or features. It uses a pre-trained model (perhaps trained on a large, public dataset) and “transfers” that knowledge to a new, smaller, and private dataset. This is perfect for rare diseases where data is scarce and you need to leverage existing medical knowledge to jumpstart the learning process.
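Private Set Intersection can be illustrated with a toy Diffie-Hellman-style protocol, one classic way to build PSI. In this Python sketch (assumptions: hypothetical patient IDs, a Mersenne-prime modulus chosen for convenience, and SHA-256 reduced modulo that prime as a stand-in for a proper hash-to-group function), each party blinds hashed identifiers with its own secret exponent. Because exponentiation commutes, an ID held by both parties ends up with the same doubly blinded token, while raw IDs never cross the wire. It is not hardened against malicious participants and should not be used as-is.

```python
import hashlib
import math
import secrets

# Toy parameter: a Mersenne prime modulus, not a vetted group choice.
P = 2**127 - 1

def hash_to_int(identifier):
    """Map an identifier to a nonzero integer mod P via SHA-256."""
    digest = hashlib.sha256(identifier.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1

def new_key():
    """Secret exponent coprime to P - 1, so x -> x**k mod P is one-to-one."""
    while True:
        k = secrets.randbelow(P - 3) + 2
        if math.gcd(k, P - 1) == 1:
            return k

def blind(values, key):
    """Raise each value to the secret key, modulo P."""
    return [pow(v, key, P) for v in values]

# Hypothetical patient identifiers held by two organizations.
hospital_ids = ["pt-001", "pt-002", "pt-003", "pt-004"]
pharmacy_ids = ["pt-003", "pt-004", "pt-005"]
a, b = new_key(), new_key()  # hospital's key, pharmacy's key

# Round 1: each party blinds its own hashed IDs and sends them across.
from_hospital = blind([hash_to_int(x) for x in hospital_ids], a)
from_pharmacy = blind([hash_to_int(x) for x in pharmacy_ids], b)

# Round 2: the pharmacy re-blinds the hospital's list (keeping its order)
# and returns it; the hospital re-blinds the pharmacy's list.
# Since (h**a)**b == (h**b)**a mod P, shared IDs yield identical tokens.
hospital_tokens = blind(from_hospital, b)
pharmacy_tokens = set(blind(from_pharmacy, a))

# The hospital learns which of its own patients the pharmacy also holds.
shared = [pid for pid, t in zip(hospital_ids, hospital_tokens) if t in pharmacy_tokens]
print(shared)  # ['pt-003', 'pt-004']
```

Once the shared patients are identified, each party can train its part of a vertical model on its own features for those patients only.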

Challenges with “Messy” Data (Non-IID)

In a perfect world, every hospital would collect data exactly the same way. In the real world, Hospital A might use one type of MRI machine, while Hospital B uses another. Hospital C might serve an elderly population, while Hospital D serves a pediatric one. This creates “statistical heterogeneity,” also called non-IID data: data that is not independent and identically distributed across sites.

If we aren’t careful, the AI might learn the “bias” of the machine or the specific demographic instead of the “signal” of the disease. This is known as “client drift,” where the local models move too far away from the global goal. We solve this by using smart algorithms like:

- FedAvg (Federated Averaging): The baseline algorithm that weights updates based on the number of samples at each site.

- FedProx: An optimization that adds a “proximal term,” (μ/2)·‖w - w_global‖², to each site’s local objective. This penalizes local models for drifting too far from the global model, making training more stable when data is very diverse across sites.

- SCAFFOLD: An algorithm that uses “control variates” to estimate and correct for the drift in local updates, which can help the model converge faster even with messy data.
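The difference between FedAvg and FedProx fits in a few lines. This Python sketch assumes two hypothetical sites whose data follow different slopes (a deliberately non-IID setup) and a one-parameter model. With `mu=0` the local update is plain FedAvg-style gradient descent; with `mu > 0` the gradient of FedProx’s proximal term, `mu * (w - w_global)`, pulls each local model back toward the global one. SCAFFOLD’s control variates are not shown.

```python
def local_update(w_global, data, mu=0.0, lr=0.05, epochs=100):
    """Local gradient descent on MSE for y = w * x. With mu > 0 this minimizes
    the FedProx local objective loss(w) + (mu / 2) * (w - w_global)**2;
    with mu = 0 it reduces to a plain FedAvg local step."""
    w = w_global
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        grad += mu * (w - w_global)  # gradient of the proximal term
        w -= lr * grad
    return w

def fedavg(updates):
    """Aggregate (weight, n_samples) pairs by sample-weighted averaging."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total

# Hypothetical non-IID sites: the underlying slope differs between them.
site_a = [(1.0, 2.0), (2.0, 4.0)]    # slope 2
site_b = [(1.0, 5.0), (3.0, 15.0)]   # slope 5
w_global = 3.0
sizes = (len(site_a), len(site_b))

plain = [local_update(w_global, d) for d in (site_a, site_b)]
prox = [local_update(w_global, d, mu=1.0) for d in (site_a, site_b)]

new_global_plain = fedavg(list(zip(plain, sizes)))
new_global_prox = fedavg(list(zip(prox, sizes)))
```

Without the proximal term the two local models settle at 2.0 and 5.0; with `mu=1.0` they stop near 2.17 and 4.82, so each drifts less from the shared model. A larger `mu` buys stability at the cost of slower adaptation to each site’s data.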

Integrating FL with Blockchain and Edge Computing

To make federated learning systems even more robust, we can pair them with other “cool” tech to solve issues of trust and speed:

- Blockchain: We use decentralized ledgers to create a permanent, unchangeable audit trail. Every time a model is updated, it’s recorded on the blockchain. This ensures total traceability—we know exactly which site contributed what, which is vital for regulatory audits and for detecting “malicious” participants who might try to poison the model.

- Edge Computing: Sometimes, we need results fast. Edge computing means running the AI directly on the medical device (like a wearable heart monitor or a bedside ventilator). This reduces “latency” (lag) and allows for real-time diagnostics. In a federated setup, the device can learn from the patient’s data locally and only send small updates back to the hospital’s server.

- Personalized Federated Learning (PFL): This is a newer trend where the goal isn’t just one global model, but a global model that can be slightly “tuned” for each hospital. This allows a hospital to benefit from global knowledge while still having a model that performs well for its specific patient demographic.
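The audit-trail property of a blockchain comes largely from hash chaining: each record commits to the hash of the record before it, so rewriting history breaks every later link. The Python below is a single-machine sketch of that idea only, with hypothetical site names and record fields; a real blockchain also replicates the ledger across parties and runs a consensus mechanism, neither of which is shown.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def entry_hash(entry):
    """Deterministic SHA-256 over the record's fields (sorted keys)."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append(log, site, round_no, update_digest):
    """Append a record that commits to the previous record's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"site": site, "round": round_no, "update": update_digest, "prev": prev}
    entry["hash"] = entry_hash(entry)  # hash computed before the key is added
    log.append(entry)

def verify(log):
    """Recompute every hash and link; any edited record breaks the chain."""
    prev = GENESIS
    for e in log:
        body = {k: v for k, v in e.items() if k != "hash"}
        if e["prev"] != prev or entry_hash(body) != e["hash"]:
            return False
        prev = e["hash"]
    return True

log = []
append(log, "hospital-A", 1, hashlib.sha256(b"weights-A-round-1").hexdigest())
append(log, "hospital-B", 1, hashlib.sha256(b"weights-B-round-1").hexdigest())
print(verify(log))  # True
log[0]["site"] = "hospital-X"  # tamper with history
print(verify(log))  # False
```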

Overcoming Implementation Problems in Clinical Settings

If federated learning is so great, why isn’t every hospital using it yet? Because the “plumbing” is hard. Implementing FL requires a convergence of expertise in medicine, data science, and DevOps.

Communication Bottlenecks are a real issue. Moving large model updates (which can be hundreds of megabytes) back and forth across the internet thousands of times requires serious bandwidth. In many parts of the world, hospital internet connections are not optimized for this. We address this with “Gradient Compression” and “Sparsification,” which can reduce the size of updates by up to 90% with little or no loss in accuracy.
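Top-k sparsification is one common way to shrink updates. In this Python sketch (assuming a hypothetical 10-entry update), each site sends only the k largest-magnitude entries as (index, value) pairs. The error-feedback helper keeps the dropped remainder locally and adds it to the next round’s update, a widely used trick to limit accuracy loss; the actual bandwidth savings depend on k and the model.

```python
def top_k_sparsify(update, k):
    """Keep only the k largest-magnitude entries, as (index, value) pairs
    sorted by index."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return sorted((i, update[i]) for i in ranked[:max(k, 0)])

def densify(pairs, dim):
    """Server side: rebuild a dense vector, treating missing entries as zero."""
    dense = [0.0] * dim
    for i, v in pairs:
        dense[i] = v
    return dense

def sparsify_with_residual(update, residual, k):
    """Error feedback: add what was dropped last round before choosing the
    top-k entries, and remember what is dropped this round."""
    corrected = [u + r for u, r in zip(update, residual)]
    pairs = top_k_sparsify(corrected, k)
    sent = densify(pairs, len(corrected))
    new_residual = [c - s for c, s in zip(corrected, sent)]
    return pairs, new_residual

update = [0.01, -0.9, 0.05, 0.4, -0.02, 0.0, 0.3, -0.01, 0.02, 0.0]
pairs = top_k_sparsify(update, k=3)
print(pairs)  # [(1, -0.9), (3, 0.4), (6, 0.3)]
```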

Furthermore, many hospitals don’t have high-end GPUs (powerful computer chips) sitting around to run complex AI training. This is where “Asynchronous Federated Learning” comes in, allowing faster hospitals to keep training while slower ones catch up, ensuring the whole project doesn’t move at the speed of the slowest computer.
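A common way to make asynchronous training safe is to discount stale updates. The Python sketch below, in the spirit of the FedAsync approach, mixes each arriving client model into the global model immediately, with a weight that shrinks the further the client’s starting version lags behind the current one. The mixing rate `alpha` and decay exponent `a` are illustrative assumptions, not tuned values.

```python
def staleness_weight(alpha, staleness, a=0.5):
    """Polynomial decay: an update computed on an older global model counts
    less. staleness = current version - version the client started from."""
    return alpha * (staleness + 1) ** (-a)

def apply_async_update(global_w, version, client_w, client_version, alpha=0.6):
    """Mix one client's model into the global model as soon as it arrives,
    without waiting for the other sites."""
    beta = staleness_weight(alpha, version - client_version)
    new_w = [(1 - beta) * g + beta * c for g, c in zip(global_w, client_w)]
    return new_w, version + 1

w, v = [0.0, 0.0], 0
# A fresh update (trained on version 0, arriving at version 0): beta = 0.6.
w, v = apply_async_update(w, v, [1.0, 1.0], client_version=0)
# A slower site also trained on version 0, but the global model is now at
# version 1, so its update is down-weighted: beta = 0.6 / sqrt(2).
w, v = apply_async_update(w, v, [1.0, 1.0], client_version=0)
```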

Then there’s the “Language Barrier.” One hospital might record “High Blood Pressure” while another records “Hypertension.” One might use Celsius, another Fahrenheit. Without Data Harmonization, the AI gets confused. This is why we champion global standards like:

- OMOP Common Data Model: A standard way of organizing medical data so everyone speaks the same “language.” It allows us to write one piece of code that runs on data in London, Tokyo, and New York.

- HL7 FHIR: The gold standard for exchanging electronic health records. By building FL connectors for FHIR, we can make the data “plug-and-play.”
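At its simplest, harmonization means mapping local terms and units onto a shared vocabulary before any training happens. The Python below is a toy sketch: the term map and the `HYPERTENSION` label are hypothetical placeholders, whereas a real OMOP pipeline would map source codes to standard concept IDs from the OHDSI vocabularies.

```python
# Hypothetical local-to-standard term map (placeholder labels, not OMOP IDs).
TERM_MAP = {
    "high blood pressure": "HYPERTENSION",
    "hypertension": "HYPERTENSION",
    "htn": "HYPERTENSION",
}

def to_celsius(value, unit):
    """Convert a temperature reading to degrees Celsius."""
    unit = unit.strip().upper()
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32) * 5 / 9
    raise ValueError(f"unknown temperature unit: {unit}")

def harmonize(record):
    """Return a copy of a local record expressed in shared terms and units."""
    diagnosis = TERM_MAP.get(record["diagnosis"].strip().lower())
    if diagnosis is None:
        raise KeyError(f"unmapped diagnosis: {record['diagnosis']}")
    return {
        "diagnosis": diagnosis,
        "temp_c": round(to_celsius(record["temp"], record["temp_unit"]), 1),
    }

site_a = {"diagnosis": "High Blood Pressure", "temp": 98.6, "temp_unit": "F"}
site_b = {"diagnosis": "Hypertension", "temp": 37.0, "temp_unit": "C"}
print(harmonize(site_a) == harmonize(site_b))  # True
```

Failing loudly on unmapped terms is a deliberate choice here: silently passing an unknown code into training is exactly the confusion harmonization is meant to prevent.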

At Lifebit, we automate this harmonization process. Our platform takes messy, unstructured data and cleans it up so it’s ready for federated training. We use NLP (Natural Language Processing) to map local hospital codes to international standards, making the “secret sauce” easy to cook.

Security Threats: Model Poisoning and Inversion

As FL grows, so do the threats. We must protect the system from:

- Model Poisoning: A malicious actor (or a compromised hospital server) could send “bad” updates to the central server to make the AI biased or inaccurate. We use “Robust Aggregation” techniques that can identify and ignore “outlier” updates that look suspicious.

- Model Inversion: An attack in which someone tries to reconstruct a patient’s face or record by querying the model repeatedly or by analyzing the updates it produces. We counter this with the “Differential Privacy” mentioned earlier, which limits how much the model can reveal about any single individual.
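Coordinate-wise median is one simple robust aggregation rule (others include trimmed means and Krum). This Python sketch, with hypothetical update values, shows why it helps: a single poisoned update drags the mean far off, while the median barely moves.

```python
import statistics

def mean_aggregate(updates):
    """Plain coordinate-wise mean, as in unweighted averaging."""
    return [statistics.fmean(col) for col in zip(*updates)]

def median_aggregate(updates):
    """Coordinate-wise median: one extreme value per coordinate cannot pull
    the result arbitrarily far, unlike the mean."""
    return [statistics.median(col) for col in zip(*updates)]

honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.21], [0.11, -0.19]]
poisoned = honest + [[50.0, 50.0]]  # one compromised site sends a huge update

print(mean_aggregate(poisoned))    # pulled far away by the outlier
print(median_aggregate(poisoned))  # [0.11, -0.19]
```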

Ethical Governance and Adoption Mechanisms

Success in federated learning projects in medicine isn’t just about the code; it’s about the people. We need strong governance to ensure everyone plays by the rules. We look at this through three lenses:

- Procedural Mechanisms: Formal contracts and data-sharing agreements that define who owns the final model. If 10 hospitals build a model, who gets to sell it? These legal frameworks must be established before the first line of code is written.

- Relational Trust: Building a “social license” with patients. We use “citizen juries” and stakeholder engagement to make sure patients are comfortable with how their data is being used. Transparency is key; patients should know that their data never leaves the hospital.

- Structural Oversight: Independent bodies that monitor the models for bias. For example, if an AI is trained on data from mostly wealthy countries, will it work for patients in developing nations? Federated learning actually helps solve this by making it easier to include data from diverse, underrepresented regions.

One interesting debate is “Gatekeeper Consent.” Since it’s often impossible to get a new signature from 100,000 patients for every new research project, we often rely on data stewards or ethics boards to act as “gatekeepers” who provide consent on behalf of the patient group, provided the privacy protections (like FL) are high enough to meet the “low-risk” threshold.

Conclusion: The Future of Global Medical Research

The days of hoarding medical data in dark, isolated silos are coming to an end. Federated learning systems are key to unlocking “Precision Medicine”: treatments tailored to your specific genetic makeup, environment, and lifestyle, trained on a global scale. We are moving toward a world where a doctor in a rural clinic can access an AI tool trained on the collective wisdom of the world’s leading medical centers, while the records of patients in those centers never leave their home institutions.

As we look forward, we are working on even smarter ways to optimize these systems. This includes Hyperparameter Optimization (automatically tuning the AI’s settings across the network) and AutoML, which can design the best neural network architecture for a specific medical task without human intervention. We are also exploring the intersection of FL and Quantum Computing, which could allow us to process complex genomic data at speeds currently unimaginable.

At Lifebit, we are proud to be at the forefront of this movement. Our federated AI platform is designed to make global, compliant research a reality. We believe that by keeping data local but insights global, we can solve the world’s toughest medical challenges together—from curing rare cancers to predicting the next pandemic before it starts. The technology is here, the regulatory path is clear, and the potential to save lives is limitless.

Learn more about Lifebit’s federated AI platform and how we can help you unlock your data’s potential without ever letting it leave home. Together, we can build a future where medical knowledge knows no borders.

Frequently Asked Questions about Federated Learning in Medicine

Does federated learning achieve the same accuracy as centralized models?

Largely, yes. Studies on tasks such as brain tumor segmentation and heart failure prediction show that federated models can reach approximately 99% of the quality of centralized models. In these studies they closely matched centralized performance on key metrics like AUC (Area Under the Curve) and Dice scores, meaning you don’t have to sacrifice much scientific accuracy for patient privacy.

How does federated learning handle data privacy regulations like GDPR?

FL is designed with compliance in mind. Because individual-level data stays at its source and only model parameters (which can additionally be protected with encryption and differential privacy) are shared, it helps satisfy the strict data residency and security requirements of GDPR, HIPAA, and CCPA. It also reduces the risk of large-scale data breaches because there is no central database for a hacker to target.

What are the main challenges of implementing FL in hospitals?

The primary problems are technical and organizational. Technically, you have to deal with “Non-IID” data (data collected differently at different sites) and communication bottlenecks. Organizationally, hospitals need to agree on governance frameworks and data standards like OMOP or HL7 FHIR to ensure the AI can “read” the data correctly across different institutions.