Distributed Data Analysis Platforms Explained (Without the Tech Jargon)

Distributed Data Analysis Platform 2025: Unlock Simplicity

What is a Distributed Data Analysis Platform?

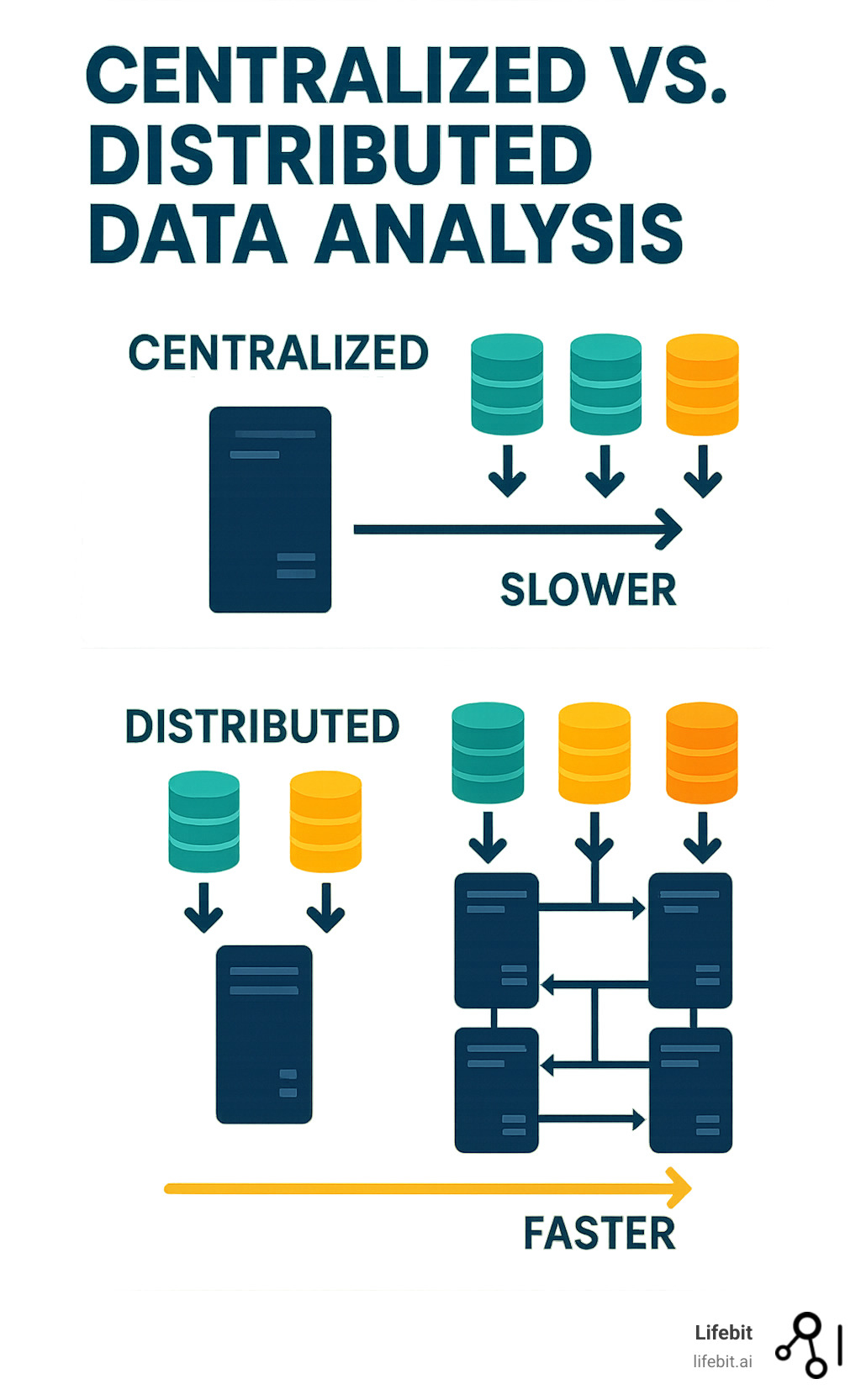

A distributed data analysis platform is a system that spreads data processing across multiple computers to handle massive datasets and complex analytics. It’s like a team of specialists working together on a big project, rather than one person trying to do it all.

Quick Answer: Key Features of Distributed Data Analysis Platforms

- Scalability: Automatically adds more computing power as data grows

- Speed: Processes data in parallel across multiple machines

- Flexibility: Handles structured data (databases) and unstructured data (documents, images)

- Real-time insights: Analyzes streaming data as it arrives

- Cost efficiency: Uses resources only when needed

- Fault tolerance: Keeps running even if some components fail

The primary purpose is to break very large data tasks into smaller, manageable pieces that can be processed simultaneously. This approach addresses the three V’s of big data (volume, velocity, and variety) and delivers insights that would be impractical to obtain on a single machine.
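The split-process-combine idea can be sketched in a few lines of standard-library Python. This is a minimal illustration, not any platform’s API. A thread pool stands in for the machines in a cluster, and the helper names (`split_into_chunks`, `count_words`, `parallel_word_count`) are hypothetical. Because of Python’s global interpreter lock, the threads show the structure of the computation rather than a real speedup.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(lines, n_chunks):
    """Divide the input into roughly equal pieces, one per worker."""
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def count_words(chunk):
    """Map step: each worker counts words in its own chunk only."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines, n_workers=4):
    """Run the map step in parallel, then merge (reduce) the partial results."""
    chunks = split_into_chunks(lines, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = pool.map(count_words, chunks)
        total = Counter()
        for partial in partials:
            total.update(partial)
    return total


if __name__ == "__main__":
    docs = ["big data is big", "data moves fast", "big insights from data"]
    print(parallel_word_count(docs, n_workers=2).most_common(2))
```

In a real cluster each chunk would live on a different machine, and only the small partial counts would travel back to be merged.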

For organizations dealing with siloed datasets across different departments or locations, these platforms offer a game-changing solution. As one industry expert noted: “Dask has reduced model training times by 91% within a few months of development effort at Capital One,” demonstrating the real-world impact of distributed processing.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. I’ve spent over 15 years building distributed data analysis platforms for genomics and biomedical research, developing tools that enable secure, federated analysis across healthcare institutions without moving sensitive patient data.

How a Distributed Data Analysis Platform Works: Core Components and Architectures

A distributed data analysis platform operates like a well-orchestrated symphony, breaking down massive data challenges into smaller pieces for simultaneous processing across multiple machines.

Five key components work in harmony:

- Processing engines: the workhorses, running computations in parallel.

- Storage systems: a resilient, high-speed foundation that spreads data across multiple locations.

- Data ingestion: brings in both batch data and real-time streams.

- Resource management: acts as an air traffic controller, allocating computing power to prevent bottlenecks.

- Query federation: lets you access and combine data from multiple sources in a single query without copying entire datasets, a big win for efficiency and governance.
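Query federation usually relies on pushing filters down to each source so that only matching rows travel over the network. The sketch below illustrates that idea with two toy “connectors”: an in-memory SQLite table, and a plain Python list standing in for files in object storage. The connector classes and the `federated_join` helper are hypothetical. They do not reflect how any particular engine, such as Trino, is implemented.

```python
import sqlite3


class SqliteConnector:
    """Wraps a relational source; the filter runs inside the database."""

    def __init__(self, conn, table):
        self.conn = conn
        self.table = table

    def scan(self, column, value):
        # Predicate pushdown: the WHERE clause executes at the source, so only
        # matching rows leave it. Table/column names are trusted identifiers here.
        cur = self.conn.execute(
            f"SELECT * FROM {self.table} WHERE {column} = ?", (value,)
        )
        names = [d[0] for d in cur.description]
        return [dict(zip(names, row)) for row in cur.fetchall()]


class ListConnector:
    """Stands in for files in object storage; filters records while reading."""

    def __init__(self, records):
        self.records = records

    def scan(self, column, value):
        return [r for r in self.records if r.get(column) == value]


def federated_join(left, right, key, column, value):
    """Push the same filter to both sources, then hash-join the small results."""
    left_rows = left.scan(column, value)
    by_key = {}
    for row in right.scan(column, value):
        by_key.setdefault(row[key], []).append(row)
    return [{**lrow, **rrow} for lrow in left_rows for rrow in by_key.get(lrow[key], [])]


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE patients (patient_id INTEGER, region TEXT, age INTEGER)")
    db.executemany("INSERT INTO patients VALUES (?, ?, ?)",
                   [(1, "EU", 54), (2, "US", 61), (3, "EU", 47)])
    lab_files = [
        {"patient_id": 1, "region": "EU", "test": "LDL", "value": 3.1},
        {"patient_id": 2, "region": "US", "test": "LDL", "value": 2.4},
    ]
    rows = federated_join(SqliteConnector(db, "patients"), ListConnector(lab_files),
                          key="patient_id", column="region", value="EU")
    print(rows)
```

The design choice that matters is where the filter runs. Each source discards non-matching rows locally, so the coordinator only ever joins the small filtered results.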

The Building Blocks: Key Open-Source Technologies

The open-source community has built the foundation that powers most modern distributed platforms. Apache Spark™ is the Swiss Army knife of big data processing. It’s a unified engine for everything from data manipulation to machine learning, and its in-memory processing makes it much faster than older disk-based systems such as Hadoop MapReduce for many workloads.

For real-time processing, Apache Flink® excels at stateful computations over data streams, ensuring exactly-once state consistency with fast-moving data. This makes it ideal for event-driven applications and real-time analytics.

Python lovers gravitate toward Dask, which scales familiar Python tools like NumPy and pandas to datasets larger than memory. The Capital One result cited above, a 91% cut in model training times, shows what this can mean in practice, and Dask makes distributed computing more accessible for data scientists.
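Dask’s core idea is to build a graph of tasks lazily and execute it only when a result is requested. The toy class below imitates that pattern in plain Python so the mechanism is visible. It is a hypothetical sketch (`Lazy`, `lazy`, `load_partition`, and `total` are invented names), not Dask’s implementation, and it runs tasks sequentially rather than across a cluster. Real code would use `dask.delayed` or `dask.dataframe` instead.

```python
import functools


class Lazy:
    """A node in a task graph: a function plus its (possibly lazy) arguments."""

    def __init__(self, func, *args):
        self.func = func
        self.args = args

    def compute(self, cache=None):
        # Evaluate dependencies first; the cache ensures a node shared by
        # several downstream tasks runs only once.
        cache = {} if cache is None else cache
        if id(self) not in cache:
            values = [a.compute(cache) if isinstance(a, Lazy) else a for a in self.args]
            cache[id(self)] = self.func(*values)
        return cache[id(self)]


def lazy(func):
    """Decorator: calling the function builds a graph node instead of running it."""
    @functools.wraps(func)
    def wrapper(*args):
        return Lazy(func, *args)
    return wrapper


calls = []  # records which partitions were actually loaded


@lazy
def load_partition(i):
    calls.append(i)
    return list(range(i * 10, i * 10 + 10))  # stand-in for reading a file


@lazy
def total(*parts):
    return sum(sum(p) for p in parts)


if __name__ == "__main__":
    graph = total(load_partition(0), load_partition(1), load_partition(2))
    print("loaded so far:", calls)      # nothing has run yet
    print("result:", graph.compute())   # now the whole graph executes
```

Deferring execution lets a scheduler see the whole graph before running it. This is what allows a real system to spread independent partitions across workers and stream through data that would not fit in memory at once.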

Apache Hadoop is the foundational framework for storing and processing vast amounts of data across clusters of commodity hardware. It combines the HDFS distributed file system, the YARN resource manager, and the MapReduce processing model, and it remains under active development.

Finally, Apache Kafka is a high-throughput distributed event-streaming platform that reliably moves data, enabling real-time flows from edge devices to cloud analytics systems.
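Kafka organizes each topic into partitions, which are append-only logs. Records with the same key go to the same partition, and consumers track their position with an offset. The in-memory sketch below mimics that model to show why ordering is preserved per key. It is illustrative only. The `TopicLog` class is hypothetical, and `zlib.crc32` stands in for the murmur2 hashing that Kafka’s Java client uses by default to pick a partition for a keyed record.

```python
import zlib


class TopicLog:
    """A toy topic: a fixed number of append-only partitions."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Same key -> same partition, so records for one key stay in order.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def produce(self, key, value):
        """Append a record; return (partition, offset), like a broker acknowledgement."""
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1

    def consume(self, partition, offset, max_records=10):
        """Read from a given offset; return the records and the next offset to read."""
        records = self.partitions[partition][offset:offset + max_records]
        return records, offset + len(records)


if __name__ == "__main__":
    topic = TopicLog(num_partitions=3)
    for reading in (20.1, 20.4, 21.0):
        topic.produce("sensor-42", reading)
    p = topic.partition_for("sensor-42")
    records, next_offset = topic.consume(p, offset=0)
    print(records, next_offset)
```

Because the consumer, not the log, remembers the offset, several independent consumers can read the same partition at their own pace.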

Handling Data-in-Motion vs. Data-at-Rest

Modern platforms must gracefully handle data in two very different states. Streaming data represents information continuously generated and processed as it arrives – think sensor readings, financial transactions, or website clicks. This data-in-motion requires real-time analysis for immediate insights and anomaly detection.
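A common pattern for data-in-motion is to keep a small rolling window of recent values and flag readings that deviate sharply from it. The sketch below assumes a simple z-score rule over a fixed-size window. The window size and threshold are illustrative parameters, not recommendations. Stream processors such as Flink would keep this state per key and make it fault-tolerant.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far outside the recent rolling window."""
    recent = deque(maxlen=window)  # bounded state: only the last `window` values
    for i, value in enumerate(stream):
        if len(recent) == window:  # judge only once the window is full
            mu, sigma = mean(recent), pstdev(recent)
            if sigma == 0:
                is_outlier = value != mu  # flat history: any change stands out
            else:
                is_outlier = abs(value - mu) / sigma > threshold
            if is_outlier:
                yield i, value
        recent.append(value)  # every reading, flagged or not, enters the window


if __name__ == "__main__":
    readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0]
    for index, value in detect_anomalies(readings):
        print(f"reading {index} looks anomalous: {value}")
```

Appending flagged values to the window is a design choice. Excluding them instead would stop a single spike from inflating the baseline for the next few readings.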

Edge computing often plays a crucial role here, performing initial processing close to the data source before sending it further. Projects like dataClay excel at handling this edge data processing.

On the flip side, batch processing deals with historical data stored in databases, warehouses, or lakes. This data-at-rest analysis typically happens in scheduled chunks, perfect for complex analytical tasks, reporting, and training machine learning models. Tools like Druid shine at aggregating this stored data for comprehensive cloud-based analysis.
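Batch aggregation over data-at-rest often means grouping historical events by a time bucket and a few dimensions. This is broadly what Druid’s ingestion-time “rollup” does. The standard-library sketch below shows the shape of that computation on three invented events. The field names (`timestamp`, `region`, `amount`) and the hourly bucket are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime


def hourly_rollup(events):
    """Aggregate raw events into one row per (hour, region)."""
    totals = defaultdict(lambda: {"count": 0, "revenue": 0.0})
    for event in events:
        ts = datetime.fromisoformat(event["timestamp"])
        hour = ts.replace(minute=0, second=0, microsecond=0).isoformat()
        row = totals[(hour, event["region"])]
        row["count"] += 1
        row["revenue"] += event["amount"]
    return dict(totals)


if __name__ == "__main__":
    events = [
        {"timestamp": "2025-01-06T09:15:00", "region": "EU", "amount": 20.0},
        {"timestamp": "2025-01-06T09:48:00", "region": "EU", "amount": 5.5},
        {"timestamp": "2025-01-06T10:02:00", "region": "US", "amount": 12.0},
    ]
    for (hour, region), row in sorted(hourly_rollup(events).items()):
        print(hour, region, row)
```

Pre-aggregating this way trades away per-event detail for far fewer rows, which is why rolled-up tables answer dashboard queries quickly.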

The most effective distributed data analysis platforms bridge both worlds seamlessly, capturing immediate insights from streaming data while leveraging the depth of historical data for long-term trends and predictive modeling.

The Rise of the Lakehouse Architecture

For years, organizations faced an impossible choice: data warehouses (great for structured data and business intelligence) or data lakes (perfect for raw data and machine learning). The lakehouse architecture eliminates this dilemma by combining the best of both worlds.

A lakehouse unifies structured and unstructured data on a single platform, allowing you to run traditional SQL queries and BI dashboards alongside advanced AI and machine learning applications – all on the same data. This architectural approach can deliver substantial gains. Some vendors report up to 12x better price/performance compared to legacy systems.
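Open table formats such as Delta Lake and Apache Iceberg are what make a lakehouse behave like a warehouse. A transaction log records which data files belong to each table version, which gives atomic commits and “time travel” over files stored in a data lake. The toy class below models only that bookkeeping idea. `TableLog` and its methods are hypothetical and do not follow the on-disk format of either project.

```python
class TableLog:
    """Toy transaction log: each commit adds and/or removes data files."""

    def __init__(self):
        self.commits = []  # table version N is the state after commits[0..N]

    def files(self, version=None):
        """Replay the log up to `version` to get that snapshot's active files."""
        if version is None:
            version = len(self.commits) - 1
        active = set()
        for entry in self.commits[: version + 1]:
            active -= set(entry["remove"])
            active |= set(entry["add"])
        return active

    def commit(self, add=(), remove=()):
        missing = set(remove) - self.files()
        if missing:
            # Reject the whole commit so readers never see a partial change.
            raise ValueError(f"cannot remove unknown files: {sorted(missing)}")
        self.commits.append({"add": list(add), "remove": list(remove)})
        return len(self.commits) - 1  # the new version number


if __name__ == "__main__":
    log = TableLog()
    v0 = log.commit(add=["part-000.parquet", "part-001.parquet"])
    # An update rewrites part-000 as part-002 in a single atomic commit.
    v1 = log.commit(add=["part-002.parquet"], remove=["part-000.parquet"])
    print("latest:", sorted(log.files()))
    print("as of version 0:", sorted(log.files(v0)))
```

Readers pick a version and see exactly the files listed for it. This is how SQL engines and ML jobs can share the same files without seeing half-written updates.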

This unified approach streamlines everything from data integration and storage to processing, governance, and analytics. It provides one end-to-end view of data lineage and provenance, which is exactly why we’ve adopted the Trusted Data Lakehouse concept.

The lakehouse architecture ensures data remains secure and auditable while enabling powerful analysis across diverse data types. Whether you’re processing raw genomic sequences or clinical trial results, this unified approach provides the flexibility and performance modern analytics demands. If you’re curious to explore this further, we’ve covered it in detail in “What Is A Data Lakehouse?”, including how it’s changing data analytics.

Key Capabilities and Real-World Use Cases

Modern distributed data analysis platforms can deliver remarkable performance. Some vendors advertise ingesting millions of rows and querying billions of rows per second while maintaining up to 99.9999% availability across thousands of nodes. These platforms accelerate time-to-insight by operationalizing the entire data journey, from raw information to predictive models and actionable intelligence, helping organizations make smarter, faster decisions.

Core Capabilities of a Modern Distributed Data Analysis Platform

A modern distributed data analysis platform offers capabilities that once seemed like science fiction:

- Data integration: connects to hundreds of diverse sources; KNIME, for instance, offers over 300 connectors.

- In-place analysis: lets us analyze data where it lives without copying terabytes. Trino exemplifies this, querying across Hadoop, S3, and other sources without moving data.

- Predictive analytics: turns historical patterns into forecasts.

- AI/ML model training: platforms like Spark and Dask provide the power to build sophisticated models on massive datasets.

- Natural language processing: extracts meaning from unstructured text like customer reviews or medical notes.

- Vector search: engines like Elasticsearch support semantic search over embeddings, matching meaning rather than just keywords.

- Observability: has evolved to monitor performance, usage patterns, costs, and even AI model safety, which is crucial for organizations using Large Language Models.
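Semantic search ranks documents by how close their embedding vectors are to the query’s embedding, commonly using cosine similarity. The sketch below shows that ranking step with tiny hand-made three-dimensional vectors. Real systems obtain embeddings from a trained model and usually rely on approximate nearest-neighbor indexes. The document titles and vectors here are invented for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k=2):
    """Exact (brute-force) nearest neighbors by cosine similarity."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


if __name__ == "__main__":
    index = {
        "heart attack symptoms": [0.9, 0.1, 0.0],
        "myocardial infarction signs": [0.85, 0.15, 0.05],
        "quarterly sales report": [0.0, 0.2, 0.95],
    }
    # A query embedding that sits close to the two cardiology documents.
    print(top_k([0.88, 0.12, 0.02], index, k=2))
```

The two cardiology titles share no keywords, yet both rank highly because their vectors point in nearly the same direction. This is the sense in which vector search “understands meaning”.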

You can learn more about how we deliver these capabilities through our Data Intelligence Platform services.

Driving Innovation in Healthcare and Life Sciences

In healthcare and life sciences, distributed data analysis platforms aren’t just useful – they’re essential for saving lives and accelerating discoveries. The challenges we face require processing petabytes of genomic, clinical, and multi-omic data from diverse populations around the world.

Genomics research has been transformed by our ability to analyze massive datasets in real time. We can now identify genetic markers for diseases and drug responses across global populations, scaling genomics research in ways that were impossible before. Our detailed exploration, “Scaling Genomics In Clinical Environments”, shows how this works in practice.

Drug discovery and development benefit enormously from distributed analysis. We can accelerate the identification of new drug targets, optimize clinical trial designs, and predict drug efficacy and safety profiles using vast amounts of historical and real-world data.

Real-world evidence studies analyze patient data from electronic health records, claims databases, and wearable devices to understand how treatments work in actual clinical practice. This helps us move beyond controlled trial environments to see what happens in the messy, complex world of real healthcare.

Population health studies give us insights into public health trends, disease outbreaks, and intervention impacts across large populations. During the pandemic, these capabilities proved invaluable for tracking spread patterns and measuring policy effectiveness.

Our federated AI platform enables all of this while keeping sensitive patient data exactly where it belongs – secure and private at its source. This approach, which we detail in Federated Data Analysis, allows global collaboration without compromising patient privacy.

Transforming Other Key Sectors

Financial services rely on these systems for split-second fraud detection and sophisticated risk modeling. When a credit card transaction happens anywhere in the world, distributed systems analyze it against millions of patterns in milliseconds to protect both banks and customers.

Retail and e-commerce companies use these platforms to personalize every customer interaction. They analyze purchase histories, browsing patterns, and inventory levels simultaneously to predict what you might want before you even know it yourself. Supply chain optimization happens in real-time, adjusting to disruptions and demand changes as they occur.

Telecommunications companies are pursuing something even more ambitious: sovereign AI infrastructure powered by distributed platforms. Telcos across five continents are building NVIDIA-powered sovereign AI, using distributed analysis to manage network traffic and optimize service delivery at unprecedented scale.

Smart mobility and logistics applications showcase the predictive power of these platforms. Cities use distributed analysis for predictive maintenance of infrastructure, detecting potential problems with track segments or bridges before they become dangerous. Supply chain companies use real-time data to navigate the constantly evolving global logistics landscape.

Cybersecurity represents perhaps the most critical application. Platforms like Elasticsearch analyze petabytes of security logs in real-time, using AI to detect threats and automate responses. Security Operations Centers now rely on distributed analysis to stay ahead of increasingly sophisticated cyber threats.

Navigating the Complexities: Security, Governance, and Deployment

Building a distributed data analysis platform presents significant complexities, especially when dealing with sensitive data scattered across multiple locations, systems, and organizations. Key challenges include overcoming data silos, navigating compliance requirements like GDPR and HIPAA, and making critical deployment decisions about on-premise, cloud, or hybrid models. However, with the right approach, these challenges can be overcome to build a platform that is powerful, secure, compliant, and flexible.

Tackling Data Integration, Security, and Governance

In a distributed data analysis platform, data security and governance are foundational, requiring systems that protect data integrity throughout its entire journey, from collection to analysis to insights.

The cornerstone of this approach is a unified data fabric, which creates a single, logical view of the data landscape by making disparate data sources speak the same language, even when information lives in dozens of different systems.

Data encryption forms a first line of defense, scrambling information both at rest (in storage) and in transit (traveling across networks) to ensure confidentiality.

Access control works like a sophisticated security system for your data. Instead of giving everyone the master key, you create granular permissions that ensure people only see what they need to see. A researcher studying heart disease shouldn’t accidentally stumble across psychiatric records, for example.
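Granular permissions are often expressed as role-based access control (RBAC). Users hold roles, roles grant access to specific datasets, and every read is checked against those grants. The minimal sketch below follows the heart-disease researcher example. The role names, dataset names, and the `can_read` and `read_dataset` helpers are hypothetical. Real platforms typically add attribute-based rules, audit logging, and row- or column-level policies.

```python
# Hypothetical grants: which datasets each role may read.
ROLE_GRANTS = {
    "cardiology_researcher": {"cardiology_cohort", "reference_genomes"},
    "psychiatry_researcher": {"psychiatric_records", "reference_genomes"},
}

# Hypothetical role assignments.
USER_ROLES = {
    "alice": {"cardiology_researcher"},
    "bob": {"psychiatry_researcher"},
}


def can_read(user, dataset):
    """Deny by default; allow only if one of the user's roles grants the dataset."""
    return any(dataset in ROLE_GRANTS.get(role, set())
               for role in USER_ROLES.get(user, set()))


def read_dataset(user, dataset, store):
    """Check permissions before returning any data."""
    if not can_read(user, dataset):
        raise PermissionError(f"{user} may not read {dataset}")
    return store[dataset]


if __name__ == "__main__":
    store = {"cardiology_cohort": ["..."], "psychiatric_records": ["..."]}
    print(can_read("alice", "cardiology_cohort"))    # granted via her role
    print(can_read("alice", "psychiatric_records"))  # no role grants it
```

Denying by default means that an unknown user, role, or dataset is refused rather than accidentally exposed.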

One of the most critical aspects is data lineage and provenance, which creates a detailed audit trail for every piece of information. This tracks where data came from, how it was changed, and where it went, which is essential for compliance officers and regulators.
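One simple way to make lineage auditable is to record, for every transformation step, a fingerprint of its inputs and outputs along with what was done and by whom. The sketch below uses SHA-256 content hashes from Python’s `hashlib`. The record fields and the `LineageLog` class are hypothetical. They show how an audit trail can link each dataset version back to its sources.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(data):
    """Stable content hash of JSON-serializable data (key order does not matter)."""
    payload = json.dumps(data, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class LineageLog:
    def __init__(self):
        self.entries = []

    def record(self, step, inputs, output, actor):
        """Log one transformation and pass its output through unchanged."""
        self.entries.append({
            "step": step,
            "inputs": [fingerprint(d) for d in inputs],
            "output": fingerprint(output),
            "actor": actor,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return output

    def trace(self, data):
        """Walk backwards from a dataset to every step that contributed to it."""
        wanted = {fingerprint(data)}
        path = []
        for entry in reversed(self.entries):
            if entry["output"] in wanted:
                path.append(entry["step"])
                wanted.update(entry["inputs"])
        return list(reversed(path))  # oldest step first


if __name__ == "__main__":
    log = LineageLog()
    raw = [{"id": 1, "ldl": 3.1}, {"id": 2, "ldl": None}]
    clean = log.record("drop_missing", [raw],
                       [r for r in raw if r["ldl"] is not None], actor="etl-job")
    summary = log.record("mean_ldl", [clean],
                         {"mean_ldl": sum(r["ldl"] for r in clean) / len(clean)},
                         actor="analyst")
    print(log.trace(summary))
```

Because entries refer to content hashes rather than file names, any later change to a dataset produces a different fingerprint and no longer matches the recorded history.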

When it comes to compliance frameworks like GDPR and HIPAA, the distributed nature of these platforms actually creates unique opportunities. Instead of trying to centralize all sensitive data (which creates massive security risks), we can build Trusted Research Environments that provide secure, controlled access while keeping data in its original, protected location.

This leads to federated data governance, an elegant solution where analysis travels to the data, not the other way around. Instead of forcing all data into one central repository, this approach lets information stay in place while applying consistent policies and controls across the entire distributed system.

For organizations dealing with sensitive information, this federated data governance approach solves multiple problems at once – it maintains security, respects geographic and privacy boundaries, and still enables powerful collaborative analysis.

Deployment Considerations for a Distributed Data Analysis Platform

Choosing where to deploy your distributed data analysis platform is like choosing where to build your dream house – location, location, location matters, but so do your budget, lifestyle, and long-term plans.

On-premise deployment is like owning your own castle. You have complete control over every aspect, from security protocols to hardware specifications. For organizations with stable, predictable workloads and strong IT teams, this can be incredibly cost-effective. You’re not paying ongoing cloud fees, and you know exactly where your data lives. The downside? You’re responsible for everything – maintenance, upgrades, scaling, and that 3 AM call when something breaks.

Cloud deployment feels more like staying in a luxury hotel with world-class service. Need more computing power? It’s available instantly. Want to try a new analytics tool? It’s probably already integrated. The pay-as-you-go model means you’re only paying for what you actually use, which can be a game-changer for organizations with variable or unpredictable workloads. The trade-off is ongoing costs and less direct control over your infrastructure.

Hybrid deployment combines the best of both worlds – it’s like having a primary residence with access to vacation homes around the globe. You can keep your most sensitive data and stable workloads on-premise while leveraging cloud resources for burst capacity, experimentation, or specialized services. This approach is becoming increasingly popular because it offers flexibility without forcing you to put all your eggs in one basket.

Multi-cloud strategies take this even further, spreading your platform across multiple cloud providers. It’s like having accounts at different banks – it reduces your risk if one provider has issues, and you can optimize costs by using each provider’s strengths.

The key factors driving these decisions are surprisingly practical. Cost considerations go beyond just the monthly bill – you need to factor in setup costs, ongoing maintenance, and the hidden expenses of scaling. Skills matter enormously – managing a distributed system requires specialized expertise that not every organization has in-house.

Data gravity is a fascinating concept that often surprises people. Large datasets tend to attract applications and services because moving massive amounts of information is expensive and time-consuming. Sometimes it’s much smarter to bring your analysis to where the data already lives.
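A quick back-of-the-envelope calculation shows why data gravity matters. The sketch below estimates how long it takes to move a dataset over a network link, assuming the link runs at a steady fraction of its nominal speed. The 1 PB dataset, the 10 Gbit/s link, and the 80% efficiency figure are illustrative assumptions, not measurements.

```python
def transfer_days(size_bytes, link_bits_per_second, efficiency=1.0):
    """Days needed to copy `size_bytes` over a link at the given efficiency."""
    seconds = (size_bytes * 8) / (link_bits_per_second * efficiency)
    return seconds / 86_400  # seconds per day


PETABYTE = 10**15        # decimal petabyte, in bytes
TEN_GBPS = 10 * 10**9    # 10 gigabits per second

if __name__ == "__main__":
    print(f"{transfer_days(PETABYTE, TEN_GBPS):.1f} days at full line rate")
    print(f"{transfer_days(PETABYTE, TEN_GBPS, efficiency=0.8):.1f} days at 80% efficiency")
```

Under these assumptions a petabyte takes roughly 9.3 days to move at full line rate and about 11.6 days at 80% efficiency. Sending an analysis job to the data means transferring only the code and the results.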

Compliance requirements can be the deciding factor, especially in highly regulated industries. Some data simply cannot leave certain geographic boundaries or must be stored in specific types of facilities. This is particularly relevant when building European Trusted Research Environments where data sovereignty and privacy regulations create very specific requirements.

The best deployment strategy isn’t one-size-fits-all – it’s the one that matches your organization’s unique combination of needs, constraints, and ambitions.

Frequently Asked Questions about Distributed Data Analysis

When we talk about distributed data analysis platforms with organizations, certain questions come up again and again. These are the real concerns keeping data leaders up at night, so let’s address them head-on.

What is the main difference between a data warehouse and a distributed data platform?

I like to think of this comparison using a simple analogy. A data warehouse is like a beautifully organized library – everything has its place, the catalog is perfect, and you can quickly find exactly what you’re looking for. It’s designed specifically for structured data and business intelligence queries. You walk in, ask for a specific report, and get a clean, precise answer.

A distributed data platform, on the other hand, is more like a busy research campus. It’s built to handle any type of data – whether that’s neat spreadsheets, messy text documents, images, or real-time sensor feeds. Instead of just answering “what happened” like a traditional data warehouse, it helps you understand “why it happened” and “what’s likely to happen next.”

The key differences boil down to scalability and flexibility. While a data warehouse excels at predefined queries on clean data, a distributed data analysis platform breaks down complex tasks across multiple machines, handling everything from basic reporting to advanced machine learning and AI applications. In one vendor-reported example, companies moving from legacy cloud data warehouses to more flexible architectures achieved up to 12x better price/performance.

Can a small business benefit from a distributed data analysis platform?

This is one of my favorite questions because the answer is a resounding yes – and it’s getting easier every day!

Gone are the days when only tech giants could afford these powerful platforms. Cloud-based solutions have completely changed the game. Small businesses can now access the same sophisticated analytics capabilities without buying expensive servers or hiring a team of infrastructure specialists.

The magic lies in pay-as-you-go models. You only pay for what you actually use, whether that’s processing this month’s sales data or running a one-time customer analysis. If your business is seasonal, you can scale up during busy periods and scale down when things are quiet.

Open-source tools make this even more accessible. Technologies like Apache Spark, Dask, and Hadoop are freely available, meaning you’re not locked into expensive licensing fees. Many platforms offer free tiers or community editions that let you start small and grow your capabilities as your business expands.

The beauty of these platforms is their scalability for growth. You might begin with simple sales reporting, but as your data needs evolve, the same platform can handle customer segmentation, predictive analytics, and even AI-powered recommendations – all without starting from scratch.

How does federated analysis relate to distributed data analysis?

Think of federated analysis as a specialized, privacy-conscious cousin of distributed data analysis. While distributed analysis spreads processing across multiple machines, federated analysis takes this concept further by ensuring data stays in place.

In traditional distributed systems, we might copy data from various sources into a central system for processing. But in federated analysis, the analysis travels to the data instead of the other way around. The actual computations happen right where the data lives, and only the results (never the raw data) come back to you.

This approach solves privacy and governance issues that many organizations face. Imagine trying to analyze patient data from hospitals across different countries – each institution has strict rules about where that data can go. With federated analysis, the hospitals never have to share their sensitive information. Instead, your analytical queries visit each hospital’s data, perform the analysis locally, and return only the aggregated insights.
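The query-visits-the-data pattern can be sketched as a coordinator that sends the same function to each site and receives back only summary statistics. In this minimal, hypothetical example, the site names, the `local_summary` function, and the minimum cell size of 5 are all assumptions. Each site returns a count, a sum, and a sum of squares, and suppresses results computed on too few patients. The coordinator then pools them into an overall mean and standard deviation without seeing any individual record. This is a generic illustration of the pattern, not Lifebit’s implementation.

```python
import math

MIN_CELL_SIZE = 5  # assumed disclosure-control threshold


def local_summary(values):
    """Runs inside a site: returns aggregates only, or nothing if too few records."""
    if len(values) < MIN_CELL_SIZE:
        return None  # suppressed to reduce re-identification risk
    return {"n": len(values), "sum": sum(values), "sum_sq": sum(v * v for v in values)}


def federated_mean_sd(sites):
    """Runs at the coordinator: pools per-site aggregates into a mean and sample SD.

    In this simulation the coordinator calls local_summary directly; in a real
    deployment that function would execute at each site and only its small
    result dictionary would be returned.
    """
    kept = [s for s in (local_summary(v) for v in sites.values()) if s is not None]
    n = sum(s["n"] for s in kept)
    if n < 2:
        raise ValueError("not enough data after suppression")
    mean = sum(s["sum"] for s in kept) / n
    total_sq = sum(s["sum_sq"] for s in kept)
    variance = (total_sq - n * mean * mean) / (n - 1)  # sample variance
    return mean, math.sqrt(max(variance, 0.0)), len(kept)


if __name__ == "__main__":
    sites = {
        "hospital_a": [5.1, 4.8, 6.0, 5.5, 5.2],
        "hospital_b": [6.1, 5.9, 6.3, 5.7, 6.0, 6.2],
        "clinic_c": [7.0, 7.2],  # below the threshold, so it is suppressed
    }
    mean, sd, n_sites = federated_mean_sd(sites)
    print(f"pooled mean={mean:.3f}, sd={sd:.3f}, sites contributing={n_sites}")
```

Count, sum, and sum of squares are enough to reconstruct the pooled mean and variance exactly. For very large or poorly scaled values, a numerically safer pooling formula would be preferable to the simple sum-of-squares form used here.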

At Lifebit, this is exactly how our Federation technology works. We enable large-scale biomedical research across global datasets while ensuring that sensitive patient information never leaves its secure, original location. It’s like having a trusted research assistant who can visit multiple libraries, read their books, and bring back only the summary – without ever photocopying a single page.

This approach respects geographic and privacy boundaries while still enabling powerful, collaborative analysis. It’s particularly valuable in healthcare, finance, and any field where data privacy isn’t just important – it’s legally required.

Conclusion: Embracing the Future of Data Analytics

We’ve reached an exciting turning point in how we handle data. Distributed data analysis platforms aren’t just changing the game – they’re creating an entirely new playing field where the seemingly impossible becomes routine.

Think about what we’ve explored together: systems that can crunch through petabytes of genomic data while keeping patient information completely secure, platforms that turn months of analysis into minutes of insight, and architectures that scale from startup budgets to enterprise needs without missing a beat. It’s pretty remarkable when you step back and look at the bigger picture.

The benefits we’ve discussed – blazing speed, massive scalability, smart cost efficiency, and incredible versatility – are already changing industries from healthcare to finance to telecommunications. But here’s what gets me most excited: these powerful tools are no longer locked away in the ivory towers of tech giants. With cloud-based solutions and open-source technologies, small businesses can now wield the same analytical superpowers that were once reserved for companies with unlimited budgets.

Looking ahead, the future holds even more promise. AI advancements are making these platforms smarter and more intuitive. Federated learning is solving privacy puzzles that seemed unsolvable just a few years ago – imagine training AI models on sensitive data without ever moving that data from its secure home. And serverless computing is removing the last barriers to entry, making advanced analytics as easy as flipping a switch.

At the heart of this revolution is a simple but powerful idea: data should work for us, not against us. It should be secure, accessible, and actionable. Organizations shouldn’t have to choose between protecting sensitive information and gaining valuable insights.

This is exactly why we built our federated AI platform at Lifebit. We’ve seen how distributed data analysis platforms can accelerate medical breakthroughs, improve patient outcomes, and enable discoveries that were previously impossible. Our platform brings together the Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer) to deliver something special: the ability to unlock insights from global biomedical data while keeping that data exactly where it belongs – safe and secure.

The future of data analytics isn’t just about bigger, faster, or cheaper. It’s about empowering organizations to make discoveries that matter, whether that’s identifying the next breakthrough treatment, detecting safety signals before they become problems, or enabling collaboration across institutions that was never possible before.

Ready to see what this future looks like? We’d love to show you how our approach to federated analysis is opening new possibilities for secure, large-scale research. Explore Lifebit’s next-generation federated AI platform and discover what becomes possible when data works the way it should.