Clinical Trials Get Smart: Unpacking AI’s Role and Future

Why Clinical Trials Are Becoming AI’s Next Frontier

AI for clinical trials is changing how new medicines reach patients by accelerating recruitment, optimizing study design, improving data analysis, and reducing both costs and timelines. AI technologies are addressing the fundamental bottlenecks that have plagued drug development for decades.

Key ways AI is revolutionizing clinical trials:

- Patient Recruitment: AI finds eligible participants faster by analyzing EHRs and real-world data, tackling the enrollment shortfalls that delay an estimated 80% of trials.

- Trial Design: Machine learning optimizes protocols to avoid costly amendments, which affect 60% of all studies.

- Data Analysis: AI automates the processing of complex data from wearables, imaging, and genomics for faster insights.

- Safety Monitoring: AI algorithms predict and detect adverse events before they become critical.

- Regulatory Submissions: NLP automates document generation, significantly reducing submission timelines.

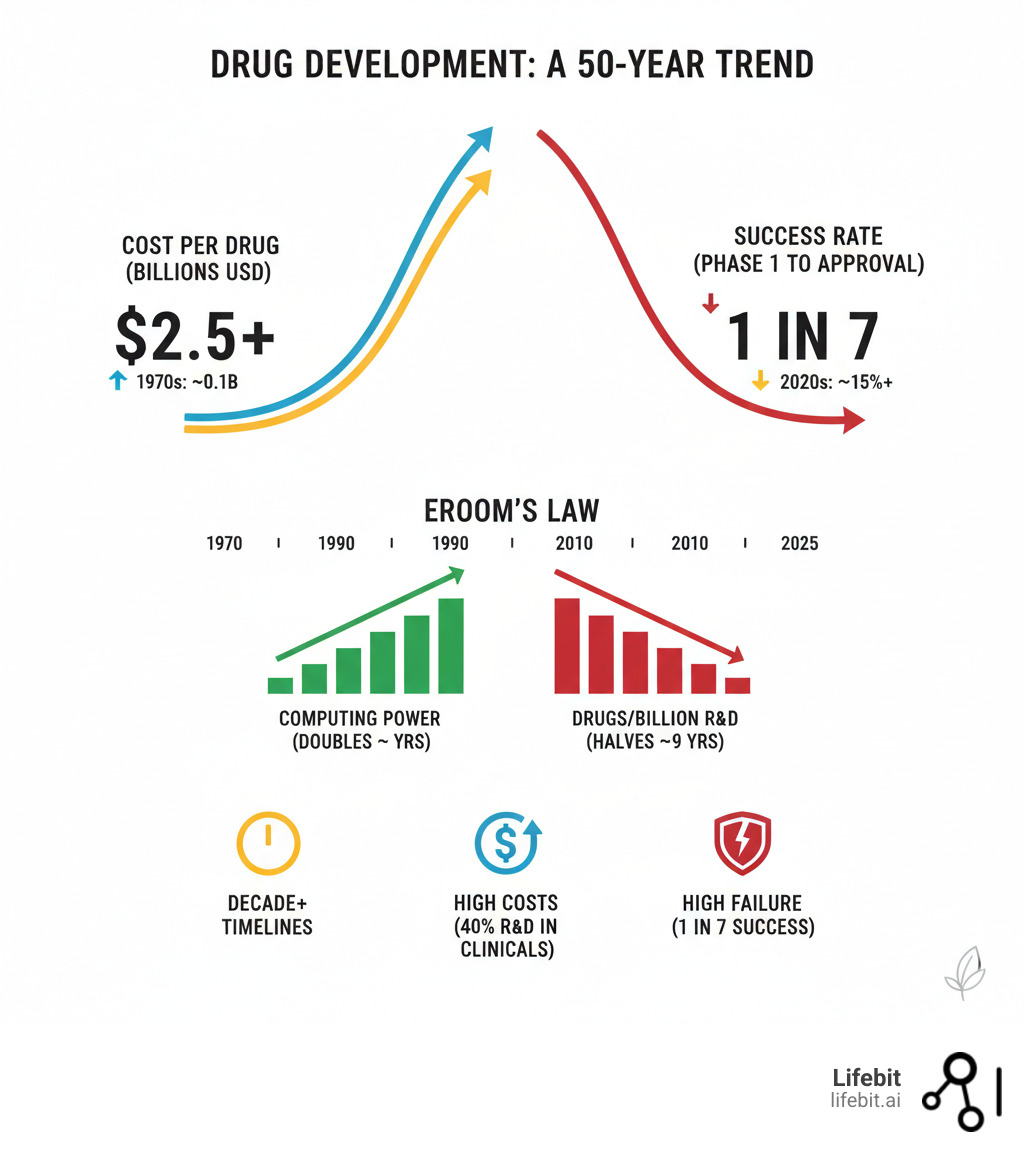

The numbers are stark. Developing a new drug takes more than a decade and over $2.5 billion per approval, and only about one in seven drugs that enter Phase I trials is ever approved. This decline in productivity is captured by “Eroom’s Law” (Moore’s Law spelled backwards): while computing power grew exponentially, the number of new drugs approved per billion dollars of R&D spending has halved roughly every nine years for six decades.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent over 15 years building federated platforms that enable secure, compliant AI for clinical trials across genomic and real-world data. Our work with pharmaceutical companies and public health institutions has shown how AI for clinical trials can open up insights from siloed datasets while maintaining patient privacy and regulatory compliance.


The Problem: Why Traditional Clinical Trials Are Hitting a Wall

The drug development industry is governed by a grim reality known as Eroom’s Law, the mirror image of Moore’s Law. While technology has driven exponential progress in other fields, pharmaceutical R&D has followed the opposite trajectory. For over 60 years, the number of new drugs approved per billion (inflation-adjusted) R&D dollars has halved roughly every nine years. This means that despite monumental scientific advances, each new drug has become exponentially more expensive to develop.
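Because the halving compounds, its cumulative effect is easy to underestimate. A quick worked example, assuming an idealized constant nine-year halving period (the real series is noisier), converts the rule into a fold-decline over six decades:

```python
def eroom_decline_factor(years: float, halving_period_years: float = 9.0) -> float:
    """Fold-decline in drugs approved per R&D dollar after `years`, assuming
    output per dollar halves every `halving_period_years` (idealized Eroom's Law)."""
    return 2 ** (years / halving_period_years)


factor = eroom_decline_factor(60)  # six decades = 60 / 9, about 6.7 halvings
print(f"Drugs approved per R&D dollar fell about {factor:.0f}-fold")
```

Under this idealized rule, roughly 6.7 halvings amount to about a hundredfold drop in output per dollar.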

Today, bringing a single new drug to market requires over a decade and costs an average of $2.5 billion. Clinical trials are the largest driver of this expense, consuming more than half of the total budget. The financial risk is immense, compounded by a staggering failure rate of 86%; only one in seven drugs that enter Phase I trials will ever receive regulatory approval. This high-attrition pipeline means that the costs of the many failures must be absorbed by the few successes, driving up drug prices for everyone.
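The point about failures being absorbed by successes is simple arithmetic: spread the spend on every program over the approval rate. In the sketch below, the $300 million per-program figure is an illustrative assumption, not a reported statistic, and real cost models also account for phase-specific attrition and the cost of capital.

```python
def cost_per_approval(cost_per_program: float, approval_rate: float) -> float:
    """Spend each approved drug must recoup if every program entering Phase I
    costs the same and failed programs recover nothing."""
    if not 0 < approval_rate <= 1:
        raise ValueError("approval_rate must be in (0, 1]")
    return cost_per_program / approval_rate


# Hypothetical input: $300M of development spend per program; 1-in-7 approval rate.
per_approval = cost_per_approval(300e6, 1 / 7)
print(f"about ${per_approval / 1e9:.1f}B per approved drug")
```

With one approval in seven, each approved drug effectively carries the cost of about seven programs.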

Several fundamental bottlenecks cripple the traditional trial system:

- Patient Recruitment and Retention: This is the single greatest cause of trial delays. An estimated 80% of trials fail to meet their enrollment timelines, and 30% of sites fail to enroll a single patient. Each day of delay can cost a sponsor up to $8 million in lost revenue for a blockbuster drug. Furthermore, a lack of diversity in trial populations—with minority groups chronically underrepresented—means that trial results may not be generalizable to the real-world patient population, posing both scientific and ethical problems.

- Complex Protocols and Costly Amendments: Modern trial protocols have become extraordinarily complex, with some containing over 40 eligibility criteria. These rigid and often overly restrictive criteria make it difficult to find eligible patients. When protocols prove unworkable, they must be amended. Around 60% of trial protocols require at least one major amendment after initiation, adding an average of 260 days to timelines and costing the industry over $2 billion annually. Many of these amendments are avoidable, stemming from poor initial design.

- Manual Data Management and Quality Issues: Trials generate enormous volumes of complex, multimodal data from disparate sources. The traditional approach relies on manual data entry, cleaning, and reconciliation, which is slow, labor-intensive, and prone to human error. Poor data quality can compromise the integrity of a trial’s results, leading to inconclusive findings or even regulatory rejection.
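Much of the manual cleaning described above can be expressed as programmatic edit checks that run as soon as data are entered. The sketch below is a minimal illustration with a hypothetical record layout (`VisitRecord`) and illustrative plausibility ranges, not validated clinical limits; in practice such rules are specified in a study’s data management plan, and the resulting queries go back to sites for resolution.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VisitRecord:
    subject_id: str
    visit: int
    systolic_bp: Optional[float]  # mmHg
    weight_kg: Optional[float]


# Illustrative plausibility ranges only; a real study defines its own.
RANGES = {"systolic_bp": (60.0, 250.0), "weight_kg": (30.0, 250.0)}


def edit_checks(records: list[VisitRecord]) -> list[str]:
    """Return human-readable queries for duplicate, missing, or out-of-range entries."""
    queries = []
    seen = set()
    for r in records:
        key = (r.subject_id, r.visit)
        if key in seen:
            queries.append(f"{r.subject_id} visit {r.visit}: duplicate record")
        seen.add(key)
        for field, (low, high) in RANGES.items():
            value = getattr(r, field)
            if value is None:
                queries.append(f"{r.subject_id} visit {r.visit}: {field} missing")
            elif not low <= value <= high:
                queries.append(f"{r.subject_id} visit {r.visit}: {field}={value} outside [{low}, {high}]")
    return queries


data = [
    VisitRecord("S01", 1, 128.0, 72.5),
    VisitRecord("S02", 1, 1280.0, 80.0),  # likely a keying error (extra zero)
    VisitRecord("S03", 1, None, 65.0),
    VisitRecord("S01", 1, 128.0, 72.5),   # duplicate entry
]
for query in edit_checks(data):
    print(query)
```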

This vicious cycle of rising costs, prolonged timelines, and frequent failures makes the development of new therapies unsustainably expensive and slow. We believe AI for clinical trials is the key to breaking this cycle and reversing the trend of Eroom’s Law.

How AI for Clinical Trials is Revolutionizing the Entire Lifecycle

The integration of AI for clinical trials represents a fundamental paradigm shift in drug development, reimagining every stage of the process from initial design to final regulatory submission.

Smarter Trial Design and Protocol Optimization

AI transforms trial design from an exercise in guesswork to a data-driven strategy. By analyzing vast datasets of historical trial data, real-world evidence, and biological information, AI models can simulate thousands of potential trial outcomes. This allows researchers to identify optimal protocols, predict patient responses, and design smaller, faster, and more targeted studies. For instance, in an oncology trial, an AI model could analyze genomic data from past studies to predict that patients with a specific genetic marker are three times more likely to respond to a drug, enabling the trial to focus exclusively on this sub-population.
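The enrichment idea can be illustrated with a small Monte Carlo simulation: simulate many two-arm trials and count how often a two-proportion z-test reaches significance (the trial’s power). All response rates, the marker prevalence, and the sample size below are hypothetical, and the z-test stands in for whatever analysis a real protocol would prespecify.

```python
import math
import random


def two_prop_z_significant(x1: int, x2: int, n: int, z_crit: float = 1.96) -> bool:
    """Two-sided two-proportion z-test (pooled variance) with n patients per arm."""
    p_pool = (x1 + x2) / (2 * n)
    se = math.sqrt(2 * p_pool * (1 - p_pool) / n)
    if se == 0:
        return False
    return abs(x1 / n - x2 / n) / se > z_crit


def simulate_power(p_drug: float, p_control: float, n_per_arm: int,
                   n_sims: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated trials in which the test is significant."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        x_drug = sum(rng.random() < p_drug for _ in range(n_per_arm))
        x_ctrl = sum(rng.random() < p_control for _ in range(n_per_arm))
        hits += two_prop_z_significant(x_drug, x_ctrl, n_per_arm)
    return hits / n_sims


# Hypothetical inputs: 30% of patients carry the marker; carriers respond to the
# drug at 45%, three times the 15% seen in non-carriers and in the control arm.
PREVALENCE, P_MARKER_POS, P_MARKER_NEG, P_CONTROL = 0.30, 0.45, 0.15, 0.15
p_all_comers = PREVALENCE * P_MARKER_POS + (1 - PREVALENCE) * P_MARKER_NEG

power_all = simulate_power(p_all_comers, P_CONTROL, n_per_arm=60)
power_enriched = simulate_power(P_MARKER_POS, P_CONTROL, n_per_arm=60)
print(f"All-comers power: {power_all:.2f}; marker-enriched power: {power_enriched:.2f}")
```

With the same 120 patients, the enriched trial is far more likely to detect the effect because the benefit is not diluted by non-carriers. This assumes the marker is predictive (it changes drug response) rather than merely prognostic, which a real program would need evidence for.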

AI also excels at optimizing site selection by predicting which locations will have the highest concentration of eligible patients and the best track record for enrollment. Furthermore, it can analyze complex eligibility criteria to identify which rules could be relaxed to broaden the patient pool without compromising study integrity. These capabilities support adaptive trial designs, in which protocols are modified at prespecified interim analyses based on accumulating data, keeping the trial on the most efficient path to a clear answer. Harnessing AI to improve clinical trial design explores this shift in detail.
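One simple way to ask which eligibility rules are most restrictive is a leave-one-out analysis: re-screen a candidate population with each criterion dropped in turn and count how many extra patients become eligible. The criteria and patient records below are hypothetical; whether a rule can be relaxed safely remains a clinical judgment that this calculation only informs.

```python
from typing import Callable

Patient = dict[str, float]
Criterion = Callable[[Patient], bool]

# Hypothetical eligibility criteria for illustration only.
CRITERIA: dict[str, Criterion] = {
    "age 18-75": lambda p: 18 <= p["age"] <= 75,
    "eGFR >= 60": lambda p: p["egfr"] >= 60,
    "HbA1c 7-10%": lambda p: 7.0 <= p["hba1c"] <= 10.0,
    "BMI < 35": lambda p: p["bmi"] < 35,
}


def eligible(patient: Patient, criteria: dict[str, Criterion]) -> bool:
    return all(rule(patient) for rule in criteria.values())


def leave_one_out_gain(patients: list[Patient]) -> dict[str, int]:
    """Extra eligible patients gained by dropping each criterion on its own."""
    baseline = sum(eligible(p, CRITERIA) for p in patients)
    gains = {}
    for name in CRITERIA:
        relaxed = {k: v for k, v in CRITERIA.items() if k != name}
        gains[name] = sum(eligible(p, relaxed) for p in patients) - baseline
    return gains


cohort = [
    {"age": 54, "egfr": 85, "hba1c": 8.1, "bmi": 31},
    {"age": 67, "egfr": 52, "hba1c": 7.6, "bmi": 29},
    {"age": 71, "egfr": 48, "hba1c": 8.8, "bmi": 33},
    {"age": 45, "egfr": 90, "hba1c": 9.2, "bmi": 37},
    {"age": 79, "egfr": 70, "hba1c": 7.9, "bmi": 27},
    {"age": 60, "egfr": 58, "hba1c": 7.2, "bmi": 30},
]
print(leave_one_out_gain(cohort))
```

In this toy cohort the kidney-function cutoff excludes the most otherwise-eligible patients, which is the kind of finding a protocol team would then review clinically.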

Accelerating Patient Recruitment and Improving Diversity

Patient recruitment is the biggest bottleneck in clinical trials, and it’s where AI delivers some of its most dramatic results. Natural Language Processing (NLP) algorithms can scan millions of electronic health records (EHRs) far faster than manual chart review, extracting key eligibility data from unstructured clinical notes, a task that is impossible for humans to perform at scale. These NLP models are trained to recognize and extract specific clinical concepts, such as “myocardial infarction” or “hemoglobin A1c > 7.0%,” even when they are written with typos, abbreviations, or varied phrasing. Automated matching platforms then connect patients to suitable trials far more quickly than manual screening allows.
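Production systems typically rely on trained NLP models, but the core task, turning varied free-text phrasing into a structured value that can be compared with an eligibility threshold, can be sketched with a rule-based pattern. The spellings covered and the > 7.0% threshold are illustrative assumptions; a regular expression like this does not handle negation, dates, or typos the way a trained model might.

```python
import re
from typing import Optional

# Hypothetical pattern covering a few common spellings (HbA1c, Hb A1c, hgb a1c,
# hemoglobin A1c, A1C), then up to 20 non-digit characters before "<number>%".
A1C_PATTERN = re.compile(
    r"\b(?:h(?:b|gb|emoglobin)\s*)?a1c\b\D{0,20}?(\d{1,2}(?:\.\d+)?)\s*%",
    re.IGNORECASE,
)


def extract_a1c(note: str) -> Optional[float]:
    """Return the first HbA1c percentage mentioned in a free-text note, or None."""
    match = A1C_PATTERN.search(note)
    return float(match.group(1)) if match else None


def meets_a1c_criterion(note: str, threshold: float = 7.0) -> bool:
    """Illustrative eligibility rule: HbA1c strictly above `threshold` percent."""
    value = extract_a1c(note)
    return value is not None and value > threshold


for note in ["Pt with T2DM, HbA1c 8.2% on metformin.",
             "hgb a1c: 6.4 % at last visit",
             "No recent labs."]:
    print(repr(note), "->", extract_a1c(note), meets_a1c_criterion(note))
```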

This process not only accelerates enrollment but also actively improves trial diversity. By analyzing demographic and geographic data, AI can identify underserved patient populations and help sponsors target recruitment efforts in those communities, leading to more equitable and generalizable trial results.
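A basic version of this diversity check compares the make-up of enrolled (or pre-screened) patients with the make-up of the population expected to use the drug, and flags groups that fall well below their share. The group labels, shares, and the 0.8 ratio threshold are hypothetical choices for illustration.

```python
def representation_ratios(enrolled: dict[str, int],
                          population_share: dict[str, float]) -> dict[str, float]:
    """Each group's share of enrollment divided by its share of the target population."""
    total = sum(enrolled.values())
    return {group: (enrolled.get(group, 0) / total) / share
            for group, share in population_share.items()}


def underrepresented(enrolled, population_share, threshold=0.8):
    """Groups enrolled at less than `threshold` times their population share."""
    ratios = representation_ratios(enrolled, population_share)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical enrollment counts and shares of the patient population with the disease.
enrolled = {"Group A": 140, "Group B": 20, "Group C": 30, "Group D": 10}
population = {"Group A": 0.55, "Group B": 0.20, "Group C": 0.15, "Group D": 0.10}
print(underrepresented(enrolled, population))
```

A ratio below 1 means a group is enrolling below its share of the disease population; the flagged groups are where targeted outreach would be prioritized.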

Enhancing Trial Execution and Data Collection

During the trial execution phase, AI enables a shift from periodic, site-based data collection to continuous, real-time monitoring. Remote monitoring using wearables (like smartwatches) and digital health apps captures a rich, continuous stream of patient data, from vital signs and activity levels to medication adherence and self-reported symptoms. This provides a far more comprehensive view of a patient’s health than occasional clinic visits.
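A common starting point for working with such continuous streams is to compare each new reading with the patient’s own recent baseline rather than a population norm. The sketch below flags heart-rate readings that sit more than a chosen number of standard deviations from a rolling window; the window length, threshold, and readings are hypothetical, and a real workflow would route flags to clinical review rather than act on them automatically.

```python
from collections import deque
from statistics import mean, stdev


def rolling_flags(readings, window=12, z_threshold=3.0):
    """Yield (index, value, z) for readings far from the patient's rolling baseline."""
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0:
                z = (value - mu) / sigma
                if abs(z) > z_threshold:
                    yield i, value, round(z, 1)
        history.append(value)  # the baseline then includes this reading


# Hypothetical resting heart rate (bpm) sampled by a wearable.
hr = [62, 64, 63, 61, 65, 62, 63, 64, 62, 61, 63, 64, 63, 62, 98, 64, 63]
for idx, bpm, z in rolling_flags(hr):
    print(f"reading {idx}: {bpm} bpm (z = {z})")
```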

From this data, AI can identify digital biomarkers—objective, quantifiable measures of disease progression or treatment response. For example, in a Parkinson’s disease trial, AI can analyze smartphone accelerometer data to create a digital biomarker for gait stability, providing a more sensitive and continuous measure of motor function than a bi-monthly clinical assessment. For safety, AI algorithms constantly monitor incoming data streams to detect adverse event patterns and even predict risks before they become critical, enabling proactive patient management and intervention.
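As a simplified illustration of how a gait feature might be derived from accelerometer data, the sketch below detects steps as peaks in acceleration magnitude and reports the coefficient of variation (CV) of step intervals, a commonly used gait-variability measure in which higher values indicate less regular walking. The peak threshold, minimum step spacing, sampling rate, and synthetic signals are assumptions; a validated digital biomarker needs far more careful signal processing and clinical validation.

```python
import math
from statistics import mean, pstdev


def detect_steps(magnitude, fs_hz, threshold=1.2, min_gap_s=0.3):
    """Return times (s) of local maxima above `threshold` (in g) at least `min_gap_s` apart."""
    steps = []
    for i in range(1, len(magnitude) - 1):
        t = i / fs_hz
        is_peak = magnitude[i - 1] < magnitude[i] >= magnitude[i + 1]
        if is_peak and magnitude[i] > threshold and (not steps or t - steps[-1] >= min_gap_s):
            steps.append(t)
    return steps


def step_interval_cv(step_times):
    """Coefficient of variation (%) of the intervals between consecutive steps."""
    intervals = [later - earlier for earlier, later in zip(step_times, step_times[1:])]
    return 100 * pstdev(intervals) / mean(intervals)


def synthetic_signal(step_times, fs_hz=100, seconds=8.0):
    """Synthetic acceleration magnitude (g): gravity plus a Gaussian bump at each step."""
    return [
        1.0 + sum(0.6 * math.exp(-(((i / fs_hz) - s) / 0.05) ** 2) for s in step_times)
        for i in range(int(fs_hz * seconds))
    ]


# Hypothetical walks: evenly spaced steps vs. irregular step timing.
regular = [0.5 + 0.55 * k for k in range(13)]
irregular = [0.5]
for gap in [0.45, 0.70, 0.50, 0.65] * 3:
    irregular.append(irregular[-1] + gap)

for label, steps in [("regular", regular), ("irregular", irregular)]:
    detected = detect_steps(synthetic_signal(steps), fs_hz=100)
    print(f"{label}: {len(detected)} steps, interval CV = {step_interval_cv(detected):.1f}%")
```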

Streamlining Data Analysis and Submission

In the final stages, AI automates and accelerates data analysis and regulatory submission. It handles tedious but critical tasks like data cleaning, harmonization of data from different sources, and error detection, ensuring data quality and integrity. Instead of waiting until a trial’s conclusion, real-time analysis provides a continuous stream of insights. This allows for the early detection of safety signals or efficacy trends, enabling sponsors to make informed go/no-go decisions much earlier in the process, potentially saving hundreds of millions of dollars on trials destined to fail.
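One common way to formalize an early go/no-go decision is a Bayesian futility rule: at an interim look, compute the posterior probability that the true response rate exceeds a clinically meaningful target, and stop if that probability is low. The sketch assumes a binary response endpoint, a uniform Beta(1, 1) prior, and a hypothetical 30% target and 10% stopping cutoff; real designs prespecify these choices and check their operating characteristics by simulation.

```python
from math import comb


def prob_rate_exceeds(responders: int, n: int, target: float,
                      prior_a: int = 1, prior_b: int = 1) -> float:
    """P(true response rate > target | data) under a Beta-binomial model.

    With integer Beta(a, b) parameters, P(rate > x) equals
    P(Binomial(a + b - 1, x) <= a - 1), so only a finite sum is needed.
    """
    a = prior_a + responders
    b = prior_b + n - responders
    m = a + b - 1
    return sum(comb(m, j) * target**j * (1 - target)**(m - j) for j in range(a))


def interim_decision(responders, n, target=0.30, futility_cutoff=0.10):
    p = prob_rate_exceeds(responders, n, target)
    return ("stop for futility" if p < futility_cutoff else "continue"), round(p, 3)


print(interim_decision(2, 20))   # weak signal at the interim look
print(interim_decision(10, 20))  # promising signal
```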

Generative AI is now being used to assist in drafting regulatory documents, clinical study reports, and manuscripts, significantly compressing submission timelines. Finally, predictive models can forecast final trial outcomes with increasing accuracy, helping companies optimize their R&D investments and prioritize the most promising therapies for advancement.

At Lifebit, our federated platform is built to support these AI-driven workflows, ensuring security, compliance, and interoperability across the entire trial lifecycle.

The AI Toolkit: Key Technologies Driving the Change

Behind every breakthrough in AI for clinical trials sits a powerful set of technologies working in concert to solve problems that once seemed insurmountable. Understanding this toolkit is key to appreciating the depth of the current revolution.

Machine Learning (ML) and Predictive Analytics

Machine Learning (ML) is the engine of predictive analytics, comprising algorithms that learn to identify complex patterns and make predictions from vast datasets without being explicitly programmed. This includes supervised learning, where models are trained on labeled data (e.g., patient records with known treatment outcomes) to make predictions, and unsupervised learning, which can discover hidden patterns in unlabeled data, such as identifying novel patient subgroups. In clinical trials, its key applications include:

- Patient Stratification: Identifying patient subgroups most likely to respond positively (or negatively) to a treatment based on their genomic, clinical, or lifestyle data. This enables smaller, more targeted, and more successful trials.

- Biomarker Discovery: Sifting through petabytes of genomic, proteomic, and imaging data to find novel digital or molecular biomarkers that can predict therapeutic response or disease progression.

- Predicting Treatment Response: Building models that forecast how an individual patient will react to a drug, paving the way for truly personalized trial designs and, eventually, personalized medicine.

- Risk Scoring: Creating algorithms that predict a patient’s risk for disease progression, non-adherence to the protocol, or developing specific adverse events, allowing for proactive mitigation.
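To make the supervised-learning idea concrete, here is a minimal risk-scoring sketch: a logistic regression fitted by plain gradient descent to predict protocol non-adherence. The features (missed visits in the prior quarter, distance to site) and the eight training records are invented for illustration; a real model would need far more data, held-out validation, and calibration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi  # dLoss/dlogit
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def risk_score(w, b, x):
    """Predicted probability of the outcome for feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical training data: [missed visits last quarter, distance to site in 100 km]
X = [[0, 0.1], [0, 0.5], [1, 0.2], [1, 0.8], [2, 0.3], [3, 0.9], [4, 0.6], [3, 0.2]]
y = [0, 0, 0, 0, 1, 1, 1, 1]  # 1 = later became non-adherent

w, b = fit_logistic(X, y)
print(f"low-risk profile:  {risk_score(w, b, [0, 0.2]):.2f}")
print(f"high-risk profile: {risk_score(w, b, [4, 0.9]):.2f}")
```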

Natural Language Processing (NLP) for Unstructured Data

An estimated 80% of valuable clinical data is locked away in unstructured text formats like physicians’ notes, pathology reports, and discharge summaries. This data has historically been inaccessible to automated analysis. Natural Language Processing (NLP), a branch of AI focused on understanding human language, unlocks this data by:

- Analyzing Clinical Notes: Extracting critical information from free-text records to rapidly determine a patient’s eligibility for a trial, matching complex criteria in minutes instead of hours.

- Processing Real-World Evidence (RWE): Making sense of high-volume data from EHRs, insurance claims, and patient registries to design trials that better reflect real-world clinical practice and outcomes.

- Automating Literature Reviews: Using techniques like literature-based discovery to scan and synthesize millions of scientific papers. This can help researchers identify novel drug targets or repurposing opportunities by connecting disparate pieces of information that no human team could process.

- Understanding Patient-Reported Outcomes: Analyzing patient speech or text from diaries, forums, or social media to capture direct feedback on symptoms, side effects, and quality of life, providing a richer understanding of the patient experience.
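A recurring difficulty in mining clinical notes and patient diaries is negation: “denies chest pain” should not count as chest pain. Rule-based approaches inspired by the NegEx algorithm look for a negation cue shortly before a concept. The sketch below is a much-simplified version with hypothetical term lists and a five-word window; it ignores uncertainty (“possible rash”), family history, and many other cases real systems handle.

```python
import re

# Hypothetical vocabularies for illustration; real systems use curated lexicons.
SYMPTOMS = ["chest pain", "nausea", "headache", "fatigue", "rash"]
NEGATION_CUES = {"no", "not", "denies", "denied", "without", "negative"}
SCOPE_BREAKERS = {"but", "however", "although", "except"}  # end a negation's scope


def find_symptoms(text: str, window: int = 5) -> dict[str, bool]:
    """Map each mentioned symptom to True (affirmed) or False (only negated mentions)."""
    results: dict[str, bool] = {}
    for sentence in re.split(r"[.;!?\n]+", text.lower()):
        tokens = re.findall(r"[a-z]+", sentence)
        for symptom in SYMPTOMS:
            target = symptom.split()
            for i in range(len(tokens) - len(target) + 1):
                if tokens[i:i + len(target)] != target:
                    continue
                negated = False
                # Walk backwards from the mention through at most `window` tokens.
                for tok in reversed(tokens[max(0, i - window):i]):
                    if tok in SCOPE_BREAKERS:
                        break
                    if tok in NEGATION_CUES:
                        negated = True
                        break
                results[symptom] = results.get(symptom, False) or not negated
    return results


print(find_symptoms("Patient denies chest pain or nausea but reports a mild headache."))
print(find_symptoms("No rash. Fatigue improving."))
```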

Digital Twins and In-Silico Trials

Digital twins are dynamic, virtual models of a patient that simulate human biology and disease progression over time. These models are constructed by integrating multi-modal data—including genomics, proteomics, imaging, and clinical history—into complex mathematical frameworks. This allows researchers to conduct in-silico (computer-based) trials, testing hypotheses virtually before or alongside human trials. Key uses include:

- Simulating Drug Efficacy: Testing a compound’s effect on simulated biological pathways to predict its effectiveness and potential side effects without exposing human patients to risk.

- Optimizing Dosing: Running dozens of virtual dosing scenarios to identify the optimal dose, frequency, and duration for a specific patient population, minimizing toxicity and maximizing efficacy.

- Augmenting Control Arms: Using virtual patients, built from historical clinical trial and real-world data, to create a synthetic or digital control group. This can reduce the number of patients required for placebo arms, addressing a key ethical challenge in research and accelerating recruitment.
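A digital twin is far more elaborate, but the dosing use case can be illustrated with the simplest pharmacokinetic model: a one-compartment model with repeated IV bolus doses, where steady-state peak and trough concentrations have closed forms (peak = (D/V) / (1 - e^(-kτ)), trough = peak · e^(-kτ), with k = ln 2 / half-life). The volume, half-life, candidate regimens, and therapeutic window below are hypothetical; the sketch screens regimens against the window and, among those that fit, prefers the longest dosing interval, then the lowest peak.

```python
import math
from itertools import product


def steady_state_peak_trough(dose_mg, tau_h, volume_l, half_life_h):
    """One-compartment IV bolus model: steady-state peak and trough (mg/L)."""
    k = math.log(2) / half_life_h       # elimination rate constant (1/h)
    remaining = math.exp(-k * tau_h)    # fraction left at the end of each dosing interval
    peak = (dose_mg / volume_l) / (1 - remaining)
    return peak, peak * remaining


def screen_regimens(doses, intervals, volume_l=50.0, half_life_h=6.0,
                    min_trough=1.0, max_peak=10.0):
    """Return regimens whose steady-state levels stay inside the window, best first."""
    feasible = []
    for dose, tau in product(doses, intervals):
        peak, trough = steady_state_peak_trough(dose, tau, volume_l, half_life_h)
        if trough >= min_trough and peak <= max_peak:
            feasible.append((dose, tau, round(peak, 2), round(trough, 2)))
    # Prefer fewer doses per day (longer tau), then the lowest peak exposure.
    return sorted(feasible, key=lambda r: (-r[1], r[2]))


for regimen in screen_regimens(doses=[100, 200, 400], intervals=[6, 12, 24]):
    print("dose %d mg every %d h -> peak %.2f, trough %.2f mg/L" % regimen)
```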

Here’s how traditional trial arms compare to digital twin approaches:

| Feature | Traditional Trial Arms | Digital Twin Arms (AI-driven) |
|---|---|---|
| Patient Enrollment | Large groups, often difficult and slow to recruit | Smaller human groups, complemented by virtual patients |
| Ethical Considerations | Placebo arms raise concerns, especially for severe diseases | Ethical use of historical data, reduced need for placebo groups |
| Cost & Time | High cost, lengthy recruitment and execution | Lower cost, faster “recruitment” (virtual patient generation) |
| Data Generation | Limited by physical visits and patient compliance | Comprehensive, real-time virtual data generation |
| Trial Flexibility | Rigid protocols, costly amendments | Adaptive designs, rapid simulation of changes |
| Personalization | Limited to broad patient groups | Highly personalized virtual patient models |

Navigating the Problems: Challenges and Ethical Considerations

While AI for clinical trials promises immense benefits, its implementation is not without significant challenges and ethical considerations that must be addressed thoughtfully and proactively to ensure the technology is used responsibly and equitably.

Data Bias, Privacy, and Security

Effective and ethical AI is entirely dependent on high-quality, representative data, which introduces three major challenges:

- Algorithmic Bias: AI models trained on historically biased or non-diverse data will learn and perpetuate those biases, potentially widening health inequities. For instance, a diagnostic AI for skin cancer trained primarily on data from fair-skinned individuals may perform poorly at identifying melanoma in patients with darker skin tones. Mitigating bias requires a conscious effort to collect diverse datasets and audit algorithms for performance across different demographic groups.

- Data Privacy and Security: Clinical trials involve highly sensitive personal health information. Using this data requires strict adherence to regulations like GDPR in Europe and HIPAA in the US. Patients must have absolute trust that their information is secure, de-identified where appropriate, and used only for its intended purpose. Any breach of this trust could undermine participation in future research.

- Data Integrity: The principle of “garbage in, garbage out” is paramount in AI. Models require data that is accurate, complete, and consistently formatted to produce reliable results. Issues like missing data, inconsistent terminology across different hospital systems, and simple data entry errors can all corrupt the training process and lead to flawed, unreliable models.
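The bias audit mentioned above can be sketched as computing a performance metric separately for each demographic group and flagging large gaps. The example uses sensitivity (the share of true cases the model catches) across Fitzpatrick skin-type groups, echoing the melanoma example; the labels, predictions, and 0.1 gap threshold are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(groups, y_true, y_pred):
    """Sensitivity (true-positive rate) per group; groups with no positives are skipped."""
    tp, pos = defaultdict(int), defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        if t == 1:
            pos[g] += 1
            tp[g] += p == 1
    return {g: tp[g] / pos[g] for g in pos}


def flag_gaps(metric_by_group, max_gap=0.1):
    """Groups trailing the best-performing group by more than `max_gap`."""
    best = max(metric_by_group.values())
    return {g: round(best - m, 2) for g, m in metric_by_group.items() if best - m > max_gap}


# Hypothetical evaluation data: skin-type group, true melanoma label, model prediction.
groups = ["I-II"] * 6 + ["V-VI"] * 6
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1]

sens = sensitivity_by_group(groups, y_true, y_pred)
print(sens)
print(flag_gaps(sens))
```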

Federated learning offers a powerful technological solution to the privacy dilemma. This approach trains AI models across decentralized datasets without ever moving or centralizing raw patient data. At Lifebit, we use this privacy-preserving technology to enable secure collaboration while maintaining the strictest privacy and governance controls.
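The core idea can be sketched with federated averaging (FedAvg), one widely used federated learning algorithm: each site trains on its own data and shares only model parameters, which a coordinator averages in proportion to each site’s record count. This minimal illustration uses a one-parameter linear model and hypothetical site data; it is not a description of Lifebit’s implementation, and real deployments often add further protections such as secure aggregation.

```python
def local_update(w, xs, ys, lr=0.1, steps=5):
    """A few gradient steps on one site's mean squared error for y = w * x.
    Only the updated weight leaves the site; xs and ys never do."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def fed_avg(sites, rounds=20, w=0.0):
    """Federated averaging: weight each site's model by its number of records."""
    total = sum(len(xs) for xs, _ in sites)
    for _ in range(rounds):
        local_weights = [local_update(w, xs, ys) for xs, ys in sites]
        w = sum(len(xs) * wl for (xs, _), wl in zip(sites, local_weights)) / total
    return w


# Hypothetical sites whose data all follow y = 2x.
sites = [
    ([0.5, 1.0, 1.5], [1.0, 2.0, 3.0]),
    ([1.0, 2.0], [2.0, 4.0]),
    ([0.2, 0.4, 0.6, 0.8], [0.4, 0.8, 1.2, 1.6]),
]
print(f"Global model slope: {fed_avg(sites):.3f}")
```

Here every site shares the same underlying relationship, so the averaged model converges cleanly; with heterogeneous sites, FedAvg behaves less simply and typically needs more careful tuning.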

The Regulatory Landscape for AI in Clinical Trials

Regulators are cautiously optimistic, working to create frameworks that encourage innovation while ensuring patient safety and scientific validity. The FDA is actively developing guidance, as seen in its discussion paper on AI/ML in drug development, and under the EU AI Act, AI systems used in clinical settings, such as those that qualify as medical devices, can be classified as “high-risk” and subject to strict transparency, validation, and oversight requirements. The emerging consensus is clear: AI models and their outputs will not be accepted on faith. Sponsors must be able to demonstrate that their models are robust, generalizable, and validated on independent datasets. Regulators have also articulated “Good Machine Learning Practice” (GMLP) principles, first published jointly by the FDA, Health Canada, and the UK’s MHRA for medical devices, a framework analogous to Good Clinical Practice (GCP) that aims to ensure model development, validation, and monitoring are rigorous, documented, and transparent.
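Demonstrating generalizability usually means reporting performance on data the model never saw during development, ideally from different institutions. The minimal sketch below computes the area under the ROC curve (AUC) through its rank interpretation, the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, on an internal and an external test set. The scores and the 0.05 tolerance are hypothetical.

```python
def auc(labels, scores):
    """AUC as P(score_pos > score_neg), counting ties as one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical (labels, model scores) from the development site and an external site.
internal = ([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.7, 0.3, 0.2, 0.75])
external = ([1, 1, 1, 0, 0, 0], [0.8, 0.4, 0.6, 0.5, 0.3, 0.7])

auc_int, auc_ext = auc(*internal), auc(*external)
print(f"internal AUC {auc_int:.2f}, external AUC {auc_ext:.2f}")
if auc_int - auc_ext > 0.05:
    print("Performance drops on external data: investigate before relying on the model.")
```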

Overcoming Implementation and ‘Black Box’ Challenges

Widespread adoption of AI faces several practical hurdles:

- Cost and Talent: The high initial investment in AI infrastructure, computing power, and the global shortage of specialized data science talent can be significant barriers for many organizations.

- The ‘Black Box’ Problem: Many of the most powerful AI models, particularly deep learning networks, are not inherently interpretable. This “black box” nature makes it difficult for clinicians and regulators to understand or trust their recommendations. Building confidence requires the use of Explainable AI (XAI), a set of techniques that aim to illuminate how a model arrived at its conclusion. XAI methods like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) can highlight which input features (e.g., which lab values or words in a clinical note) most influenced a model’s prediction. This transparency is essential for debugging models, gaining clinician trust, and satisfying regulatory scrutiny.

- Integration and Interoperability: Healthcare IT systems are notoriously fragmented and siloed. Integrating new AI solutions with existing, non-interoperable Electronic Health Record (EHR) systems is a major technical and logistical challenge that can stall implementation.
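SHAP builds on Shapley values from cooperative game theory. For a model with only a few inputs, Shapley values can be computed exactly by enumerating feature subsets, filling in “absent” features from a baseline. The sketch below does this for a hypothetical three-feature risk model; the SHAP library uses faster approximations and a background dataset rather than a single baseline, so treat this as a conceptual illustration, not its API.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x) relative to model(baseline).

    A coalition S is evaluated with features in S taken from x and the rest
    from the baseline. Cost grows as 2**n, so this suits small n only.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical risk model with an interaction between two lab values.
def risk_model(features):
    creatinine, age_decades, crp = features
    return 0.8 * creatinine + 0.3 * age_decades + 0.1 * crp + 0.5 * creatinine * crp


patient = [1.4, 7.0, 2.0]
reference = [0.9, 5.0, 1.0]
phi = shapley_values(risk_model, patient, reference)
print([round(v, 3) for v in phi])
```

The values always sum to the difference between the patient’s prediction and the baseline prediction, which is what lets an explanation attribute the whole change in risk to individual inputs.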

At Lifebit, our federated platform is specifically designed to address these integration and security issues, enabling secure connections across disparate data sources while upholding the highest governance standards.

Frequently Asked Questions about AI in Clinical Trials

How does AI reduce the cost of clinical trials?

AI reduces costs by optimizing trial design to avoid expensive amendments, which affect 60% of trials. It dramatically accelerates patient recruitment, a major bottleneck, and each day cut from a trial can be worth millions in revenue for a high-value drug. AI also helps predict trial failures earlier, allowing resources to be redirected from studies unlikely to succeed. Finally, by automating manual tasks like data cleaning and report generation, AI shortens overall trial durations and improves resource allocation.

Can AI replace human researchers in clinical trials?

No. AI is a tool to augment human expertise, not replace it. AI excels at processing massive datasets and identifying complex patterns, freeing researchers from repetitive work. This allows them to focus on what humans do best: nuanced scientific judgment, creative problem-solving, ethical oversight, and compassionate patient care. AI provides the data-driven insights; researchers provide the wisdom and strategic vision.

Is data from AI-driven trials accepted by regulatory bodies like the FDA?

Increasingly, yes. Regulatory bodies like the FDA and EMA are actively developing frameworks for evaluating AI used in drug development, and they recognize its potential to accelerate the process. The key requirement is that the AI methods must be transparent, validated, and reliable. This means proving that models work on independent datasets and that the methodology is scientifically sound. As long as these standards are met, regulators are encouraging the responsible use of AI. Lifebit’s federated platform is built to meet these regulatory demands for governance, traceability, and security.

The Future is Federated: What’s Next for AI in Clinical Research?

The future of AI for clinical trials is not just about smarter algorithms, but about how we securely connect them to the world’s biomedical data. This is the promise of federated AI platforms, which bring the analysis to the data, not the other way around. This approach solves a core paradox: how to learn from vast, diverse datasets while ensuring patient data remains private and secure in its local environment.

Technologies like Generative AI are already automating documentation and report generation, while swarm learning (a decentralized variant of federated learning that removes the central coordinating server) enables models to train collaboratively across institutions without sharing raw data. This is especially powerful for rare disease research and for building equitable AI that works for all populations.

The common thread is secure cross-institutional collaboration. At Lifebit, we’ve built our platform on this principle. Our federated technology enables pharmaceutical companies and public health institutions to collaborate at scale without compromising security or compliance. Our platform components—the Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer)—work together to deliver AI-driven insights from siloed data.

This federated approach is better technology and better ethics. It allows researchers to learn from global datasets while honoring patient trust. The future of clinical research is collaborative, intelligent, and secure. We’re helping build that future, one federated connection at a time. Explore our secure, AI-ready platform to see how federated AI can accelerate your research while maintaining the highest standards of data privacy and governance.