From Lab to Life: How AI is Supercharging Drug Development

Why AI in Healthcare Research Matters Now

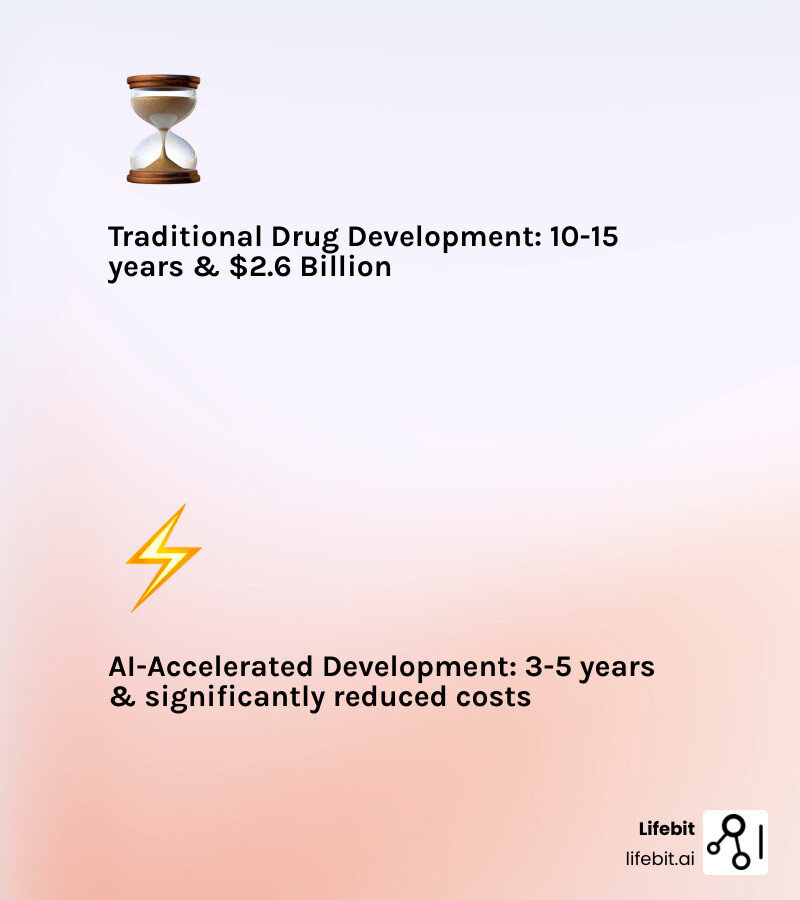

AI in healthcare research is changing how we find drugs, diagnose disease, and deliver personalized care. It’s cutting development timelines from years to months, slashing costs, and uncovering treatments that would have remained hidden using traditional methods alone.

What AI does in healthcare research:

- Accelerates drug discovery – Predicts promising drug candidates before lab testing, saving years of trial-and-error

- Improves diagnostic precision – Analyzes medical images with accuracy matching or exceeding human experts (87% sensitivity and 90% specificity in one FDA-approved system)

- Unlocks complex data – Makes sense of genomics, proteomics, and massive clinical datasets that are impossible to analyze manually

- Enables personalized medicine – Identifies which treatments will work best for individual patients based on their unique biology

- Reduces costs dramatically – Cuts radiotherapy planning time by up to 90% and streamlines clinical trial design

The impact is real and measurable. More than half of AI medical devices approved between 2015-2020 were for radiology, and AI algorithms are now detecting diabetic retinopathy with 87% sensitivity and 90% specificity—performance good enough to earn Medicare reimbursement.

But AI isn’t replacing researchers and clinicians. It’s augmenting their capabilities, handling the data-heavy grunt work so human experts can focus on the complex judgments and creative insights that machines can’t replicate.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, with over 15 years of experience in computational biology, AI, and genomics. My work has focused on building secure, federated platforms that enable AI in healthcare research to deliver real-world impact while protecting patient privacy and ensuring regulatory compliance.


How AI Accelerates Discovery and Delivers Precision

AI in healthcare research is revolutionizing every stage of research, from finding new drug candidates to improving diagnostics. By analyzing massive datasets in seconds, AI uncovers hidden patterns, speeds up decision-making, and helps researchers and clinicians act with confidence. Our goal is to empower researchers to mine data and push the boundaries of what research can achieve, and to see AI integrated into medical education as well as research. The Temerty Centre for Artificial Intelligence Research and Education in Medicine (T-CAIREM) in Canada, for example, is actively integrating machine learning and analytics into its research and education programs, demonstrating a commitment to this future vision https://tcairem.utoronto.ca/.

Boosting Diagnostic Accuracy and Speed with AI

One of the most immediate and impactful applications of AI in healthcare research is in enhancing diagnostic precision. AI models, especially deep learning, now interpret medical images—like X-rays, MRIs, and CT scans—with accuracy that rivals or exceeds human experts https://doi.org/10.1038/s41591-018-0316-z. This means earlier detection, fewer errors, and better outcomes for patients. The ability of AI to process and interpret various medical tests with high accuracy reduces the likelihood of physician errors https://doi.org/10.1016/j.glmedi.2024.100108.

Consider diabetic retinopathy detection. The Centers for Medicare & Medicaid Services approved Medicare reimbursement for the use of the Food and Drug Administration (FDA) approved AI algorithm ‘IDx-DR,’ which demonstrated an impressive 87% sensitivity and 90% specificity for detecting more-than-mild diabetic retinopathy. This is a testament to the robust diagnostic performance AI can offer https://www.wired.com/story/us-government-pay-doctors-use-ai-algorithms. More than half (58%) of AI/ML-based medical devices approved in the USA and 53% in Europe from 2015–2020 were approved or CE marked for radiological use, highlighting the maturity of AI in this field https://doi.org/10.1016/S2589-7500(20)30292-2.
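To make the two headline metrics concrete, here is a minimal sketch of how sensitivity and specificity are computed from screening results. The counts are hypothetical, chosen only so the arithmetic reproduces the 87% and 90% figures; they are not the IDx-DR trial data, and the function name is ours.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of patients with disease who are correctly flagged
    specificity = tn / (tn + fp)  # share of patients without disease who are correctly cleared
    return sensitivity, specificity


# Hypothetical counts for illustration only (not the actual trial data).
sens, spec = sensitivity_specificity(tp=87, fn=13, tn=180, fp=20)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

High sensitivity means few missed cases, while high specificity means few unnecessary referrals, which is why screening tools are usually judged on both numbers together.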

In pathology, AI in healthcare research is changing how we analyze tissue samples. AI algorithms are assisting in computational histopathology for cancer diagnosis, leading to more precise and consistent results https://doi.org/10.1186/s13000-023-01375-z. For example, AI can detect lymph node metastases in women with breast cancer with a level of accuracy comparable to a human pathologist, offering crucial support and a potential second opinion https://doi.org/10.1001/jama.2017.14585. Beyond diagnostics, AI is even cutting preparation time for radiotherapy planning for head and neck, and prostate cancers by up to 90%, allowing treatments to begin sooner.

The applications extend beyond imaging and pathology. In cardiology, AI algorithms can analyze electrocardiograms (ECGs) to detect conditions like atrial fibrillation with superhuman accuracy, often identifying subtle patterns that are invisible to the human eye. In neurology, machine learning models analyze MRI scans and cognitive test data to predict the progression of diseases like Alzheimer’s, enabling earlier intervention and better planning for patient care. Our research shows that AI’s ability to process and interpret diverse medical data with superior accuracy and speed is invaluable for early detection and improving outcomes.

| Diagnostic Task | Human Baseline | AI Performance (Example) | Source |
|---|---|---|---|
| Diabetic Retinopathy | Varies by clinician | 87% sensitivity, 90% specificity (IDx-DR) | https://www.wired.com/story/us-government-pay-doctors-use-ai-algorithms |
| Breast Cancer Metastasis | Varies by pathologist | Comparable to human experts | https://doi.org/10.1001/jama.2017.14585 |
| Radiotherapy Planning | Time-intensive | Up to 90% time reduction | https://pubmed.ncbi.nlm.nih.gov/33495491/ |
| Colorectal Cancer Genotyping | Manual, time-consuming | Can cut costs while maintaining accuracy | https://doi.org/10.3389/fonc.2021.630953 |

Changing Drug and Therapy Discovery

The journey from finding a new molecule to bringing a life-saving drug to market is notoriously long and expensive. AI in healthcare research is making drug discovery faster and more targeted by intervening at every stage. It predicts which compounds are most likely to work, and which are likely to fail, before they ever reach the lab, saving time and money https://pubmed.ncbi.nlm.nih.gov/31320117.

One of the most striking examples of AI’s power is protein structure prediction. DeepMind’s AlphaFold has revolutionized this field, solving a 50-year-old grand challenge in biology by predicting protein structures with unprecedented accuracy https://pubmed.ncbi.nlm.nih.gov/31942072. This capability is crucial for understanding disease mechanisms and designing drugs that bind precisely to their targets. AI is also accelerating antibiotic discovery. In 2019 and 2020, deep learning approaches led to the rapid identification of potent DDR1 kinase inhibitors and of new antibiotics, showcasing the potential for AI to tackle pressing global health challenges https://pubmed.ncbi.nlm.nih.gov/31477924, https://pubmed.ncbi.nlm.nih.gov/32084340. We can even make molecule discovery more efficient using AI, as highlighted by Canada’s National Research Council https://nrc.canada.ca/en/stories/using-artificial-intelligence-make-molecule-discovery-more-efficient.

Furthermore, AI optimizes clinical trials by improving patient recruitment, monitoring adherence, and even enabling adaptive trial designs that can change based on incoming data, making the entire process more efficient and effective https://pubmed.ncbi.nlm.nih.gov/27406349. Generative AI is also emerging as a powerful tool for de novo drug design, creating novel molecular structures with desired properties from scratch. Companies like Insilico Medicine have used AI to identify a novel target and design a new molecule for idiopathic pulmonary fibrosis, moving from discovery to the first human clinical trial in under 30 months—a fraction of the traditional time.

Unlocking Complex Data: AI’s Superpower in Healthcare Research

Healthcare data is exploding, from genomics to clinical notes. The ability of AI in healthcare research to analyze these complex datasets is unlocking new insights, driving personalized medicine, and revealing the root causes of disease. From Singapore’s efforts to build trust in AI for better patient care https://www.weforum.org/stories/2025/09/singapore-healthcare-ai/ to Imperial College London’s dedicated AI for healthcare initiatives https://www.imperial.ac.uk/stories/healthcare-ai/, the global push for data-driven insights is undeniable.

Making Sense of ‘Omics’ and Big Biological Data

AI is essential for analyzing high-dimensional ‘omics’ data, which includes genomics, proteomics, and metabolomics. These vast datasets hold the key to understanding individual biological differences. Machine learning algorithms can find genetic markers, predict treatment responses, and uncover the biology behind complex diseases https://pubmed.ncbi.nlm.nih.gov/25948244.

A key frontier is multi-omics integration, where AI combines data from genomics (the genetic blueprint), transcriptomics (gene expression), proteomics (proteins), and metabolomics (metabolites) to create a holistic biological picture of a patient. For example, in precision oncology, integrating these data layers can reveal why some patients with the same cancer type respond to immunotherapy while others do not. AI models can identify complex signatures across these data types to stratify patients into distinct subgroups, leading to more effective, personalized treatment strategies.

AI can also help in computational phenotyping, identifying patients with similar characteristics from complex datasets to better understand disease progression and treatment efficacy https://pubmed.ncbi.nlm.nih.gov/23826094/. This is crucial for advancing personalized medicine, where treatments are tailored to an individual’s unique genetic makeup and disease profile https://pubmed.ncbi.nlm.nih.gov/32961010. Our federated AI platform is designed precisely to handle such multi-omic data securely and at scale, enabling compliant research and pharmacovigilance across diverse datasets.

Mining Unstructured Data with Natural Language Processing (NLP)

Vast amounts of valuable information are buried in unstructured text—clinical notes, research papers, patient reports, and even social media data. Natural Language Processing (NLP), a branch of AI, extracts this data to predict disease trends, spot adverse drug reactions, and build richer patient profiles. This can be critical for generating real-world evidence. Ambient clinical intelligence, for instance, uses NLP to automate administrative tasks and optimize clinical workflows. By using microphones to capture doctor-patient conversations, these systems can auto-populate electronic health records (EHRs), draft clinical notes, and queue up prescriptions, saving clinicians hours of administrative burden each day.

NLP’s capabilities extend to analyzing social media data for public health insights, such as improving sexually transmitted infection (STI) prevention and control by identifying trends and misinformation https://doi.org/10.3389/fdgth.2025.1644041/full. In pharmacovigilance, NLP algorithms can scan patient forums and social media to detect potential adverse drug reactions far more quickly than traditional reporting systems, providing an early warning system for drug safety.

Large language models (LLMs) are pushing the boundaries further. Models like Google’s Med-PaLM 2 and OpenAI’s GPT-4 are being fine-tuned on medical data to perform a range of tasks. They are capable of summarizing complex clinical notes, generating discharge summaries, and even assisting in the interpretation of radiology reports for conditions like lung cancer https://pubmed.ncbi.nlm.nih.gov/40179389, https://pubmed.ncbi.nlm.nih.gov/37460753. This significantly reduces the burden on healthcare professionals, allowing them to focus on direct patient care. We are keenly aware that these tools, while powerful, must be implemented with careful consideration for data privacy, factual accuracy (to avoid ‘hallucinations’), and ethical guidelines.

Building Trust: Overcoming Challenges and Setting Ethical Standards

AI in healthcare research brings new challenges. Protecting data privacy, eliminating bias, and ensuring transparency are critical for building trust and delivering safe, effective solutions. The journey towards trustworthy AI in healthcare is a collaborative one, involving researchers, clinicians, policymakers, and patients.

Technical and Ethical Problems to Address

Some AI models are often called “black boxes”—their reasoning is hard to explain, making it difficult to understand how they arrived at a particular conclusion https://doi.org/10.1093/jamia/ocaa268. This lack of transparency is a major barrier to clinical adoption, as doctors need to be able to trust and verify the rationale behind a recommendation. To address this, the field of Explainable AI (XAI) is developing methods to make models more interpretable. Techniques like SHAP (SHapley Additive exPlanations) can highlight which specific features—such as pixels in a medical image or specific lab results—contributed most to an AI’s prediction. This allows a clinician to validate the AI’s reasoning against their own expertise, building confidence and enabling safer use.
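The idea behind Shapley-based attribution can be illustrated with a small sketch. The code below computes exact Shapley values for a hypothetical three-feature risk score by averaging each feature's marginal contribution over every feature ordering. The model, feature names, and values are invented for illustration, and "absent" features are represented by a reference patient, which is one common convention. The SHAP library relies on faster approximations, since exact enumeration is only practical for a handful of features.

```python
from itertools import permutations


def risk_score(features):
    """Hypothetical toy model: a weighted sum plus one interaction term."""
    return (0.5 * features["hba1c"]
            + 0.2 * features["age"]
            + 0.3 * features["hba1c"] * features["smoker"])


def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)       # start from the reference patient
        previous = model(current)
        for name in order:
            current[name] = instance[name]   # switch one feature to the patient's value
            value = model(current)
            totals[name] += value - previous  # marginal contribution in this ordering
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


# Illustrative, standardized feature values (assumptions, not clinical data).
patient = {"hba1c": 2.0, "age": 1.0, "smoker": 1.0}
reference = {"hba1c": 0.0, "age": 0.0, "smoker": 0.0}
attributions = shapley_values(risk_score, patient, reference)
print(attributions)
```

The attributions always add up to the difference between the patient's score and the reference score, so a clinician can see how much each input pushed the prediction up or down.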

If trained on biased data, these models can perpetuate or even worsen health disparities. A well-known example involved an algorithm widely used in US hospitals to predict which patients would need extra care. The algorithm used healthcare cost as a proxy for health needs, which led it to falsely conclude that Black patients were healthier than equally sick white patients, because less money was historically spent on their care. This resulted in the algorithm systematically underestimating the health needs of Black patients https://pubmed.ncbi.nlm.nih.gov/30508424. Mitigating such biases requires a concerted effort, including auditing datasets for representation, using fairness-aware algorithms, and continuous monitoring of model performance across different demographic groups post-deployment. Fairness, transparency, and data security must be built into the system from the start.
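One simple form of the post-deployment monitoring described above is to compare how often a model correctly flags truly high-need patients in each demographic group. The sketch below does this on hypothetical audit records; the group labels, counts, and function name are assumptions for illustration, not data from the cited study.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, truly_high_need, flagged_by_model) tuples."""
    flagged = defaultdict(int)
    positives = defaultdict(int)
    for group, truly_high_need, flagged_by_model in records:
        if truly_high_need:                      # only high-need patients count toward sensitivity
            positives[group] += 1
            flagged[group] += int(flagged_by_model)
    return {group: flagged[group] / positives[group] for group in positives}


# Hypothetical audit data: group "B" patients with real needs are flagged less often.
records = (
    [("A", True, True)] * 8 + [("A", True, False)] * 2
    + [("B", True, True)] * 5 + [("B", True, False)] * 5
    + [("A", False, False)] * 10 + [("B", False, False)] * 10
)
rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, f"gap={gap:.2f}")
```

A large gap between groups is a signal to revisit the training data or the choice of proxy label before the model influences more care decisions.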

Key ethical considerations include:

- Data privacy: Safeguarding sensitive patient information is paramount. Our federated platforms are built to ensure data remains secure within its original environment.

- Algorithmic bias: Actively identifying and mitigating biases in training data and model outputs is essential to ensure equitable outcomes.

- Explainability (XAI): Developing AI systems that can provide meaningful insights into their decisions, fostering trust and allowing clinicians to understand the rationale behind recommendations.

- Data security: Robust cybersecurity measures are needed to protect against breaches and misuse of health data.

The WHO’s guidance on AI ethics emphasizes principles such as protecting autonomy, promoting human well-being, safety and public interest, ensuring transparency and explainability, fostering responsibility and accountability, ensuring equity, and promoting AI that is responsive and sustainable https://www.who.int/publications/i/item/9789241511308-eng.pdf. These principles guide our approach to developing responsible and inclusive AI tools.

The Need for Clear Regulatory and Governance Guidelines

As AI in healthcare research evolves, so must the rules. Global and national bodies are creating new frameworks to ensure AI is safe, effective, and respects patient rights. The EU AI Act, for example, classifies many AI tools in healthcare, including AI-based medical devices, as high-risk, necessitating strict compliance with safety, transparency, and quality obligations https://artificialintelligenceact.eu/the-act/. Similarly, the UK is developing a pro-innovation approach to AI regulation https://www.gov.uk/government/consultations/ai-regulation-a-pro-innovation-approach-policy-proposals/outcome/a-pro-innovation-approach-to-ai-regulation-government-response. The US FDA is also adapting its approach for AI/ML-based Software as a Medical Device (SaMD), developing pathways that allow for iterative improvements to algorithms while ensuring continued safety and effectiveness.

For us, compliance with regulations like GDPR for Europe https://gdpr.eu/ and HIPAA for the USA https://www.hhs.gov/hipaa/for-professionals/privacy/laws-regulations/index.html is non-negotiable. Our federated approach allows AI models to train on data across multiple secure environments without the raw data ever leaving its source, inherently addressing many privacy concerns. The FUTURE-AI international consensus guideline provides a structured roadmap for trustworthy and deployable AI in healthcare, built on principles of fairness, universality, traceability, usability, robustness, and explainability https://www.bmj.com/content/388/bmj-2024-081554. This framework aligns perfectly with our commitment to ethical and responsible AI development.

The Future Is Here: AI Adoption, Trends, and Global Impact

AI in healthcare research is moving from theory to practice in labs and clinics worldwide. Adoption is accelerating, driven by proven results and clear value. The next wave will see even deeper integration—democratizing expertise and closing global health gaps.

Where AI in Healthcare Research Is Headed Next

The market for AI-powered medical tools is booming, especially in diagnostics. As we mentioned, over half of AI/ML-based medical devices approved in the USA and Europe from 2015–2020 were for radiological use. This trend is expected to continue, with AI becoming increasingly integrated into routine clinical practice. Mount Sinai’s AI initiatives in New York https://icahn.mssm.edu/about/artificial-intelligence and Israel’s developing AI-based healthcare industry https://www.trade.gov/market-intelligence/israel-developing-ai-based-healthcare-industry are prime examples of this rapid adoption.

The future of AI in healthcare research holds exciting possibilities:

- Generative AI for synthetic data: Creating realistic but artificial patient data is a game-changer for research. Since real patient data is highly sensitive and often siloed, it can be difficult to access for training robust AI models. Generative models, such as Generative Adversarial Networks (GANs), can learn the statistical patterns of a real dataset and then produce a new, synthetic dataset that mimics these properties without containing any real patient information. This synthetic data can be used to train and validate AI models, augment rare disease datasets, and be shared more freely among researchers, all while preserving patient privacy.

- Digital twins: This futuristic concept involves creating a dynamic, virtual representation of a patient. A digital twin would integrate an individual’s genomic data, real-time physiological data from wearables, clinical history, and imaging scans into a comprehensive computational model. This would allow doctors to simulate how a patient might respond to a specific drug or therapy in silico before administering it, test different surgical approaches virtually, and predict the individual’s disease trajectory over time. It represents the ultimate vision of personalized medicine, moving from reactive to proactive and predictive care.

- Federated learning: This privacy-preserving technique is crucial for collaborative research. Instead of pooling sensitive data into a central location, federated learning allows AI models to be trained on decentralized datasets located in different institutions (e.g., hospitals, research centers). The model ‘travels’ to the data, learns from it locally, and only a summary of the model’s updates (not the raw data itself) is sent back to a central server. This is a cornerstone of our work at Lifebit, enabling secure, real-time access to global biomedical and multi-omic data for large-scale, compliant research. This technology is vital for overcoming data silos and accelerating discoveries while upholding stringent privacy standards like GDPR and HIPAA.
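The federated learning loop described above can be sketched in a few lines. This is a simplified illustration of federated averaging on a one-parameter model, assuming two hypothetical sites with toy data; it is not a description of any production platform, and all function names and values are invented for the example.

```python
def local_update(weight, data, lr=0.1, epochs=20):
    """Gradient descent for y = weight * x, run entirely on one site's private data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight, sites, rounds=10):
    """Each round, sites train locally and share only (weight, sample count)."""
    for _ in range(rounds):
        updates = [(local_update(global_weight, data), len(data)) for data in sites]
        total = sum(n for _, n in updates)
        # The server combines the updates, weighted by how much data each site holds.
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight


# Hypothetical private datasets held at two institutions (true relationship: y = 2x).
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(1.0, 2.0), (3.0, 6.0), (0.5, 1.0)],
]
w = federated_average(0.0, sites)
print(f"learned weight: {w:.4f}")
```

Note that the raw `(x, y)` records never leave `local_update`; only the trained weight and a sample count reach the averaging step. Real deployments typically layer additional safeguards, such as secure aggregation, on top of this basic pattern.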

Using AI to Close the Global Health Gap

One of the most profound potentials of AI in healthcare research is its ability to address health inequalities and improve global health equity. AI can bring specialist-level diagnostics and decision support to underserved regions—making healthcare more accessible, affordable, and equitable worldwide https://pubmed.ncbi.nlm.nih.gov/32366172.

For example, AI-powered remote diagnostics can assist healthcare workers in low-resource settings in accurately diagnosing diseases like diabetic retinopathy from a simple retinal scan on a smartphone https://doi.org/10.1016/S2589-7500(19)30004-4. In another example, AI models can analyze climate and environmental data to predict malaria outbreaks, allowing public health officials to deploy resources proactively. Telehealth solutions, often augmented by AI, can extend the reach of medical expertise to remote areas, improving access to care and reducing the need for patients to travel long distances. The Canadian healthcare system, for instance, could greatly benefit from AI in improving access and efficiency https://www.mckinsey.com/industries/healthcare/our-insights/the-potential-benefits-of-ai-for-healthcare-in-canada.

However, deploying AI in these settings comes with unique challenges, including a lack of digital infrastructure, limited internet connectivity, and the need for models that can run on low-power devices. It is crucial that these solutions are not just ‘parachuted in’ but are co-designed with local communities and healthcare workers to ensure they are culturally appropriate and address real-world needs. By automating tasks, optimizing resource allocation, and providing predictive analytics, AI can significantly reduce healthcare costs, making quality care more affordable globally. Our federated approach ensures that valuable research insights can be derived from diverse global datasets without compromising data sovereignty or privacy, fostering collaborative research that directly benefits global health initiatives.

Frequently Asked Questions about AI in Healthcare Research

How is patient data kept private when using AI?

Patient data is protected through robust measures such as anonymization, de-identification, and secure, access-controlled platforms like Trusted Research Environments (TREs). Federated learning is a key technology we employ, allowing AI models to train on data across multiple locations without the data ever leaving its secure environment. This ensures strict compliance with regulations like GDPR in Europe and HIPAA in the USA, safeguarding patient privacy while enabling crucial research.

Can AI replace human researchers and doctors?

No. AI is designed to augment—not replace—human expertise. While AI excels at processing vast amounts of data and identifying complex patterns far beyond human capabilities, it lacks the critical thinking, empathy, and nuanced judgment that human experts bring. The most effective systems are “human-in-the-loop,” where AI empowers researchers and clinicians to make better, faster, and more informed decisions, freeing them to focus on the intricate and human-centric aspects of their work.

What is the biggest challenge to implementing AI in healthcare research?

The biggest challenges revolve around data quality and access, regulatory complexities, and the “black box” problem of explainability. High-quality, diverse, and well-annotated data is essential for training effective AI models, yet access to such data is often restricted by privacy concerns and data silos. Navigating the evolving regulatory landscape for AI medical devices and ensuring ethical governance is also crucial. Finally, building trust among clinicians and patients requires that AI systems are not only accurate and secure but also fair, transparent, and explainable, allowing users to understand and validate their decisions.

Conclusion: Partnering with AI for Faster, Safer Breakthroughs

AI in healthcare research is fundamentally changing the landscape of medical discovery by speeding up research, cutting costs, and enabling personalized care. By securely analyzing complex data at scale, AI brings life-saving innovations from the lab to patients faster than ever before. We believe the future of medicine is a powerful partnership between human expertise and artificial intelligence.

At Lifebit, we provide a next-generation federated AI platform that enables secure, real-time access to global biomedical and multi-omic data. With built-in capabilities for harmonization, advanced AI/ML analytics, and federated governance, we power large-scale, compliant research and pharmacovigilance across biopharma, governments, and public health agencies. Our platform is designed to deliver real-time insights, AI-driven safety surveillance, and secure collaboration across hybrid data ecosystems, ensuring that the promise of AI in healthcare research can be fully realized.

Discover how federated AI platforms can securely power your research