Beyond Vigilance: AI’s Role in Proactive Drug Safety

The Urgent Need for Smarter Drug Safety Surveillance

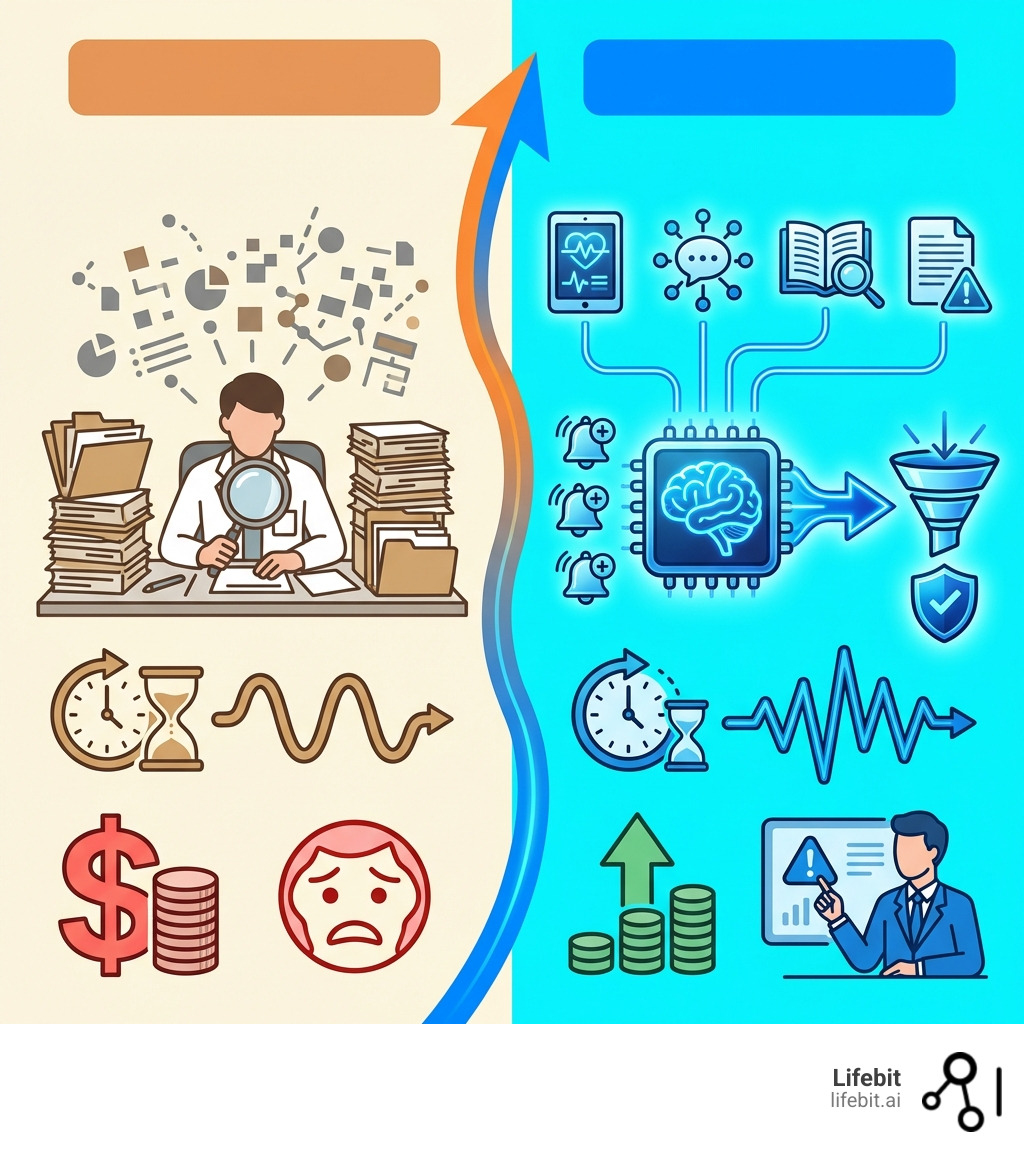

Drug safety AI is changing how pharmaceutical companies, regulatory agencies, and healthcare organizations detect, assess, and prevent adverse drug reactions—cutting analysis time from months to minutes while reducing costs by up to 70%.

Quick Answer: What Drug Safety AI Does

- Automates adverse event detection from EHRs, social media, and clinical reports using Natural Language Processing

- Accelerates signal detection by analyzing millions of safety reports in real-time (vs. weeks with manual review)

- Targets case processing, which consumes up to two-thirds of a typical pharmacovigilance budget, through intelligent automation

- Improves accuracy by reducing human error in repetitive tasks

- Enables proactive monitoring across diverse, global data sources without moving sensitive data

The numbers tell a stark story. The global pharmacovigilance market is valued at $8 billion, yet the estimated annual cost of managing adverse drug reactions reaches $30.1 billion. Traditional drug safety monitoring is reactive, fragmented, and heavily human-dependent, which leads to dangerous delays, compliance risks, and missed safety signals. In elderly populations alone, 15-35% of patients experience an adverse drug reaction during hospitalization, and over 90% of adverse events never reach official reporting channels.

The vast majority of safety data lives in unstructured formats: call-center logs, medical literature, social media posts, and clinical narratives buried in electronic health records. Manual review of these sources is slow, expensive, and inconsistent. Case processing activities alone consume up to two-thirds of a typical pharmaceutical company’s pharmacovigilance budget.

Enterprise customers implementing AI-driven pharmacovigilance solutions have reported efficiency gains of up to 70%, regulatory reporting that’s 90% faster, and substantial cost savings. These aren’t experimental results—they’re production outcomes from systems already deployed at scale.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent over 15 years building federated AI platforms that enable secure, real-time analysis of biomedical data—including drug safety AI systems that help regulatory agencies and pharmaceutical companies detect safety signals without moving sensitive patient data. Our work in computational biology, genomics, and AI has positioned us at the intersection of precision medicine and proactive pharmacovigilance.

The High Cost of Reactive Safety: Why Traditional Pharmacovigilance is Failing

Historically, pharmacovigilance (PV)—the science and activities relating to the detection, assessment, understanding, and prevention of adverse effects or any other drug-related problem—has been a fragmented, reactive, and heavily human-dependent process. This reliance on manual review across diverse data sources has led to significant inefficiencies, compliance risks, and potential brand damage. The core challenge lies in the sheer volume and complexity of data that needs to be continuously monitored to ensure drug safety.

The Challenge of Data Overload

Imagine a global network of adverse event reports, patient forums, and scientific publications, all constantly generating information about drug safety. This is the reality of pharmacovigilance. Spontaneous Reporting Systems (SRSs), like the FDA Adverse Event Reporting System (FAERS) and the WHO’s VigiBase, receive millions of Individual Case Safety Reports (ICSRs) annually. While invaluable, these systems are just one piece of the puzzle.

The vast majority of adverse drug events (ADEs) are actually captured in unstructured formats: emails and phone calls to patient support centers, social media posts, sales conversations, clinical notes within Electronic Health Records (EHRs), and online patient forums. Fewer than 5% of ADEs are reported via official channels, so a treasure trove of critical safety information remains hidden within free-text data.

The problem intensifies with data silos. Information is often scattered across various institutions and systems, making it incredibly difficult to integrate and analyze comprehensively. This fragmentation, coupled with the unstructured nature of much of the data, means that critical insights are often delayed, preventing a truly proactive approach to patient safety.

The Human Factor: Inefficiency and Risk

The traditional PV workflow places an immense burden on human reviewers. Tasks such as adverse event case intake, data entry, coding, and initial assessment are highly repetitive and consume a disproportionate amount of resources. In fact, case processing activities alone can consume up to two-thirds of a typical pharmaceutical company’s PV budget.
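To make the intake step concrete, here is a minimal sketch of one repetitive check that case-processing automation typically performs: confirming that an incoming report meets the four minimum criteria for a valid ICSR under ICH E2D (an identifiable reporter, an identifiable patient, at least one suspect drug, and at least one adverse event). The `IncomingReport` fields and the field-level rules are simplified assumptions for illustration, not a real safety database schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class IncomingReport:
    # Hypothetical, simplified intake record; real systems use ICH E2B(R3) data elements.
    reporter_name: Optional[str] = None
    reporter_contact: Optional[str] = None
    patient_initials: Optional[str] = None
    patient_age: Optional[int] = None
    patient_sex: Optional[str] = None
    suspect_drugs: List[str] = field(default_factory=list)
    adverse_events: List[str] = field(default_factory=list)


def missing_minimum_criteria(report: IncomingReport) -> List[str]:
    """Return the ICH E2D minimum criteria that this report does not yet meet."""
    missing = []
    # Assumption: any one reporter identifier makes the reporter identifiable.
    if not (report.reporter_name or report.reporter_contact):
        missing.append("identifiable reporter")
    # Assumption: any one of initials, age, or sex makes the patient identifiable.
    if not (report.patient_initials or report.patient_age is not None or report.patient_sex):
        missing.append("identifiable patient")
    if not report.suspect_drugs:
        missing.append("suspect drug")
    if not report.adverse_events:
        missing.append("adverse event")
    return missing


report = IncomingReport(
    reporter_contact="pharmacist@example.org",
    patient_age=72,
    suspect_drugs=["warfarin"],
)
print(missing_minimum_criteria(report))  # ['adverse event']
```

A report that fails the check would usually be routed for follow-up with the reporter rather than discarded.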

This human dependency introduces several inefficiencies and risks:

- Repetitive Tasks: Case intake, data entry, and coding are repetitive, moderate-complexity tasks that tie up skilled reviewers. AI that can reason across large, unstructured datasets and synthesize multimodal information can execute many of these tasks better, faster, and cheaper than humans.

- Error-Prone Operations: Manual data entry and review are susceptible to human error, which can lead to inconsistencies and potentially missed safety signals.

- Slow Reporting Cycles: The manual nature of processing and analyzing vast amounts of data means that regulatory reporting, which often has tight deadlines (e.g., 15 days for serious and unexpected ADRs), can be significantly delayed. This slow pace can prevent early detection of safety issues.

- High Resource Consumption: Human-intensive case processing absorbs a large share of PV budgets, while ADRs themselves carry an estimated annual management cost of $30.1 billion, so every delay in detecting them has a financial as well as a clinical price.

- Inconsistent Analysis: Variability in human interpretation can lead to inconsistent analysis of adverse event reports, making it harder to identify emerging patterns reliably.
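The deadline arithmetic behind these reporting cycles is simple; the problem is that manual processing eats into the window. A minimal sketch, assuming the 15-calendar-day rule for serious and unexpected ADRs mentioned above and treating "day zero" as the date the minimum criteria for a valid case were first received. The function names are illustrative, and other regulatory timelines are deliberately not modeled.

```python
from datetime import date, timedelta
from typing import Optional

EXPEDITED_WINDOW_DAYS = 15  # calendar days for serious and unexpected ADRs


def expedited_due_date(day_zero: date, serious: bool, unexpected: bool) -> Optional[date]:
    """Return the expedited submission deadline, or None if the 15-day rule does not apply.

    Assumption: day_zero is the date the minimum criteria for a valid case were first
    received. Other timelines (e.g. periodic reports) are out of scope for this sketch.
    """
    if serious and unexpected:
        return day_zero + timedelta(days=EXPEDITED_WINDOW_DAYS)
    return None


def days_remaining(due: date, today: date) -> int:
    """Calendar days left before the deadline (negative means overdue)."""
    return (due - today).days


due = expedited_due_date(date(2024, 3, 1), serious=True, unexpected=True)
print(due)                                     # 2024-03-16
print(days_remaining(due, date(2024, 3, 10)))  # 6
```

If triage and data entry take a week of that window, only about half the statutory time remains for medical review and submission.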

These challenges underscore the urgent need for a paradigm shift in pharmacovigilance, moving from a reactive, human-dependent model to one empowered by advanced AI.

From Data Mining to Deep Learning: The Evolution of AI in Pharmacovigilance

The journey of AI in pharmacovigilance has been one of continuous evolution, mirroring the broader advancements in artificial intelligence. What began with foundational data mining techniques has now transformed into sophisticated applications of machine learning and deep learning, enabling unprecedented capabilities in drug safety.

Early Data Mining and Statistical Methods

The earliest applications of AI in PV primarily focused on enhancing signal detection within spontaneous reporting systems. These methods aimed to identify disproportionate reporting of adverse events associated with specific drugs, hinting at potential safety concerns.
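Disproportionality analysis starts from a 2×2 contingency table built from a spontaneous-report database. The sketch below computes the proportional reporting ratio (PRR) and the reporting odds ratio (ROR) with a 95% confidence interval. These are frequentist relatives of the Bayesian methods that followed, not BCPNN or MGPS themselves; the counts are made up for illustration, and the screening rule in `is_signal` is one commonly used heuristic rather than a regulatory standard.

```python
import math
from typing import NamedTuple


class Disproportionality(NamedTuple):
    prr: float
    ror: float
    ror_ci_low: float
    ror_ci_high: float


def disproportionality(a: int, b: int, c: int, d: int, z: float = 1.96) -> Disproportionality:
    """PRR and ROR from a 2x2 table of spontaneous reports.

    a: drug of interest with the event     b: drug of interest, other events
    c: all other drugs with the event      d: all other drugs, other events
    All cells must be positive (no continuity correction is applied here).
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive")
    prr = (a / (a + b)) / (c / (c + d))
    ror = (a * d) / (b * c)
    se_log_ror = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(ROR)
    low = math.exp(math.log(ror) - z * se_log_ror)
    high = math.exp(math.log(ror) + z * se_log_ror)
    return Disproportionality(prr, ror, low, high)


def is_signal(a: int, result: Disproportionality, min_cases: int = 3) -> bool:
    # Heuristic: at least 3 cases and a lower 95% confidence bound of the ROR above 1.
    return a >= min_cases and result.ror_ci_low > 1.0


res = disproportionality(a=20, b=980, c=200, d=98800)
print(round(res.prr, 2), round(res.ror, 2))  # 9.9 10.08
print(is_signal(20, res))                    # True
```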

Pioneering statistical methods included:

- BCPNN (Bayesian Confidence Propagation Neural Network): Developed by Bate et al. in 1998, this method used Bayesian principles to identify unexpected associations between drugs and adverse events in databases like VigiBase, the WHO global database of ICSRs.

- MGPS (Multi-item Gamma Poisson Shrinker): Introduced by DuMouchel in 1999, MGPS applied Bayesian data mining to large frequency tables, such as those from the FDA Spontaneous Reporting System (the predecessor of today's FAERS), to detect signals.
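The shrinkage idea behind BCPNN can be sketched with the simplified information component (IC) used in later work, IC = log2((O + 0.5) / (E + 0.5)), where O is the observed number of reports for a drug-event pair and E is the number expected if drug and event were reported independently. This is a point estimate only, not the full BCPNN with credibility intervals, and the counts are hypothetical. The +0.5 terms pull estimates based on a handful of reports toward zero, which is exactly the instability these Bayesian methods were designed to tame.

```python
import math


def expected_count(a: int, b: int, c: int, d: int) -> float:
    """Expected drug-event count if drug and event were reported independently."""
    n = a + b + c + d
    return (a + b) * (a + c) / n


def information_component(a: int, b: int, c: int, d: int, shrinkage: float = 0.5) -> float:
    """Shrunk observed-to-expected ratio on a log2 scale (BCPNN-style IC point estimate)."""
    e = expected_count(a, b, c, d)
    return math.log2((a + shrinkage) / (e + shrinkage))


def raw_log_ratio(a: int, b: int, c: int, d: int) -> float:
    """Unshrunk log2(observed / expected), for comparison."""
    return math.log2(a / expected_count(a, b, c, d))


# Same 2x2 layout as a PRR/ROR table: (drug+event, drug+other, other+event, other+other).
common = (20, 980, 200, 98800)  # well-supported pair
rare = (1, 9, 9, 99981)         # a single, possibly spurious report
for cells in (common, rare):
    print(round(raw_log_ratio(*cells), 2), round(information_component(*cells), 2))
```

For the single-report pair the raw ratio looks dramatic, while the shrunk IC stays modest; for the well-supported pair the two measures are close.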

These early approaches used Bayesian shrinkage to stabilize estimates for rare drug-event pairs, but they remained primarily statistical in nature. Traditional statistical methods of this kind typically achieve AUCs (Area Under the Receiver Operating Characteristic Curve) of 0.7–0.8 for classifying known causes of ADRs, yet they laid the groundwork for more complex AI. For more on the early days of data mining in this field, you can review scientific research on novel data-mining methodologies.

The Rise of Machine Learning and NLP

As data sources diversified and computational power grew, machine learning (ML) and Natural Language Processing (NLP) began to revolutionize PV. The ability of NLP to process and understand human language opened doors to extracting valuable insights from unstructured text data, a critical step given the prevalence of free-text narratives in adverse event reports and other sources.

Key developments included:

- Automated Data Extraction: NLP techniques are now used to automatically scan ICSR narratives, clinical notes, and medical literature to extract relevant clinical information, such as drug names, adverse reactions, and patient outcomes.

- Social Media Mining: The “patient voice” on social media platforms like Twitter and DailyStrength contains rich, real-time safety information. NLP techniques have been successfully used to extract ADR data from social media, achieving F-measures of 0.82 for DailyStrength and 0.72 for Twitter, demonstrating its effectiveness in capturing informal safety signals. A review of NLP for ADR extraction from social media further elaborates on these advancements.

- Knowledge Graphs: These symbolic AI structures integrate diverse data sources and capture complex relationships between drugs, adverse events, patient characteristics, and biological pathways. Knowledge graph-based methods have shown remarkable performance, achieving an AUC of 0.92 in classifying known causes of ADRs, significantly outperforming traditional statistical methods.

- Deep Learning: More recently, deep learning architectures, including recurrent neural networks (RNNs), convolutional neural networks (CNNs), and transformer models (like BERT), have emerged. These advanced models can process vast amounts of complex, multi-modal data (combining text, numerical, and even visual information) to predict ADRs with high accuracy, enabling a more nuanced understanding of drug safety profiles. For instance, deep neural networks have achieved AUCs of 0.94–0.99 for predicting duodenal ulcer ADRs and 0.76–0.96 for fulminant hepatitis ADRs.
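Part of the appeal of a knowledge graph is that a drug-ADR hypothesis comes with a traceable chain of relationships. A minimal sketch, assuming a toy graph stored as (subject, relation, object) triples: a breadth-first search returns the shortest chain linking a drug to an adverse event. The entities and relations are hypothetical placeholders, not real pharmacology, and published KG-based ADR methods typically learn from such graphs (for example, with embeddings or graph neural networks) rather than only querying paths.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)
Adjacency = Dict[str, List[Tuple[str, str]]]

# Hypothetical toy graph; names are placeholders, not real drugs or mechanisms.
TRIPLES: List[Triple] = [
    ("drug_A", "inhibits", "enzyme_E1"),
    ("enzyme_E1", "metabolizes", "drug_B"),
    ("drug_B", "associated_with", "event_bleeding"),
    ("drug_A", "binds", "receptor_R1"),
    ("receptor_R1", "involved_in", "pathway_P1"),
]


def build_adjacency(triples: List[Triple]) -> Adjacency:
    """Index outgoing edges by subject."""
    adj: Adjacency = {}
    for subj, rel, obj in triples:
        adj.setdefault(subj, []).append((rel, obj))
    return adj


def explain_path(adj: Adjacency, start: str, goal: str, max_hops: int = 4) -> Optional[List[Triple]]:
    """Breadth-first search for the shortest chain of triples linking start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop limit
        for rel, nxt in adj.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


adj = build_adjacency(TRIPLES)
for step in explain_path(adj, "drug_A", "event_bleeding") or []:
    print(*step)
```

Here the returned chain suggests an interaction hypothesis (drug_A affecting the metabolism of drug_B), which a safety reviewer could then check against the evidence.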

This evolution has transformed AI from a tool for statistical signal detection into a comprehensive framework for understanding, predicting, and preventing adverse drug reactions across the entire drug lifecycle.

Core Applications: How AI is Revolutionizing ADR and Signal Detection

The application of drug safety AI has moved beyond theoretical promise to deliver tangible benefits, revolutionizing how adverse drug reactions (ADRs) are detected and how safety signals are identified. By leveraging real-world data and advanced analytical capabilities, AI enables a more proactive and efficient approach to drug safety surveillance. We are seeing efficiency gains of up to 70% and regulatory reporting that is 90% faster.

Automating Adverse Drug Reaction (ADR) Detection

AI’s strength lies in its ability to process and synthesize vast, complex, and often unstructured datasets from many sources, which lets it support PV workflows end to end, from case intake through assessment.

- Multi-modal Data Analysis: Modern AI systems can integrate and analyze data from diverse sources, including individual case safety reports (ICSRs), electronic health records (EHRs), insurance claims, social media, and biomedical literature. This multi-modal approach provides a comprehensive view of potential ADRs, capturing signals that might be missed by isolated data analysis. For example, some approaches combine textual information with visual aids to improve ADR detection.

- Natural Language Processing (NLP): NLP is fundamental to automating ADR detection from unstructured text. It can automatically extract critical information like drug names, adverse events, and patient demographics from clinical notes, call-center logs, and social media posts. Transformer models, such as BERT, have proven highly effective in these tasks, and RNN-based models have been developed specifically for extracting ADRs from social media. Named Entity Recognition (NER) and normalization techniques further refine this process, allowing for precise identification and categorization of medical terms.

- Deep Learning for Prediction: Deep learning frameworks are being developed to predict the seriousness of adverse reactions to drugs and even new, previously unknown ADRs. Research on deep learning for ADR prediction highlights the potential of these models.

- Causal Inference: Moving beyond mere correlation, AI is increasingly employed to establish causal relationships between drugs and adverse events, particularly in complex polypharmacy scenarios where patients take multiple medications. This is crucial for understanding true drug interactions and preventing future harm.
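The extraction and normalization steps can be illustrated with a deliberately simple, rule-based sketch: find drug and adverse-event phrases from small lexicons, map lay phrases such as "threw up" to a normalized term, and flag mentions preceded by a negation cue in the same sentence. Everything here is an assumption for illustration: the lexicons are hypothetical stand-ins for a controlled vocabulary such as MedDRA, and the negation rule is a crude NegEx-style heuristic. Production systems use trained NER and normalization models such as fine-tuned transformers.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical lexicons; real pipelines normalize to a controlled vocabulary such as
# MedDRA and rely on trained NER models rather than exact phrase lookup.
ADR_LEXICON: Dict[str, str] = {
    "headache": "Headache",
    "threw up": "Vomiting",
    "vomiting": "Vomiting",
    "rash": "Rash",
    "couldn't sleep": "Insomnia",
}
DRUG_LEXICON = {"ibuprofen", "metformin", "sertraline"}
NEGATION = re.compile(r"\b(?:no|not|denies|without)\b")
WINDOW = 20  # characters inspected before a mention (crude NegEx-style heuristic)


def extract(text: str) -> Tuple[List[str], List[Tuple[str, bool]]]:
    """Return (drugs, [(normalized_event, negated), ...]) found in free text."""
    lowered = text.lower()
    drugs = sorted(d for d in DRUG_LEXICON if re.search(rf"\b{re.escape(d)}\b", lowered))
    events: List[Tuple[str, bool]] = []
    for phrase, term in ADR_LEXICON.items():
        for m in re.finditer(rf"\b{re.escape(phrase)}\b", lowered):
            preceding = lowered[max(0, m.start() - WINDOW):m.start()]
            same_sentence = re.split(r"[.!?;]", preceding)[-1]  # ignore cues from earlier sentences
            events.append((term, bool(NEGATION.search(same_sentence))))
    return drugs, events


post = "Started sertraline last week, couldn't sleep and threw up twice. No rash though."
print(extract(post))
# (['sertraline'], [('Vomiting', False), ('Rash', True), ('Insomnia', False)])
```

The negated "rash" mention is kept but flagged, so downstream logic can decide whether to discard it rather than silently losing the information.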

Enhancing Signal Detection and Proactive Surveillance

AI-powered algorithms can detect potential safety signals hidden within vast amounts of data, enabling PV experts to identify and assess emerging risks earlier and more accurately than traditional methods.

- Advanced Signal Detection Algorithms: Beyond early disproportionality methods, machine learning algorithms like Gradient Boosting Machines (GBM) have shown high accuracy. For instance, a GBM model achieved an AUC of 0.95 for detecting safety signals for specific drugs like nivolumab and docetaxel.

- Real-time Monitoring and Active Surveillance: AI facilitates real-time pharmacovigilance by continuously monitoring live data streams from various sources. The FDA’s Sentinel System, for example, uses automated algorithms across large healthcare databases to identify drug-outcome associations and has conducted over 250 safety analyses. You can learn more about the FDA’s Sentinel Initiative.

- Duplicate Detection and Prioritization: The Uppsala Monitoring Centre (UMC), which manages VigiBase, employs sophisticated AI. Its vigiMatch algorithm can process approximately 50 million report pairs per second to detect duplicate ICSRs, ensuring data accuracy. Furthermore, UMC’s vigiRank algorithm improves signal detection by incorporating multiple evidence aspects beyond traditional disproportionality analysis, helping to prioritize the most critical signals.

- Industry Examples of Efficiency: Leading AI-powered pharmacovigilance platforms have reported significant improvements, including a 40–50% reduction in false positive signals and an 80% acceleration in signal evaluation compared to traditional methods. These systems demonstrate substantial noise reduction and efficiency gains by ingesting multi-channel data.
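Duplicate detection is a good example of the record-linkage reasoning these systems automate. The sketch below is a simplified probabilistic matching score in the Fellegi-Sunter style, not vigiMatch itself: each field that agrees adds log2(m/u), and each field that disagrees adds log2((1 − m)/(1 − u)), where m and u are the probabilities that the field agrees for true duplicates and for unrelated reports. The field list, probabilities, and threshold are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

# Hypothetical per-field probabilities:
# m = P(field agrees | true duplicates), u = P(field agrees | unrelated reports).
FIELD_PROBS: Dict[str, Tuple[float, float]] = {
    "patient_age": (0.95, 0.05),
    "patient_sex": (0.98, 0.50),
    "country": (0.99, 0.20),
    "suspect_drug": (0.97, 0.02),
    "event_term": (0.90, 0.01),
    "onset_date": (0.85, 0.001),
}
THRESHOLD = 10.0  # hypothetical cut-off; in practice tuned on labeled duplicate pairs


def match_score(r1: Dict[str, Optional[str]], r2: Dict[str, Optional[str]]) -> float:
    """Sum of log2 likelihood ratios over fields; missing values contribute nothing."""
    score = 0.0
    for name, (m, u) in FIELD_PROBS.items():
        v1, v2 = r1.get(name), r2.get(name)
        if v1 is None or v2 is None:
            continue
        if v1 == v2:
            score += math.log2(m / u)  # agreement is evidence for a duplicate
        else:
            score += math.log2((1 - m) / (1 - u))  # disagreement is evidence against
    return score


a = {"patient_age": "67", "patient_sex": "F", "country": "SE", "suspect_drug": "drug_X",
     "event_term": "Hepatitis", "onset_date": "2023-05-02"}
b = dict(a, onset_date=None)  # same case resubmitted without an onset date
c = dict(a, patient_age="34", event_term="Rash", onset_date="2023-09-14")
print(match_score(a, b) > THRESHOLD, match_score(a, c) > THRESHOLD)  # True False
```

Rare agreements (such as an identical onset date) carry far more weight than common ones (such as sex), which is what lets this style of scoring separate true duplicates from coincidental similarity.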

By automating these processes, AI augments human medical reviewers, allowing them to focus on interpreting complex signals and making critical decisions, ultimately leading to a more proactive and robust drug safety ecosystem.

Navigating the Problems: Key Challenges in Implementing Drug Safety AI

While the potential of drug safety AI is immense, implementing it is far from straightforward. As we integrate AI into such a critical field, we must navigate challenges related to algorithmic transparency, data quality, bias, and regulatory acceptance. These issues are not mere technicalities; they directly affect patient safety and public trust.

The “Black Box” Problem: Why Explainable AI (XAI) is Crucial for Drug Safety AI

One of the most pressing challenges in deploying advanced AI models, particularly deep learning, is their “black box” nature. These complex models can deliver highly accurate predictions, but often without providing clear, human-understandable explanations for their decisions. In pharmacovigilance, where lives are at stake, this lack of transparency is a major impediment.

- Trust and Regulatory Compliance: For AI-driven PV systems to gain regulatory acceptance and public trust, they must be explainable. Regulatory bodies, including the FDA, require clear justifications for AI outputs. We need to understand why an AI flagged a certain drug-ADR association, not just that it did. This interpretability ensures that stakeholders can validate the AI’s insights and confidently act upon them.

- XAI Methodologies: Explainable AI (XAI) aims to shed light on these black boxes. LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) are widely used XAI libraries that have been applied in PV research, for example to explain models predicting adverse outcomes with up to 72% accuracy. These tools identify which features or data points most influenced a given prediction. However, as some researchers argue, for high-stakes decisions, inherently interpretable models might be preferable to post-hoc explanations of complex black-box models. For a deeper dive into these methods, consider this perspective on explainable AI methods.
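SHAP builds on Shapley values from cooperative game theory. For a model with only a few inputs, those values can be computed exactly by enumerating every feature coalition, which makes the idea easy to inspect. A minimal sketch, assuming a hypothetical toy risk score and a reference patient whose values stand in for "feature absent". The SHAP library approximates the same quantity efficiently for real models; this is not its API.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List, Set

Features = Dict[str, float]


def shapley_values(model: Callable[[Features], float], x: Features, baseline: Features) -> Features:
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition take their baseline value (an interventional
    assumption). Cost grows as 2^n, so this only suits a handful of features.
    """
    names: List[str] = list(x)
    n = len(names)

    def value(coalition: Set[str]) -> float:
        mixed = {f: (x[f] if f in coalition else baseline[f]) for f in names}
        return model(mixed)

    phi: Features = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))  # marginal contribution of f
        phi[f] = total
    return phi


def risk_score(p: Features) -> float:
    # Hypothetical toy model with an age x renal interaction; not a validated clinical score.
    return (0.5 * p["age_over_65"]
            + 0.8 * p["n_concomitant_drugs"] / 10
            + 1.2 * p["renal_impairment"] * p["age_over_65"])


patient = {"age_over_65": 1.0, "n_concomitant_drugs": 8.0, "renal_impairment": 1.0}
reference = {"age_over_65": 0.0, "n_concomitant_drugs": 2.0, "renal_impairment": 0.0}
phi = shapley_values(risk_score, patient, reference)
print({k: round(v, 3) for k, v in phi.items()})
```

The contributions always sum to `risk_score(patient) - risk_score(reference)`, and the interaction term is split equally between age and renal impairment, which is the kind of attribution a reviewer can sanity-check.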

Mitigating Bias and Complexity in Drug Safety AI Models

AI models are only as good as the data they are trained on. Several factors can introduce bias and complexity, hindering their reliability and generalizability.

- Algorithmic Bias: A significant concern is dataset bias. AI models trained primarily on data from European or North American populations may struggle to detect ADRs in patients from Asia or Africa, or other underrepresented groups, due to differences in genetics, lifestyle, co-morbidities, and drug metabolism. This can lead to missed safety signals for specific demographics. Addressing this requires diverse and representative training data, as detailed in a survey on bias and fairness in machine learning.

- Polypharmacy: Patients, especially the elderly (who already face a higher risk of ADRs), often take multiple medications simultaneously. Disentangling the effects of individual drugs from those of drug-drug interactions in these polypharmacy scenarios is extremely challenging. AI models need advanced causal inference capabilities (e.g., causal graph neural networks, InferBERT, Bayesian networks) to move beyond correlation and identify true causality.

- Temporal Dynamics (Concept Drift): Drug safety profiles are not static. New ADRs can emerge over time, and the understanding of existing ones can evolve. AI models must be capable of adapting to these “concept drifts,” requiring continuous learning and retraining to remain relevant and accurate.

- Data Quality and Harmonization: Integrating multi-modal data sources (EHRs, social media, claims, ICSRs) requires robust data quality checks and harmonization efforts. Inconsistent data formats, missing values, and varying terminologies can severely impact AI model performance.
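Concept drift is often monitored with sequential change-detection tests. A minimal sketch using the Page-Hinkley test on a hypothetical weekly metric (for example, the share of incoming reports that mention a given event): it accumulates deviations from the running mean and raises an alarm once the cumulative rise exceeds a threshold. The `delta` and `threshold` values are illustrative assumptions; in practice an alarm would trigger human review and possibly retraining, not automatic model changes.

```python
from typing import Iterable


class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a monitored stream.

    delta tolerates small fluctuations; threshold (lambda) sets how much cumulative
    deviation triggers an alarm. Both defaults here are illustrative assumptions.
    """

    def __init__(self, delta: float = 0.005, threshold: float = 0.5):
        self.delta = delta
        self.threshold = threshold
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0      # m_t: cumulative deviation from the running mean
        self.cum_min = 0.0  # M_t: smallest m_t seen so far

    def update(self, x: float) -> bool:
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.cum_min = min(self.cum_min, self.cum)
        return self.cum - self.cum_min > self.threshold


def first_alarm(stream: Iterable[float], detector: PageHinkley) -> int:
    """Index of the first observation that triggers an alarm, or -1 if none."""
    for i, x in enumerate(stream):
        if detector.update(x):
            return i
    return -1


weekly_share = [0.10] * 20 + [0.30] * 10  # hypothetical: the event share jumps in week 20
print(first_alarm(weekly_share, PageHinkley()))  # 22 (the third week after the shift)
```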

The Regulatory Landscape: FDA’s EDSTP and Ethical Frameworks

Regulatory bodies worldwide are actively engaging with the integration of AI in healthcare, recognizing both its potential and the need for robust oversight.

- FDA’s Emerging Drug Safety Technology Program (EDSTP): The U.S. Food and Drug Administration (FDA) has established the CDER Emerging Drug Safety Technology Program (EDSTP) to facilitate dialogue between industry and the agency. This program aims to manage and transfer knowledge within the FDA regarding AI and other emerging technologies in PV, informing potential regulatory and policy approaches. The FDA is keenly interested in how industry establishes the credibility and trustworthiness of AI models, emphasizing aspects like human-led governance, data quality, bias mitigation, and model evaluation. You can learn more about the CDER Emerging Drug Safety Technology Program (EDSTP).

- Ethical Frameworks and Compliance: Beyond specific programs, overarching ethical frameworks and regulations guide AI use. The EU AI Act, for instance, classifies many AI systems used in healthcare, such as those that are regulated medical devices, as high-risk, demanding transparency, traceability, and human oversight. Data privacy regulations like GDPR (Europe) and HIPAA (USA) are paramount, ensuring that sensitive patient data used by AI remains secure and confidential. The UK, through the MHRA, is also leading efforts to ensure the safe use of AI in healthcare. These frameworks collectively emphasize patient welfare, privacy, transparency, and accountability in AI-driven PV.

Navigating these challenges requires a collaborative effort between AI developers, pharmaceutical companies, regulatory agencies, and healthcare providers to ensure that drug safety AI is not only powerful but also trustworthy, fair, and compliant.

The Future is Now: Emerging Trends Shaping AI-Powered Drug Safety

The landscape of drug safety AI is continuously evolving, driven by rapid advancements in technology and a growing demand for more proactive and precise pharmacovigilance. We are at the cusp of a new era where AI will not only augment human capabilities but also open up entirely new paradigms for ensuring patient safety.

Here are the key trends shaping the future of drug safety AI:

- Federated Learning: Enhancing collaboration without sacrificing privacy.

- Quantum Computing: Revolutionizing complex data analysis.

- Blockchain Technology: Ensuring data integrity and secure sharing.

- In Silico Trials & Digital Twins: Proactive safety assessments through virtual simulations.

- Continuously Learning Models: Adapting to new safety data in real-time.

- Multi-lingual Natural Language Processing (NLP): Globalizing data insights.

- Integration of Multi-Omic & Real-World Data: Comprehensive patient safety profiles.

Federated Learning: Enhancing Collaboration Without Sacrificing Privacy

One of the most promising advancements addressing data privacy and bias is federated learning. This innovative approach allows AI models to be trained on decentralized datasets located at various institutions (e.g., hospitals, research centers) without ever requiring the raw data to leave its original source.

- Privacy Preservation: In regions like Europe with strict GDPR regulations, and the USA with HIPAA, federated learning is a game-changer. It enables collaborative model development and improvement while keeping sensitive patient data secure and private, addressing a major ethical and regulatory concern.

- Mitigating Algorithmic Bias: By allowing models to learn from diverse, geographically distributed datasets, federated learning can help mitigate algorithmic bias. Models can be trained on data representing various populations (e.g., from different continents like Europe, North America, Asia, Africa), leading to more robust and generalizable safety predictions. For more insights, refer to research on the future of digital health with federated learning.

- Global Collaboration: This technology fosters unprecedented global collaboration among pharmaceutical companies, public health agencies, and research institutions, allowing collective intelligence to improve drug safety without compromising proprietary or patient-identifiable information.
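The core mechanism can be sketched as federated averaging (FedAvg). A minimal sketch, assuming a one-feature logistic-regression model and three hypothetical sites with differently shifted populations: each site runs a few gradient steps on its own rows, and only the resulting weights are sent back and averaged in proportion to each site's sample count. Real deployments add secure aggregation, privacy protections, and far richer models; none of that is shown, and this is not a description of any particular platform.

```python
import math
import random
from typing import List, Tuple

Dataset = List[Tuple[List[float], int]]  # (features, label) rows held privately by one site


def predict(w: List[float], x: List[float]) -> float:
    """Logistic model; w[0] is the bias term."""
    z = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def local_update(w: List[float], data: Dataset, lr: float = 0.5, epochs: int = 5) -> List[float]:
    """Full-batch gradient descent on the logistic loss, using only this site's rows."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w


def fed_avg(sites: List[Dataset], n_features: int, rounds: int = 30) -> List[float]:
    """FedAvg: each site trains locally; the server averages weights by sample count."""
    global_w = [0.0] * (n_features + 1)
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        # Only model weights leave each site; the raw rows stay where they are.
        local_ws = [local_update(global_w, d) for d in sites]
        global_w = [
            sum(len(d) * lw[j] for d, lw in zip(sites, local_ws)) / total
            for j in range(n_features + 1)
        ]
    return global_w


def make_site(rng: random.Random, n: int, shift: float) -> Dataset:
    """Hypothetical site data: one standardized risk feature; label 1 when it is positive."""
    rows = []
    for _ in range(n):
        x = max(-1.0, min(1.0, rng.uniform(-1.0, 1.0) + shift))
        rows.append(([x], int(x > 0)))
    return rows


rng = random.Random(0)
# Three sites of different sizes whose populations are shifted in different directions.
sites = [make_site(rng, 200, 0.0), make_site(rng, 80, 0.3), make_site(rng, 120, -0.3)]
w = fed_avg(sites, n_features=1)
print(round(predict(w, [0.8]), 3), round(predict(w, [-0.8]), 3))
```

Weighting by sample count keeps a small site from dominating the global model, while still letting its differently distributed data shape the decision boundary.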

As a federated AI platform provider, we at Lifebit are at the forefront of enabling this secure, collaborative research, ensuring that insights from vast, siloed datasets can be unlocked for drug safety without ever moving the underlying data.

Next-Generation Technologies on the Horizon

Beyond federated learning, several other cutting-edge technologies are ready to further transform drug safety AI:

- Quantum Computing: While still in its early stages, quantum computing holds the potential to process and analyze data at speeds and scales currently unimaginable. For pharmacovigilance, this could mean simulating drug-body interactions with unparalleled precision, identifying subtle ADRs that conventional computing might miss, and accelerating the discovery of complex safety signals.

- Blockchain Technology: Blockchain offers a decentralized and immutable ledger system that can improve data integrity, traceability, and secure sharing in PV. It could be used to create a tamper-evident record of adverse event reports, drug manufacturing batches, and patient data, ensuring trust and transparency across the drug supply chain and PV processes.

- In Silico Trials and Virtual Patient Simulations: The development of “digital twins” or virtual patient simulations will allow for proactive safety assessments. Instead of waiting for adverse events to occur in real patients, AI can simulate how a drug might affect different patient populations under various conditions, predicting potential ADRs before a drug even reaches clinical trials.

- Continuously Learning AI Models: Future AI models in PV will be designed for continuous learning, automatically updating and refining their knowledge as new safety data emerges. This ensures that the systems remain adaptive to the temporal dynamics of drug safety profiles (concept drift) and can identify emerging risks in real-time.

- Generative AI (LLMs): The recent explosion of Large Language Models (LLMs) will significantly impact PV. They can act as “PV copilots,” assisting safety specialists in summarizing complex case narratives, performing quality control on reports, and even drafting sections of regulatory documents, freeing up human experts for higher-level analysis and decision-making.

- Multi-lingual NLP: As drug development and usage are global endeavors, AI-powered multi-lingual NLP will be crucial for processing safety data from diverse linguistic sources, breaking down language barriers in global pharmacovigilance.
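The tamper-evidence property of a blockchain comes from hash chaining. A minimal sketch, assuming a single append-only log of hypothetical case-version records: each entry stores the SHA-256 hash of its content plus the previous entry's hash, so editing any stored record breaks verification from that point on. This shows only the hash-chain building block; a real blockchain adds distribution across parties and a consensus protocol.

```python
import hashlib
import json
from typing import Dict, List


def _digest(payload: Dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the payload and the previous hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class HashChainLedger:
    """Append-only log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, payload: Dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        h = _digest(payload, prev)
        self.entries.append({"payload": payload, "prev": prev, "hash": h})
        return h

    def verify(self) -> bool:
        """Recompute every hash; any edited payload or broken link fails verification."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(entry["payload"], prev) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


ledger = HashChainLedger()
ledger.append({"case_id": "CASE-0001", "drug": "drug_X", "event": "Rash", "version": 1})
ledger.append({"case_id": "CASE-0001", "drug": "drug_X", "event": "Rash", "version": 2})
print(ledger.verify())                            # True
ledger.entries[0]["payload"]["event"] = "Nausea"  # simulate tampering with a stored record
print(ledger.verify())                            # False
```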

These emerging trends collectively point towards a future where drug safety AI becomes an indispensable tool, enabling a truly proactive, predictive, and personalized approach to safeguarding public health.

Conclusion

The journey from reactive vigilance to proactive prediction in drug safety is not just an aspiration; it’s a rapidly unfolding reality powered by drug safety AI. The challenges of traditional pharmacovigilance—from overwhelming data volumes and human-dependent inefficiencies to the high costs of adverse drug reactions—are being systematically addressed by AI-driven solutions.

We’ve seen how AI has evolved from early data mining techniques to sophisticated machine learning and deep learning, now capable of automating adverse drug reaction detection, enhancing signal detection, and enabling real-time surveillance across diverse, multi-modal data sources. These advancements are translating into tangible benefits, with enterprise customers reporting up to 70% efficiency gains and 90% faster regulatory reporting.

However, the path forward requires careful navigation of complexities such as algorithmic bias, the “black box” problem of AI, and the intricate regulatory landscape. Explainable AI (XAI), robust bias mitigation strategies, and collaborative regulatory initiatives like the FDA’s EDSTP are crucial for building trust and ensuring the responsible deployment of AI in this high-stakes domain.

The future of drug safety AI is bright, with emerging trends like federated learning promising to open up global data insights while preserving privacy, and next-generation technologies like quantum computing and blockchain ready to further revolutionize data analysis and integrity.

At Lifebit, we are committed to building the future of proactive drug safety. Our federated AI platform enables secure, real-time access to global biomedical and multi-omic data, providing the foundational infrastructure for compliant, large-scale research and pharmacovigilance. By transforming the traditional vigilance model into a predictive, AI-driven workflow, we can significantly improve efficiency, achieve substantial cost savings, and, most importantly, improve patient outcomes worldwide.

Discover how federated AI can transform your pharmacovigilance strategy and empower your organization to move beyond vigilance to prediction. Explore our solutions today.