Beyond Integration: Understanding Data Harmonization

Why Data Quality, Not Just Data Quantity, Determines AI Success

How does data harmonization differ from data integration? Here’s the quick answer:

| Aspect | Data Integration | Data Harmonization |
|---|---|---|
| Purpose | Combines data from different sources into one location | Reconciles discrepancies and creates semantic consistency across datasets |
| Focus | Technical connectivity and data movement | Meaning, comparability, and analytical readiness |
| Output | Consolidated data repository | Analysis-ready, AI-compatible unified dataset |
| Analogy | Getting everyone in the same room | Making sure everyone speaks the same language |

Most organizations today face a familiar pain: data scattered across silos, stored in various formats, making it nearly impossible to trust or act on. You’ve spent millions consolidating data into warehouses and lakes, yet your analysts still spend 80% of their time cleaning and prepping data instead of generating insights. Your AI initiatives stall because models trained on inconsistent data produce unreliable results.

The problem isn’t just where your data lives—it’s whether your data can actually talk to itself.

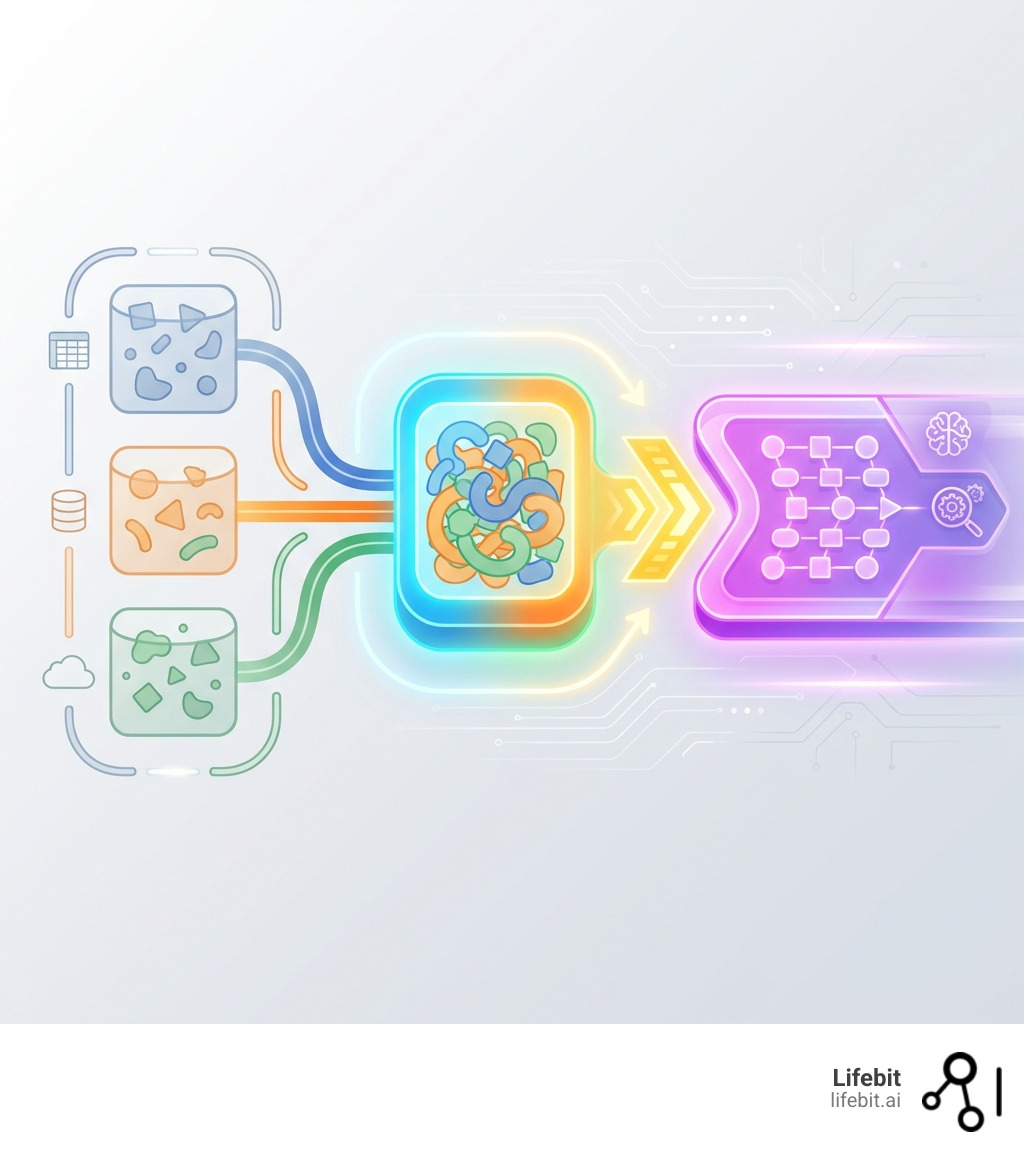

Integration focuses on moving data between systems and creating technical connectivity. Harmonization goes further by ensuring that integrated data maintains semantic consistency and business meaning across different sources, creating unified data models that preserve contextual relationships and enable advanced analytics and AI applications.

When only data integration is implemented, organizations often end up with what’s called a “data swamp”: a repository full of data that’s technically accessible but practically unusable for meaningful analysis. Harmonization addresses this by reconciling discrepancies such as different measurement units (Celsius vs. Fahrenheit), inconsistent naming conventions (“customer” vs. “client” vs. “user”), and varying data structures, transforming raw integrated data into an analysis-ready asset.

As Maria Chatzou Dunford, CEO and Co-founder of Lifebit, I’ve spent over 15 years working at the intersection of computational biology, AI, and biomedical data integration, tackling directly how data harmonization differs from data integration in the context of genomics and precision medicine. Understanding this distinction has been critical to enabling secure, federated analysis across global healthcare institutions, where semantic consistency can literally affect patient outcomes.


Data Integration: Getting Your Data in the Same Room

Imagine you’re hosting a massive potluck, and everyone brings their favorite dish. Data integration is like gathering all those dishes and putting them on one big table. You’ve successfully brought everything together in a central location, making it accessible. But are all the dishes compatible? Can they be eaten together? Probably not without some culinary chaos!

At its core, data integration is the process of combining data from various disparate sources into a single, unified data repository. Our primary goal with integration is often to centralize data, provide easier access, and establish technical connectivity between different systems. This process helps us consolidate information, ideally leading to a “single source of truth” where all relevant data resides. The methods for achieving this vary, each with its own strengths and weaknesses:

- ETL (Extract, Transform, Load): This is the traditional workhorse of data integration. Data is extracted from its source systems, transformed into a predefined structure and schema on a separate processing server, and then loaded into the target data warehouse. This approach is highly effective for structured, predictable data and is ideal for building well-governed, performance-optimized data repositories for business intelligence.

- ELT (Extract, Load, Transform): A more modern approach that has gained popularity with the rise of powerful, scalable cloud data lakes and warehouses. In ELT, raw data is extracted and loaded directly into the target repository with minimal upfront transformation. The transformation logic is applied later, within the data lake or warehouse itself, as needed for specific analyses. This provides greater flexibility, as it allows data scientists and analysts to work with the raw data and apply different transformations for different use cases. It’s particularly well-suited for handling large volumes of unstructured or semi-structured data.

- API-Based Integration and Data Streaming: For real-time needs, Application Programming Interfaces (APIs) allow different applications to communicate and exchange data directly. This is essential for operational processes, such as updating a customer record in a CRM immediately after a purchase is made on an e-commerce site. Data streaming technologies like Apache Kafka take this further, enabling a continuous flow of data from sources to targets, which is critical for applications like fraud detection and IoT analytics.

- Data Virtualization: This method creates an abstract data layer that provides a unified view of data from multiple sources without physically moving it. It queries the source systems in real-time and presents the combined results to the user. While it offers great agility and avoids the cost of creating a separate data store, it can put a significant performance load on the source systems and may not be suitable for complex analytical queries.
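To make the ETL/ELT contrast concrete, here is a minimal ELT sketch. It uses Python’s built-in `sqlite3` module as a stand-in for a cloud warehouse (an assumption for illustration), and the table and column names are hypothetical. Raw rows are loaded untouched, and the transformation is expressed afterwards as SQL running inside the store.

```python
import sqlite3

def elt_load_then_transform(raw_orders):
    """Load raw rows as-is, then transform inside the database (ELT)."""
    conn = sqlite3.connect(":memory:")
    # Load step: keep the data exactly as extracted (amounts still stored as text)
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)
    # Transform step: applied later, inside the store, as SQL over the raw table
    conn.execute(
        """
        CREATE VIEW orders_by_country AS
        SELECT UPPER(TRIM(country)) AS country,
               SUM(CAST(amount AS REAL)) AS total_amount
        FROM raw_orders
        GROUP BY UPPER(TRIM(country))
        """
    )
    return conn

conn = elt_load_then_transform([
    ("o1", "10.50", "uk"),
    ("o2", "4.50", " UK"),
    ("o3", "20", "usa"),
])
rows = conn.execute(
    "SELECT country, total_amount FROM orders_by_country ORDER BY country"
).fetchall()
print(rows)  # [('UK', 15.0), ('USA', 20.0)]
```

Because the raw table is preserved, a different team could define another view over the same rows. Note that this in-warehouse transform only fixes case and whitespace; it would not reconcile “USA” with “U.S.”, which is the kind of semantic work harmonization takes on.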

For example, we might integrate customer data from our CRM system, sales data from our e-commerce platform, and marketing campaign data from our automation tool into a central data warehouse. This gives us a consolidated view of customer interactions and sales figures. However, the limitation here is crucial: while the data is now in one place, its structural and semantic differences often remain. “Customer ID” in one system might be “Client_Num” in another, and “Product Category” in one might have different values or hierarchies than in another. We’ve put all the puzzle pieces in the same box, but they still have different shapes and colors.
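The limitation is easy to reproduce. In this minimal plain-Python sketch, the source records are hypothetical and simply echo the field names above. Integration pools every record into one collection, yet the customer identifier still lives under two different keys, so a lookup by “Customer ID” finds only half of the customer’s history.

```python
def integrate(*sources):
    """Naive integration: pool records from every source into one list.

    Records are tagged with their origin but otherwise left untouched,
    so each source keeps its own field names and value conventions.
    """
    combined = []
    for source_name, records in sources:
        for record in records:
            combined.append({"_source": source_name, **record})
    return combined

crm = [{"Customer ID": "C-001", "Product Category": "Electronics"}]
ecommerce = [{"Client_Num": "C-001", "Product Category": "Consumer Electronics > Phones"}]

warehouse = integrate(("crm", crm), ("ecommerce", ecommerce))

# Every field name that appears anywhere in the pooled data
all_fields = sorted({key for row in warehouse for key in row if key != "_source"})
print(all_fields)  # ['Client_Num', 'Customer ID', 'Product Category']

# Both records describe customer C-001, but only one is found under "Customer ID"
rows_for_c001 = [r for r in warehouse if r.get("Customer ID") == "C-001"]
print(len(rows_for_c001))  # 1
```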

Data Harmonization: Teaching Your Data to Speak the Same Language

Building on our potluck analogy, if data integration is putting all the dishes on one table, data harmonization is akin to ensuring all those dishes follow a consistent recipe or theme. It’s about transforming disparate ingredients (data) into a cohesive, delicious meal that makes sense together. Harmonization goes beyond merely combining data; it involves a deeper, more thoughtful process of reconciling discrepancies and creating semantic consistency across datasets.

Data harmonization is the process of bringing together data from different sources and aligning it on common standards so that it is comparable and usable in analysis. This is critical to achieving true analysis-readiness. It means we’re not just merging data, but actively working to resolve inconsistencies in data types, formats, structures, and semantics. For instance, if one dataset records patient age in years and another in days, harmonization converts them all to a common unit. If one system uses “USA” and another “U.S.” for the same country, harmonization standardizes them to a single value.
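Both examples in the paragraph above translate into a few lines of code. This is a minimal Python sketch that assumes hypothetical field names (`age`, `age_unit`, `country`) and a hand-written alias table; real pipelines usually drive such rules from a maintained mapping rather than hard-coded constants.

```python
# Hypothetical alias table: every known spelling maps to one canonical value
COUNTRY_ALIASES = {"usa": "USA", "u.s.": "USA", "u.s.a.": "USA", "united states": "USA"}

DAYS_PER_YEAR = 365.25  # average year length, accounting for leap years

def harmonize_record(record):
    """Return a copy of record with age in completed years and a canonical country."""
    out = dict(record)
    # Unit conversion: express age in years regardless of the source unit
    if record["age_unit"] == "days":
        out["age_years"] = int(record["age"] // DAYS_PER_YEAR)
    elif record["age_unit"] == "years":
        out["age_years"] = int(record["age"])
    else:
        raise ValueError(f"unknown age unit: {record['age_unit']!r}")
    # Value standardization: collapse spelling variants onto one value
    raw_country = record["country"].strip().lower()
    out["country"] = COUNTRY_ALIASES.get(raw_country, record["country"].strip())
    # Drop the source-specific representation once the unified one exists
    del out["age"], out["age_unit"]
    return out

site_a = {"age": 42, "age_unit": "years", "country": "USA"}
site_b = {"age": 15400, "age_unit": "days", "country": "U.S."}
print(harmonize_record(site_a))  # {'country': 'USA', 'age_years': 42}
print(harmonize_record(site_b))  # {'country': 'USA', 'age_years': 42}
```

Rounding down to completed years is a design choice made here for illustration; a study protocol might instead specify a different rounding rule, and unknown units fail loudly rather than being guessed.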

This process can be approached in two ways:

- Prospective Harmonization: This is the ideal scenario, where data collection standards are established before a project begins. For example, in a multi-center clinical trial, all participating hospitals agree to use the same forms, variable names, and measurement protocols from the outset. This minimizes downstream cleaning and alignment efforts but is often impractical in the real world, where we must work with pre-existing data.

- Retrospective Harmonization: This is the more common and challenging approach, where existing datasets collected under different standards are aligned after the fact. This requires a significant effort in data mapping, transformation, and validation to create a cohesive dataset from disparate sources.

A key component of data harmonization, especially retrospective harmonization, is the adoption of a Common Data Model (CDM). CDMs provide a standardized structure and terminology that allow data from different sources to be mapped and organized in a uniform way. A CDM is more than just a database schema; it’s a shared vocabulary and set of conventions that give the data its meaning. For example, the OMOP Common Data Model, widely used in healthcare, standardizes disparate electronic health record data (diagnoses, procedures, medications) into a common format with a common set of concepts. This allows researchers to write a single analysis script and run it across data from different hospitals worldwide, generating reproducible and comparable results. Other examples include the Financial Industry Business Ontology (FIBO) for standardizing financial concepts and the i2b2 (Informatics for Integrating Biology and the Bedside) model. To achieve this, harmonization often involves mapping local terms to standardized ontologies and terminologies, such as SNOMED CT for clinical terms or LOINC for lab tests, adding a layer of semantic richness that simple value mapping lacks.
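To illustrate what mapping local terms to standardized terminologies means in practice, the sketch below maps two hospitals’ local lab codes onto one shared concept in a simplified, CDM-style table. It is not the OMOP schema: the table layout, vocabulary entries, and concept identifiers are hypothetical placeholders (not real LOINC codes).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Measurement:
    """A row in a simplified, CDM-style measurement table (hypothetical schema)."""
    person_id: str
    concept_id: str    # standard concept the local code maps to
    value: float
    unit: str
    source_value: str  # original local code, kept for traceability

# Hypothetical vocabulary: (site, local code) -> (standard concept, canonical unit).
# The concept identifiers are placeholders, not real LOINC codes.
VOCABULARY = {
    ("hospital_a", "GLU"): ("STD-GLUCOSE-SERUM", "mg/dL"),
    ("hospital_b", "Glucose, serum"): ("STD-GLUCOSE-SERUM", "mg/dL"),
}

def to_cdm(site, person_id, local_code, value, unit):
    """Map one site-local lab result onto the shared model, or fail loudly."""
    key = (site, local_code)
    if key not in VOCABULARY:
        raise KeyError(f"no standard concept mapped for {key}")
    concept_id, canonical_unit = VOCABULARY[key]
    if unit != canonical_unit:
        raise ValueError(f"expected {canonical_unit}, got {unit}")
    return Measurement(person_id, concept_id, value, unit, local_code)

rows = [
    to_cdm("hospital_a", "p1", "GLU", 98.0, "mg/dL"),
    to_cdm("hospital_b", "p2", "Glucose, serum", 105.0, "mg/dL"),
]
# One analysis now works across both sites because it filters on the standard concept
glucose = [r.value for r in rows if r.concept_id == "STD-GLUCOSE-SERUM"]
print(glucose)  # [98.0, 105.0]
```

Keeping `source_value` alongside the standard concept preserves traceability back to each site’s original coding. A fuller mapping would also convert units rather than rejecting them.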

This hierarchical reorganization of data into a single, unified schema is what truly opens up deeper insights and enables advanced analytics and AI/ML applications. As research from the University of Michigan explains, the goal isn’t perfection—it’s actionable insights that drive real business decisions and scientific breakthroughs. We transform fragmented and inaccurate collections into actionable intelligence. Essentially, data harmonization ensures that our integrated data maintains semantic consistency and business meaning across different sources. It moves us beyond mere technical connectivity to create unified data models that preserve contextual relationships, which is vital for sophisticated analysis and AI applications. Without harmonization, even integrated data can lead to what we call “data chaos”—a situation where our data is present, but its inconsistencies prevent us from extracting its full value.

For a deeper dive into this transformative process, we recommend the article “A general primer on data harmonization.”

How Does Data Harmonization Differ from Data Integration? A Head-to-Head Breakdown

Understanding how data harmonization differs from data integration is crucial for any organization aiming to leverage its data effectively. Although the terms are often used interchangeably, they represent distinct stages in the data management lifecycle, with different objectives and outcomes.

Let’s use an analogy to clarify: Data integration is like a conductor gathering all the musicians into one orchestra pit. They are all in the same room, ready to play. Data harmonization, however, is ensuring that all those musicians are playing from the same sheet music, in the same key, and interpreting the composer’s intent uniformly. Without harmonization, even if all the instruments are present (integration), the resulting sound would be chaotic.

Here’s a head-to-head comparison:

| Aspect | Data Integration | Data Harmonization |
|---|---|---|
| Primary Goal | To consolidate data from various sources into a central repository for access and storage. | To ensure semantic consistency and comparability across integrated datasets, making them analysis-ready. |
| Core Process | Extracting data, basic cleansing/reformatting, and loading into a data warehouse or data lake (ETL/ELT). Focuses on data movement. | Variable mapping, value standardization, schema alignment, context preservation, and reconciliation of discrepancies. Focuses on data meaning. |
| Output | A consolidated data repository, potentially a “data swamp” if inconsistencies persist. | An analysis-ready, AI-compatible, unified dataset that is reliable and comparable. A “single source of meaning.” |
| Analogy | Gathering ingredients for a meal. | Following a recipe to ensure all ingredients combine harmoniously into a delicious dish. |
| Key Challenge | Technical connectivity, data volume, moving data efficiently. | Semantic conflicts, inconsistent definitions, varying data structures, maintaining data context. |
| Value Proposition | Centralized access, reduced silos, foundational for reporting. | Deeper insights, advanced analytics, AI/ML readiness, improved decision-making. |

How does data harmonization differ from data integration in scope and goals?

The scope and goals are where the fundamental difference lies. Data integration’s primary goal is to centralize data. We aim to pull data from disparate systems into one location, providing access and establishing technical connectivity. Think of it as building the plumbing system that gets water from various sources into a central reservoir. This is essential for foundational reporting and ensuring all our data is accessible.

Data harmonization, on the other hand, has a much broader and deeper goal: to create semantic meaning and ensure comparability across all our integrated datasets. Its purpose is to transform that pooled data into a format that enables advanced analytics and AI. Harmonization goes beyond mere technical connectivity; it’s about making sure that when we look at “patient age” from five different hospitals across the United Kingdom or USA, we are truly comparing apples to apples. This is particularly vital for scientific research on large-scale data collaboration, where researchers need to trust that data from different studies can be meaningfully combined.

How does data harmonization differ from data integration in process?

The processes involved in each are also distinct. Our data integration process typically involves:

- Data Extraction: Pulling raw data from various source systems.

- Basic Cleansing: Performing rudimentary clean-up, such as removing obvious errors or duplicates, but often without deep semantic understanding.

- Loading: Moving the data into a data lake or data warehouse.

This is largely a technical operation focused on efficient data movement and storage.
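The three integration steps above can be sketched as a tiny ETL job, again using Python’s built-in `sqlite3` as a stand-in target (an assumption for illustration; source names and columns are hypothetical). Note what it does not do: the loaded table still contains both “M” and “Male”.

```python
import sqlite3

def extract(sources):
    """Extract: pull raw rows from each (hypothetical) source system."""
    for rows in sources.values():
        yield from rows

def basic_cleanse(rows):
    """Basic cleansing: drop rows missing an ID and exact duplicates.

    No semantic work happens here: 'M' and 'Male' stay distinct values.
    """
    seen = set()
    for row in rows:
        if not row[0] or row in seen:
            continue
        seen.add(row)
        yield row

def load(rows, conn):
    """Load: write the cleansed rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS patients (patient_id TEXT, sex TEXT)")
    conn.executemany("INSERT INTO patients VALUES (?, ?)", rows)
    conn.commit()

sources = {
    "clinic_a": [("p1", "M"), ("p1", "M"), ("", "F")],
    "clinic_b": [("p2", "Male")],
}
conn = sqlite3.connect(":memory:")
load(basic_cleanse(extract(sources)), conn)
result = conn.execute("SELECT sex FROM patients ORDER BY patient_id").fetchall()
print(result)  # [('M',), ('Male',)]
```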

The data harmonization process is far more intricate and knowledge-intensive. It typically includes:

- Data Assessment and Profiling: Deeply understanding the content, structure, and quality of each source.

- Designing the Harmonization Framework: Establishing common formats, units, and categorization schemes, often involving Common Data Models (CDMs).

- Data Mapping: Precisely defining how variables from different sources correspond to the unified model. This can involve mapping “customer_name” to “client_full_name”.

- Data Transformation: This is where the magic happens. We perform:

  - Value Standardization: Converting “M” and “Male” to a consistent “Male” value.

  - Unit Conversion: Converting Celsius to Fahrenheit, or kilograms to pounds, to ensure comparability.

  - Schema Alignment: Reconciling different data structures, like mapping multiple address fields into a single, standardized address object.

  - Context Preservation: Ensuring that the meaning and relationships within the data are maintained and even enriched.

- Automated Cleansing: Utilizing tools and AI to identify and correct inaccuracies, fill missing values, and deduplicate records. AI and machine learning can play a significant role here, for example by suggesting likely correspondences between fields and flagging schema changes, which reduces (but rarely eliminates) manual review.

- Quality Assurance and Validation: Rigorously testing the harmonized data against our original sources and business requirements.

- Maintenance and Monitoring: Continuously overseeing the harmonized data pipeline to ensure ongoing quality and adaptability to new data sources or evolving standards.

This multi-layered process, often incorporating AI-driven techniques for semantic inference and real-time schema evolution, ensures that our data isn’t just stored together, but truly understood and aligned.
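A compressed sketch of the transformation and validation steps follows, in Python. Every field name, mapping table, and validation threshold is hypothetical, chosen only to mirror the examples in the list above (“customer_name” to “client_full_name”, “M” to “Male”, kilograms to pounds, address fields to an address object). Automated, AI-assisted matching is not shown.

```python
# Hypothetical per-source field mappings onto a unified model
FIELD_MAP = {
    "crm": {"customer_name": "client_full_name", "gender": "sex", "weight_kg": "weight"},
    "shop": {"client_full_name": "client_full_name", "sex": "sex", "weight_lb": "weight"},
}
SEX_VALUES = {"m": "Male", "male": "Male", "f": "Female", "female": "Female"}
LB_PER_KG = 2.20462  # pounds per kilogram

def harmonize(source, record):
    """Map, standardize, convert, and align one record from a named source."""
    out = {}
    # Data mapping: rename source fields to the unified model's names
    for src_field, target_field in FIELD_MAP[source].items():
        out[target_field] = record.get(src_field)
    # Value standardization: one canonical label per category (None if unrecognized)
    out["sex"] = SEX_VALUES.get(str(out["sex"]).strip().lower())
    # Unit conversion: the unified model stores weight in pounds
    if source == "crm" and out["weight"] is not None:
        out["weight"] = round(out["weight"] * LB_PER_KG, 1)
    # Schema alignment: fold flat address columns into one nested object
    out["address"] = {
        "street": record.get("street") or record.get("addr_line1"),
        "city": record.get("city") or record.get("town"),
    }
    return out

def validate(record):
    """Quality assurance: return a list of rule violations (empty if clean)."""
    problems = []
    if record["sex"] is None:
        problems.append("unrecognized sex value")
    if record["weight"] is None or not 0 < record["weight"] < 1500:
        problems.append("weight missing or out of range")
    return problems

a = harmonize("crm", {"customer_name": "Ada Li", "gender": "M", "weight_kg": 70,
                      "street": "1 High St", "city": "London"})
b = harmonize("shop", {"client_full_name": "Bo Kay", "sex": "male", "weight_lb": 154.3,
                       "addr_line1": "2 Main St", "town": "New York"})
print(a["sex"], a["weight"], b["sex"], b["weight"])  # Male 154.3 Male 154.3
print(validate(a), validate(b))  # [] []
```

Keeping validation as a separate function that returns a list of problems, rather than silently fixing values, makes it easier to report issues back to the domain experts who own the harmonization rules.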

How do the required skill sets and team structures differ?

The human element—the teams and skills required—also starkly illustrates the difference between integration and harmonization. Building the right team is as critical as choosing the right technology.

Data Integration is primarily an engineering discipline. The teams are typically composed of:

- Data Engineers: Specialists in building and maintaining scalable data pipelines. They are proficient in SQL, Python/Java/Scala, and technologies like Apache Spark, and are experts on cloud platforms (AWS, Azure, GCP).

- ETL/ELT Developers: Focus specifically on designing and implementing data movement and basic transformation workflows.

- Database Administrators (DBAs): Manage the performance, security, and availability of the target data warehouses or lakes.

The focus is on technical execution: building robust plumbing, ensuring data flows efficiently and reliably, and managing the underlying infrastructure. The key challenge is scale and performance.

Data Harmonization, conversely, requires a multidisciplinary, collaborative effort that bridges the gap between IT and the business or research domain. A harmonization team is a hybrid force that includes:

- Domain Experts: These are the most critical players. They are clinicians, financial analysts, supply chain specialists, or biologists who deeply understand the data’s context, meaning, and nuances. They are essential for defining the rules of harmonization, validating mappings, and ensuring the final dataset is scientifically or commercially valid.

- Data Stewards: Individuals responsible for managing and overseeing an organization’s data assets. They define data policies, ensure quality, and often lead the governance efforts around creating and maintaining a common data model.

- Data Scientists and Informaticians: These roles bring analytical and semantic expertise. They understand how the data will be used for modeling and analysis, and they can help design a harmonization strategy that supports these downstream applications. They are often skilled in ontologies, terminologies, and statistical methods for data cleaning.

- Data Engineers: The same engineers from the integration phase are still needed, but here they work in close collaboration with domain experts to implement the complex transformation and mapping logic.

In short, if data integration is about building the library, data harmonization is about employing librarians and subject matter experts to curate the collection, create a card catalog, and ensure every book is in its rightful place and understood in context.

What is the difference in the final outcome?

The end-state of each process yields vastly different results for our organization.

The outcome of data integration is a consolidated data repository. While this provides a centralized location for our data, without harmonization, it often becomes what we call a “data swamp.” It’s a vast collection of raw data that, while technically accessible, requires significant manual pre-processing and interpretation before it can be used for any meaningful analysis. Analysts still spend excessive amounts of time trying to make sense of disparate formats and definitions.

The outcome of data harmonization, conversely, is an analysis-ready dataset. This is not just a collection of data, but a “single source of meaning”—a unified, reliable, and comparable dataset that is immediately usable for advanced analytics, business intelligence, and AI/ML applications. This harmonized data allows us to:

- Gain a 360-degree view of our enterprise.

- Improve analytics and business intelligence significantly.

- Improve customer relationship management by providing a consistent view of customer data.

- Optimize logistics and supply chain decisions with accurate, cohesive information.

- Expedite auditing and compliance processes by reducing the time spent on data collation and cleaning.

Crucially, harmonized data is inherently AI-compatible. It provides the clean, consistent, and semantically rich foundation that machine learning models need to learn effectively and produce reliable, actionable insights.

When Is Harmonization Mission-Critical?

While data integration is a necessary first step for almost any data strategy, there are specific scenarios where data harmonization becomes not just beneficial, but absolutely mission-critical for our success.

When Integration is Sufficient:

For simpler needs, data integration might be enough. If we’re primarily focused on:

- Simple BI Reporting: Generating basic dashboards or reports from a few sources with minimal semantic overlap (e.g., pulling sales figures from a single ERP system).

- Data Archiving: Storing historical data for compliance or long-term retention without immediate analytical needs.

- Consolidating Already-Similar Systems: Bringing together data from systems that inherently have very similar schemas and terminology (e.g., merging two instances of the exact same ERP system after a company acquisition).

When Harmonization is Necessary:

However, when we move beyond basic consolidation and aim for deep insights, cross-domain analysis, or cutting-edge AI, harmonization is indispensable. This is particularly true in complex fields like biomedical research and healthcare, where Lifebit specializes. Here are key scenarios where harmonization is the only path forward:

- Multi-site Clinical Trials & Research: Global pharma companies and public sector organizations often have Electronic Health Record (EHR) systems, genomics databases, and clinical trial data trapped in incompatible silos across different institutions in the UK, USA, Europe, Canada, and Singapore. To pool this data for larger, more statistically powerful studies, harmonization is essential. A prime example is The National COVID Cohort Collaborative (N3C), which harmonized EHR data from institutions across the US to enable rapid scientific discovery during the pandemic. This effort allowed researchers to combine vast amounts of patient data, overcoming the inherent variability in how different hospitals record clinical information using different coding systems (e.g., ICD-9 vs. ICD-10) and local terminologies.

- Federated Learning: In federated learning, AI models are trained across decentralized datasets without centralizing the data itself, which is crucial for data privacy and security. For these models to learn effectively, the data at each site must be semantically aligned. The model needs to understand that “blood pressure” in a dataset in London means the same thing as “BP” in a dataset in New York. Harmonization provides this common semantic foundation, enabling the model to learn from diverse data without violating privacy.

- Training Robust AI Models: Any AI or machine learning initiative that relies on diverse data sources (e.g., combining patient demographics, lab results, imaging data, and genomic markers) is likely to struggle without harmonization. Inconsistent data, with different units, scales, and category labels, introduces noise and bias, leading to inaccurate models and unreliable predictions. Harmonization ensures the AI is learning from a unified, coherent representation of reality, which can substantially improve model performance and trustworthiness.

- Real-World Evidence (RWE) Studies: Generating robust RWE involves integrating and harmonizing data from a wide array of sources: EHRs, insurance claims, patient registries, pharmacy data, and even data from wearables. Each source has its own structure, coding system, and level of quality. Harmonization is the painstaking process of mapping these disparate sources to a common data model to create a longitudinal patient view, allowing for comprehensive insights into disease progression, treatment effectiveness, and patient outcomes in real-world settings.

- Financial Services Risk & Compliance: A multinational bank must harmonize data from different countries’ regulatory systems, trading platforms, and loan portfolios to get a single, accurate view of its global risk exposure. Different regions may define “risk” differently, use different currencies, and follow disparate accounting standards. Harmonization is the only way to aggregate this information meaningfully for global risk management, stress testing, and reporting to regulators. Similarly, for Anti-Money Laundering (AML), harmonization is key to linking customer entities across systems and standardizing transaction data to detect suspicious patterns.

- Master Data Management (MDM): Harmonization is a core pillar of any successful MDM strategy. The goal of MDM is to create a single “golden record” for critical business entities like customers, products, or suppliers. This requires harmonizing data from CRMs, ERPs, billing systems, and marketing platforms to resolve conflicting information, deduplicate records, and create a single, authoritative source of truth that powers the entire organization.

- Global Supply Chain Optimization: For a manufacturing company operating across 5 continents, harmonizing data from multiple factories, warehouses, logistics partners, and suppliers is vital. Each location may use different systems to track inventory, production output, and shipping times. Harmonizing this data—standardizing product codes, location identifiers, and status updates—enables accurate global inventory management, demand forecasting, and predictive analytics to prevent disruptions and optimize delivery.

- Cross-Channel Retail & Market Analysis: To achieve a true “Customer 360” view, a retailer must harmonize data from online browsing history, in-store POS systems, loyalty programs, customer service calls, and social media interactions. This involves complex identity resolution and the standardization of product catalogs and behavioral event data. In real estate, harmonizing property listings, sales transactions, zoning laws, and demographic data from various municipal and private sources enables on-demand market analysis, trend forecasting, and accurate valuations in cities like New York or London.
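The identity resolution and “golden record” ideas in the MDM and retail examples can be sketched in a few lines. This minimal Python version assumes a deliberately simple matching rule (exact match on normalized email) and a “most recent non-empty value wins” survivorship rule. Both are illustrative assumptions; production MDM tools typically use fuzzy matching and configurable, source-weighted rules.

```python
from datetime import date

def match_key(record):
    """Hypothetical matching rule: the same normalized email means the same customer."""
    return record["email"].strip().lower()

def golden_records(records):
    """Group matching records; per field, keep the most recent non-empty value."""
    groups = {}
    for rec in records:
        groups.setdefault(match_key(rec), []).append(rec)
    result = {}
    for key, recs in groups.items():
        merged = {}
        # Survivorship: process oldest first so newer non-empty values overwrite older ones
        for rec in sorted(recs, key=lambda r: r["updated"]):
            for field, value in rec.items():
                if field in ("email", "updated", "source"):
                    continue
                if value not in (None, ""):
                    merged[field] = value
        merged["sources"] = sorted(r["source"] for r in recs)
        result[key] = merged
    return result

records = [
    {"source": "crm", "email": "Ada@Example.com", "name": "Ada Li", "phone": "",
     "updated": date(2023, 1, 5)},
    {"source": "billing", "email": "ada@example.com ", "name": "A. Li", "phone": "555-0100",
     "updated": date(2024, 3, 1)},
    {"source": "pos", "email": "bo@example.com", "name": "Bo Kay", "phone": None,
     "updated": date(2024, 2, 2)},
]
golden = golden_records(records)
print(golden["ada@example.com"])
# {'name': 'A. Li', 'phone': '555-0100', 'sources': ['billing', 'crm']}
```

The output also shows the limits of a recency-only rule: the abbreviated “A. Li” beats the fuller “Ada Li” simply because it is newer, which is why real survivorship rules often weigh completeness and source trust as well.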

Whenever the goal is to extract deep, reliable, and actionable insights from diverse data, especially for advanced analytical applications like AI, harmonization shifts from a nice-to-have to a non-negotiable imperative.

Conclusion: Stop Drowning in Data, Start Driving Decisions

We’ve explored the crucial distinction between data integration and data harmonization. Data integration primarily solves for location, bringing disparate datasets together into a central repository. It’s the foundational step, ensuring our data is accessible. However, without further refinement, this often results in a “data swamp”—a vast collection that’s technically present but semantically chaotic.

Data harmonization, on the other hand, solves for meaning. It goes beyond mere consolidation to reconcile discrepancies, standardize values, align schemas, and preserve context, effectively teaching our data to speak a common language. This rigorous process transforms raw, integrated data into a unified, analysis-ready, and AI-compatible asset—a “single source of meaning” that we can truly trust.

For organizations across the UK, USA, Europe, Canada, Israel, and Singapore, particularly those engaged in complex scientific research, healthcare, or advanced business intelligence, harmonization is non-negotiable. It’s the difference between merely having data and truly leveraging it for breakthrough findings and strategic advantages. By one widely cited estimate, organizations lose an average of $13 million annually due to poor data quality, much of it rooted in fragmented, unharmonized data. Data chaos is a choice; continuing with it means leaving money on the table and insights in the dark.

At Lifebit, we understand this challenge intimately. Our next-generation federated AI platform is designed to provide secure, real-time data harmonization capabilities, enabling large-scale, compliant research across biomedical and multi-omic data. Through components like our Trusted Research Environments (TREs) and advanced AI/ML analytics, we empower biopharma, governments, and public health agencies to move beyond integration to achieve true data harmony. We help our users transform their raw, complex data into intelligence-ready assets, driving decisions faster and with greater confidence.