The Brains Behind the Bytes: How Deep Learning Powers Analytics

Why Deep Learning Analytics is Changing Data-Driven Research

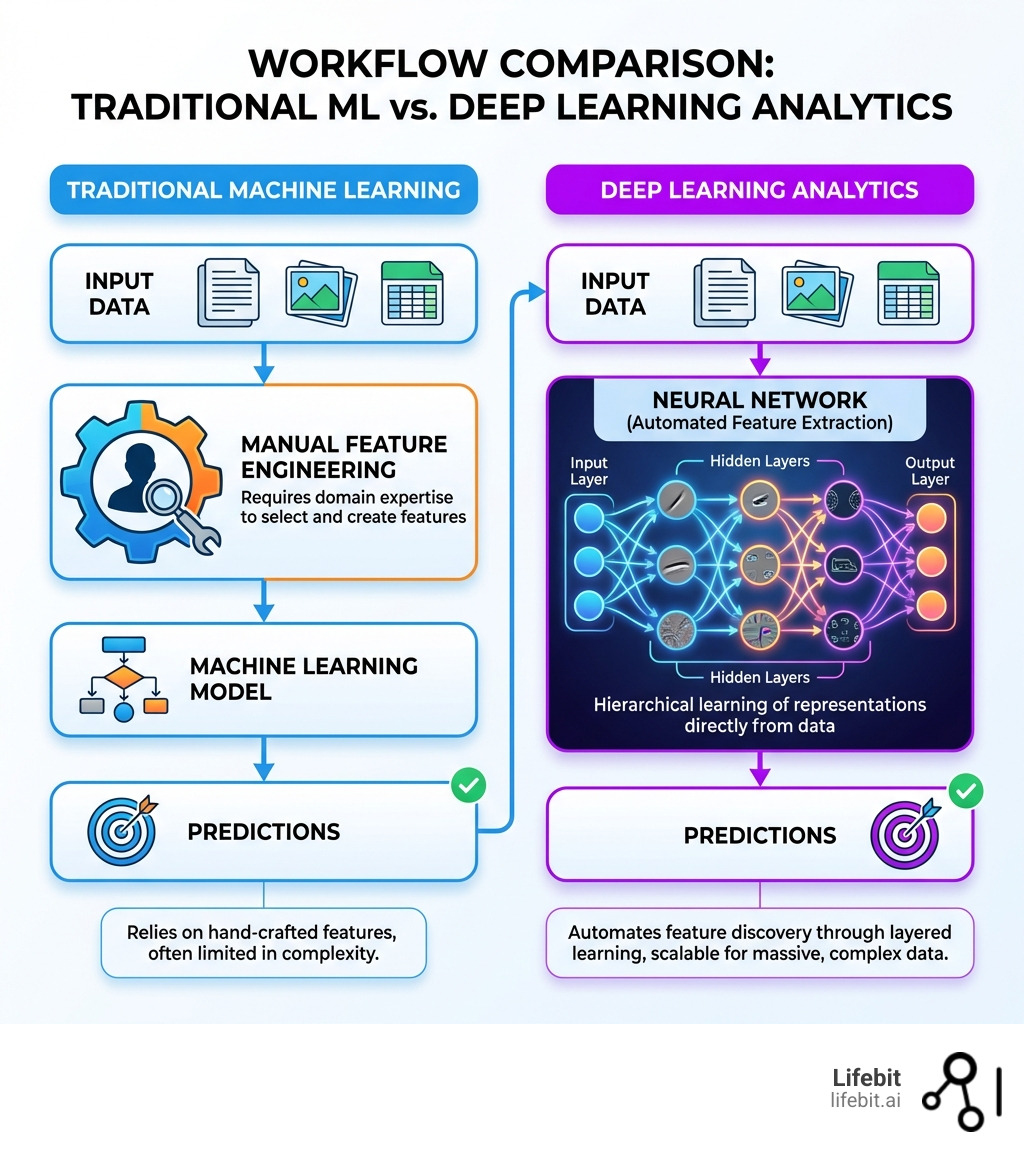

Deep learning analytics is a sophisticated subset of machine learning that trains computers to recognize patterns in massive, complex datasets without explicit programming. Unlike traditional algorithms that require human intervention to define what features to look for, deep learning models learn representations directly from the data through layered neural networks. This paradigm shift is not merely an incremental improvement; it is a fundamental change in how we extract value from information.

Historically, the concept of neural networks dates back to the 1950s with the “Perceptron,” but it wasn’t until the last decade—fueled by the explosion of big data and the availability of high-performance GPUs—that deep learning became the dominant force in artificial intelligence. Today, it is the engine behind the most advanced technologies, from autonomous vehicles to precision medicine.

Key Characteristics that Define Deep Learning Analytics:

- Automated feature extraction: The model identifies the most relevant variables on its own, eliminating the need for manual rule-setting by data scientists.

- Hierarchical learning: Multiple layers of neurons identify patterns at different levels of abstraction, moving from broad shapes to granular details.

- Scalable insights: These models thrive on data; the more information they process, the more accurate they become, making them ideal for datasets that are too large for human analysis.

- Real-world applications: It powers everything from real-time speech recognition and medical imaging to fraud detection and genomic research.

Core Components of the Architecture:

- Neurons – The basic computational units that receive input, process it, and pass it along.

- Weights and biases – The internal parameters that the model adjusts during training to minimize error and improve prediction accuracy.

- Activation functions – Mathematical gates that determine whether a signal is strong enough to be passed to the next layer.

- Hidden layers – The “deep” part of deep learning, where complex hierarchical features are learned and refined.

If you’re working with electronic health records (EHR), genomic datasets, or multi-omic research data, deep learning analytics can, in some cases, eliminate as many as 10 preprocessing steps while improving accuracy by more than 10% compared to traditional statistical methods. This is particularly vital in the life sciences, where the data is often high-dimensional and noisy.

The challenge today isn’t whether deep learning works—it’s how to implement it across siloed, highly regulated datasets without moving sensitive information. For pharma researchers, public health agencies, and regulatory bodies managing patient data across borders, the computational cost and data privacy requirements create barriers that traditional centralized approaches can’t solve. Moving petabytes of genomic data is not only expensive but often legally impossible due to data residency laws.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve built a federated biomedical data platform that enables deep learning analytics across distributed datasets without compromising compliance or security. With a PhD in Biomedicine and over 15 years in computational biology and AI, I’ve seen how the right infrastructure can transform what’s possible with sensitive health data. By bringing the analysis to the data, we unlock insights that were previously trapped behind institutional walls.


The Architecture of Intelligence: How Deep Learning Analytics Works

To understand how deep learning analytics finds “needles in haystacks,” we have to look at its biological inspiration. At its core, a deep learning model is loosely modeled on the way neurons connect in the human brain. It consists of thousands, or even billions, of interconnected “neurons” organized into layers that process information in a non-linear fashion.

The Building Blocks: Neurons, Weights, and Biases

Every individual neuron in a network is a tiny processing station. When data enters a neuron, each input is multiplied by a weight, which determines the “importance” or influence of that specific input. For instance, in a model predicting heart disease, a weight might be higher for “blood pressure” than for “eye color.” The weighted inputs are summed, and a bias term is then added, much like the intercept in a standard regression, to help the model better fit the data and account for variations that aren’t captured by the inputs alone.

The magic happens when this information passes through an activation function. This function decides if the signal is strong enough to be passed on to the next layer. Common functions include the Rectified Linear Unit (ReLU), which helps the model learn complex patterns by introducing non-linearity, and the softmax function, which is often used in the final output layer to turn raw numbers into probabilities—such as a 95% certainty that a medical image shows a specific type of tissue.
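As a rough illustration of the building blocks described above, the following minimal sketch computes one neuron's output and a softmax over raw scores in plain Python. The input values, weights, and bias are hypothetical numbers chosen for the example, not parameters from any real clinical model.

```python
import math

def relu(z):
    """Rectified Linear Unit: pass positive signals through, zero out the rest."""
    return max(0.0, z)

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def neuron(inputs, weights, bias, activation=relu):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical, already-scaled inputs: [blood_pressure, eye_color_code]
inputs = [1.2, 0.5]
weights = [0.9, 0.01]  # assumed weights: blood pressure matters far more than eye color
bias = -0.3

activation_value = neuron(inputs, weights, bias)
probabilities = softmax([2.0, 0.5, -1.0])  # three made-up class scores
```

In a trained network the weights and bias would be learned from data rather than written by hand; the hand-picked values here only show how each piece of the calculation fits together.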

The Mathematics of Learning: Backpropagation and Loss Functions

How does the model actually “learn”? It uses a process called backpropagation. During training, the model makes a prediction, and a loss function (like Mean Squared Error or Cross-Entropy) measures how far off that prediction was from the actual truth. The model then works backward from the output layer to the input layer, adjusting the weights and biases using an optimization algorithm called Gradient Descent. This iterative process continues until the error is minimized, effectively “tuning” the brain to recognize the correct patterns.
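The training loop described above can be sketched for the simplest possible case: a single linear neuron fitted with gradient descent on Mean Squared Error. With only one neuron, the backward pass reduces to one application of the chain rule; real deep networks repeat the same idea layer by layer. The toy dataset, learning rate, and epoch count below are assumptions made for the example.

```python
def mse(data, w, b):
    """Mean Squared Error of the prediction w * x + b over the dataset."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

def train_linear_neuron(data, learning_rate=0.1, epochs=500):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y      # forward pass: prediction minus truth
            grad_w += 2 * error * x / n  # derivative of the loss with respect to w
            grad_b += 2 * error / n      # derivative of the loss with respect to b
        w -= learning_rate * grad_w      # step against the gradient
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption for illustration)
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(data)
final_loss = mse(data, w, b)
```

After training, `w` and `b` should sit close to 2 and 1, and the loss should be far lower than it was at the starting point of `w = 0, b = 0`.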

The Power of Hidden Layers and Hierarchical Learning

What distinguishes a “deep” model from a shallow neural network, like a restricted Boltzmann machine (RBM) or a perceptron with a single hidden layer, is the number of hidden layers. These layers are where the heavy lifting of feature extraction occurs.

These layers enable hierarchical feature learning:

- Earlier layers identify broad, simple patterns. In image recognition, these might be edges, lines, or simple gradients. In speech, these might be basic frequencies.

- Middle layers combine these simple patterns to identify more complex shapes, like circles or textures.

- Deeper layers synthesize everything to identify complex features, such as a human face, a specific protein structure, or the grammatical nuances of a sentence.

The Inference Process: The Forward Pass

Once the model is trained, it enters the inference phase. When we want a trained model to analyze new, unseen data, it performs a forward pass. Data travels from the input layer, through the hidden layers, and finally to the output layer to provide a prediction. This can happen in milliseconds, giving clinicians, researchers, and analysts results in near real time. This ability to generalize from training data to real-world scenarios is what makes deep learning analytics so transformative for modern industry.
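A forward pass can be sketched in a few lines of plain Python. The tiny network below (3 inputs, 2 hidden neurons, 2 outputs) uses hand-picked, hypothetical parameters standing in for values a real model would have learned during training.

```python
import math

def relu(z):
    return max(0.0, z)

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each row of weights feeds one neuron."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, row)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def forward_pass(x, layers):
    """Push the input through every layer in order, input to output."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical parameters: (weights, biases, activation) per layer
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1], relu),  # hidden layer
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0], None),            # output layer (raw scores)
]

scores = forward_pass([1.0, 2.0, 3.0], layers)
probabilities = softmax(scores)  # raw scores turned into class probabilities
```

No weights change during inference; the same fixed parameters are simply applied to each new input, which is why a forward pass is so much cheaper than training.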

Why Traditional Programming is Failing Your Big Data Strategy

For decades, the world relied on “traditional” programming where a human expert wrote explicit rules: If X happens, then do Y. This deterministic approach worked for simple spreadsheets and basic automation, but it crumbles under the weight of modern big data, particularly in fields like genomics and high-frequency finance.

The Curse of Dimensionality

In traditional statistics, we often struggle with the “curse of dimensionality”—as the number of variables (features) increases, the amount of data needed to make reliable generalizations grows exponentially. Traditional models become sluggish and inaccurate when faced with thousands of variables. Deep learning analytics thrives in this environment. It is designed to handle high-dimensional data by finding the underlying structure (the manifold) that defines the data, effectively ignoring the noise that would trip up a standard regression model.
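The exponential growth mentioned above is easy to see with a little arithmetic. In this sketch, each feature is split into 10 equal bins (an arbitrary choice for illustration), and the function counts how many grid cells would need at least one sample to cover the space.

```python
def cells_needed(bins_per_feature, n_features):
    """Number of grid cells when every feature is split into equal bins."""
    return bins_per_feature ** n_features

# Each added feature multiplies the number of cells by the bin count
for n_features in (1, 2, 3, 10):
    print(n_features, cells_needed(10, n_features))
```

With 10 bins per feature, three features already produce 1,000 cells and ten features produce 10 billion, which is why covering a high-dimensional space evenly with data quickly becomes impractical.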

Feature Engineering vs. Feature Representation

In traditional machine learning, data scientists are often said to spend up to 80% of their time on data preparation and feature engineering: the manual process of selecting, cleaning, and transforming variables to make them useful for an algorithm. This requires deep domain expertise and is prone to human bias.

In deep learning analytics, the model performs automated extraction. It learns the feature representation directly from the raw data. For example, instead of a human telling a model to “look for the ratio of cholesterol to HDL,” a deep learning model might discover a much more complex, non-linear relationship between dozens of blood markers that a human would never have thought to correlate.

| Feature | Traditional Programming | Deep Learning Analytics |
|---|---|---|
| Logic | Explicit, human-written rules | Learned patterns from data |
| Feature Extraction | Manual (Feature Engineering) | Automated (Representation Learning) |
| Data Types | Structured (Tables/Spreadsheets) | Unstructured (Images, Voice, Omics, Text) |
| Scalability | Limited by human complexity | Improves with more data and compute |
| Adaptability | Requires manual updates for new data | Self-adjusts through retraining |

Learning Through Trial and Error: The Unsupervised Journey

Deep learning is often an unsupervised or semi-supervised journey of pattern recognition. By using huge datasets and constant feedback loops, the model identifies its own conditions for success. This is vital when dealing with “messy” data—where labels might be missing or the relationships between variables are constantly shifting. In the context of a “Big Data Strategy,” deep learning isn’t just a tool; it’s a necessity for any organization that wants to move beyond descriptive analytics (what happened) to predictive and prescriptive analytics (what will happen and how to optimize for it).

From Vision to Voice: Real-World Applications of Deep Learning

Deep learning isn’t just a theoretical concept; it’s the engine behind the world’s most advanced technologies. From the virtual assistants in our pockets like Siri and ChatGPT to the vision systems in autonomous vehicles, the impact is ubiquitous. However, its most profound impact is being felt in specialized scientific and industrial fields.

The Specialized Networks: Choosing the Right Tool

Different problems require different neural architectures, and the field of deep learning analytics has evolved to provide specialized solutions for specific data types:

- Convolutional Neural Networks (CNNs): These are the gold standard for computer vision. They use “convolutional layers” to scan images or spatial data, detecting objects, anomalies, or landmarks. In healthcare, CNNs are used to scan pathology slides for cancerous cells with a level of consistency that can rival, and in some studies exceed, human performance.

- Recurrent Neural Networks (RNNs) and LSTMs (Long Short-Term Memory): These are designed for sequential data where the order matters. They are the “memory” of the AI world, perfect for natural language processing (NLP) and time-series forecasting. They can predict stock market trends or analyze a patient’s heart rate over time to predict an upcoming cardiac event.

- Transformers: The architecture behind Large Language Models (LLMs). Transformers use “attention mechanisms” to weigh the importance of different parts of the input data, allowing them to understand context in a way that was previously impossible. This is now being applied to “DNA as a language,” where models read genetic sequences to predict disease risk.
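Taking the first architecture in the list as an example, the “scanning” a convolutional layer performs can be sketched in plain Python. The 4x4 image and the vertical-edge kernel below are hand-written assumptions for illustration; a real CNN would learn its kernels from data.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D sliding-window product, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Toy 4x4 "image": dark left half (0), bright right half (1)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical kernel that responds to dark-to-bright vertical edges
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
```

The resulting feature map is non-zero only in the middle column, where the window straddles the boundary between the dark and bright halves. This is the kind of simple edge pattern that early layers pick up before deeper layers combine them into shapes.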

Generative Adversarial Networks (GANs) are even being used to create synthetic data. This is a game-changer for training models when real-world data is scarce or too sensitive to share.

Changing Healthcare with Deep Learning Analytics

In our work at Lifebit, we see how deep learning analytics is the backbone of precision medicine. By analyzing multi-omic data (genomics, transcriptomics, proteomics) alongside electronic health records, researchers can identify disease markers that were previously invisible to the human eye.

- Medical Imaging: CNNs can identify tumors in MRI scans or anomalies in X-rays with higher precision and speed than manual review, allowing radiologists to focus on the most complex cases.

- Drug Discovery: Deep learning models can simulate how billions of different chemical compounds will interact with specific human proteins. This allows researchers to “fail fast” in a digital environment, slashing years and billions of dollars off the drug development timeline. Projects like AlphaFold have used deep learning to make a breakthrough on the 50-year-old challenge of predicting protein structures, opening new doors for treating diseases like Alzheimer’s.

- Population Health: By analyzing data from millions of patients, public health agencies can use deep learning to predict the next outbreak or identify which populations are most at risk for chronic conditions, allowing for proactive intervention.

Revolutionizing Finance, Retail, and Beyond

- Fraud Detection: Financial institutions use deep learning to analyze millions of transactions in real time. The models spot subtle patterns in transaction data (such as the timing, location, and velocity of purchases) that suggest fraudulent activity, flagging it within milliseconds.

- Customer Personalization: Retailers use deep learning to move beyond simple “people who bought this also bought that” recommendations. They use it to predict a customer’s future needs based on browsing behavior, sentiment analysis of reviews, and even local weather patterns, significantly reducing stock waste.

- Supply Chain Optimization: Deep learning models predict logistics bottlenecks by analyzing global shipping data, weather, and geopolitical trends, allowing companies to reroute goods before a delay occurs.

The LLM Revolution: Using AI as a Strategic Thought Partner

The rise of Large Language Models (LLMs) and Generative AI has added a transformative new dimension to deep learning analytics. We are moving past the era where AI was just a “black box” that gave us a number; we are entering an era where AI acts as a strategic thought partner that can explain its reasoning and help us explore data creatively.

For a data analyst or a research scientist, an LLM serves as a force multiplier that can:

- Interpret Complex Data Visualizations: Instead of just looking at a heat map of gene expression, an analyst can ask an LLM-powered tool, “What are the three most significant outliers in this chart and how do they relate to known metabolic pathways?”

- Simulation and Scenario Modeling: Researchers can run “what-if” scenarios at lightning speed. For example, “If we change the dosage of this compound by 10%, what is the predicted impact on protein binding based on our current deep learning model?”

- Code Generation and Debugging: Deep learning models are notoriously difficult to code. LLMs can help find errors in complex SQL queries, Python scripts, or PyTorch implementations, allowing researchers to focus on the science rather than the syntax.

- Literature Synthesis: LLMs can scan thousands of newly published research papers to find connections between a deep learning model’s findings and existing scientific literature, accelerating the “bench-to-bedside” process.

This integration of Generative AI allows us to move from raw insight extraction to actual data-informed decision-making. It’s not about replacing the human analyst; it’s about giving them a “digital brain” that can process the world’s library of information in seconds, providing context that makes the deep learning outputs actionable.

Overcoming the High Cost and Privacy Barriers of Deep Learning

Despite the “magic” of deep learning, implementation is not without significant hurdles. These models are notoriously data-hungry and power-hungry, and they operate in a world of increasing regulatory scrutiny.

Computational Cost and the GPU Arms Race

Training a state-of-the-art model with billions of parameters requires massive computational power. This has led to a reliance on GPU acceleration (Graphics Processing Units) and specialized hardware like TPUs (Tensor Processing Units). Without this specialized hardware, training a modern model could take years instead of days. For many organizations, the cost of maintaining this infrastructure is prohibitive, leading to a shift toward cloud-based deep learning services. However, this creates a new problem: how do you use the cloud without moving sensitive data?

Data Requirements and the Risk of Overfitting

A common pitfall in deep learning is overfitting. This occurs when a model becomes so specialized in its training data that it “memorizes” the noise and outliers rather than the underlying patterns. When this happens, the model performs perfectly on the training set but fails miserably in the real world. We combat this using several techniques:

- Regularization: Adding penalties to the model for being too complex.

- Dropout: Randomly “turning off” neurons during training to ensure the model doesn’t rely too heavily on any single path.

- Normalization: Scaling data (like image pixels or genomic counts) to values between 0 and 1 to make the learning process more stable and efficient.
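The three techniques above can each be sketched in a few lines of plain Python. The penalty strength, dropout rate, and sample values are assumptions chosen for the example, and the dropout shown is the common “inverted” variant, which rescales the surviving activations.

```python
import random

def l2_penalty(weights, strength=0.01):
    """Regularization: a cost that grows with the size of the weights."""
    return strength * sum(w * w for w in weights)

def dropout(activations, rate=0.5, rng=random):
    """Inverted dropout: zero each activation with probability `rate`,
    and scale the survivors so the expected total stays the same."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def min_max_normalize(values):
    """Normalization: rescale values linearly into the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all values identical: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

rng = random.Random(0)  # fixed seed so the sketch is repeatable

penalty = l2_penalty([0.5, -1.0, 2.0])             # added to the loss during training
dropped = dropout([1.0, 2.0, 3.0, 4.0], rng=rng)   # applied only while training
scaled = min_max_normalize([0, 128, 255])          # e.g. raw pixel intensities
```

Dropout is switched off at inference time, while the normalization applied to training data should be applied in the same way to any new data the model sees.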

The Privacy and Ethics Barrier: The Federated Solution

Perhaps the biggest challenge in deep learning analytics today is data privacy. In fields like biopharma and government, you cannot simply move patient data to a central cloud for analysis. Data protection regulations (like GDPR in Europe or HIPAA in the US), data residency requirements, and institutional silos often keep the most valuable data locked away.

This is where Lifebit’s federated approach changes the game. Instead of the traditional “move-and-copy” model—where data is sent to the algorithm—we use Federated Analytics. This brings the analysis to the data. The data stays securely within its original environment (a Trusted Research Environment), and only the model’s “learnings” (the gradients and weights) are shared. This solves the ethical, legal, and compliance nightmare of global research, allowing for deep learning on a scale that was previously impossible.
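To make the idea of sharing only model parameters concrete, here is a minimal sketch of federated averaging on a toy linear model. It is a generic illustration under stated assumptions, not a description of how Lifebit's platform is implemented: the two sites, their records, and every function name are hypothetical, and a production system would add safeguards such as secure aggregation and access controls.

```python
def local_update(weights, local_data, learning_rate=0.05, epochs=20):
    """Train y ~ w * x + b on one site's private data.
    Only the parameters and the sample count leave the site, never the records."""
    w, b = weights
    n = len(local_data)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in local_data) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in local_data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return (w, b), n

def federated_average(updates):
    """Combine site parameters, weighting each site by its number of samples."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)

# Hypothetical sites; each keeps its (x, y) records locally. True relation: y = 2x + 1
site_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
site_b = [(1.0, 3.0), (3.0, 7.0)]

global_weights = (0.0, 0.0)
for _ in range(50):  # communication rounds between the sites and the coordinator
    updates = [local_update(global_weights, site) for site in (site_a, site_b)]
    global_weights = federated_average(updates)
```

In this toy setup the shared model converges toward `w = 2, b = 1` even though neither site ever sees the other's records; only the parameter pairs and sample counts are exchanged each round.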

For those interested in the technical landscape of these challenges, A Comprehensive Survey of Deep Learning Applications in Big Data Analytics provides a deep dive into how these hurdles are being addressed across different industries, from cybersecurity to healthcare.

Frequently Asked Questions about Deep Learning Analytics

What is the fundamental difference between machine learning and deep learning analytics?

Traditional machine learning is often “shallow,” meaning it requires humans to define features (e.g., “look for these specific shapes to identify a car”). Deep learning analytics is a subset of ML that uses multi-layered neural networks to find those features automatically from raw, unstructured data. If machine learning is a calculator, deep learning is a brain.

How does deep learning eliminate data preprocessing steps?

Because deep neural networks perform “automated feature extraction,” they can often handle “messy” raw data that would break traditional models. In speech-to-text tasks, for example, deep learning has been shown to eliminate up to 10 steps of manual data cleaning and feature engineering, as the model learns to ignore background noise and focus on the relevant frequencies on its own.

What are the most common neural networks used in analytics today?

The “Big Three” are CNNs (optimized for images and spatial data), RNNs/LSTMs (optimized for text, speech, and time-series data), and Transformers (the architecture behind LLMs like ChatGPT, which excels at understanding long-range dependencies in data).

Is deep learning always better than traditional statistics?

Not necessarily. Deep learning requires massive amounts of data to be effective. If you have a small, clean dataset with only a few variables, a simple linear regression or a random forest model might be more efficient and easier to interpret. Deep learning is the “heavy artillery” reserved for complex, high-dimensional big data.

How do you ensure a deep learning model is ethical and unbiased?

This is a critical area of research. Bias in deep learning usually stems from bias in the training data. Ensuring diversity in datasets and using techniques like “Explainable AI” (XAI) to understand why a model made a certain decision are essential steps in building ethical AI systems.

Conclusion: The Future of Data-Informed Decision Making

The “Brains Behind the Bytes” are getting smarter every day. As we move toward a future defined by real-time insights and AI-driven safety surveillance, the bottleneck is no longer the algorithm—it’s the access to data. We have the mathematical tools to solve some of the world’s most pressing problems, but those tools are only as good as the data they can reach.

At Lifebit, we believe that the most impactful discoveries shouldn’t be trapped behind data silos or restricted by geographical borders. Our Federated AI platform provides the secure, real-time access needed to power the next generation of deep learning analytics. Whether you are a government agency tracking public health trends, a biopharma giant searching for the next breakthrough cure, or a clinical researcher looking for rare disease markers, our Trusted Research Environment and Trusted Data Lakehouse ensure your research is fast, compliant, and globally connected.

The projected 36% growth in data science roles over the next decade isn’t just a statistic; it’s a call to action. It reflects a world that is increasingly reliant on the ability to turn raw data into actionable intelligence. To stay competitive, organizations must move away from outdated “move-and-copy” data strategies that create security risks and version control issues. Instead, the future lies in using the full power of deep learning exactly where the data lives.

By embracing federated deep learning, we can respect individual privacy while still benefiting from the collective knowledge hidden within the world’s datasets. The era of isolated data is over; the era of collaborative, deep-learning-powered discovery has begun.
