PV Gets a Brain Boost: How AI is Revolutionizing Drug Safety

The Data Tsunami Threatening Drug Safety

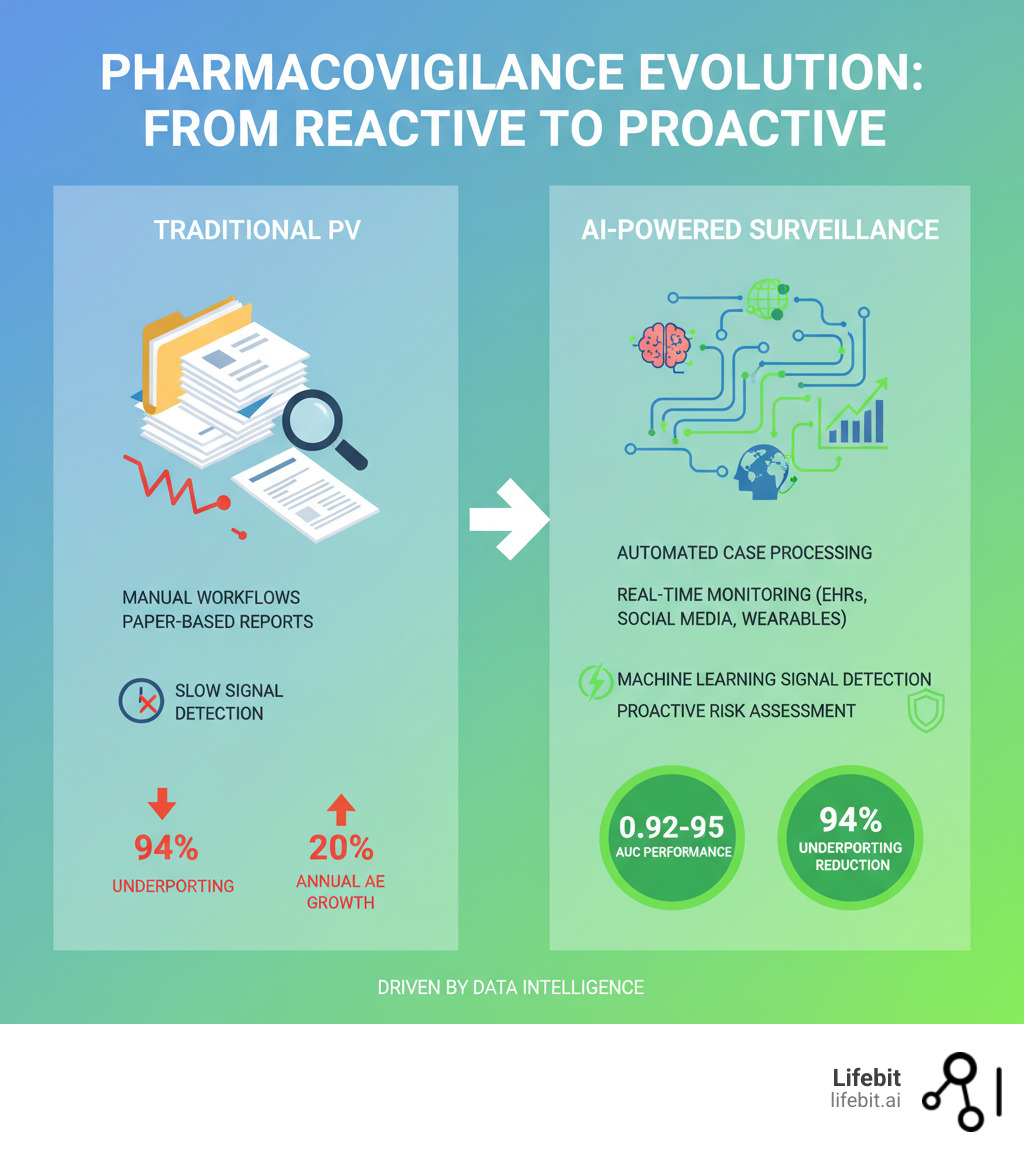

AI for pharmacovigilance is revolutionizing drug safety by automating adverse event detection, accelerating signal detection, and enabling real-time surveillance. It cuts through the manual bottlenecks that have plagued traditional methods for decades.

Key applications of AI in pharmacovigilance:

- Automated Case Processing: NLP extracts and codes adverse events, reducing manual workload by up to two-thirds.

- Advanced Signal Detection: Machine learning models achieve AUCs of 0.92–0.95, outperforming traditional methods.

- Real-Time Monitoring: AI analyzes EHRs, social media, and wearables to detect safety signals as they emerge.

- Improved Accuracy: NLP models report F-measures of 0.72–0.82 for extracting adverse drug reactions from social media text and about 70% positive predictive value in EHRs.

- Predictive Risk Assessment: AI identifies patient-specific risk factors to predict adverse reactions.

The reality is stark: with 1.4 million adverse events processed in 2019 and volume growing 20% annually, traditional systems are overwhelmed. An estimated 94% of adverse drug reactions are missed due to underreporting, while manual processes consume up to two-thirds of PV budgets, delaying signal detection and putting patients at risk.

The gap between data volume and human capacity is becoming a chasm. As drug complexity, polypharmacy, and data sources multiply, the traditional approach cannot scale. AI offers a way to amplify human judgment, not replace it. By automating repetitive tasks and surfacing hidden patterns, it enables a shift from reactive reporting to proactive surveillance.

However, this change requires addressing challenges like data quality, bias, explainability, and regulatory compliance. Companies that succeed will fundamentally change how drug safety works.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit. We build secure, federated platforms for AI for pharmacovigilance that open up real-time insights from siloed data without moving it. My work in computational biology and health-tech has shown me how the right infrastructure makes the impossible possible.

Why Old-School Pharmacovigilance Is Putting Patients—and Budgets—at Risk

Traditional pharmacovigilance is drowning in a data tsunami. With adverse event volume climbing 20% annually, legacy systems are failing, putting both patients and budgets at risk.

The core problem is manual overload. Old-school PV relies on humans for data entry, reporting, and analysis. This painstaking work consumes up to two-thirds of a company’s PV budget, money that could be spent protecting patients instead of processing paperwork.

The typical manual workflow is a cascade of potential delays. An Individual Case Safety Report (ICSR) arrives, perhaps as a fax, email, or call center note. A specialist first triages it to determine its validity and reporting timeline. Next comes manual data entry into a safety database, a tedious process prone to human error. Then a trained coder interprets the narrative and assigns standardized MedDRA (Medical Dictionary for Regulatory Activities) terms. Quality control, medical review, and submission to regulatory authorities follow. Each step is a potential bottleneck, and as case volumes climb, backlogs build at every stage.

Worse, this expensive manual approach is dangerously slow. By the time a team processes enough cases to spot a pattern, weeks or months may have passed, leaving patients exposed to potential harm. The safety signal that could have changed prescribing behavior remains buried in a backlog. Furthermore, traditional signal detection relies heavily on statistical methods like disproportionality analysis (DPA), which compare the frequency of a drug-event pair in a database to its expected frequency. While useful, these methods are crude. They struggle to identify signals for drugs used to treat the symptoms they might cause (confounding by indication), fail to detect interactions between multiple drugs (the polypharmacy challenge), and can be ‘masked’ when a drug is widely used, making it difficult for a signal to stand out. They are reactive, requiring a significant number of reports before a statistical threshold is met.
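To make disproportionality analysis concrete, here is a minimal sketch of two common measures, the proportional reporting ratio (PRR) and the reporting odds ratio (ROR), computed from a 2x2 table of report counts. The function name and the counts are invented for illustration; they are not taken from any real safety database.

```python
def disproportionality(a: int, b: int, c: int, d: int) -> dict:
    """Compute PRR and ROR from a 2x2 table of spontaneous reports.

    a: reports with the drug of interest AND the event of interest
    b: reports with the drug of interest and any other event
    c: reports with all other drugs AND the event of interest
    d: reports with all other drugs and any other event
    """
    if a + b == 0 or c + d == 0 or b == 0 or c == 0:
        raise ValueError("Table too sparse to compute PRR/ROR")
    # PRR: share of the drug's reports that mention the event,
    # divided by the same share for all other drugs.
    prr = (a / (a + b)) / (c / (c + d))
    # ROR: odds of the event among the drug's reports,
    # divided by the odds among all other drugs' reports.
    ror = (a * d) / (b * c)
    return {"prr": prr, "ror": ror}


# Invented counts, for illustration only.
result = disproportionality(a=20, b=980, c=100, d=98900)
print(f"PRR = {result['prr']:.1f}, ROR = {result['ror']:.1f}")
```

A ratio well above 1 means the event is reported disproportionately often with the drug. Note that the calculation only sees counts: it knows nothing about why the drug was prescribed or what else the patient was taking, which is exactly where the limitations described above come from.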

Traditional PV was built for a simpler era. Today’s landscape of complex biologics, polypharmacy, and diverse data sources (genomics, wearables, social media) has rendered it obsolete. The old systems cannot handle the current scale, complexity, or speed required.

This is where AI for pharmacovigilance offers a critical advantage. It frees skilled professionals from repetitive tasks, allowing them to focus on the complex analysis and critical decisions that save lives. The question isn’t whether to adopt AI, but how quickly you can implement it to prevent the next safety crisis. The financial risks of failure are immense: they include not only operational overhead but also the catastrophic costs of late-stage product recalls, litigation, and loss of market trust.

From Manual Mayhem to Automated Intelligence: How AI Transforms Pharmacovigilance

AI for pharmacovigilance turns the chaos of adverse event reporting into clarity, shifting drug safety from a reactive scramble to a proactive, intelligent system. It gives experts superpowers, freeing them from manual data entry to focus on the critical decisions that save lives, a change highlighted by recent advances in AI for drug safety monitoring.

Automating Case Intake and Processing

The first change happens at case intake, where AI turns messy, unstructured data into organized, actionable insights.

ICSR automation handles initial intake decisions, such as report validity, freeing up safety teams. The real magic is Natural Language Processing (NLP), which reads and interprets text from EHRs, social media, and literature. NLP models have reported F-measures of 0.82 and 0.72 for adverse drug reaction (ADR) detection from social networks and a positive predictive value of 70% in EHRs. AI can extract information, automatically code events using terminologies like MedDRA, and summarize narratives.

This is achieved through a sequence of NLP tasks. Named Entity Recognition (NER) models scan the text to identify and classify key entities like drug names, dosages, symptoms (e.g., ‘headache,’ ‘nausea’), and patient demographics. Relation Extraction then identifies the relationships between these entities, for example that the narrative attributes the ‘headache’ to ‘Drug X.’ AI can also support MedDRA coding by suggesting the most appropriate terms based on the narrative’s context, improving consistency and speed compared to manual coding, which is often subjective and varies between coders.
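The pipeline of entity recognition, relation extraction, and term normalization can be illustrated with a deliberately simple toy. The sketch below uses hand-written lookup lists and sentence-level co-occurrence in place of trained models. The lexicons, the function names, and the sample note are all hypothetical, and the normalized event names are plain labels, not real MedDRA codes.

```python
import re

# Hypothetical mini-lexicons. A real system would use trained NER models
# and the licensed MedDRA dictionary rather than hand-written lists.
DRUGS = {"drug x", "drug y"}
EVENT_TERMS = {
    # verbatim phrase -> normalized event label (placeholder, not a MedDRA code)
    "headache": "Headache",
    "head ache": "Headache",
    "nausea": "Nausea",
    "felt sick": "Nausea",
}


def _mentions(phrase: str, text: str) -> bool:
    """True if the phrase occurs in the text as a whole word or phrase."""
    return re.search(rf"\b{re.escape(phrase)}\b", text) is not None


def extract_entities(narrative: str) -> dict:
    """Toy 'NER': find known drugs and normalize known event phrases."""
    text = narrative.lower()
    drugs = sorted(d for d in DRUGS if _mentions(d, text))
    events = sorted({label for phrase, label in EVENT_TERMS.items()
                     if _mentions(phrase, text)})
    return {"drugs": drugs, "events": events}


def candidate_relations(narrative: str) -> list:
    """Toy 'relation extraction': pair drugs and events in the same sentence.

    Co-occurrence only proposes candidate pairs for human review;
    it does not establish that the drug caused the event.
    """
    pairs = set()
    for sentence in re.split(r"[.!?]+", narrative):
        found = extract_entities(sentence)
        for drug in found["drugs"]:
            for event in found["events"]:
                pairs.add((drug, event))
    return sorted(pairs)


note = ("Patient started Drug X on Monday. "
        "After taking Drug X she felt sick and had a headache.")
print(extract_entities(note))
print(candidate_relations(note))
```

Even this toy shows why normalization matters: ‘felt sick’ and ‘nausea’ end up under the same label, which is the consistency that manual coding struggles to guarantee across coders.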

AI-powered duplicate detection is another massive time-saver. The Uppsala Monitoring Centre’s vigiMatch algorithm, for example, processes 50 million report pairs per second—a speed impossible for human reviewers. The result is high-throughput processing that accelerates the entire PV workflow.
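As a rough illustration of what duplicate detection involves, the sketch below scores a pair of reports by weighted field similarity. This is not the vigiMatch algorithm; the field names, the weights, and the sample reports are assumptions chosen for the example, and a production matcher would learn its weights from data.

```python
from difflib import SequenceMatcher

# Hypothetical field weights that sum to 1.0.
WEIGHTS = {"drug": 0.3, "event": 0.3, "onset_date": 0.2, "age": 0.1, "sex": 0.1}


def field_similarity(a, b) -> float:
    """Case-insensitive string similarity in [0, 1]; missing values score 0."""
    if a is None or b is None:
        return 0.0
    return SequenceMatcher(None, str(a).lower(), str(b).lower()).ratio()


def duplicate_score(r1: dict, r2: dict) -> float:
    """Weighted sum of per-field similarities; 1.0 means identical on all fields."""
    return sum(w * field_similarity(r1.get(f), r2.get(f))
               for f, w in WEIGHTS.items())


report_a = {"drug": "Drug X", "event": "headache",
            "onset_date": "2019-03-02", "age": 54, "sex": "F"}
report_b = {"drug": "drug x", "event": "Headache",
            "onset_date": "2019-03-02", "age": 54, "sex": "F"}
report_c = {"drug": "Drug Y", "event": "rash",
            "onset_date": "2018-11-20", "age": 31, "sex": "M"}

score_ab = duplicate_score(report_a, report_b)  # same case, different casing
score_ac = duplicate_score(report_a, report_c)  # unrelated case
print(f"A vs B: {score_ab:.2f}, A vs C: {score_ac:.2f}")
```

Pairs scoring above a chosen threshold would be queued for a reviewer to confirm. The appeal of automating this step is that the number of pairs grows roughly with the square of the number of reports.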

Supercharging Signal Detection and Management

Once reports are processed, AI transforms signal detection from a retrospective task into a predictive science. Machine learning models go far beyond traditional analyses. A Gradient Boosting Machine model achieved an exceptional AUC of 0.95 in detecting safety signals, while a knowledge graph-based method hit an AUC of 0.92.
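For readers less familiar with the metric, AUC is the probability that a model scores a randomly chosen true signal higher than a randomly chosen non-signal. The short sketch below computes it directly from that definition. The labels and scores are made up and have nothing to do with the models cited above.

```python
def auc(labels, scores) -> float:
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for a true safety signal, 0 for a non-signal
    scores: model scores, higher meaning 'more likely a signal'
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("Need at least one positive and one negative example")
    # Count positive/negative pairs ranked correctly; ties count as half.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented example: 3 true signals and 4 non-signals.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
print(f"AUC = {auc(labels, scores):.3f}")
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which puts figures like 0.92 and 0.95 in context.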

Knowledge graphs are especially powerful. They integrate diverse data into a semantic network of biomedical information, uncovering hidden relationships between drugs, events, and patient characteristics. Imagine a graph where ‘Drug A’ is linked to ‘inhibits Protein Y,’ which is in turn linked to a ‘metabolic pathway Z’ that is known to be disrupted in patients with ‘Gene Variant Q.’ A traditional analysis would likely miss this multi-step connection, but an AI traversing the knowledge graph can flag that patients with Gene Variant Q may be at higher risk when taking Drug A.

This enables precise risk stratification, targeting surveillance to patients with specific risk factors like genetic markers or comorbidities. ML models can analyze hundreds of variables simultaneously, including patient genetics, comorbidities, lab results, and concomitant medications, to build a detailed picture of risk. In practice, high model performance means real safety signals caught earlier and with fewer false alarms, bringing a new level of precision medicine to drug safety.
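The Drug A example can be written down as a tiny graph and searched with a breadth-first traversal. This is only a sketch of the idea: the edge labels and the `find_path` helper are made up for illustration, and the graph reflects the hypothetical example above rather than curated biology.

```python
from collections import deque

# Toy knowledge graph: node -> list of (relation, neighbor).
EDGES = {
    "Drug A": [("inhibits", "Protein Y")],
    "Protein Y": [("participates_in", "Pathway Z")],
    "Pathway Z": [("disrupted_in", "Gene Variant Q")],
    "Drug B": [("inhibits", "Protein W")],
}


def find_path(start: str, goal: str, max_hops: int = 4):
    """Breadth-first search returning [node, relation, node, ...] or None."""
    queue = deque([(start, [start])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        hops_so_far = (len(path) - 1) // 2  # path alternates node, relation
        if hops_so_far >= max_hops:
            continue
        for relation, neighbor in EDGES.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, path + [relation, neighbor]))
    return None


path = find_path("Drug A", "Gene Variant Q")
print(" -> ".join(path))
```

The returned path doubles as an explanation: a reviewer can see each link in the chain that led to the flag, and `find_path("Drug B", "Gene Variant Q")` returns `None` because no such chain exists in this toy graph.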

Real-Time Monitoring and Active Surveillance—No More Waiting for the Next Crisis

AI enables a fundamental shift from reactive reporting to proactive, real-time surveillance. It continuously analyzes data streams from electronic health records, social media, and wearables, providing an up-to-the-minute view of drug safety. This active surveillance taps into previously unharnessed data streams. On social media platforms like X (formerly Twitter) or patient forums like Reddit, AI uses advanced sentiment analysis to distinguish between a user sarcastically saying ‘this drug is a nightmare’ and someone genuinely reporting ‘I had nightmares every night I took this drug.’ For data from wearables, AI can detect subtle physiological changes that precede a patient’s awareness of a symptom. For example, a consistent elevation in resting heart rate or a disruption in sleep patterns following the start of a new medication could be an early, objective indicator of a potential cardiotoxic or neurological side effect, triggering an alert for follow-up long before a formal report would ever be filed.
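The wearable scenario can be sketched with a very simple rule: compare resting heart rate after a medication start against the patient’s own pre-medication baseline and alert only on a sustained elevation. The threshold, the three-day window, and the readings below are assumptions for illustration, not clinically validated values.

```python
from statistics import mean, stdev


def resting_hr_alert(baseline, post_start, z_threshold=2.0, min_days=3) -> bool:
    """Flag a sustained rise in resting heart rate after a medication start.

    baseline:   daily resting HR readings before the medication
    post_start: daily resting HR readings after the medication start
    Returns True only if each of the last `min_days` readings sits more than
    `z_threshold` standard deviations above the baseline mean.
    """
    if len(baseline) < 2 or len(post_start) < min_days:
        return False  # not enough data to judge
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return False  # flat baseline: cannot standardize in this simple sketch
    recent = post_start[-min_days:]
    return all((x - mu) / sigma > z_threshold for x in recent)


# Invented readings in beats per minute.
baseline = [61, 63, 62, 60, 64, 62, 61]
elevated = [66, 68, 69, 70]
noisy = [62, 66, 61]

print(resting_hr_alert(baseline, elevated))  # sustained elevation
print(resting_hr_alert(baseline, noisy))     # a single high day is ignored
```

Requiring several consecutive elevated days is a design choice that trades a little speed for fewer false alarms; an alert here would prompt follow-up, not a conclusion about the drug.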

Early warning systems like MADEx automatically extract information from clinical notes to flag emerging ADRs. The FDA’s Sentinel System, leveraging real-world data for over 250 safety analyses, proves this approach works at scale. This is critical for managing the complexity of polypharmacy, where AI can identify subtle drug-drug interaction signals that would otherwise be missed, especially in vulnerable populations. Real-time capability means catching risks as they emerge, not months later. When patient safety is on the line, that speed makes all the difference.

The Roadblocks: What’s Stopping AI from Saving Drug Safety—And How to Fix It

The main roadblocks for AI for pharmacovigilance are no longer technological. The challenges are governance, inconsistent data, algorithmic bias, and black-box models that regulators won’t accept. Proving causation, not just correlation, is another major hurdle.

Fortunately, these problems have solutions: governance frameworks, data quality initiatives, bias mitigation techniques, Explainable AI (XAI), and causal inference methods.

Fixing Data Quality and Algorithmic Bias

“Garbage in, garbage out” is more than a technical problem in pharmacovigilance; it is an ethical one. AI models trained on incomplete or biased data will produce flawed results.

Data heterogeneity from sources like EHRs, social media, and claims databases is a major challenge. The solution begins with standardization using common data models and controlled vocabularies. This is where adherence to FAIR data principles—making data Findable, Accessible, Interoperable, and Reusable—becomes critical. Without interoperability, an AI model cannot effectively integrate data from a hospital’s EHR system (using one coding standard) with data from a claims database (using another). Furthermore, a robust data governance framework is essential to manage access rights, enforce quality checks, and ensure the ethical use of patient information across disparate systems.

Algorithmic bias is another risk. AI models trained on historically skewed clinical trial data can underperform for underrepresented populations. This requires deliberate action: data augmentation and resampling techniques to balance datasets, and quantitative bias detection to correct for performance differences. Critically, including diverse datasets from the start is key to ensuring fairness.
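Quantitative bias detection can start with something as simple as comparing a model’s recall (the share of true adverse reactions it catches) across patient groups. The sketch below does exactly that. The group names and records are invented, and a real assessment would use far larger samples and more than one metric.

```python
from collections import defaultdict


def recall_by_group(records) -> dict:
    """records: iterable of (group, true_label, predicted_label), labels 0 or 1.

    Returns the recall per group, computed over true positives only.
    """
    tp = defaultdict(int)  # true ADRs the model caught
    fn = defaultdict(int)  # true ADRs the model missed
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    return {g: tp[g] / (tp[g] + fn[g]) for g in set(tp) | set(fn)}


def recall_gap(records) -> float:
    """Difference between the best- and worst-served groups."""
    recalls = recall_by_group(records)
    return max(recalls.values()) - min(recalls.values())


# Invented evaluation records: (group, true ADR?, model flagged?)
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 1),
]
print(recall_by_group(records))
print(f"Recall gap: {recall_gap(records):.2f}")
```

A large gap is a prompt to investigate, for example by checking whether the underperforming group is underrepresented in the training data and whether resampling narrows the difference.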

Lifebit’s federated AI platform addresses this directly. By enabling secure analysis across datasets from multiple organizations without moving sensitive data, we help create more representative and robust models. By training models on diverse, global datasets while respecting data sovereignty and patient privacy, federated learning directly combats the sampling bias inherent in models trained only on data from a single hospital or a demographically limited clinical trial.

Making AI Decisions Transparent with Explainable AI (XAI)

Trust in AI requires transparency. Clinicians, regulators, and patients need to understand why an AI model flags a safety signal. Explainable AI (XAI) opens up the black box, revealing the reasoning behind a model’s decision. This is not just a ‘nice-to-have’; it’s a prerequisite for trust and adoption.

Methods like LIME and SHAP have proven effective in identifying which features contributed most to a prediction, with studies showing they can help predict adverse outcomes with up to 72% accuracy. LIME (Local Interpretable Model-agnostic Explanations) explains a single prediction by fitting a simpler, transparent model (like a linear regression) that is locally faithful to the complex model’s behavior around that specific data point. SHAP (SHapley Additive exPlanations) takes a game-theoretic approach, calculating the contribution of each feature to the prediction and guaranteeing that these contributions sum to the difference between the prediction and the model’s baseline (average) output.

This allows a safety reviewer to see not just that a case was flagged, but that it was flagged because of a specific combination of age, a particular lab value, and a co-prescribed medication. This level of detail is crucial both for regulatory audits and for a clinician to act confidently on an AI-generated alert. Agencies like the FDA and EMA require that AI systems be verifiable and auditable, so this transparency is essential for regulatory acceptance. XAI paves the way for AI to become a trusted tool for clinical decision support.
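The additive idea behind SHAP can be shown exactly on a tiny model by enumerating every feature coalition and computing classic Shapley values. This sketch does not use the SHAP library, which relies on faster approximations. The risk model, its coefficients, and the patient values are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values by enumerating all coalitions of features.

    Features outside a coalition take their baseline value. Only feasible
    for a handful of features, since the cost grows exponentially.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                # Standard Shapley weight for a coalition of size k.
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                with_f = value(set(subset) | {f})
                without_f = value(set(subset))
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


def risk_model(x: dict) -> float:
    """Hypothetical risk score with an interaction between two features."""
    return (0.02 * x["age"]
            + 0.5 * x["on_co_medication"]
            + 0.3 * x["high_lab_value"] * x["on_co_medication"])


patient = {"age": 70, "on_co_medication": 1, "high_lab_value": 1}
reference = {"age": 50, "on_co_medication": 0, "high_lab_value": 0}

phi = shapley_values(risk_model, patient, reference)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.2f}")
print(f"sum of contributions: {sum(phi.values()):.2f}")
print(f"prediction minus baseline: {risk_model(patient) - risk_model(reference):.2f}")
```

The last two printed lines agree, which is the additivity guarantee in action. The interaction between the lab value and the co-prescribed medication is split evenly between those two features, showing how a combination of factors is attributed.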

Moving Beyond Guesswork with Causal Inference

Finding a correlation is not the same as proving causation. In drug safety, this distinction is critical to avoid pulling a safe drug from the market or missing a genuine risk.

Causal inference techniques help AI establish cause-and-effect relationships between drugs and ADRs, which is especially vital in polypharmacy scenarios. These methods account for confounding factors like age, comorbidities, and other medications. A classic example of confounding is a new antidepressant associated with weight gain. Causal inference methods can help determine whether the drug is the cause or whether the weight gain results from recovery from depression (where appetite loss was a symptom).

Techniques like propensity score matching create balanced comparison groups from observational data by matching patients on the new drug with similar patients not on the drug across dozens of confounding variables (age, disease severity, comorbidities, etc.). This approximates the conditions of a randomized controlled trial with respect to the measured confounders, allowing a more reliable estimate of the drug’s causal effect on the adverse event. Advanced tools like causal graph neural networks and the InferBERT framework are moving the field beyond simple pattern-matching. This shift allows PV teams to move from saying “Drug X is associated with Event Y” to “Drug X causes Event Y in this specific patient group,” enabling confident decisions that protect patients.
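The matching step of propensity score matching can be sketched in a few lines. The example assumes the propensity scores (each patient’s estimated probability of receiving the drug given their covariates) have already been produced by some model such as a logistic regression. The patient IDs, the scores, and the caliper value are made up.

```python
def match_on_propensity(treated, controls, caliper=0.05) -> list:
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: lists of (patient_id, propensity_score)
    caliper: maximum allowed score difference for a valid match
    Returns a list of (treated_id, control_id) pairs.
    """
    available = dict(controls)  # control_id -> score, shrinks as we match
    pairs = []
    for pid, score in sorted(treated, key=lambda t: t[1]):
        if not available:
            break
        best = min(available, key=lambda c: abs(available[c] - score))
        if abs(available[best] - score) <= caliper:
            pairs.append((pid, best))
            del available[best]  # each control is used at most once
    return pairs


# Invented propensity scores.
treated = [("T1", 0.30), ("T2", 0.62), ("T3", 0.90)]
controls = [("C1", 0.28), ("C2", 0.33), ("C3", 0.60), ("C4", 0.10)]

pairs = match_on_propensity(treated, controls)
print(pairs)
```

Here T3 stays unmatched because no control has a similar enough score, and dropping such patients is part of the method: the comparison is only made where the two groups overlap. Matching balances measured confounders only, so unmeasured ones can still bias the estimate.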

The New Rulebook: Future-Proofing PV with Advanced AI—And Staying Compliant

AI is moving faster than the regulations that govern it, creating a challenge for a critical field like pharmacovigilance. Success requires understanding the evolving regulatory landscape, preparing for next-gen technologies, and aligning people, processes, and technology.

The Changing Regulatory Landscape for AI in Pharmacovigilance

Regulators like the FDA, EMA, and ICH are actively developing guidance. The FDA’s Emerging Drug Safety Technology Program (EDSTP) facilitates industry dialogue on AI in PV. The trend is toward Good Machine Learning Practice (GMLP), with validation frameworks being developed to establish the credibility of AI models.

The EMA advocates for a risk-based approach, meaning regulatory scrutiny will match the application’s risk level. High-risk uses like automated signal detection will require rigorous validation. A key expectation is clear standards for explainability, as regulators must be able to audit how an AI model reaches its conclusions. This means organizations must prepare a ‘validation package’ for their AI models. This documentation should detail the model’s intended use, the characteristics of the training and testing data, performance metrics (like precision, recall, and AUC), bias assessments, and the outputs from XAI tools. As standards like the ICH’s E2B(R3) format for electronic ICSRs create more structured data, AI’s role in processing this information efficiently and consistently will become even more integral to the compliance workflow.
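One lightweight way to keep a validation package honest is to treat it as a structured record with a completeness check. The sketch below is an assumption about how a team might organize this internally; the field names are hypothetical and do not correspond to any regulatory template.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class ValidationPackage:
    """Hypothetical container for the documentation items listed above."""
    model_name: str
    intended_use: str
    training_data_summary: str
    test_data_summary: str
    metrics: dict = field(default_factory=dict)
    bias_assessment: str = ""
    explainability_outputs: list = field(default_factory=list)

    def missing_sections(self) -> list:
        """Names of sections that are still empty, in declaration order."""
        return [name for name, value in asdict(self).items() if not value]


def precision_recall(tp: int, fp: int, fn: int) -> dict:
    """Precision and recall from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


# Invented counts and descriptions.
package = ValidationPackage(
    model_name="signal-detector-v1",
    intended_use="Prioritize drug-event pairs for expert review",
    training_data_summary="Spontaneous reports, 2015-2018",
    test_data_summary="Held-out spontaneous reports, 2019",
    metrics=precision_recall(tp=80, fp=20, fn=10),
)
print(package.metrics)
print("Still missing:", package.missing_sections())
```

A check like this could run before every model release, so that a missing bias assessment or explainability output blocks the release instead of surfacing during an audit.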

The Future of AI for Pharmacovigilance: Privacy, Security, and Next-Gen Tech

The future of AI for pharmacovigilance lies in collaborative, privacy-preserving technologies.

Federated learning is transformative, allowing AI models to be trained on global data without sensitive information ever leaving its source. This overcomes privacy barriers and enables large-scale analysis. At Lifebit, our federated AI platform is designed for this, enabling secure, real-time access to biomedical data. This approach is fundamental to building the comprehensive, globally representative datasets needed to detect rare but serious adverse events without compromising security or privacy.
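One widely used way to combine models trained at separate sites is federated averaging, in which each site trains locally and shares only its model weights. The sketch below shows just the aggregation step, with invented weights and sample counts. It illustrates the general technique and is not a description of how any particular platform implements it.

```python
def federated_average(site_updates) -> list:
    """Combine locally trained model weights without pooling patient data.

    site_updates: list of (weights, n_samples), where `weights` is a list
    of floats from one site's local training round.
    Returns the sample-size-weighted average of the weights.
    """
    if not site_updates:
        raise ValueError("No site updates to aggregate")
    total = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in site_updates) / total
        for i in range(dim)
    ]


# Invented updates from two sites; only these numbers leave each site.
updates = [
    ([0.2, 1.0], 100),  # site 1: 100 local records
    ([0.4, 0.0], 300),  # site 2: 300 local records
]
global_weights = federated_average(updates)
print(global_weights)
```

Weighting by sample count lets larger sites influence the shared model more, while the raw records never leave their source. The averaged weights would then be sent back to each site for the next training round.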

Generative AI and Large Language Models (LLMs) offer sophisticated analysis of unstructured text. They show promise for tasks like auto-generating coherent case narratives from structured data fields for regulatory reports or summarizing thousands of pages of medical literature to accelerate signal investigation. However, they heighten data privacy concerns, requiring a reevaluation of compliance procedures, and they introduce new risks, most notably ‘hallucinations’ (the generation of factually incorrect information). This necessitates a robust human-in-the-loop validation process to ensure the accuracy of any AI-generated content used in a regulatory context.

Other technologies like quantum computing and blockchain hold future promise, while in silico trials can improve proactive safety assessments. Our platform, with its Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer), delivers the real-time, AI-driven surveillance needed for large-scale, compliant pharmacovigilance.

Aligning People, Processes, and Technology for Real-World Results

Implementing AI is an organizational challenge, not just a technical one. Success depends on seamless workflow integration, where AI tools fit naturally into existing PV processes. This requires creating new, cross-functional teams that bridge the gap between data science and clinical safety. New roles are emerging, such as the PV Data Scientist, who specializes in building and validating ML models for safety data, and the Clinical AI Translator, who helps safety physicians understand and interpret the outputs of AI systems. A formal AI governance committee should be established to oversee model lifecycle management, monitor for performance drift, and ensure ethical guidelines are followed.

Human-in-the-loop oversight is essential. AI empowers professionals; it doesn’t replace them. Experts must validate and contextualize AI findings. This requires strong governance structures for accountability and clear change management to train teams and adapt processes.

A phased adoption strategy is often most effective. Start with a pilot project targeting a well-defined, high-impact problem (e.g., automating duplicate detection). Success here builds momentum and provides valuable lessons for a broader rollout. Ultimately, success requires building a culture of continuous learning where PV professionals are upskilled to become confident users of, and collaborators with, AI technology. In short, organizations must integrate AI with appropriate oversight, ensure transparency through Explainable AI, prioritize data quality, mitigate bias, and maintain human control over critical decisions.

Frequently Asked Questions about AI in Pharmacovigilance

Will AI replace pharmacovigilance professionals?

No. AI for pharmacovigilance is a force multiplier, not a replacement. It automates repetitive, high-volume tasks, freeing experts to focus on what they do best: complex signal investigation, risk-benefit analysis, and the critical judgment calls that protect patients. AI empowers human judgment; it doesn’t eliminate it.

How can we trust the outputs of AI in drug safety?

Trust is built on three pillars. First, rigorous validation against known outcomes on diverse datasets. Second, Explainable AI (XAI) methods like LIME and SHAP provide transparency into why a model made a prediction. Third, strong human oversight ensures that experts remain in control, validating AI-generated insights before any action is taken. AI provides the evidence; humans provide the judgment.

What’s the first step to bringing AI into a PV workflow?

Start small, prove value, then scale. Begin with a high-volume, repetitive task like case intake or duplicate detection where AI can deliver an immediate impact. Pilot the solution in a controlled setting to measure success and build internal expertise without disrupting critical operations. The lessons learned from a successful pilot will provide a roadmap for scaling AI for pharmacovigilance across your entire organization.

Conclusion: Don’t Wait for the Next Drug Safety Crisis—Act Now with Smarter Surveillance

The numbers are stark: 1.4 million adverse events processed in 2019, a volume growing 20% each year, while an estimated 94% of adverse drug reactions go unreported. Traditional pharmacovigilance cannot keep up, and patients are paying the price.

AI for pharmacovigilance is the proven solution. It’s here now, turning mountains of data from EHRs, social media, and wearables into actionable intelligence. This shift from reactive reporting to proactive surveillance is not just about efficiency—it’s about catching risks before they become crises and empowering PV professionals to focus on the critical decisions that save lives.

The regulatory landscape, led by initiatives like the FDA’s Emerging Drug Safety Technology Program (EDSTP), is evolving to support this change. The technology is ready. The only question is whether you will act now or wait for the next crisis.

At Lifebit, our federated AI platform—including our Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. (Real-time Evidence & Analytics Layer)—is built to deliver these insights securely and at scale.

The time to transform your pharmacovigilance is today. See how to implement real-time adverse drug reaction surveillance.