Seamless Trials: Why Data Integration is Key


Why Clinical Trial Data Integration is Essential for Modern Research

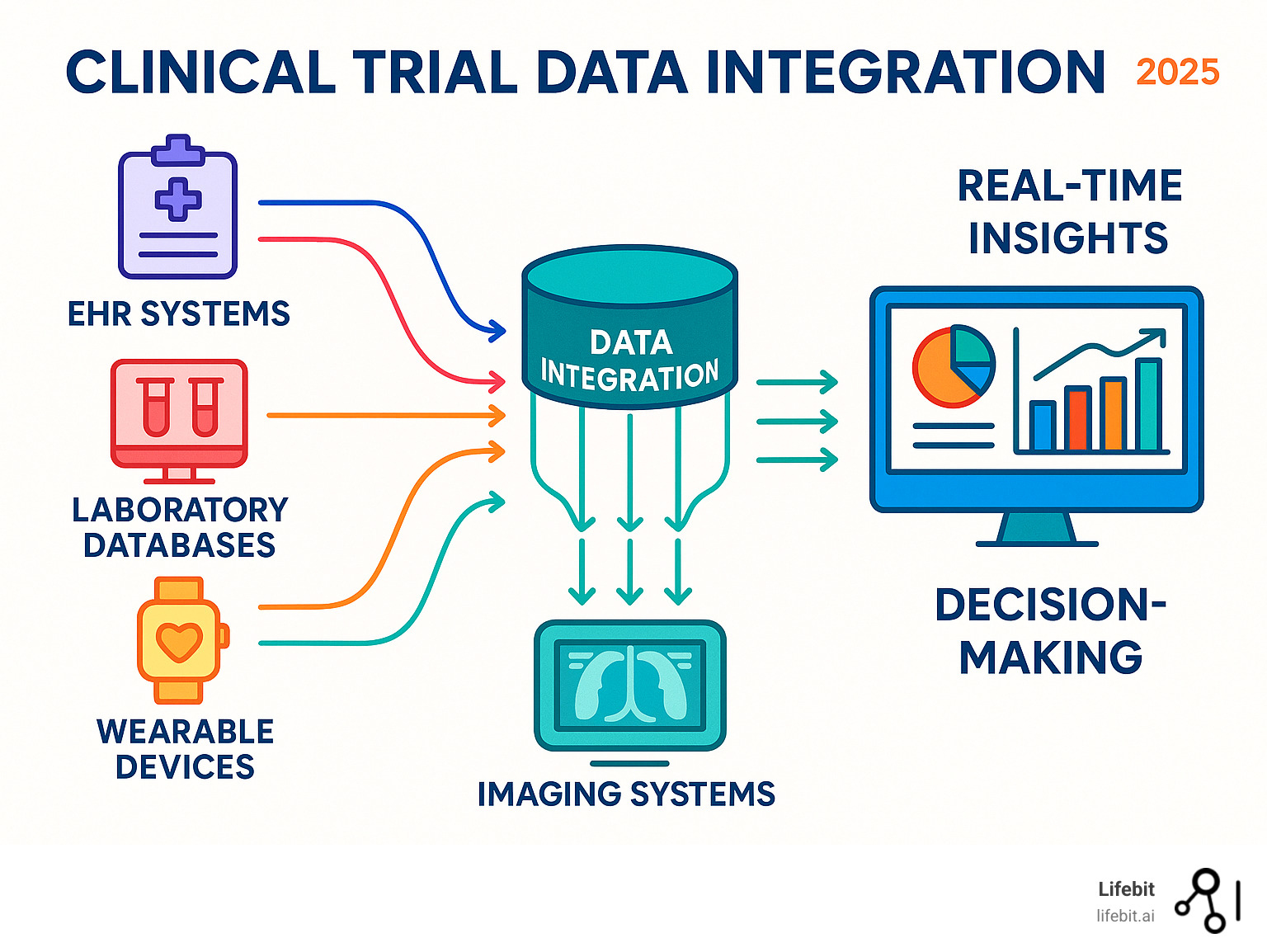

Clinical trial data integration is the process of combining and harmonizing information from multiple sources—including electronic data capture (EDC) systems, electronic health records (EHRs), lab results, imaging data, and wearable devices—into a unified, analyzable dataset. This critical capability enables researchers to gain comprehensive insights, improve patient safety monitoring, and accelerate drug development timelines by overcoming challenges like data silos and format inconsistencies using standards like CDISC and HL7 FHIR.

Modern clinical trials generate massive amounts of data. Phase III trials now produce an average of 3.6 million data points, three times more than 15 years ago. Despite this data explosion, 80% of clinical trials face delays due to recruitment and data management issues.

The stakes are high. Every day a product’s development is delayed can cost between $600,000 and $8 million, and more importantly, these delays mean life-saving treatments reach patients more slowly.

The problem is that traditional data management approaches can’t keep pace. Data sits in silos, making it nearly impossible to get the real-time insights needed for quick decision-making and patient safety monitoring.

At Lifebit, our expertise in computational biology and biomedical data integration has shown us that seamless data integration isn’t just a nice-to-have—it’s essential for modern clinical research success.


The Foundation: What is Clinical Data Integration and Why is it Crucial?

Clinical trial data integration is the process of standardizing, cleaning, and connecting information from disparate sources so that researchers can use it to make critical decisions. It’s the difference between having a pile of puzzle pieces and seeing the complete picture they create. In an era of data-driven medicine, this process is no longer optional—it is fundamental to success.

This matters because every clinical trial is only as good as its data. When information is trapped in separate systems, researchers may miss critical safety signals, overlook efficacy patterns, or spend weeks manually piecing together information. Clinical trial data integration transforms research by enabling:

- Data-Driven Insights: When all trial data speaks the same language, patterns emerge that single sources could never reveal, such as identifying which patient populations respond best to treatment.

- Improved Patient Care and Safety: Medical monitors can spot concerning trends in real-time. For example, if a patient’s wearable shows an irregular heart rhythm while their lab work indicates elevated liver enzymes, integrated systems can flag this combination immediately.

- Faster Decision-Making: Instead of waiting weeks for manual reports, research teams can run interim analyses on demand, allowing them to stop a harmful trial early or continue a promising one with confidence.

- Reduced Costs and Manual Effort: Automated data flows reduce time spent on tedious data wrangling. Every day saved in drug development can prevent losses of $600,000 to $8 million.
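The wearable-plus-lab example above can be sketched in a few lines. This is a minimal illustration, not a clinical rule: the `PatientSnapshot` structure, the field names, and both thresholds are assumptions made up for the example, and real limits would come from the study protocol.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; real limits come from the protocol.
ALT_UPPER_LIMIT_U_PER_L = 40.0
ALT_FLAG_MULTIPLE = 3.0


@dataclass
class PatientSnapshot:
    """One patient's latest values, already joined from two source systems."""
    patient_id: str
    alt_u_per_l: float      # latest ALT (liver enzyme) result from the lab feed
    irregular_rhythm: bool  # latest rhythm classification from the wearable feed


def needs_review(snapshot: PatientSnapshot) -> bool:
    """Flag a patient only when both signals are present at the same time."""
    elevated_alt = snapshot.alt_u_per_l > ALT_FLAG_MULTIPLE * ALT_UPPER_LIMIT_U_PER_L
    return elevated_alt and snapshot.irregular_rhythm


snapshots = [
    PatientSnapshot("P001", alt_u_per_l=35.0, irregular_rhythm=True),
    PatientSnapshot("P002", alt_u_per_l=150.0, irregular_rhythm=True),
    PatientSnapshot("P003", alt_u_per_l=150.0, irregular_rhythm=False),
]

flagged = [s.patient_id for s in snapshots if needs_review(s)]
print(flagged)
```

Neither source system could raise this flag alone, because each one holds only half of the condition. The check becomes possible only once both feeds share a patient identifier.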

More info about our Clinical Data Integration Platform

The Data Deluge: Key Sources in Clinical Trials

Modern clinical trials generate information from numerous touchpoints, each presenting unique integration challenges. Key sources include:

- EDC (Electronic Data Capture) systems: The backbone of trials, capturing patient demographics, medical histories, and treatment responses. While structured, different EDC vendors use proprietary formats, requiring careful mapping for integration.

- EHR/EMR systems: A goldmine of real-world patient information, providing rich context about health journeys. The challenge lies in its heterogeneity, combining structured data (e.g., ICD-10 codes) with vast amounts of unstructured text (clinical notes) that require NLP to unlock.

- Lab data (LIMS): Scientific results from blood work, biomarker analysis, or genetic testing. This data often arrives in varied formats (e.g., CSV, HL7) with different units of measurement and local lab-specific reference ranges, all of which must be standardized for meaningful analysis.

- Imaging data (DICOM): Crucial information from X-rays, MRIs, and CT scans. While DICOM is a standard for the images themselves, the challenge is integrating these large files with their associated metadata and linking them to specific clinical events and timepoints. Over 95% of oncology trials use medical imaging, making this a critical data stream.

- Wearables and IoT devices: Devices like smartwatches provide a continuous, high-velocity stream of data (e.g., heart rate, activity levels). Integrating this requires robust pipelines to handle the volume and variability, and analytical methods to derive meaningful health insights from raw sensor readings.

- ePROs and eCOAs: Digital tools that capture the patient’s voice, letting them report symptoms and quality of life directly. Integration ensures this subjective but critical data is time-stamped and linked correctly with clinical visit data.

- Real-World Data: Information from insurance claims, pharmacy records, and disease registries that helps researchers understand how treatments work in the real world. The primary integration challenge is accurately and securely linking this external data to trial participants.

- Genomics data: A single patient’s genomic sequence can generate terabytes of information in complex formats like VCF or BAM. Integrating this with clinical outcomes is crucial for precision medicine but requires specialized bioinformatics pipelines and storage solutions.

The Strategic Advantage: Core Benefits of Integration

Effective clinical trial data integration creates ripple effects of improvement across the research ecosystem.

- Improved data quality: Automated processes spot and flag inconsistencies in real-time, cutting review cycle times by up to 80%.

- Accelerated timelines: On-demand interim analyses become possible, with some organizations reducing programming time by 90%.

- Improved safety monitoring: With all data in one system, safety signals that might otherwise go unnoticed become obvious, allowing for faster intervention.

- Streamlined regulatory submissions: When data is pre-standardized to formats like CDISC, regulatory packages are easier to assemble, and approval timelines shrink.

- Increased ROI: Faster timelines, better data quality, and improved safety monitoring lead to significant cost savings and reduced risk.

- Predictive Analytics and AI: Integrated data provides the large, clean, harmonized datasets that machine learning algorithms need to uncover breakthrough findings.

Overcoming Problems: Key Challenges in Data Integration and Interoperability

While clinical trial data integration is powerful, its implementation faces several significant challenges that require careful strategic planning to overcome.

The biggest culprit is data silos, where information is trapped in isolated systems that were never designed to communicate. For instance, a trial’s EDC system, the central lab’s LIMS, and the sponsor’s safety database often operate as independent islands. This lack of interoperability forces teams to rely on manual, error-prone data transfers via spreadsheets, which introduce delays and quality risks.

This is compounded by heterogeneity, as data comes in wildly different formats. It can be structured (e.g., lab values in a table), semi-structured (e.g., an ePRO diary with fixed questions and open-ended text boxes), or completely unstructured (e.g., narrative text in a physician’s notes or a radiology report). Extracting standardized, analyzable information from unstructured data, such as specific adverse event terms or tumor measurements from free text, is a major technical hurdle that often requires advanced tools like Natural Language Processing (NLP).

Data quality issues such as missing values, duplicate entries, and contradictory records (e.g., an adverse event dated before the first dose of a drug) are common. Traditional 100% source data verification (SDV) is inefficient and costly, so regulators now encourage smarter, risk-based quality management (RBQM) approaches that focus on critical data. An automated integration platform can embed quality checks to flag these issues in real time. Source Data Verification (SDV) Quality in Clinical Research provides more detail.
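An embedded quality check of the kind described above might look like the following sketch. The record layout, the `check_adverse_events` helper, and the sample data are all hypothetical. The check only queues records for human review, since an event that starts before dosing can be legitimate medical history rather than an error.

```python
from datetime import date

# Hypothetical sample data: first-dose dates keyed by patient, plus reported events.
first_dose = {"P001": date(2024, 3, 1), "P002": date(2024, 3, 5)}
adverse_events = [
    {"patient_id": "P001", "term": "Headache", "start": date(2024, 3, 4)},
    {"patient_id": "P002", "term": "Nausea", "start": date(2024, 3, 2)},
    {"patient_id": "P003", "term": "Rash", "start": date(2024, 3, 9)},
]


def check_adverse_events(events, dosing):
    """Return (patient_id, term, reason) tuples for records that need review."""
    issues = []
    for event in events:
        dose_date = dosing.get(event["patient_id"])
        if dose_date is None:
            # The event cannot be placed relative to treatment at all.
            issues.append((event["patient_id"], event["term"], "no first-dose record"))
        elif event["start"] < dose_date:
            issues.append((event["patient_id"], event["term"], "starts before first dose"))
    return issues


issues = check_adverse_events(adverse_events, first_dose)
for issue in issues:
    print(issue)
```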

Finally, security and privacy are paramount. All integration activities must comply with strict regulations like HIPAA and GDPR. This requires robust technical safeguards like encryption and access controls, as well as a strong governance framework to manage data residency and prevent unauthorized access, ensuring patient trust is maintained.

More info about Health Data Interoperability

The Core Process of Clinical Trial Data Integration

Successful integration follows a systematic process to transform scattered data into a useful asset.

- Data mapping: This initial step identifies corresponding fields across different systems (e.g., mapping “patient ID” in EDC to “medical record number” in EHR).

- ETL (Extract, Transform, Load): This is a three-step data makeover. Data is extracted from sources, transformed (cleaned, standardized, and harmonized), and loaded into a central repository.

- Data harmonization: This ensures concepts are consistent, such as mapping different diagnostic codes to the same condition or standardizing units of measurement. More info on Data Harmonization explores this further.

- Validation: Throughout the process, data is checked for logical consistency and accuracy.

- Governance: Clear policies are established for data ownership, access, and quality control.
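The mapping, ETL, harmonization, and validation steps above can be sketched together in one small pipeline. Everything specific here is an assumption for illustration: the source column names, the `FIELD_MAP`, the in-memory list standing in for a central repository, and the choice of glucose as the example analyte. The conversion factor follows from the molar mass of glucose (about 180.16 g/mol).

```python
import csv
import io

# Data mapping: hypothetical source column names -> harmonized field names.
FIELD_MAP = {"subj": "patient_id", "test": "test_code", "val": "value", "unit": "unit"}

# 1 mmol/L of glucose is about 18.016 mg/dL.
MG_DL_PER_MMOL_L_GLUCOSE = 18.016

RAW_CSV = """subj,test,val,unit
P001,GLUC,90,mg/dL
P002,GLUC,5.4,mmol/L
P003,GLUC,,mg/dL
"""


def extract(text):
    """Extract: read rows from a CSV export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: rename fields, validate, and harmonize units to mmol/L."""
    clean, rejected = [], []
    for row in rows:
        record = {FIELD_MAP[key]: (value or "").strip() for key, value in row.items()}
        if not record["value"]:
            rejected.append(record)  # validation: missing result
            continue
        value = float(record["value"])
        if record["unit"] == "mg/dL":
            value = value / MG_DL_PER_MMOL_L_GLUCOSE
        elif record["unit"] != "mmol/L":
            rejected.append(record)  # validation: unknown unit
            continue
        record["value"] = round(value, 2)
        record["unit"] = "mmol/L"
        clean.append(record)
    return clean, rejected


def load(records, repository):
    """Load: append harmonized records to the central store."""
    repository.extend(records)


repository = []
clean, rejected = transform(extract(RAW_CSV))
load(clean, repository)
print(repository)
print(rejected)
```

Rejected rows are kept rather than silently dropped, so they can feed a query back to the site or lab.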

Integration vs. Reconciliation: Clarifying the Concepts

“Data integration” and “data reconciliation” are related but distinct. Integration is the technical process of combining data into a unified dataset. Reconciliation is the quality assurance process of comparing data across sources to verify consistency.

| Feature | Data Integration | Data Reconciliation |
|---|---|---|
| Purpose | Combine data from different sources into one unified dataset | Verify consistency and accuracy across different data sources |
| Process | Technical process involving programming and data transformation | Review process comparing specific data points between datasets |
| Timing | Ongoing and often automated | Periodic, at predefined intervals or milestones |
| Output | Consolidated, harmonized dataset | Report of discrepancies and inconsistencies |
| Example | Automatically importing lab results into EDC | Comparing visit dates between EDC and randomization systems |

Both processes are essential, but they serve different purposes in a data management strategy.
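To make the distinction concrete, here is a minimal sketch of the reconciliation example from the table: comparing visit dates between an EDC export and a randomization system export. The two dictionaries and the `reconcile` helper are hypothetical stand-ins for real extracts. Note that the output is a discrepancy report, not a merged dataset.

```python
# Hypothetical extracts: patient ID -> randomization visit date (ISO 8601 strings).
edc_visits = {"P001": "2024-03-01", "P002": "2024-03-05", "P003": "2024-03-07"}
rand_visits = {"P001": "2024-03-01", "P002": "2024-03-06", "P004": "2024-03-08"}


def reconcile(edc, rand):
    """Compare two sources and return a list of (patient_id, finding) tuples."""
    report = []
    for patient_id in sorted(edc.keys() | rand.keys()):
        edc_date = edc.get(patient_id)
        rand_date = rand.get(patient_id)
        if edc_date is None:
            report.append((patient_id, "missing in EDC"))
        elif rand_date is None:
            report.append((patient_id, "missing in randomization system"))
        elif edc_date != rand_date:
            report.append(
                (patient_id, f"date mismatch: EDC {edc_date} vs randomization {rand_date}")
            )
    return report


report = reconcile(edc_visits, rand_visits)
for line in report:
    print(line)
```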

Building Bridges: Core Data Standards and Models

Data standards are the common languages that make clinical trial data integration possible, ensuring interoperability, semantic consistency, and regulatory acceptance. Without them, every data exchange becomes a custom, time-consuming project, slowing down research and introducing risk.

Fortunately, the clinical research community has developed robust standards that make seamless integration practical and compliant.


The Role of CDISC

CDISC (Clinical Data Interchange Standards Consortium) publishes what are widely regarded as the gold-standard formats for clinical trial data. Regulatory bodies like the FDA and Japan’s PMDA often require these standards for submissions, making them essential for market approval. Implementing CDISC standards from study start streamlines the entire data lifecycle.

CDISC provides three key standards for this process:

- CDASH (Clinical Data Acquisition Standards Harmonization): Sets rules for consistent data collection from the start. By designing Case Report Forms (CRFs) using CDASH, data is captured in a standard way, which drastically simplifies later steps.

- SDTM (Study Data Tabulation Model): Organizes collected data into a standard structure for regulatory review. For example, all adverse event data is placed in the “AE” domain with standard variable names (AETERM, AESEV), allowing reviewers to immediately understand the dataset’s structure.

- ADaM (Analysis Data Model): Creates standardized analysis datasets for faster, more reliable statistical reviews. ADaM provides clear traceability from the final analysis back to the SDTM data, which is critical for regulatory transparency.

By using CDISC, everyone from the clinical site to the regulatory reviewer speaks the same data language.
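As a rough illustration of what placing data in the “AE” domain involves, the sketch below reshapes raw event records into rows with SDTM-style variable names. It is heavily simplified and rests on assumptions: the raw field names, the study identifier `STUDY01`, and the way `USUBJID` is built are invented for the example, and a real SDTM dataset carries many more variables and controlled-terminology checks.

```python
# Hypothetical raw export from an EDC system.
RAW_EVENTS = [
    {"subject": "001-0001", "event": "Headache", "severity": "mild", "onset": "2024-03-04"},
    {"subject": "001-0001", "event": "Nausea", "severity": "Moderate", "onset": "2024-03-06"},
    {"subject": "001-0002", "event": "Rash", "severity": "severe", "onset": "2024-03-09"},
]

# Severity values accepted in this sketch.
ALLOWED_SEVERITY = {"MILD", "MODERATE", "SEVERE"}


def to_ae_domain(raw_events, study_id):
    """Map raw events to AE-style rows with a per-subject sequence number."""
    records = []
    seq_by_subject = {}
    for event in raw_events:
        usubjid = f"{study_id}-{event['subject']}"  # simplified unique subject ID
        severity = event["severity"].strip().upper()
        if severity not in ALLOWED_SEVERITY:
            raise ValueError(f"Unexpected severity: {event['severity']!r}")
        seq = seq_by_subject.get(usubjid, 0) + 1
        seq_by_subject[usubjid] = seq
        records.append({
            "STUDYID": study_id,
            "DOMAIN": "AE",
            "USUBJID": usubjid,
            "AESEQ": seq,
            "AETERM": event["event"],
            "AESEV": severity,
            "AESTDTC": event["onset"],  # ISO 8601 start date
        })
    return records


ae = to_ae_domain(RAW_EVENTS, study_id="STUDY01")
for row in ae:
    print(row)
```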

More info on Lifebit’s CDISC Partnership

The Power of HL7 FHIR and Other Models

While CDISC governs trial data, other standards are needed to integrate real-world data from healthcare systems.

HL7 FHIR (Fast Healthcare Interoperability Resources) is revolutionizing how healthcare systems share information. It uses modern, web-based APIs to make real-time data exchange practical and secure. FHIR defines data as “Resources” (e.g., Patient, Observation, Condition), allowing a trial platform to query an EHR and pull specific information—like a patient’s latest lab results—directly and automatically into the trial database.
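A sketch of the “pull a patient’s latest lab results” scenario is shown below. It builds a FHIR search URL for Observation resources and parses a search-result Bundle. The base URL `https://fhir.example.org/R4`, the patient ID, and the sample Bundle are placeholders, and no network call is made; a real integration would also need authentication and paging, which are omitted here.

```python
from urllib.parse import urlencode

FHIR_BASE = "https://fhir.example.org/R4"  # hypothetical server


def lab_search_url(patient_id, count=5):
    """Build a search for a patient's most recent laboratory Observations."""
    params = {
        "patient": patient_id,
        "category": "laboratory",
        "_sort": "-date",  # newest first
        "_count": str(count),
    }
    return f"{FHIR_BASE}/Observation?{urlencode(params)}"


# Hand-written sample of what a search response (a Bundle) can look like.
SAMPLE_BUNDLE = {
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {
            "resource": {
                "resourceType": "Observation",
                "status": "final",
                "code": {
                    "coding": [
                        {
                            "system": "http://loinc.org",
                            "code": "2345-7",
                            "display": "Glucose [Mass/volume] in Serum or Plasma",
                        }
                    ]
                },
                "effectiveDateTime": "2024-03-04T08:30:00Z",
                "valueQuantity": {"value": 90, "unit": "mg/dL"},
            }
        }
    ],
}


def lab_results_from_bundle(bundle):
    """Flatten Observation resources in a Bundle into simple dictionaries."""
    results = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "Observation":
            continue
        codings = resource.get("code", {}).get("coding") or [{}]
        quantity = resource.get("valueQuantity", {})
        results.append({
            "code": codings[0].get("code"),
            "value": quantity.get("value"),
            "unit": quantity.get("unit"),
            "effective": resource.get("effectiveDateTime"),
        })
    return results


url = lab_search_url("123")
results = lab_results_from_bundle(SAMPLE_BUNDLE)
print(url)
print(results)
```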


The OMOP Common Data Model addresses the challenge of analyzing observational data from varied sources like EHRs and insurance claims. OMOP transforms disparate datasets into a common structure and standardizes the terminology (e.g., mapping local drug codes to RxNorm). This allows researchers to run a single analysis across multiple large-scale databases as if they were one, which is especially powerful for integrating EHR data to find eligible patients or build synthetic control arms for clinical trials.

Together, these standards enable the combination of traditional clinical trial data with rich, real-world patient information, providing a more complete picture of how treatments work.

More info on using Real-World Data in Clinical Trials

A Blueprint for Success: Best Practices for Effective Clinical Trial Data Integration

Success in clinical trial data integration requires a strategic approach. The most successful integrations share these common characteristics:

- Strategic Planning: Begin by mapping all potential data sources during study design. Don’t treat integration as an afterthought.

- Cross-Functional Teams: Align sponsors, CROs, sites, and technology vendors early on standard operating procedures and data formats to ensure a smooth process.

- Technology Selection: Choose platforms that support open standards like CDISC and HL7 FHIR. Look for robust APIs and self-service capabilities.

- Robust Data Governance: Establish clear policies for data ownership, quality standards, and security. Automated validation rules can identify anomalies early.

- Scalability and Flexibility: Use cloud-based platforms that can handle growing data volumes and adapt to new data types from emerging digital health technologies.

- Continuous Monitoring: Regularly monitor performance, data quality, and compliance to identify issues and refine processes.

More info on Trusted Research Environments.

The Modern Toolkit: Platforms and Technologies

Today’s clinical trial data integration relies on sophisticated technologies that automate complex processes.

- Centralized Platforms: Cloud-based solutions that aggregate data from diverse sources into a single source of truth.

- APIs (Application Programming Interfaces): The digital translators that allow different software systems to communicate securely and automatically.

- Cloud Computing: The scalable backbone for handling massive volumes of clinical data, enabling real-time access and advanced analytics.

- Data Lakehouse Architecture: An innovative approach combining the flexibility of data lakes with the structure of data warehouses. Our Data Lakehouse Platform is built on this principle.

- Visualization Tools: Interactive dashboards that transform integrated data into actionable insights for real-time decision-making.

Ensuring Security and Compliance

Protecting sensitive clinical trial data requires a multi-layered defense to build trust with patients, regulators, and partners.

- Pseudonymization: Replacing direct patient identifiers with artificial ones to reduce privacy risks while preserving data utility.

- Access Controls: Role-based controls ensure that users can only see the data necessary for their job.

- Audit Trails: A complete, traceable history of every data access and modification, which is essential for regulatory compliance.

- Encryption: Protecting data both in transit and at rest to prevent unauthorized access.
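As one possible way to implement pseudonymization, the sketch below derives a stable artificial identifier from a patient ID with a keyed hash (HMAC-SHA256). This is an illustrative technique chosen for the example, not a description of any particular platform. The key shown is a placeholder that would normally live in a secrets manager, and truncating the digest to 16 characters is an assumption that trades some collision resistance for readability.

```python
import hashlib
import hmac

# Placeholder key for illustration; never hard-code a real key.
SECRET_KEY = b"replace-with-a-key-from-a-secrets-manager"


def pseudonymize(patient_id: str, secret_key: bytes, length: int = 16) -> str:
    """Return a stable pseudonym: the same input and key always give the same output."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


# Hypothetical records containing a direct identifier.
records = [
    {"patient_id": "MRN-1001", "alt_u_per_l": 35.0},
    {"patient_id": "MRN-1002", "alt_u_per_l": 150.0},
    {"patient_id": "MRN-1001", "alt_u_per_l": 38.0},
]

# Replace the direct identifier while keeping the clinical values usable.
pseudonymized = [
    {"subject_key": pseudonymize(r["patient_id"], SECRET_KEY), "alt_u_per_l": r["alt_u_per_l"]}
    for r in records
]
for row in pseudonymized:
    print(row)
```

Because the mapping is deterministic, repeat records for the same patient still link together after the identifier is replaced, which is what preserves data utility. Anyone holding the key can recompute the mapping, so the key needs the same protection as the identifiers themselves.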

We ensure our platforms are compliant with global privacy regulations, including:

- HIPAA (Health Insurance Portability and Accountability Act): The US standard for protecting sensitive patient health information. Our platforms are designed as HIPAA Compliant Data Analytics solutions.

- GDPR (General Data Protection Regulation): The strict EU rules on personal data collection and processing.

The Future is Now: AI, Machine Learning, and the Next Wave of Integration

The future of clinical trial data integration is linked with advancements in Artificial Intelligence (AI) and Machine Learning (ML). These technologies are changing how we collect, analyze, and derive insights from clinical data by enabling greater automation, predictive analytics, and anomaly detection.

More info on AI for Precision Medicine.

The Role of AI and Machine Learning

AI and ML are revolutionizing clinical trial data integration in several key ways:

- Automated Data Cleaning and Harmonization: AI algorithms can identify and correct errors, standardize terminologies, and extract structured information from unstructured text like clinical notes.

- AI-Assisted Reconciliation: ML can rapidly compare vast datasets to pinpoint discrepancies for human review, streamlining the reconciliation process.

- Natural Language Processing (NLP): NLP allows computers to interpret human language, opening up valuable insights from unstructured text in EHRs and patient narratives.

- Improved Safety Signal Detection: By analyzing real-time, integrated data, AI can identify subtle safety signals that might be missed by traditional methods.

- Improved Data Quality Management: AI can power sophisticated data quality tools, including generative AI-powered audit trail review, allowing users to interrogate data using natural language.

AI is increasingly being applied across the drug discovery and development pipeline, from target identification through clinical trials, helping to accelerate the development of new therapies.

The Future of Clinical Trial Data Integration: Trends and Opportunities

The landscape of clinical trials is evolving rapidly, with several exciting trends shaping the future of integration:

- Federated Learning and Data Analysis: This approach allows AI models to be trained on decentralized data without it ever leaving its source location, preserving privacy while enabling large-scale analysis. Our expertise lies in Federated Data Analysis.

- Digital Twins and In-silico Trials: Creating virtual patient replicas or conducting computer-simulated trials will rely on integrating vast amounts of real-world and genomic data.

- Synthetic Control Arms (SCA): Using integrated real-world data to serve as a control group can reduce the number of patients needed in traditional trials.

- Decentralized Clinical Trials (DCTs): The shift towards remote trial activities amplifies the need for robust, real-time integration from diverse sources like wearables.

- Interoperability-as-a-Service: We anticipate a future where seamless data exchange is provided as a standard service, simplifying setup for research organizations.
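The core idea behind the federated approach in the list above, computing on data where it lives and sharing only aggregates, can be shown with a deliberately simple example. The sketch below calculates a pooled mean across three hypothetical sites without any patient-level value leaving a site. It is a toy aggregate rather than model training, and the site names, values, and helper functions are invented for illustration.

```python
# Hypothetical per-site measurements; in practice these never leave each site.
site_data = {
    "site_a": [5.0, 6.0, 7.0],
    "site_b": [4.0, 8.0],
    "site_c": [6.0],
}


def local_summary(values):
    """Runs inside a site: reduce patient-level values to two aggregate numbers."""
    return {"n": len(values), "total": sum(values)}


def combine(summaries):
    """Runs at the coordinator: sees only counts and totals, never raw rows."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["total"] for s in summaries)
    return total / n


summaries = [local_summary(values) for values in site_data.values()]
pooled_mean = combine(summaries)
print(summaries)
print(pooled_mean)
```

Federated model training follows the same pattern, with each site sending model updates instead of counts and totals. Very small sites can still leak information through aggregates, which is why real deployments typically add safeguards such as minimum cell sizes.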

Frequently Asked Questions about Clinical Trial Data Integration

Here are answers to common questions about clinical trial data integration.

How does data integration improve patient safety?

Clinical trial data integration improves patient safety by creating a unified, real-time view of all patient information. Instead of data being siloed in separate systems (labs, adverse events, wearables), medical monitors can see a complete picture at once. This allows them to spot concerning patterns much faster—for example, a combination of abnormal lab values and irregular heart rhythms from a wearable. This transforms safety monitoring from a reactive process to proactive patient protection, enabling immediate intervention when necessary.

What is the first step to implementing a data integration strategy?

The first step is strategic planning. Begin by defining your specific goals, such as speeding up database lock or improving safety monitoring. Next, conduct a thorough assessment of your data landscape by mapping all potential data sources, their formats, and update frequency. Finally, establish a cross-functional governance team with representatives from data management, biostatistics, clinical operations, and IT. This team will champion the initiative and ensure alignment on goals and standards. A few weeks of careful planning can save months of headaches later.

How does data integration support regulatory submissions?

Data integration is a game-changer for regulatory submissions. By bringing all trial data together and standardizing it to formats like CDISC throughout the study, you are essentially preparing for submission from day one. This provides several advantages:

- Traceability: Integrated systems provide clear audit trails, allowing regulators to easily trace data from its source to the final analysis.

- Consistency: Data is harmonized and validated continuously, reducing the likelihood of discrepancies and regulatory queries during the review process.

- Confidence: Demonstrating continuous data quality and safety monitoring throughout the trial builds confidence with regulatory reviewers about the reliability of your findings.

The result is faster submission preparation, fewer queries, and shorter timelines to regulatory approval.

Conclusion

The journey through clinical trial data integration reveals a fundamental shift from scattered information to unified, powerful insights. What was once a technical challenge has become the cornerstone of modern clinical research.

We’ve seen that with Phase III trials generating millions of data points, traditional data management is no longer viable. Delays cost millions of dollars and, more importantly, slow the delivery of life-saving treatments to patients.

Integration is essential. When data is unified, medical monitors can spot safety signals in real-time, regulatory submissions flow smoothly with CDISC standards, and AI can predict patient outcomes. The challenges of data silos and security are significant, but they are being overcome by standards like HL7 FHIR, technologies like AI, and approaches like federated learning.

The future is promising, with digital twins, synthetic control arms, and decentralized trials all depending on robust clinical trial data integration. These innovations are happening now, powered by platforms that manage complexity while ensuring security and compliance.

At Lifebit, we understand that behind every data point is a patient. Our next-generation federated AI platform transforms the challenge of integration into an opportunity for breakthrough findings. With our Trusted Research Environment and other platform solutions, we enable researchers worldwide to collaborate securely, analyze data in real-time, and accelerate the path from lab to patient.