How to Manage Pharma Data Without Losing Your Mind

Why Pharma Companies Are Drowning in Data They Can’t Use

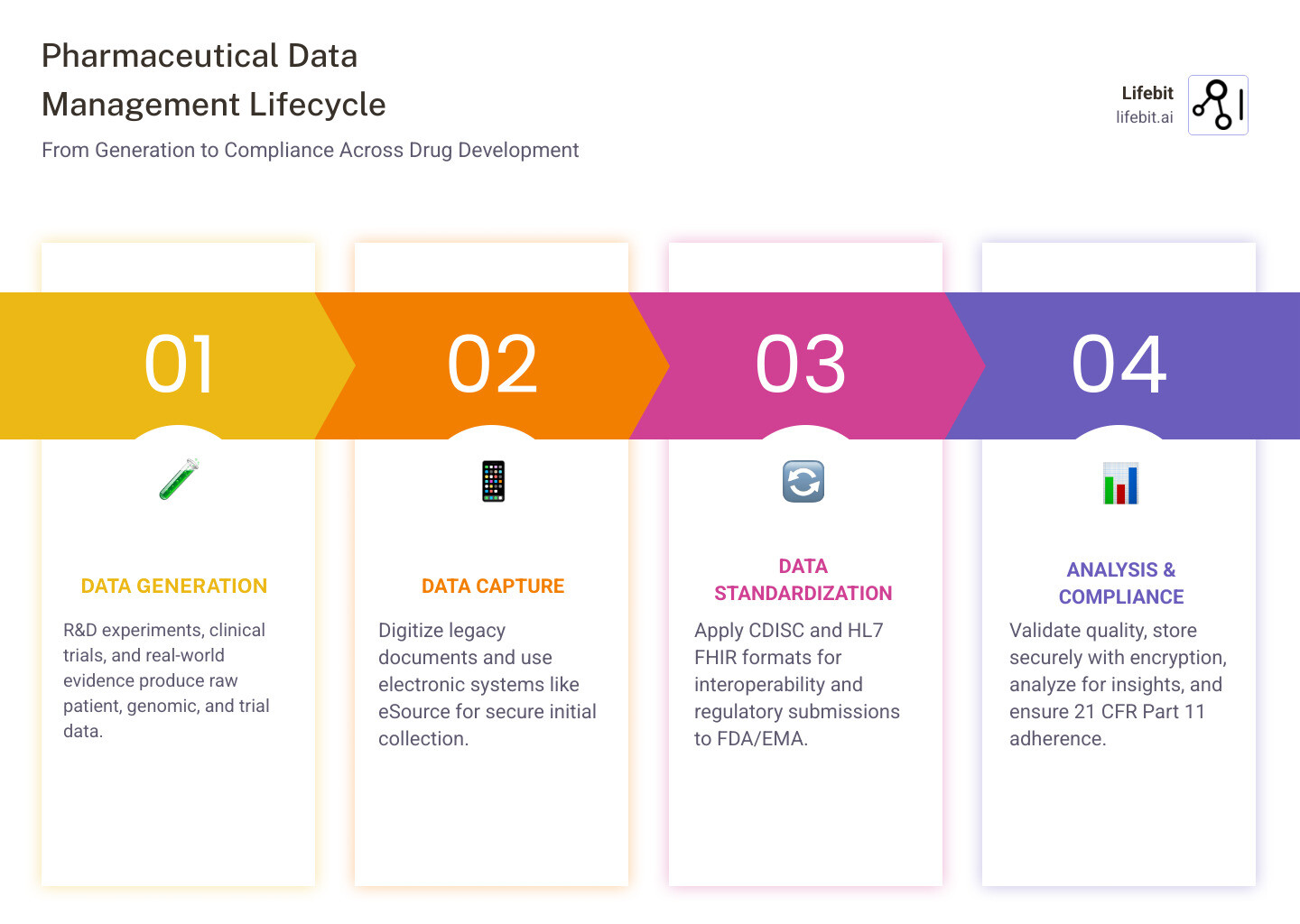

Pharma data management is the process of collecting, organizing, securing, and analyzing pharmaceutical data across the entire drug development lifecycle—from early R&D to post-market surveillance—while ensuring regulatory compliance and data integrity.

In the modern era, the volume of data generated by pharmaceutical companies has exploded. We are no longer just dealing with spreadsheets and lab notebooks; we are managing petabytes of genomic sequences, high-resolution imaging, and real-time sensor data from wearable devices. This “data deluge” is driven by the shift toward precision medicine and the increasing complexity of clinical trials.

What pharmaceutical companies need to manage:

- Clinical trial data: patient demographics, lab results, adverse events, endpoints, and increasingly, patient-reported outcomes (ePRO).

- Regulatory submissions: standardized formats (CDISC, HL7 FHIR) for FDA and EMA, including the Electronic Common Technical Document (eCTD).

- Master data: products, sponsors, facilities, supply chain, and reference data that must be consistent across global operations.

- Real-world evidence (RWE): EHR, insurance claims, genomics, and multi-omic datasets that provide insights into how drugs perform in the general population.

- Legacy data: physical documents, siloed sources, and “dark data”, which by some estimates consumes 52% of storage budgets while providing no value.

- Omics data: massive datasets from genomics, proteomics, and metabolomics that require specialized bioinformatic pipelines.

The core challenge: Most pharma organizations are data-rich but information-poor. They generate massive volumes of data but lack the systems to turn it into actionable insights for faster drug discovery, regulatory approval, and patient safety. This is often referred to as the DRIP (Data-Rich, Information-Poor) syndrome.

Here’s the uncomfortable truth: about 80% of scientific data becomes unavailable within 20 years—lost to outdated formats, unsecured platforms, or sheer neglect. Meanwhile, companies waste resources storing “dark data” that never gets analyzed, while critical information sits locked in silos across departments, geographies, and systems.

The pharmaceutical industry faces unique pressures that make data management particularly challenging. You’re dealing with sensitive patient information under strict regulations like HIPAA and GDPR. You need to comply with 21 CFR Part 11 for electronic records. You’re coordinating complex clinical trials across multiple sites. And you’re expected to accelerate drug development timelines while maintaining perfect data integrity.

To address these challenges, companies must adhere to the ALCOA+ principles, which state that data must be Attributable, Legible, Contemporaneous, Original, and Accurate, as well as Complete, Consistent, Enduring, and Available. Without a robust management framework, achieving ALCOA+ compliance at scale is virtually impossible.

The good news? Digital transformation tools can reverse this situation. Companies that implement robust data management report dramatic results, including up to a 70% reduction in the resources allocated to data acquisition and preparation, 20-25% productivity gains from better collaboration systems, and faster paths from discovery to market.

I’m Maria Chatzou Dunford, CEO and Co-founder of Lifebit, where we’ve spent years building federated platforms that help pharmaceutical organizations manage biomedical data across secure, compliant environments. Through my work in computational biology and genomics, I’ve seen how proper pharma data management transforms research speed, regulatory confidence, and patient outcomes.

Why 80% of Scientific Data Vanishes and How to Save Yours

It sounds like a horror movie plot, but it’s the reality of modern drug development. Research reported in Nature highlights that 80% of scientific data becomes unavailable in just two decades. This happens because data is often stored in fragile formats, on unencrypted mobile devices that get lost, or within siloed legacy systems that no one knows how to access anymore.

As researchers move on to new roles or companies, the “tribal knowledge” required to interpret old datasets vanishes. If a dataset isn’t properly metadata-tagged and stored in a centralized repository, it effectively ceases to exist for the organization. This leads to the “re-discovery” of information, where companies spend millions of dollars repeating experiments that have already been conducted.

When we talk about data loss, we aren’t just talking about a missing spreadsheet. We are talking about millions of dollars in R&D investment, years of patient contributions, and critical insights into drug safety that could prevent future clinical failures. To stop this “data decay,” we need to move beyond simple storage and toward comprehensive biopharma data integration.

The FAIR Principles: A Framework for Longevity

To combat data loss, the industry is increasingly adopting the FAIR Data Principles. These principles ensure that data is:

- Findable: Data and metadata should be easy to find for both humans and computers.

- Accessible: Once the user finds the required data, they need to know how it can be accessed, possibly including authentication and authorization.

- Interoperable: The data needs to integrate with other data. It also needs to interoperate with applications or workflows for analysis, storage, and processing.

- Reusable: Metadata and data should be well-described so that they can be replicated and/or combined in different settings.
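To make the four principles concrete, here is a minimal Python sketch of a metadata completeness check. The `DatasetMetadata` fields, the mapping of fields to principles, and the `fair_gaps` helper are hypothetical illustrations, not a formal FAIR metadata schema; a real repository would follow an established metadata standard for its domain.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    # Hypothetical minimal metadata record; field names are illustrative only.
    identifier: str = ""       # Findable: persistent, globally unique identifier
    title: str = ""            # Findable: human-readable description
    access_url: str = ""       # Accessible: where the data can be requested
    access_protocol: str = ""  # Accessible: protocol and authentication scheme
    vocabularies: list = field(default_factory=list)  # Interoperable: shared terminologies
    license: str = ""          # Reusable: terms under which the data may be reused
    provenance: str = ""       # Reusable: how and by whom the data was generated


# Which metadata fields support each FAIR principle (an assumption for this sketch).
FAIR_FIELDS = {
    "Findable": ["identifier", "title"],
    "Accessible": ["access_url", "access_protocol"],
    "Interoperable": ["vocabularies"],
    "Reusable": ["license", "provenance"],
}


def fair_gaps(meta: DatasetMetadata) -> dict:
    """Return, per FAIR principle, the metadata fields that are still empty."""
    gaps = {}
    for principle, names in FAIR_FIELDS.items():
        missing = [name for name in names if not getattr(meta, name)]
        if missing:
            gaps[principle] = missing
    return gaps


record = DatasetMetadata(
    identifier="urn:example:assay-017",  # placeholder identifier
    title="Liver panel results, Phase II study 017",
    vocabularies=["LOINC"],
)
print(fair_gaps(record))
# {'Accessible': ['access_url', 'access_protocol'], 'Reusable': ['license', 'provenance']}
```

Running a check like this when a dataset is registered catches gaps while the people who can fill them are still around.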

Turning Dark Data into Actionable Pharma Data Management Insights

“Dark data” is the information your company collects during its daily activities but fails to use for any meaningful purpose. In pharma, this often looks like abandoned drug development records, binders of lab notes, or sales data sitting in a drawer. Industry estimates suggest that 52% of a business’s storage budget is spent on this dark data, which means paying to store things you can’t even see.

To turn this around, we recommend a four-step process:

- Digitization: Converting legacy physical documents and unstructured files into searchable digital formats using OCR (Optical Character Recognition) and AI-driven extraction.

- Harmonization: Using consistent terminologies and abbreviations so that data from a study in 2005 can be compared to a study in 2025. This involves mapping local codes to international standards like SNOMED-CT or LOINC.

- Advanced Search Tools: Implementing custom search tools that allow researchers to query across different studies and data types. Imagine a scientist being able to ask, “Show me all adverse events related to liver toxicity across all Phase II trials in the last 10 years.”

- Knowledge Management: Creating a centralized, cloud-based platform where teams can brainstorm and share insights securely. This moves data from a passive asset to an active participant in the research process.
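Harmonization and search work together: once local codes map to a shared standard, a single query can span studies that used different names for the same test. The sketch below assumes two hypothetical studies whose liver-enzyme results were recorded under older (SGPT/SGOT) and European (ALAT/ASAT) abbreviations. The LOINC codes for ALT and AST are real, but any production mapping should be checked against the official LOINC release, and the 3x upper-limit-of-normal cutoff is illustrative. Because it queries lab values rather than adverse-event reports, this is a stand-in for the liver-toxicity question above, not a full safety search.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical local test codes from two studies, mapped to LOINC.
LOCAL_TO_LOINC = {
    ("STUDY_2005", "SGPT"): "1742-6",  # ALT, recorded under its older name
    ("STUDY_2005", "SGOT"): "1920-8",  # AST, older name
    ("STUDY_2025", "ALAT"): "1742-6",  # ALT, European abbreviation
    ("STUDY_2025", "ASAT"): "1920-8",  # AST, European abbreviation
}
LIVER_ENZYMES = {"1742-6", "1920-8"}


@dataclass
class LabResult:
    study: str
    phase: str
    year: int
    subject: str
    local_code: str
    value: float  # U/L
    uln: float    # upper limit of normal reported by the lab, U/L


def to_loinc(result: LabResult) -> Optional[str]:
    """Harmonize a local code; None means the code is unmapped and needs review."""
    return LOCAL_TO_LOINC.get((result.study, result.local_code))


def liver_signals(results, phase="II", since_year=2000, fold=3.0):
    """Cross-study query: liver enzymes above `fold` x ULN in trials of one phase."""
    return [
        (r.study, r.subject, to_loinc(r), round(r.value / r.uln, 1))
        for r in results
        if r.phase == phase
        and r.year >= since_year
        and to_loinc(r) in LIVER_ENZYMES
        and r.value > fold * r.uln
    ]


results = [
    LabResult("STUDY_2005", "II", 2005, "001", "SGPT", 160.0, 40.0),
    LabResult("STUDY_2005", "II", 2005, "002", "SGOT", 35.0, 40.0),
    LabResult("STUDY_2025", "II", 2025, "101", "ALAT", 130.0, 41.0),
    LabResult("STUDY_2025", "II", 2025, "102", "GLU", 250.0, 99.0),
]
print(liver_signals(results))
# [('STUDY_2005', '001', '1742-6', 4.0), ('STUDY_2025', '101', '1742-6', 3.2)]
```

Passing a later `since_year` narrows the search to a recent window, which is how a question like “the last 10 years” would translate.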

By shedding light on this dark data, companies can identify production bottlenecks, optimize formulations, and even revive promising compounds that were shelved for the wrong reasons, such as a lack of statistical power in a small trial that could be corrected by pooling data from multiple sources.
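The pooling argument can be made concrete with inverse-variance fixed-effect meta-analysis, a standard technique for combining per-study estimates (the article does not prescribe a specific method). The sketch assumes each hypothetical trial reports a log odds ratio and its standard error, and that the trials estimate a common underlying effect; heterogeneous studies would call for a random-effects model, and any pooled analysis should be pre-specified.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance fixed-effect pooling: weight each study by 1 / SE^2."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled_se


# Hypothetical log odds ratios and standard errors from three small trials.
log_ors = [-0.35, -0.45, -0.40]
ses = [0.30, 0.35, 0.25]

for est, se in zip(log_ors, ses):
    print(f"single trial: z = {est / se:.2f}")  # none reaches |z| > 1.96

pooled, pooled_se = pool_fixed_effect(log_ors, ses)
print(f"pooled: z = {pooled / pooled_se:.2f}")  # about -2.35
```

Each trial alone falls short of conventional significance, while the pooled estimate clears that threshold because its standard error is smaller.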

Building a Robust Clinical Data Management (CDM) System

Clinical Data Management (CDM) is the “high-test fuel” that runs the drug development engine. Without high-quality, statistically interpretable data, the FDA or EMA will never approve your drug, no matter how effective it is. A robust CDM system ensures that the data collected from clinical trials is accurate, complete, and reliable.

The shift from paper to electronic systems has been a game-changer for Pharma data management. While some companies initially resisted due to cost or connectivity issues, the benefits of Electronic Data Capture (EDC) are undeniable. Modern CDM systems now integrate with eSource, Laboratory Information Management Systems (LIMS), and even wearable devices to provide a holistic view of the patient journey.

The Clinical Data Management Lifecycle

A successful CDM process follows a rigorous lifecycle to ensure data quality:

- Protocol Review and Design: Data managers review the clinical protocol to identify what data needs to be collected and design the Case Report Forms (CRFs).

- Database Build and Validation: The electronic database is created, and edit checks (automated logic tests) are programmed to catch errors during data entry.

- Data Entry and Cleaning: Data is entered into the system, and discrepancies are flagged. Data managers issue “queries” to clinical sites to resolve these issues.

- Medical Coding: Verbatim terms for adverse events and concomitant medications are coded using standardized dictionaries (MedDRA, WHO-Drug).

- Data Reconciliation: Ensuring that data from external sources (like central labs or SAE databases) matches the clinical database.

- Database Lock: Once the data is deemed clean and complete, the database is locked, and no further changes can be made. This data is then used for statistical analysis.
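Edit checks from the database-build step are, at heart, small validation rules that turn failures into queries. Here is a minimal Python sketch; the field names, plausible ranges, and the `Query` structure are hypothetical, and a production EDC system would define checks in its own configuration rather than in ad hoc code.

```python
from dataclasses import dataclass


@dataclass
class Query:
    subject: str
    field: str
    message: str


# Hypothetical edit-check specification for one CRF page.
REQUIRED = ["subject", "visit_date", "systolic_bp"]
RANGE_CHECKS = {
    "systolic_bp": (60, 250),  # mmHg, plausible range
    "weight_kg": (30, 250),    # kg
}


def run_edit_checks(crf: dict) -> list:
    """Return one query per failed check; an empty list means the page is clean."""
    subject = crf.get("subject", "<unknown>")
    queries = []
    for name in REQUIRED:
        if crf.get(name) in (None, ""):
            queries.append(Query(subject, name, "Required field is missing"))
    for name, (low, high) in RANGE_CHECKS.items():
        value = crf.get(name)
        if value is not None and not low <= value <= high:
            queries.append(Query(subject, name, f"Value {value} outside expected range {low}-{high}"))
    return queries


crf = {"subject": "1001", "visit_date": "", "systolic_bp": 1200, "weight_kg": 72}
for query in run_edit_checks(crf):
    print(query)
```

The out-of-range systolic value is the kind of transcription slip (1200 for 120) that would otherwise surface only during retrospective cleaning.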

The Compliance Framework: 21 CFR Part 11 and ICH E6

Compliance isn’t just a checkbox; it’s the foundation of trust. Key regulations include:

- 21 CFR Part 11: This FDA regulation sets the criteria under which electronic records and electronic signatures are considered trustworthy and equivalent to paper records. It requires strict access controls, audit trails, and system validation. If you cannot prove who changed a data point and why, your entire submission is at risk.

- ICH E6 (GCP): These international ethical and scientific quality standards ensure that trials are conducted safely and the results are credible. The recent R2 and R3 updates emphasize a risk-based approach to data management.
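Part 11 calls for secure, computer-generated, time-stamped audit trails whose entries do not obscure previously recorded information. One common way to make such a log tamper-evident is to chain each entry to the hash of the previous one. The sketch below is a hedged illustration of that idea, not a validated Part 11 system: the `AuditTrail` class and its fields are hypothetical, and a real implementation would also need access control, secure storage, and system validation.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Hypothetical append-only, hash-chained log of who changed what, when, and why."""

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user, field, old, new, reason):
        body = {
            "user": user,
            "field": field,
            "old": old,
            "new": new,
            "reason": reason,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS,
        }
        self._entries.append({**body, "hash": self._digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("jdoe", "systolic_bp", 1200, 120, "Transcription error, checked against source")
trail.record("asmith", "weight_kg", 72, 74, "Re-measured at visit")
print(trail.verify())  # True

trail._entries[0]["new"] = 999  # simulate a silent edit
print(trail.verify())  # False
```

On its own, chaining does not detect removal of the newest entries; anchoring the latest hash somewhere outside the log is a common complement.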

| Feature | Paper-Based Systems | Electronic Data Capture (EDC) |
|---|---|---|
| Data Entry | Manual, prone to transcription errors | Direct, with real-time validation |
| Security | Physical locks, hard to track access | Encryption, unique access codes |
| Audit Trail | Manual “strike-throughs” and initials | Automatic, permanent timestamped logs |
| Speed | Slow, requires physical transport | Instant, enabling near real-time review |
| Data Quality | Retrospective cleaning | Proactive, real-time error catching |

Maintaining this level of control requires sophisticated clinical data governance to ensure that the “noise” is removed without losing the “signal” of the research. For more technical depth, researchers can explore how modern Quality Management Systems help ensure data integrity in pharma.

Essential Quality Checks for Pharma Data Management Systems

To keep your data “clean,” you need more than just a database; you need a process. This includes:

- Data Standardization: Using global standards like CDISC (Clinical Data Interchange Standards Consortium) and HL7 FHIR (Fast Healthcare Interoperability Resources). CDISC standards like SDTM (Study Data Tabulation Model) and ADaM (Analysis Data Model) are required by the FDA for the study data in most new drug and biologics submissions.

- Medical Coding: Converting unstructured text (like a doctor’s note saying “patient has blisters”) into standardized terms using dictionaries like MedDRA (for adverse events) or WHO-Drug (for medications). This allows for cross-study safety analysis.

- Discrepancy Management: Identifying and flagging inconsistencies (e.g., a male patient listed as pregnant) and resolving them through a traceable query process. This also includes identifying “outliers” that might indicate fraud or equipment failure at a specific clinical site.
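Two of the discrepancy checks above, a cross-field consistency rule and a site-level outlier screen, can be sketched in a few lines of Python. The record layout and site values are hypothetical, and the outlier screen uses the median-absolute-deviation “modified z-score” with the commonly cited 3.5 cutoff, which is one reasonable choice among several. A flagged site is a prompt for review, not evidence of fraud.

```python
import statistics


def impossible_pregnancies(records):
    """Cross-field check: flag subjects recorded as male and pregnant."""
    return [r["subject"] for r in records if r["sex"] == "M" and r.get("pregnant") is True]


def outlier_sites(site_means, cutoff=3.5):
    """Flag sites whose mean differs sharply from the others (modified z-score on the MAD)."""
    values = list(site_means.values())
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # at least half the sites share the median value, so the MAD cannot rank them
    return [site for site, v in site_means.items() if abs(0.6745 * (v - med) / mad) > cutoff]


# Hypothetical subject records.
records = [
    {"subject": "2001", "sex": "M", "pregnant": True},
    {"subject": "2002", "sex": "F", "pregnant": True},
    {"subject": "2003", "sex": "M", "pregnant": False},
]
print(impossible_pregnancies(records))  # ['2001']

# Hypothetical mean systolic blood pressure (mmHg) reported by each site.
site_means = {"S01": 128.0, "S02": 131.5, "S03": 126.9, "S04": 130.2, "S05": 129.4, "S06": 101.0}
print(outlier_sites(site_means))  # ['S06']
```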

Master Data Management (MDM): The Secret to 25% Higher Productivity

If CDM is about the “depth” of a single trial, Master Data Management (MDM) is about the “breadth” of the entire organization. MDM is the practice of creating a single, “golden” version of truth for critical data points like customer (HCP) profiles, product lists, and facility locations.

In many pharma companies, data is fragmented across departments. The R&D team might use one ID for a chemical compound, while the manufacturing team uses another, and the commercial team uses a third. This lack of a “common language” leads to massive inefficiencies. MDM solves this by creating a centralized hub that synchronizes data across all systems.

When your data is siloed, your marketing team might have one address for a doctor, while your clinical team has another. This leads to wasted effort and missed opportunities. By implementing a strong enterprise data platform, companies can bridge these silos.
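A golden record is typically assembled with survivorship rules that decide which source wins for each attribute. The Python sketch below uses one simple rule, where the most recent non-empty value wins, and assumes the harder step, matching records that refer to the same healthcare professional, has already produced a shared `hcp_id`. The source-system names and records are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SourceRecord:
    system: str              # hypothetical source system, e.g. "CRM" or "CTMS"
    hcp_id: str              # shared ID, assumed to come from an earlier matching step
    name: Optional[str]
    address: Optional[str]
    updated: date


def golden_records(records):
    """Survivorship: for each attribute, keep the newest non-empty value per HCP."""
    merged = defaultdict(dict)
    for rec in sorted(records, key=lambda r: r.updated):  # oldest first
        for attr in ("name", "address"):
            value = getattr(rec, attr)
            if value:
                merged[rec.hcp_id][attr] = value  # newer records overwrite older ones
    return dict(merged)


records = [
    SourceRecord("CRM", "HCP-42", "Dr. A. Rivera", "12 Old Street", date(2021, 3, 1)),
    SourceRecord("CTMS", "HCP-42", "Dr. Ana Rivera", "5 New Avenue", date(2024, 6, 15)),
    SourceRecord("MEDINFO", "HCP-42", "Dr. Ana Rivera", None, date(2025, 1, 10)),
]
print(golden_records(records))
# {'HCP-42': {'name': 'Dr. Ana Rivera', 'address': '5 New Avenue'}}
```

Other rules, such as preferring a designated system of record for each attribute, are equally common; the choice is a governance decision as much as a technical one.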

Benefits of MDM include:

- 20-25% Productivity Boost: Industry estimates put the productivity gains from social collaboration technologies and integrated data management at 20-25%, because teams collaborate faster and find information without digging through redundant databases. Researchers spend less time “cleaning” data and more time analyzing it.

- Better Decision-Making: Executives get a holistic view of the company’s performance across five continents. For example, they can track the global inventory of a specific API (Active Pharmaceutical Ingredient) in real time.

- Customer Intelligence: By mastering customer data, pharma companies can tailor their omnichannel engagement, ensuring the right medical information reaches the right healthcare professional at the right time. This is critical for launching new therapies in a crowded market.

- Supply Chain Resilience: MDM allows companies to track raw materials from the supplier to the finished product. In the event of a recall, a robust MDM system can identify affected batches in minutes rather than weeks.

- Regulatory Agility: When regulators ask for a list of all facilities involved in the production of a biologic, MDM provides an instant, accurate answer, reducing the risk of non-compliance.
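The recall scenario is essentially a graph traversal over batch genealogy: starting from a suspect raw-material lot, follow every “was used to make” link downstream. A minimal Python sketch follows; the lot identifiers and the genealogy (raw material, then API batch, then drug-product batch) are hypothetical.

```python
from collections import deque

# Hypothetical genealogy: each lot maps to the lots that were made from it.
GENEALOGY = {
    "RM-LOT-7": ["API-B12", "API-B13"],  # raw material lot -> API batches
    "RM-LOT-8": ["API-B14"],
    "API-B12": ["DP-2201", "DP-2202"],   # API batch -> drug product batches
    "API-B13": ["DP-2203"],
    "API-B14": ["DP-2204"],
}


def affected_lots(genealogy, suspect):
    """Breadth-first search downstream from a suspect lot; returns every affected lot."""
    seen = {suspect}
    queue = deque([suspect])
    while queue:
        lot = queue.popleft()
        for child in genealogy.get(lot, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    seen.discard(suspect)
    return sorted(seen)


print(affected_lots(GENEALOGY, "RM-LOT-7"))
# ['API-B12', 'API-B13', 'DP-2201', 'DP-2202', 'DP-2203']
```

The `seen` set keeps the search from revisiting lots when a batch draws on several upstream lots. The traversal is only this quick when master data keeps lot identifiers consistent across systems.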

Future-Proofing Pharma Data Management with AI and eSource

The future of Pharma data management isn’t just about storing data; it’s about making that data work for you. We are seeing a massive shift toward eSource, where data is captured directly from the source (like an EHR or a wearable device) into the clinical database, eliminating the need for manual transcription. This reduces errors and allows for “decentralized clinical trials” (DCTs), where patients can participate from the comfort of their homes.
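In an eSource pipeline, a device reading is captured as a structured record rather than retyped. As a hedged illustration, the Python sketch below builds a minimal HL7 FHIR R4 `Observation` for a wearable heart-rate reading, using the LOINC code for heart rate (8867-4) and UCUM units. The helper name and patient ID are hypothetical, the resource has not been validated against the FHIR vital-signs profile, and how it reaches the clinical database depends on the EDC integration in use.

```python
import json
from datetime import datetime, timezone


def heart_rate_observation(patient_id: str, bpm: float, measured_at: datetime) -> dict:
    """Hypothetical helper: build a minimal FHIR R4 Observation for one heart-rate reading."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/observation-category",
            "code": "vital-signs",
        }]}],
        "code": {"coding": [{
            "system": "http://loinc.org",
            "code": "8867-4",
            "display": "Heart rate",
        }]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": measured_at.isoformat(),
        "valueQuantity": {
            "value": bpm,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",  # UCUM
            "code": "/min",
        },
    }


# "example-123" is a placeholder patient ID.
obs = heart_rate_observation("example-123", 72, datetime(2025, 3, 1, 8, 30, tzinfo=timezone.utc))
print(json.dumps(obs, indent=2))
```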

At Lifebit, we are pioneering the use of AI-enabled data governance to help organizations navigate this complexity. AI is no longer a futuristic concept; it is a practical tool for managing the scale of modern biomedical data.

How AI is Transforming Data Management:

- Predict Safety Risks: By analyzing real-time data streams from clinical trials and social media, AI can identify potential adverse events before they become widespread issues. This “pharmacovigilance 2.0” is essential for patient safety.

- Automate Data Cleaning: Machine learning algorithms can spot patterns of errors that a human might miss, such as subtle inconsistencies in lab results across different sites. This significantly speeds up the time to database lock.

- Natural Language Processing (NLP): AI can read through thousands of pages of unstructured clinical notes or medical literature to extract relevant data points, turning “dark data” into structured insights.

- Support Federated Research: This is perhaps the most significant breakthrough. Our federated AI platform allows researchers to access and analyze data across different institutions (like hospitals or biobanks) without the data ever leaving its original, secure environment. This is critical for meeting strict GDPR and HIPAA requirements while still enabling global collaboration.
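Automated safety-signal detection builds on disproportionality statistics that pharmacovigilance teams have long applied to spontaneous-report databases, and these can serve as a baseline for AI methods. The Python sketch below computes one such statistic, the proportional reporting ratio (PRR), with a 95% confidence interval. It is a classical method rather than machine learning, the report counts are hypothetical, and the flagging rule (at least 3 cases and a lower confidence bound above 1) is one common screening heuristic, not a regulatory threshold.

```python
import math


def prr(a, b, c, d):
    """Proportional reporting ratio from a 2x2 table of spontaneous reports.

    a: drug of interest, event of interest    b: drug of interest, all other events
    c: all other drugs, event of interest     d: all other drugs, all other events
    """
    ratio = (a / (a + b)) / (c / (c + d))
    se_log = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))  # SE of ln(PRR)
    lower = math.exp(math.log(ratio) - 1.96 * se_log)
    upper = math.exp(math.log(ratio) + 1.96 * se_log)
    return ratio, lower, upper


def is_signal(a, b, c, d, min_cases=3):
    """Screening heuristic: enough cases and a lower 95% bound above 1."""
    _, lower, _ = prr(a, b, c, d)
    return a >= min_cases and lower > 1.0


# Hypothetical counts: 20 of 500 reports for the drug mention liver injury,
# versus 200 of 19,500 reports for all other drugs.
ratio, lower, upper = prr(20, 480, 200, 19_300)
print(f"PRR = {ratio:.2f} (95% CI {lower:.2f} to {upper:.2f})")
print(is_signal(20, 480, 200, 19_300))  # True
```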

The Rise of Federated Learning

In traditional data management, you have to move data to a central location to analyze it. This is slow, expensive, and creates security risks. With Federated Learning, the AI model goes to the data. The data stays behind the institution’s firewall, and only the “insights” (model weights) are shared. This allows pharma companies to collaborate on rare disease research or large-scale genomic studies without compromising patient privacy or intellectual property.
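The “model goes to the data” pattern can be illustrated with federated averaging (FedAvg), a widely used federated-learning algorithm: each site trains on its own records and shares only updated parameters, and a coordinator averages them weighted by site size. The Python sketch below fits a toy one-variable linear model on synthetic data for three hypothetical sites; real deployments use richer models and typically add protections such as secure aggregation, since shared weights can still leak information.

```python
import random


def local_update(weights, data, lr=0.1, epochs=20):
    """Gradient descent on one site's data for y = w*x + b (mean squared error).

    Runs where the data lives; only the updated (w, b) is returned."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_average(site_datasets, rounds=50):
    """Coordinator loop: broadcast weights, collect local updates, average by site size."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in site_datasets)
    for _ in range(rounds):
        updates = [(local_update((w, b), d), len(d)) for d in site_datasets]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b


# Synthetic data for three hypothetical sites, all drawn from y = 2x + 1 plus noise.
rng = random.Random(0)


def make_site(n):
    return [(x, 2 * x + 1 + rng.gauss(0, 0.1)) for x in (rng.random() for _ in range(n))]


sites = [make_site(n) for n in (30, 50, 20)]
w, b = federated_average(sites)
print(f"w = {w:.2f}, b = {b:.2f}")  # close to the true values 2 and 1
```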

As we move toward 2030, the integration of Digital Twins—virtual representations of patients or manufacturing processes—will further rely on flawless data management. These twins allow researchers to simulate drug effects in a virtual environment before ever dosing a human subject, potentially saving years of development time.

Frequently Asked Questions about Pharma Data Management

What is the “Data-Rich, Information-Poor” (DRIP) situation?

The DRIP situation describes organizations that have massive amounts of data but lack the processes, tools, or harmonization to turn that data into valuable information. In pharma, this often leads to “reinventing the wheel” because researchers don’t know that the data they need already exists elsewhere in the company. It is estimated that scientists spend up to 80% of their time just finding and preparing data, leaving only 20% for actual analysis.

How does 21 CFR Part 11 ensure data security?

It ensures security by requiring technical controls like audit trails (which record who did what and when), authority checks (to ensure only authorized users can access sensitive data), and operational system checks (to ensure the sequence of steps is followed). It essentially makes electronic data as legally binding and traceable as a signed paper document. It also requires that systems are validated to ensure they perform consistently as intended.
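Authority checks and operational system checks can be pictured as two guards on every state change. In the hedged Python sketch below, the record states, roles, and the `RecordWorkflow` class are hypothetical; a validated system would enforce the same kind of rules inside the application and record every attempt in its audit trail.

```python
class ComplianceError(Exception):
    """Raised when a sequencing or authority check blocks an action."""


# Hypothetical permitted sequence of steps (operational system check).
NEXT_STEP = {"entered": "verified", "verified": "signed", "signed": "locked"}
# Hypothetical roles allowed to perform each step (authority check).
AUTHORIZED = {"verified": {"monitor"}, "signed": {"investigator"}, "locked": {"data_manager"}}


class RecordWorkflow:
    def __init__(self):
        self.state = "entered"

    def advance(self, target: str, role: str) -> None:
        if NEXT_STEP.get(self.state) != target:
            raise ComplianceError(f"sequence check: {self.state} -> {target} is not permitted")
        if role not in AUTHORIZED[target]:
            raise ComplianceError(f"authority check: {role!r} may not move a record to {target}")
        self.state = target


record = RecordWorkflow()
record.advance("verified", "monitor")
try:
    record.advance("locked", "data_manager")  # tries to skip the signature step
except ComplianceError as err:
    print(err)  # sequence check: verified -> locked is not permitted
```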

Why is data standardization critical for drug discovery?

Without standards like CDISC, every study would use different names for the same thing (e.g., “Heart Attack” vs. “Myocardial Infarction”). Standardization allows researchers to combine datasets from multiple studies to find rare patterns, helps regulators review submissions faster, and reduces the risk of errors during data transfer. It is the foundation of interoperability in the healthcare ecosystem.
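At the dataset level, standardization means mapping each study’s raw conventions onto the same variables and controlled terms. The Python sketch below maps hypothetical raw demographics records onto a small subset of the CDISC SDTM Demographics (DM) domain. The raw field names are assumptions, and building `USUBJID` from the study, site, and subject identifiers joined by hyphens is a common convention rather than a requirement; the SDTM Implementation Guide and CDISC Controlled Terminology remain the authoritative references.

```python
# Raw sex/gender values seen across hypothetical legacy studies, mapped to
# CDISC controlled terminology for SEX (M, F, U).
SEX_TERMS = {"male": "M", "m": "M", "female": "F", "f": "F"}


def to_sdtm_dm(raw: dict, studyid: str) -> dict:
    """Map one raw demographics record onto a subset of SDTM DM variables."""
    # "U" (unknown) for unmapped values; in practice this would also raise a query.
    sex = SEX_TERMS.get(str(raw["gender"]).strip().lower(), "U")
    return {
        "STUDYID": studyid,
        "DOMAIN": "DM",
        "USUBJID": f"{studyid}-{raw['site']}-{raw['patient_no']}",
        "SUBJID": raw["patient_no"],
        "SITEID": raw["site"],
        "AGE": int(raw["age"]),
        "AGEU": "YEARS",
        "SEX": sex,
    }


# Two hypothetical studies that recorded the same facts differently.
study_a = {"site": "014", "patient_no": "0007", "age": "54", "gender": "Male"}
study_b = {"site": "203", "patient_no": "1182", "age": 61, "gender": " f "}
for raw, studyid in [(study_a, "ABC-101"), (study_b, "XYZ-202")]:
    print(to_sdtm_dm(raw, studyid))
```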

What is the difference between CDM and MDM?

Clinical Data Management (CDM) focuses on the data within a specific clinical trial, ensuring its accuracy for regulatory approval. Master Data Management (MDM) focuses on the core data entities across the entire enterprise (like products, employees, and locations) to ensure consistency across all business units and systems.

How does GDPR impact pharma data management?

GDPR (General Data Protection Regulation) requires that patient data be handled with extreme care, emphasizing “privacy by design.” It gives patients the right to know how their data is being used and the right to be forgotten. For pharma companies, this means implementing robust encryption, anonymization, and often using federated data models to avoid moving sensitive data across borders.
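One building block behind “privacy by design” is pseudonymization: replacing direct identifiers with values that cannot be traced back without separately held information. A keyed hash such as HMAC-SHA256 is a common way to do this, sketched below in Python. Under GDPR, pseudonymized data is still personal data; only properly anonymized data falls outside the regulation. The key shown is a hypothetical placeholder, and in practice it would live in a secrets manager, separate from the data.

```python
import hashlib
import hmac


def pseudonymize(patient_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so records from different
    sources can still be linked; without the key, the mapping cannot be recomputed
    by simply hashing candidate IDs."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


KEY = b"placeholder-key-load-from-a-secrets-manager"  # hypothetical; never hard-code a real key

print(pseudonymize("MRN-0042", KEY))
```

A plain, unkeyed hash of an ID is a weaker choice, because identifiers such as record numbers come from a small, guessable space.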

What is eSource in clinical trials?

eSource refers to the electronic capture of initial clinical findings. Instead of writing data on a paper chart and then typing it into a computer, the data is entered directly into a digital system (like an iPad or an EHR). This eliminates transcription errors and provides real-time access to trial data for monitors and sponsors.

Conclusion

Managing pharmaceutical data doesn’t have to be a nightmare. By moving away from siloed legacy systems and embracing digital transformation, you can protect your R&D investment and accelerate the delivery of life-saving treatments to patients.

The path forward involves three key pillars: integrity (ensuring your data is ALCOA+ compliant), integration (breaking down silos through MDM and harmonization), and innovation (leveraging AI and federated governance).

As the industry moves toward more complex modalities like cell and gene therapies, the ability to manage and interpret multi-omic data will become a primary competitive advantage. Companies that master their data today will be the ones leading the breakthroughs of tomorrow.

At Lifebit, we provide the next-generation federated AI platform that makes this possible. We help you access global biomedical data securely, harmonize it for real-time insights, and ensure that your research is always compliant, no matter where in the world your data sits.

Ready to stop drowning in data and start driving breakthroughs?