From Silos to Synergy: Mastering Biopharma Data Integration


The Data Crisis: 97% of Healthcare Data Goes Unused, and Here’s How to Fix It

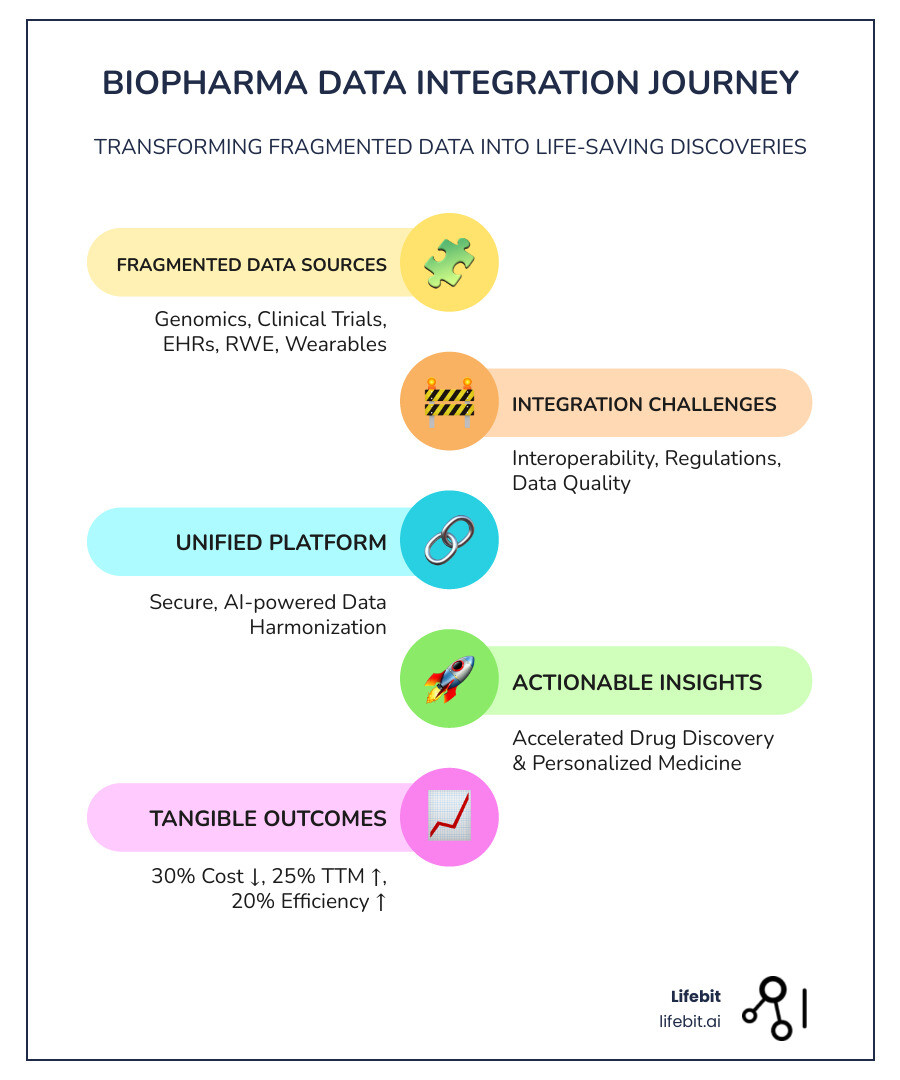

Biopharma data integration is the process of connecting and harmonizing diverse datasets, from genomics and clinical trials to electronic health records and real-world evidence, into a unified, actionable view that accelerates drug discovery, improves patient outcomes, and supports regulatory compliance.

What you need to know:

- The Problem: An astonishing 97% of healthcare data goes unused, and over 70% of pharma research data searches end in failure due to fragmented systems and data silos.

- The Solution: Modern data integration platforms use AI, cloud technology, and federated architectures to connect disparate data sources without moving sensitive information.

- The Impact: Organizations implementing integrated workflows have reported a 30% reduction in development costs, 25% faster time to market, and a 20% increase in operational efficiency.

- Key Technologies: Cloud platforms, AI/ML for harmonization, interoperability standards (FHIR, OMOP), and federated learning enable secure, compliant data access at scale.

The biopharma industry generates more data than ever before. Phase III clinical trials now produce 3.6 million data points on average, three times as many as a decade ago. Add genomic sequencing, proteomics, wearable devices, and electronic health records, and you have a data deluge that should be powering breakthrough discoveries.

Instead, most of it sits locked away in silos.

Legacy systems can’t talk to each other. Data formats clash. Regulatory frameworks like GDPR and HIPAA create walls around sensitive information. The result? Researchers waste months hunting for data that already exists. Critical insights stay hidden. Drug development slows to a crawl.

This isn’t just an IT problem—it’s a patient problem. Every day lost to data fragmentation is a day that life-saving treatments stay out of reach.

The good news? The technology to fix this exists today. Cloud platforms, AI-powered harmonization tools, and federated architectures can connect your data without compromising security or compliance. The challenge is knowing how to build a strategy that works for your organization’s unique needs.

I’m Maria Chatzou Dunford, CEO of Lifebit. We build federated platforms for secure biopharma data integration across genomics, clinical, and real-world evidence. This guide will show you how to transform your fragmented data into a strategic asset that accelerates discovery and improves patient outcomes.

Unlock Faster Cures and Cut Costs: The ROI of Integrated Data

Here’s the truth: biopharma data integration pays for itself—and then some.

When you break down data silos and connect your genomic databases, clinical trial systems, and real-world evidence sources, you’re not just tidying up your IT infrastructure. You’re fundamentally changing how fast you can bring therapies to patients and how much it costs to get there.

The numbers tell the story. Industry reports show that organizations integrating their workflows have achieved a 30% reduction in development costs, a 25% faster time to market, and a 20% boost in operational efficiency. That’s not abstract ROI: it’s real money staying in your budget, real months shaved off development timelines, and real patients getting treatments sooner.

Accelerated drug discovery is just the beginning. When your data works together, you can optimize clinical trials by identifying the right patient populations faster, advance personalized medicine by matching treatments to individual genetic profiles, and strengthen pharmacovigilance by spotting safety signals earlier across multiple data sources.
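One classical way to spot a safety signal across pooled sources is disproportionality analysis. The minimal Python sketch below computes the proportional reporting ratio (PRR), the rate of an event among reports for a drug divided by its rate among reports for all other drugs, after summing 2×2 report counts from several sources. The source names and counts are hypothetical, and simple summation assumes reports have already been deduplicated across sources; real pharmacovigilance workflows add further statistical criteria and clinical review on top of a PRR.

```python
from dataclasses import dataclass


@dataclass
class ReportCounts:
    """2x2 contingency counts for one drug-event pair from one data source."""
    drug_event: int   # a: reports with the drug AND the event
    drug_other: int   # b: reports with the drug, other events
    other_event: int  # c: reports with other drugs AND the event
    other_other: int  # d: reports with other drugs, other events


def pool(sources: list[ReportCounts]) -> ReportCounts:
    """Sum counts across sources (assumes duplicate reports were already removed)."""
    return ReportCounts(
        drug_event=sum(s.drug_event for s in sources),
        drug_other=sum(s.drug_other for s in sources),
        other_event=sum(s.other_event for s in sources),
        other_other=sum(s.other_other for s in sources),
    )


def prr(c: ReportCounts) -> float:
    """PRR = [a / (a + b)] / [c / (c + d)]."""
    drug_rate = c.drug_event / (c.drug_event + c.drug_other)
    background_rate = c.other_event / (c.other_event + c.other_other)
    return drug_rate / background_rate


# Hypothetical counts for the same drug-event pair from two sources.
trial_db = ReportCounts(drug_event=4, drug_other=96, other_event=10, other_other=990)
ehr_feed = ReportCounts(drug_event=6, drug_other=94, other_event=20, other_other=980)

pooled = pool([trial_db, ehr_feed])
print(round(prr(pooled), 2))  # → 3.33
```

A PRR well above 1 means the event is reported disproportionately often with the drug; on its own it flags a hypothesis for expert review, not a confirmed causal effect.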

Crucially, you can improve real-world evidence utilization. Connecting what happens in controlled trials with what happens in actual patient care—data from wearables, electronic health records, patient registries—gives you the complete picture of how treatments perform in the real world.

From Data to Discovery: Speeding Up Drug Development

Drug development is painfully slow and expensive. The average new drug takes over a decade and costs billions to bring to market. Biopharma data integration changes that equation.

By combining genomic sequencing data with proteomic information and existing drug libraries, you can fast-track target identification. Instead of spending years hunting for promising molecular targets, AI algorithms trained on integrated datasets can pinpoint candidates in a fraction of the time.

The proof is in the numbers. Clinical drug trials using AI to find, design, or repurpose molecules jumped from 27 in 2021 to 67 in 2023. That surge reflects, in part, researchers’ growing access to the massive, harmonized datasets that AI needs to work its magic.

Integrated data also accelerates biomarker discovery. Analyzing genetic markers alongside patient outcomes and treatment responses helps identify which biological signals actually matter for understanding disease progression and predicting drug response.

AI-driven molecule design becomes possible when you feed algorithms comprehensive data about molecular structures, biological pathways, and clinical outcomes. Researchers can use predictive models to design compounds with a much higher probability of success, moving beyond traditional trial-and-error.

Changing Patient Care with Personalized Medicine

The one-size-fits-all approach to medicine is dying. Biopharma data integration is what makes personalized medicine actually work. When you connect patient health records with omics data—genomics, proteomics, metabolomics—and layer in real-world data, you can see which patients will respond to which treatments.

This is patient stratification, and it’s revolutionizing drug development. Instead of giving the same chemotherapy to every cancer patient, you can identify subgroups based on genetic markers and biomarker profiles. Before treatment starts, you can estimate who’s likely to respond and who might experience serious adverse effects.

Personalized treatments become possible with this level of insight. Oncology is leading the way, with integrated data from tumor registries and genetic screenings helping doctors find biomarkers that predict drug response. This means patients get therapies tailored to their specific cancer’s genetic profile.

Predictive modeling takes it even further. By integrating patient health records with omics data and applying machine learning, you can forecast an individual’s response to a drug before they take the first dose. This allows for optimized treatment plans and helps avoid medications that won’t work for their particular biology.

The 4 Data Problems Blocking Your R&D Pipeline

Even with all the promise of biopharma data integration, the path forward is blocked by real, frustrating obstacles that cost your organization time, money, and potentially life-saving discoveries.

We’ve worked with dozens of biopharma organizations, and the same four challenges come up again and again: data diversity, interoperability, regulatory compliance, and data security. These aren’t just technical hiccups. They’re fundamental roadblocks that prevent brilliant researchers from accessing the insights they need.

The good news? Once you understand these problems, you can solve them. Let’s break down what you’re really up against.

Taming the Data Beast: Diversity, Volume, and Velocity

Imagine trying to make sense of a conversation where one person speaks English, another speaks binary code, and a third is singing opera. That’s what your data systems are trying to do every day.

Biopharma data integration must handle an incredible mix of formats. You have structured clinical trial data, complex multi-omics datasets (genomics, proteomics), and unstructured data like physicians’ notes, scientific literature, and lab reports.

Now layer on the sheer volume. Clinical trials generate 3.6 million data points on average in Phase III alone—three times more than a decade ago. Add real-world data (RWD) streaming in from wearables and mobile health apps, and you’ve got a data tsunami.

The velocity matters too. Some data needs real-time processing for safety monitoring, while other datasets arrive in massive batches. Your integration strategy must handle both.

And here’s the kicker: none of this matters if your data quality is poor. Data cleaning and quality management are absolutely essential. Without them, you’re building your analysis on quicksand.
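Data cleaning often starts with simple, explicit rules. The minimal Python sketch below assumes a hypothetical record layout (`patient_id`, `age`, `visit_date`) and flags missing fields, duplicate IDs, implausible ages, and dates that are not ISO 8601; the 0 to 120 age range is an illustrative assumption, and real pipelines would draw their rules from a study’s data management plan.

```python
from datetime import date


def validate(records: list[dict]) -> list[str]:
    """Return human-readable issues found in a batch of hypothetical patient records."""
    issues: list[str] = []
    seen_ids: set[str] = set()
    required = ("patient_id", "age", "visit_date")

    for i, rec in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        missing = [f for f in required if rec.get(f) in (None, "")]
        if missing:
            issues.append(f"row {i}: missing {', '.join(missing)}")
            continue

        # Uniqueness: the same patient_id should not appear twice in one batch.
        if rec["patient_id"] in seen_ids:
            issues.append(f"row {i}: duplicate patient_id {rec['patient_id']}")
        seen_ids.add(rec["patient_id"])

        # Plausibility: age must be an integer in an assumed 0-120 range.
        if not isinstance(rec["age"], int) or not 0 <= rec["age"] <= 120:
            issues.append(f"row {i}: implausible age {rec['age']!r}")

        # Format: visit dates must parse as ISO 8601 (YYYY-MM-DD).
        try:
            date.fromisoformat(rec["visit_date"])
        except (TypeError, ValueError):
            issues.append(f"row {i}: bad visit_date {rec['visit_date']!r}")

    return issues


batch = [
    {"patient_id": "P1", "age": 54, "visit_date": "2023-03-14"},
    {"patient_id": "P2", "age": 430, "visit_date": "2023-03-15"},
    {"patient_id": "P1", "age": 61, "visit_date": "14/03/2023"},
    {"patient_id": "P3", "age": None, "visit_date": "2023-03-16"},
]
for issue in validate(batch):
    print(issue)
```

Reporting issues rather than silently fixing them keeps a human in the loop and leaves a record of what was wrong with the source data.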

The Compliance Tightrope: Navigating Regulations and Security

Managing data diversity is hard. Doing it while staying compliant with global regulations is even harder, with a constant risk of fines and reputational damage.

The regulatory landscape is a maze of overlapping requirements. GDPR governs European patient data, HIPAA sets the rules for protected health information in the United States, and 21 CFR Part 11 defines FDA requirements for electronic records. Each has different technical and documentation needs.

Patient privacy is at the heart of these regulations. Your data governance framework must protect that trust while still enabling the secure data sharing that makes collaboration possible. The challenge intensifies when working with partners like academic institutions, CROs, and hospitals: how do you share data securely without compromising compliance or data integrity?

Traditional approaches often fail. Copying data to central repositories creates security risks and compliance headaches. Limiting access kills collaboration. You need a different approach—one that lets you analyze data where it lives, without moving it, while maintaining complete audit trails.

This is exactly why federated architectures have become so critical for modern biopharma data integration.

The Lifebit Tech Stack: Powering Next-Gen Biopharma Data Integration

You can’t fix the data crisis with a spreadsheet and good intentions. You need technology built for the unique challenges of biopharma data integration—technology that can handle sensitive patient data, connect disparate systems, and satisfy regulators.

The tools exist today. Cloud platforms offer the scalability and computational power to process millions of data points. When combined with Artificial Intelligence (AI), Machine Learning (ML), interoperability protocols, and innovative approaches like federated learning, you get a data ecosystem that’s both intelligent and secure.

At Lifebit, we’ve built our platform around these core technologies because we’ve seen how they transform what’s possible. But this isn’t just about our technology—it’s about understanding how these pieces work together to solve real problems.

How AI and Machine Learning Drive a Data-Driven Revolution

AI and ML are game-changers for biopharma data integration. Think about the harmonization problem—all those different data formats and standards. AI-powered data harmonization can automatically clean, normalize, and standardize these diverse datasets, turning chaos into order in days or even hours.
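AI-based harmonization tools learn mappings like these from data; the minimal Python sketch below shows the target behavior with hand-written rules instead, which is a deliberate simplification. It assumes two hypothetical sites that report body weight and height under different field names and units, and maps both into canonical fields (`weight_kg`, `height_cm`) using the exact pound-to-kilogram and inch-to-centimeter factors.

```python
# Hypothetical mapping: source-specific field name -> (canonical field, unit conversion).
FIELD_MAP = {
    "wt_lbs": ("weight_kg", lambda v: v * 0.45359237),  # pounds -> kilograms (exact)
    "Weight (kg)": ("weight_kg", lambda v: v),
    "height_in": ("height_cm", lambda v: v * 2.54),     # inches -> centimeters (exact)
    "Height (cm)": ("height_cm", lambda v: v),
}


def harmonize(record: dict) -> dict:
    """Rename known fields to canonical names and convert values to canonical units."""
    out = {}
    for key, value in record.items():
        if key in FIELD_MAP:
            canonical, convert = FIELD_MAP[key]
            out[canonical] = round(convert(float(value)), 1)
        else:
            out[key] = value  # unmapped fields pass through unchanged for later review
    return out


site_a = {"subject": "A-001", "wt_lbs": "165", "height_in": "70"}
site_b = {"subject": "B-017", "Weight (kg)": 74.8, "Height (cm)": 177.8}
print(harmonize(site_a))  # {'subject': 'A-001', 'weight_kg': 74.8, 'height_cm': 177.8}
print(harmonize(site_b))  # {'subject': 'B-017', 'weight_kg': 74.8, 'height_cm': 177.8}
```

Once both sites land in the same fields and units, their records can be compared or analyzed together without per-source special cases.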

Once your data is integrated, predictive analytics comes into play. ML models can forecast drug efficacy, identify potential safety signals, and optimize trial designs. Natural Language Processing (NLP) extracts insights from unstructured text—physician notes, scientific papers, lab reports—that would otherwise remain locked away.

The evidence is clear. As a paper in Nature on scientific discovery in the age of artificial intelligence notes, AI can help find patterns humans might miss. The number of clinical drug trials involving AI for molecule discovery or design jumped from 27 in 2021 to 67 in 2023. That’s measurable impact.

AI and ML models become substantially more powerful when trained on truly integrated datasets that combine genomics, clinical outcomes, and real-world evidence, and they can be retrained as new data arrives to keep improving their predictions.

Building Bridges: Interoperability Standards and Platforms

Data silos threaten efficiency for nearly half of pharma businesses, a result of systems built in isolation. Breaking down these walls requires universal standards and platforms that can implement them at scale.

FHIR (Fast Healthcare Interoperability Resources) is the modern standard for exchanging healthcare information, while the OMOP Common Data Model provides a standardized format for observational health data. These standards create a common language, allowing your clinical trial systems, genomic databases, and real-world evidence sources to communicate seamlessly.
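To illustrate what a common language buys you, here is a minimal Python sketch that maps a FHIR Observation (a serum glucose result coded with LOINC 2345-7) into a row shaped like the OMOP CDM MEASUREMENT table. The field names on both sides follow the public FHIR R4 and OMOP CDM v5 specifications, but the concept-ID and person-ID lookups are hypothetical placeholders: a real pipeline would resolve them against the OMOP standardized vocabularies and a governed identity map.

```python
from datetime import datetime

# Illustrative lookups only. Real pipelines resolve concept IDs from the OMOP
# standardized vocabularies; these integers are placeholders, NOT real concept IDs.
LOINC_TO_CONCEPT = {"2345-7": 1000001}
UCUM_TO_CONCEPT = {"mg/dL": 2000001}
PERSON_IDS = {"Patient/123": 42}  # hypothetical FHIR reference -> OMOP person_id

LOINC_SYSTEM = "http://loinc.org"


def observation_to_measurement(obs: dict) -> dict:
    """Map one FHIR Observation (parsed JSON) to an OMOP MEASUREMENT-style row."""
    if obs.get("resourceType") != "Observation":
        raise ValueError("expected an Observation resource")

    # Pick the LOINC coding; other code systems would need their own mapping.
    loinc = next((c for c in obs["code"]["coding"] if c.get("system") == LOINC_SYSTEM), None)
    if loinc is None:
        raise ValueError("no LOINC coding on this Observation")

    qty = obs["valueQuantity"]
    # Normalize a trailing "Z" so fromisoformat() also works before Python 3.11.
    when = datetime.fromisoformat(obs["effectiveDateTime"].replace("Z", "+00:00"))

    return {
        "person_id": PERSON_IDS[obs["subject"]["reference"]],
        # 0 is the OMOP convention for "no matching concept".
        "measurement_concept_id": LOINC_TO_CONCEPT.get(loinc["code"], 0),
        "measurement_date": when.date().isoformat(),
        "measurement_datetime": when.isoformat(),
        "value_as_number": float(qty["value"]),
        "unit_concept_id": UCUM_TO_CONCEPT.get(qty.get("code", ""), 0),
        "measurement_source_value": loinc["code"],
        "unit_source_value": qty.get("unit"),
    }


fhir_obs = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": LOINC_SYSTEM, "code": "2345-7"}]},
    "subject": {"reference": "Patient/123"},
    "effectiveDateTime": "2023-03-14T09:30:00Z",
    "valueQuantity": {"value": 95, "unit": "mg/dL",
                      "system": "http://unitsofmeasure.org", "code": "mg/dL"},
}
print(observation_to_measurement(fhir_obs))
```

Keeping the original code and unit in the `*_source_value` columns preserves traceability back to the source record even when a concept lookup fails.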

But standards alone aren’t enough. You need platforms to orchestrate data movement while maintaining security and governance. That’s where federated architectures come in—and it’s exactly what we’ve built at Lifebit with our federated biomedical data platform.

Federated platforms solve a critical problem: how to analyze data from multiple sources without moving it. This is vital for regulatory compliance and data sovereignty. Patient data can stay in the hospital, genomic data in the research lab, and real-world evidence with the provider—while your researchers still get unified insights across all of it.
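A simple way to see unified insights without moving data is federated analytics: each site computes a few aggregates over its own records, and only those aggregates travel to a coordinator. The minimal Python sketch below pools a mean and sample standard deviation from per-site counts, sums, and sums of squares. The site names and values are hypothetical, and real platforms typically add safeguards (for example, minimum cohort sizes) before releasing even aggregates.

```python
import math
from dataclasses import dataclass


@dataclass
class SiteSummary:
    """The only values that leave a site: aggregates, never row-level records."""
    n: int
    total: float
    total_sq: float


def summarize_locally(values: list[float]) -> SiteSummary:
    """Runs inside each data holder's environment."""
    return SiteSummary(n=len(values), total=sum(values), total_sq=sum(v * v for v in values))


def pooled_mean_sd(summaries: list[SiteSummary]) -> tuple[float, float]:
    """Runs at the coordinator: combine site aggregates into a pooled mean and sample SD."""
    n = sum(s.n for s in summaries)
    total = sum(s.total for s in summaries)
    total_sq = sum(s.total_sq for s in summaries)
    mean = total / n
    variance = (total_sq - n * mean * mean) / (n - 1)  # sample variance
    return mean, math.sqrt(variance)


# Hypothetical biomarker values that never leave their sites.
sites = {
    "hospital": [5.1, 6.3, 5.8],
    "research_lab": [6.0, 5.5],
    "registry": [5.9, 6.4, 6.1, 5.7],
}
summaries = [summarize_locally(v) for v in sites.values()]
mean, sd = pooled_mean_sd(summaries)
print(f"pooled mean={mean:.3f}, sd={sd:.3f}")
```

The sum-of-squares formula is the easiest to read but can lose precision when values are large relative to their spread; a production system might instead combine per-site means and variances with a numerically stabler pairwise update.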

This approach builds bridges between organizations, creating an ecosystem where collaboration happens naturally and innovation accelerates, all while keeping sensitive data secure and compliant.

Your Blueprint for a Future-Proof Data Integration Strategy

You’ve seen the problems and the potential. Now, how do you build a data integration strategy that works?

Creating a robust biopharma data integration strategy is an ongoing journey. It requires thoughtful planning, smart architectural decisions, and a commitment to data governance. The goal is to build a system that can grow and adapt as your organization evolves and new technologies emerge.

Think of it as building a foundation for a house you’ll live in for decades. You need to plan for today’s needs while anticipating tomorrow’s possibilities. This means considering scalability from day one, establishing clear governance frameworks, and choosing technologies that won’t lock you into outdated approaches.

Designing Your Architecture: Centralized vs. Federated Models

When designing your data integration architecture, you face a fundamental choice: centralize everything in one place, or keep data distributed where it lives? This decision will shape your costs, security, and compliance.

| Feature | Data Lake | Data Lakehouse | Federated Data Platform |
|---|---|---|---|
| Cost | Low storage, high processing complexity | Balanced storage & processing | Lower data movement cost, higher initial setup for governance |
| Security | Complex to secure, often raw data | Improved, with data warehouse features | High, data remains at source, granular access controls |
| Scalability | Highly scalable for raw data | Highly scalable, supports structured data | Highly scalable across distributed sources |
| Data Sovereignty | Low, data often moved to central location | Medium, data often moved to central location | High, data remains in its original jurisdiction |
| Data Movement | High, data moved for processing | High, data moved for processing | Minimal, only queries or aggregated insights move |
| Compliance | Challenging to maintain across raw data | Easier with structured layers | Improved by keeping data at source, strong governance |

Traditional centralized models—like data lakes—pull everything into one location. But moving massive amounts of sensitive patient data creates enormous costs, security vulnerabilities, and compliance nightmares, especially when dealing with global regulations like GDPR and HIPAA.

Federated models flip this approach on its head. Instead of moving data to the analysis, you bring the analysis to the data. Information stays in its original jurisdiction, under its original governance, while you gain secure, controlled access for research.
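Federated learning applies the same bring-the-analysis-to-the-data idea to model training. The minimal Python sketch below is a toy version of federated averaging (FedAvg): each hypothetical site runs a few gradient-descent steps on a one-feature linear model using only its own data, and a coordinator averages the resulting parameters weighted by each site’s sample size. It rests on simplifying assumptions (synthetic data, no secure aggregation or differential privacy) and is not a description of how any particular platform implements federated learning.

```python
import random


def local_update(weights: tuple[float, float], data: list[tuple[float, float]],
                 lr: float = 0.05, epochs: int = 20) -> tuple[tuple[float, float], int]:
    """Runs at one site: full-batch gradient descent on mean squared error, local data only."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates: list[tuple[tuple[float, float], int]]) -> tuple[float, float]:
    """Runs at the coordinator: average site parameters, weighted by local sample count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return w, b


# Synthetic, hypothetical sites whose private data follow y = 3x + 1 plus noise.
rng = random.Random(0)


def make_site(size: int) -> list[tuple[float, float]]:
    xs = [rng.uniform(-1, 1) for _ in range(size)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]


sites = [make_site(40), make_site(25), make_site(60)]

global_model = (0.0, 0.0)
for _ in range(30):  # communication rounds
    updates = [local_update(global_model, data) for data in sites]
    global_model = federated_average(updates)

print("learned w=%.2f, b=%.2f" % global_model)
```

Only two model parameters and a sample count leave each site per round. Shared model updates can still leak information in some settings, which is why production systems often pair federated learning with techniques such as secure aggregation.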

In practice, this is transformative. Our federated platforms at Lifebit, including our Trusted Research Environment (TRE) and Trusted Data Lakehouse (TDL), enable organizations to access global biomedical data without the headaches of centralization. You get a “single source of truth” without actually moving the truth. Data sovereignty stays intact, security improves, and compliance becomes manageable. You can learn more about federated data platforms and how they’re changing biopharma data integration on our website.

Future Trends in Biopharma Data Integration

The data integration landscape is accelerating fast. Several powerful trends are reshaping how we connect and analyze biomedical data.

Agentic AI is moving beyond today’s ML models. These systems will autonomously generate hypotheses and design experiments, acting as AI colleagues to push research forward. They require deeply integrated, harmonized data spanning genomics, clinical trials, and real-world evidence.

Global research collaboration is becoming essential for rare diseases, pandemic preparedness, and large-scale cancer research. Initiatives like The European Health Data Space (EHDS) are building frameworks for secure cross-border health data exchange, setting precedents for other regions where we operate—from Canada and the USA to Israel and Singapore.

The integration of real-world data is shifting from batch to real-time. Continuous data streams from wearables and EHRs allow clinical trials to adapt on the fly and pharmacovigilance to detect safety signals faster.

As highlighted by McKinsey in their top ten trends in life sciences digital and analytics, we’re also seeing the rise of advanced analytics and digital twin technology. These virtual models simulate patient responses to treatment, requiring incredibly rich, harmonized datasets to function accurately.

These trends all point to one truth: the data integration strategy you build today must be flexible, secure, and scalable enough for the innovations of tomorrow.

Frequently Asked Questions about Biopharma Data Integration

We hear the same concerns from biopharma leaders all the time. You’re not alone in wrestling with these questions. Let’s tackle them head-on.

What is the first step to creating a data integration strategy?

The first step isn’t about technology; it’s about clarity. Before any investment, conduct a thorough assessment of your current IT landscape, organizational structure, and strategic goals. Ask fundamental questions:

- Where does your data live right now?

- What formats are you dealing with?

- Who needs access to what, and why?

- What specific business outcomes are you chasing (e.g., faster drug discovery, improved trial efficiency, reduced costs)?

Without this clear understanding, any technology investment is just an expensive guess. Start with clarity, not code.

How does data integration support regulatory submissions to agencies like the FDA and EMA?

This is where biopharma data integration is mission-critical. Regulatory agencies like the FDA and EMA demand data that meets the highest standards of integrity, traceability, and transparency. An integrated platform is your secret weapon for meeting these requirements.

- Data Integrity: Harmonizing data from R&D, clinical trials, and manufacturing eliminates inconsistencies and reduces errors, which is fundamental for approval. Research on data integrity within the biopharmaceutical sector in the era of Industry 4.0 underscores how essential it is for regulatory success.

- Comprehensive Evidence: You can demonstrate safety and efficacy by pulling together a cohesive narrative backed by verifiable data from every stage of development.

- Auditability and Traceability: A well-integrated system creates an unbroken chain of custody. When regulators ask, you can trace every data point back to its source, building trust and speeding up approvals.
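One common technique behind tamper-evident audit trails is hash chaining: each log entry stores the hash of the previous entry, so quietly altering history breaks the chain. The minimal Python sketch below illustrates the idea with SHA-256 over canonical JSON; the users, actions, and record IDs are hypothetical, and a hash chain alone does not make a system 21 CFR Part 11 compliant.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry, excluding its own hash."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, action: str, record_id: str) -> dict:
    """Append an audit entry that commits to the hash of the previous entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "record_id": record_id,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; an edited, reordered, or non-final deleted entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev or entry["hash"] != _digest(entry):
            return False
        prev = entry["hash"]
    return True


trail: list = []
append_entry(trail, "analyst_1", "import", "lab_results/batch-07")
append_entry(trail, "qc_bot", "correct_unit", "lab_results/batch-07/row-112")
append_entry(trail, "analyst_2", "export_aggregate", "cohort_summary_v3")
print(verify(trail))           # True

trail[1]["action"] = "delete"  # simulate a silent edit to history
print(verify(trail))           # False
```

Deleting the newest entries (truncation) is not caught by the chain itself; anchoring the latest hash somewhere independent, such as a separate system or a signed checkpoint, closes that gap.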

Can small to mid-sized biotechs afford to implement advanced data integration solutions?

Yes—and you can’t afford not to. While “advanced data integration” can sound expensive, modern cloud-native and federated solutions have democratized access to enterprise-grade capabilities.

Cloud platforms offer flexible, pay-as-you-go models that eliminate huge upfront infrastructure costs. You don’t need to build massive data centers or hire armies of IT specialists.

Federated architectures, like those we’ve built at Lifebit, let you start small. Integrate your most critical datasets first and scale as your needs and resources grow. You’re not locked into a massive, all-or-nothing investment.

Consider the alternative: the cost of not integrating your data. Missed opportunities, delayed timelines, compliance risks, and failed regulatory submissions add up fast, and they can sink a small biotech. The ROI from accelerated drug discovery and reduced costs typically outweighs the investment, making this a strategic move that levels the playing field.

From Fragmented Data to Life-Saving Discoveries

We’ve journeyed through the landscape of biopharma data integration, and the conclusion is clear: your data is a strategic asset waiting to be activated.

Every genomic sequence, clinical trial result, and patient record represents a potential breakthrough. When connected, these pieces become the innovation driver that defines the next generation of medicine. That’s your competitive advantage.

But what really matters are patient outcomes. Faster drug discovery means someone’s mother gets a treatment sooner. Better data integration means a child with a rare disease finds hope. More efficient clinical trials mean breakthrough therapies reach people months or even years earlier.

The future of medicine is not just about new molecules, but about connecting the data that powers their discovery. We’re moving to an era where global collaboration happens in real time, securely and compliantly. Where AI can learn from millions of patient records without compromising a single person’s privacy. Where a researcher in London can work seamlessly with data from Singapore, Toronto, and Tel Aviv, all without moving that sensitive information an inch.

This isn’t science fiction. At Lifebit, we’ve built our entire platform—the Trusted Research Environment (TRE), Trusted Data Lakehouse (TDL), and R.E.A.L. layer—around this vision. We’ve seen how federated architectures transform organizations from data-rich but insight-poor to genuinely data-driven powerhouses.

The 97% of wasted healthcare data isn’t a permanent condition. It’s a solvable problem. Solving it requires a smart strategy, the right technology, and a commitment to making data work for you.

Are you ready to unlock the full potential of your biomedical data? Discover how a federated biomedical data platform can transform your R&D and join us in shaping the future of medicine. Patients can’t afford to wait any longer.